Deviation from the Mean


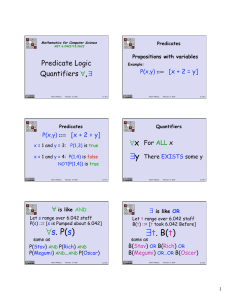

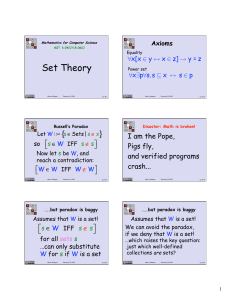

Mathematics for Computer Science

MIT 6.042J/18.062J

Albert R Meyer, May 7, 2010

Example: IQ

The IQ measure was constructed so that the average IQ = 100. What fraction of the people can possibly have an IQ ≥ 300?

IQ Higher than 300?

A fraction f of people with IQ ≥ 300 adds at least 300f to the average, so

$$100 = \text{avg IQ} \ge 300f, \qquad f \le \frac{100}{300} = \frac{1}{3}.$$

At most 1/3 of people have IQ ≥ 300.
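The averaging argument can be checked on a concrete, hypothetical population. If one person in three has IQ 300 and the other two have IQ 0, the average is exactly 100 and exactly 1/3 of the population reaches 300. So the 1/3 bound cannot be improved using only the mean and nonnegativity. The population is made up for illustration, and `max_fraction_at_least` is a hypothetical helper name.

```python
from fractions import Fraction

def max_fraction_at_least(threshold, mean):
    """Upper bound on the fraction of a nonnegative population whose value is
    >= threshold, given only the population mean: fraction * threshold <= mean."""
    return Fraction(mean, threshold)

# Hypothetical extreme population: one person in three has IQ 300, the rest IQ 0.
population = [300, 0, 0]
avg = Fraction(sum(population), len(population))
frac_high = Fraction(sum(1 for iq in population if iq >= 300), len(population))

print(max_fraction_at_least(300, 100))  # 1/3
print(avg, frac_high)                   # 100 1/3
```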

IQ Higher than x?

If R is nonnegative, then

Pr{R x} IQ is always nonnegative

May 7, 2010

lec 13F.17

May 7, 2010

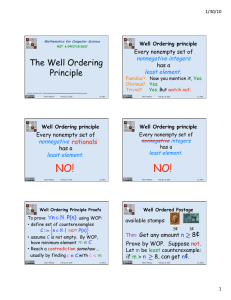

Markov Bound

Besides mean = 100,

we used only one fact about

the distribution of IQ:

Albert R Meyer,

Albert R Meyer,

lec 13F.19

E R x

for x E[R]

Albert R Meyer,

May 7, 2010

lec 13F.20

1
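Here is a quick empirical sanity check of the Markov bound, as a sketch. The exponential distribution with mean 100 is an arbitrary nonnegative choice, not something from the lecture, and `markov_bound` is a hypothetical helper. The bound is computed from the sample's own mean, so the inequality holds exactly for the sample: Markov applies to any nonnegative distribution, including an empirical one.

```python
import random

def markov_bound(mean, x):
    """Markov bound for a nonnegative R: Pr{R >= x} <= E[R] / x, for x > 0."""
    if x <= 0:
        raise ValueError("x must be positive")
    return mean / x

rng = random.Random(0)  # fixed seed so the run is reproducible
# Illustrative nonnegative R: exponential with mean 100 (rate 1/100).
samples = [rng.expovariate(1 / 100) for _ in range(100_000)]
mean = sum(samples) / len(samples)

for x in (200, 300, 500):
    empirical = sum(s >= x for s in samples) / len(samples)
    print(f"x={x}: observed {empirical:.4f} <= bound {markov_bound(mean, x):.4f}")
```

The observed tail is far below the bound here, which is the sense in which Markov is "weak": it uses nothing but the mean and nonnegativity.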

Markov Bound

•Weak

•Obvious

•Useful anyway

IQ ≥ 300, again

Suppose we are given that IQ is always ≥ 50. We get a better bound by using (IQ − 50), since this is now ≥ 0. A fraction f with IQ ≥ 300 contributes at least (300 − 50)f to the average of (IQ − 50), so

$$50 = E[\text{IQ} - 50] \ge 250f, \qquad f \le \frac{50}{250} = \frac{1}{5}.$$

Improving the Markov Bound

Markov gives a better bound when R is shifted to have 0 as its minimum.
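The shift generalizes. If R ≥ b always and x > b, apply Markov to the nonnegative variable R − b. The following is a minimal sketch with exact fractions. `shifted_markov_bound` is a hypothetical name, and with b = 0 it reduces to the plain Markov bound.

```python
from fractions import Fraction

def shifted_markov_bound(mean, lower, x):
    """If R >= lower always and x > lower, Markov applied to the nonnegative
    variable R - lower gives Pr{R >= x} <= (E[R] - lower) / (x - lower)."""
    if x <= lower:
        raise ValueError("need x > lower")
    return Fraction(mean - lower, x - lower)

print(shifted_markov_bound(100, 0, 300))   # 1/3: plain Markov (IQ >= 0)
print(shifted_markov_bound(100, 50, 300))  # 1/5: shifted, using IQ >= 50
```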

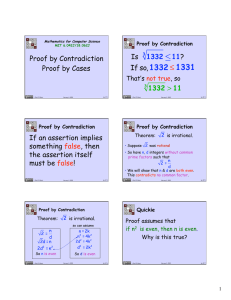

Chebyshev Bound

$$\Pr\{|R - \mu| \ge x\} = \Pr\{(R - \mu)^2 \ge x^2\} \le \frac{E[(R - \mu)^2]}{x^2}$$

by Markov, since (R − μ)² is nonnegative. The numerator E[(R − μ)²] is the variance of R, so

$$\Pr\{|R - \mu| \ge x\} \le \frac{\operatorname{Var}[R]}{x^2}, \qquad \operatorname{Var}[R] ::= E[(R - \mu)^2].$$

Standard Deviation

$$\sigma_R ::= \sqrt{\operatorname{Var}[R]}, \qquad \Pr\{|R - \mu| \ge x\sigma_R\} \le \frac{1}{x^2}.$$

R is probably not many σ's from μ:

•further than σ: Pr ≤ 1

•further than 2σ: Pr ≤ 1/4

•further than 3σ: Pr ≤ 1/9

•further than 4σ: Pr ≤ 1/16
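The following checks Chebyshev, in both the x form and the σ form, on a fair die. The die is an illustrative choice, and `chebyshev_bound` and `tail` are hypothetical helpers. Exact fractions keep the comparison free of rounding, except for σ itself, which is irrational here and computed as a float.

```python
import math
from fractions import Fraction

def chebyshev_bound(variance, x):
    """Chebyshev: Pr{|R - mu| >= x} <= Var[R] / x**2, for x > 0."""
    return Fraction(variance) / Fraction(x) ** 2

# Illustrative choice of R: the roll of a fair six-sided die.
faces = range(1, 7)
p = Fraction(1, 6)
mu = sum(p * r for r in faces)                # E[R] = 7/2
var = sum(p * (r - mu) ** 2 for r in faces)   # Var[R] = E[(R - mu)^2] = 35/12
sigma = math.sqrt(var)                        # sigma_R, roughly 1.708

def tail(x):
    """Exact Pr{|R - mu| >= x} for the die."""
    return sum((p for r in faces if abs(r - mu) >= x), Fraction(0))

for x in (1, 2, Fraction(5, 2)):
    print(f"x={x}: exact {tail(x)} <= Chebyshev {chebyshev_bound(var, x)}")

# In units of sigma the bound becomes 1/k**2, whatever the distribution.
for k in (1, 2, 3, 4):
    print(f"{k} sigma: exact {tail(k * sigma)} <= {Fraction(1, k * k)}")
```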

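Variance of an Indicator

For I an indicator with E[I] = p (so I² = I and E[I²] = p):

$$\operatorname{Var}[I] = E[(I - p)^2] = E[I^2] - 2p \cdot p + p^2 = p - p^2 = p(1 - p).$$

Calculating Variance

$$\operatorname{Var}[aR + b] = a^2 \operatorname{Var}[R]$$

Simple proofs apply linearity of E[] to the definition of Var[].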
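For an indicator with Pr{I = 1} = p, the variance works out to p(1 − p). This can be confirmed by evaluating the definition directly over the two outcomes of I. `indicator_variance` is a hypothetical helper, and exact fractions avoid rounding.

```python
from fractions import Fraction

def indicator_variance(p):
    """Var[I] = E[(I - p)^2] for an indicator I with Pr{I = 1} = p,
    computed directly from the definition over the outcomes I = 1 and I = 0."""
    p = Fraction(p)
    return p * (1 - p) ** 2 + (1 - p) * (0 - p) ** 2

for p in (Fraction(1, 2), Fraction(1, 3), Fraction(9, 10)):
    print(p, indicator_variance(p), p * (1 - p))
```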

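The rule Var[aR + b] = a² Var[R] can be spot-checked with exact arithmetic on a small finite distribution. The distribution and the constants a = 3, b = −7 are arbitrary illustrative choices. `expectation` and `variance` are hypothetical helpers that apply the definition Var[R] ::= E[(R − μ)²] directly.

```python
from fractions import Fraction

def expectation(dist, f=lambda r: r):
    """E[f(R)] for a finite distribution given as {value: probability}."""
    return sum((prob * f(r) for r, prob in dist.items()), Fraction(0))

def variance(dist):
    """Var[R] ::= E[(R - mu)^2], straight from the definition."""
    mu = expectation(dist)
    return expectation(dist, lambda r: (r - mu) ** 2)

# Hypothetical distribution and constants, chosen only for illustration.
dist = {0: Fraction(1, 2), 1: Fraction(1, 4), 5: Fraction(1, 4)}
a, b = 3, -7
# Distribution of aR + b (a != 0, so distinct values of R stay distinct).
transformed = {a * r + b: prob for r, prob in dist.items()}

print(variance(dist))                                   # 17/4
print(variance(transformed), a ** 2 * variance(dist))
```

The shift b drops out because it moves μ by the same amount, and the scale a comes out squared because the deviation R − μ is squared.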

Pairwise Independent Additivity

$$\operatorname{Var}[R_1 + R_2 + \cdots + R_n] = \operatorname{Var}[R_1] + \operatorname{Var}[R_2] + \cdots + \operatorname{Var}[R_n],$$

providing R1, R2, …, Rn are pairwise independent. Again, a simple proof applies linearity of E[] to the definition of Var[].

Deviation of Repeated Trials
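Pairwise additivity is the property the analysis of repeated trials relies on, and pairwise independence really is enough for it. The standard example below is not from these slides. X and Y are independent fair bits and Z = X XOR Y. The three variables are pairwise independent but not mutually independent, and their variances still add. The helpers `prob` and `var` are hypothetical names.

```python
from fractions import Fraction
from itertools import combinations, product

# Four equally likely outcomes: X, Y independent fair bits, Z = X XOR Y.
outcomes = [(x, y, x ^ y) for x, y in product((0, 1), repeat=2)]
p = Fraction(1, len(outcomes))

def prob(event):
    """Probability of the set of outcomes satisfying `event`."""
    return sum((p for o in outcomes if event(o)), Fraction(0))

def var(values):
    """Variance of a random variable listed by its value on each outcome."""
    mu = sum(p * v for v in values)
    return sum(p * (v - mu) ** 2 for v in values)

# Pairwise independence: every pair of coordinates factors...
for i, j in combinations(range(3), 2):
    for a, b in product((0, 1), repeat=2):
        assert prob(lambda o: o[i] == a and o[j] == b) == \
            prob(lambda o: o[i] == a) * prob(lambda o: o[j] == b)
# ...but not mutual independence: X = Y = Z = 1 never happens.
assert prob(lambda o: o == (1, 1, 1)) == 0

var_of_sum = var([sum(o) for o in outcomes])
sum_of_vars = sum(var([o[i] for o in outcomes]) for i in range(3))
print(var_of_sum, sum_of_vars)  # 3/4 3/4
```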

Jacob D. Bernoulli (1659–1705)

Ars Conjectandi (The Art of Guessing), 1713*

*Taken from Grinstead & Snell, Introduction to Probability, American Mathematical Society, p. 310, http://www.dartmouth.edu/~chance/teaching_aids/books_articles/probability_book/book.html

Even the stupidest man by some instinct of nature per se and by no previous instruction (this is truly amazing) knows for sure that the more observations ... that are taken, the less the danger will be of straying from the mark.

It certainly remains to be inquired whether after the number of observations has been increased, the probability … of obtaining the true ratio … finally exceeds any given degree of certainty; or whether the problem has, so to speak, its own asymptote, that is, whether some degree of certainty is given which one can never exceed.

Repeated Trials

Random var R with mean μ. Make n independent observations R1, …, Rn and take their average:

$$A_n ::= \frac{R_1 + R_2 + \cdots + R_n}{n}.$$

Bernoulli question: is A_n probably close to μ if n is big? That is, for a tolerance δ > 0,

$$\Pr\{|A_n - \mu| \le \delta\} = \;?$$

In particular, is

$$\lim_{n \to \infty} \Pr\{|A_n - \mu| \le \delta\} = 1\,?$$

Weak Law of Large Numbers

Bernoulli answer: yes. For every δ > 0,

$$\lim_{n \to \infty} \Pr\{|A_n - \mu| > \delta\} = 0.$$
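A small simulation illustrates the Weak Law. It assumes R is a fair die, δ = 0.25, and 500 simulated averages per n; all of these are illustrative choices. `deviation_frequency` is a hypothetical helper. The estimated probability of straying more than δ from μ should shrink as n grows. Exact values depend on the seed.

```python
import random

def deviation_frequency(n, delta, trials=500, seed=1):
    """Monte Carlo estimate of Pr{|A_n - mu| > delta}, where A_n averages n
    independent rolls of a fair die (mu = 3.5). The die is an illustrative choice."""
    rng = random.Random(seed)
    mu = 3.5
    far = 0
    for _ in range(trials):
        a_n = sum(rng.randint(1, 6) for _ in range(n)) / n
        if abs(a_n - mu) > delta:
            far += 1
    return far / trials

for n in (10, 100, 1000):
    print(n, deviation_frequency(n, delta=0.25))
```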

Jacob D. Bernoulli (1659–1705)

Therefore, this is the problem which I now set forth and make known after I have pondered over it for twenty years. Both its novelty and its very great usefulness, coupled with its just as great difficulty, can exceed in weight and value all the remaining chapters of this thesis.

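The Weak Law,

$$\lim_{n \to \infty} \Pr\{|A_n - \mu| > \delta\} = 0,$$

will follow easily by Chebyshev & variance properties.

Repeated Trials

By linearity of expectation,

$$E[A_n] = E\left[\frac{R_1 + R_2 + \cdots + R_n}{n}\right] = \frac{E[R_1] + E[R_2] + \cdots + E[R_n]}{n} = \frac{n\mu}{n} = \mu.$$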
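E[A_n] = μ can be confirmed exactly for small n by enumerating every outcome. The sketch assumes R is a fair die (μ = 7/2), and `expected_average` is a hypothetical helper.

```python
from fractions import Fraction
from itertools import product

def expected_average(n, faces=range(1, 7)):
    """Exact E[A_n] for the average of n independent fair-die rolls, by
    enumerating all len(faces)**n equally likely outcomes (die chosen for illustration)."""
    outcomes = list(product(faces, repeat=n))
    return sum(Fraction(sum(o), n) for o in outcomes) / len(outcomes)

for n in (1, 2, 3):
    print(n, expected_average(n))  # 7/2 for every n
```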

Weak Law of Large Numbers

By Chebyshev,

$$\Pr\{|A_n - \mu| > \delta\} \le \frac{\operatorname{Var}[A_n]}{\delta^2},$$

so we need only show $\operatorname{Var}[A_n] \to 0$ as $n \to \infty$.
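To see concretely that Var[A_n] does go to 0, here is an exact computation for a fair die, whose single-roll variance is 35/12. It shows Var[A_n] shrinking like σ²/n. The die is an illustrative assumption, and `variance_of_average` is a hypothetical helper that enumerates all 6ⁿ outcomes.

```python
from fractions import Fraction
from itertools import product

def variance_of_average(n, faces=range(1, 7)):
    """Exact Var[A_n] for the average of n independent fair-die rolls, by
    enumerating all equally likely outcomes (die chosen for illustration)."""
    averages = [Fraction(sum(o), n) for o in product(faces, repeat=n)]
    mu = sum(averages) / len(averages)
    return sum((a - mu) ** 2 for a in averages) / len(averages)

sigma2 = Fraction(35, 12)  # Var[R] for a single fair die
for n in (1, 2, 3, 4):
    print(n, variance_of_average(n), sigma2 / n)
```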

Write σ² for Var[R], so each observation Ri has variance σ². Since the observations are independent, their variances add. Using Var[aR + b] = a² Var[R] with a = 1/n:

$$\operatorname{Var}[A_n] = \operatorname{Var}\left[\frac{R_1 + R_2 + \cdots + R_n}{n}\right] = \frac{\operatorname{Var}[R_1] + \operatorname{Var}[R_2] + \cdots + \operatorname{Var}[R_n]}{n^2} = \frac{n\sigma^2}{n^2} = \frac{\sigma^2}{n}.$$

So by Chebyshev,

$$\Pr\{|A_n - \mu| > \delta\} \le \frac{1}{n}\left(\frac{\sigma}{\delta}\right)^2 \to 0 \quad \text{as } n \to \infty.$$

QED

Analysis of the Proof

The proof only used that R1, …, Rn have

•the same mean

•the same variance

•& variances add

which follows from pairwise independence.

Pairwise Independent Sampling

Theorem: Let R1, …, Rn be pairwise independent random vars with the same finite mean μ and variance σ². Let

$$A_n ::= \frac{R_1 + R_2 + \cdots + R_n}{n}.$$

Then

$$\Pr\{|A_n - \mu| > \delta\} \le \frac{1}{n}\left(\frac{\sigma}{\delta}\right)^2.$$

The punchline: we now know how big a sample is needed to estimate the mean of any* random variable within any* desired tolerance with any* desired probability.

*variance < ∞, tolerance > 0, probability < 1

Team Problems

Problems 1–3
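The punchline turns into arithmetic. Requiring (1/n)(σ/δ)² ≤ ε and solving for n gives n ≥ σ²/(δ²ε), where ε is the allowed failure probability (so the confidence is 1 − ε). This is a minimal sketch: `sample_size` is a hypothetical helper, and the numbers in the usage line are made up. Because the theorem needs only pairwise independence, the same n applies to pairwise independent samples.

```python
import math
from fractions import Fraction

def sample_size(sigma, delta, eps):
    """Smallest n with (1/n) * (sigma/delta)**2 <= eps, so that the pairwise
    independent sampling theorem guarantees Pr{|A_n - mu| > delta} <= eps.
    Needs finite sigma, tolerance delta > 0, and eps > 0 (confidence 1 - eps < 1)."""
    if delta <= 0 or eps <= 0:
        raise ValueError("need delta > 0 and eps > 0")
    return math.ceil(Fraction(sigma) ** 2 / (Fraction(delta) ** 2 * Fraction(eps)))

# Hypothetical requirement: sigma = 1, tolerance 0.1, failure probability 5%.
print(sample_size(1, Fraction(1, 10), Fraction(1, 20)))  # 2000
```

Exact fractions are used so that a bound landing exactly on an integer, as here, is not pushed up by floating-point rounding.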

MIT OpenCourseWare

http://ocw.mit.edu

6.042J / 18.062J Mathematics for Computer Science

Spring 2010

For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms.