LECTURE 1

• Readings: Sections 1.1, 1.2

Lecture outline

• Probability as a mathematical framework for:

– reasoning about uncertainty

– developing approaches to inference problems

• Probabilistic models

– sample space

– probability law

• Axioms of probability

• Simple examples

Sample space Ω

• “List” (set) of possible outcomes

• List must be:

– Mutually exclusive

– Collectively exhaustive

• Art: to be at the “right” granularity

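Sample space: Discrete example

• Two rolls of a tetrahedral die

– Sample space vs. sequential description

[Figure: 4 × 4 grid of outcomes with X = First roll (1 to 4) on the horizontal axis and Y = Second roll (1 to 4) on the vertical axis, next to the sequential (tree) description with leaves 1,1; 1,2; 1,3; 1,4; …; 4,4]

Sample space: Continuous example

Ω = {(x, y) | 0 ≤ x, y ≤ 1}

[Figure: unit square in the (x, y) plane, with 1 marked on each axis]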
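The two descriptions of the discrete example (two rolls of a tetrahedral die) can be compared by enumeration. The sketch below is only illustrative: the names `FACES`, `omega`, `tree`, and `leaves` are assumptions introduced here, not notation from the lecture. It builds the sample space as a set of ordered pairs, builds the sequential description as a two-level tree, and checks that the leaves of the tree list every outcome exactly once (mutually exclusive and collectively exhaustive).

```python
from itertools import product

FACES = (1, 2, 3, 4)  # faces of a tetrahedral (four-sided) die

# Sample-space description: every outcome is an ordered pair (x, y).
omega = set(product(FACES, repeat=2))

# Sequential (tree) description: choose the first roll, then the second.
tree = {x: [(x, y) for y in FACES] for x in FACES}
leaves = [leaf for x in FACES for leaf in tree[x]]

# Collectively exhaustive: the leaves cover all of omega.
# Mutually exclusive: no outcome appears twice among the leaves.
assert set(leaves) == omega
assert len(leaves) == len(omega) == 16

print(sorted(omega)[:4])  # [(1, 1), (1, 2), (1, 3), (1, 4)]
```

Both descriptions yield the same 16 outcomes; the tree only records the order in which the outcome is revealed.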

Probability axioms

• Event: a subset of the sample space

• Probability is assigned to events

Axioms:

1. Nonnegativity: P(A) ≥ 0

2. Normalization: P(Ω) = 1

3. Additivity: If A ∩ B = ∅, then P(A ∪ B) = P(A) + P(B)

• P({s1, s2, . . . , sk}) = P({s1}) + · · · + P({sk}) = P(s1) + · · · + P(sk)

• Axiom 3 needs strengthening

• Do weird sets have probabilities?

Probability law: Example with finite sample space

[Figure: 4 × 4 grid of outcomes with X = First roll (1 to 4) on the horizontal axis and Y = Second roll (1 to 4) on the vertical axis]

• Let every possible outcome have probability 1/16

– P((X, Y) is (1,1) or (1,2)) =

– P({X = 1}) =

– P(X + Y is odd) =

– P(min(X, Y) = 2) =
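For the finite example (two rolls of a tetrahedral die, every outcome with probability 1/16), the four probabilities left blank on the slide can be checked by applying additivity to the outcomes that make up each event. This is a minimal sketch: the helper `prob` and the variable names are assumptions made for illustration, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction
from itertools import product

FACES = (1, 2, 3, 4)
omega = list(product(FACES, repeat=2))
p_outcome = Fraction(1, len(omega))  # 1/16 per outcome, as on the slide

def prob(event):
    """P(event) by additivity: add the probabilities of its outcomes."""
    return sum((p_outcome for (x, y) in omega if event(x, y)), Fraction(0))

p_two_points = prob(lambda x, y: (x, y) in {(1, 1), (1, 2)})
p_x_is_1 = prob(lambda x, y: x == 1)
p_sum_odd = prob(lambda x, y: (x + y) % 2 == 1)
p_min_is_2 = prob(lambda x, y: min(x, y) == 2)

print(p_two_points, p_x_is_1, p_sum_odd, p_min_is_2)  # 1/8 1/4 1/2 5/16
```

Each result is a count of favorable outcomes divided by 16: 2, 4, 8, and 5 outcomes respectively.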

Discrete uniform law

• Let all outcomes be equally likely

• Then,

$P(A) = \dfrac{\text{number of elements of } A}{\text{total number of sample points}}$

• Computing probabilities ≡ counting

• Defines fair coins, fair dice, well-shuffled card decks

Continuous uniform law

• Two “random” numbers in [0, 1].

[Figure: unit square in the (x, y) plane, with 1 marked on each axis]

• Uniform law: Probability = Area

– P(X + Y ≤ 1/2) = ?

– P( (X, Y ) = (0.5, 0.3) )
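Under the continuous uniform law on the unit square, the event X + Y ≤ 1/2 is a right triangle with both legs of length 1/2, so its area (and hence its probability) is 1/8. A single point such as (0.5, 0.3) has zero area, so its probability is 0. The sketch below compares the area with a simulation estimate; the function name, sample size, and seed are assumptions chosen for illustration, and the estimate is only approximate.

```python
import random

def estimate_prob_sum_at_most(threshold, n_samples=200_000, seed=0):
    """Monte Carlo estimate of P(X + Y <= threshold), X and Y uniform on [0, 1]."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n_samples)
               if rng.random() + rng.random() <= threshold)
    return hits / n_samples

# Exact value from "probability = area": a right triangle with legs 1/2.
exact = 0.5 * 0.5 * 0.5  # = 1/8
estimate = estimate_prob_sum_at_most(0.5)
print(exact, round(estimate, 3))
```

The simulated fraction should land close to 0.125, and increasing `n_samples` tightens the agreement.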


Probability law: Example with countably infinite sample space

• Sample space: {1, 2, . . .}

– We are given $P(n) = 2^{-n}$, $n = 1, 2, \ldots$

– Find P(outcome is even)

[Figure: bar plot of p against the outcomes 1, 2, 3, 4, …, with heights 1/2, 1/4, 1/8, 1/16, …]

$P(\{2, 4, 6, \ldots\}) = P(2) + P(4) + \cdots = \dfrac{1}{2^2} + \dfrac{1}{2^4} + \dfrac{1}{2^6} + \cdots = \dfrac{1}{3}$

• Countable additivity axiom (needed for this calculation):

If A1, A2, . . . are disjoint events, then:

P(A1 ∪ A2 ∪ · · · ) = P(A1) + P(A2) + · · ·
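The even-outcome probability is an infinite sum, which countable additivity allows us to evaluate as the limit of finite partial sums. As a rough numerical check (the function names `p` and `partial_even_sum` are assumptions introduced here), the sketch below computes P(2) + P(4) + · · · + P(2K) exactly and shows the gap to 1/3 shrinking as K grows. By the geometric series formula the partial sum is (1/3)(1 − 4^(−K)).

```python
from fractions import Fraction

def p(n):
    """Probability of outcome n, as given on the slide: P(n) = 2^(-n)."""
    return Fraction(1, 2 ** n)

def partial_even_sum(k_terms):
    """P(2) + P(4) + ... + P(2 * k_terms), a finite partial sum."""
    return sum((p(2 * k) for k in range(1, k_terms + 1)), Fraction(0))

for k_terms in (1, 2, 5, 10):
    s = partial_even_sum(k_terms)
    # Print the partial sum and its remaining distance from 1/3.
    print(k_terms, s, float(Fraction(1, 3) - s))
```

The gap after K terms is exactly (1/3) · 4^(−K), so it decreases by a factor of 4 with each additional term.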


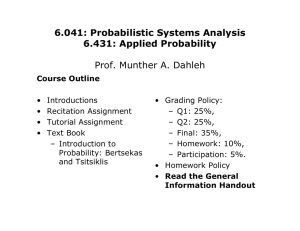

MIT OpenCourseWare

http://ocw.mit.edu

6.041SC Probabilistic Systems Analysis and Applied Probability

Fall 2013

For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms.