National League Table Methodologies

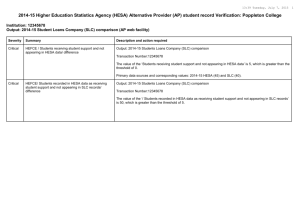

| Measure | Description | Weighting 2014 (%) |
| --- | --- | --- |
| Teaching quality | Percentage of final-year students satisfied with the teaching they received (2012 NSS). | 10 |
| Feedback (assessment) | Percentage of final-year students satisfied with the feedback and assessment by lecturers (2012 NSS). | 10 |
| Overall satisfaction | Percentage of final-year students satisfied with overall quality (2012 NSS). | 5 |
| Expenditure per student | Amount of money spent on each student (rating out of 10). | 15 |
| Student:Staff ratio | Number of students per member of teaching staff. | 15 |
| Job prospects | Percentage of graduates who take up graduate-level employment or further study within six months of graduation (2010 graduates). | 15 |
| Value added | Comparison of students' individual degree results with their entry qualifications, to show how effective the teaching is (rating out of 10). | 15 |
| Entry score | Average entry tariff means the typical UCAS scores of students currently studying. | 15 |

Table 1. Summary of measures used to compile the Guardian University Guide 2014

For further information on the full Guardian methodology please visit: http://www.theguardian.com/education/interactive/2013/jun/04/universityguide-students

Detailed Descriptors

The methodology focuses on subject-level league tables, ranking institutions that provide each subject according to their relevant statistics. Eight statistical measures are employed to approximate a university's performance in teaching each subject. The measures are knitted together to get a Guardian score, against which institutions are ranked. The Guardian scores are averaged for each institution across all subjects to generate an institution-level table.

National Student Survey – Teaching (10%)

During the 2012 National Student Survey, final year first degree students were asked the extent to which they agreed with four positive statements regarding their experience of teaching in their department.
The responses to all four questions can be summarised as the percentage of students who 'definitely agree' or 'mostly agree'.

National Student Survey – Assessment & Feedback (10%)

Students were also asked for their perception of five statements regarding the way in which their efforts were assessed and how helpful any feedback was.

National Student Survey – Overall Satisfaction (5%)

Students also answer a single question which encompasses all aspects of their courses.

Caveat: Because the NSS surveys final year students it is subjective and dependent upon expectations. Students at a university that generally has a high reputation may be more demanding in the quality of teaching they expect. On the other hand, students in a department that has been lower in the rankings may receive teaching that exceeds their prior expectations and give marks higher than would be achieved in a more objective assessment of quality.

Value Added Scores (15%)

Based upon an indexing methodology that tracks students from enrolment to graduation. Qualifications upon entry are compared with the award that a student receives at the end of their studies. Each full time student is given a probability of achieving a 1st or 2:1, based on the qualifications that they enter with. If they manage to earn a good degree then they score points which reflect how difficult it was to do so (in fact, they score the reciprocal of the probability of getting a 1st or 2:1). Thus an institution that is adept at taking in students with low entry qualifications, which are generally more difficult to convert into a 1st or 2:1, will score highly in the value-added measure if the number of students getting a 1st or 2:1 exceeds expectations.

Student-Staff Ratios (15%)

SSRs compare the number of staff teaching a subject with the number of students studying it, to get a ratio where a low SSR is treated positively in the league tables.
Caveat: This measure only includes staff who are contracted to spend a significant portion of their time teaching. It excludes those classed as 'research only' but includes researchers who also teach.

Expenditure per Student (15%)

The amount of money that an institution spends providing a subject (not including the costs of academic staff, since these are already counted in the SSR) is divided by the volume of students learning the subject to derive this measure. Added to this figure is the amount of money the institution has spent on Academic Services (which includes library & computing facilities) over the past two years, divided by the total volume of students enrolled at the university in those years. Year-on-year inconsistency or extreme values can also cause suppression (or spreading) of results.

Entry Scores (15%)

Average Tariffs are determined by taking the total tariff points of 1st year 1st degree full time entrants who were aged under 20 at the start of their course, and subtracting the tariffs ascribed to Key Skills, Core Skills and to 'SQA intermediate 2'.

Caveat: This measure seeks to approximate the aptitude of fellow students that a prospective student can anticipate. However, some institutions run access programmes that admit students on the basis that their potential aptitude is not represented by their lower tariff scores. Such institutions can expect to see lower average tariffs but higher value added scores.

Career Prospects (15%)

The employability of graduates is assessed by looking at the proportion of graduates who find graduate-level employment, and/or study at an HE or Professional level, within 6 months of graduation. Graduates who report that they are unable to work are excluded from the study population, which must have at least 25 respondents in order to generate results.
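As an illustration of the Value Added Scores measure described earlier in this section, the following minimal Python sketch awards each student the reciprocal of their probability of a 1st or 2:1 when they achieve one, and nothing otherwise. The function names, the example cohort and the use of a simple mean to aggregate students are assumptions made for illustration; the source does not say how individual points are combined or converted to the rating out of 10.

```python
def value_added_points(p_good_degree: float, got_good_degree: bool) -> float:
    """Points for one student: reciprocal of the probability if they succeed, else 0."""
    if not 0.0 < p_good_degree <= 1.0:
        raise ValueError("probability must be in the interval (0, 1]")
    return 1.0 / p_good_degree if got_good_degree else 0.0


def mean_value_added(students: list[tuple[float, bool]]) -> float:
    """Assumed aggregation: the plain mean of the students' points."""
    points = [value_added_points(p, achieved) for p, achieved in students]
    return sum(points) / len(points)


# Hypothetical cohort: (probability of a 1st or 2:1 on entry, achieved one?)
cohort = [(0.8, True), (0.5, True), (0.25, True), (0.5, False)]
cohort_score = mean_value_added(cohort)
```

Under this scheme a student's expected points are p × (1/p) = 1, so a cohort mean above 1 would suggest that more students achieved a 1st or 2:1 than their entry qualifications predicted.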
Standardisation of Scores

For those institutions that qualify for inclusion in the subject table, each score is compared to the average score achieved by the other institutions that qualify, using standard deviations to gain a normal distribution of standardised scores (S-scores). The standardised score for Student Staff Ratios is negative, to reflect that low ratios are regarded as better. We cap certain S-scores (extremely high expenditure and SSR figures) at three standard deviations. This is to prevent a valid but extreme value from exerting an influence that far exceeds that of all other measures.

Missing Scores

Where an indicator of performance is absent, a process introduces substitute S-scores.

Institutional Table

The Institutional Table ranks institutions according to their performance in the subject tables, but considers two other factors when calculating overall performance. Firstly, the number of students in a department influences the extent to which that department's Total S-score contributes to the institution's overall score and secondly, the number of institutions included in the subject table also determines the extent to which a department can affect the institutional table.

| Measure | Description | Weighting |
| --- | --- | --- |
| Student satisfaction | Simple average of responses (% agree) to the seven sections of the 2013 National Student Survey. | 1.5 |
| Research quality | Grades from RAE 2008. Scoring mirrors the process used to distribute research funding in England. 4*-rated work is weighted by a factor of three and 3* work by a factor of one. The measure makes use of contextual data provided by HESA regarding the proportion of eligible staff submitted to RAE 2008. | 1.5 |
| *Entry standards | Average UCAS score tariff of new students under 21. Scores represent what students achieved and not what the University requested. Zero scores have been removed from the analysis. | 1.0 |
| *Student:staff ratio | Scores represent the average number of students to each member of academic staff (apart from those purely engaged in research). | 1.0 |
| Services and Facilities Spend | Amount spent per student on facilities, including library and computing resources, averaged over two years (HESA 10/11 & 11/12). | 1.0 |
| Completion | Percentage of students predicted to finish their studies or transfer to another HE institution, based on HESA performance indicators published March 2013 (2010/11 cohort). | 1.0 |
| *Good Honours | Percentage of graduates achieving a first or upper second class degree as a proportion of graduates with a classified degree (11/12). Enhanced first degrees (e.g. MEng) are treated as a first/upper second degree. | 1.0 |
| *Graduate Prospects | Percentage of graduates who take up graduate-level employment or further study within six months of graduation (DLHE 2011/12, 2012 graduates). | 1.0 |

Table 2. Summary of measures used to compile the Times-Sunday Times Good University Guide

Detailed Descriptors

In building the institutional table, the indicators were combined using a z-score transformation and the totals were transformed to a scale with 1000 for the top score. For entry standards, student-staff ratios, First and 2:1s and graduate prospects the score was adjusted for subject mix. The detailed definitions of the indicators are given below.

Student Satisfaction

The percentages of positive responses (Agree & Definitely Agree) in each of the six question areas (Teaching, Assessment & Feedback, Academic Support, Organisation & Management, Learning Resources and Personal Development) plus the Overall Satisfaction question were combined to provide a composite score. Source: 2013 National Student Survey.

Research Quality

Overall quality of research based on the 2008 Research Assessment Exercise.
The output of the RAE gave each institution a profile in the following categories: 4* world-leading, 3* internationally excellent, 2* internationally recognised, 1* nationally recognised and unclassified. The Funding Bodies decided to direct more funds to the very best research by applying weightings. Those adopted by HEFCE (the funding council for England) for 2012/13 funding are used in the tables: 4* is weighted by a factor of 3 and 3* is weighted by a factor of 1. Outputs of 2*, 1* and unclassified carry zero weight. Estimations of the eligible staff for each university were made using contextual data provided by HESA. The score is presented as a percentage of the maximum possible score of 3. Source: Higher Education Funding Council for England (HEFCE) and HESA.

Entry Standards

Mean tariff point scores on entry for first year, first degree students under 21 years of age based on A and AS Levels and Highers and Advanced Highers and other equivalent qualifications (e.g. International Baccalaureate). Entrants with zero tariffs were excluded from the calculation. Source: HESA, 2011/12.

Graduate Prospects

Destinations of full-time first degree UK domiciled leavers. The indicator is based upon the activity of leavers 6 months after graduation and whether they entered professional or non-professional employment or entered graduate level further study. The professional employment marker is derived from the latest Standard Occupational Classification (SOC2010) codes. The data were derived from the HESA Destination of Leavers from HE (DLHE) Record. Source: HESA, 2012/13 based on 2012 graduates.

First and 2:1s

The number of students who graduated with a first or upper second class degree as a proportion of the total number of graduates with classified degrees. Enhanced first degrees, such as an MEng gained after a four-year engineering course, were treated as equivalent to a first or upper second. Source: HESA, 2011/12.
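The Research Quality weighting described earlier in this section (4* weighted by 3, 3* by 1, everything else by zero, expressed as a percentage of the maximum of 3) can be illustrated with a short Python sketch. The function name and the example profile are hypothetical, and the adjustment for the proportion of eligible staff submitted is deliberately left out because the source does not give its formula.

```python
# Weightings stated in the text; 2*, 1* and unclassified carry zero weight.
RAE_WEIGHTS = {"4*": 3.0, "3*": 1.0, "2*": 0.0, "1*": 0.0, "unclassified": 0.0}
MAX_SCORE = 3.0  # reached when the whole profile is rated 4*


def research_quality_percent(profile: dict[str, float]) -> float:
    """Weighted RAE profile as a percentage of the maximum possible score.

    `profile` maps each RAE category to its share of the institution's
    research, with shares expressed as fractions that sum to 1.
    """
    if abs(sum(profile.values()) - 1.0) > 1e-6:
        raise ValueError("profile shares must sum to 1")
    weighted = sum(RAE_WEIGHTS[grade] * share for grade, share in profile.items())
    return 100.0 * weighted / MAX_SCORE


# Hypothetical profile, not taken from any real institution.
example_profile = {"4*": 0.25, "3*": 0.45, "2*": 0.20, "1*": 0.05, "unclassified": 0.05}
example_score = research_quality_percent(example_profile)
```

For this made-up profile the weighted score is 3 × 0.25 + 1 × 0.45 = 1.2, which is 40% of the maximum of 3.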
Completion Rates

Percentage of students projected to complete their degree, including students who transfer to other institutions as a proportion of known data. The measure used in the table projects what proportion of students will eventually gain a degree and what proportion will leave their current university or college but transfer into higher education, and is presented as a proportion of students with known data. Source: HESA Performance Indicators published March 2013 (table T5 - uses 2010/11 entrant cohort).

Student-staff ratio

The number of students at each institution as defined in the HESA Session HE and FE populations as an FTE (full-time equivalent) divided by the number of staff FTE based on academic staff including Teaching Only and Teaching & Research staff but excluding Research Only staff. Source: HESA, 2011/12.

Services and Facilities Spend

A two-year average of expenditure on academic services and staff & student facilities, divided by the total number of full time equivalent students. Source: HESA, 2010/11 and 2011/12.

| Measure | Description | Weighting |
| --- | --- | --- |
| *Student satisfaction | The average satisfaction score (% agree) for all sections of the 2012 NSS (except learning resources). | 1.5 |
| Research assessment | Grade point average from RAE 2008, weighted across the institution by department size. | 1.5 |
| *Entry standards | The average UCAS tariff score of new students (2011/12). | 1.0 |
| *Student:staff ratio | Number of students per member of teaching staff (2011/12). | 1.0 |
| Academic services spend | The expenditure per student on all academic services (averaged over three years 2009/10-2011/12). | 1.0 |
| Facilities spend | The expenditure per student on staff and student facilities (averaged over three years 2009/10-2011/12). | 1.0 |
| *Good honours | The percentage of graduates achieving a first or upper second class honours degree (2011/12). | 1.0 |
| *Graduate prospects | Percentage of graduates who take up graduate employment or further study (DLHE 2011/12). | 1.0 |
| Completion | Percentage of students expected to complete their course or transfer to another institution. | 1.0 |

Table 3. Summary of measures used to compile the Complete University Guide 2014

Detailed Descriptors

Institutional Table

To create the institutional table, a Z-transformation was applied to each measure to create a score for that measure. The Z-scores on each measure were then weighted by 1.5 for Student Satisfaction and Research Assessment and 1.0 for the rest and summed to give a total score for the University. Finally, these total scores were transformed to a scale where the top score was set at 1,000 with the remainder being a proportion of the top score. This scaling does not affect the overall ranking but it avoids giving any university a negative overall score. In addition, some measures (Student Satisfaction, Entry Standards, Student/Staff Ratio, Good Honours, and Graduate Prospects) have been adjusted to take account of the subject mix at the institution.

Student Satisfaction (maximum score 5.00)

A measure of the view of students of the teaching quality at the university taken from the National Student Survey 2012. The average satisfaction score for all questions except the three about learning resources was calculated and then adjusted for the subject mix at the university.

The survey is a measure of student opinion, not a direct measure of quality. It may therefore be influenced by a variety of biases, such as the effect of prior expectations. A top-notch university expected to deliver really excellent teaching could score lower than a less good university which, while offering lower quality teaching, nonetheless does better than students expect from it.

Research Assessment (maximum score 4.00)

A measure of the average quality of the research undertaken in the university from the 2008 Research Assessment Exercise undertaken by the funding councils.
For the research assessment measure, the categories 4* to 1* were given a numerical value of 4 to 1 which allowed a grade point average to be calculated. An overall average was then calculated weighted according to the number of staff in each department. A measure of research intensity (the proportion of staff in the university undertaking the research that contributed to the research quality rating) was calculated. About half of universities agreed to release this information; for the other half it was estimated using a statistical transformation of HESA staff data. The two measures were combined in a ratio of 2:1 with research quality more heavily weighted.

Entry Standards (maximum score n/a)

The average UCAS tariff score of new students (HESA data for 2011–12). Each student's examination results were converted to a numerical score (A level A=120, B=100 ... E=40, etc; Scottish Highers A=72, B=60, etc) and added up to give a score total. HESA then calculates an average for all students at the university. The results were adjusted to take account of the subject mix at the university.

Student–Staff Ratio (maximum score n/a)

A measure of the average staffing level in the university calculated using HESA data for 2011–12. A student–staff ratio (i.e. the number of students divided by the number of staff) was calculated in a way designed to take account of different patterns of staff employment in different universities. Again, the results were adjusted for subject mix.

Academic Services Spending (maximum score n/a)

The expenditure per student on all academic services (HESA data for 2009–10, 2010–11, and 2011–12). A university's expenditure on library and computing facilities (books, journals, staff, computer hardware and software, but not buildings), museums, galleries and observatories was divided by the number of full-time equivalent students.
Libraries and information technology are becoming increasingly integrated (many universities have a single Department of Information Services encompassing both) and so the two areas of expenditure have both been included alongside any other academic services. Expenditure over three years was averaged to allow for uneven expenditure. Some universities are the location for major national facilities, such as the Bodleian Library in Oxford and the national computing facilities in Bath and Manchester. The local and national expenditure is very difficult to separate and so these universities will tend to score more highly on this measure.

Facilities Spending (maximum score n/a)

The expenditure per student on staff and student facilities (HESA data for 2009–10, 2010–11, and 2011–12). A university's expenditure on student facilities (sports, careers services, health, counselling, etc) was divided by the number of full-time equivalent students. Expenditure over three years was averaged to allow for uneven expenditure.

Good Honours (maximum score 100.0)

The percentage of graduates achieving a first or upper second class honours degree (HESA data for 2011–12). Enhanced first degrees, such as an MEng awarded after a four-year engineering course, were treated as equivalent to a first or upper second for this purpose. The results were then adjusted to take account of the subject mix at the university.

Graduate Prospects (maximum score 100.0)

A measure of the employability of a university's graduates (HESA data for 2010–11). The number of graduates who take up employment or further study divided by the total number of graduates with a known destination expressed as a percentage. Only employment in an area that normally recruits graduates was included. The results were then adjusted to take account of the subject mix at the university.

Completion (maximum score 100.0)

A measure of the completion rate of those studying at the university based on HESA performance indicators, based on data for 2011–12 and earlier years. HESA calculate the expected outcomes for a cohort of students based on what happened to students in the current year. The figures in the tables show the percentage of students who were expected to complete their course or transfer to another institution.
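The first two steps of the Complete University Guide institutional table described earlier (a Z-transformation of each measure, then a weighted sum using 1.5 for Student Satisfaction and Research Assessment and 1.0 for the rest) can be sketched in Python as follows. This is an illustration under stated assumptions, not the guide's own implementation: the measure keys, the function names, the three-university data and the choice of the population standard deviation are all hypothetical. The final rescaling to a top score of 1,000 is not reproduced, because the source does not detail how negative totals are handled, and the subject-mix adjustment and the direction of the student–staff ratio are likewise left out.

```python
from statistics import mean, pstdev

# Weights stated in the text; every other measure is weighted 1.0.
WEIGHTS = {"student_satisfaction": 1.5, "research_assessment": 1.5}
DEFAULT_WEIGHT = 1.0


def z_scores(values: list[float]) -> list[float]:
    """Z-transform one measure (assumption: population standard deviation)."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # no spread, so no university stands out
    return [(v - mu) / sigma for v in values]


def weighted_totals(table: dict[str, list[float]]) -> list[float]:
    """Sum the weighted Z-scores per university.

    `table` maps a measure name to its raw values, one per university,
    listed in the same university order for every measure.
    """
    n_universities = len(next(iter(table.values())))
    totals = [0.0] * n_universities
    for measure, values in table.items():
        weight = WEIGHTS.get(measure, DEFAULT_WEIGHT)
        for i, z in enumerate(z_scores(values)):
            totals[i] += weight * z
    return totals


# Made-up data for three universities (indices 0, 1, 2).
example = {
    "student_satisfaction": [4.0, 4.2, 3.8],
    "research_assessment": [2.0, 3.0, 2.5],
    "entry_standards": [300.0, 450.0, 375.0],
}
totals = weighted_totals(example)
ranking = sorted(range(len(totals)), key=lambda i: totals[i], reverse=True)
```

Because Z-scores are centred on zero, some of the summed totals in this example are negative, which is the situation the final rescaling step in the source is said to avoid.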