18.05 Problem Set 6, Spring 2014 Solutions

Problem 1. (10 pts.) (a) Throughout this problem we will let x be the data of

140 heads out of 250 tosses. We have 140/250 = .56. Computing the likelihoods:

$$p(x|H_0) = \binom{250}{140}(.5)^{250}, \qquad p(x|H_1) = \binom{250}{140}(.56)^{140}(.44)^{110}$$

which yields Bayes factor

$$\frac{p(x|H_0)}{p(x|H_1)} = \frac{(.5)^{250}}{(.56)^{140}(.44)^{110}} = 0.16458.$$

(Actually, we computed the log Bayes factor since it is numerically more stable. Then

we exponentiated to get the Bayes factor.)
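The log-scale computation mentioned above can be sketched in Python as follows. This is an illustrative sketch, not the posted R code; the variable names are assumptions made for this example.

```python
import math

heads, tosses = 140, 250
tails = tosses - heads

# Log-likelihoods under H0 (theta = 0.5) and H1 (theta = 0.56).
# The binomial coefficient is common to both and cancels in the ratio.
log_lik_h0 = tosses * math.log(0.5)
log_lik_h1 = heads * math.log(0.56) + tails * math.log(0.44)

# Work on the log scale first, then exponentiate.
log_bayes_factor = log_lik_h0 - log_lik_h1
bayes_factor = math.exp(log_bayes_factor)

print(f"log Bayes factor = {log_bayes_factor:.5f}")
print(f"Bayes factor     = {bayes_factor:.5f}")
```

Subtracting logs avoids forming the very small numbers (.5)^250 and (.56)^140 (.44)^110 directly.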

Since we chose the probability 140/250 of H1 to exactly match the data, it is not surprising that the probability of the data given H1 is much greater than the probability given H0. Said differently, the data will pull our prior towards one centered at 140/250.

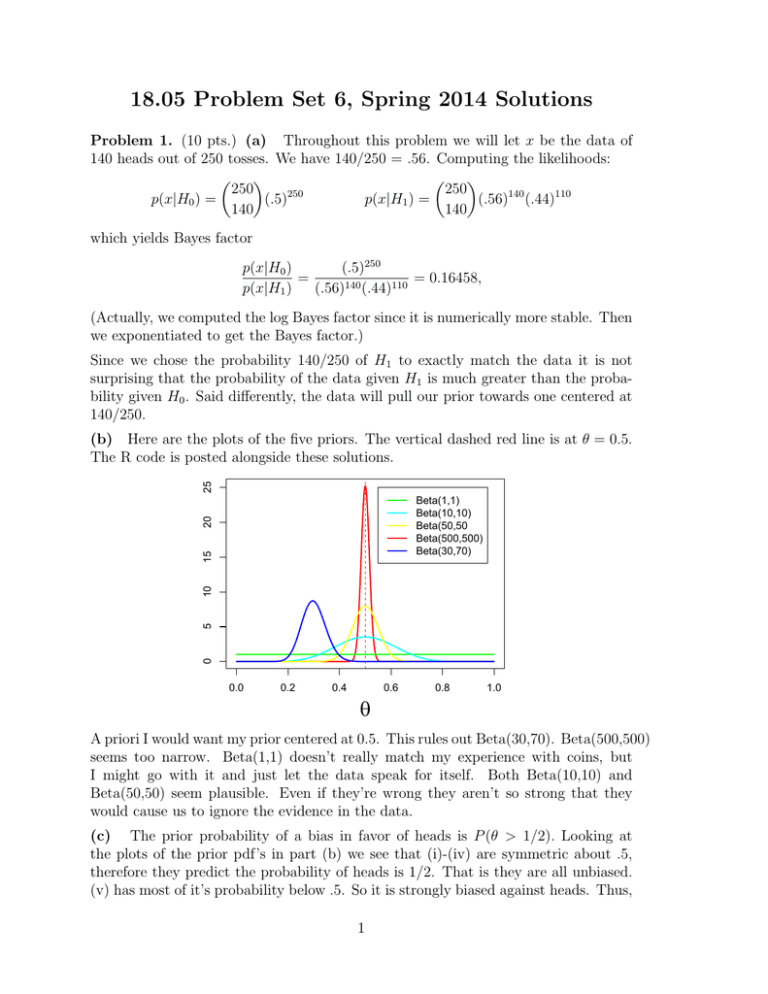

(b) Here are the plots of the five priors. The vertical dashed red line is at θ = 0.5.

The R code is posted alongside these solutions.

[Figure: pdfs of the five priors Beta(1,1), Beta(10,10), Beta(50,50), Beta(500,500), and Beta(30,70), plotted against θ on [0, 1].]

A priori I would want my prior centered at 0.5. This rules out Beta(30,70). Beta(500,500)

seems too narrow. Beta(1,1) doesn’t really match my experience with coins, but

I might go with it and just let the data speak for itself. Both Beta(10,10) and

Beta(50,50) seem plausible. Even if they’re wrong they aren’t so strong that they

would cause us to ignore the evidence in the data.

(c) The prior probability of a bias in favor of heads is P(θ > 1/2). Looking at the plots of the prior pdf's in part (b) we see that (i)-(iv) are symmetric about .5, therefore they predict the probability of heads is 1/2. That is, they are all unbiased. (v) has most of its probability below .5, so it is strongly biased against heads. Thus, the ranking in order of bias from least to greatest is (v) followed by a four-way tie between (i)-(iv).

(d) All of the prior pdf's are beta distributions, so they have the form

$$f(\theta) = c_1\,\theta^{a-1}(1-\theta)^{b-1}.$$

For a fixed hypothesis θ the likelihood function (given the data x) is

$$p(x|\theta) = \binom{250}{140}\theta^{140}(1-\theta)^{110}.$$

Thus the posterior pdf is

$$f(\theta|x) = c_2\,\theta^{140+a-1}(1-\theta)^{110+b-1} \sim \text{beta}(140+a,\ 110+b).$$

So the five posterior distributions (i)-(v) are beta(141, 111), beta(150, 120), beta(190, 160), beta(640, 610), and beta(170, 180).

Here are the plots of the five posteriors.

[Figure: pdfs of the five posteriors, labeled by their priors Beta(1,1), Beta(10,10), Beta(50,50), Beta(500,500), and Beta(30,70), plotted against θ on [0, 1].]

Each prior is centered on a value of θ. The sharpness of the peak is a measure of the

prior ‘commitment’ to this value. So prior (iv) is strongly committed to θ = .5, but

prior (ii) is only weakly committed and (i) is essentially uncommitted. The effect of

the data is to pull the center of the prior towards the data mean of .56. That is, it

averages the center of the prior and the data mean. The stronger the prior belief the

less the data pulls the center towards .56. So prior (iv) is only moved a little and

prior (i) is moved almost all the way to .56. Priors (ii) and (iii) are intermediate.

Prior (v) is centered at θ = .3. The data moves the center a long way towards .56.

But, since it starts so much farther from .56 than the other priors, the posterior is

still centered the farthest from .56.
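The averaging described above can be made concrete with a short Python sketch. It uses the mean of each beta distribution as the measure of "center", which is an assumption of this example (the discussion above does not say whether center means the mean or the peak). The names are illustrative.

```python
# Prior parameters (a, b) for the five priors (i)-(v).
priors = {
    "(i)": (1, 1),
    "(ii)": (10, 10),
    "(iii)": (50, 50),
    "(iv)": (500, 500),
    "(v)": (30, 70),
}
heads, tails = 140, 110
data_mean = heads / (heads + tails)  # 0.56

posterior_means = {}
for label, (a, b) in priors.items():
    prior_mean = a / (a + b)
    # Conjugate update: beta(a, b) -> beta(a + heads, b + tails).
    post_mean = (a + heads) / (a + b + heads + tails)
    # The same value written as a weighted average of the prior mean
    # and the data mean; a larger a + b puts more weight on the prior.
    weight = (a + b) / (a + b + heads + tails)
    averaged = weight * prior_mean + (1 - weight) * data_mean
    assert abs(post_mean - averaged) < 1e-12
    posterior_means[label] = post_mean
    print(f"{label}: prior mean {prior_mean:.3f}, "
          f"weight on prior {weight:.3f}, posterior mean {post_mean:.4f}")
```

The weight on the prior is (a + b)/(a + b + 250), so Beta(500,500) keeps most of its weight while Beta(1,1) keeps almost none.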

(e) For each of the five posterior distributions, we compute P(θ > 0.5|x):

P(i)(θ > 0.5|x) = 1 - pbeta(.5, 141, 111) = 0.9710

P(ii)(θ > 0.5|x) = 1 - pbeta(.5, 150, 120) = 0.9664

P(iii)(θ > 0.5|x) = 1 - pbeta(.5, 190, 160) = 0.9459

P(iv)(θ > 0.5|x) = 1 - pbeta(.5, 640, 610) = 0.8020

P(v)(θ > 0.5|x) = 1 - pbeta(.5, 170, 180) = 0.2963

This is consistent with the plot in d), as the posterior computed from the uniform

prior has the most density past 0.5 while the posterior computed from prior (v) has

the least.
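For readers without R, the tail probabilities can be approximated in plain Python. This is a sketch only: it swaps R's `pbeta` for a composite Simpson's rule applied to the beta density, and the function names `beta_pdf` and `upper_tail` are hypothetical helpers written for this example.

```python
import math

def beta_pdf(theta, a, b):
    """Beta(a, b) density for a, b > 1, computed on the log scale."""
    if theta <= 0.0 or theta >= 1.0:
        return 0.0  # correct at the endpoints only because a, b > 1
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm
                    + (a - 1) * math.log(theta)
                    + (b - 1) * math.log(1 - theta))

def upper_tail(a, b, lower=0.5, steps=2000):
    """Approximate P(theta > lower) by Simpson's rule on [lower, 1]."""
    h = (1.0 - lower) / steps  # steps must be even
    total = beta_pdf(lower, a, b) + beta_pdf(1.0, a, b)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2
        total += weight * beta_pdf(lower + i * h, a, b)
    return total * h / 3

posteriors = {
    "(i)": (141, 111),
    "(ii)": (150, 120),
    "(iii)": (190, 160),
    "(iv)": (640, 610),
    "(v)": (170, 180),
}
tail_probs = {label: upper_tail(a, b) for label, (a, b) in posteriors.items()}
for label, prob in tail_probs.items():
    print(f"P{label}(theta > 0.5 | x) ~ {prob:.4f}")
```

The results should agree with the `pbeta` values above to within the accuracy of the numerical integration.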

(f) Step 1. Since the intervals are small we can use the relation

probability ≈ density · Δθ.

Each interval has width Δθ = 0.02, so

$$P_{(i)}(H_0|x) = P_{(i)}(0.49 \le \theta \le 0.51 \mid x) \approx f_{(i)}(0.5|x) \cdot 0.02$$

and

$$P_{(i)}(H_1|x) = P_{(i)}(0.55 \le \theta \le 0.57 \mid x) \approx f_{(i)}(0.56|x) \cdot 0.02.$$

So the posterior odds (using prior (i)) of H1 versus H0 are approximately

$$\frac{P_{(i)}(H_1|x)}{P_{(i)}(H_0|x)} \approx \frac{f_{(i)}(0.56|x)}{f_{(i)}(0.5|x)} = \frac{\texttt{dbeta(.56,141,111)}}{\texttt{dbeta(.5,141,111)}} = \frac{c\,(0.56)^{140}(0.44)^{110}}{c\,(0.5)^{140}(0.5)^{110}} \approx 6.07599.$$

By similar reasoning, the posterior odds (using prior (iv)) of H1 versus H0 are approximately 0.00437.
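The density ratios can be checked with a few lines of Python. This sketch relies on the fact that the beta normalizing constant cancels in the ratio, so only the kernel θ^(a-1) (1-θ)^(b-1) is needed; the helper names are assumptions of this example.

```python
import math

def log_beta_kernel(theta, a, b):
    """Log of theta^(a-1) * (1-theta)^(b-1), the unnormalized beta density."""
    return (a - 1) * math.log(theta) + (b - 1) * math.log(1 - theta)

def posterior_odds(a, b, theta1=0.56, theta0=0.5):
    """Approximate odds of H1 (theta near theta1) versus H0 (theta near theta0).

    Both intervals have the same width, so the width cancels as well.
    """
    return math.exp(log_beta_kernel(theta1, a, b) - log_beta_kernel(theta0, a, b))

odds_i = posterior_odds(141, 111)    # posterior from prior (i)
odds_iv = posterior_odds(640, 610)   # posterior from prior (iv)
print(f"prior (i):  odds of H1 vs H0 ~ {odds_i:.5f}")
print(f"prior (iv): odds of H1 vs H0 ~ {odds_iv:.5f}")
```

With prior (i) the odds are the reciprocal of the Bayes factor from part (a), since the uniform prior leaves the likelihood ratio unchanged.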

Problem 2. (10 pts.) Let A be the event that Alice is collecting tickets and B the event that Bob is collecting tickets. Denoting our data as D, we have the likelihoods

$$P(D|A) = \frac{10^{12+10+11+4+11}\,e^{-50}}{12!\,10!\,11!\,4!\,11!}, \qquad P(D|B) = \frac{15^{12+10+11+4+11}\,e^{-75}}{12!\,10!\,11!\,4!\,11!}.$$

Moreover, we are given prior odds $O(A) = \dfrac{P(A)}{P(B)} = \dfrac{1}{10}$. Thus, our posterior odds are

$$O(A|D) = O(A)\,\frac{P(D|A)}{P(D|B)} = \frac{1}{10}\,e^{25}\left(\frac{10}{15}\right)^{48} \approx 25.408.$$

Note that the Bayes factor is about 250.
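As a numerical check of the odds computation, here is a short Python sketch. The daily counts and rates are read off the likelihood formulas above; the variable names are illustrative.

```python
import math

counts = [12, 10, 11, 4, 11]   # exponents and factorials in the likelihoods
total = sum(counts)            # 48
days = len(counts)             # 5

rate_alice, rate_bob = 10, 15  # Poisson rates under A and B

# log P(D|A) - log P(D|B): the factorial terms cancel.
log_bayes_factor = (total * math.log(rate_alice / rate_bob)
                    - days * (rate_alice - rate_bob))
bayes_factor = math.exp(log_bayes_factor)

prior_odds = 1 / 10
posterior_odds = prior_odds * bayes_factor

print(f"Bayes factor   ~ {bayes_factor:.2f}")
print(f"posterior odds ~ {posterior_odds:.3f}")
```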

Problem 3. (10 pts.) (a) We have a flat prior pdf f(θ) = 1. For a single data value x, our likelihood function is:

$$f(x|\theta) = \begin{cases} 0 & \text{if } \theta < x \\ \dfrac{1}{\theta} & \text{if } x \le \theta \le 1 \end{cases}$$

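Thus our table is

| hyp. | prior $f(\theta)\,d\theta$ | likelihood $f(x \mid \theta)$ | unnormalized posterior | posterior $f(\theta \mid x)\,d\theta$ |
|---|---|---|---|---|
| $\theta < x$ | $d\theta$ | $0$ | $0$ | $0$ |
| $x \le \theta \le 1$ | $d\theta$ | $\dfrac{1}{\theta}$ | $\dfrac{d\theta}{\theta}$ | $\dfrac{c}{\theta}\,d\theta$ |
| Tot. | $1$ | | $T$ | $1$ |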

The normalizing constant c must make the total posterior probability 1, so

$$c\int_x^1 \frac{d\theta}{\theta} = 1 \;\Rightarrow\; c = -\frac{1}{\ln(x)}.$$

Note that since x ≤ 1, we have c = −1/ln(x) > 0.

Here are plots for x = .2 (c = 0.621) and x = .5 (c = 1.443).

[Figure: posterior pdfs f(θ|x = .2) and f(θ|x = .5), plotted against θ on [0, 1].]

(b) Notice that θ cannot be less than any one of x1, . . . , xn. So the likelihood function is given by

$$f(x_1,\dots,x_n|\theta) = \begin{cases} 0 & \text{if } \theta < \max\{x_1,\dots,x_n\} \\ \dfrac{1}{\theta^n} & \text{if } \max\{x_1,\dots,x_n\} \le \theta \le 1. \end{cases}$$

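Let xM = max{x1, . . . , xn}. So our table is

| hyp. | prior $f(\theta)\,d\theta$ | likelihood $f(\text{data} \mid \theta)$ | unnormalized posterior | posterior $f(\theta \mid \text{data})\,d\theta$ |
|---|---|---|---|---|
| $\theta < x_M$ | $d\theta$ | $0$ | $0$ | $0$ |
| $x_M \le \theta \le 1$ | $d\theta$ | $\dfrac{1}{\theta^n}$ | $\dfrac{d\theta}{\theta^n}$ | $\dfrac{c}{\theta^n}\,d\theta$ |
| Tot. | $1$ | | $T$ | $1$ |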

The normalizing constant c must make the total posterior probability 1, so

$$c\int_{x_M}^1 \frac{d\theta}{\theta^n} = 1 \;\Rightarrow\; c = \frac{n-1}{x_M^{1-n} - 1}.$$

The posterior pdf depends only on n and xM , therefore the data (.1,.5) and (.5,.5)

have the same posteriors. Here are the plots of the posteriors for the given data.

[Figure: posterior pdfs for data = (.1,.5) or (.5,.5) and for data = (.1,...,.5), plotted against θ on [0, 1].]
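The normalizing constants from parts (a) and (b) can be evaluated with a small Python sketch. The function names are hypothetical helpers for this example; the n = 1 case uses the logarithmic formula from part (a) and n ≥ 2 uses the formula from part (b).

```python
import math

def normalizing_constant(n, x_max):
    """Return c with c * integral_{x_max}^{1} theta^(-n) d(theta) = 1.

    Assumes 0 < x_max < 1 and n is a positive integer.
    """
    if n == 1:
        return -1.0 / math.log(x_max)        # part (a)
    return (n - 1) / (x_max ** (1 - n) - 1)  # part (b)

def posterior_pdf(theta, n, x_max):
    """Posterior density: zero below x_max, c / theta^n on [x_max, 1]."""
    if theta < x_max or theta > 1:
        return 0.0
    return normalizing_constant(n, x_max) / theta ** n

print(normalizing_constant(1, 0.2))  # single observation x = .2
print(normalizing_constant(1, 0.5))  # single observation x = .5
print(normalizing_constant(2, 0.5))  # two observations with maximum .5
print(normalizing_constant(5, 0.5))  # five observations with maximum .5
```

Because only n and the maximum enter, any two data sets with the same n and the same maximum give the same constant and the same posterior.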

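(c) We now have xM = 0.5 so from part (b) the posterior density is

$$f(\theta|x_1,\dots,x_5) = \begin{cases} 0 & \text{if } \theta < .5 \\ \dfrac{c}{\theta^5} & \text{if } .5 \le \theta \le 1, \end{cases}$$

where $c = \dfrac{4}{.5^{-4} - 1} = \dfrac{4}{15}$.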

Now, let x be the amount by which Jane is late for the sixth class. The likelihood is

$$f(x|\theta) = \begin{cases} 0 & \text{if } \theta < x \\ \dfrac{1}{\theta} & \text{if } \theta \ge x \end{cases}$$

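We have the posterior predictive probability

$$f(x|x_1,\dots,x_5) = \int f(x|\theta)\,f(\theta|x_1,\dots,x_5)\,d\theta = \begin{cases} \displaystyle\int_{.5}^{1} \frac{1}{\theta}\cdot\frac{4}{15\,\theta^5}\,d\theta = \left.-\frac{4}{75}\,\theta^{-5}\right|_{.5}^{1} = \frac{124}{75} & \text{if } 0 \le x < .5 \\[2ex] \displaystyle\int_{x}^{1} \frac{1}{\theta}\cdot\frac{4}{15\,\theta^5}\,d\theta = \left.-\frac{4}{75}\,\theta^{-5}\right|_{x}^{1} = \frac{4}{75}\left(x^{-5} - 1\right) & \text{if } .5 \le x \le 1 \end{cases}$$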

Thus the posterior predictive probability that x ≤ 0.5 is

$$P(x \le .5|x_1,\dots,x_5) = \int_0^{.5} f(x|x_1,\dots,x_5)\,dx = \int_0^{.5} \frac{124}{75}\,dx = \frac{62}{75} = 0.82667.$$
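The posterior predictive density can be sanity-checked in Python. This sketch uses exact fractions for the constant part and a simple midpoint rule for the rest; the midpoint rule and the names below are choices made for this example, not part of the solution itself.

```python
from fractions import Fraction

C = Fraction(4, 15)      # normalizing constant from part (c)
N = 5                    # number of observed lateness values
X_MAX = Fraction(1, 2)   # largest observed value

def predictive_density(x):
    """f(x | x1..x5) = (C/N) * (lower^-N - 1) with lower = max(x, X_MAX)."""
    lower = max(x, X_MAX)
    return C / N * (lower ** (-N) - 1)

# The density is constant on [0, .5), so the probability is exact.
prob_le_half = X_MAX * predictive_density(0)
print(predictive_density(0), prob_le_half, float(prob_le_half))

# Midpoint-rule estimate of the remaining mass on [.5, 1].
steps = 10000
h = 0.5 / steps
upper_mass = sum(predictive_density(0.5 + (i + 0.5) * h)
                 for i in range(steps)) * h
print(float(prob_le_half) + upper_mass)  # should be close to 1
```

The two pieces summing to about 1 confirms that the piecewise formula is a proper density.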

(d) The graphs or the formula in part (a) show that f (θ|x) is decreasing for θ ≥ x,

so the MAP is when θ = x.

(e) Extra credit: 5 points

(i) From part (a) we have the posterior pdf f(θ|x). The conditional expectation is

$$E(\theta|x) = \int \theta\,f(\theta|x)\,d\theta = \int_x^1 \theta\cdot\frac{c}{\theta}\,d\theta = c(1-x) = -\frac{1-x}{\ln(x)}.$$

(ii) After observing x, we know that θ ≥ x, and as a result the conditional expectation

E[θ|x] ≥ x. So the conditional expectation estimator is always at least as big as the

MAP estimator. However, the MAP estimator is precisely x, the amount by which

Jane is late on the first class. In this context, the MAP is not reasonable as it suggests

that on the first class, Jane arrived as late as possible and that in the future, she will

arrive less than x hours late.

[Figure: the MAP estimate and the conditional expectation, plotted as functions of x on [0, 1].]
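The comparison between the two estimators can be tabulated with a brief Python sketch. The function names are illustrative, and the formulas are the ones derived in parts (d) and (e)(i) for a single observation 0 < x < 1.

```python
import math

def map_estimate(x):
    """MAP estimate from part (d): the posterior peaks at theta = x."""
    return x

def conditional_expectation(x):
    """E(theta | x) = -(1 - x) / ln(x), valid for 0 < x < 1."""
    return -(1 - x) / math.log(x)

for x in (0.1, 0.2, 0.5, 0.8, 0.9):
    print(f"x = {x:.1f}: MAP = {map_estimate(x):.3f}, "
          f"E(theta|x) = {conditional_expectation(x):.3f}")
```

For every x in the table the conditional expectation sits above the MAP estimate, and the gap shrinks as x approaches 1.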

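Problem 4. (10 pts.) (a) Leaving the scale factors as letters our table is

| hyp. | prior $f(\theta) \sim N(5, 9)$ | likelihood $f(x \mid \theta) \sim N(\theta, 4)$ | posterior $f(\theta \mid x)$ |
|---|---|---|---|
| $\theta$ | $c_1\,e^{-(\theta-5)^2/18}\,d\theta$ | $c_2\,e^{-(6-\theta)^2/8}$ | $c\,\exp\left(-\dfrac{(\theta-5)^2}{18} - \dfrac{(6-\theta)^2}{8}\right)d\theta$ |
| Tot. | $1$ | | $1$ |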

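All we need is some algebraic manipulations of the exponent in the posterior:

$$\begin{aligned} -\frac{(\theta-5)^2}{18} - \frac{(6-\theta)^2}{8} &= -\frac{1}{2}\left(\frac{\theta^2 - 12\theta + 36}{4} + \frac{\theta^2 - 10\theta + 25}{9}\right) \\ &= -\frac{1}{2}\left(\frac{13\theta^2 - 148\theta + 424}{36}\right) \\ &= -\frac{1}{2}\left(\frac{(\theta - 74/13)^2}{36/13}\right) + k \end{aligned}$$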

where k is a constant. Thus the posterior

$$f(\theta|x) \propto \exp\left(-\frac{(\theta - 74/13)^2}{2\cdot 36/13}\right).$$

This has the form of a pdf for $N\left(\dfrac{74}{13}, \dfrac{36}{13}\right)$. QED

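(b) We have $\mu_{\text{prior}} = 5$, $\sigma^2_{\text{prior}} = 9$, $\bar{x} = 6$, $\sigma^2 = 4$, $n = 4$. So we have

$$a = \frac{1}{9}, \quad b = 1, \quad a + b = \frac{10}{9} \;\Rightarrow\; \mu_{\text{post}} = \frac{5/9 + 6}{10/9} = 5.9, \quad \sigma^2_{\text{post}} = \frac{1}{10/9} = .9.$$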

[Figure: pdfs of the prior N(5,9) and the posterior N(5.9,.9), plotted against θ.]

After observing x1, . . . , x4, we see that the posterior mean is close to the data mean x̄ and the posterior variance is much smaller than the prior variance. The data has made us more certain about the location of θ.

(c) As more data is received n increases, so b increases, so the mean of the posterior

is closer to the data mean and the variance of the posterior decreases. Since the

variance goes down, we gain more certainty about the true value of θ.

(d) With no new data we are given the prior $f(\theta) \sim N(100, 15^2)$. For data x = score on the IQ test we have the likelihood $f(x|\theta) \sim N(\theta, 10^2)$. Using the update formulas we have $\mu_{\text{prior}} = 100$, $\sigma^2_{\text{prior}} = 15^2$, $\sigma^2 = 10^2$, $n = 1$. So a = 1/225, b = 1/100 and

(i) Randall, x = 80: $\mu_{\text{post}} = \dfrac{a\cdot 100 + b\cdot 80}{a+b} = 86.15$

(ii) Mary, x = 150: $\mu_{\text{post}} = \dfrac{a\cdot 100 + b\cdot 150}{a+b} = 134.62$

Regression towards the mean!
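The update formulas used in parts (a), (b), and (d) can be collected into one small Python function. This is a sketch under the conventions used in those parts, a = 1/σ²_prior and b = n/σ²; the function and argument names are assumptions of this example.

```python
def normal_update(mu_prior, var_prior, data_mean, var_data, n):
    """Normal-normal update with known data variance.

    Returns (posterior mean, posterior variance) using
    a = 1/var_prior, b = n/var_data.
    """
    a = 1 / var_prior
    b = n / var_data
    mu_post = (a * mu_prior + b * data_mean) / (a + b)
    var_post = 1 / (a + b)
    return mu_post, var_post

part_a = normal_update(5, 9, 6, 4, 1)          # one data value x = 6
part_b = normal_update(5, 9, 6, 4, 4)          # four values with mean 6
randall = normal_update(100, 225, 80, 100, 1)  # IQ score 80
mary = normal_update(100, 225, 150, 100, 1)    # IQ score 150

print("part (a):", part_a)
print("part (b):", part_b)
print("Randall :", randall)
print("Mary    :", mary)
```

Both IQ posterior means land between the observed score and the prior mean of 100, which is the regression towards the mean noted above.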

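(e) Extra credit: 5 points. This is essentially the same manipulation as in part (a). First suppose we have one data value x1; then

| hyp. | prior $f(\theta) \sim N(\mu_{\text{prior}}, \sigma^2_{\text{prior}})$ | likelihood $f(x_1 \mid \theta) \sim N(\theta, \sigma^2)$ | posterior $f(\theta \mid x_1)$ |
|---|---|---|---|
| $\theta$ | $c_1\,e^{-(\theta-\mu_{\text{prior}})^2/2\sigma^2_{\text{prior}}}\,d\theta$ | $c_2\,e^{-(x_1-\theta)^2/2\sigma^2}$ | $c\,\exp\left(-\dfrac{(\theta-\mu_{\text{prior}})^2}{2\sigma^2_{\text{prior}}} - \dfrac{(x_1-\theta)^2}{2\sigma^2}\right)d\theta$ |
| Tot. | $1$ | | $1$ |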

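All we need is some algebraic manipulations of the exponent in the posterior. Whenever we get a term not involving θ we just absorb it into the constant k1, k2 etc. Here $a = 1/\sigma^2_{\text{prior}}$ and $b = 1/\sigma^2$.

$$\begin{aligned} -\frac{(\theta-\mu_{\text{prior}})^2}{2\sigma^2_{\text{prior}}} - \frac{(x_1-\theta)^2}{2\sigma^2} &= -\frac{1}{2}\left(\frac{\theta^2 - 2\mu_{\text{prior}}\theta + \mu^2_{\text{prior}}}{\sigma^2_{\text{prior}}} + \frac{\theta^2 - 2x_1\theta + x_1^2}{\sigma^2}\right) \\ &= -\frac{1}{2}\left((a+b)\theta^2 - 2(a\mu_{\text{prior}} + bx_1)\theta\right) + k_1 \\ &= -\frac{1}{2}\,\frac{\left(\theta - \dfrac{a\mu_{\text{prior}} + bx_1}{a+b}\right)^2}{\dfrac{1}{a+b}} + k_2 \end{aligned}$$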

Thus the posterior

$$f(\theta|x_1) \propto \exp\left(-\frac{1}{2}\,\frac{\left(\theta - \dfrac{a\mu_{\text{prior}} + bx_1}{a+b}\right)^2}{\dfrac{1}{a+b}}\right).$$

This has the form of a pdf for $N\left(\dfrac{a\mu_{\text{prior}} + bx_1}{a+b}, \dfrac{1}{a+b}\right)$. This proves the formulas (1) when n = 1. The formulas when n > 1 are a simple consequence of updating one data point at a time using the formulas when n = 1.

Problem 5. (10 pts.) Censored data. We note that we assume that, given a particular die, the rolls are independent. Let x be the censored value on one roll. The Bayes factor for x is

$$\text{Bayes factor} = \frac{p(x|\text{4-sided})}{p(x|\text{6-sided})} = \begin{cases} \dfrac{3/4}{5/6} = 9/10 & \text{if } x = 0 \\[2ex] \dfrac{1/4}{1/6} = 3/2 & \text{if } x = 1 \end{cases}$$

Starting from the prior odds of 1, we multiply by the appropriate Bayes factor and get the posterior odds after rolls 1-5 are

$$\frac{3}{2} = 1.5, \quad \frac{27}{20} = 1.35, \quad \frac{81}{40} = 2.025, \quad \frac{243}{80} = 3.0375, \quad \frac{729}{160} = 4.5562$$

(b) In part (a) we saw the Bayes factor when x = 1 is 3/2. Since this is more than 1

it is evidence in favor of the 4-sided die. When x = 0 the Bayes factor is 9/10, which

is evidence in favor of the 6-sided die.

We saw this in part (a) because after every value of 1 the odds for the 4-sided die

went up and after the value of 0 the odds went down.
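The sequential odds update can be reproduced exactly with Python's `fractions` module. One assumption is labeled in the code: the censored sequence 1, 0, 1, 1, 1 is not stated in this solution and is inferred here from the listed odds (they rise after rolls 1, 3, 4, 5 and fall after roll 2).

```python
from fractions import Fraction

# Bayes factor p(x | 4-sided) / p(x | 6-sided) for each censored value.
bayes_factor = {
    0: Fraction(3, 4) / Fraction(5, 6),  # 9/10
    1: Fraction(1, 4) / Fraction(1, 6),  # 3/2
}

# ASSUMPTION: censored rolls inferred from the listed posterior odds.
censored_rolls = [1, 0, 1, 1, 1]

odds = Fraction(1)  # prior odds of 4-sided versus 6-sided
history = []
for x in censored_rolls:
    odds *= bayes_factor[x]  # multiply by the Bayes factor for this roll
    history.append(odds)

for roll, value in enumerate(history, start=1):
    print(f"after roll {roll}: {value} = {float(value):.4f}")
```

Using exact fractions keeps each intermediate value in the same form as the list of odds in part (a).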

MIT OpenCourseWare

http://ocw.mit.edu

18.05 Introduction to Probability and Statistics

Spring 2014

For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms.