1 The Gaussian approximation to the binomial

We start with the probability of ending up $j$ steps from the origin when taking a total of $N$ steps, given by

$$P_j = \frac{1}{2^N}\,\frac{N!}{\left(\frac{N+j}{2}\right)!\,\left(\frac{N-j}{2}\right)!} \tag{1}$$

Taking the logarithm of both sides, we have

$$\ln P_j = \ln N! - N\ln 2 - \ln\left(\frac{N+j}{2}\right)! - \ln\left(\frac{N-j}{2}\right)!$$
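As a quick numerical sanity check of Eq. (1) and its logarithm, the following minimal Python sketch evaluates both forms. The function names `walk_probability` and `log_walk_probability` are illustrative and do not come from the notes. The sketch assumes $N + j$ is even, since otherwise the walker cannot be at $j$ after $N$ steps.

```python
import math


def walk_probability(n: int, j: int) -> float:
    """Exact P_j from Eq. (1): chance of being j steps from the origin after n steps."""
    if abs(j) > n or (n + j) % 2 != 0:
        return 0.0  # unreachable site: too far away or wrong parity
    return math.comb(n, (n + j) // 2) / 2**n


def log_walk_probability(n: int, j: int) -> float:
    """ln P_j as a sum of log-factorials, using lgamma(k + 1) = ln k!."""
    if abs(j) > n or (n + j) % 2 != 0:
        raise ValueError("P_j is zero here, so its logarithm is undefined")
    up = (n + j) // 2    # (N + j) / 2
    down = (n - j) // 2  # (N - j) / 2
    return (math.lgamma(n + 1) - n * math.log(2)
            - math.lgamma(up + 1) - math.lgamma(down + 1))


if __name__ == "__main__":
    n, j = 20, 4  # illustrative values only
    print(walk_probability(n, j))
    print(math.exp(log_walk_probability(n, j)))
```

The two printed numbers should agree to within floating-point rounding, which confirms that the logarithmic form is just Eq. (1) rewritten.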

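Now we apply Stirling's approximation, which reads

$$\ln N! \approx N\ln N - N + \frac{1}{2}\ln(2\pi N)$$

for large $N$. This gives

$$\begin{align}
\ln P_j \approx{} & N\ln N - N + \frac{1}{2}\ln(2\pi N) - N\ln 2 - \left[\frac{N+j}{2}\ln\frac{N+j}{2} - \frac{N+j}{2} + \frac{1}{2}\ln\left(2\pi\,\frac{N+j}{2}\right)\right] \tag{2}\\
& - \left[\frac{N-j}{2}\ln\frac{N-j}{2} - \frac{N-j}{2} + \frac{1}{2}\ln\left(2\pi\,\frac{N-j}{2}\right)\right] \tag{3}
\end{align}$$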
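Stirling's approximation can be checked numerically before relying on it. The sketch below is a minimal illustration, with function names chosen here rather than taken from the notes. It compares the three-term formula with the exact value of $\ln N!$ obtained from the log-gamma function.

```python
import math


def stirling_log_factorial(n: float) -> float:
    """Stirling's approximation: ln n! ~ n ln n - n + (1/2) ln(2 pi n)."""
    return n * math.log(n) - n + 0.5 * math.log(2 * math.pi * n)


def exact_log_factorial(n: int) -> float:
    """Exact ln n! via the log-gamma function, since lgamma(n + 1) = ln n!."""
    return math.lgamma(n + 1)


if __name__ == "__main__":
    for n in (5, 50, 500):
        error = exact_log_factorial(n) - stirling_log_factorial(n)
        print(f"N = {n:4d}  exact - Stirling = {error:.6f}")
```

The leftover error is close to $1/(12N)$, the next term in the Stirling series, so the approximation improves steadily as $N$ grows.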

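The second terms in the two square brackets add up to $N$ and cancel the $-N$ coming from $\ln N!$. Regrouping the first term in each of the square brackets together,

$$\begin{align}
\ln P_j \approx{} & \left[N\ln N - \frac{N+j}{2}\ln\frac{N+j}{2} - \frac{N-j}{2}\ln\frac{N-j}{2}\right] \tag{4}\\
& + \frac{1}{2}\ln(2\pi N) - \frac{1}{2}\ln\left(2\pi\,\frac{N+j}{2}\right) - \frac{1}{2}\ln\left(2\pi\,\frac{N-j}{2}\right) - N\ln 2 \tag{5}
\end{align}$$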

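Looking at only the terms in square brackets and rearranging a bit,

$$\begin{aligned}
[\;\;] &= N\ln N - \frac{N}{2}\ln\left(\frac{N+j}{2}\cdot\frac{N-j}{2}\right) - \frac{j}{2}\ln\frac{N+j}{2} + \frac{j}{2}\ln\frac{N-j}{2}\\
&= N\ln N - \frac{N}{2}\ln\left[\frac{N^2}{4}\left(1-\frac{j^2}{N^2}\right)\right] - \frac{j}{2}\ln\left[\frac{N}{2}\left(1+\frac{j}{N}\right)\right] + \frac{j}{2}\ln\left[\frac{N}{2}\left(1-\frac{j}{N}\right)\right]\\
&= N\ln N - \frac{N}{2}\ln\frac{N^2}{4} - \frac{N}{2}\ln\left(1-\frac{j^2}{N^2}\right) - \frac{j}{2}\ln\left(1+\frac{j}{N}\right) + \frac{j}{2}\ln\left(1-\frac{j}{N}\right)
\end{aligned}$$

Now we use the Taylor expansion $\ln(1 \pm x) \approx \pm x$ for $x \ll 1$ and work to "second order in $j/N$". Since $N\ln N - \frac{N}{2}\ln\frac{N^2}{4} = N\ln 2$, this gives

$$\begin{aligned}
[\;\;] &\approx N\ln 2 + \frac{N}{2}\,\frac{j^2}{N^2} - \frac{j}{2}\,\frac{j}{N} - \frac{j}{2}\,\frac{j}{N}\\
&= N\ln 2 - \frac{j^2}{2N}
\end{aligned}$$

Simplifying Eqn. (4) a bit and plugging the above in gives

$$\ln P_j \approx N\ln 2 - \frac{j^2}{2N} - \frac{1}{2}\ln\left[\frac{2\pi}{N}\,\frac{N+j}{2}\,\frac{N-j}{2}\right] - N\ln 2 \tag{6}$$

Now we approximate the logarithmic term in the same manner as before:

$$\begin{aligned}
\ln P_j &\approx -\frac{j^2}{2N} - \frac{1}{2}\ln\left[\frac{2\pi}{N}\,\frac{N^2}{4}\left(1-\frac{j^2}{N^2}\right)\right]\\
&\approx -\frac{j^2}{2N} - \frac{1}{2}\ln\frac{\pi N}{2}
\end{aligned}$$

The factor $1 - j^2/N^2$ contributes only about $j^2/(2N^2)$, which is smaller than the leading $j^2/(2N)$ term by a factor of $N$ and is dropped.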
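The Taylor step for the square-bracket term can be tested numerically. The following sketch is illustrative only, and the names `bracket_exact` and `bracket_taylor` are chosen here. It compares the exact bracket from Eqn. (4) with the second-order result $N\ln 2 - j^2/(2N)$ for a few values of $j$.

```python
import math


def bracket_exact(n: int, j: int) -> float:
    """N ln N - (N+j)/2 ln((N+j)/2) - (N-j)/2 ln((N-j)/2), valid for |j| < N."""
    up = (n + j) / 2
    down = (n - j) / 2
    return n * math.log(n) - up * math.log(up) - down * math.log(down)


def bracket_taylor(n: int, j: int) -> float:
    """Second-order result of the Taylor expansion: N ln 2 - j^2 / (2N)."""
    return n * math.log(2) - j**2 / (2 * n)


if __name__ == "__main__":
    n = 1000  # illustrative value only
    for j in (0, 10, 50, 200):
        diff = bracket_exact(n, j) - bracket_taylor(n, j)
        print(f"j/N = {j / n:.2f}  exact - Taylor = {diff:.3e}")
```

The discrepancy grows roughly like $j^4/(12N^3)$, so the expansion is accurate for $|j| \ll N$ and degrades as $j$ approaches $N$.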


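We exponentiate to get $P_j$ back:

$$P_j \approx \sqrt{\frac{2}{\pi N}}\; e^{-j^2/2N} \tag{7}$$

To get $P(x) = \frac{P_j}{2L}$, we use $j = x/L$ and $L = \sqrt{2Dt/N}$:

$$\begin{align}
P(x) &= \frac{1}{2}\sqrt{\frac{N}{2Dt}}\,\sqrt{\frac{2}{\pi N}}\,\exp\left(-\frac{x^2}{2N}\,\frac{N}{2Dt}\right) \tag{8}\\
&= \frac{1}{\sqrt{4\pi Dt}}\,\exp\left(-\frac{x^2}{4Dt}\right) \tag{9}
\end{align}$$

which is, at last, a Gaussian distribution.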
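To see how close the final result is to the starting point, the sketch below compares the exact binomial $P_j$ with its Gaussian approximation, and checks that dividing by $2L$ reproduces the continuum density $P(x)$. It is a minimal illustration: the function names and the values of $N$, $D$ and $t$ are assumptions made here, not part of the notes.

```python
import math


def exact_pj(n: int, j: int) -> float:
    """Exact binomial P_j from Eq. (1); assumes n + j is even and |j| <= n."""
    return math.comb(n, (n + j) // 2) / 2**n


def gaussian_pj(n: int, j: int) -> float:
    """Gaussian approximation sqrt(2 / (pi N)) * exp(-j^2 / (2N))."""
    return math.sqrt(2 / (math.pi * n)) * math.exp(-j**2 / (2 * n))


def diffusion_density(x: float, d: float, t: float) -> float:
    """Continuum form P(x) = exp(-x^2 / (4 D t)) / sqrt(4 pi D t)."""
    return math.exp(-x**2 / (4 * d * t)) / math.sqrt(4 * math.pi * d * t)


if __name__ == "__main__":
    n, d, t = 1000, 0.5, 2.0         # illustrative values only
    step = math.sqrt(2 * d * t / n)  # L = sqrt(2 D t / N)
    for j in (0, 20, 60):
        x = j * step                 # position corresponding to j steps
        print(j, exact_pj(n, j), gaussian_pj(n, j),
              gaussian_pj(n, j) / (2 * step), diffusion_density(x, d, t))
```

For each $j$, the first two printed probabilities should nearly coincide, and the last two columns should match to rounding error, since they are the same expression written in terms of $j$ and of $x$.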

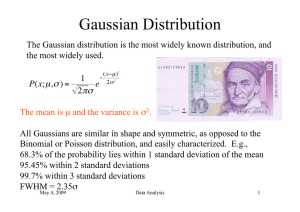

Lesson: the large-$N$ limit of a “fair” binomial distribution is a Gaussian distribution.

© 2006 Jake Hofman.
