ESTIMATION-BASED ADAPTIVE FILTERING AND CONTROL

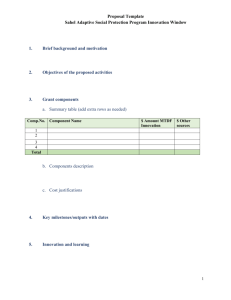

advertisement


A dissertation submitted to the Department of Electrical Engineering and the Committee on Graduate Studies of Stanford University in partial fulfillment of the requirements for the degree of Doctor of Philosophy

Bijan Sayyar-Rodsari

July 1999

© Copyright by Bijan Sayyar-Rodsari 1999

All Rights Reserved


I certify that I have read this dissertation and that in my opinion it is fully adequate, in scope and quality, as a dissertation for the degree of Doctor of Philosophy.

Professor Jonathan How
(Principal Adviser)

I certify that I have read this dissertation and that in my opinion it is fully adequate, in scope and quality, as a dissertation for the degree of Doctor of Philosophy.

Professor Thomas Kailath

I certify that I have read this dissertation and that in my opinion it is fully adequate, in scope and quality, as a dissertation for the degree of Doctor of Philosophy.

Dr. Babak Hassibi

I certify that I have read this dissertation and that in my opinion it is fully adequate, in scope and quality, as a dissertation for the degree of Doctor of Philosophy.

Professor Carlo Tomasi

Approved for the University Committee on Graduate Studies:


Abstract

Adaptive systems have been used in a wide range of applications for almost four decades. Examples include adaptive equalization, adaptive noise-cancellation, adaptive vibration isolation, adaptive system identification, and adaptive beam-forming. It is generally known that the design of an adaptive filter (controller) is a difficult nonlinear problem for which good systematic synthesis procedures are still lacking. Most existing design methods (e.g. FxLMS, Normalized-FxLMS, and FuLMS) are ad hoc in nature and do not provide a guaranteed performance level. Systematic analysis of the existing adaptive algorithms is also found to be difficult. In most cases, addressing even the fundamental question of stability requires simplifying assumptions (such as slow adaptation, or the negligible contribution of the nonlinear/time-varying components of signals) which at the very least limit the scope of the analysis to the particular problem at hand.

This thesis presents a new estimation-based synthesis and analysis procedure for adaptive “Filtered” LMS problems. This new approach formulates the adaptive filtering (control) problem as an H∞ estimation problem, and updates the adaptive weight vector according to the state estimates provided by an H∞ estimator. This estimator is proved to be always feasible. Furthermore, the special structure of the problem is used to reduce the usual Riccati recursion for state estimate update to a simpler Lyapunov recursion. The new adaptive algorithm (referred to as the estimation-based adaptive filtering (EBAF) algorithm) has provable performance, follows a simple update rule, and unlike previous methods readily extends to multi-channel systems and problems with feedback contamination. A clear connection between the limiting behavior of the EBAF algorithm and the classical FxLMS (Normalized-FxLMS) algorithm is also established in this thesis.

Applications of the proposed adaptive design method are demonstrated in an Active Noise Cancellation (ANC) context. First, experimental results are presented for narrow-band and broad-band noise cancellation in a one-dimensional acoustic duct. In comparison to other conventional adaptive noise-cancellation methods (FxLMS in the FIR case and FuLMS in the IIR case), the proposed method shows much faster convergence and improved steady-state performance. Moreover, the proposed method is shown to be robust to feedback contamination while conventional methods can become unstable. As a second application, the proposed adaptive method was used for vibration isolation in a 3-input/3-output Vibration Isolation Platform. Simulation results demonstrate improved performance over a multi-channel implementation of the FxLMS algorithm. These results indicate that the approach works well in practice. Furthermore, the theoretical results in this thesis are quite general and can be applied to many other applications including adaptive equalization and adaptive identification.


Acknowledgements

This thesis has greatly benefited from the efforts and support of many people whom I would like to thank. First, I would like to thank my principal adviser, Professor Jonathan How. This research would not have been possible without Professor How’s insights, enthusiasm and constant support throughout the project. I appreciate his attention to detail and the clarity that he brought to our presentations and writings. I would also like to acknowledge the help and support of Dr. Alain Carrier from Lockheed Martin’s Advanced Technology Center. His careful reading of all the manuscripts and reports, his provocative questions, and his dedication to meaningful research have greatly influenced this work. I would like to gratefully acknowledge members of my defense and reading committee, Professor Thomas Kailath, Professor Carlo Tomasi, and Dr. Babak Hassibi. It was from a class instructed by Professor Kailath and Dr. Hassibi that the main concept of this thesis originated, and it was their research that this thesis is based on. It is impossible to exaggerate the importance of Dr. Hassibi’s contributions to this thesis. He has been a great friend and advisor throughout this work for which I am truly thankful.

My thanks also go to Professor Robert Cannon and Professor Steve Rock for giving me the opportunity to interact with wonderful friends in the Aerospace Robotics Laboratory. The help from ARL graduates, Gordon Hunt, Steve Ims, Stef Sonck, Howard Wang, and Kurt Zimmerman was crucial in the early stages of the research at Lockheed. I have also benefited from interesting discussions with fellow ARL students Andreas Huster, Kortney Leabourne, Andrew Robertson, Heidi Schubert, and Bruce Woodley, on both technical and non-technical issues. I am forever thankful for their invaluable friendship and support. I also acknowledge the camaraderie of more recent ARL members, Tobe Corazzini, Steve Fleischer, Eric Frew, Gokhan Inalhan, Hank Jones, Bob Kindel, Ed LeMaster, Mel Ni, Eric Prigge, and Luis Rodrigues.

I discussed all aspects of this thesis in great detail with Arash Hassibi. He helped me more than I can thank him for. Lin Xiao and Hong S. Bae set up the hardware for noise cancellation and helped me in all experiments. I appreciate all their assistance. Thomas Pare, Haitham Hindi, and Miguel Lobo provided helpful comments about the research. I also acknowledge the assistance from fellow ISL students, Alper Erdogan, Maryam Fazel, and Ardavan Maleki. I would also like to name two old friends, Khalil Ahmadpour and Mehdi Asheghi, whose friendship I gratefully value.

I owe an immeasurable amount of gratitude to my parents, Hossein and Salehe, my sister, Mojgan, and my brother, Bahman, for their support throughout the numerous ups and downs that I have experienced. Finally, my sincere thanks go to my wife, Samaneh, for her gracious patience and strength. I am sure they agree with me in dedicating this thesis to Khalil.


Contents

Abstract
Acknowledgements
List of Figures

1 Introduction
1.1 Motivation
1.2 Background
1.3 An Overview of Adaptive Filtering (Control) Algorithms
1.4 Contributions
1.5 Thesis Outline

2 Estimation-Based adaptive FIR Filter Design
2.1 Background
2.2 EBAF Algorithm - Main Concept
2.3 Problem Formulation
2.3.1 H2 Optimal Estimation
2.3.2 H∞ Optimal Estimation
2.4 H∞-Optimal Solution
2.4.1 γ-Suboptimal Finite Horizon Filtering Solution
2.4.2 γ-Suboptimal Finite Horizon Prediction Solution
2.4.3 The Optimal Value of γ
2.4.3.1 Filtering Case
2.4.3.2 Prediction Case
2.4.4 Simplified Solution Due to γ = 1
2.4.4.1 Filtering Case:
2.4.4.2 Prediction Case:
2.5 Important Remarks
2.6 Implementation Scheme for EBAF Algorithm
2.7 Error Analysis
2.7.1 Effect of Initial Condition
2.7.2 Effect of Practical Limitation in Setting y(k) to ŝ(k|k) (ŝ(k))
2.8 Relationship to the Normalized-FxLMS/FxLMS Algorithms
2.8.1 Prediction Solution and its Connection to the FxLMS Algorithm
2.8.2 Filtering Solution and its Connection to the Normalized-FxLMS Algorithm
2.9 Experimental Data & Simulation Results
2.10 Summary

3 Estimation-Based adaptive IIR Filter Design
3.1 Background
3.2 Problem Formulation
3.2.1 Estimation Problem
3.3 Approximate Solution
3.3.1 γ-Suboptimal Finite Horizon Filtering Solution to the Linearized Problem
3.3.2 γ-Suboptimal Finite Horizon Prediction Solution to the Linearized Problem
3.3.3 Important Remarks
3.4 Implementation Scheme for the EBAF Algorithm in IIR Case
3.5 Error Analysis
3.6 Simulation Results
3.7 Summary

4 Multi-Channel Estimation-Based Adaptive Filtering
4.1 Background
4.1.1 Multi-Channel FxLMS Algorithm
4.2 Estimation-Based Adaptive Algorithm for Multi Channel Case
4.2.1 H∞-Optimal Solution
4.3 Simulation Results
4.3.1 Active Vibration Isolation
4.3.2 Active Noise Cancellation
4.4 Summary

5 Adaptive Filtering via Linear Matrix Inequalities
5.1 Background
5.2 LMI Formulation
5.2.1 Including H2 Constraints
5.3 Adaptation Algorithm
5.4 Simulation Results
5.5 Summary

6 Conclusion
6.1 Summary of the Results and Conclusions
6.2 Future Work

A Algebraic Proof of Feasibility
A.1 Feasibility of γf = 1

B Feedback Contamination Problem

C System Identification for Vibration Isolation Platform
C.1 Introduction
C.2 Identified Model
C.2.1 Data Collection Process
C.2.2 Consistency of the Measurements
C.2.3 System Identification
C.2.4 Control design model analysis
C.3 FORSE algorithm

Bibliography

List of Figures

1.1 General block diagram for an FIR Filter
1.2 General block diagram for an IIR Filter
2.1 General block diagram for an Active Noise Cancellation (ANC) problem
2.2 A standard implementation of FxLMS algorithm
2.3 Pictorial representation of the estimation interpretation of the adaptive control problem: Primary path is replaced by its approximate model
2.4 Block diagram for the approximate model of the primary path
2.5 Schematic diagram of one-dimensional air duct
2.6 Transfer functions plot from Speakers #1 & #2 to Microphone #1
2.7 Transfer functions plot from Speakers #1 & #2 to Microphone #2
2.8 Validation of simulation results against experimental data for the noise cancellation problem with a single-tone primary disturbance at 150 Hz. The primary disturbance is known to the adaptive algorithm. The controller is turned on at t ≈ 3 seconds.
2.9 Experimental data for the EBAF algorithm of length 4, when a noisy measurement of the primary disturbance (a single-tone at 150 Hz) is available to the adaptive algorithm (SNR=3). The controller is turned on at t ≈ 5 seconds.
2.10 Experimental data for the EBAF algorithm of length 8, when a noisy measurement of the primary disturbance (a multi-tone at 150 and 180 Hz) is available to the adaptive algorithm (SNR=4.5). The controller is turned on at t ≈ 6 seconds.
2.11 Experimental data for the EBAF algorithm of length 16, when a noisy measurement of the primary disturbance (a band limited white noise) is available to the adaptive algorithm (SNR=4.5). The controller is turned on at t ≈ 5 seconds.
2.12 Simulation results for the performance comparison of the EBAF and (N)FxLMS algorithms. For 0 ≤ t ≤ 5 seconds, the controller is off. For 5 < t ≤ 20 seconds both adaptive algorithms have full access to the primary disturbance (a single-tone at 150 Hz). For t ≥ 20 seconds the measurement of Microphone #1 is used as the reference signal (hence feedback contamination problem). The length of the FIR filter is 24.
2.13 Simulation results for the performance comparison of the EBAF and (N)FxLMS algorithms. For 0 ≤ t ≤ 5 seconds, the controller is off. For 5 < t ≤ 40 seconds both adaptive algorithms have full access to the primary disturbance (a band limited white noise). For t ≥ 40 seconds the measurement of Microphone #1 is used as the reference signal (hence feedback contamination problem). The length of the FIR filter is 32.
2.14 Closed-loop transfer function based on the steady state performance of the EBAF and (N)FxLMS algorithms in the noise cancellation problem of Figure 2.13.
3.1 General block diagram for the adaptive filtering problem of interest (with Feedback Contamination)
3.2 Basic Block Diagram for the Feedback Neutralization Scheme
3.3 Basic Block Diagram for the Classical Adaptive IIR Filter Design
3.4 Estimation Interpretation of the IIR Adaptive Filter Design
3.5 Approximate Model For the Unknown Primary Path
3.6 Performance Comparison for EBAF and FuLMS Adaptive IIR Filters for Single-Tone Noise Cancellation. The controller is switched on at t = 1 second. For 1 ≤ t ≤ 6 seconds adaptive algorithm has full access to the primary disturbance. For t ≥ 6 the output of Microphone #1 is used as the reference signal (hence feedback contamination problem).
3.7 Performance Comparison for EBAF and FuLMS Adaptive IIR Filters for Multi-Tone Noise Cancellation. The controller is switched on at t = 1 second. For 1 ≤ t ≤ 6 seconds adaptive algorithm has full access to the primary disturbance. For t ≥ 6 the output of Microphone #1 is used as the reference signal (hence feedback contamination problem).
4.1 General block diagram for a multi-channel Active Noise Cancellation (ANC) problem
4.2 Pictorial representation of the estimation interpretation of the adaptive control problem: Primary path is replaced by its approximate model
4.3 Approximate Model for Primary Path
4.4 Vibration Isolation Platform (VIP)
4.5 A detailed drawing of the main components in the Vibration Isolation Platform (VIP). Of particular importance are: (a) the platform supporting the middle mass (labeled as component #5), (b) the middle mass that houses all six actuators (of which only two, one control actuator and one disturbance actuator, are shown) (labeled as component #11), and (c) the suspension springs to counter the gravity (labeled as component #12). Note that the actuation point for the control actuator (located on the left of the middle mass) is colocated with the load cell (marked as LC1). The disturbance actuator (located on the right of the middle mass) actuates against the inertial frame.
4.6 SVD of the MIMO transfer function
4.7 Performance of a multi-channel implementation of EBAF algorithm when disturbance actuators are driven by out of phase sinusoids at 4 Hz. The reference signal available to the adaptive algorithm is contaminated with band limited white noise (SNR=3). The control signal is applied for t ≥ 30 seconds.
4.8 Performance of a multi-channel implementation of FxLMS algorithm when simulation scenario is identical to that in Figure 4.7.
4.9 Performance of a multi-channel implementation of EBAF algorithm when disturbance actuators are driven by out of phase multi-tone sinusoids at 4 and 15 Hz. The reference signal available to the adaptive algorithm is contaminated with band limited white noise (SNR=4.5). The control signal is applied for t ≥ 30 seconds.
4.10 Performance of a multi-channel implementation of FxLMS algorithm when simulation scenario is identical to that in Figure 4.9.
4.11 Performance of a Multi-Channel implementation of the EBAF for vibration isolation when the reference signals are load cell outputs (i.e. feedback contamination exists). The control signal is applied for t ≥ 30 seconds.
4.12 Performance of the Multi-Channel noise cancellation in acoustic duct for a multi-tone primary disturbance at 150 and 200 Hz. The control signal is applied for t ≥ 2 seconds.
4.13 Performance of the Multi-Channel noise cancellation in acoustic duct when the primary disturbance is a band limited white noise. The control signal is applied for t ≥ 2 seconds.
4.14 Closed-loop vs. open-loop transfer functions for the steady state performance of the EBAF algorithm for the simulation scenario shown in Figure 4.13.
5.1 General block diagram for an Active Noise Cancellation (ANC) problem
5.2 Cancellation Error at Microphone #1 for a Single-Tone Primary Disturbance
5.3 Typical Elements of Adaptive Filter Weight Vector for Noise Cancellation Problem in Fig. 5.2
5.4 Cancellation Error at Microphone #1 for a Multi-Tone Primary Disturbance
5.5 Typical Elements of Adaptive Filter Weight Vector for Noise Cancellation Problem in Fig. 5.4
B.1 Block diagram of the approximate model for the primary path in the presence of the feedback path
C.1 Magnitude of the scaling factor relating load cell’s reading of the effect of control actuators to that of the scoring sensor
C.2 Magnitude of the scaling factor relating load cell’s reading of the effect of disturbance actuators to that of the scoring sensor
C.3 Magnitude of the scaling factor relating load cell’s reading of the effect of control actuators to that of the scoring sensor after diagonalization
C.4 Magnitude of the scaling factor relating load cell’s reading of the effect of disturbance actuators to that of the scoring sensor after diagonalization
C.5 Comparison of SVD plots for the transfer function to the scaled/double-integrated load cell data
C.6 Comparison of SVD plots for the transfer function to the actual load cell data
C.7 Comparison of SVD plots for the transfer function to the scoring sensors
C.8 Comparison of SVD plots for the transfer function to the position sensors colocated with the control actuators
C.9 Comparison of SVD plots for the transfer function to the position sensors colocated with the disturbance actuators
C.10 The identified model for the system beyond the frequency range for which measurements are available
C.11 The final model for the system beyond the frequency range for which measurements are available
C.12 The comparison of the closed loop and open loop singular value plots when the controller is used to close the loop on the identified model
C.13 The comparison of the closed loop and open loop singular value plots when the controller is used to close the loop on the real measured data

Chapter 1

Introduction

This dissertation presents a new estimation-based procedure for the systematic synthesis and analysis of adaptive filters (controllers) in “Filtered” LMS problems. This new approach uses an estimation interpretation of the adaptive filtering (control) problem to formulate an equivalent estimation problem. The adaptation criterion for the adaptive weight vector is extracted from the H∞-solution to this estimation problem. The new algorithm, referred to as Estimation-Based Adaptive Filtering (EBAF), applies to both Finite Impulse Response (FIR) and Infinite Impulse Response (IIR) adaptive filters.

1.1 Motivation

The Least-Mean Squares (LMS) adaptive algorithm [51] has been the centerpiece of a wide variety of adaptive filtering techniques for almost four decades. The straightforward derivation, and the simplicity of its implementation (especially at the time of limited computational power) encouraged experiments with the algorithm in a diverse range of applications (e.g. see [51,33]). In some applications, however, the simple implementation of the LMS algorithm was found to be inadequate. Subsequent attempts to overcome its shortcomings have produced a large number of innovative solutions that have been successful in practice. Commonly used algorithms such as normalized LMS, correlation LMS [47], leaky LMS [21], variable-step-size LMS [25], and Filtered-X LMS [35] are the outcome of such efforts. These algorithms use the instantaneous squared error to estimate the mean-square error, and often assume slow adaptation to allow for the necessary linear operations in their derivation (see Chapters 2 and 3 in [33] for instance). As Reference [2] points out:

“Many of the algorithms and approaches used are of an ad hoc nature; the tools are gathered from a wide range of fields; and good systematic approaches are still lacking.”

Introducing a systematic procedure for the synthesis of adaptive filters is one of the main goals of this thesis.
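To make the LMS family discussed above concrete, the following is a minimal sketch of the textbook LMS and normalized LMS updates, in which the instantaneous squared error stands in for the mean-square error. It is an illustration only and is not taken from this thesis: the function name `lms_identify`, the three-tap system `true_w`, the step sizes, and the noise-free identification setup are all assumptions chosen for the example.

```python
import random


def lms_identify(x, d, num_taps, mu, normalized=False, eps=1e-8):
    """Adapt FIR weights w so that w . u(k) tracks d(k).

    x: reference samples, d: desired samples (same length).
    Returns (final weights, list of instantaneous errors).
    """
    w = [0.0] * num_taps
    u = [0.0] * num_taps              # tapped delay line, newest sample first
    errors = []
    for xk, dk in zip(x, d):
        u = [xk] + u[:-1]
        y = sum(wi * ui for wi, ui in zip(w, u))
        e = dk - y                    # instantaneous error
        # Normalized LMS divides the step by the regressor energy.
        step = mu / (eps + sum(ui * ui for ui in u)) if normalized else mu
        # Stochastic-gradient step on the instantaneous squared error.
        w = [wi + step * e * ui for wi, ui in zip(w, u)]
        errors.append(e)
    return w, errors


# Hypothetical noise-free identification problem (assumed for illustration).
random.seed(0)
true_w = [0.5, -0.3, 0.1]
x = [random.gauss(0.0, 1.0) for _ in range(5000)]
d = [sum(true_w[i] * x[k - i] for i in range(len(true_w)) if k - i >= 0)
     for k in range(len(x))]

w_lms, e_lms = lms_identify(x, d, num_taps=3, mu=0.01)
w_nlms, e_nlms = lms_identify(x, d, num_taps=3, mu=0.5, normalized=True)
print([round(v, 4) for v in w_lms])
print([round(v, 4) for v in w_nlms])
```

In this sketch the step size `mu` is the only tuning knob, and nothing in the update itself certifies a performance level, which is the kind of gap the systematic synthesis procedure pursued in this thesis is meant to address.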

Parallel to the efforts on the practical application of the LMS-based adaptive schemes, there has been a concerted effort to analyze these algorithms. Of pioneering importance are the results in Refs. [50] and [23]. Reference [50] considers the adaptation with LMS on stationary stochastic processes, and finds the optimal solution to which the expected value of the weight vector converges. For sinusoidal inputs, however, the discussion in [50] does not apply. In [23] it is shown that for sinusoidal inputs, when the time-varying component of the adaptive filter output is small compared to its time-invariant component (see [23], page 486), the adaptive LMS filter can be approximated by a linear time-invariant transfer function. Reference [13] extends the approach in [23] to derive an equivalent transfer function for the Filtered-X LMS adaptive algorithm (provided the conditions required in [23] still apply). The equivalent transfer function is then used to analytically derive an expression for the optimum convergence coefficients. A frequency domain model of the so-called filtered LMS algorithm (i.e. an algorithm in which the input or the output of the adaptive filter or the feedback error signal is linearly filtered prior to use in the adaptive algorithm) is discussed in [17]. The frequency domain model in [17] decouples the inputs into disjoint frequency bins and places a single frequency adaptive noise canceler on each bin. The analysis in that work utilizes the frequency domain LMS algorithm [11] and assumes a time invariant linear behavior for the filter. Other important aspects of the adaptive filters have also been extensively studied. The effect of the modeling error on the convergence and performance properties of the LMS-based adaptive algorithms (e.g. [17,7]), and the tracking behavior of the LMS adaptive algorithm when the adaptive filter is tuned to follow a linear chirp signal buried in white noise [5,6], are examples of these studies.∗ In summary, existing analysis techniques are often suitable for analyzing only one particular aspect of the behavior of an adaptive filter (e.g. its steady-state behavior). Furthermore, the validity of the analysis relies on certain assumptions (e.g. slow convergence, and/or the negligible contribution of the nonlinear/time-varying component of the adaptive filter output) that can be quite restrictive. Providing a solid framework for the systematic analysis of adaptive filters is another main goal of this thesis.
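As a point of reference for the filtered LMS algorithms analyzed in the works cited above, here is a minimal sketch of the standard Filtered-X LMS update for a feedforward cancellation loop. It is not the implementation used in this thesis. The helper names `fir` and `fxlms`, the FIR primary and secondary paths, the perfect secondary-path model, and the step size are all assumptions made for illustration, and the step size is deliberately small in line with the slow-adaptation assumption noted above.

```python
import random


def fir(coeffs, history):
    """Output of an FIR filter given its input history (newest sample first)."""
    return sum(c * h for c, h in zip(coeffs, history))


def fxlms(x, primary, secondary, secondary_model, num_taps, mu):
    """Filtered-X LMS for the error e(k) = d(k) - (secondary * y)(k).

    d = primary * x is the disturbance at the error sensor and y = w . u is the
    adaptive filter output. The update uses the reference filtered through the
    secondary-path model instead of the raw reference.
    """
    w = [0.0] * num_taps
    x_hist = [0.0] * max(num_taps, len(primary), len(secondary_model))
    y_hist = [0.0] * len(secondary)
    fx_hist = [0.0] * num_taps                  # filtered-reference delay line
    errors = []
    for xk in x:
        x_hist = [xk] + x_hist[:-1]
        y = fir(w, x_hist)                      # adaptive filter output
        y_hist = [y] + y_hist[:-1]
        d = fir(primary, x_hist)                # disturbance at the error sensor
        e = d - fir(secondary, y_hist)          # residual after cancellation
        fx = fir(secondary_model, x_hist)       # filtered reference sample
        fx_hist = [fx] + fx_hist[:-1]
        w = [wi + mu * e * fi for wi, fi in zip(w, fx_hist)]
        errors.append(e)
    return w, errors


# Hypothetical paths (assumed): primary equals secondary convolved with w_ideal,
# so a three-tap filter can cancel the disturbance exactly.
random.seed(1)
secondary = [1.0, 0.5]
w_ideal = [0.4, -0.2, 0.1]
primary = [0.4, 0.0, 0.0, 0.05]
x = [random.gauss(0.0, 1.0) for _ in range(20000)]

w_fx, err_fx = fxlms(x, primary, secondary, secondary, num_taps=3, mu=0.005)
print([round(v, 4) for v in w_fx])
```

The sketch passes the true secondary path as its own model. With an imperfect model or a larger step size the same loop can behave quite differently, which is one reason the analyses surveyed above rely on restrictive assumptions.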

The reason for the difficulty experienced in both synthesis and analysis of adaptive algorithms is best explained in Reference [37]:

“It is now generally realized that adaptive systems are special classes of nonlinear systems . . . general methods for the analysis and synthesis of nonlinear systems do not exist since conditions for their stability can be established only on a system by system basis.”

This thesis introduces a new framework for the synthesis and analysis of adaptive filters (controllers) by providing an estimation interpretation of the above mentioned “nonlinear” adaptive filtering (control) problem. The estimation interpretation replaces the original adaptive filtering (control) synthesis with an equivalent estimation problem, the solution of which is used to update the weight vector in the adaptive filter (and hence the name estimation-based adaptive filtering). This approach is applicable (due to its systematic nature) to both FIR and IIR adaptive filters (controllers). In the FIR case the equivalent estimation problem is linear, and hence exact solutions are available. Stability, performance bounds, and transient behavior of adaptive FIR filters are thus precisely addressed in this framework. In the IIR case, however, only an approximate solution to the equivalent estimation problem is available, and hence the proposed estimation-based framework serves as a reasonable heuristic for the systematic design of adaptive IIR filters. This approximate solution, however, is based on realistic assumptions, and the adaptive algorithm maintains its systematic structure. Furthermore, the treatment of feedback contamination (see Chapter 3 for a precise definition) is virtually identical to that of adaptive IIR filters. The proposed estimation-based approach is particularly appealing if one considers the difficulty with the existing design techniques for adaptive IIR filters, and the complexity of available solutions to feedback contamination (e.g. see [33]).

∗ The survey here is intended to provide a flavor of the type of the problems that have captured the attention of researchers in the field. The sheer volume of the literature makes subjective selection of the references unavoidable.

1.2 Background

The development of the new estimation-based framework is based on recent results in robust estimation. Following the pioneering work in [52], the H∞ approach to robust control theory produced solutions [12,24] that were designed to meet some performance criterion in the face of the limited knowledge of the exogenous disturbances and imperfect system models. Further work in robust control and estimation (see [32,46] and the references therein) produced straightforward solutions that allowed in-depth studies of the properties of the robust controllers/estimators. The main idea in H∞ estimation is to design an estimator that bounds (in the optimum case, minimizes) the maximum energy gain from the disturbances to the estimation errors. Such a solution guarantees that for disturbances with bounded energy, the energy of the estimation error will be bounded as well. In the case of an optimal solution, an H∞-optimal estimator will guarantee that the energy of the estimation error for the worst case disturbance is indeed minimized [28].
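The energy-gain criterion described above can be written compactly as follows. The notation here is assumed for illustration and is not taken from this chapter: $w$ collects the unknown disturbances, $e$ is the resulting estimation error, $\|\cdot\|_2$ denotes signal energy, and $T$ is the map from disturbances to estimation errors induced by a given estimator.

```latex
\[
\|T\|_\infty^2 \;=\; \sup_{w \neq 0,\ \|w\|_2 < \infty} \frac{\|e\|_2^2}{\|w\|_2^2},
\qquad
\text{$\gamma$-suboptimal estimator: } \|T\|_\infty < \gamma,
\qquad
\gamma_{\mathrm{opt}} \;=\; \inf_{\text{estimators}} \|T\|_\infty .
\]
```

Under this reading, a $\gamma$-suboptimal estimator guarantees $\|e\|_2^2 < \gamma^2 \|w\|_2^2$ for every nonzero bounded-energy disturbance, and the optimal estimator achieves the smallest such $\gamma$.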

Of crucial importance for the work in this thesis, is the result in [26] where the H∞ optimality of the LMS algorithm was established. Note that despite a long history

of successful applications, prior to the work in [26], the LMS algorithm was regarded

as an approximate recursive solution to the least-squares minimization problem. The

work in [26] showed that instead of being an approximate solution to an H2 minimization, the LMS algorithm is the exact solution to a minmax estimation problem. More

specifically, Ref. [26] proved that the LMS adaptive filter is the central a priori H∞-optimal filter. This result established a fundamental connection between an adaptive

control algorithm (LMS algorithm in this case), and a robust estimation problem.

Inspired by the analysis in [26], this thesis introduces an estimation interpretation of

a far more general adaptive filtering problem, and develops a systematic procedure for

the synthesis of adaptive filters based on this interpretation. The class of problems

addressed in this thesis, commonly known as “Filtered” LMS [17], encompasses a wide

range of adaptive filtering/control applications [51,33], and has been the subject of

extensive research over the past four decades. Nevertheless, the viewpoint provided

in this thesis not only provides a systematic alternative to some widely used adaptive

filtering (control) algorithms (such as FxLMS and FuLMS) with superior transient

and steady-state behavior, but it also presents a new framework for their analysis.

More specifically, this thesis proves that the fundamental connection between adaptive filtering (control) algorithms and robust estimation extends to the more general

setting of adaptive filtering (control) problems, and shows that the convergence, stability, and performance of these classical adaptive algorithms can be systematically

analyzed as robust estimation questions.

The systematic nature of the proposed estimation-based approach enables an alternative formulation for the adaptive filtering (control) problem using Linear Matrix

Inequalities (LMIs), the ramifications of which will be discussed in Chapter 5. Several

researchers (see [18] and references therein) in the past few years have shown that

elementary manipulations of linear matrix inequalities can be used to derive less restrictive alternatives to the now classical state-space Riccati-based solution to the H∞

control problem [12]. Even though the computational complexity of the LMI-based

solution remains higher than that of solving the Riccati equation, there are three main

reasons that justify such a formulation [19]: (a) a variety of design specifications and

constraints can be expressed as LMIs, (b) problems formulated as LMIs can be solved

exactly by efficient convex optimization techniques, and (c) for the cases that lack

analytical solutions such as mixed H2 /H∞ design objectives (see [4], [32] and [45] and

references therein), the LMI formulation of the problem remains tractable (i.e. LMI solvers are viable alternatives to analytical solutions in such cases). As will be seen

in Chapter 5, the LMI framework provides the machinery required for the synthesis

of a robust adaptive filter in the presence of modeling uncertainty.

1.3 An Overview of Adaptive Filtering (Control) Algorithms

To put this thesis in perspective, this section provides a brief overview of the vast

literature on adaptive filtering (control). Reference [36] recognizes 1957 as the year

for the formal introduction of the term “adaptive system” into the control literature.

By then, the interest in filtering and control theory had shifted towards increasingly

more complex systems with poorly characterized (possibly time varying) models for

system dynamics and disturbances, and the concept of “adaptation” (borrowed from

living systems) seemed to carry the potential for solving the increasingly more complex control problems. The exact definition of “adaptation” and its distinction from

“feedback”, however, is the subject of long standing discussions (e.g. see [2,36,29]).

Qualitatively speaking, an adaptive system is a system that can modify its behavior

in response to changes in the dynamics of the system or disturbances through some

recursive algorithm. As a direct consequence of this recursive algorithm (in which

the parameters of the adaptive system are adjusted using input/output data), an

adaptive system is a “nonlinear” device.

The development of adaptive algorithms has been pursued from a variety of viewpoints.

Different classifications of adaptive algorithms (such as direct versus indirect

adaptive control, model reference versus self-tuning adaptation) in the literature reflect this diversity [2,51,29]. For the purpose of this thesis, two distinct approaches for

deriving recursive adaptive algorithms can be identified: (a) stochastic gradient approaches that include LMS and LMS-based adaptive algorithms, and (b) least-squares

estimation approaches that include the adaptive recursive least-squares (RLS) algorithm.

The central idea in the former approach is to define an appropriate cost function

that captures the success of the adaptation process, and then change the adaptive

filter parameters to reduce the cost function according to the method of steepest descent. This requires the use of a gradient vector (hence the name), which in practice

is approximated using instantaneous data. Chapter 2 provides a detailed description

of this approach for the problem of interest in this thesis. The latter approach to

the design of adaptive filters is based on the method of least squares. This approach

closely corresponds to Kalman filtering. Ref. [44] provides a unifying state-space approach to adaptive RLS filtering. The main focus in this thesis, however, is on the

LMS-based adaptive algorithms.
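The stochastic gradient idea described above can be illustrated with a minimal Python sketch of the LMS update applied to a system identification task. The plant coefficients `true_w`, the step size `mu`, the input statistics, and the function name `lms_identify` are hypothetical choices made for this illustration only; they are not taken from the thesis.

```python
import random

def lms_identify(x, d, num_taps, mu):
    """Adapt an FIR weight vector w so that w^T h(k) tracks d(k)."""
    w = [0.0] * num_taps
    errors = []
    for k in range(len(x)):
        # Regressor h(k) = [x(k), x(k-1), ...], taken as zero before k = 0.
        h = [x[k - i] if k - i >= 0 else 0.0 for i in range(num_taps)]
        y = sum(wi * hi for wi, hi in zip(w, h))
        e = d[k] - y
        # Steepest descent with the instantaneous gradient of e^2, i.e. -2 h e.
        w = [wi + mu * hi * e for wi, hi in zip(w, h)]
        errors.append(e)
    return w, errors

random.seed(0)
true_w = [0.5, -0.3, 0.2]  # hypothetical unknown FIR plant
x = [random.uniform(-1.0, 1.0) for _ in range(2000)]
d = [sum(true_w[i] * (x[k - i] if k - i >= 0 else 0.0) for i in range(3))
     for k in range(len(x))]
w_hat, errors = lms_identify(x, d, num_taps=3, mu=0.1)
```

In this noise-free setting with a plant that the filter can represent exactly, the weight estimate `w_hat` approaches `true_w`; with measurement noise or an under-modeled plant only approximate agreement should be expected.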

Since adaptive algorithms can successfully operate in a poorly known environment,

they have been used in a diverse range of applications that include communication

(e.g. [34,41]), process control (e.g. [2]), seismology (e.g. [42]), and biomedical engineering

(e.g. [51]). Despite the diversity of the applications, different implementations of

adaptive filtering (control) share one basic common feature [29]: “an input vector and

a desired response are used to compute an estimation error, which is in turn used to

control the values of a set of adjustable filter coefficients.” Reference [29] distinguishes

four main classes of adaptive filtering applications based on the way the desired

signal is defined in the formulation of the problem: (a) identification: in this class of

applications an adaptive filter is used to provide a linear model for an unknown plant.

The plant and the adaptive filter are driven by the same input, and the output of the

plant is the desired response that the adaptive filter tries to match. (b) inverse modeling:

here the adaptive filter is placed in series with an unknown (perhaps noisy) plant, and

the desired signal is simply a delayed version of the plant input. Ideally, the adaptive

filter converges to the inverse of the unknown plant. Adaptive equalization (e.g. [40])

is an important application in this class. (c) prediction: the desired signal in this case

is the current value of a random signal, while past values of the random signal provide

the input to the adaptive filter. Signal detection is an important application in this

class. (d) interference canceling: here the adaptive filter uses a reference signal (provided

as input to the adaptive filter) to cancel unknown interference contained in a primary

signal. Adaptive noise cancellation, echo cancellation, and adaptive beam-forming

are applications that fall in this last class. The estimation-based adaptive filtering

algorithm in this thesis is presented in the context of adaptive noise cancellation, and

therefore a detailed discussion of the fourth class of adaptive filtering problems is

provided in Chapter 2.

There are several main structures for the implementation of adaptive filters (controllers). The structure of the adaptive filter is known to affect its performance,

computational complexity, and convergence. In this thesis, the two most commonly

used structures for adaptive filters (controllers) are considered. The finite impulse

response (FIR) transversal filter (see Fig. 1.1) is the structure on which the main

presentation of the estimation-based adaptive filtering algorithm is based. The transversal filter consists of three basic elements: (a) unit-delay element,

(b) multiplier, and (c) adder, and contains feedforward paths only. The number

of unit-delays specifies the length of the adaptive FIR filter. Multipliers weight the

delayed versions of some reference signal, which are then added in the adder(s). The

impulse response of this filter is of finite length (hence the name), and its transfer function contains

only zeros (all poles are at the origin in the z-plane). Therefore, there is no question

of stability for the open-loop behavior of the FIR filter. The infinite-duration impulse

response (IIR) structure is shown in Figure 1.2. The feature that distinguishes the

IIR filter from an FIR filter is the inclusion of the feedback path in the structure of

the adaptive filter.

As mentioned earlier, for an FIR filter all poles are at the origin, and a good

approximation of the behavior of a pole, in general, can only be achieved if the length

of the FIR filter is sufficiently long. An IIR filter, ideally at least, can provide a

perfect match for a pole with only a limited number of parameters. This means

that for a desired dynamic behavior (such as resonance frequency, damping, or cutoff

frequency), the number of parameters in an adaptive IIR filter can be far fewer than

that in its FIR counterpart. The computational complexity per sample for adaptive

IIR filter design can therefore be significantly lower than that in FIR filter design.
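The trade-off between the two structures can be made concrete with a small Python sketch that compares a one-pole recursive filter with truncated FIR approximations of it. The pole location `a = 0.95`, the tap counts, and the function names are assumptions chosen for illustration only.

```python
def fir_filter(weights, x):
    """Transversal filter: u(k) = sum_i w_i x(k - i), with x(k) = 0 for k < 0."""
    return [sum(w * x[k - i] for i, w in enumerate(weights) if k - i >= 0)
            for k in range(len(x))]

def one_pole_iir(a, x):
    """Recursive filter with a single pole at z = a: u(k) = a u(k-1) + x(k)."""
    u, prev = [], 0.0
    for xk in x:
        prev = a * prev + xk
        u.append(prev)
    return u

a = 0.95  # hypothetical pole close to the unit circle
impulse = [1.0] + [0.0] * 199
iir_response = one_pole_iir(a, impulse)

def worst_mismatch(num_taps):
    """Largest impulse-response error of an FIR filter with taps a^i."""
    fir_response = fir_filter([a ** i for i in range(num_taps)], impulse)
    return max(abs(p - q) for p, q in zip(iir_response, fir_response))

mismatch_10 = worst_mismatch(10)    # 10 FIR taps versus one IIR parameter
mismatch_100 = worst_mismatch(100)  # 100 FIR taps versus one IIR parameter
```

For this example the truncation error of the FIR approximation equals the first neglected impulse-response sample, so many taps are needed before the FIR filter reproduces the slowly decaying response that the IIR filter obtains from a single parameter.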

The limited use of adaptive IIR filters (compared to the vast number of applications for the FIR filters) suggests that the above mentioned advantages come at

a certain cost. In particular, adaptive IIR filters are only conditionally stable, and

therefore some provisions are required to assure stability of the filter at each iteration.

There are solutions such as the Schur-Cohn algorithm ([29] pages 271-273) that monitor

the stability of the IIR filter (by determining whether all roots of the denominator of

the IIR filter transfer function are inside the unit circle). This however requires intensive on-line calculations. Alternative implementations of adaptive IIR filters (such

as parallel implementation [48], and lattice implementation [38]) have been suggested

that provide simpler stability monitoring capabilities. The monitoring process is independent of the adaptation process here. In other words, the adaptation criteria

do not inherently reject de-stabilizing values for filter weights. The monitoring process detects these de-stabilizing values and prevents their implementation. Another

significant problem with adaptive IIR filter design stems from the fact that the performance surface (see [33], Chapter 3) for adaptive IIR filters is generally non-quadratic

(see [33] pages 91-94 for instance) and often contains multiple local minima. Therefore, the weight vector may converge to a local minimum only (hence non-optimal

cost). Furthermore, it is noted that the adaptation rate for adaptive IIR filters can

be slow when compared to the FIR adaptive filters [33,31]. Early works in adaptive

IIR filtering (e.g. [16]) are for the most part extensions to Widrow’s LMS algorithm

of adaptive FIR filtering [51]. More recent works include modifications to the recursive

LMS algorithm (e.g. [15]) that are devised for specific applications. In other words,

existing design techniques for adaptive IIR filters are application-specific and rely on

certain restrictive assumptions in their derivation. Our description of the Filtered-U

recursive LMS algorithm in Chapter 3 will further clarify this point. Furthermore,

as [33] points out: “The properties of an adaptive IIR filter are considerably more

complex than those of the conventional adaptive FIR filter, and consequently it is

more difficult to predict their behavior.” Thus, a framework that allows a unified

approach to the synthesis and analysis of adaptive IIR filters, and does not require

restrictive assumptions for its derivation would be extremely useful. As mentioned

earlier, this thesis provides such a framework.
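The kind of stability monitoring mentioned above can be sketched in Python as follows. The sketch uses the standard step-down (reflection coefficient) recursion, which is one common textbook form of a Schur-Cohn-type test for real coefficients; it is not necessarily the exact variant described in [29], and the function name `is_stable` is hypothetical.

```python
def is_stable(denominator):
    """Check whether all roots of the denominator lie inside the unit circle.

    `denominator` holds the real coefficients [1, a1, ..., aM] of
    1 + a1 z^-1 + ... + aM z^-M. The test succeeds if and only if every
    reflection coefficient has magnitude strictly less than one.
    """
    a = [c / denominator[0] for c in denominator[1:]]
    while a:
        k = a[-1]  # reflection coefficient of the current order
        if abs(k) >= 1.0:
            return False
        m = len(a)
        # Step down one order: a_i <- (a_i - k a_{m-i}) / (1 - k^2), i = 1..m-1
        a = [(a[i] - k * a[m - 2 - i]) / (1.0 - k * k) for i in range(m - 1)]
    return True

stable_case = is_stable([1.0, -1.3, 0.4])       # poles at 0.5 and 0.8
unstable_case = is_stable([1.0, -1.75, 0.625])  # poles at 0.5 and 1.25
```

A monitor of this type would be evaluated on the candidate denominator at every iteration, independently of the adaptation rule, which is the source of the on-line computational burden noted in the text.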

Finally, for a wide variety of applications such as equalization in wireless communication channels, and active control of sound and vibration in an environment

where the effect of a number of primary sources should be canceled by a number of

control (secondary) sources, the use of a multi-channel adaptive algorithm is well justified. In general, however, variations of the LMS algorithm are not easy to extend to

multi-channel systems. Furthermore, the analysis of the performance and properties

of such multi-channel algorithms is complicated [33]. As Ref. [33] points out, in the

context of active noise cancellation, the successful implementation of multi-channel

adaptive algorithms has so far been limited to cases involving repetitive noise with a

few harmonics [39,43,49,13]. For the approach presented in this thesis, the syntheses

of single-channel and multi-channel adaptive algorithms are virtually identical. This

similarity is a direct result of the way the synthesis problem is formulated (see Chapter 4).

1.4 Contributions

In meeting the goals of this research, the following contributions have been made to

adaptive filtering and control:

1. An estimation-interpretation for adaptive “Filtered” LMS filtering (control)

problems is developed. This interpretation allows an equivalent estimation formulation for the adaptive filtering (control) problem. The adaptation criterion

for the adaptive filter weight vector is extracted from the solution to this equivalent estimation problem. This constitutes a systematic synthesis procedure for

adaptive filters in filtered LMS problems. The new synthesis procedure is called

Estimation-Based Adaptive Filtering (EBAF).

2. Using an H∞ criterion to formulate the “equivalent” estimation problem, this

thesis develops a new framework for the systematic analysis of Filtered LMS

adaptive algorithms. In particular, the results in this thesis extend the fundamental connection between the LMS adaptive algorithm and robust estimation

(i.e. H∞ optimality of the LMS algorithm [26]) to the more general setting of

filtered LMS adaptive problems.

3. For the EBAF algorithm in the FIR case:

(a) It is shown that the adaptive weight vector update can be based on the

central filtering (prediction) solution to a linear H∞ estimation problem,

the existence of which is guaranteed. It is also shown that the maximum

energy gain in this case can be minimized. Furthermore, the optimal energy gain is proved to be unity, and the conditions under which this bound

is achievable are derived.

(b) The adaptive algorithm is shown to be implementable in real-time. The

update rule requires a simple Lyapunov recursion that leads to a computational complexity comparable to that of filtered LMS adaptive algorithms

(e.g. FxLMS). Experimental data, along with extensive simulations,

are presented to demonstrate the improved steady-state performance of

the EBAF algorithm (over FxLMS and Normalized-FxLMS algorithms),

as well as a faster transient response.

(c) A clear connection between the limiting behavior of the EBAF algorithm

and the existing FxLMS and Normalized-FxLMS adaptive algorithms has

been established.

4. For the EBAF algorithm in the IIR case, it is shown that the equivalent estimation problem is nonlinear. A linearizing approximation is then employed

that makes systematic synthesis of adaptive IIR filters tractable. The performance of the EBAF algorithm in this case is compared to the performance of

the Filtered-U LMS (FuLMS) adaptive algorithm, demonstrating the improved

performance in the EBAF case.

5. The treatment of the feedback contamination problem is shown to be identical to

the IIR adaptive filter design in the new estimation-based framework.

6. A multi-channel extension of the EBAF algorithm demonstrates that the treatment of the single-channel and multi-channel adaptive filtering (control) problems in the new estimation-based framework is virtually the same. Simulation

results for the problem of vibration isolation in a 3-input/3-output vibration isolation platform (VIP) demonstrate the feasibility of the EBAF algorithm in multi-channel

problems.

7. The new estimation-based framework is shown to be amenable to a Linear Matrix Inequality (LMI) formulation. The LMI formulation is used to explicitly

address the stability of the overall system under the adaptive algorithm by producing a Lyapunov function. It is also shown to be an appropriate framework to

address the robustness of the adaptive algorithm to modeling error or parameter uncertainty. Augmentation of an H2 performance constraint to the H∞

disturbance rejection criterion is also discussed.

1.5 Thesis Outline

The organization of this thesis is as follows. In Chapter 2, the fundamental concepts

of the estimation-based adaptive filtering (EBAF) algorithm are introduced. The

application of the EBAF approach in the case of adaptive FIR filter design is also

presented in this chapter. In Chapter 3, the extension of the EBAF approach to the

adaptive IIR filter design is discussed. A multi-channel implementation of the EBAF

algorithm is presented in Chapter 4. An LMI formulation for the EBAF algorithm is

derived in Chapter 5. Chapter 6 concludes this dissertation with a summary of the

main results and suggestions for future work. This dissertation contains three

appendices. An algebraic proof for the feasibility of the unity energy gain in the

estimation problem associated with adaptive FIR filter design (in Chapter 2) is discussed in Appendix A. The problem of feedback contamination is formally addressed

in Appendix B. A detailed discussion of the identification process is presented in Appendix C. The identified model for the Vibration Isolation Platform (VIP), used as a

test-bed for multi-channel implementation of the EBAF algorithm, is also presented

in this appendix.

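Fig. 1.1: General block diagram for an FIR Filter. [Diagram: the input x(k) passes through a chain of unit delays z^{-1} producing x(k − 1), x(k − 2), ..., x(k − N); the taps are weighted by W0, W1, W2, ..., WN−1, WN and summed to give u(k).]

Fig. 1.2: General block diagram for an IIR Filter. [Diagram: input x(k), output u(k), and an internal signal r(k) whose delayed values (e.g. r(k − 2)) are obtained through unit delays z^{-1}; gains a0, a1, a2, ..., aN and b1, b2, ..., bN.]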

Chapter 2

Estimation-Based Adaptive FIR Filter Design

This chapter presents a systematic synthesis procedure for H∞ -optimal adaptive FIR

filters in the context of an Active Noise Cancellation (ANC) problem. An estimation

interpretation of the adaptive control problem is introduced first. Based on this interpretation, an H∞ estimation problem is formulated, and its finite horizon prediction

(filtering) solutions are discussed. The solution minimizes the maximum energy gain

from the disturbances to the predicted (filtered) estimation error, and serves as the

adaptation criterion for the weight vector in the adaptive FIR filter. This thesis refers

to the new adaptation scheme as Estimation-Based Adaptive Filtering (EBAF). It

is shown in this chapter that the steady-state gain vectors in the EBAF algorithm

approach those of the classical Filtered-X LMS (Normalized Filtered-X LMS) algorithm. The error terms, however, are shown to be different, thus demonstrating that

the classical algorithms can be thought of as an approximation to the new EBAF

adaptive algorithm.

The proposed EBAF algorithm is applied to an active noise cancellation problem

(both narrow-band and broad-band cases) in a one-dimensional acoustic duct. Experimental data as well as simulations are presented to examine the performance of

the new adaptive algorithm. Comparisons to the results from a conventional FxLMS

algorithm show faster convergence without compromising steady-state performance

and/or robustness of the algorithm to feedback contamination of the reference signal.

2.1 Background

This section introduces the context in which the new estimation-based adaptive filtering (EBAF) algorithm will be presented. It defines the adaptive filtering problem

of interest and describes the terminology that is used in this chapter. A conventional

solution to the problem based on the FxLMS algorithm is also outlined in this section. The discussion of key concepts of the EBAF algorithm and the mathematical

formulation of the algorithm are left to Sections 2.2 and 2.3, respectively.

Referring to Fig. 2.1, the objective in this adaptive filtering problem is to adjust

the weight vector in the adaptive FIR filter, $W(k) = [w_0(k)\ w_1(k)\ \cdots\ w_N(k)]^T$ (k is

the discrete time index), such that the cancellation error, d(k) −y(k), is small in some

appropriate measure. Note that d(k) and y(k) are outputs of the primary path P (z)

and the secondary path S(z), respectively. Moreover,

1. n(k) is the input to the primary path,

2. x(k) is a properly selected reference signal with a non-zero correlation with the

primary input,

3. u(k) is the control signal applied to the secondary path (generated as $u(k) \triangleq [x(k)\ x(k-1)\ \cdots\ x(k-N)]\, W(k)$),

4. e(k) is the measured residual error available to the adaptation scheme.

Note that in typical practice, x(k) is obtained via some measurement of the primary

input. The quality of this measurement will impact the correlation between the

reference signal and the primary input. Similar to the conventional development of

the FxLMS algorithm, however, this chapter assumes perfect correlation between the

two.

The Filtered-X LMS (FxLMS) solution to this problem is shown in Figure 2.2

where perfect correlation between the primary disturbance n(k) and the reference

signal x(k) is assumed [51,33]. Minimizing the instantaneous squared error, e2 (k), as

an approximation to the mean-square error, FxLMS follows the LMS update criterion

(i.e. to recursively adapt the weight vector in the negative gradient direction)

\[
W(k+1) = W(k) - \frac{\mu}{2}\, \nabla e^2(k), \qquad
e(k) = d(k) - y(k) = d(k) - S(k) \oplus u(k)
\]

where µ is the adaptation rate, S(k) is the impulse response of the secondary path,

and “⊕” indicates convolution. Assuming slow adaptation, the FxLMS algorithm

then approximates the instantaneous gradient in the weight vector update with

\[
\nabla e^2(k) \cong -2\, [x'(k)\ \ x'(k-1)\ \ \cdots\ \ x'(k-N)]^T e(k) \triangleq -2\, h'(k)\, e(k) \tag{2.1}
\]

where $x'(k) \triangleq S(k) \oplus x(k)$ represents a filtered version of the reference signal which

is available to the LMS adaptation (and hence the name (Normalized) Filtered-X

LMS). This yields the following adaptation criterion for the FxLMS algorithm

\[
W(k+1) = W(k) + \mu\, h'(k)\, e(k) \tag{2.2}
\]

A closely related adaptive algorithm is the one in which the adaptation rate is

normalized with the estimate of the power of the reference vector, i.e.

\[
W(k+1) = W(k) + \mu\, \frac{h'(k)}{1 + \mu\, {h'}^{*}(k)\, h'(k)}\, e(k) \tag{2.3}
\]

where ∗ indicates complex conjugate. This algorithm is known as the Normalized-FxLMS algorithm.

In practice, however, only an approximate model of the secondary path (obtained

via some identification scheme) is known, and it is this approximate model that is

used to filter the reference signal. For further discussion on the derivation and analysis

of the FxLMS algorithm please refer to [33,7].
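The FxLMS update of Eq. (2.2) can be illustrated with the following minimal Python sketch for real-valued signals. The secondary path `s_path`, the weights `w_opt` used to construct a primary path that can be canceled exactly, the step size, and all function names are hypothetical values chosen for this illustration; the secondary-path model is assumed to be perfect here, which is rarely the case in practice.

```python
import random

def fir_output(coeffs, signal, k):
    """Output at time k of an FIR filter driven by `signal` (zero for k < 0)."""
    return sum(c * signal[k - j] for j, c in enumerate(coeffs) if k - j >= 0)

def fxlms(x, d, s_true, s_model, num_taps, mu):
    """FxLMS adaptation; returns the final weights and the error history."""
    w = [0.0] * num_taps
    u, x_filt, errors = [], [], []
    for k in range(len(x)):
        h = [x[k - i] if k - i >= 0 else 0.0 for i in range(num_taps)]
        u.append(sum(wi * hi for wi, hi in zip(w, h)))  # u(k) = h(k)^T W(k)
        y = fir_output(s_true, u, k)                    # y(k) = S(k) (+) u(k)
        e = d[k] - y                                    # measured residual
        x_filt.append(fir_output(s_model, x, k))        # x'(k) = S(k) (+) x(k)
        h_filt = [x_filt[k - i] if k - i >= 0 else 0.0 for i in range(num_taps)]
        w = [wi + mu * hf * e for wi, hf in zip(w, h_filt)]  # Eq. (2.2)
        errors.append(e)
    return w, errors

random.seed(1)
x = [random.uniform(-1.0, 1.0) for _ in range(5000)]
s_path = [1.0, 0.5]       # hypothetical secondary path impulse response
w_opt = [0.6, -0.4, 0.1]  # hypothetical weights giving exact cancellation
v = [fir_output(w_opt, x, k) for k in range(len(x))]
d = [fir_output(s_path, v, k) for k in range(len(x))]  # primary path output
w_hat, errors = fxlms(x, d, s_path, s_path, num_taps=3, mu=0.05)
```

Because the primary path in this example is constructed as the cascade of `w_opt` and `s_path`, perfect cancellation is achievable and the residual error decays toward zero; a mismatch between `s_true` and `s_model` can be introduced to study the effect of an imperfect secondary-path model.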

2.2 EBAF Algorithm - Main Concept

The principal goal of this section is to introduce the underlying concepts of the new

EBAF algorithm. For the developments in this section, perfect correlation between

n(k) and x(k) in Fig. 2.1 is assumed (i.e. x(k) = n(k) for all k). This is the same

condition under which the FxLMS algorithm was developed. The dynamics of the

secondary path are assumed known (e.g. by system identification). No explicit model

for the primary path is needed.

As stated before, the objective in the adaptive filtering problem of Fig. 2.1 is to

generate a control signal, u(k), such that the output of the secondary path, y(k), is

“close” to the output of the primary path, d(k). To achieve this goal, for the given

reference signal x(k), the series connection of the FIR filter and the secondary path

must constitute an appropriate model for the unknown primary path. In other words,

with the adaptive FIR filter properly adjusted, the path from x(k) to d(k) must be

equivalent to the path from x(k) to y(k). Based on this observation, in Fig. 2.3 the

structure of the path from x(k) to y(k) is used to model the primary path. The

modeling error is included to account for the imperfect cancellation.

The above mentioned observation forms the basis for an estimation interpretation of the adaptive control problem. The following outlines the main steps for this

interpretation:

1. Introduce an approximate model for the primary path based on the architecture

of the adaptive path from x(k) to y(k) (as shown in Fig. 2.3). There is an

optimal value for the weight vector in the approximate model’s FIR filter for

which the modeling error is the smallest. This optimal weight vector, however,

is not known. State-space models are used for both the FIR filter and the secondary

path.

2. In the approximate model for the primary path, use the available information to

formulate an estimation problem that recursively estimates this optimal weight

vector.

3. Adjust the weight vector of the adaptive FIR filter to the best available estimate

of the optimal weight vector.

Before formalizing this estimation-based approach, a closer look at the signals

(i.e. information) involved in Fig. 2.1 is provided. Note that e(k) = d(k) − y(k) +

Vm (k), where

a. e(k) is the available measurement.

b. Vm (k) is the exogenous disturbance that captures the effect of measurement

noise, modeling error, and the initial condition uncertainty in error measurements.

c. y(k) is the output of the secondary path.

d. d(k) is the output of the primary path.

Note that unlike e(k), the signals y(k) and d(k) are not directly measurable. With

u(k) fully known, however, the assumption of a known initial condition for the secondary path leads to the exact knowledge of y(k). This assumption is relaxed later

in this chapter, where the effect of an “inexact” initial condition on the performance

of the adaptive filter is studied (Section 2.7).

The derived measured quantity that will be used in the estimation process can

now be introduced as

\[
m(k) \triangleq e(k) + y(k) = d(k) + V_m(k) \tag{2.4}
\]

2.3 Problem Formulation

Figure 2.4 shows a block diagram representation of the approximate model to the

primary path. A state space model, $[A_s(k),\ B_s(k),\ C_s(k),\ D_s(k)]$, for the secondary

path is assumed. Note that both primary and secondary paths are assumed stable.

The weight vector, $W(k) = [w_0(k)\ w_1(k)\ \cdots\ w_N(k)]^T$, is treated as the state vector capturing the trivial dynamics, $W(k+1) = W(k)$, that is assumed for the FIR filter. With $\theta(k)$ the state variable for the secondary path, $\xi_k^T = [W^T(k)\ \ \theta^T(k)]$ is the state vector for the overall system.

The state space representation of the system is then

\[
\begin{bmatrix} W(k+1) \\ \theta(k+1) \end{bmatrix}
=
\begin{bmatrix} I_{(N+1)\times(N+1)} & 0 \\ B_s(k)\, h^*(k) & A_s(k) \end{bmatrix}
\begin{bmatrix} W(k) \\ \theta(k) \end{bmatrix}
\triangleq F_k\, \xi_k \tag{2.5}
\]

where $h(k) = [x(k)\ x(k-1)\ \cdots\ x(k-N)]^T$ captures the effect of the reference input

x(·). For this system, the derived measured output defined in Eq. (2.4) is

\[
m(k) = \begin{bmatrix} D_s(k)\, h^*(k) & C_s(k) \end{bmatrix}
\begin{bmatrix} W(k) \\ \theta(k) \end{bmatrix} + V_m(k)
\triangleq H_k\, \xi_k + V_m(k) \tag{2.6}
\]

A linear combination of the states is defined as the desired quantity to be estimated

\[
s(k) = \begin{bmatrix} L_{1,k} & L_{2,k} \end{bmatrix}
\begin{bmatrix} W(k) \\ \theta(k) \end{bmatrix}
\triangleq L_k\, \xi_k \tag{2.7}
\]

For simplicity, the single-channel problem is considered here. Extension to the multi-channel case is straightforward and is discussed in Chapter 4. Therefore, $m(k) \in \mathbb{R}^{1\times 1}$,

$s(k) \in \mathbb{R}^{1\times 1}$, $\theta(k) \in \mathbb{R}^{N_s\times 1}$, and $W(k) \in \mathbb{R}^{(N+1)\times 1}$. All matrices are then

of appropriate dimensions. There are several alternatives for selecting $L_k$ and thus

the variable to be estimated, s(k). The end goal of the estimation-based approach,

however, is to set the weight vector in the adaptive FIR filter such that the output

of the secondary path, y(k) in Fig. 2.3, best matches d(k). So s(k) = d(k) is chosen,

i.e. $L_k = H_k$.
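The structure of Eqs. (2.5)-(2.6) can be checked numerically with a short Python sketch. It assumes real-valued signals (so that $h^*(k)$ is simply the transpose of h(k)), a time-invariant secondary path with a scalar state, and N = 1; the numerical values and the helper name `build_F_H` are hypothetical. The sketch verifies that the noise-free output $H_k \xi_k$ of the augmented model coincides with a direct simulation of the FIR filter followed by the secondary path.

```python
def build_F_H(h, A_s, B_s, C_s, D_s):
    """F_k and H_k of Eqs. (2.5)-(2.6) for a scalar-state secondary path."""
    n = len(h)
    # Top block rows: identity for the weights, zero coupling to theta.
    F = [[1.0 if i == j else 0.0 for j in range(n + 1)] for i in range(n)]
    # Bottom block row: [B_s h^T, A_s].
    F.append([B_s * hi for hi in h] + [A_s])
    H = [D_s * hi for hi in h] + [C_s]
    return F, H

A_s, B_s, C_s, D_s = 0.5, 1.0, 0.4, 0.2  # hypothetical secondary path
W = [0.7, -0.2]                           # fixed weight vector (N = 1)
x = [1.0, -0.5, 0.25, 2.0, 0.0, -1.0]

xi = W + [0.0]  # xi_0 = [W; theta_0] with theta_0 = 0
theta = 0.0
s_augmented, s_direct = [], []
for k in range(len(x)):
    h = [x[k], x[k - 1] if k >= 1 else 0.0]
    F, H = build_F_H(h, A_s, B_s, C_s, D_s)
    s_augmented.append(sum(Hj * xj for Hj, xj in zip(H, xi)))
    xi = [sum(Fij * xj for Fij, xj in zip(row, xi)) for row in F]
    # Direct simulation: FIR filter output u(k) driving the secondary path.
    u = sum(wi * hi for wi, hi in zip(W, h))
    s_direct.append(C_s * theta + D_s * u)
    theta = A_s * theta + B_s * u
```

The two output sequences agree to numerical precision, and the weight portion of the state remains unchanged, consistent with the trivial dynamics W(k + 1) = W(k) assumed for the FIR filter.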

Any estimation algorithm can now be used to generate an estimate of the desired

quantity s(k). Two main estimation approaches are considered next.

2.3.1 H2 Optimal Estimation

Here a stochastic interpretation of the estimation problem is possible. Assuming that

$\xi_0$ (the initial condition for the system in Figure 2.4) and $V_m(\cdot)$ are zero mean uncorrelated random variables with known covariance matrices

\[
E \begin{bmatrix} \xi_0 \\ V_m(k) \end{bmatrix}
\begin{bmatrix} \xi_0^* & V_m^*(j) \end{bmatrix}
=
\begin{bmatrix} \Pi_0 & 0 \\ 0 & Q_k\, \delta_{kj} \end{bmatrix} \tag{2.8}
\]

$\hat{s}(k|k) \triangleq \mathcal{F}(m(0), \cdots, m(k))$, the causal linear least-mean-squares estimate of s(k), is

given by the Kalman filter recursions [27].

There are two primary difficulties with the H2 optimal solution: (a) The H2 solution is optimal only if the stochastic assumptions are valid. If the external disturbance

is not Gaussian (for instance when there is a considerable modeling error that should

be treated as a component of the measurement disturbance) then pursuing an H2

filtering solution may yield undesirable performance; and (b) regardless of the choice

for Lk , the recursive H2 filtering solution does not simplify to the same extent as

the H∞ solution considered below. This can be of practical importance when the

real-time computational power is limited. Therefore, the H2 optimal solution is not

employed in this chapter.

2.3.2 H∞ Optimal Estimation

To avoid difficulties associated with the H2 estimation, we consider a minmax formulation of the estimation problem in this section. Here, the main objective is to limit

the worst case energy gain from the measurement disturbance and the initial condition uncertainty to the error in a causal (or strictly causal) estimate of s(k). More

specifically, the following two cases are of interest. Let $\hat{s}(k|k) = \mathcal{F}_f(m(0), \cdots, m(k))$

denote an estimate of s(k) given observations m(i) for time i = 0 up to and including

time i = k, and let $\hat{s}(k) \triangleq \hat{s}(k|k-1) = \mathcal{F}_p(m(0), \cdots, m(k-1))$ denote an estimate

of s(k) given m(i) for time i = 0 up to and including i = k − 1. Note that $\hat{s}(k|k)$

and $\hat{s}(k)$ are known as filtering and prediction estimates of s(k), respectively. Two

estimation errors can now be defined: the filtered error

\[
e_{f,k} = \hat{s}(k|k) - s(k) \tag{2.9}
\]

and the predicted error

\[
e_{p,k} = \hat{s}(k) - s(k) \tag{2.10}
\]

Given a final time M, the objective of the filtering problem can now be formalized as

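finding $\hat{s}(k|k)$ such that for $\Pi_0 > 0$

\[
\sup_{V_m,\ \xi_0}
\frac{\displaystyle \sum_{k=0}^{M} e_{f,k}^*\, e_{f,k}}
{\displaystyle (\xi_0 - \hat{\xi}_0)^*\, \Pi_0^{-1}\, (\xi_0 - \hat{\xi}_0) + \sum_{k=0}^{M} V_m^*(k)\, V_m(k)}
\le \gamma^2 \tag{2.11}
\]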

for a given scalar γ > 0. In a similar way, the objective of the prediction problem can

be formalized as finding ŝ(k) such that

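\[
\sup_{V_m,\ \xi_0}
\frac{\displaystyle \sum_{k=0}^{M} e_{p,k}^*\, e_{p,k}}
{\displaystyle (\xi_0 - \hat{\xi}_0)^*\, \Pi_0^{-1}\, (\xi_0 - \hat{\xi}_0) + \sum_{k=0}^{M} V_m^*(k)\, V_m(k)}
\le \gamma^2 \tag{2.12}
\]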

for a given scalar γ > 0. The question of optimality of the solution can be answered

by finding the infimum value among all feasible γ’s. Note that H∞ optimal

estimation makes no statistical assumption regarding the measurement disturbance.

Therefore, the inclusion of the output of the modeling error block (see Fig. 2.3) in

the measurement disturbance is consistent with the H∞ formulation of the problem. The

elimination of the “modeling error” block in the approximate model of the primary path

in Fig. 2.4 is based on this characteristic of the disturbance in an H∞ formulation.
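The ratio inside the supremum of Eqs. (2.11) and (2.12) can be evaluated for any single realization of the disturbance and the initial condition, and each such evaluation is a lower bound on the worst-case gain of a candidate estimator. The following is a minimal Python sketch of that evaluation, assuming NumPy and signals stored as arrays. The function name `energy_gain`, its arguments, and the numbers in the example are illustrative assumptions and do not appear in the original text.

```python
import numpy as np

def energy_gain(errors, disturbances, xi0_error, Pi0):
    """Ratio inside the supremum of Eq. (2.11) or (2.12) for one realization.

    errors       : sequence of e_{f,k} (or e_{p,k}) vectors, k = 0..M
    disturbances : sequence of V_m(k) vectors, k = 0..M
    xi0_error    : the vector xi_0 - xi_hat_0
    Pi0          : positive definite weighting matrix Pi_0
    """
    # Numerator: total estimation error energy.
    num = sum(np.vdot(e, e).real for e in errors)
    # Denominator: weighted initial-condition error plus disturbance energy.
    init = np.vdot(xi0_error, np.linalg.solve(Pi0, xi0_error)).real
    den = init + sum(np.vdot(v, v).real for v in disturbances)
    return num / den

# Hypothetical numbers, chosen only to exercise the function.
errors = [np.array([0.5]), np.array([1.0])]
disturbances = [np.array([1.0]), np.array([2.0])]
ratio = energy_gain(errors, disturbances, np.array([1.0, 0.0]), np.eye(2))

gamma = 1.0
# A single realization can only disprove a level-gamma claim, never prove it.
meets_bound = ratio <= gamma**2
```

A ratio above γ² for any one realization shows that the estimator is not a level-γ estimator; a ratio below γ² is only consistent with the bound, since the supremum is taken over all disturbances and initial conditions.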

2.4

H∞ -Optimal Solution

For the remainder of this chapter, the case where Lk = Hk is considered. Referring

to Figure 2.4, this means that s(k) = d(k). The solutions to the γ-suboptimal

finite-horizon filtering problem of Eq. (2.11) and the prediction problem of Eq. (2.12)

are first drawn from [27]. The optimal value of γ is then found, and it is shown how

γ = γopt simplifies the solutions.

2.4.1

γ-Suboptimal Finite Horizon Filtering Solution

Theorem 2.1: [27] Consider the state space representation of the block diagram of

Figure 2.4, described by Equations (2.5)-(2.7). A level-γ H∞ filter that achieves

(2.11) exists if, and only if, the matrices

$$R_k = \begin{bmatrix} I_p & 0 \\ 0 & -\gamma^2 I_q \end{bmatrix} \quad \text{and} \quad R_{e,k} = \begin{bmatrix} I_p & 0 \\ 0 & -\gamma^2 I_q \end{bmatrix} + \begin{bmatrix} H_k \\ L_k \end{bmatrix} P_k \begin{bmatrix} H_k^{*} & L_k^{*} \end{bmatrix} \tag{2.13}$$

(here p and q are used to indicate the correct dimensions) have the same inertia for

all 0 ≤ k ≤ M, where $P_0 = \Pi_0 > 0$ satisfies the Riccati recursion

$$P_{k+1} = F_k P_k F_k^{*} - K_{f,k}\, R_{e,k}\, K_{f,k}^{*} \tag{2.14}$$


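where

$$K_{f,k} = F_k P_k \begin{bmatrix} H_k^{*} & L_k^{*} \end{bmatrix} R_{e,k}^{-1} \tag{2.15}$$

If this is the case, then the central H∞ estimator is given by

$$\hat{\xi}_{k+1} = F_k \hat{\xi}_k + K_{f,k} \left( m(k) - H_k \hat{\xi}_k \right), \quad \hat{\xi}_0 = 0 \tag{2.16}$$

$$\hat{s}(k|k) = L_k \hat{\xi}_k + \left( L_k P_k H_k^{*} \right) R_{He,k}^{-1} \left( m(k) - H_k \hat{\xi}_k \right) \tag{2.17}$$

with $K_{f,k} = \left( F_k P_k H_k^{*} \right) R_{He,k}^{-1}$ and $R_{He,k} = I_p + H_k P_k H_k^{*}$.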

Proof: see [27].
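One time step of Theorem 2.1 can be sketched in Python as follows, assuming NumPy and dense matrices. This is an illustration under stated assumptions, not the implementation used in this work: the function names, the eigenvalue-based inertia test with tolerance `tol`, and the example numbers are all hypothetical. Because the text uses the symbol $K_{f,k}$ both for the gain in the Riccati recursion, Eq. (2.15), and for the estimator gain, the code names the former `K_riccati` and forms the latter inline.

```python
import numpy as np

def inertia(S, tol=1e-10):
    """(positive, negative, zero) eigenvalue counts of a Hermitian matrix."""
    w = np.linalg.eigvalsh(S)
    return (int(np.sum(w > tol)), int(np.sum(w < -tol)),
            int(np.sum(np.abs(w) <= tol)))

def hinf_filter_step(F, H, L, P, xi_hat, m, gamma):
    """One step of the level-gamma filter of Theorem 2.1 (a sketch)."""
    p, q = H.shape[0], L.shape[0]
    R = np.block([[np.eye(p), np.zeros((p, q))],
                  [np.zeros((q, p)), -gamma**2 * np.eye(q)]])
    HL = np.vstack([H, L])
    Re = R + HL @ P @ HL.conj().T                        # Eq. (2.13)
    if inertia(Re) != inertia(R):
        raise ValueError("inertia test of Theorem 2.1 fails at this step")
    K_riccati = F @ P @ HL.conj().T @ np.linalg.inv(Re)  # Eq. (2.15)
    P_next = F @ P @ F.conj().T - K_riccati @ Re @ K_riccati.conj().T  # (2.14)
    RHe = np.eye(p) + H @ P @ H.conj().T
    correction = np.linalg.solve(RHe, m - H @ xi_hat)    # R_He^{-1} * innovation
    xi_next = F @ xi_hat + F @ P @ H.conj().T @ correction   # Eq. (2.16)
    s_filt = L @ xi_hat + L @ P @ H.conj().T @ correction    # Eq. (2.17)
    return xi_next, s_filt, P_next

# Hypothetical example with L = H, gamma = 1, and a zero innovation.
F = 0.9 * np.eye(2)
H = np.array([[1.0, 0.5]])
xi_hat = np.array([1.0, 2.0])
m = H @ xi_hat
xi_next, s_filt, P_next = hinf_filter_step(F, H, H, np.eye(2), xi_hat, m, 1.0)
```

With a zero innovation the step returns $F_k \hat{\xi}_k$ and $L_k \hat{\xi}_k$, and for these particular numbers (γ = 1, $L_k = H_k$) the Riccati update returns $F_k P_k F_k^{*}$.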

2.4.2

γ-Suboptimal Finite Horizon Prediction Solution

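Theorem 2.2: [27] For the system described by Equations (2.5)-(2.7), a level-γ H∞

filter that achieves (2.12) exists if, and only if, all leading sub-matrices of

$$R_k = \begin{bmatrix} -\gamma^2 I_p & 0 \\ 0 & I_q \end{bmatrix} \quad \text{and} \quad R_{e,k} = \begin{bmatrix} -\gamma^2 I_p & 0 \\ 0 & I_q \end{bmatrix} + \begin{bmatrix} L_k \\ H_k \end{bmatrix} P_k \begin{bmatrix} L_k^{*} & H_k^{*} \end{bmatrix} \tag{2.18}$$

have the same inertia for all 0 ≤ k < M. Note that $P_k$ is updated according to Eq.

(2.14). If this is the case, then one possible level-γ H∞ filter is given by

$$\hat{\xi}_{k+1} = F_k \hat{\xi}_k + K_{p,k} \left( m(k) - H_k \hat{\xi}_k \right), \quad \hat{\xi}_0 = 0 \tag{2.19}$$

$$\hat{s}(k) = L_k \hat{\xi}_k \tag{2.20}$$

where

$$K_{p,k} = F_k \tilde{P}_k H_k^{*} \left( I + H_k \tilde{P}_k H_k^{*} \right)^{-1} \tag{2.21}$$

and

$$\tilde{P}_k = \left( I - \gamma^{-2} P_k L_k^{*} L_k \right)^{-1} P_k \tag{2.22}$$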

Proof: see [27].

Note that the condition in Eq. (2.18) is equivalent to

$$I - \gamma^{-2} P_k L_k^{*} L_k > 0, \quad \text{for } k = 0, \cdots, M \tag{2.23}$$

and hence $\tilde{P}_k$ in Eq. (2.22) is well defined. $\tilde{P}_k$ can also be defined as

$$\tilde{P}_k^{-1} = P_k^{-1} - \gamma^{-2} L_k^{*} L_k, \quad \text{for } k = 0, \cdots, M \tag{2.24}$$


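which proves useful in rewriting the prediction coefficient, $K_{p,k}$ in Eq. (2.21), as

follows. First, note that

$$F_k \tilde{P}_k H_k^{*} \left( I + H_k \tilde{P}_k H_k^{*} \right)^{-1} = F_k \left( \tilde{P}_k^{-1} + H_k^{*} H_k \right)^{-1} H_k^{*} \tag{2.25}$$

and hence, replacing for $\tilde{P}_k^{-1}$ from Eq. (2.24)

$$K_{p,k} = F_k \left( P_k^{-1} - \gamma^{-2} L_k^{*} L_k + H_k^{*} H_k \right)^{-1} H_k^{*} \tag{2.26}$$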
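The equivalence of Eqs. (2.21)-(2.22) and Eq. (2.26) can be checked numerically. The sketch below, assuming NumPy, computes the prediction gain both ways for randomly generated matrices and compares the results. The function names, the random test data, and the choice of γ (taken large enough for condition (2.23) to hold) are assumptions made for illustration only.

```python
import numpy as np

def kp_direct(F, H, L, P, gamma):
    """K_{p,k} from Eqs. (2.21)-(2.22)."""
    n = P.shape[0]
    M = np.eye(n) - gamma**-2 * P @ L.conj().T @ L
    # Condition (2.23): the eigenvalues of M must all be positive.
    if np.min(np.linalg.eigvals(M).real) <= 0:
        raise ValueError("condition (2.23) fails for this gamma")
    P_tilde = np.linalg.solve(M, P)                      # Eq. (2.22)
    S = np.eye(H.shape[0]) + H @ P_tilde @ H.conj().T
    return F @ P_tilde @ H.conj().T @ np.linalg.inv(S)   # Eq. (2.21)

def kp_information_form(F, H, L, P, gamma):
    """K_{p,k} from Eq. (2.26)."""
    info = np.linalg.inv(P) - gamma**-2 * L.conj().T @ L + H.conj().T @ H
    return F @ np.linalg.solve(info, H.conj().T)

# Hypothetical random test data.
rng = np.random.default_rng(0)
n, p, q = 4, 2, 3
F = rng.standard_normal((n, n))
H = rng.standard_normal((p, n))
L = rng.standard_normal((q, n))
A = rng.standard_normal((n, n))
P = A @ A.T + np.eye(n)                                  # positive definite
gamma = 2.0 * np.sqrt(np.linalg.eigvalsh(L @ P @ L.T).max())

gap = np.max(np.abs(kp_direct(F, H, L, P, gamma)
                    - kp_information_form(F, H, L, P, gamma)))
```

The two forms agree to rounding error in this example. Eq. (2.26) requires one inverse of the size of the state, while Eq. (2.21) requires an inverse of the size of the measurement in addition to the one in Eq. (2.22).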

Theorems 2.1 and 2.2 (Sections 2.4.1 and 2.4.2) provide the form of the filtering and

prediction estimators, respectively. The following section investigates the optimal

value of γ for both of these solutions, and outlines the simplifications that follow.

2.4.3

The Optimal Value of γ

The optimal value of γ for the filtering solution will be discussed first. The discussion

of the optimal prediction solution utilizes the results in the filtering case.

2.4.3.1

Filtering Case

2.4.3.1.1 γopt ≤ 1: First, it will be shown that for the filtering solution γopt ≤ 1.

Using Eq. (2.11), one can always pick ŝ(k|k) to be simply m(k). With this choice

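$$\hat{s}(k|k) - s(k) = V_m(k), \quad \text{for all } k \tag{2.27}$$

and Eq. (2.11) reduces to

$$\sup_{V_m \in L_2,\,\xi_0} \frac{\displaystyle\sum_{k=0}^{M} V_m(k)^{*}\, V_m(k)}{(\xi_0 - \hat{\xi}_0)^{*}\, \Pi_0^{-1}\, (\xi_0 - \hat{\xi}_0) + \displaystyle\sum_{k=0}^{M} V_m(k)^{*}\, V_m(k)} \tag{2.28}$$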

which can never exceed 1 (i.e. γopt ≤ 1). A feasible solution for the H∞ estimation

problem in Eq. (2.11) is therefore guaranteed when γ is chosen to be 1. Note that

it is possible to directly demonstrate the feasibility of γ = 1. Using simple matrix


manipulation, it can be shown that for Lk = Hk and for γ = 1, Rk and Re,k have the

same inertia for all k.
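One way to see the inertia claim: with $L_k = H_k$, γ = 1, and $A = H_k P_k H_k^{*} \ge 0$, the matrix $R_{e,k}$ of Eq. (2.13) has the (1,1) block $I + A > 0$, and the Schur complement of that block is $-(I + A)^{-1} < 0$, so $R_{e,k}$ has p positive and p negative eigenvalues, as does $R_k$. The Python sketch below, assuming NumPy, checks the same statement numerically on randomly generated data. The function names, the tolerance, and the test data are hypothetical.

```python
import numpy as np

def inertia(S, tol=1e-10):
    """(positive, negative, zero) eigenvalue counts of a Hermitian matrix."""
    w = np.linalg.eigvalsh(S)
    return (int(np.sum(w > tol)), int(np.sum(w < -tol)),
            int(np.sum(np.abs(w) <= tol)))

def same_inertia_gamma_one(H, P):
    """Compare the inertia of R_k and R_{e,k} in Eq. (2.13) for L = H, gamma = 1."""
    p = H.shape[0]
    R = np.block([[np.eye(p), np.zeros((p, p))],
                  [np.zeros((p, p)), -np.eye(p)]])
    HH = np.vstack([H, H])                  # stacked [H; L] with L = H
    Re = R + HH @ P @ HH.conj().T
    return inertia(R) == inertia(Re)

# Hypothetical random trials: H is 2 x 5 and P is positive definite.
rng = np.random.default_rng(1)
results = []
for _ in range(20):
    H = rng.standard_normal((2, 5))
    A = rng.standard_normal((5, 5))
    P = A @ A.T + 0.1 * np.eye(5)
    results.append(same_inertia_gamma_one(H, P))
```

Every trial in this example reports matching inertia, in line with the feasibility of γ = 1 stated above.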

2.4.3.1.2 γopt ≥ 1: To show that γopt is indeed 1, an admissible sequence of disturbances and a valid initial condition should be constructed such that γ could be made

arbitrarily close to 1 regardless of the filtering solution chosen. The necessary and

sufficient conditions for the optimality of γopt = 1 are developed in the course of

constructing this admissible sequence of disturbances.

Assume that $\hat{\xi}_0 = \begin{bmatrix} \hat{W}_0^{T} & \hat{\theta}_0^{T} \end{bmatrix}^{T}$ is the best estimate for the initial condition of the

system in the approximate model of the primary path (Fig. 2.4). Moreover, assume

that θ̂0 is indeed the actual initial condition for the secondary path in Fig. 2.4. The

actual initial condition for the weight vector of the FIR filter in this approximate

model is W0 . Then,

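$$m(0) = \begin{bmatrix} D_s(0) h^{*}(0) & C_s(0) \end{bmatrix} \begin{bmatrix} W_0 \\ \hat{\theta}_0 \end{bmatrix} + V_m(0) \tag{2.29}$$

$$H_0 \hat{\xi}_0 = \begin{bmatrix} D_s(0) h^{*}(0) & C_s(0) \end{bmatrix} \begin{bmatrix} \hat{W}_0 \\ \hat{\theta}_0 \end{bmatrix} \tag{2.30}$$

where m(0) is the (derived) measurement at time k = 0. Now, if

$$V_m(0) = D_s(0) h^{*}(0) \left( \hat{W}_0 - W_0 \right) = K_V(0) \left( \hat{W}_0 - W_0 \right) \tag{2.31}$$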

then m(0) − H0 ξˆ0 = 0 and the estimate of the weight vector will not change. More

specifically, Eqs. (2.16) and (2.17) reduce to the following simple updates

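$$\hat{\xi}_1 = F_0 \hat{\xi}_0 \tag{2.32}$$

$$\hat{s}(0|0) = L_0 \hat{\xi}_0 \tag{2.33}$$

which, given $L_0 = H_0$, generates the estimation error

$$\begin{aligned} e_{f,0} &= \hat{s}(0|0) - s(0) \\ &= L_0 \hat{\xi}_0 - L_0 \xi_0 \\ &= D_s(0) h^{*}(0) \left( \hat{W}_0 - W_0 \right) \\ &= V_m(0) \end{aligned} \tag{2.34}$$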


Repeating a similar argument at k = 1 and 2, it is easy to see that if

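$$V_m(1) = \left[ D_s(1) h^{*}(1) + C_s(1) B_s(0) h^{*}(0) \right] \left( \hat{W}_0 - W_0 \right) = K_V(1) \left( \hat{W}_0 - W_0 \right) \tag{2.35}$$

and

$$\begin{aligned} V_m(2) &= \left[ D_s(2) h^{*}(2) + C_s(2) B_s(1) h^{*}(1) + C_s(2) A_s(1) B_s(0) h^{*}(0) \right] \left( \hat{W}_0 - W_0 \right) \\ &= K_V(2) \left( \hat{W}_0 - W_0 \right) \end{aligned} \tag{2.36}$$

then

$$m(k) - H_k \hat{\xi}_k = 0, \quad \text{for } k = 1, 2 \tag{2.37}$$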

Note that when Eq. (2.37) holds, and with Lk = Hk , Eq. (2.17) reduces to

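$$\hat{s}(k|k) = L_k \hat{\xi}_k = H_k \hat{\xi}_k \tag{2.38}$$

and hence

$$\begin{aligned} e_{f,k} &= \hat{s}(k|k) - s(k) \\ &= \hat{s}(k|k) - \left[ m(k) - V_m(k) \right] \\ &= H_k \hat{\xi}_k - \left[ m(k) - V_m(k) \right] \\ &= \left[ H_k \hat{\xi}_k - m(k) \right] + V_m(k) \\ &= V_m(k), \quad \text{for } k = 1, 2 \end{aligned} \tag{2.39}$$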

Continuing this process, KV (k), for 0 ≤ k ≤ M can be defined as

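$$\begin{bmatrix} K_V(0) \\ K_V(1) \\ K_V(2) \\ \vdots \\ K_V(M) \end{bmatrix} = \begin{bmatrix} D_s(0) & 0 & 0 & 0 & \cdots & 0 \\ C_s(1) B_s(0) & D_s(1) & 0 & 0 & \cdots & 0 \\ C_s(2) A_s(1) B_s(0) & C_s(2) B_s(1) & D_s(2) & 0 & \cdots & 0 \\ \vdots & \vdots & \vdots & \vdots & \ddots & \vdots \\ \cdots & & & & & D_s(M) \end{bmatrix} \begin{bmatrix} h^{*}(0) \\ h^{*}(1) \\ h^{*}(2) \\ \vdots \\ h^{*}(M) \end{bmatrix} \triangleq \Delta_M \Lambda_M \tag{2.40}$$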


such that Vm (k), ∀ k, is an admissible disturbance. In this case, Eq. (2.11) reduces

to

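$$\begin{aligned} &\sup_{\xi_0} \frac{\displaystyle\sum_{k=0}^{M} V_m(k)^{*}\, V_m(k)}{(\xi_0 - \hat{\xi}_0)^{*}\, \Pi_0^{-1}\, (\xi_0 - \hat{\xi}_0) + \displaystyle\sum_{k=0}^{M} V_m(k)^{*}\, V_m(k)} \\[1ex] &\quad = \sup_{\xi_0} \frac{(\hat{W}_0 - W_0)^{*} \left[ \displaystyle\sum_{k=0}^{M} K_V^{*}(k) K_V(k) \right] (\hat{W}_0 - W_0)}{(\xi_0 - \hat{\xi}_0)^{*}\, \Pi_0^{-1}\, (\xi_0 - \hat{\xi}_0) + (\hat{W}_0 - W_0)^{*} \left[ \displaystyle\sum_{k=0}^{M} K_V^{*}(k) K_V(k) \right] (\hat{W}_0 - W_0)} \end{aligned} \tag{2.41}$$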
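The construction in Eqs. (2.29)-(2.40) can be checked numerically for a time-invariant, single-input single-output secondary path. The Python sketch below, assuming NumPy and real-valued signals, builds $\Delta_M$, forms $K_V(k)$ and the disturbance $V_m(k) = K_V(k)(\hat{W}_0 - W_0)$, and confirms that every innovation $m(k) - H_k \hat{\xi}_k$ vanishes. The secondary-path state update $\theta(k+1) = A_s \theta(k) + B_s h^{*}(k) W$ used in the simulation is an assumption inferred from the terms of Eqs. (2.35) and (2.36); the function names and the random test data are likewise hypothetical.

```python
import numpy as np

def build_delta(A, B, C, D, M):
    """Lower-triangular Delta_M of Eq. (2.40) for a time-invariant path."""
    Delta = np.zeros((M + 1, M + 1))
    for i in range(M + 1):
        Delta[i, i] = D
        A_pow = np.eye(A.shape[0])          # holds A^(i-j-1), starting at j = i-1
        for j in range(i - 1, -1, -1):
            Delta[i, j] = C @ A_pow @ B
            A_pow = A_pow @ A
    return Delta

def innovations(A, B, C, D, h, W0, W0_hat, theta0):
    """Innovations m(k) - H_k xi_hat_k under the constructed disturbance."""
    M = h.shape[0] - 1
    KV = build_delta(A, B, C, D, M) @ h     # row k is K_V(k); rows of h are h*(k)
    Vm = KV @ (W0_hat - W0)                 # Eqs. (2.31), (2.35), (2.36)
    theta, theta_hat = theta0.copy(), theta0.copy()
    out = np.zeros(M + 1)
    for k in range(M + 1):
        m_k = D * (h[k] @ W0) + C @ theta + Vm[k]            # measurement
        out[k] = m_k - (D * (h[k] @ W0_hat) + C @ theta_hat)
        # Assumed secondary-path update; the estimate runs open loop because
        # a zero innovation leaves the weight estimate at W0_hat.
        theta = A @ theta + B * (h[k] @ W0)
        theta_hat = A @ theta_hat + B * (h[k] @ W0_hat)
    return KV, Vm, out

# Hypothetical random test data: 3 path states, 4 filter weights, M = 6.
rng = np.random.default_rng(2)
A = 0.5 * rng.standard_normal((3, 3))
B, C, D = rng.standard_normal(3), rng.standard_normal(3), 0.7
h = rng.standard_normal((7, 4))
W0, W0_hat = rng.standard_normal(4), rng.standard_normal(4)
KV, Vm, innov = innovations(A, B, C, D, h, W0, W0_hat, rng.standard_normal(3))
```

In this example all innovations are zero to rounding error, so the estimator never updates its weight estimate and the filtered error equals $V_m(k)$ at every step, as in Eq. (2.39).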

From Eq. (2.40), note that

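$$\sum_{k=0}^{M} K_V^{*}(k) K_V(k) = \Lambda_M^{*} \Delta_M^{*} \Delta_M \Lambda_M = \| \Delta_M \Lambda_M \|_2^2 \tag{2.42}$$

and hence the ratio in Eq. (2.41) can be made arbitrarily close to one if

$$\lim_{M \to \infty} \| \Delta_M \Lambda_M \|_2 \to \infty \tag{2.43}$$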

Eq. (2.43) will be referred to as the condition for optimality of γ = 1 for the

filtering solution.
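The effect of condition (2.43) on the ratio in Eq. (2.41) can be illustrated with a short Python sketch, assuming NumPy. For a fixed initial weight error, the ratio has the form E / (c + E), where E is the disturbance energy and c is the weighted initial-condition error, so it approaches one as E grows with the horizon. The function name `ratio_2_41`, the scalar case $K_V(k) = 1$, and the value c = 1 are hypothetical choices made only for illustration.

```python
import numpy as np

def ratio_2_41(KV, w_err, init_cost):
    """Ratio in Eq. (2.41) for a given weight error.

    KV        : matrix whose row k is K_V(k), k = 0..M
    w_err     : the vector W_hat_0 - W_0
    init_cost : (xi_0 - xi_hat_0)^* Pi_0^{-1} (xi_0 - xi_hat_0)
    """
    energy = np.linalg.norm(KV @ w_err) ** 2    # sum_k |K_V(k) w_err|^2
    return energy / (init_cost + energy)

# Hypothetical scalar case: K_V(k) = 1 for all k, so the energy equals M + 1.
w_err = np.array([1.0])
ratios = [ratio_2_41(np.ones((M + 1, 1)), w_err, init_cost=1.0)
          for M in (9, 99, 999)]
```

Here the ratios are 10/11, 100/101, and 1000/1001: they increase toward one without reaching it, which is why γ = 1 is approached only in the limit of Eq. (2.43).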

Equation (2.43) can now be used to derive necessary and sufficient conditions for

optimality of γ = 1. First, note that a necessary condition for Eq. (2.43) is

$$\lim_{M \to \infty} \| \Lambda_M \|_2 \to \infty \tag{2.44}$$

or equivalently

$$\lim_{M \to \infty} \sum_{k=0}^{M} h^{*}(k) h(k) \to \infty \tag{2.45}$$

The h(k) that satisfies the condition in (2.45) is referred to as exciting [26]. Several

sufficient conditions can now be developed. Since

$$\| \Delta_M \Lambda_M \|_2 \ge \sigma_{\min}(\Delta_M)\, \| \Lambda_M \|_2 \tag{2.46}$$


one sufficient condition is that

$$\sigma_{\min}(\Delta_M) > \epsilon, \quad \forall\, M, \text{ and } \epsilon > 0 \tag{2.47}$$
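For a time-invariant secondary path, $\Delta_M$ in Eq. (2.40) is a lower-triangular Toeplitz matrix built from the impulse response, so the bound in Eq. (2.47) can be probed numerically. The Python sketch below, assuming NumPy, compares two hypothetical FIR paths chosen only for illustration: $1 - 0.5 z^{-1}$, whose zero lies inside the unit circle, and $1 - z^{-1}$, whose zero lies on the unit circle. The function names and horizons are assumptions.

```python
import numpy as np

def toeplitz_delta(impulse, M):
    """Delta_M for an LTI path with the given (finite) impulse response."""
    Delta = np.zeros((M + 1, M + 1))
    for lag, g in enumerate(impulse):
        # Impulse-response sample g sits on the lag-th subdiagonal.
        Delta += g * np.eye(M + 1, k=-lag)
    return Delta

def sigma_min(Delta):
    """Smallest singular value of Delta."""
    return np.linalg.svd(Delta, compute_uv=False).min()

horizons = (10, 50, 200)
# Hypothetical path 1 - 0.5 z^{-1}: zero at z = 0.5.
zero_inside = [sigma_min(toeplitz_delta([1.0, -0.5], M)) for M in horizons]
# Hypothetical path 1 - z^{-1}: zero at z = 1.
zero_on_circle = [sigma_min(toeplitz_delta([1.0, -1.0], M)) for M in horizons]
```

For the first path the smallest singular value stays at or above 0.5 for every horizon, so Eq. (2.47) holds with any ε below 0.5. For the second path it keeps shrinking as M grows, so no fixed ε > 0 satisfies Eq. (2.47).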

Note that for LTI systems, the sufficient condition (2.47) is equivalent to the requirement that the system have no zeros on the unit circle. Another sufficient condition

is that h(k)’s be persistently exciting, that is

#

"

M

1 X

lim σmin