

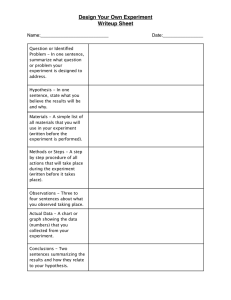

The empirical investigation of mental representations and their composition

Joe Thompson∗
Simon Fraser University
May 5, 2010

∗ This work owes a great deal to the suggestions of Ray Jennings and Kate Slaney. Thanks also go to Trey Boone for suggesting that the evidence presented here might bear directly on the LOT hypothesis.

Contents

1 The Language of Thought Hypothesis
1.1 Mental Representations
1.2 Composition and LOT
2 LOT and Language Science
2.1 LOT and Language Change
2.2 Productivity and Systematicity of Language
2.3 Recursion and Hierarchical Complexity
3 Testing the LOT hypothesis
3.1 The problem with Recursive, Centre-Embedded, Elements
3.2 The Strength of the Case Against LOT
3.3 Construct-validity Theory

1 The Language of Thought Hypothesis

1.1 Mental Representations

The Language of Thought hypothesis (or LOT hypothesis) seems implicit in a number of theories of neural architecture. The hypothesis is that structured entities in the brain represent the world. The syntactic composition of these mental representations determines their expressive and causal powers. What a mental representation represents is determined by (a) its syntactic composition and (b) the representational contents of its atomic parts[1] (Fodor, 2008). A representation’s causal powers are exhausted by the capacity for mutually supporting computational systems to track syntactic structure (computational systems do not track semantic content directly).

It will be difficult to construct a research project with the specific goal of evaluating the LOT hypothesis. This is not to say that claims about compositional mental representations are inherently untestable, or in danger of being unfalsifiable.
But the belief in compositional mental representations is so central to cognitive science that it seems, for all practical purposes, insulated from revision. Throwing out the language of thought, at least for those already working under its purview, would be tantamount to a paradigm shift. Denying that there are any mental representations at all would be even more traumatic.

The relative insulation of the LOT hypothesis might explain why it tends to be the subject of a priori evaluation. Fodor (1981), for example, argued that compositional mental representations are the ally of belief-desire psychology, because they provide an intuitive explanation for how the content of a belief could cause behaviour. Objections to the coherence of mental representations, along the lines of private language arguments (Wittgenstein, 1958), are similarly a priori. While we need not object to a priori argumentation in science, we should be realistic about the prospects for winning over our theoretical competitors.

[1] The atomic components of a language of thought are the components containing no further parts.

It is not contentious that the ultimate success or failure of the LOT hypothesis will lie in its capacity to provide explanations, predictions, and direction to research. What is needed, however, is a non-tendentious method for evaluating how the LOT hypothesis fares on these criteria. The best way to evaluate the LOT hypothesis, it seems, would be to formulate research projects whose consequences bear directly on its plausibility. The difficulty is that such research projects seem hard to design. I will argue that such research projects do exist, and it may be that they can be used to reject the LOT hypothesis, or at least revise our views on the composition of mental representations.

It should be emphasized that if conceptual attacks on the LOT hypothesis succeed, then the LOT hypothesis must be incoherent, and my work here will be largely irrelevant.
I will assume, however, that LOT theorists will be unconvinced by such arguments. I only wish to argue, from within LOT’s philosophical framework, that basal ganglia research poses empirical problems for the LOT hypothesis. Even if I am wrong, it may still turn out that the LOT hypothesis is incoherent.

1.2 Composition and LOT

Compositional mental representations allow for an intuitive kind of belief-desire psychology which I will call the computational-representational theory of mind (CRTM). The view accepts that propositional attitudes (beliefs that P, desires that P, and so on[2] . . . ) are to be understood as relations between organisms and mental representations (Fodor, 2008; Rey, 1997). CRTM, however, takes this relationship to be spelled out in computational terms.[3] An agent will have a belief that P iff they have a mental representation of P and this representation plays the right role in a computational system.

[2] P is, of course, a poor choice for representing propositional attitude contents, as it is used in propositional logic to represent an atom. LOT theorists will expect propositional attitude contents to have compositional structure.

[3] To say that a brain is a computational system is to say that we can describe the brain in terms of physical machine states (in an appropriate Turing machine), and then map these machine states to sentences in a computing language. For a fuller discussion of the notion of computation see Fodor (1975) and Rey (1997).

This is supposed to provide an intuitive account of the causal efficacy of propositional attitudes. How my belief that P will influence my behaviour is to be determined by (a) the fact that I believe that P rather than, say, desire that P and (b) the fact that I believe that P rather than that Q.
Manipulating the computational role of a representation (insofar as this is possible), and consequently manipulating an individual’s attitude toward a proposition, will have different behavioural consequences from those that involve manipulating the mental representation itself. This respects the intuition that a desire that P should have different behavioural consequences from those of a belief that Q. Compositional mental representations assist in explaining how manipulating the mental representation could influence behaviour because, as the CRTM theorist argues, the mind’s computational architecture is sensitive to the syntactic structure of its mental representations.

This notion of composition is central to the LOT hypothesis. The syntactic composition of a formal language is defined without appeal to semantics. Recursive rules generate a set of well-formed sentences, and (because the class of atoms is well defined) their compositional structure can be defined with a notion of uniform substitution. Semantic composition is defined along these recursive rules, ensuring that the semantic values of complex sentences are a function of the semantic values of the component parts. Given independent definitions of a ‘proof’ and ‘inference’ we can seek to demonstrate the soundness and completeness of the proof theory with respect to the independent semantic theory of inference, and show that for every proof there is a corresponding valid inference, and vice versa. As a consequence, any machine that reliably generates proofs will also generate valid inferences, even though it has no direct access to our semantic interpretation of the language.

Mental representations are supposed to represent the world, but possess causal powers in virtue of their syntactic composition. This is ontologically respectable because the sentences of Mentalese are just physical structures, and their syntactic operations just physical events.
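The point about formal languages made above, that a device sensitive only to syntactic form can nonetheless respect semantic relations, can be illustrated with a minimal sketch. It assumes a toy propositional language (atoms, negation, conjunction) that is not taken from the source; the names `Atom`, `Not`, `And`, `evaluate`, `and_elim`, and `is_valid_step` are hypothetical and chosen only for illustration. The rule `and_elim` inspects nothing but the shape of a formula, while `evaluate` assigns truth values compositionally along the same recursive structure. A brute-force check over valuations then confirms that each syntactic step is also a valid inference.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, List, Set, Union


# Syntax: a recursive definition of well-formed formulas (illustrative only).
@dataclass(frozen=True)
class Atom:
    name: str


@dataclass(frozen=True)
class Not:
    body: "Formula"


@dataclass(frozen=True)
class And:
    left: "Formula"
    right: "Formula"


Formula = Union[Atom, Not, And]


def atoms(f: Formula) -> Set[str]:
    """Collect the names of the atomic parts of a formula."""
    if isinstance(f, Atom):
        return {f.name}
    if isinstance(f, Not):
        return atoms(f.body)
    return atoms(f.left) | atoms(f.right)


def evaluate(f: Formula, valuation: Dict[str, bool]) -> bool:
    """Semantics: the value of a complex formula is a function of its parts."""
    if isinstance(f, Atom):
        return valuation[f.name]
    if isinstance(f, Not):
        return not evaluate(f.body, valuation)
    return evaluate(f.left, valuation) and evaluate(f.right, valuation)


def and_elim(f: Formula) -> List[Formula]:
    """Syntactic rule: from And(A, B) derive A and B. Looks only at form."""
    if isinstance(f, And):
        return [f.left, f.right]
    return []


def is_valid_step(premise: Formula, conclusion: Formula) -> bool:
    """Semantic check: no valuation makes the premise true and conclusion false."""
    names = sorted(atoms(premise) | atoms(conclusion))
    for values in product([False, True], repeat=len(names)):
        valuation = dict(zip(names, values))
        if evaluate(premise, valuation) and not evaluate(conclusion, valuation):
            return False
    return True


# A conjunctive representation and what the syntactic rule derives from it.
thought = And(Atom("p"), Atom("q"))
derived = and_elim(thought)
all_valid = all(is_valid_step(thought, c) for c in derived)
```

Nothing here is meant as a model of Mentalese. The sketch only shows, for one rule in one small language, how a purely syntactic transformation and semantic validity can line up.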
Of course, the syntax and the semantics of Mentalese must be suitably related if this account is going to work. Ideally, semantic composition would be definable along the same rules that capture the syntactic composition. This would allow physical systems to track semantic content in the same way that a proof checker can track inference, at least in those cases where organisms are supposed to track content.[4] Consider, for instance, the causal/inferential powers that Fodor (2008) bestows upon the content of the belief “granny left and auntie stayed.”

That the logical syntax of the thought is conjunctive (partially) determines, on the one hand, its truth-conditions and its behavior in inference and, on the other hand, its causal/computational role in mental processes. I think that this bringing of logic and logical syntax together with a theory of mental processes is the foundation of our cognitive science [. . . ] (Fodor, 2008, p. 21).

The principal distinction between a theory positing mental representations and the Language of Thought hypothesis, then, lies in the notion of composition.[5] The idea is that there could be mental representations that are not compositional. The content of these representations would be determined by the contents of their atoms alone, and not their structural features. A radical associationist, for example, might explain behaviour by appealing only to associative pairings between structurally atomic ideas (for a discussion, see Rey, 1997). Such a view could allow for mental representations without taking up the LOT hypothesis.

[4] There are a number of cases where systems are not supposed to be able to track what it is that they represent. Imagine a possible world identical to ours, except that on twin-earth there is no H2O, only the compound xyz. H2O and xyz have all the same surface properties, but a different underlying physical structure. Suppose that twin-earth-English contains the linguistic construction water, and (intuitively) water_{Twin-earth} means xyz. By our previous supposition, neither human beings, nor any neural system, is capable of distinguishing H2O from xyz. The twin-earth Mentalese expression representing xyz could be syntactically identical to the earth representation of H2O. A computational system will not be sensitive to whether it represents H2O or xyz.

[5] It should be noted that, on the present understanding of the LOT hypothesis, sentences in Mentalese need not be the contents of thought. Thoughts, beliefs, desires, and other psychological states, according to the computational theory of mind, imply certain computational relationships between an organism and a representation. A Mentalese sentence, on its own, is merely a compositionally structured mental representation.

I must also emphasize that there are many ways in which representations could be composed. Consider, for example, a Language of Thought in which atomic representations of perceived objects are “tagged” with location and date representations. Sentences of the language are thus of the form Object_{location,date} and include sentences such as “bear_{backyard,6AM}.” The composition of this language is distinct from one which takes mental representations of propositions to be composed of a noun phrase and a verb phrase, as in (the girl)_NP (kicked the dog)_VP. I take it that mental representations of either sort of composition are sufficient for a LOT, even though the former language is presumably too simple to be very helpful. In section 2 I will argue that the LOT theorist, in order to account for linguistic behaviour, will be under empirical pressure to represent natural language and a LOT as having comparable structure.

2 LOT and Language Science

2.1 LOT and Language Change

Empirical arguments for the existence of mental representations do exist.
These arguments tend to proceed by seeking out uncontestable observations that can only be explained by the positing of mental representations. Gallistel (2000, 1990), for instance, argues that the intricacies of conditioned learning require positing mental representations. Yet theories of conditioning have been hailed as the paradigmatic cases where non-representational explanation is to be had. If anti-representationalism cannot even account for conditioning, then it seems we will be forced to buy into a paradigm of mental representations (Carruthers, 2006).[6] Unfortunately, this work is outside our present purview and must be considered in more detail elsewhere.

It should not be surprising, however, that research into language is also relevant to the Language of Thought hypothesis. In fact, the two share many foundational assumptions. Suppose, following Saussure, we believe that studying language in a disciplined manner requires ignoring much of language change.

An absolute state is defined by the absence of changes, and since language changes somewhat in spite of everything, studying a language-state means in practice disregarding changes of little importance (de Saussure, 1959, p. 102).

Natural languages, at least in the conversational sense of English, French and so on, change all the time, perhaps with every linguistic intervention. But the Language of Thought cannot change so haphazardly. Changes to the syntactic structure of Mentalese, as a whole, would probably be catastrophic, unless these changes could first be accommodated by a brain’s neural systems.
The claim that a Language of Thought could undergo significant change without mutually supporting changes to the relevant computational mechanisms (and without resulting in an inviable organism) is either incomprehensible or, at the very least, grossly implausible.[7] Such a change might be analogous to using Morse code to communicate over a channel, only to have one party spontaneously change the language without notice.

[6] Studying the composition of mental representations will be similarly indirect. Theorists may look to various domains (such as vision) and ask what kind of composition is required of the mental representations there.

[7] One might wonder whether the composition of Mentalese might change during an individual’s development (perhaps during language acquisition). One problem with allowing individuals to possess different languages of thought is that if two individuals had distinct mental languages, then it might be impossible for their beliefs to share syntactically identical contents. At best, two structurally distinct sentences (of different languages) could possess the same representational content.

The Language of Thought hypothesis, therefore, has a friend in Saussure, who does not view language as the kind of thing that changes. Of course, speech, or parole, changes frequently. But the good linguist is supposed to distinguish speech and language, and much of the variation in speech, on this view, is disregarded as incidental variation in how people produce language.

Taken as a whole, speech is many-sided and heterogeneous; [...] we cannot put it into any category of human facts, for we cannot discover its unity. Language, on the contrary, is a self-contained whole and a principle of classification. As soon as we give language first place among the facts of speech, we introduce a natural order into a mass that lends itself to no other classification (de Saussure, 1959, p. 9).
To understand linguistic behaviour, the linguist must study language, which is a synchronic, unchanging entity (de Saussure, 1959, p. 102).[8] Change, on this view, is only an incidental feature of natural languages. There is nothing problematic about an unchanging language, such as the LOT. It follows that if we dropped the Saussurean philosophy of language, and instead viewed a language as a complex system of practices, then LOT might lose its status as a language. While the LOT hypothesis takes up this foundational assumption about language, I will argue that the LOT theorist will also be inclined to take up certain assumptions about Mentalese composition in order to explain linguistic behaviour. These assumptions, I shall argue, can be studied with respectable research projects.

[8] Theorists recognize, of course, that English changes. Chomsky (1964), for example, introduces a notion of rule-changing novelty, where the grammar of a natural language actually changes. But synchronic (formal) representations of natural language must treat such changes as, in effect, entirely new languages.

2.2 Productivity and Systematicity of Language

Even when we abstract away from language change we still find problematic variation in the linguistic data. Individuals seem to be able to produce and apprehend a massive variety of sentences (we are often asked to consider, for example, the odds that a complex sentence will be repeated in a book[9]). Here linguists have emphatically appealed to the notion of composition.

The central fact to which any significant linguistic theory must address itself is this: a mature speaker can produce a new sentence of his language on the appropriate occasion, and other speakers can understand it immediately, though it is equally new to them. [...] On the basis of limited experience with the data of speech, each normal human has developed for himself a thorough competence in his native language. This competence can be represented, to an as yet undetermined extent, as a system of rules that we can call the grammar of his language. To each phonetically possible utterance [...], the grammar assigns a certain structural description that specifies the linguistic elements of which it is constituted and their structural relations [...] (Chomsky, 1964, pp. 50-51).

The set of well-formed sentences in a natural language is to be captured recursively by reference to a well-defined set of atoms and syntactic composition. This view allows languages to contain a staggering (and potentially infinite) number of never-before-uttered sentences. I will follow Fodor (2008) in calling this the productivity of language.[10] The capacity to produce and comprehend such a broad class of sentences, on this view, is a relatively simple matter of coming to grips with the facts of composition.[11]

[9] Chomsky and Miller (1963)

[10] It should be noted that the notion of composition does more than simply account for the capacity to comprehend a potentially infinite class of sentences. Composition also purchases linguistic systematicity. “[The] ability to produce/understand some of the sentences is intrinsically connected to the ability to produce/understand many of the others” (Fodor, 1987, p. 149). If one can understand the sentence “dogs are cuter than cats”, then one should be able to understand “cats are cuter than dogs”. Regardless of the number of sentences in English, then, composition remains important because “the very same combinatorial mechanisms that determine the meaning of any of the sentences determine the meaning of all the rest” (Fodor, 1987, p. 150).

If language is best understood as a synchronic entity and linguistic behaviour is best accounted for by compositionality, then this would be a great boon to the Language of Thought hypothesis. This is because the LOT hypothesis runs into the same problem of productivity that is present for natural languages.
If there are a staggering number of permissible English sentences then there will need to be at least as many permissible mental representations. This is because a person’s sensitivity to any phonological, morphological, syntactic, or even semantic feature of an utterance must ultimately be explained, on a LOT architecture, by both the composition of mental representations and the computational systems that are sensitive to them (Fodor, 2008). The result is that many of the explanatory jobs traditionally associated with syntactic and semantic theory must be accomplished by the syntax of mental representations (and their supporting computational systems). This includes issues of syntactic and semantic ambiguity. Each reading for a syntactically ambiguous sentence, for instance, needs to correspond to a uniquely structured mental representation (Fodor, 2008, p. 58).[12]

[11] Of course, for some theorists coming to grips with compositionality will require some degree of innate knowledge or innate structure.

[12] If each reading of an ambiguous sentence did not correspond to a unique representation, then computational systems could not distinguish readings that some people plainly do. Mentalese must be powerful enough, then, to allow me to disambiguate “no trees have fallen over here”: (no trees have fallen) (over here) versus (no trees have fallen over) (here) (Mary Shaw’s example). Each reading of this sentence must correspond to its own mental representation (Fodor, 2008). This is because (once again), computational systems need to distinguish these readings if they are to explain how we treat them differently. What might be surprising is that many of the semantic distinctions present in English must be accounted for by syntactic distinctions in Mentalese. A classic requirement for a good semantic theory, for instance, is the ability to sort out semantic ambiguities (Katz and Fodor, 1964). In the Language of Thought there can be no structural ambiguity between bank qua financial institution and bank qua geographical feature. Each reading of the English sentence “I walked to the bank” must correspond to a unique mental representation (Fodor, 2008).

This leaves the theorist who works on the syntax and semantics of English in a rather awkward position. A natural language is ultimately a feature of a population. It is therefore conceptually possible for us to describe a population’s linguistic behaviour in a theoretical idiom that is independent from our best theories of neural processing. English, for example, could be a feature that is weakly emergent (Bedau, 1997) on the properties of individual brains.[13] But while it is conceptually possible to study the syntax and semantics of English without concern for neural processing, this will be a difficult line for LOT theorists to take in practice. It is the burden of the LOT theorist to posit computations defined over structured mental representations in such a way that, sooner or later, all aspects of linguistic behaviour, including productivity and ambiguity, are accounted for. Our best grammatical theories of natural languages are therefore crucial to the LOT theorist because they clarify what abilities must be accounted for.

[13] I am not concerned with the various notions of emergence that exist in philosophical literature. I am not even committed to the usefulness of Bedau’s (1997) explication of weak emergence. What is crucial to the notion, however, is that weakly emergent properties bear a kind of independence from the “lower level” properties. At the very least, this independence is epistemic. It is perfectly respectable for a science to study weakly emergent properties in a theoretical language of its choosing. It does not first have to provide definitions of its theoretical terms in the theoretical language of “lower level” sciences. In this sense, a majority of psychologists seem comfortable with the idea that the property of mind is weakly emergent. In fact, I am happy to view even weather patterns as emergent properties.

2.3 Recursion and Hierarchical Complexity

While our theory of English grammar is clearly relevant to the LOT theorist, it will still be difficult to formulate a research project that bears on the LOT hypothesis. I need to be more specific about how LOT theorists plan to explain linguistic behaviour. I believe that the LOT theorist will be very sympathetic to claims about the recursive structure of mental representations. My worry, however, is that such claims are problematic on empirical grounds. Another brief detour is therefore worthwhile.

One might try to defend claims about the structural complexity of a natural language by arguing that adequate formal representation of a language requires a grammar of certain complexity. Chomsky and Miller (1963) argue, for instance, that natural language exhibits hierarchical complexity, which is supposed to be apparent in relative clauses where the subject is separated from the verb phrase by a centre-embedded clause. For example,

The following conversation, which took place between the two friends in the pump-room one morning, after an acquaintance of eight or nine days, is given as a specimen of their very warm attachment [. . . ] (emphasis added, Austen, 1870).

Representation of this class of sentences supposedly requires a formal grammar of a certain complexity (Chomsky and Miller, 1963). One can see how the long-distance dependencies apparent in such clauses pose a sequencing problem for organisms (Hauser, Chomsky, and Fitch, 2002). At the very least, understanding the sentence seems to require sensitivity to the relationship between the main verb and the subject, despite the distance between them. Phrase-structure grammars have traditionally been used to provide structural descriptions for these sentences.
Consider a simpler example:[14]

[Figure 1: A recursively centre-embedded sentence. Phrase-structure tree for “the boy who lifted the ball kicked the dog”, with node labels S, NP, VP, T, N and an embedded S.]

[14] The triangles stand in for a collection of lower branches.

While the LOT theorist will happily accept that English sentences contain noun phrases and verb phrases, it is not obvious that this phrase-structure tree could be read as describing the structure of a mental representation. It is unclear, for instance, whether a formal representation of the syntax of Mentalese would include noun phrases and verb phrases as grammatical categories.[15] Fortunately, this stronger claim about the syntax of representations is not important for my argument. Here I am only interested in whether mental representations contain recursive elements.

In phrase-structure grammars a grammatical category (or non-terminal symbol such as NP, N, VP, etc.) is a recursive element iff the symbol is dominated by another instance of the category further up the tree. A recursive element is also centre-embedded if it occurs in neither the left-most branch, nor the right-most branch, of the tree (for a full definition, see Chomsky and Miller, 1963). The embedded symbol S (which stands for “sentence”) in figure 1 is a recursive, centre-embedded element. In what follows I will argue that empirical evidence will press the LOT theorist to accept

1. Some Mentalese sentences contain centre-embedded recursive elements, and perhaps other Mentalese sentences, as constituent parts.

[15] It is important to distinguish the claim that some part of a mental representation represents a noun phrase from the claim that noun phrases are part of the structure of mental representations. The latter is a claim about the syntax of LOT, while the former is a claim about semantics.

1 should seem plausible to the LOT theorist.
This is because long-distance dependencies, so the story goes, present an insurmountable problem for radical associationism, one of the LOT theorist’s most vigorous competitors. Radical associationism is the view that linguistic behaviour can be explained with respect to association-relations between ideas, stimuli, or responses without positing compositional mental representations. The problem with such views, according to Fodor (2008), is that they tend to assume that the associations are formed on the basis of temporal contiguity.

[. . . ] Hume held that ideas became associated as a function of the temporal contiguity of their tokenings. [. . . ] Likewise, according to Skinnerian theory, responses become conditioned to stimuli as a function of their temporal contiguity to reinforcers. By contrast, Chomsky argued that the mind is sensitive to relations among interdependent elements of mental or linguistic representations that may be arbitrarily far apart (Fodor, 2008, p. 103).

The worry, then, was that the radical associationist could not account for why humans are so good at producing and understanding sentences that exhibit long-distance dependencies (especially if the relationships are very long[16]). I will not discuss this objection in any detail here (for a discussion, see Fodor, 2008). What is important is that these supposed difficulties with radical associationism are often hailed as a chief advantage of the LOT hypothesis.

On the one hand, there is no reason why computational relations need to be contiguity-sensitive, so if mental processes are computational they can be sensitive to ‘long-distance’ dependencies between the mental (/linguistic) objects over which they are defined. On the other hand, we know that computations can be implemented by causal mechanisms; that’s what computers do for a living. [. . . ] In short, a serious cognitive science requires a theory of mental processes that is compatible with the productivity of mental representations, and [the computational theory of mind] obliges by providing such a theory (Fodor, 2008, p. 105).

[16] It is important to note here that Fodor (2008) may not have the relatively short ‘long distance’ dependencies of Jane Austen in mind. The productivity of language extends into the domain of long-distance dependencies, and it sometimes seems as if English grammar allows for an arbitrary number of centre-embeddings.
The boy, who lifted the ball, kicked the dog.
The boy, who lifted the ball, which was lighter than 1 kilogram, kicked the dog.
The boy, who lifted the ball, which was lighter than 1 kilogram, which is lighter than 2 kilograms, kicked the dog.
and so on . . .
Our linguistic intuitions are supposed to suggest that the sentences picked out here are all grammatical, along with sentences containing any finite number of embeddings.

Accounting for centre-embedded relative clauses is therefore crucial for the LOT theorist, who wishes to gain ground on the radical associationist (and Skinner). The LOT theorist will do this by explaining the comprehension of long-distance dependencies in English by positing recursive centre-embedded elements in Mentalese sentences. If they do not take up this claim, then it is unclear how the relevant computations can be sensitive to long-distance dependencies. Theorists will, no doubt, be concerned about allowing English sentences to retain a structural complexity over and above that of Mentalese sentences.[17] I worry that if the LOT theorist posits recursive elements in Mentalese, then she will also adopt another claim.

2. Production and comprehension of centre-embedded relative clauses is made possible by computational operations that exploit this syntactic feature of mental representations.
2 seems to be a natural consequence of 1 for the LOT theorist, because comprehending complex sentences will be a matter of composing complex Mentalese expressions to represent the relevant propositions. But any psychological process for the LOT theorist, including understanding, will be a matter of computational transformations of mental representations. 17 For a fuller discussion of the importance of centre-embedded recursive elements to sequence complexity, see Chomsky and Miller (1963). 16 If you think that a mental process - extensionally, as it were - as a sequence of mental states each specified with reference to its intentional content, then mental representations provide a mechanism for the construction of these sequences; and they allow you to get, in a mechanical way, from one such state to the next by performing operations on the representations (Fodor, 1987, p. 145). Our theory of the composition of mental representations, then, constrains our theory of what kinds of computations can be performed on those mental representations. Any posited computational features had better be relevant to computation or there will be no reason for positing the structural feature in the first place. I will argue that viewing the comprehension of a relative clause as the construction of a complex mental representation (with centreembedded recursive elements) places problematic constraints on our theory of sentence processing. 3 3.1 Testing the LOT hypothesis The problem with Recursive, Centre-Embedded, Elements Some theorists have argued that the brain does not employ recursion in order to deal with center-embedded relative clauses. It is an open question as to whether Chomsky’s theories have any biologic validity. Can we really be sure that the relative, prepositional, and adverbial clauses that occur in complex sentences are derived from embedded sentences? [. . .] 
In short, recursion as defined by Hauser, Chomsky, and Fitch (2002) follows from hypothetical syntactic structures, hypothetical insertions of sentences and phrase nodes, and hypothetical theory-specific rewriting operations on these insertions to form the sentences we actually hear or read (Lieberman, 2006, pp. 359–360).

It may not be obvious that Lieberman’s issue here is with a theory of neural processing (although one guesses this is what he means by “biologic validity”). Lieberman’s argument is actually based on a theory of basal ganglia operations or, more specifically, a theory about the operations of segregated circuits that run from various areas of the cortex to segregated regions of the basal ganglia and back. I will postpone evaluating the evidential support for these claims until the discussion of how the LOT theorist should respond to this argument. The argument begins from either of the following premises.

I Segregated circuits seem to perform similar computations (see, for example, Alexander and Crutcher, 1990; Delong, 1990) and these segregated circuits perform what can be considered motor, cognitive, and linguistic sequencing18 (Lieberman, 2006). One such circuit is involved in the comprehension of centre-embedded relative clauses.

II A single circuit may perform cognitive and linguistic sequencing (see, for example, Lieberman, 2006; Hochstadt et al., 2006). This circuit is involved in the comprehension of centre-embedded relative clauses.

The basic problem posed by I is that motor control sequences are often thought to be less complex than linguistic ones. Devlin (2006) assumes, for instance, that motor sequences lack the hierarchical complexity of natural language. But if motor sequences were less complex than linguistic sequences, one would predict that the neural systems underlying the two kinds of behavioural sequence would employ different kinds of computations, a proposition that is inconsistent with I.
Comprehension of sentences with centre-embedded recursive elements, for the LOT theorist, should require a specific kind of recursion. “[. . . The] recursions [. . . relevant to language] are defined over the constituent structure, [and more specifically the hierarchical constituent structure] of mental representations” (Fodor, 2008, p. 105). If motor control sequences are less complex, then the computations allowing for complex motor control should not need to exploit centre-embedded recursive elements in motor representations. One way to avoid this problem is to accept, as Lieberman (2006) does, that motor sequences are hierarchically complex. But even this does not completely dissolve the difficulty. Eventually, the LOT theorist will have to propose similar computational processes for the two circuits. These computations will have to be defined over centre-embedded recursive elements and usefully account for both linguistic and motor sequencing.

18 For a discussion of what is meant by sequencing, see 3.2.

II is problematic for similar reasons. If II is true, the LOT theorist will have to explain how the same computational system sequences cognitive and linguistic acts. The circuit in question is presumed to be important for the correct apprehension of certain centre-embedded clauses. For example, patients with Parkinson’s disease have difficulties comprehending a certain class of centre-embedded sentences from structural information alone (Hochstadt et al., 2006). Furthermore, the circuit also seems to be important for cognitive set-shifting, a supposed component of executive function (Ebadi and Pfeiffer, 2005). If it turned out that a single system underlay the sequencing of both cognitive and linguistic acts, then the LOT theorist would be burdened with providing a unified account of the circuit’s computations. If the LOT theorist accepts 1, then these computations had better be defined over the hierarchical constituent structure of these representations.
LOT theorists must avoid, for instance, modeling the brain as performing cognitive shifts at relative clause boundaries (Hochstadt et al., 2006) unless these shifting operations are suitably defined over hierarchical representations. I propose, then, that I and II are in tension with the claim that (1) the LOT permits recursive, centre-embedded, elements and the claim that (2) the comprehension of relative clauses is made possible by the recursive elements in LOT.

3.2 The Strength of the Case Against LOT

I am not claiming that the current evidence is sufficient to overthrow the LOT hypothesis. There are, of course, plenty of presuppositions in Lieberman’s argument that a theorist can object to. Note, however, that my case is made if I and II are respectable hypotheses that are in tension with the LOT hypothesis. I have only the burden of showing that I and II can be evaluated by serious research projects.

It is an old hypothesis that segregated cortical-subcortical-cortical circuits may employ similar functions. Rather than viewing the basal ganglia as a single functional system taking projections from various cortical locations, evidence suggests that the basal ganglia are part of a number of largely (anatomically) segregated circuits (Alexander et al., 1986). The dorsolateral prefrontal cortex, for instance, seems to belong to a cortical-subcortical circuit that is segregated from the motor circuit. Both circuits are composed of relatively segregated neural populations in the striatum, internal globus pallidus, and thalamus (Alexander et al., 1986). It has been suggested that this anatomical division might also be a functional division (Alexander et al., 1986; Alexander and Crutcher, 1990). However, this functional division is to be made on the basis of those circuits’ respective cortical areas. The circuits, themselves, seem to perform fundamentally similar operations.
Because of the parallel nature of the basal ganglia-thalamocortical circuits and the apparent uniformity of synaptic organization at corresponding levels of these functionally segregated pathways [. . . ] it would seem likely that similar neuronal operations are performed at comparable stages of each of the five proposed circuits (Alexander et al., 1986, p. 361).

This is a serious architectural hypothesis about cortical-subcortical-cortical circuits. What is still needed is a method for empirically testing I and II. We must empirically ascertain whether these circuits have anything to do with language, motor control, or cognition. Two sources of evidence for this come from pathology and imaging data.

i Dysfunction in the circuits (as seen in Parkinson’s disease and hypoxia) is associated with a wide array of cognitive, linguistic, and motor difficulties (including a difficulty with centre-embedded clauses) (see, for example, Lieberman et al., 1995, 2005; Lieberman, 2006; Hochstadt et al., 2006; Feldman et al., 1990; Lieberman et al., 1992). These include difficulty in comprehending relative clauses from structural information alone (Hochstadt et al., 2006).

ii The circuits are implicated in the performance of similar cognitive (for example, Monchi and Petrides, 2001; Monchi et al., 2004) and motor (for a review, see Ebadi and Pfeiffer, 2005; Doyon et al., 2009) sequencing acts in healthy individuals.19

I will discuss how theorists measure these various kinds of sequencing in a moment. First I will explain what is meant by sequencing. A neural system’s sequencing powers allow an organism to partake in certain complex behavioural sequences. These may be complex motor sequences, such as that of jumping a hurdle while running, or linguistic ones, such as that of producing a syntactically complex sentence. But even if we were satisfied with motor and linguistic sequencing, the notion of cognitive sequencing remains unclear.
We are left to assume that what is meant (or perhaps should be meant) by cognitive sequencing is supposed to be sorted out through the validation of our measures.

Lieberman views the Wisconsin Card Sort (WCST) as measuring a capacity to sequence cognitive acts. This is crucial to his argument, as it ultimately leads him to view a cortical-subcortical circuit as performing cognitive sequencing. The Wisconsin Card Sort is perhaps the most exhaustively studied measure that Lieberman relies upon, yet it is not obvious that the WCST is a valid measure of cognitive sequencing. It is, however, extremely common to view the WCST as measuring (among other things) a capacity for shifting one’s strategic disposition to behave in response to changing environmental demands (see Flowers and Robertson, 1985, p. 517; Taylor and Saint-Cyr, 1995, p. 283; Ebadi and Pfeiffer, 2005, p. 350). Set-shifting might be called upon, for example, when one must shift away from a failing chess strategy.

19 I know of no strong evidence for the claim that cortical-subcortical-cortical circuits are involved in the comprehension of centre-embedded relative clauses in healthy individuals. Lieberman (personal communication) does predict, however, that imaging studies should find basal ganglia activation during this kind of linguistic sequencing.

At least some of the measures Lieberman employs are in need of construct-validity. Hochstadt et al. (2006), for instance, employed the Test of Meaning from Syntax (TMS), which requires extensive further study before validity can be claimed. This test is crucial for his argument as it is presented here, since it is taken to measure an individual’s ability to comprehend centre-embedded relative clauses from syntactic information alone. Whether the TMS actually does so will, no doubt, be highly contentious, regardless of its early promise (Hochstadt et al., 2006; Pickett, 1998).
But if we believe in something like the Cronbach and Meehl (1955) account of construct-validity, then these difficulties are to be expected. Furthermore, whether I and II can be evaluated by serious research projects will turn on the respectability of construct-validity theory itself.

3.3 Construct-validity Theory

Demonstrating the construct-validity of a test is a long and arduous process. As with other forms of test validity, a theorist furthers the construct-validity of a test by demonstrating its relationship with other observables. What makes construct-validity problematic is that it requires theorists to defend the claim that the test measures a hypothetical construct, a theoretical entity that cannot be directly observed (such as aggression). As a result, theorists need to draw upon a number of theoretical propositions in order to determine what kinds of observations should further construct-validity (Cronbach and Meehl, 1955). If a test battery really measures intelligence, one might predict that scores on it should predict academic success, though this requires the background assumption that academic performance is related to intelligence.

What seems to be the most contentious element of construct-validity is the idea that at the beginning of validation our theoretical constructs may be vague, but further study will make the meaning of our constructs clear. Cronbach and Meehl (1955) relied on a nomological network of scientific laws20 in order to explain how demonstrating construct-validity could influence the meaning of a hypothetical construct. The scientific laws in the nomological network make reference to both hypothetical constructs and observables. For Cronbach and Meehl (1955), hypothetical constructs get their meaning from the role they play in this nomological network. “[Even a] vague, avowedly incomplete network still gives the constructs whatever meaning they do have” (Cronbach and Meehl, 1955, p. 12).
A nomological network might, for instance, have laws spelling out the consequences of anger on aggression. It might also have laws capturing the behavioural consequences of both. As we develop the network, our understanding of aggression is supposed to improve.21 Lieberman needs such a view to support his belief that further empirical work will allow him to clarify what is meant by cognitive sequencing. More importantly, construct-validity theory allows theorists to use empirical methods to determine whether the TMS actually measures what it is supposed to. This will amount to defending a nomological network that explains why the TMS tracks the ability to comprehend centre-embedded relative clauses from structural information alone.

Of course, one might worry that Cronbach and Meehl (1955) are presupposing a problematic philosophy of language.22 But these concerns are of little relevance to my argument. While some may view construct-validity theory as objectionable, this will not be true for LOT theorists. They need some way of measuring mental processes. A theorist cannot simply stare at a brain until a computational theory dawns. Theorists need to develop measures that will track underlying computational processes. They will have no idea what kind of computational relationship will correspond to a belief until the science is well under way. They must rely upon some form of construct-validity theory to justify a methodology of starting from a loose understanding of beliefs as some kind of computational relationship between an organism and its mental representations. In other words, construct-validity theory relieves the scientist of the burden of laying down a computational theory of belief in advance. As theorists fill in the details of the nomological network (and develop a computational theory of mind) they are supposed to come closer to explicating the concept of belief.

20 Cronbach and Meehl (1955) made no assumptions regarding whether the laws were statistical or deterministic.

21 Theorists may want to distinguish possession of a concept and explication of a concept in order to fit construct-validity theory into contemporary semantic theories. If an individual possesses a concept then they will have a mental representation corresponding to the concept. Possessing the concept dog is necessary for Jennifer to believe that “that is a dog.” Possession of a concept does not, however, imply that one can explicate the concept. When asked what dogs are, there is no guarantee that Jennifer can say anything useful. One might, then, view nomological networks as influencing only our ability to explicate concepts. Whether we actually possess a concept in the first place may be a distinct question.

22 According to Wittgenstein, for instance, our psychological concepts are already as clear as they are ever going to be. For the Wittgensteinian, there is no deeper meaning to the word “reasoning” beyond the conversational practices already associated with it. Since everyday speakers participate in these practices perfectly well, it is unclear how empirical investigation will clear up the meaning of a term.

Assuming that some form of construct-validity theory holds, theorists can continue the process of validating measures of motor, linguistic, and cognitive sequencing. Such measures will allow theorists to make claims about the importance of cortical-subcortical circuits for various sequencing abilities. Results from such studies will put a burden on the LOT theorist, who hopes to account for complex sequencing behaviours in terms of computations on mental representations. At some point, these theorists must provide accounts of how similar computations will account for structurally complex motor, linguistic, and cognitive behaviour. Furthermore, these computations must make use of the composition of mental representations.
If the LOT theorist posits centre-embedded recursive elements to account for the comprehension of relative clauses, then the computations they posit in explaining behaviour had better exploit this composition. If the comprehension of centre-embedded sentences (such as “the boy who was fat kicked the clown”) turns out to have more in common with a cognitive set-shifting ability (as tracked by the Wisconsin Card Sort), then the LOT theorist must reconcile such facts with his theory of computation.

Reconciling the LOT hypothesis with these facts may still be possible. Theorists can try to explain cognitive set-shifting with computations that are defined over recursive elements. Perhaps cognitive sets, themselves, contain recursive elements.23 Theorists are welcome to respond this way to anomalous results, but their responses will not be insulated from revision. This evidence may turn out to be a great boon for LOT theorists, as it may allow them to theorize about the composition of mental representations in a disciplined manner.

23 Another option is to argue that Broca’s area forms its own cortical-striatal-cortical circuit (Ullman, 2006) that performs its own unique computations.

However, it must be emphasized that language is a particularly important domain for the LOT hypothesis. The explanation of linguistic behaviour is supposed to be a task which, though an unsolvable mystery for radical associationism, is a manageable problem for a LOT (Fodor, 2008). But surely if the LOT hypothesis generates serious anomalies in the domain in which it is supposed to be most successful, then the entire hypothesis must come under suspicion.

References

Alexander, G. and M. Crutcher (1990). Functional architecture of basal ganglia circuits: Neural substrates of parallel processing. Trends in Neurosciences 13 (7), 266–271.

Alexander, G. E., M. R. DeLong, and P. L. Strick (1986). Parallel organization of functionally segregated circuits linking basal ganglia and cortex. Annual Review of Neuroscience 9, 357–381.

Austen, J. (1870). Northanger Abbey. London: Spottiswoode and Co.

Bedau, M. (1997). Weak emergence. In J. Tomberlin (Ed.), Philosophical Perspectives: Mind, Causation, and World, Volume 11, pp. 375–399. Malden, MA: Blackwell.

Carruthers, P. (2006). The Architecture of the Mind. Oxford University Press.

Chomsky, N. (1964). Current issues in linguistic theory. In J. A. Fodor and J. J. Katz (Eds.), The Structure of Language: Readings in the Philosophy of Language. Englewood Cliffs, New Jersey: Prentice-Hall, Inc.

Chomsky, N. and G. A. Miller (1963). Introduction to the formal analysis of natural languages. In R. D. Luce, R. R. Bush, and E. Galanter (Eds.), Handbook of Mathematical Psychology. New York and London: John Wiley and Sons, Inc.

Cronbach, L. J. and P. E. Meehl (1955). Construct validity in psychological tests. Psychological Bulletin 52, 281–302.

de Saussure, F. (1959). Course in General Linguistics. New York: Philosophical Library.

Delong, M. (1990). Primate models of movement disorders of basal ganglia origin. Trends in Neurosciences 13 (7), 281–285.

Devlin, J. (2006). Are we dancing apes? Science 314 (10).

Doyon, J., P. Bellec, R. Amsel, V. Penhune, O. Monchi, J. Carrier, S. Lehéricy, and H. Benali (2009). Contributions of the basal ganglia and functionally related brain structures to motor learning. Behavioural Brain Research 199 (1), 61–75.

Ebadi, M. S. and R. Pfeiffer (Eds.) (2005). Parkinson’s Disease. CRC Press.

Feldman, P. L., J. Friedman, and L. S (1990). Syntax comprehension deficits in Parkinson’s disease. Journal of Nervous and Mental Disease 178, 360–365.

Flowers, K. A. and C. Robertson (1985). The effect of Parkinson’s disease on the ability to maintain a mental set. Journal of Neurology, Neurosurgery, and Psychiatry 48, 517–529.

Fodor, J. (1975). The Language of Thought. New York: Crowell.

Fodor, J. (1981). Introduction. In J. Fodor (Ed.), Representations: Philosophical essays on the foundations of cognitive science. Hassocks: Harvester.

Fodor, J. (1987). Psychosemantics. Cambridge, MA: MIT Press/Bradford Books.

Fodor, J. (2008). LOT 2: The Language of Thought Revisited. Oxford, England: Oxford University Press.

Gallistel, R. (1990). The Organization of Learning. MIT Press.

Gallistel, R. (2000). The replacement of general-purpose learning models with adaptively specialized learning modules. In M. Gazzaniga (Ed.), The New Cognitive Neurosciences, Second Edition. MIT Press.

Hauser, M. D., N. Chomsky, and W. T. Fitch (2002). The faculty of language: What is it, who has it, and how did it evolve? Science 298 (5598), 1569–1579.

Hochstadt, J., H. Nakano, P. Lieberman, and J. Friedman (2006). The roles of sequencing and verbal working memory in sentence comprehension deficits in Parkinson’s disease. Brain and Language 97, 243–257.

Katz, J. and J. Fodor (1964). The structure of a semantic theory. In J. Katz and J. Fodor (Eds.), The Structure of Language: Readings in the Philosophy of Language. Englewood Cliffs, New Jersey: Prentice-Hall, Inc.

Lieberman, P. (2006). Toward an Evolutionary Biology of Language. Cambridge, Massachusetts: The Belknap Press of Harvard University Press.

Lieberman, P., E. T. Kako, J. Friedman, G. Tajchman, L. S. Feldman, and E. B. Jiminez (1992). Speech production, syntax comprehension, and cognitive deficits in Parkinson’s disease. Brain and Language 43, 169–189.

Lieberman, P., B. G. Kanki, and A. Protopappas (1995). Speech production and cognitive decrements on Mount Everest. Aviation, Space, and Environmental Medicine 66, 857–864.

Lieberman, P., A. Morey, J. Hochstadt, M. Larson, and S. Mather (2005). Mount Everest: A space-analogue for speech monitoring of cognitive deficits and stress. Aviation, Space, and Environmental Medicine 76, 198–207.

Monchi, O., M. Petrides, J. Doyon, R. Postuma, K. Worsley, and A. Dagher (2004). Neural bases of set-shifting deficits in Parkinson’s disease. The Journal of Neuroscience 24 (3), 702–710.

Monchi, O. and M. Petrides (2001). Wisconsin Card Sorting revisited: Distinct neural circuits participating in different stages of the task identified by event-related functional magnetic resonance imaging. The Journal of Neuroscience 21, 7733–7741.

Pickett, E. (1998). Language and the Cerebellum. Ph.D. thesis, Brown University.

Rey, G. (1997). Contemporary Philosophy of Mind: A Contentiously Classical Approach. Blackwell Publishing.

Taylor, A. E. and J. A. Saint-Cyr (1995). The neuropsychology of Parkinson’s Disease. Brain and Cognition 28, 281–296.

Ullman, M. (2006). Special issue position paper: Is Broca’s area part of a basal ganglia thalamocortical circuit? Cortex 42, 480–485.

Wittgenstein, L. (1958). Philosophical Investigations. New York: The Macmillan Company.