Roles for Government in Evaluation Quality Assurance: Discussion Paper

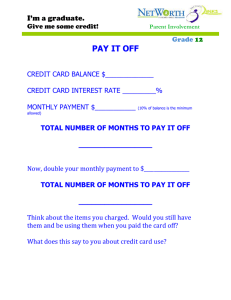
