Achieving Scalability to over 1000 Processors on the HPCx system

advertisement

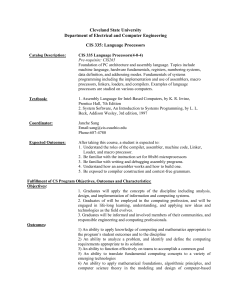

Achieving Scalability to over 1000 Processors on the HPCx system Joachim Hein, Mark Bull, Gavin Pringle EPCC The University of Edinburgh Mayfield Rd Edinburgh EH9 3JZ Scotland, UK HPCx is the UK’s largest High Performance Computing Service, consisting of 40 IBM Regatta-H SMP nodes, each containing 32 POWER4 processors. The main objective of the system is to provide a capability computing service for a range of key scientific applications, i.e. a service for applications that can utilise a significant fraction of the resource. To achieve this capability computing objective, applications must be able to scale effectively to around 1000 processors. This presents a considerable challenge, and requires an understanding of the system and its bottlenecks. In this paper we present results from a detailed performance investigation on HPCx, highlighting potential bottlenecks for applications and how these may be avoided. For example, we achieve good scaling on a benchmark code through effective use of environment variables, level 2 cache and under populated logical partitions. 1 Introduction HPCx is the UK’s newest and largest National High Performance Computing system. This system has been funded by the British Government, through the Engineering and Physical Sciences Research Council (EPSRC). The project is run by the HPCx Consortium, a consortium led by the University of Edinburgh (through Edinburgh Parallel Computing Centre (EPCC)), with the Central Laboratory for the Research Councils in Daresbury (CLRC) and IBM as partners. The main objective of the system is to provide a world-class service for capability computing for the UK scientific community. Achieving effective scaling on over 1000 processors for the broad range of application areas studied in the UK, such as materials science, atomic and molecular physics, computational engineering and environmental science, is a key challenge of the service. To achieve this, we require a detailed un- derstanding of the system and its bottlenecks. Hence in this paper we present results from a detailed performance investigation on HPCx, using a simple iterative Jacobi application. This highlights a number of potential bottlenecks and how these may be avoided. 2 The HPCx system HPCx consists out 40 IBM p690 Regatta H frames. Each frame has 32 POWER4 processors with a clock of 1.3 GHz. This provides a peak performance 6.6 Tflop/s and up to 3.2 Tflop/s sustained performance. The frames are connected via IBM’s SP Colony switch. Per frame, these processors are grouped into 4 multi chip modules (MCM), where each MCM has 8 processors. In order to increase the communication bandwidth of the system, the frames have been divided into 4 logical partitions (lpar), coinciding with the MCMs. Each lpar is operated as an 8-way SMP, running its own copy of the operating system AIX. The system has three levels of cache. There is is 32kB of level 1 cache per processor. The level 2 cache of 1440kB is shared between two processors. The eight processors inside an lpar share 8GB of main memory, the memory bus and the level 3 cache of 128MB. this context it is interesting to compare the results for 8 processors and different numbers of lpars for the medium problem size. For example, running an 8 processor job across 8 lpars (i.e. 1 processor per lpar), rather than with 1 lpar (i.e. 8 processors per lpar) reduces the execution time by 35%. With 8 processors across 8 lpars, each processor is no longer sharing its memory bus and level 3 cache with 7 other pro3 Case study code cessors. Also the level 2 cache is no longer Our case study inverts a lattice Laplacian in shared between two active processors. As a contwo dimensions using the Jacobi algorithm sequence, the processors can access their data at a higher rate. However, time on HPCx is In (x1 , x2 ) charged to users on an lpar basis. Hence, the run with 8 lpar is 8 times as expensive but only 1h = In−1 (x1 + 1, x2 ) + In−1 (x1 − 1, x2 ) 35% more efficient than the single lpar run. In 4 summary running with a single CPU per lpar +In−1 (x1 , x2 + 1) + In−1 (x1 , x2 − 1) i is not a good deal. On HPCx it is advisable to −E(x1 , x2 ) (1) choose the number of processors per lpar which gives the fastest wall clock time for the selected We start the iteration with I0 = E. The matrix number of lpars. Figure 1 does not give a conE is constant. This benchmark contains typical sistent picture here. Depending on the paramfeatures of a field-theory with next neighbour eters either 7 or 8 processors per lpar appears interactions. The code has been parallelised us- to be optimal. ing MPI_Sendrecv to exchange the halos. The When comparing the different single procespresent version does not contain global com- sor results, the run for the small size is 4 times munications. The modules have been compiled faster than the medium size run. This is exusing version 8.1 of the IBM XL Fortran com- pected, since the problem is 4 times smaller. piler with the options -O3 and -qarch=pwr4. However the large size is more than 5 times We have been using version 5.1 of the AIX op- slower than the medium size. This reflects the erating system. fact that the large size does not fit into the level 3 cache of a single lpar. When running on two lpar it fits into level 3 cache and we observe rea4 Tasks per logical parti- sonable scaling between the 2 lpar runs for the medium and large problem size. tion We measured the performance of our application code on three different problem sizes, small =840 × 1008, medium=1680 × 2016 and large=3360 × 4032 on a range of processors and lpars. Our results are shown in Figure 1. The points give the fastest observed run time out of three or more trials. To guide the eye, we connected runs on the same number of lpars. The straight lines give “lines of perfect scaling”. They are separated by factors of two. We start the discussion with the results for a single lpar and medium problem size (1680 × 2016). By increasing the number of active processors on the lpar, we note a drop in efficiency to slightly less than 50%. This pattern is observed for all numbers of lpars and problem sizes. When using large numbers of processors per lpar the data is required at a higher rate than the memory system is able to deliver. In 5 Cache utilisation For the above, the algorithm has been implemented using Fortran90 array syntax. In total we used three different arrays corresponding to the matrices In , In−1 and E in eq. (1). After each iteration, In has to be copied into In−1 . To investigate the efficiency of code generated from array syntax we compared against an implementation using two explicit do-loops for eq. (1) and another two do-loops for copying In into In−1 . It is possible to fuse these sets of do-loops by copying element In (x1 , x2 − 1) into element In−1 (x1 , x2 −1) directly after having calculated In (x1 , x2 ), assumeing x2 is the index of the outer loop. For this algorithm only two lines of In need to be stored. We call this the “compact Figure 1: Wall clock time vs number of processors for a given number of logical partitions. Results are for 2000 iterations. 1Lpar 1Lpar 1Lpar 2Lpar 2Lpar 2Lpar 4Lpar 4Lpar 4Lpar 8Lpar 8Lpar 8Lpar Wallclock CPU in seconds 100 3360*4032 1680*2016 840*1008 3360*4032 1680*2016 840*1008 3360*4032 1680*2016 840*1008 3360*4032 1680*2016 840*1008 10 1 1 10 100 # of processors Figure 2: Performance comparison of different version of the update code for 8 processors on a single lpar. 6 Runtime relative to ‘Array Syntax’ 1.4 Array Syntax Do Loop Compact 1.2 1 0.8 0.6 0.4 0.2 0 which fits into level 2 cache, the difference reduces but is still significant. 420x504 840x1008 1680x2016 3360x4032 version” of the update code. The performance of the three implementations is compared in Figure 2. For none of the four problem sizes do we observe any significant difference between the version using array syntax and the one using explicit do-loops. However the compact version is faster in all cases. This improvement is dramatic for the three larger problem sizes. Here the compact version is more than 2 times faster, which is due to less memory traffic and better cache reuse. For the smallest problem size, MPI protocol The environment variable MP_EAGER_LIMIT controls the protocol used for the exchange of messages under MPI. For messages of a size smaller than MP_EAGER_LIMIT an MPI standard send is implemented as a buffered send, leading to a lower latency but increasing the memory consumption of the MPI library. For messages larger than the MP_EAGER_LIMIT the standard send is implemented as a synchronous send. Both the default and maximum values of MP_EAGER_LIMIT depend on the number of MPI tasks. In Figure 3 we demonstrate the effect of eager (full symbols) and non-eager (open symbols) sending on the performance of our Jacobi inverter. The figure shows the efficiency E(nproc ) = t(1)/[t(nproc )nproc ]. This study uses the compact version of the update code. For larger messages, i.e. smaller number of processors, there is little difference between eager and non-eager sending. However for 1024 processors using eager sending improves the efficiency from 49% to 73%. When increasing the number of lpars, the up- Figure 3: Efficiency for the different values of the MP EAGER LIMIT. The problem size is 6720 × 8064 and we use 8 tasks per lpar. 1.6 Update Eager=Max Update Eager=0 Total Eager=Max Total Eager=0 1.4 efficiency 1.2 Table 1: Time in ms spend in communication and calculation averaged over 20000 iterations. The numbers in the parentheses give the standard deviation in last digits. size: tasks/lpar: communication: calculation: size tasks/lpar: communication: calculation: 1 0.8 0.6 0.4 6720 × 8064 7 8 0.22(9) 0.31(99) 0.304(11) 0.265(11) 26880 × 32256 7 8 1.28(49) 1.67(380) 8.74(36) 6.66(33) 0.2 0 In Table 1 we show results for the averaged communication and calculation times for two different problem sizes using 7 or 8 tasks/lpar. In all cases we used 128 lpar, which is the full date code (computation) shows significant su- production region of the HPCx system. Using perlinear scaling. This is a typical behaviour for only 7 tasks/lpar substantially reduces the avera modern cache based architecture. When us- age and the standard deviation of the communiing a larger number of logical partitions, there cation time. This confirms our above expectais more cache memory available. Above 32 pro- tion of the interruptions being a major cause of cessors, the problem fits into level 3 cache and the run time variations. Obviously the calculafor 1024 processors it fits into level 2 cache. tion time increases when using fewer tasks/lpar. This superlinear scaling compensates for most With respect to the overall execution time, of the overhead associated with the increased for the smaller problem it is advantageous to communication when running on a larger num- use 7 task/lpar since the advantage in commuber of processors. When using eager sending, nication time outweighs the penalty on the calthe efficiency of the total code is almost level culation time. For the larger problem size this in a range 32 ≤ nproc ≤ 1024. For 1024 pro- is the other way round and it is advantageous cessors we observe an execution speed of 0.51 to use 8 tasks/lpar and to live with the run time Tflop/s. Considering that the code spends half variations. of its time in communication and is unbalanced with respect to multiplications vs additions1 , 8 Summary we believe this is satisfactory. 1 10 100 1000 # proc 7 Run time variations When running our code on a large number of lpars, we observed a wide variation of run times. Interruptions by the operating system, demons and helper tasks in the MPI system might be a cause of this noise. If these interruptions are indeed the cause, using only 7 tasks/lpar, which leaves 1 processor free to deal with these interruptions, should improve the situation, since each lpar is operated as an independent SMP. 1 The IBM POWER4 processors have two floating point multiply-addition units. For optimum performance these require an equal number of multiplications and additions. In this publication we investigate the performance of a case study code on the HPCx system when using up to 1024 processors. The memory bus has been identified as a potential bottle neck. Better utilisation of the cache system by reducing local workspace inside subroutines and loop fusion improves the situation. For codes using MPI the environment variable MP_EAGER_LIMIT has a large impact on the performance and should be tuned properly. When using large numbers of processors, the interrupts from the operating system and the various demons can impact the performance of the code. Using fewer then eight tasks per lpar can improve the overall performance of the application.