3/6/00

Probabilistic Model-based Diagnosis

10/28/07

Brian C. Williams

16.410/16.413

November 17th, 2010

copyright Brian Williams, 2005-09

Brian C. Williams, copyright 2000-09

Image credit: NASA.

Notation

• $S_{t+1}$: set of hidden variables in the t+1 time slice

• $s_{t+1}$: set of values for those hidden variables at t+1

• $o_{t+1}$: set of observations at time t+1

• $o_{1:t}$: set of observations from all times from 1 to t

• $\alpha$: normalization constant

Multiple Faults Occur

• Three shorts, tank-line and pressure jacket burst, panel flies off.

Lecture 12: Framed as CSP.

• How do we compare the space of alternative diagnoses?

• How do we prefer diagnoses that explain failure?

[Image: Apollo 13. Image source: NASA.]

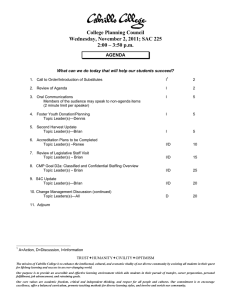

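Due to the unknown mode, there tends to be an exponential number of diagnoses.

[Figure: candidate space. Each component is either Good (G), in a known failure mode (F1 … Fn), or in the unknown mode (U); the space divides into candidates with KNOWN failure modes and candidates with UNKNOWN failure modes.]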

1. Introduce fault models.

   • More constraining, hence easier to rule out.

   • Increases size of candidate space.

2. Enumerate most likely diagnoses Xi based on probability.

   Prefix(k)(Sort {Xi} by decreasing P(Xi | O))

   • Most of the probability mass is covered by a few diagnoses.
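The enumeration step Prefix(k)(Sort {Xi} by decreasing P(Xi | O)) can be sketched in Python as follows. This is a minimal illustration, assuming the posterior P(Xi | O) is already available as a dictionary; the function name `prefix_k` and the example numbers are hypothetical and are not taken from the lecture.

```python
def prefix_k(posterior, k):
    """Return the k most probable diagnoses and the probability mass they cover.

    posterior: dict mapping a diagnosis (any hashable label) to P(Xi | O).
    """
    # Sort {Xi} by decreasing P(Xi | O), then keep the first k entries.
    ranked = sorted(posterior.items(), key=lambda item: item[1], reverse=True)
    leading = ranked[:k]
    covered = sum(p for _, p in leading)
    return leading, covered


# Hypothetical posterior over four candidate diagnoses (illustrative numbers only).
example = {
    "all good": 0.90,
    "A stuck": 0.06,
    "B stuck": 0.03,
    "A and B stuck": 0.01,
}
leading, covered = prefix_k(example, 2)
```

With these made-up numbers, two of the four candidates already carry most of the mass, which is the situation the slide describes.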


10/26/10


Model-based Diagnosis

[Figure: example circuit with inputs A, B, C, D, E; gates A1, A2, A3, X1, X2; internal signals X, Y, Z; and outputs F, G, annotated with 0/1 values.]

Mode models for Xor(i):

• G(i): Out(i) = In1(i) xor In2(i)

• Stuck_0(i): Out(i) = 0

• U(i): no constraint

~ GDE & Sherlock [de Kleer & Williams, 87, 89]

Input:

• Finite Domain Variables <X, Y>

  – X: mode variables

  – Y: model variables

  – O ⊆ Y: observable variables

• Φ(X, Y): model constraints

• o (sequentially, o1:n ∈ DO): observations

• P(Xi): a prior probability of modes

Output:

• Consistency-based: {x ∈ DX | ∃y ∈ DY s.t. x ∧ o ∧ Φ(x,y) is consistent}

• Probabilistic, Sequential Observations: P(X | o1:n)

Assumptions:

• Modes are static.

• Uniform distribution on logical models.

• Xi ⊥ Xj for i ≠ j (a priori)

• Oi ⊥ Oj | X for i ≠ j

Compute Conditional Probability via Bayes Rule (Method 6)

$$
P(X \mid o) = \frac{P(o \mid X)\,P(X)}{P(o)}
= \frac{P(o \mid X)\,P(X)}{\sum_{x \in X} P(o, x)}
= \frac{P(o \mid X)\,P(X)}{\sum_{x \in X} P(o \mid x)\,P(x)}
= \alpha\,P(o \mid X)\,P(X)
$$
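As a concrete reading of the Bayes rule computation, the sketch below normalizes P(o | x)P(x) over all candidates x. It assumes the prior and the likelihood are given as dictionaries keyed by candidate; the function name `posterior` and the two-candidate numbers are hypothetical.

```python
def posterior(prior, likelihood):
    """Compute P(x | o) = alpha * P(o | x) * P(x) for every candidate x.

    prior:      dict x -> P(x)
    likelihood: dict x -> P(o | x)
    """
    unnormalized = {x: likelihood[x] * prior[x] for x in prior}
    p_o = sum(unnormalized.values())  # P(o) = sum over x of P(o | x) P(x)
    if p_o == 0:
        raise ValueError("the observation has zero probability under every candidate")
    alpha = 1.0 / p_o  # normalization constant
    return {x: alpha * p for x, p in unnormalized.items()}


# Hypothetical two-candidate example: the observation rules out "G".
prior = {"G": 0.97, "F": 0.03}
likelihood = {"G": 0.0, "F": 0.5}
post = posterior(prior, likelihood)
```

Because the observation is impossible under "G", all of the posterior mass moves to "F" even though its prior is small.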


Candidate Prior Probabilities

$$P(X) = \prod_i P(X_i \mid X_{1:i-1}) \qquad \text{(chain rule)}$$

Assume Xi ⊥ Xj for i ≠ j:

$$P(X) = \prod_{X_i \in X} P(X_i)$$

P(A = G,B = G,C = G) = .97

P(A = S1,B = G,C = G) = .008

P(A = S1,B = G,C = S0) = .00006

P(A = S1,B = S1,C = S0) = .0000005
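Under the independence assumption, a candidate's prior is the product of its component mode priors. The sketch below enumerates all candidates for three components A, B, C. The per-component mode priors in `MODE_PRIOR`, and the choice to include an unknown mode U alongside G, S1, and S0, are assumptions made for illustration; the slides give only the joint values listed above.

```python
from itertools import product
from math import prod

# Hypothetical mode priors, shared by every component (not from the slides).
MODE_PRIOR = {"G": 0.99, "S1": 0.005, "S0": 0.004, "U": 0.001}
COMPONENTS = ("A", "B", "C")


def candidate_prior(candidate):
    """P(X) as the product of independent component mode priors P(Xi)."""
    return prod(MODE_PRIOR[mode] for mode in candidate)


# Every assignment of one mode to each component is a candidate diagnosis.
candidates = list(product(MODE_PRIOR, repeat=len(COMPONENTS)))
priors = {c: candidate_prior(c) for c in candidates}
```

With four modes per component, three components yield 4^3 = 64 candidates, and each additional fault multiplies the prior by another small factor, so multiple-fault candidates fall off quickly.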

[Figure: circuit in = 0 → A → X → B → Y → C → out. Table: leading diagnoses before output observed.]

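Estimate probability by assuming a uniform distribution (Method 7)

P(X | o) = αP(o | X)P(X)

How do we compute P(o | X) from logical formulae Φ(X,Y)?

Given theory Φ, sentence Q …

• … is inconsistent: M(Q) and M(Φ) are disjoint, so P(Q | Φ) = 0.

• … could be true: M(Q) and M(Φ) overlap only partially, and the logic alone does not fix P(Q | Φ).

• … must be true: M(Φ) lies inside M(Q), so P(Q | Φ) = 1.

Here M(·) denotes the models of a sentence, drawn as Venn diagrams on the slide.

Problem: Logic doesn’t specify P(si) for models of consistent sentences.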


⇒ Assume all models are equally likely → count models.

Model-based Diagnosis using model counting:

$$P(o \mid x) = \frac{P(o, x)}{P(x)} = \frac{M(o \wedge x \wedge \Phi(x,Y))}{M(x \wedge \Phi(x,Y))}$$
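The model-counting ratio can be checked by brute force on a small system. The sketch below assumes three inverters A, B, C in series with the input fixed to 0, and model variables X, Y, out; treating the components as inverters, and the mode names G, S1, S0, U, are assumptions made for this illustration. It counts assignments to the model variables that satisfy Φ for a given candidate x, with and without the observation.

```python
from itertools import product


def mode_allows(mode, inp, out):
    """Constraint that a component mode places on its input/output pair."""
    if mode == "G":
        return out == 1 - inp  # assumed: a good component inverts its input
    if mode == "S1":
        return out == 1        # stuck at 1
    if mode == "S0":
        return out == 0        # stuck at 0
    return True                # "U": unknown mode, no constraint


def count_models(x, in_value=0, out_value=None):
    """Count assignments to (X, Y, out) consistent with candidate x and Phi.

    If out_value is given, only assignments with out == out_value are counted.
    """
    a, b, c = x
    count = 0
    for sx, sy, so in product((0, 1), repeat=3):
        if out_value is not None and so != out_value:
            continue
        if (mode_allows(a, in_value, sx)
                and mode_allows(b, sx, sy)
                and mode_allows(c, sy, so)):
            count += 1
    return count


def p_obs_given_x(x, out_value):
    """P(o | x) = M(o and x and Phi) / M(x and Phi), with all models equally likely."""
    return count_models(x, out_value=out_value) / count_models(x)
```

For example, `p_obs_given_x(("G", "G", "U"), 0)` counts one model with out = 0 among the two models of the candidate, giving 0.5.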


Simplify P(o | X) using the Naïve Bayes Assumption (Method 7)

P(X | o) = αP(o | X)P(X)

Problem: P(o | X) can be hard to determine for large |o|.

Assume: observations o are independent given mode X.

o1 ⊥ o2 … ⊥ on | X

P(o | X) = P(o1 | X)P(o2 | X)…P(on | X) (by Naïve Bayes)

Diagnosis with Sequential Observations and the Naïve Bayes Assumption

$$
P(X \mid o_{1:n}) = \frac{P(o_n \mid X, o_{1:n-1})\,P(X \mid o_{1:n-1})}{P(o_n \mid o_{1:n-1})}
= \frac{P(o_n \mid X, o_{1:n-1})\,P(X \mid o_{1:n-1})}{\sum_{x \in X} P(o_n, x \mid o_{1:n-1})}
$$

$$
= \frac{P(o_n \mid X, o_{1:n-1})\,P(X \mid o_{1:n-1})}{\sum_{x \in X} P(o_n \mid x, o_{1:n-1})\,P(x \mid o_{1:n-1})}
= \alpha\,P(o_n \mid X, o_{1:n-1})\,P(X \mid o_{1:n-1})
$$

Assume: observations o are independent given mode X.

o1 ⊥ o2 … ⊥ on | X

$$
P(X \mid o_{1:n}) = \frac{P(o_n \mid X)\,P(X \mid o_{1:n-1})}{\sum_{x \in X} P(o_n \mid x)\,P(x \mid o_{1:n-1})}
$$
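The recursive form lends itself to a simple incremental update: each new observation reweights the current belief and renormalizes. The sketch below assumes the per-observation likelihoods P(on | x) are supplied as dictionaries; the candidate names and numbers are hypothetical. It also computes the batch Naïve Bayes posterior to show that both routes agree.

```python
def update(belief, likelihood_n):
    """One sequential step: P(x | o_1:n) is proportional to P(o_n | x) * P(x | o_1:n-1)."""
    unnormalized = {x: likelihood_n[x] * belief[x] for x in belief}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("the observation is inconsistent with every candidate")
    return {x: p / total for x, p in unnormalized.items()}


# Hypothetical prior and likelihoods P(o_1 | x), P(o_2 | x) for three candidates.
prior = {"G": 0.9, "F1": 0.07, "F2": 0.03}
likelihoods = [
    {"G": 0.5, "F1": 1.0, "F2": 0.5},
    {"G": 0.1, "F1": 0.5, "F2": 1.0},
]

# Sequential: fold in one observation at a time.
belief = dict(prior)
for likelihood_n in likelihoods:
    belief = update(belief, likelihood_n)

# Batch: multiply all likelihoods first (Naive Bayes), then normalize once.
batch_unnormalized = {
    x: prior[x] * likelihoods[0][x] * likelihoods[1][x] for x in prior
}
z = sum(batch_unnormalized.values())
batch = {x: p / z for x, p in batch_unnormalized.items()}
```

The sequential update only needs the previous belief and the newest likelihood, so earlier observations do not have to be stored.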


Estimating the Observation Probability P(on | x, o1:n-1) in GDE

GDE used naïve Bayes AND assumed that the observations consistent with candidate x are equally likely.

P(on | x, o1:n-1) is estimated using model Φ(x,Y) according to:

• If o1:n-1 ∧ x ∧ Φ(x,Y) entails on, then P(on | x, o1:n-1) = 1.

• If o1:n-1 ∧ x ∧ Φ(x,Y) entails On ≠ on, then P(on | x, o1:n-1) = 0.

• Otherwise, assume all consistent assignments to On are equally likely:

  let DCn ≡ {oc ∈ DOn | o1:n-1 ∧ x ∧ Φ(x,Y) is consistent with On = oc}

  Then P(on | x, o1:n-1) = 1 / |DCn|
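The three cases collapse into one computation once DCn is known: the probability is 0 if on is not in DCn and 1 / |DCn| otherwise, which equals 1 when on is the only consistent value. The sketch below assumes a consistency test is available as a Python predicate and applies it to a single Xor gate with the mode models G, Stuck_0, and U given earlier. The function names are hypothetical, and a real implementation would call a constraint solver rather than enumerate values.

```python
def gde_observation_probability(domain, is_consistent, observed):
    """Estimate P(o_n | x, o_1:n-1) from the set DC_n of consistent values.

    domain:        iterable of possible values for the observed variable O_n
    is_consistent: predicate, True if O_n = value is consistent with
                   o_1:n-1, the candidate x, and the model Phi
    observed:      the value o_n actually observed
    """
    dc = [value for value in domain if is_consistent(value)]
    if not dc:
        raise ValueError("candidate is already inconsistent with earlier observations")
    if observed not in dc:
        return 0.0        # the model entails O_n != o_n
    return 1.0 / len(dc)  # 1 if o_n is entailed, else uniform over DC_n


def xor_gate_allows(mode, in1, in2, out):
    """Mode models for an Xor gate: G, Stuck_0, and U (unknown)."""
    if mode == "G":
        return out == (in1 ^ in2)
    if mode == "Stuck_0":
        return out == 0
    return True  # "U": no constraint


def p_out(mode, in1, in2, observed_out):
    """P(Out = observed_out | mode), with both inputs already observed."""
    return gde_observation_probability(
        (0, 1),
        lambda value: xor_gate_allows(mode, in1, in2, value),
        observed_out,
    )
```

For instance, with inputs 1 and 0, a good gate predicts Out = 1 with probability 1, while the unknown mode leaves both output values consistent and assigns each probability 1/2.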


[Figure: circuit in = 0 → A → X → B → Y → C → out, with out = 1.]

P(X | o1:n) = αP(on | X)P(X | o1:n−1)

Observe out = 1:

x = <A=G, B=G, C=G>

Prior: P(x) = .97

P(out = 1 | x) = 1

P(x | out = 1) = α × 1 × .97 (that is, .97 before normalization)


[Figure: circuit in = 0 → A → X → B → Y → C → out, with out = 0.]

P(X | o1:n) = αP(on | X)P(X | o1:n−1)

Observe out = 0:

x = <A=G, B=G, C=G>

Prior: P(x) = .97

P(out = 0 | x) = 0

P(x | out = 0) = α × 0 × .97 = 0


[Figure: circuit in = 0 → A → X → B → Y → C → out = 0. Table: priors for single fault diagnoses.]


[Figure: circuit in = 0 → A → X → B → Y → C → out = 0. Table: leading diagnoses after output observed.]

The top 6 of 64 candidate diagnoses cover 98.6% of the probability mass.
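The whole pipeline for this example can be sketched end to end: enumerate the 64 candidates, weight each prior by the GDE-style observation probability for out = 0, normalize, and rank. The sketch assumes that A, B, and C are inverters in series and uses hypothetical per-component mode priors, so its numbers will not reproduce the 98.6% figure from the slide; it only illustrates the shape of the computation.

```python
from itertools import product
from math import prod

# Hypothetical per-component mode priors (not from the slides).
MODE_PRIOR = {"G": 0.99, "S1": 0.005, "S0": 0.004, "U": 0.001}


def consistent_outputs(candidate, in_value=0):
    """Values of `out` consistent with the candidate, assuming inverters in series."""
    values = {in_value}
    for mode in candidate:
        if mode == "G":
            values = {1 - v for v in values}  # a good inverter negates its input
        elif mode == "S1":
            values = {1}                      # stuck at 1
        elif mode == "S0":
            values = {0}                      # stuck at 0
        else:
            values = {0, 1}                   # unknown mode: no constraint
    return values


def likelihood(candidate, observed_out):
    """GDE-style estimate: 0 if inconsistent, else uniform over consistent values."""
    dc = consistent_outputs(candidate)
    return 1.0 / len(dc) if observed_out in dc else 0.0


def diagnose(observed_out):
    """Posterior over all mode assignments to (A, B, C) given in = 0 and out."""
    unnormalized = {}
    for candidate in product(MODE_PRIOR, repeat=3):
        prior = prod(MODE_PRIOR[mode] for mode in candidate)
        unnormalized[candidate] = likelihood(candidate, observed_out) * prior
    alpha = 1.0 / sum(unnormalized.values())
    return {c: alpha * p for c, p in unnormalized.items()}


posterior = diagnose(observed_out=0)
ranked = sorted(posterior.items(), key=lambda item: item[1], reverse=True)
top6_mass = sum(p for _, p in ranked[:6])

for candidate, p in ranked[:6]:
    print(candidate, round(p, 4))
print("mass covered by top 6:", round(top6_mass, 4))
```

Under these assumptions the all-good candidate drops to probability 0 once out = 0 is observed, and single-fault candidates that explain the observation rise to the top of the ranking.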


Due to the unknown mode, there tends to be an exponential number of diagnoses.

But “unknown” diagnoses represent a small fraction of the probability mass.

Most of the probability mass can be captured by enumerating the few most likely diagnoses.

Diagnosing Dynamic Systems: Probabilistic Constraint Automata

• Device modes

• Probabilistic transitions between modes

• State constraints for each mode

• One automaton per component

[Figure: valve automaton with modes Open, Closed, Stuck open, Stuck closed, and Unknown; Open and Close command transitions, one annotated "Cost 5, Prob .9".]

State constraints:

vlv = open => Outflow = Mz+(inflow);

vlv = closed => Outflow = 0;

vlv = stuck open => Outflow = Mz+(inflow);

vlv = stuck closed => Outflow = 0;
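One way to picture a probabilistic constraint automaton is as two tables per component: mode transitions with probabilities, and a state constraint per mode. The sketch below encodes the valve in that style. Apart from the .9 probability shown in the figure, every transition probability here is a hypothetical placeholder, the target modes of the failure transitions are guesses, and `mz_plus` is a stand-in (the identity) for the Mz+ relation, whose exact definition the slide does not give.

```python
# TRANSITIONS[mode][command] -> {next_mode: probability}. All entries are
# illustrative assumptions except the 0.9 taken from the figure annotation.
TRANSITIONS = {
    "closed": {"open": {"open": 0.9, "stuck closed": 0.1},
               "close": {"closed": 1.0}},
    "open": {"open": {"open": 1.0},
             "close": {"closed": 0.9, "stuck open": 0.1}},
    "stuck open": {"open": {"stuck open": 1.0},
                   "close": {"stuck open": 1.0}},
    "stuck closed": {"open": {"stuck closed": 1.0},
                     "close": {"stuck closed": 1.0}},
    "unknown": {"open": {"unknown": 1.0},
                "close": {"unknown": 1.0}},
}


def mz_plus(inflow):
    """Stand-in for Mz+(inflow); the identity is an assumption for illustration."""
    return inflow


def outflow_allowed(mode, inflow, outflow):
    """State constraint attached to each valve mode."""
    if mode in ("open", "stuck open"):
        return outflow == mz_plus(inflow)
    if mode in ("closed", "stuck closed"):
        return outflow == 0
    return True  # unknown mode: no constraint


def predict(belief, command):
    """Push a distribution over modes through the commanded transition."""
    next_belief = {}
    for mode, p in belief.items():
        for new_mode, q in TRANSITIONS[mode][command].items():
            next_belief[new_mode] = next_belief.get(new_mode, 0.0) + p * q
    return next_belief


after_open = predict({"closed": 1.0}, "open")
```

Starting from a valve known to be closed, `predict` spreads the belief over the modes reachable by the open command, and `outflow_allowed` can then be used to test each mode against an observed flow.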


Estimating Dynamic Systems


Given sequence of commands and observations:

• Infer current distribution of (most likely) states.

• Infer most likely trajectories.


MIT OpenCourseWare

http://ocw.mit.edu

16.410 / 16.413 Principles of Autonomy and Decision Making

Fall 2010

For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms.