Outlook for Euclid Data Processing Tom Kitching

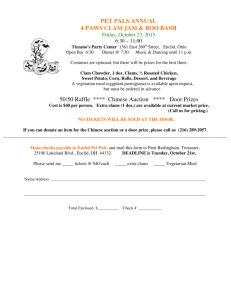

Tom Kitching [Euclid: OU Level 3 Cosmology Lead; WLWG Cosmic Shear Sub-Group; Theory DM Sub-Group]

Assessment Phase Summary

- What is Euclid?
- Summary of some of the data challenges for Euclid
- A quick summary of the current data management plan
- Euclid is still in the planning stage
- Slides only have information from the Assessment Phase Yellow Book: http://xxx.lanl.gov/abs/0912.0914

Euclid's science goals place raw data requirements
- Large amount of data needed: statistics for dark Universe science
- High fidelity to measure the lensing effect and control systematics

Data processing challenges
- Extreme precision (high CPU) needed for weak lensing measurements
- Data processing is I/O intensive
- Inhomogeneous workflow and resources required

Data distribution challenges
- Distributed Science Data Centers
- Petabyte raw data
- Giga-scale catalogues
- Visualisation of final science products

Euclid
- Space-based cosmology experiment
- Proposal to ESA Cosmic Vision
- M-Class mission
- Currently in the Definition Phase (B)
- Other M-Class missions in CV: Plato, Solar Orbiter
- 2 missions will be selected for launch in 2 slots: 2017, 2018
- Euclid was ranked 1st in the Assessment Phase downselect
- Single Consortium (FR, IT, GE, UK, SP, CH, NW, NL, AU, US)
- Currently submitting a response to the M-class CV AO (due on Friday!)

Mission
- 1.2 m mirror, L2 orbit
- 5-year mission duration
- Map the sky in 1 optical band, 3 NIR bands
- 0.16'' optical pixels for weak lensing measurement
- 0.20'' IR pixels for photometry
- Low-res NIR spectra
- 20,000 sq deg to a median z = 1.0

Science Goals
- Use Weak Gravitational Lensing and Baryon Acoustic Oscillations
- Constrain the Dark Energy equation of state to 1%
- Test General Relativity on cosmic scales
- Map Dark Matter, and constrain its properties
- Probe the initial conditions (perfect low-z complement to CMB)

Raw data
- Will generate approx. 2 petabytes of raw data
- Many times this will be needed in simulations

Shear needs to be measured to 10⁻³ accuracy

Cosmic Lensing (slide from S. Bridle)
- g_i ~ 0.2
- Real data: g_i ~ 0.03

Atmosphere and Telescope (slide from S. Bridle)
- Convolution with kernel
- Real data: kernel size ~ galaxy size

Pixelisation (slide from S. Bridle)
- Sum light in each square
- Real data: pixel size ~ kernel size / 2

Noise (slide from S. Bridle)
- Mostly Poisson. Some Gaussian and bad pixels.
- Uncertainty on total light ~ 5 per cent

Shear needs to be measured to 10⁻³ accuracy
- 3 billion galaxies
- 1 billion with spectra
- Data intensive, inhomogeneous
- I/O and CPU intensive

PS1 Example
- More effects (e.g. CTI), higher accuracy needed for Euclid
- For PS1: redo object detection, stacking, PSF model, etc.
- WL needs very high precision; check IPP, possible incorporation

Euclid Ground Segment (assessment phase; slightly updated now, qualitatively the same)
- Consortium

Data Distribution
- "Data centric approach" through the Euclid Mission Archive
- Data will be "distributed/federated/partially replicated" over all participants
- Still not clear *exactly* what this means for x petabytes of data and giga-scale catalogue products
- Consortium

Conclude

Euclid's science goals place raw data requirements
- Large amount of data needed: statistics for dark Universe science
- High fidelity to measure the lensing effect and control systematics

Data processing challenges
- Extreme precision (high CPU) needed for weak lensing measurements
- Data processing is I/O intensive
- Inhomogeneous workflow and resources required

Data distribution challenges
- Distributed Science Data Centers
- Petabyte raw data
- Giga-scale catalogues
- Visualisation of final science products
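
The four steps on the slides from S. Bridle (cosmic lensing, convolution with a kernel, pixelisation, noise) can be sketched as a toy forward model, which may help show why shear measurement is CPU intensive at a target accuracy of 10⁻³. This is only an illustrative sketch, not the Euclid pipeline: the circular Gaussian galaxy, the Gaussian kernel, the grid size, the flux, the read-noise level and all function names are assumptions chosen for the example.

```python
import numpy as np


def sheared_gaussian(n, sigma, g1, g2, flux):
    """Circular Gaussian source lensed by a constant shear (g1, g2)."""
    y, x = np.indices((n, n)) - (n - 1) / 2.0
    # Map image-plane coordinates back to the source plane (no convergence).
    xs = (1.0 - g1) * x - g2 * y
    ys = -g2 * x + (1.0 + g1) * y
    img = np.exp(-0.5 * (xs**2 + ys**2) / sigma**2)
    return flux * img / img.sum()


def convolve_gaussian_kernel(img, sigma_kernel):
    """Atmosphere and telescope: circular convolution with a Gaussian kernel."""
    n = img.shape[0]
    y, x = np.indices(img.shape) - n // 2
    kernel = np.exp(-0.5 * (x**2 + y**2) / sigma_kernel**2)
    kernel /= kernel.sum()
    # ifftshift moves the kernel centre to index (0, 0) before the FFT.
    product = np.fft.fft2(img) * np.fft.fft2(np.fft.ifftshift(kernel))
    return np.real(np.fft.ifft2(product))


def pixelise(img, block):
    """Pixelisation: sum the light in each block x block square."""
    n = img.shape[0] // block
    trimmed = img[: n * block, : n * block]
    return trimmed.reshape(n, block, n, block).sum(axis=(1, 3))


def add_noise(img, read_noise, rng):
    """Noise: Poisson counts plus a small Gaussian term (no bad pixels)."""
    counts = rng.poisson(np.clip(img, 0.0, None)).astype(float)
    return counts + rng.normal(0.0, read_noise, img.shape)


def ellipticity(img):
    """Unweighted quadrupole-moment ellipticity (e1, e2)."""
    y, x = np.indices(img.shape, dtype=float)
    total = img.sum()
    xc, yc = (img * x).sum() / total, (img * y).sum() / total
    qxx = (img * (x - xc) ** 2).sum() / total
    qyy = (img * (y - yc) ** 2).sum() / total
    qxy = (img * (x - xc) * (y - yc)).sum() / total
    return (qxx - qyy) / (qxx + qyy), 2.0 * qxy / (qxx + qyy)


rng = np.random.default_rng(0)
g1, g2 = 0.2, 0.0  # illustrative shear; the slides quote ~0.03 for real data

# 400 counts gives a Poisson uncertainty of 1/sqrt(400) = 5% on the total.
galaxy = sheared_gaussian(128, sigma=8.0, g1=g1, g2=g2, flux=400.0)
blurred = convolve_gaussian_kernel(galaxy, sigma_kernel=8.0)  # kernel ~ galaxy
pixels = pixelise(blurred, block=4)  # pixel ~ kernel size / 2
observed = add_noise(pixels, read_noise=0.1, rng=rng)

e_true = ellipticity(galaxy)
e_blur = ellipticity(blurred)
print("ellipticity before kernel:", e_true)
print("ellipticity after kernel: ", e_blur)
```

For this toy model the unweighted ellipticity of the sheared, unconvolved galaxy is 2g/(1+g²), and the kernel dilutes it. A measurement method has to undo that dilution, plus the effects of pixelisation and noise, for every one of the billions of galaxies, which is where the CPU cost comes from.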