Computational Steering on Grids A survey of RealityGrid Peter V. Coveney


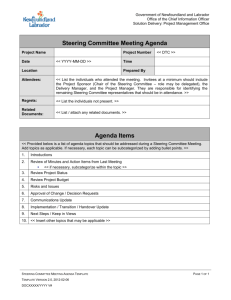

Peter V. Coveney, Centre for Computational Science, University College London

Talk contents
• Some RealityGrid science applications
• High performance “capability” computing
• Computational steering
• Using the UK Level 2 Grid

RealityGrid
[Figure: overview of RealityGrid components. Labels: “Instruments”: XMT devices, LUSI,…; Grid infrastructure (Globus, Unicore,…); HPC resources; Scalable MD, MC, mesoscale modelling; Performance control/monitoring; Storage devices; User with laptop/PDA (web based portal); Visualization engines; Steering; ReG steering API; VR and/or AG nodes]
Moving the bottleneck out of the hardware and into the human mind…

RealityGrid: Goals
• Use grid technology to closely couple high performance computing, high performance visualization and high throughput experiment by means of computational steering.
• Molecular and mesoscale condensed matter simulation in the terascale regime.
• Deployment of component based middleware and performance control for optimal utilisation of dynamically varying grid resources.
• Contribute to global grid standards via the GGF for benefit of RealityGrid and general modelling and simulation community.
• Operate in a robust grid environment.

Mesoscale Simulations: Lattice-Boltzmann methods
• Coarse-grained lattice gas automaton. Continuum fluid dynamicists see it as a numerical solver for the BGK approximation to Boltzmann’s equation.
• The dynamics relaxes mass densities to an equilibrium state, conserving mass and momentum (given infinite machine precision).
  – Densities at each lattice node are altered during the “collision” (relaxation) step.
  – Judicious choice of equilibrium state.
  – Relaxation time (viscosity) is an adjustable parameter.
• Computer codes are simple, algorithmically efficient and readily parallelisable, but numerical stability is a serious problem.

Three dimensional Lattice-Boltzmann simulations
• Code (LB3D) written in Fortran90 and parallelized using MPI.
• Scales linearly on all available resources.
• Fully steerable.
• Future plans include a move to the parallel data format PHDF5.
• Data produced during a single large scale simulation can exceed hundreds of gigabytes or even terabytes.
• Simulations require supercomputers.
• High end visualization hardware and parallel rendering software (e.g. VTK) are needed for data analysis.
[Figure: 3D datasets showing snapshots from a simulation of spinodal decomposition: a binary mixture of water and oil phase separates. ‘Blue’ areas denote high water densities and ‘red’ visualizes the interface between both fluids.]

Large Scale Molecular Dynamics
• Molecules are modelled as particles moving according to Newton’s equations of motion with real atomistic interactions.
• Simulated systems are LARGE: 30,000-300,000 atoms.
• Interaction potentials: Lennard-Jones and Coulombic interactions, harmonic bond potentials, constraints on atoms, etc.
• We use scalable codes: LAMMPS (Large-scale Atomic/Molecular Massively Parallel Simulator) and NAMD.

MHC-peptide complexes
[Figure: ribbon representation of the HLA-A*0201:MAGE-A4 complex.]

TCR-peptide-MHC complex
• Peptide-MHC binding is just like the binding of drugs to other receptors.
• We can use the molecular dynamics (MD) simulation method to examine and model the MHC-peptide interaction.
Garcia, K.C. et al. (1998). Science 279, 1166-1172.

Big iron currently used by us
• CSAR: CRAY T3E; Origin3800; Altix (available in October 2003, 256 CPU machine)
• HPCx UK terascale facility (DL/EPCC): IBM Power 4, initially 1280 processors, 3.24 Teraflops.
• Pittsburgh Supercomputing Center Lemieux: HP Alphaserver Cluster comprising 750 4-processor compute nodes

MHC-peptide complexes: Simulation models
... for the 58,825 atom model (whole model), we can perform a 1 ns simulation in 17 hours' wall clock time on 256 processors of a Cray T3E using LAMMPS.
Wan S., Coveney P. V., Flower D. R., preprint (2003).

MHC-peptide complexes: Conclusions
• For the 58,825 atom system, a 1 ns simulation can be performed in 17 hours' wall clock time on 256 processors of a Cray T3E.
• More accurate results are obtained by simulating the whole complex than just a part of it.
• The α3 and β2m domains have a significant influence on the structural and dynamical features of the complex, which is very important for determining the binding efficiencies of epitopes.
We are now doing TCR-peptide-MHC simulations (ca. 100,000 atom model) using NAMD.

TCR-peptide-MHC complex: Simulation models
[Figure labels: SGI Origin 3800 ‘Green’; Alpha based Linux Cluster ‘LeMieux’]
... we can perform 1 ns simulation in 16 hours' wall clock time on 256 processors of an SGI Origin 3800 using NAMD. The Alpha cluster is about two times faster.

TCR-peptide-MHC complex: First results with HPCx
[Figure labels: HPCx; Alpha based Linux Cluster ‘LeMieux’]
... 1 ns simulation in < 8 hours' wall clock time on 256 processors of HPCx using NAMD, which is faster than LeMieux.

High Performance Computing Benchmarks: HPCx versus LeMieux
[Figure labels: HPCx; Alpha based Linux Cluster ‘LeMieux’]
• NAMD gets bronze star rating
• 100,000 atom MD simulation on 256 processors of HPCx

Capability Computing
What is “Capability Computing”?
– “Uses more than half of the available resources (CPUs, memory,…) on a single supercomputer for one job.”
– This requires ‘draining’ the machine’s queues in order to make the needed resources available.
Examples inside RealityGrid
– LB3D on CSAR’s SGI Origin 3800 using up to 504 CPUs
– LB3D on HPCx using up to 1024 CPUs
– NAMD on LeMieux @ PSC using 2048 CPUs
– NAMD on CSAR’s SGI Origin 3800 using up to 256 CPUs
LB3D gets gold star rating for 1024³ simulations on 1024 processors of HPCx.

Capability Computing: Storage requirements
NAMD (TCR-peptide-MHC system, 96,796 atoms, 1ns):
– Trajectory data: 1.1GB
– Checkpointing files: 4.5MB each, write every 50ps.
– Total (including input files and data from analysis): 1.4GB
LB3D (512x512x512 system, 5,000 timesteps, porous media):
– XMT sandstone data: 1.7GB
– Single dataset: 0.5-1.5GB, up to 7.5GB in total per measurement.
– Checkpointing files: 60GB
– Total (measure every 50 timesteps, checkpoint every 500 timesteps): 100 x 7.5GB + 10 x 60GB + XMT data: 1.5TB
Need terabyte storage facilities!

Capability Computing: Visualization requirements
• We use the Visualization Toolkit (VTK), IRIS Explorer and AVS for parallel volume rendering and isosurfacing of 3D datasets.
• Large scale simulations require specialised hardware like our SGI Onyx2 because
  – data files can be huge, i.e. upwards of tens of gigabytes each
  – isosurfaces can be very complicated.
[Figure: Self-assembly of the gyroid cubic mesophase by lattice-Boltzmann simulation. Nélido González-Segredo and Peter V. Coveney, preprint (2003)]
[Figure: Lattice-Boltzmann simulation of an oil-filled sandstone]

Computational Steering with LB3D
• All simulation parameters are steerable using the ReG steering library.
• Checkpointing/restarting functionality allows ‘rewinding’ of simulations and run time job migration across architectures.
• Steering reduces storage requirements because the user can adapt data dumping frequencies.
• CPU time can be saved because users do not have to wait for jobs to finish if they can already see that nothing relevant is happening.
• Instead of doing “task farming”, i.e. launching many simulations at the same time, parameter searches can be done by “steering” through parameter space.
• Analysis time is significantly reduced because less irrelevant data is produced.

A typical steered LB3D simulation
[Figure panels:]
• Initial condition: random water/surfactant mixture.
• Self-assembly starts.
• Cubic micellar phase, high surfactant density gradient.
• Cubic micellar phase, low surfactant density gradient.
• Rewind and restart from checkpoint.
• Lamellar phase: surfactant bilayers between water layers.
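
The steering workflow described on the two slides above (change parameters in flight, adapt the dump frequency, rewind to a checkpoint and restart) can be illustrated with a small toy loop. This is only a sketch under stated assumptions: `ToySimulation`, `run`, `demo`, the `coupling` parameter and the command format are all hypothetical names invented for illustration. It is not LB3D and does not use the ReG steering API, whose actual calls are not shown in this talk.

```python
from dataclasses import dataclass, field


@dataclass
class ToySimulation:
    """Toy stand-in for a steerable code; NOT LB3D and NOT the ReG steering API."""
    coupling: float = 1.0   # hypothetical physical parameter, steerable
    dump_every: int = 50    # output frequency in timesteps, steerable
    step: int = 0
    state: float = 0.0
    dumps: list = field(default_factory=list)        # (step, state) pairs written so far
    checkpoints: dict = field(default_factory=dict)  # step -> saved snapshot

    def advance(self):
        """One timestep of the toy 'physics', with output at the current dump frequency."""
        self.step += 1
        self.state += self.coupling
        if self.step % self.dump_every == 0:
            self.dumps.append((self.step, self.state))

    def checkpoint(self):
        """Save everything needed to restart from the current step."""
        self.checkpoints[self.step] = (self.state, self.coupling, self.dump_every)

    def rewind(self, step):
        """Restore a checkpoint and discard output produced after it."""
        self.state, self.coupling, self.dump_every = self.checkpoints[step]
        self.step = step
        self.dumps = [d for d in self.dumps if d[0] <= step]


def run(sim, n_ticks, commands, checkpoint_every=100):
    """Advance the simulation, applying steering commands keyed by loop iteration."""
    for tick in range(n_ticks):
        for name, value in commands.get(tick, []):
            if name == "rewind":
                sim.rewind(value)
            else:
                setattr(sim, name, value)  # change a steerable parameter in flight
        if sim.step % checkpoint_every == 0:
            sim.checkpoint()
        sim.advance()
    return sim


def demo():
    # At loop iteration 150 the user rewinds to the step-100 checkpoint,
    # halves the coupling and doubles the output frequency.
    commands = {150: [("rewind", 100), ("coupling", 0.5), ("dump_every", 25)]}
    return run(ToySimulation(), 250, commands)
```

Calling `demo()` runs 250 loop iterations but ends at timestep 200, because 50 steps were discarded by the rewind. Commands are keyed by loop iteration rather than by simulation timestep so that a rewind cannot retrigger itself.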
Progress on short term goals
• We are grid-based (GT2, Unicore, GT3…)
• Capable of distributed and heterogeneous operation
• Do not require wholesale commitment to a single framework
• Fault tolerant (we can close down, move, and re-start either the simulation or the visualisation without disrupting the other)
• We have a steering API and library that insulates the application from details of implementation
• We do steering in an OGSA framework
• We have learned how to expose steering controls through OGSA services

“Fast Track” Steering Demo
[Figure: demo architecture. Labels: Bezier, SGI Onyx @ Manchester (Vtk + VizServer); OpenGL VizServer; Firewall; UNICORE Gateway and NJS, Manchester; Laptop, SHU Conference Centre; Simulation Data; Dirac, SGI Onyx @ QMUL (LB3D with RealityGrid Steering API); UNICORE Gateway and NJS, QMUL; Steering (XML)]
• VizServer client
• Steering GUI
• The Mind Electric GLUE web service hosting environment with OGSA extensions
• Single sign-on using UK eScience digital certificates

Progress on long term goals
• Steering is timely for scientific research using capability computing.
• Need to make steering capabilities genuinely useful for scientists: value added quantified. See Contemporary Physics article.
• Many codes have now been interfaced to the RealityGrid steering library: LB3D, NAMD, Oxford’s Monte Carlo code, Loughborough’s MD code, and Edinburgh’s Lattice-Boltzmann code.
• Moving to component architecture & incorporating performance control capabilities (“deep track” timeline)
  – checkpoint/restart/migration now available for LB3D
• Web based portal development (EPCC): steering in a web environment.
• HCI recommendations inform ultimate steering GUIs

Deploying applications on a persistent grid: The “Level 2 Grid”
• The components of this Grid are the computing and data resources contributed by the UK e-Science Centres linked through the SuperJanet4 backbone, regional and metropolitan area networks.
• Many of the infrastructure services available on this Grid are provided by Globus software.
• A national Grid directory service links the information servers operated at each site and enables tasks to call on resources at any of the e-Science Centres.
• The Grid operates a security infrastructure based on X.509 certificates issued by the e-Science Certificate Authority at the UK Grid Support Centre at CLRC.
• In contrast to other Grid projects (like DataGrid), L2G resources are highly heterogeneous.

Examples of currently available resources on the “Level 2 Grid”
Compute resources:
– Various Linux clusters
– Various SGI Origin machines
– Various SUN clusters
– HPCx & CSAR resources
– And many others
Visualization resources:
– Our local SGI Onyx2 is currently the only L2G resource which is not based at an e-science centre.
(… and of course ESNW’s famous Sony Playstation.)

RealityGrid-L2: LB3D on the L2G
[Figure: deployment architecture. Labels: Visualization, SGI Onyx (Vtk + VizServer); OpenGL VizServer; Simulation Data via GLOBUS-IO; Simulation, LB3D with RealityGrid Steering API (Fortran excerpt: program lbe, use lbe_init_module, use lbe_steer_module, use lbe_invasion_module); Laptop with VizServer client and ReG steering GUI; GLOBUS used to launch jobs]

The Level 2 Grid: First experiences from a user’s point of view
In principle, use of the L2G is attractive for application scientists because there are many resources available which can be accessed in a similar manner using GLOBUS.
But today one has to be very enthusiastic to use it for daily production work, because:
– It is not trivial to get started, as the available documentation does not answer the users’ questions properly. For example:
  • Which resources are actually available?
  • How can I access these resources in the correct way (queues, logins,…)?
  • How do I get sysadmins to sort out firewall problems?
– Support is limited because most sysadmins seem not to have extensive/favourable experience with GLOBUS.
– So far, most people involved are computer scientists who are more interested in the technology than in the usability of the grid.
“As you run into bumps in the road, remember that you are a Grid pioneer. Do not expect all the roads to be paved. (Do not expect roads.) Grids do not yet run smoothly.”
From the Globus Quickstart Guide

Summary
Fast track
– Steering capabilities deployed in several RealityGrid codes.
– We work with Unicore and Globus Toolkit 2; GT 3 forthcoming
– Capability computing possible via L2G
Deep track
– Checkpoint/restart and migration
– General performance control
– Componentisation via ICENI framework
– GGF standards for advanced reservation/co-allocation of resources

RealityGrid and the UK/USA TeraGrid Project
• Linking US Extended Terascale Facilities and UK HPC resources via a Trans-Atlantic Grid
• We plan to use these combined resources as the basis for an exciting project: to perform scientific research on a hitherto unprecedented scale
• Computational steering, spawning and migrating of massive simulations for the study of defect dynamics in gyroid cubic mesophases
• Visualisation output will be streamed to distributed collaborating sites via the Access Grid
• For that, we must operate in a robust L2G grid environment
• At Supercomputing, Phoenix, USA, November 2003