Specifying Scientific Applications by Means of Scientific Dataflow Eric Simon


eric.simon@inria.fr

1

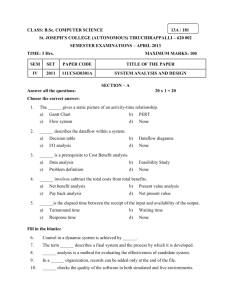

Mediation between three actors

[Figure: end users (predictions), service providers (WasteTransp, OceanCirc), and data providers (bathymetry, globalcurrents, wind, emissions).]


User needs

Scientists want to share data

A huge amount of « raw » data

But the most valuable data are « derived »

Scientists want to share programs (or services)

Use someone else’s program

Assemble a whole « data processing chain »


Issues commonly addressed

Data integration

Define metadata standards

Publish metadata associated with shared data sets

Provide location and data access services based on metadata

Typical: metadata catalogs

Interoperability

Adopt a middleware infrastructure

Make individual programs or services accessible through them

Typical: web services


Problems

Solving the data integration problem is not enough

Need to understand the « lineage » of derived data

Need to compute derived data on demand

Assuring the interoperability of services is not enough

Need to easily compose services

Need to transparently monitor their execution

Self-organizing communities

Scientists are autonomous: no central authority

Authorship and confidentiality of resources must be preserved


Key concept: scientific dataflow

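[Figure: the same three actors linked by a scientific dataflow: OceanCirc computes Localcurrents from bathymetry and globalcurrents; WasteTransp combines Localcurrents with wind and emissions to produce predictions for the end users; data providers supply bathymetry, globalcurrents, wind, and emissions.]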


Overview of the proposed approach

Data integration

Virtual data integration approach, a.k.a. mediation

Data sources are published through wrappers

Wrapped data are mapped to a mediation schema

Service integration

Programs are published through wrappers

Wrapped programs can be assembled through dataflow


Mediation database schema

Virtual database defined by the members of a community

Quite often: a metadatabase that describes the properties of shared data sets

Example of a (relational) mediation schema:

Bathymetry (Id, Coord, Provider, GridRes, Map)

Globalcurrents (Id, Coord, Provider, GridRes, Map)

Provider_nomenclature (reference, code, description)
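As a minimal sketch, the example mediation schema can be written down as SQL DDL and loaded into an in-memory SQLite database with Python's standard sqlite3 module. In the approach described here the mediation schema is virtual, so materialised tables are only an illustration; the column types and the '(x1,y1);(x2,y2)' encoding of Coord are assumptions, since the slide gives attribute names only.

```python
import sqlite3

# Sketch only: the mediation schema is virtual in this approach;
# materialising it as SQLite tables just makes the relations concrete.
# Column types are assumptions (the slide lists attribute names only).
MEDIATION_SCHEMA = """
CREATE TABLE Bathymetry (
    Id       TEXT PRIMARY KEY,
    Coord    TEXT,   -- rectangle encoded as '(x1,y1);(x2,y2)' (assumed)
    Provider TEXT,   -- provider code, see Provider_nomenclature (assumed)
    GridRes  REAL,
    Map      BLOB
);
CREATE TABLE Globalcurrents (
    Id       TEXT PRIMARY KEY,
    Coord    TEXT,
    Provider TEXT,
    GridRes  REAL,
    Map      BLOB
);
CREATE TABLE Provider_nomenclature (
    reference   TEXT,
    code        TEXT PRIMARY KEY,
    description TEXT
);
"""

def create_mediation_schema() -> sqlite3.Connection:
    """Create the three relations of the example schema in memory."""
    con = sqlite3.connect(":memory:")
    con.executescript(MEDIATION_SCHEMA)
    return con

if __name__ == "__main__":
    con = create_mediation_schema()
    for (name,) in con.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"):
        print(name)
```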


Publishing data

Bathymetry (Id, Coord, Provider, GridRes, Map)

is defined by a mapping, as a view, over the source table Bathym:

Id         | Coord           | Author | MAP
bathy00100 | (x1,y1);(x2,y2) | HRW    | blob
bathy00101 | (x3,y3);(x4,y4) | HRW    | blob

Bathym is produced by a wrapper over files, e.g. the file bathy00100 stored under Bathymmap/(x1,y1);(x2,y2)/HRW/:

155200.0 4940480.0 -500.0
155250.0 4940500.0 -510.0
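The publication path (files, then a wrapper producing the source table Bathym, then a view onto Bathymetry) can be sketched with sqlite3 as follows. This is an illustration under assumptions, not the system's wrapper: the helper names parse_bathymetry_file and build_source_and_view are hypothetical, the third number on each file line is treated as an opaque value, and because Bathym carries no grid resolution the view fills GridRes with NULL.

```python
import sqlite3

# Hypothetical file content following the sample lines on the slide:
# three numbers per line; the meaning of the third one is not assumed.
SAMPLE_FILE = """155200.0 4940480.0 -500.0
155250.0 4940500.0 -510.0
"""

def parse_bathymetry_file(text: str) -> list[tuple[float, float, float]]:
    """Wrapper step: turn a raw bathymetry file into a list of triples."""
    points = []
    for line in text.splitlines():
        if line.strip():
            x, y, z = (float(v) for v in line.split())
            points.append((x, y, z))
    return points

def build_source_and_view(files: dict[str, tuple[str, str, str]]) -> sqlite3.Connection:
    """files maps an Id to (coord, author, raw file text)."""
    con = sqlite3.connect(":memory:")
    # Source table exposed by the wrapper (columns as on the slide).
    con.execute("CREATE TABLE Bathym (Id TEXT, Coord TEXT, Author TEXT, MAP BLOB)")
    for file_id, (coord, author, text) in files.items():
        parse_bathymetry_file(text)  # validate the format; raises on bad lines
        con.execute("INSERT INTO Bathym VALUES (?, ?, ?, ?)",
                    (file_id, coord, author, text.encode()))
    # Mapping as a view: Author becomes Provider; GridRes is unknown
    # in the source, so it is NULL here (assumption of this sketch).
    con.execute("""CREATE VIEW Bathymetry AS
                   SELECT Id, Coord, Author AS Provider,
                          NULL AS GridRes, MAP AS Map
                   FROM Bathym""")
    return con
```

For example, build_source_and_view({"bathy00100": ("(x1,y1);(x2,y2)", "HRW", SAMPLE_FILE)}) yields one Bathymetry row whose Provider is 'HRW', renamed from Author by the view.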


Publishing functions

Functions are published as « table functions »

Overlay (Rect1, Rect2, Result): source table with a restricted access pattern (Rect1 and Rect2 must be supplied), produced by a wrapper over:

Function overlay (rect1: (point,point); rect2: (point,point)): boolean

determines whether a rectangle overlaps another rectangle
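A possible reading of the overlay function and its table-function view, sketched in Python. Assumptions: rectangles are axis-aligned and given by two opposite corners, "overlaps" is taken to mean "intersects" (touching edges count), and the restricted access pattern is modelled by refusing calls where Rect1 or Rect2 is missing; overlay_table is a hypothetical name.

```python
from typing import Iterator, Optional

Point = tuple[float, float]
Rect = tuple[Point, Point]  # two opposite corners, as in (point, point)

def overlay(rect1: Rect, rect2: Rect) -> bool:
    """True if two axis-aligned rectangles intersect (touching edges count)."""
    (ax1, ay1), (ax2, ay2) = rect1
    (bx1, by1), (bx2, by2) = rect2
    # Normalise corners so that min <= max on each axis.
    a_xmin, a_xmax = sorted((ax1, ax2))
    a_ymin, a_ymax = sorted((ay1, ay2))
    b_xmin, b_xmax = sorted((bx1, bx2))
    b_ymin, b_ymax = sorted((by1, by2))
    return (a_xmin <= b_xmax and b_xmin <= a_xmax and
            a_ymin <= b_ymax and b_ymin <= a_ymax)

def overlay_table(rect1: Optional[Rect],
                  rect2: Optional[Rect]) -> Iterator[tuple[Rect, Rect, bool]]:
    """Table-function view Overlay(Rect1, Rect2, Result).

    Restricted access pattern: the table cannot be scanned, only probed
    with both Rect1 and Rect2 bound; it then yields exactly one row.
    """
    if rect1 is None or rect2 is None:
        raise ValueError("Overlay requires Rect1 and Rect2 to be bound")
    yield (rect1, rect2, overlay(rect1, rect2))
```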


Publishing programs

Published interface of OceanCirc (through its wrapper):

input 1: [map_bathym: blob];
input 2: [map_global_currents: blob];
grid_Res: double precision
local_currents (output): [Id, coord, gridRes, map_local_currents]

Underlying program:

Program OceanCirc ({bathym files}, {global_currents files}, grid_Res: double precision): {local_currents files}

a mathematical model that computes local currents from global currents and bathymetry
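The role of the wrapper, which turns a file-based program into something that consumes and produces tuples, can be sketched as below. Everything here is hypothetical: wrap_program and fake_ocean_circ are illustrative names, the stand-in model merely concatenates its inputs instead of computing currents, and the coord value of the output rows is supplied by the caller because the slide does not say where it comes from.

```python
import tempfile
from pathlib import Path
from typing import Callable

# A file-based program: reads input files, writes output files into out_dir.
FileProgram = Callable[[list[Path], list[Path], float, Path], None]

def _write(directory: Path, prefix: str, blobs: list[bytes]) -> list[Path]:
    """Write each blob to its own file and return the file paths."""
    paths = []
    for i, blob in enumerate(blobs):
        path = directory / f"{prefix}{i}.dat"
        path.write_bytes(blob)
        paths.append(path)
    return paths

def wrap_program(program: FileProgram):
    """Expose a file-based program as a function over blobs and tuples."""
    def wrapped(bathym_maps: list[bytes], gcurr_maps: list[bytes],
                grid_res: float, coord: str) -> list[tuple[str, str, float, bytes]]:
        with tempfile.TemporaryDirectory() as tmp:
            in_dir, out_dir = Path(tmp) / "in", Path(tmp) / "out"
            in_dir.mkdir()
            out_dir.mkdir()
            # Blobs -> files, the format the program expects.
            b_files = _write(in_dir, "bathym", bathym_maps)
            g_files = _write(in_dir, "gcurr", gcurr_maps)
            program(b_files, g_files, grid_res, out_dir)
            # Files -> rows [Id, coord, gridRes, map_local_currents].
            return [(f.stem, coord, grid_res, f.read_bytes())
                    for f in sorted(out_dir.iterdir())]
    return wrapped

def fake_ocean_circ(b_files, g_files, grid_res, out_dir):
    """Toy stand-in, NOT a circulation model: concatenates its inputs."""
    data = b"".join(f.read_bytes() for f in b_files + g_files)
    (out_dir / "lc0.dat").write_bytes(data + f"|{grid_res}".encode())
```

With ocean_circ = wrap_program(fake_ocean_circ), the call ocean_circ([b"B"], [b"G"], 2.0, "(x1,y1);(x2,y2)") returns a single local_currents row; the temporary files never leave the wrapper.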


Abstract scientific dataflow

[Figure: abstract dataflow with inputs Input 1, Input 2, and Input 3; CircModel produces local_currents, which Waste transport combines with emissions and wind to produce prediction.]

Port types:

input 1: [map_bathym: blob];
input 2: [map_global_currents: blob];
local_currents: [map_local_currents: blob]
Emissions: [coord: point, date: date, type: string]
Wind: [coord1: point, coord2: point, wind_strength: float]
Prediction: [Id: int, Rect: rect, date: date, gridRes: float, map: blob]
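To make the typing of links concrete, the abstract dataflow can be modelled as units with typed ports and links that are checked for type equality. This Python sketch assumes that compatibility means identical attribute lists and that Waste transport has exactly the ports local_currents, emissions, and wind; the DFU and Dataflow classes are hypothetical.

```python
from dataclasses import dataclass, field

# Port types as (attribute, type) pairs, copied from the slide.
MAP_BATHYM = (("map_bathym", "blob"),)
MAP_GCURR = (("map_global_currents", "blob"),)
LOCAL_CURRENTS = (("map_local_currents", "blob"),)
EMISSIONS = (("coord", "point"), ("date", "date"), ("type", "string"))
WIND = (("coord1", "point"), ("coord2", "point"), ("wind_strength", "float"))
PREDICTION = (("Id", "int"), ("Rect", "rect"), ("date", "date"),
              ("gridRes", "float"), ("map", "blob"))

@dataclass
class DFU:
    """An abstract dataflow unit: named, typed input and output ports."""
    name: str
    inputs: dict
    outputs: dict

@dataclass
class Dataflow:
    units: dict = field(default_factory=dict)
    links: list = field(default_factory=list)  # (src, out_port, dst, in_port)

    def add(self, unit: DFU) -> None:
        self.units[unit.name] = unit

    def link(self, src: str, out_port: str, dst: str, in_port: str) -> None:
        """Connect two ports, refusing links whose types differ."""
        if self.units[src].outputs[out_port] != self.units[dst].inputs[in_port]:
            raise TypeError(f"{src}.{out_port} does not match {dst}.{in_port}")
        self.links.append((src, out_port, dst, in_port))

def circ_waste_dataflow() -> Dataflow:
    df = Dataflow()
    df.add(DFU("CircModel", {"input1": MAP_BATHYM, "input2": MAP_GCURR},
               {"local_currents": LOCAL_CURRENTS}))
    df.add(DFU("WasteTransport",
               {"local_currents": LOCAL_CURRENTS, "emissions": EMISSIONS,
                "wind": WIND},
               {"prediction": PREDICTION}))
    df.link("CircModel", "local_currents", "WasteTransport", "local_currents")
    return df
```

Linking CircModel's local_currents to the wind port instead raises a TypeError, since [map_local_currents: blob] differs from the Wind type.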


Encapsulated scientific dataflow

Encapsulation raises the level of abstraction of a dataflow: a whole dataflow can be reused as a single dataflow unit (dfu)

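[Figure: the CircModel / Waste transport dataflow (Input 1, Input 2, Input 3, local_currents, emissions, wind, prediction) encapsulated as a single unit dfu whose inputs are Input 1 and Input 2 and whose output is prediction.]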
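Encapsulation can be sketched as turning an internal chain of units into one callable that exposes only selected inputs and outputs. In this hypothetical Python version each unit maps a dict of inputs to a dict of outputs, internal values such as emissions are bound inside the encapsulated unit (an assumption suggested by the figure, where dfu exposes only Input 1 and Input 2), and circ_model and waste_transport are toy stand-ins, not the real models; wind is left out to keep the example short.

```python
from typing import Callable, Mapping, Optional, Sequence

# A unit maps a dict of named inputs to a dict of named outputs.
Unit = Callable[[Mapping[str, object]], dict]

def encapsulate(steps: Sequence[tuple[Unit, dict]], inputs: Sequence[str],
                outputs: Sequence[str],
                bound: Optional[Mapping[str, object]] = None) -> Unit:
    """Turn an internal dataflow into one unit.

    steps:   (unit, wiring) pairs in execution order; wiring maps each input
             port of the unit to a name in a shared environment.
    inputs:  names exposed as inputs of the encapsulated unit.
    outputs: names exposed as outputs; all other values stay hidden.
    bound:   values fixed inside the unit (hypothetical, e.g. emissions).
    """
    def composite(args: Mapping[str, object]) -> dict:
        env = dict(bound or {})
        env.update({name: args[name] for name in inputs})
        for unit, wiring in steps:
            # Each unit's outputs become visible to the following steps.
            env.update(unit({port: env[src] for port, src in wiring.items()}))
        return {name: env[name] for name in outputs}
    return composite

# Toy stand-ins (NOT the real models) to show the composition.
def circ_model(a):
    return {"local_currents": a["input1"] + a["input2"]}

def waste_transport(a):
    return {"prediction": a["local_currents"] * a["emissions"]}

dfu = encapsulate(
    steps=[(circ_model, {"input1": "input1", "input2": "input2"}),
           (waste_transport, {"local_currents": "local_currents",
                              "emissions": "emissions"})],
    inputs=["input1", "input2"],
    outputs=["prediction"],
    bound={"emissions": 3},
)
```

Here dfu({"input1": 1, "input2": 2}) returns {"prediction": 9}: local_currents is computed internally as 3 and never appears in the result.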


Iterative scientific dataflow

Encapsulation defines the scope of iteration

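[Figure: iterative dataflow in which an encapsulated dataflow of units dfu1 and dfu2 (ports e11, s11, e21, e22, s21, s21’, s22, connected by data links) is iterated (it) over the sequences seq and seq’.]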
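One simple interpretation of iteration scoped by encapsulation is a per-element map: the encapsulated unit runs once for each element of a finite input sequence seq, and its outputs form seq'. The sketch below assumes that reading; it does not model any feedback between iterations, and iterate and scale are hypothetical names.

```python
from typing import Callable, Mapping, Sequence

# A unit maps a dict of named inputs to a dict of named outputs.
Unit = Callable[[Mapping[str, object]], dict]

def iterate(unit: Unit, item_port: str,
            fixed: Mapping[str, object]) -> Callable[[Sequence[object]], list]:
    """Apply an encapsulated unit once per element of a finite sequence.

    Each element of seq is fed to item_port while the other inputs stay
    fixed; the outputs, in input order, form seq'.
    """
    def run(seq: Sequence[object]) -> list:
        return [unit({**fixed, item_port: item}) for item in seq]
    return run

def scale(a):  # toy encapsulated unit, for illustration only
    return {"s": a["e"] * a["k"]}

run = iterate(scale, item_port="e", fixed={"k": 10})
```

run([1, 2, 3]) returns [{"s": 10}, {"s": 20}, {"s": 30}]. Because the input sequence is finite and each run is independent, the loop always terminates.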


A complex example

[Figure: a larger dataflow involving the data and units TCSI, TCSIold, LUPnew, LUPold, LUPupd, LUPpast, intID, intLUM, insID, and insLUM.]


Benefits of abstract scientific dataflow

Describe the lineage of derived data (additional text documentation can be attached)

Provide the means to build new services by assembling existing services

Not a scientific programming language!

Data structures are limited to atoms, sets, and lists

Expressiveness is deliberately limited to guarantee termination and determinism
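The restriction on data structures can be expressed as a recursive check. In this Python sketch the choice of atom types (booleans, numbers, strings, and bytes standing in for blobs) is an assumption; domain atoms such as point or date would have to be added to ATOM_TYPES.

```python
# Assumed atom types; bytes stands in for blobs.
ATOM_TYPES = (bool, int, float, str, bytes)

def is_dataflow_value(v) -> bool:
    """True if v is built only from atoms, sets, and lists."""
    if isinstance(v, ATOM_TYPES):
        return True
    if isinstance(v, (list, set, frozenset)):
        # Containers are allowed only if every element is itself valid.
        return all(is_dataflow_value(x) for x in v)
    return False  # dicts, None, arbitrary objects, ...
```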


A concrete dataflow

A concrete dataflow results from the instantiation of an abstract dataflow

Forward-instantiation

Provide input data to the entries of an abstract dataflow

• queries over the virtual DB and source tables

Associate abstract dataflow units with programs

• select a compatible program

• map the abstract dfu inputs to program inputs

• map the program outputs to abstract dfu outputs

Associate stores with results

• indicate where to store intermediate and final results

Instantiation can be done dynamically or statically
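The three instantiation steps for one unit can be captured in a small binding record. This is a hypothetical Python sketch: Binding, program_call, rename_outputs, and store_outputs are illustrative names, abstract inputs are represented by the query text that provides them, and unmapped program parameters fall back to defaults, as gridRes does in the OceanCirc example.

```python
from dataclasses import dataclass, field

@dataclass
class Binding:
    """Hypothetical record of how one abstract dfu is instantiated."""
    program: str                                  # published program
    input_map: dict                               # abstract input -> program input
    output_map: dict                              # program output -> abstract output
    defaults: dict = field(default_factory=dict)  # program parameter -> default
    stores: dict = field(default_factory=dict)    # abstract output -> store location

def program_call(binding: Binding, abstract_inputs: dict) -> dict:
    """Map the abstract dfu inputs to the program's arguments."""
    args = dict(binding.defaults)  # unmapped parameters keep their default
    for port, value in abstract_inputs.items():
        args[binding.input_map[port]] = value
    return args

def rename_outputs(binding: Binding, program_outputs: dict) -> dict:
    """Map the program outputs back to the abstract dfu outputs."""
    return {binding.output_map[p]: v for p, v in program_outputs.items()}

def store_outputs(binding: Binding, abstract_outputs: dict, storage: dict) -> None:
    """Write each result to the location the user associated with it."""
    for port, value in abstract_outputs.items():
        if port in binding.stores:
            storage[binding.stores[port]] = value
```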


Example of concrete dataflow

[Figure: the abstract dataflow of CircModel and Waste transport (Input 1, Input 2, Input 3, local_currents, emissions, wind, prediction); Input 1 is provided by the query below.]

« Retrieve all bathymetry maps of gridRes 2 produced by HRW that overlap the rectangle of coordinates (155200, 940480);(160000,4950000) »

Select map
from bathymetry as bathym,
     //www-inria.fr/GisWrapper/overlay as overlay
where overlay.Coord1 = '(155200, 940480);(160000,4950000)'
and overlay.Coord2 = bathym.Coord and overlay.Result = 'true'
and bathym.Provider = 'GB-HRW' and bathym.GridRes = 2
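The query can be tried out with SQLite by substituting a scalar user-defined function for the published overlay table function, a swapped-in technique, since SQLite has no notion of access patterns. The rows in bathymetry are invented for illustration, Coord uses an assumed '(x1,y1);(x2,y2)' text encoding, and the query rectangle is copied verbatim from the slide.

```python
import sqlite3

def parse_rect(s: str):
    """Parse '(x1, y1);(x2, y2)' into ((x1, y1), (x2, y2))."""
    corners = []
    for part in s.split(";"):
        x, y = part.strip().strip("()").split(",")
        corners.append((float(x), float(y)))
    return tuple(corners)

def overlay(rect1: str, rect2: str) -> str:
    """Scalar stand-in for the overlay function; returns 'true'/'false'."""
    (ax1, ay1), (ax2, ay2) = parse_rect(rect1)
    (bx1, by1), (bx2, by2) = parse_rect(rect2)
    hit = (min(ax1, ax2) <= max(bx1, bx2) and min(bx1, bx2) <= max(ax1, ax2)
           and min(ay1, ay2) <= max(by1, by2) and min(by1, by2) <= max(ay1, ay2))
    return "true" if hit else "false"

con = sqlite3.connect(":memory:")
con.create_function("overlay", 2, overlay)
con.execute("CREATE TABLE bathymetry "
            "(Id TEXT, Coord TEXT, Provider TEXT, GridRes REAL, map BLOB)")
con.executemany("INSERT INTO bathymetry VALUES (?, ?, ?, ?, ?)", [
    # Hypothetical rows, for illustration only.
    ("b1", "(155000, 4940000);(156000, 4941000)", "GB-HRW", 2, b"map-b1"),
    ("b2", "(170000, 4960000);(171000, 4961000)", "GB-HRW", 2, b"map-b2"),
    ("b3", "(155000, 4940000);(156000, 4941000)", "GB-HRW", 5, b"map-b3"),
])

QUERY = """
SELECT map FROM bathymetry AS bathym
WHERE overlay(?, bathym.Coord) = 'true'
  AND bathym.Provider = 'GB-HRW' AND bathym.GridRes = 2
"""
rows = con.execute(QUERY, ("(155200, 940480);(160000,4950000)",)).fetchall()
```

Only b1 is returned: b2 lies outside the rectangle and b3 has GridRes 5.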


Example continued

[Figure: the abstract unit CircModel is mapped to the published program OceanCirc: Input 1 and Input 2 are mapped to OceanCirc's Input 1 and Input 2, and OceanCirc's local_currents output is mapped back to local_currents.]

OceanCirc's gridRes parameter will receive a default value of ‘2’


Self-organizing communities

Peer to Peer mediator architecture

Each peer publishes data, programs, and scientific dataflows

Programs execute on their peer

Data are transferred between peers

Security and confidentiality

Access privileges to resources are defined on each peer

Security certificates can be assigned to peers

Each peer has access to all authorized peers and resources
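Per-peer access privileges can be sketched as a table, kept by each peer, listing which peers may use each resource it publishes. The Peer class and visible_resources function are hypothetical, peer names in the usage are invented, and security certificates are not modelled.

```python
from dataclasses import dataclass, field

@dataclass
class Peer:
    """A peer in the P2P mediator architecture (hypothetical model)."""
    name: str
    # resource name -> set of peer names allowed to use it
    privileges: dict = field(default_factory=dict)

    def publish(self, resource: str, allowed: set) -> None:
        self.privileges[resource] = set(allowed)

    def can_access(self, requester: str, resource: str) -> bool:
        # The decision is taken locally by the peer owning the resource.
        return requester in self.privileges.get(resource, set())

def visible_resources(requester: str, peers: list) -> list:
    """All (peer, resource) pairs the requester is authorised to use."""
    return sorted((p.name, r) for p in peers for r in p.privileges
                  if p.can_access(requester, r))
```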


Execution of concrete dataflow

The distributed execution of a concrete dataflow is monitored by the scientific dataflow engine

Each execution of a dataflow unit entails an asynchronous program-execution request to the mediator that publishes the program

The results of a dataflow unit are stored according to the user specification

A trace of execution is automatically generated and stored by the scientific dataflow engine
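A rough sketch of the engine's behaviour with Python's asyncio: each unit becomes a task that waits for its upstream units, sends an asynchronous execution request, records trace entries, and stores its result under a user-chosen key. request_execution is a hypothetical placeholder for the call to the publishing mediator, the peer name peerB in the usage is invented, and the dataflow is assumed to be acyclic.

```python
import asyncio

async def request_execution(mediator: str, program: str, inputs: dict) -> str:
    """Hypothetical stand-in for an asynchronous request to the mediator
    publishing `program`; it only echoes its arguments."""
    await asyncio.sleep(0)  # a real engine would wait on a remote job here
    args = ", ".join(f"{k}={v}" for k, v in sorted(inputs.items()))
    return f"{program}({args})"

async def run_dataflow(units: dict, store_as: dict, storage: dict,
                       trace: list) -> dict:
    """Execute a concrete dataflow.

    units:    name -> (mediator, program, {input_port: source}); a source is
              another unit's name or a literal input (e.g. a query).
    store_as: unit name -> storage key chosen by the user.
    Each unit starts as soon as its upstream units have finished.
    """
    tasks: dict = {}

    async def run_unit(name: str) -> str:
        mediator, program, wiring = units[name]
        inputs = {port: (await tasks[src]) if src in tasks else src
                  for port, src in wiring.items()}
        trace.append(("request", name, mediator))
        result = await request_execution(mediator, program, inputs)
        trace.append(("done", name, mediator))
        if name in store_as:
            storage[store_as[name]] = result
        return result

    # All tasks exist before any of them runs, so lookups in tasks succeed.
    for name in units:
        tasks[name] = asyncio.create_task(run_unit(name))
    results = await asyncio.gather(*tasks.values())
    return dict(zip(tasks, results))
```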


Example of dataflow execution

[Figure: the unit CircModel, with inputs Input 1 and Input 2 and output local_currents]

Query sent to the mediator:

job execute //www.HRW.com/ModelWrapper/OceanCirc

parameter gridRes = 2

input 1 is select map from bathymetry as bathym, …. ;

input 2 is select map from Global-currents as Gcurr, …. ;

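[Figure: the job is sent to the mediator publishing OceanCirc, which queries the Bathym and GCurrents wrappers through their mediators, receives the data, executes OceanCirc through its wrapper (exec), and produces LCurrents.]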


Project status

Research & development

Scientific dataflow language

System architecture to define, instantiate, and execute dataflow

Mediator to publish data, functions, and programs

Operational prototype

Experience

First experiments within the Thetis (98-00) and Decair (99-02) European projects

Ongoing projects with environmental and medical applications


Future work

Research & Development

Graphical user interface to define and instantiate dataflow

Optimisation methods to process concrete dataflow

Support for explanation of results

Location of resources in a large-scale network of peers (joint work with University of Paris 6)
