Testing for scalability in a Grid Resource Usage Service

John D. Ainsworth, John M. Brooke

Manchester Computing, The University of Manchester

{john.ainsworth, john.brooke}@manchester.ac.uk

Abstract

The increased availability of web service implementations of core Grid functions has yet to be matched by a corresponding increase in their deployment. This is partly because they remain largely untested for their ability to scale to the levels achieved by the pre-Web Service Grid mechanisms. Tests of scalability are a prerequisite to the deployment of services in working production Grids. We describe the testing for scalability of a Resource Usage Service (RUS), which is based on two emerging Global Grid Forum (GGF) standards and is implemented using Web Services technology. This work has been undertaken within the UK e-Science project Markets for Computational Services. It can be considered as a test case for the methodology involved in taking the software produced in experimental projects and evaluating its usage in realistic deployments. For our Resource Usage Service, the target is to scale the service to the levels likely to be required by the UK National Grid Service (NGS).

1. Introduction

In a previous paper [1] (hereafter Paper 1) we described the development of a Resource Usage Service (RUS) to record usage of capacity resources, principally CPU, which implements as far as possible the proposed specification from the GGF Resource Usage Service working group [5]. Such standards compliance is important, but it is also important to show that proposed standards-based specifications can be realised in working software that has the scaling properties needed by Grids intended for productive scientific usage.

The Markets for Computational Services (MCS) project [2], funded as part of the UK e-Science programme, aims to provide services that enable Grids to be built on economic principles. The MCS services are composable, meaning that they can be used in a stand-alone manner or can be combined to enable functionality beyond the range of a particular service. The ability of services to be composed in this manner will be a key test of the utility of the Service Oriented Approach to Grid computing. It was very hard to pick apart the different functions of early Grid systems such as GT2 and Unicore; consequently, one had to buy into the whole system rather than compose services in a flexible manner.

We describe how one of the component services in the MCS project, the RUS, has been tested for scalability to levels appropriate for usage on the UK National Grid Service. The methodology of the scaling experiments has wider ramifications: we believe that testing of this kind is essential to demonstrate that a service can scale to the required levels before it is deployed in a production Grid. In section 2 we briefly describe the components of the MCS and locate the functionality of the RUS within this framework. In section 3 we discuss the implementation of the RUS, and in section 4 we describe the testing methodology and results. In section 5 we discuss conclusions and suggest future areas for research.

2. Role and functionality of the RUS

Most current Grids in the academic environment have resources which are provided for specific goals, and participants are allowed access to the resources of the Grid if they are part of the teams working on these specific goals. Examples are the various Grids for particle physics, e.g. LCG and Open Science Grid, which are targeted towards very specific applications. Other, more general-purpose Grids exist, such as the UK National Grid Service, the TeraGrid in the US, and DEISA in Europe. In these Grids access is via peer-reviewed projects deemed to be of sufficient scientific value to merit the allocation of a given fraction of the Grid resources, usually in the form of a grant of CPU time and disk quota. However, these Grids are not economically self-sustaining. They are funded via some grant process, and without new grants of money they cannot grow and accumulate increased resources as their usage increases. They will therefore eventually become overused unless usage is limited via the granting of resource allocations to projects.

The concept of a market for computational resources has as one of its principal aims the provision of an economic mechanism for self-sustainable and expansible Grids. In this model usage is paid for in some way, and as usage expands, new resources can be added by the owners or managers of the Grid from this income. In this economic model there exists the potential for a natural feedback mechanism controlling the process of resource allocation according to the usage that groups or individuals make of the Grid. This clearly involves the ability to record usage of Grid resources and to trace it to those who have used those resources, so that they can be billed accordingly.

Another mechanism whereby resources on a Grid can grow is the federation of resources provided by different organisations. This is already happening in the UK National Grid Service (NGS) [13] as new sites are added via a partnering programme. One motivation for federating in this manner is to gain access to a wider range of resources than is possible at any one site. An example of this is the use of specialised computers which have the ability to do advanced rendering for scientific visualization. These require additional components (e.g. programmable graphics cards) that are not available on the average node in a Grid cluster. Sites that have such resources can trade them for CPU time at a favourable rate, which in turn provides their users with access to much greater quantities of basic compute resource. In this way the functionality available on the federated Grid is increased. Clearly this requires traceable and auditable recording of resource usage. It might be described as a barter economy as opposed to a payment-based economy. Of course these two models can and should co-exist: the monetary value of a unit of a resource type can be used to determine the quantities to be exchanged in a trade negotiation.
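As a worked illustration of this last point (the symbols and values are ours, not part of any MCS specification): if one hour of a specialised resource A has monetary value $v_A$ and one hour of basic CPU time has value $v_B$, then a trade of $q_A$ hours of A is balanced by $q_B$ hours of CPU time, where

```latex
q_B = q_A \, \frac{v_A}{v_B}
```

With hypothetical values $v_A = 10$ and $v_B = 2$ units per hour, 5 hours of rendering time would be exchanged for 25 CPU hours.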

We are thus led to a requirements specification for a Grid service that:

1. Records usage of given resources against a unique identifier that represents a user on the Grid (e.g. distinguished name).
2. Enables administrators and managers (either human or software) to update usage and to administer the storage of the records, e.g. via a database.
3. Enables clients representing users to query their usage.
4. Interacts with other services that provide other functionality to the computational market, e.g. billing services.

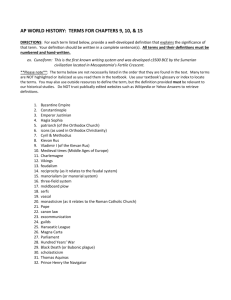

In Figure 1 we show the architecture used in MCS to create the infrastructure to support a market for computational services. The roles are divided into three main classes. Firstly, consumers, which are implemented by agents and viewers that allow consumers to access resources and check usage. Secondly, intermediary services that control access to the Grid resources and which can utilise the economic structure to provide quality of service (QoS). This is principally illustrated by the resource broker, which can obtain offers for client-specified QoS associated with differing costs of payment (for details of such brokers see [4]). Such brokers also play a major role in the electricity grid spot market, which allows the matching of supply and demand in a grid in which the utility is electrical power. Thirdly, we have services representing the providers: a quote service whereby the provider can interact with the broker, a job submission service whereby the user agent can submit jobs to the underlying service after a quote has been mutually agreed, and a Resource Usage Service (RUS) that can be used to make all usage auditable and traceable. Note that billing on a per-job basis can be the responsibility of another service, the payment service, which may or may not utilise the RUS to inform the billing process. Another possible intermediary component of an MCS (not present in this architecture) could be a Grid Bank, which could interact with the RUS as part of an independent checking process when authorising payment from users' Grid accounts.

Figure 1: The architecture of the MCS showing the interacting services and agents. (Roles: Consumer, Intermediary, Provider. Components: User Agent, Account Viewer, Resource Broker, Service Directory, Quote Service, Job Submission Service, Underlying Service, Payment Service, Resource Usage Service. Interactions: Request Quote, Check Resources, Lookup, Submit, Authorise, Complete, Record Usage, Retrieve Usage Records.)

3. Implementation of the RUS

Our implementation consists of a web service that implements the RUS interface and performs access control, and an underlying database which provides the storage and retrieval functionality, as shown in Figure 2. In Paper 1, we concentrated on the security and architectural implications of this method of constructing a RUS. In the present paper we consider how well the service can handle the levels of record insertion and management that are appropriate to the UK NGS. Note that in our implementation of the RUS we use an XML database. This is a natural and obvious choice for the Resource Usage Service, since the specifications of the RUS are in XML. However, we could implement these specifications with a relational database or with a database using efficient binary representations of data (as might be required of a web service for handling image-based data, for example). In this case the web service would need to translate the XML of the documents that it receives into these specialised formats, or it could enlist another service that allowed XML queries to be translated into queries in another format (for example via an OGSA-DAI interface). The decision between these different implementations must be made on criteria such as efficiency and fitness for purpose, which is why testing is so essential.

Figure 2: RUS Web Service. (Components: Web Service Container; Service Interface; RUS Web Service Application; Access control list; XMLDB API; XML Database.)

The RUS was implemented where possible in accordance with the draft specifications of the GGF RUS working group, which has defined an OGSI Resource Usage Service (RUS) [5] whose purpose is to store accounting information. As this is only a draft, it is incomplete; some areas are underspecified, ambiguous and open to interpretation. The RUS specification itself depends upon the Usage Record format specification [6], which is another draft GGF standard, albeit one which is nearer completion. This standard provides a common way of representing usage information for batch and interactive compute jobs in XML, independently of the system on which it was generated. This enables usage to be recorded in a homogeneous way from a set of heterogeneous resources. The Usage Record format has also been used in the accounting system developed as part of GridBank [3], but this does not use the RUS specification.

The most recent GGF specification of the RUS uses the OGSI to express the state of the record service. We deviated from this by writing a stateless RUS. This was for two reasons. Firstly, the OGSI was deprecated by major Grid projects such as Globus early in 2004 and therefore did not appear to constitute a long-term basis for service design. The second, more positive reason was that a simpler and more elegant design of a stateless RUS service could be employed by using the underlying database to provide persistence for state information.

The RUS web service is capable of receiving a request to insert multiple records. It handles a multiple-record insertion request by breaking it into atomic insert operations on the database. Should a failure of the database occur during the insert operation, the RUS web service will inform the client of the status of each record in the request. Should the RUS web service itself fail during the handling of the insert operation, the client has no knowledge of the status of individual records and must resend the entire insert request. The RUS web service handles this scenario by checking for the existence of a record in the database before inserting it, to avoid the creation of duplicates.
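The insertion behaviour just described can be sketched as follows. This is a minimal illustration, not the MCS code: usage records are modelled as Python dictionaries, an in-memory store stands in for Xindice, and all names are hypothetical. Following the duplicate test described in the results section, the sketch first compares a hash of the mandatory fields and only compares full records when the hashes match; SHA-1 is our arbitrary choice of hash.

```python
import hashlib
from dataclasses import dataclass, field

# The four elements this RUS requires in every record (records are dicts here).
MANDATORY_FIELDS = ("MachineName", "SubmitHost", "GlobalJobId", "X509SubjectName")


def record_hash(record: dict) -> str:
    """Hash the mandatory fields only: a cheap first test for duplicates."""
    key = "\x1f".join(str(record.get(name, "")) for name in MANDATORY_FIELDS)
    return hashlib.sha1(key.encode("utf-8")).hexdigest()


@dataclass
class InMemoryStore:
    """Hypothetical stand-in for the XML database, indexed by record hash."""
    by_hash: dict = field(default_factory=dict)

    def is_duplicate(self, record: dict) -> bool:
        candidates = self.by_hash.get(record_hash(record), [])
        # Only when hashes match is the slower full-record comparison needed.
        return any(candidate == record for candidate in candidates)

    def insert(self, record: dict) -> None:
        self.by_hash.setdefault(record_hash(record), []).append(dict(record))


def insert_records(store: InMemoryStore, records: list) -> list:
    """Insert each record as a separate operation and report its status."""
    statuses = []
    for record in records:
        try:
            if store.is_duplicate(record):
                # Already stored, e.g. because the client resent the batch.
                statuses.append("duplicate")
            else:
                store.insert(record)
                statuses.append("inserted")
        except Exception as exc:  # a storage failure affects this record only
            statuses.append(f"failed: {exc}")
    return statuses
```

Resending a batch after a service failure therefore yields `duplicate` for records stored before the failure and `inserted` for the rest, so no record is stored twice.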

The three fundamental functional requirements which drive the architecture of the Resource Usage Service are as follows. Firstly, it must provide persistent storage and retrieval of XML Usage Records conforming to the format defined by the GGF Usage Record Working Group. Secondly, it must implement the WSDL port-types defined in the GGF Resource Usage Service Specification. Finally, read and write access to the stored data must be restricted to ensure privacy and prevent fraud. In addition to these functional requirements, we added a performance requirement: that the RUS remains sufficiently responsive as the size of its database grows to a level commensurate with the Grid it is serving.

The Markets implementation of the RUS is in plain web services and has been deployed in the Sun Application Server container, which is part of J2EE. It does not depend on any Grid middleware, but does need WS-Security to be supported in the web service container, which we had to implement in J2EE.

We have chosen the Apache Xindice [7] XML database as our underlying database, which implements the XML:DB API [8]. An XML database was used as opposed to a conventional relational database because we are storing usage records that are XML documents, and so we can store them directly in XML format without the need to map them to a different database schema. The XML:DB API supports queries using XPath [9] and record modification using XUpdate [10]. These are both native XML technologies and eliminate the translation required with non-XML databases. The Xindice XML database is itself implemented as a web service and is hosted in the same container instance as the RUS web service application.

The Resource Usage Service Specification states that Usage Records should be stored in a single XML document whose root element is of the type rus:RUSUsageRecords, which contains multiple rus:RUSUsageRecord elements. This has not been implemented as described, for the following reason. The Xindice database is optimized for storing large numbers of small to medium sized XML documents. It does not handle single large documents well, and indeed its maximum document size is constrained to 5 MB. Therefore we have stored each rus:RUSUsageRecord as a separate document. This is viable with the Xindice database, as it allows XPath queries to span all documents in a collection.
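To illustrate the one-document-per-record layout, the sketch below uses Python's standard `xml.etree.ElementTree` in place of Xindice: a dictionary of small documents stands in for a collection, and `findall` (which supports only a limited subset of XPath) stands in for a collection-wide XPath query. The namespace URI and element layout are placeholders, not the Usage Record schema.

```python
import xml.etree.ElementTree as ET

# Placeholder namespace; the real Usage Record namespace is not assumed here.
UR = "urn:example:usage-record"
NS = {"ur": UR}


def make_record(job_id: str, machine: str) -> ET.Element:
    """Build one small usage-record document (hypothetical layout)."""
    root = ET.Element(f"{{{UR}}}UsageRecord")
    ET.SubElement(root, f"{{{UR}}}GlobalJobId").text = job_id
    ET.SubElement(root, f"{{{UR}}}MachineName").text = machine
    return root


def query_collection(collection: dict, path: str) -> list:
    """Evaluate one path expression against every document in a collection."""
    return [
        (doc_id, element.text)
        for doc_id, root in collection.items()
        for element in root.findall(path, NS)
    ]


# One small document per record, rather than one large RUSUsageRecords document.
collection = {
    f"doc{i}": make_record(f"job{i}", "csar" if i % 2 == 0 else "hpcx")
    for i in range(4)
}
```

For example, `query_collection(collection, "ur:MachineName")` returns one `(document id, machine name)` pair per stored record, mirroring how a single query spans the whole collection.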


The GGF RUS working group has not yet produced a WSDL description of the service interface, and so we have defined one based upon the Service Interface Definition given in the RUS specification. We have used the document-literal encoding for the SOAP messages. There are three fault types defined, namely RUSInputFault, RUSProcessingFault and RUSUserNotAuthorisedFault. The first is used when an operation is invoked with invalid input, for example a usage record that does not conform to the schema. The second is returned if the RUS encounters an internal error. The third is used when a user has no authorisation to access any of the operations of the RUS instance. For each operation we have tried to use the types defined in existing schemas where possible. For responses, we have defined our own types for OperationalResult and RUSIdList in accordance with the RUS specification. We have used JAX-RPC (1.1.1) [11] for compiling our WSDL to Java, with the databinding option switched on. However, due to the complexity of the urwg:UsageRecord format, complete translation to Java classes is not possible, and so those operations which pass urwg:UsageRecords pass them as a SOAPElement. The RUS then parses the SOAPElement and validates it against the schema to ensure that the usage record is valid.

The URWG schema specifies only two mandatory elements in a usage record, namely urwg:RecordIdentity and urwg:Status. Our implementation requires that usage records must also contain the elements urwg:MachineName, urwg:SubmitHost, urwg:GlobalJobId and ds:X509SubjectName from urwg:UserIdentity. If these elements are not present then the record is rejected. The rationale for this is that without these four fields there would be no way to trace which user or resource the record relates to. In future, the set of elements that are mandatory in this way will be a configurable option.
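A minimal sketch of this check, using Python's `xml.etree.ElementTree` rather than the Java SOAPElement handling of the actual service. The `ds` prefix is bound to the W3C XML Signature namespace; the Usage Record namespace URI is a placeholder, and because we do not assume the exact nesting of the draft schema, the sketch searches descendants (`.//`) rather than fixed paths. Only the subject name is required to lie inside `UserIdentity`; the function name is hypothetical.

```python
import xml.etree.ElementTree as ET

DS = "http://www.w3.org/2000/09/xmldsig#"  # W3C XML Signature namespace
UR = "urn:example:usage-record"  # placeholder for the URWG draft namespace
NS = {"ur": UR, "ds": DS}

# Elements required by this RUS beyond RecordIdentity and Status.
REQUIRED = ("ur:MachineName", "ur:SubmitHost", "ur:GlobalJobId")


def missing_fields(record_xml: str) -> list:
    """Return the required elements that are absent or empty in a record."""
    root = ET.fromstring(record_xml)
    missing = [
        path for path in REQUIRED
        if not (root.findtext(".//" + path, "", NS) or "").strip()
    ]
    # The subject name only counts when it appears inside UserIdentity.
    user = root.find(".//ur:UserIdentity", NS)
    subject = user.findtext(".//ds:X509SubjectName", "", NS) if user is not None else ""
    if not (subject or "").strip():
        missing.append("ds:X509SubjectName")
    return missing
```

A record for which `missing_fields` returns a non-empty list would be rejected, in the same way that the service rejects it with a fault.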

Figure 3: RUS Query Architecture. (The user's web browser connects over HTTPS to the RUS Query Servlet, which calls the RUS Web Service using SOAP XML RPC.)

As well as providing access to administrators and resource managers of the RUS, we wish users to be able to access information about their own usage. This could be done by extending the security model of the RUS to include a role of user. However, this is inelegant, and the fewer entities that can access the RUS, the more secure it is likely to be. We illustrate in Figure 3 our solution to this problem.
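The solution in Figure 3 relies on the Query Servlet appending a predicate that restricts any user-supplied XPath query to the authenticated user's records. A minimal Python sketch of that rewriting step follows (the servlet itself is Java). The function names, the binding of the `ds` prefix in the query context, and the choice of wrapping the user's expression in parentheses are our assumptions, not details of the MCS implementation. The parenthesis check is a minimal guard against a query escaping the wrapper, not a complete defence; a production servlet would parse the expression.

```python
def xpath_literal(value: str) -> str:
    """Quote a string as an XPath 1.0 literal (XPath 1.0 has no escapes)."""
    if "'" not in value:
        return f"'{value}'"
    if '"' not in value:
        return f'"{value}"'
    # Both quote kinds present: split on ' and rebuild with concat().
    pieces = [f"'{part}'" for part in value.split("'")]
    return "concat(" + ", \"'\", ".join(pieces) + ")"


def parens_balanced(expr: str) -> bool:
    """Reject expressions whose parentheses close below depth zero."""
    depth, quote = 0, None
    for ch in expr:
        if quote:
            if ch == quote:  # end of a string literal
                quote = None
        elif ch in "'\"":
            quote = ch
        elif ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
    return depth == 0 and quote is None


def restrict_to_user(user_query: str, subject_dn: str) -> str:
    """Return the user's XPath with a predicate limiting it to their DN."""
    if not parens_balanced(user_query):
        raise ValueError("query could escape the enclosing parentheses")
    # Parenthesising applies the predicate to the whole expression,
    # including unions such as "//a | //b".
    return f"({user_query})[.//ds:X509SubjectName = {xpath_literal(subject_dn)}]"
```

For example, `restrict_to_user("//ur:UsageRecord", "CN=Jane O'Neil")` yields `(//ur:UsageRecord)[.//ds:X509SubjectName = "CN=Jane O'Neil"]`.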

Users are able to access the query system through a web browser, using the SSL protocol for client authentication. A query form is presented to the user, which allows them to select a pre-defined query or enter their own XPath query. The form is sent to the Java Servlet, which appends an extra predicate to the XPath query to ensure that only the records corresponding to the authenticated user's usage can be returned. The Servlet sends the query to the RUS web service and returns the results to the user as an HTML page. The RUS Query Servlet must have permission to access the RUS as either an administrator or a resource manager. The alternative to this multi-tiered approach would be to append the extra predicate at the RUS itself whenever a query operation is invoked by an entity that is not known to the RUS through the access control list. This alternative has several disadvantages: the user must have a bespoke application capable of querying the RUS; intermediate processing of the query and responses is not possible; and the RUS is open to denial of service attacks, as anyone can make it execute a query. We have also successfully used the servlet-based approach to provide a web-based administration interface, which exposes all operations of the RUS.

Having implemented the RUS, we need to ensure that it can handle a sufficient number of records for a Grid of the size of the NGS, with four nodes of 64 to 128 processors and two HPC machines from CSAR and HPCx. We would like to get some idea of how the time to complete atomic operations such as insert, delete and update scales over this range. If the scaling is linear, then we can scale up our service by adding more processors, memory and disk storage to the server on which it is hosted. Thus we can scale the resources providing services to the Grid as the Grid itself scales. This is clearly a highly desirable property for an implementation of a Web Services standard.

4. Design of the scaling tests

The Resource Usage Service (RUS) is a web service which uses the Xindice XML database, itself also a web service, as its underlying persistent storage. Both web services were running in the same container for these tests. The RUS is secured using SSL, which is configured for mutual authentication. The container used in the tests was the Sun Application Server, which is available as part of J2EE; on both servers this was Sun Java System Application Server Platform Edition 8.0.0_01, and the XML database was Xindice 1.1b4. Two machines with different specifications were used to run the same test so that a performance comparison could be made. The specification of each of these machines is shown in Table 1.

Table 1: Test server specifications

|  | Server 1 | Server 2 |
|---|---|---|
| Processor | Intel 3.06 GHz | Intel 3.00 GHz |
| No. of processors | 2 | 1 |
| RAM | 4 GB | 0.5 GB |
| OS | RH Enterprise 3 | Fedora Core 1 |
| Disks | 2 x 120 GB | 1 x 25 GB |

Figure 4: Record insertion tests for Server 1 (full data). Average insertion time (s) against number of records (0 to 3,000,000), showing the data set and the linear fit y = 2E-08x + 0.0487.

The RUS was fed Usage Records, which had been produced by the CSAR [12] machines at Manchester, by a spooler written in Perl. Since CSAR is one of the HPC nodes on the NGS [13], this is an appropriate choice. A base set of 3000 records was used; the count of the number of iterations over this set was appended to the urwg:GlobalJobId, and a random string was appended to the ds:X509SubjectName. This guarantees that each record will be unique, and so will not be rejected by the RUS as a duplicate.
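The original spooler was written in Perl and is not reproduced here; the following Python sketch shows the same uniqueness scheme under our own assumptions (records as dictionaries, an 8-character random suffix, hypothetical function names). The `.` separator before the iteration count is our addition: without it, appending iteration 11 to `job1` and iteration 1 to `job11` would both give `job111`.

```python
import random
import string


def unique_variant(record: dict, iteration: int, rng: random.Random) -> dict:
    """Copy a base record, making GlobalJobId and X509SubjectName unique."""
    variant = dict(record)
    # The iteration count makes the job id unique across passes over the set.
    variant["GlobalJobId"] = f"{record['GlobalJobId']}.{iteration}"
    suffix = "".join(rng.choices(string.ascii_letters + string.digits, k=8))
    variant["X509SubjectName"] = record["X509SubjectName"] + suffix
    return variant


def spool(base_set: list, iterations: int, seed: int = 0):
    """Yield records by cycling over the base set, as in the insertion tests."""
    rng = random.Random(seed)
    for iteration in range(iterations):
        for record in base_set:
            yield unique_variant(record, iteration, rng)
```

With a base set of 3000 records, `spool(base_records, 1000)` would generate the 3 million distinct records used in the largest test.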

In Figure 4 we show the results of the test of the time to insert a record on the first server described in Table 1 (Server 1). The purpose of this test was to show how the average insertion time for a record changes as the database increases in size. The spooler was run and the insertion time was recorded at intervals of 1000 records. The two lines at the bottom represent the vast majority of the results. The values that can be seen to curve upwards, to insertion times of up to 1 second, are anomalous results which we explain below. The trend of the majority of the results can be seen more clearly in Figure 5. The dark line represents the linear fit, and the grey lines are composed of the individual times, which were only recorded to a granularity of 0.01 second. In Figure 5 it can be seen that the time for insertion increases linearly as the size of the database increases, linearity being maintained throughout the range up to 3 million records. For a database of 1 million records the insert time is 0.064 s, and for a database of 2 million records it is 0.071 s. This linear trend is highly encouraging, since it indicates that we could achieve a reasonable response time of order 0.1 seconds for databases of at least up to 5 million records.

Figure 5: Record insertion tests for Server 1 excluding anomalous data values. Average record insertion time (s) against number of records in database (0 to 3,000,000), showing the source data and the linear fit y = 7E-09x + 0.0565.

Scaling is approximately linear in this range. To put this in context, the CSAR HPC service, which is part of the UK NGS, produces at most 500 records per day. One can expect that including all nodes in the NGS may increase this by a factor of 10 (more usage would be expected of a general-purpose Grid than of a specialised HPC machine), but clearly the proposed database solution provides more than satisfactory performance and could scale to larger Grids. The CPU usage of the server during the test was 40-45%, and the memory usage grew from 9.3 GB to 14.0 GB as the number of records grew from 1.5 million to 2.6 million.

We now explain the anomalous values shown in Figure 4. The time for insertion remained below 0.1 s throughout the tests except in 0.03% of cases, where times of order 0.5 s were obtained. This is because, for efficiency, tests for duplicate records involve computing a hash function on the mandatory fields of the record. In these 0.03% of cases a record with an identical hash value already exists, and a second search then takes place on the full record. The percentage is sufficiently small for this to be unproblematic, but it shows how the performance of Web Services is affected by details of implementation.

To gain some idea of how the specification of the server hosting the database might affect the results, we also tested on Server 2 of Table 1, which has a lower specification appropriate to a desktop machine. The results are shown in Figures 6 and 7.

Figure 6: Insertion test results for Server 2 (full data). Average insertion time (s) against number of records (0 to 1,000,000), showing the data set and the linear fit y = 3E-07x + 0.0213.

Figure 7: Insertion test results for Server 2 excluding anomalously high values. Average insertion time (s) against number of records (0 to 1,000,000), showing the data series and the linear fit y = 2E-07x + 0.0504.

It can be seen that the trends in the results for Server 2 are very similar to those for Server 1. The vast majority of insertion times are of order 0.05 seconds, starting from 0.02 seconds and increasing linearly with database size (Figure 7). There is a small percentage of anomalous times of up to 5 seconds (Figure 6). The difference resulting from the specifications of the two servers is revealed by the slopes of the linear trends. For Server 1, with 4 GB of RAM, the slope was 2 × 10⁻⁸ s per record, while for Server 2, with 0.5 GB, the slope was 3 × 10⁻⁷ s per record. Thus for the machine with less memory the insertion time rises with database size 15 times as fast. In addition, for Server 2 the database crashed when 1 million records had been inserted. Since the processing speeds of the two servers are very similar, this indicates that large memory is the most important specification for hosting the database, which would be the expected result. The high CPU usage recorded (40-45%) also argues for the use of a dedicated server. We did not investigate the effects of moving the RUS service itself to a different machine from the database; this might further optimise memory usage.
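The fitted lines printed on Figures 4 to 7 can be evaluated directly to check the numbers quoted in this section. The sketch below simply encodes those printed coefficients (the slopes are shown to one significant figure on the plots, so results are approximate); the dictionary keys and function names are our own labels.

```python
# Linear fits as printed on Figures 4-7: t(n) = slope * n + intercept,
# with t in seconds and n the number of records already in the database.
FITS = {
    "fig4_server1_full": (2e-8, 0.0487),
    "fig5_server1_filtered": (7e-9, 0.0565),
    "fig6_server2_full": (3e-7, 0.0213),
    "fig7_server2_filtered": (2e-7, 0.0504),
}


def predicted_time(fit: str, n_records: float) -> float:
    """Average insertion time (s) predicted by one of the fitted lines."""
    slope, intercept = FITS[fit]
    return slope * n_records + intercept


def slope_ratio(fit_a: str, fit_b: str) -> float:
    """How many times faster insertion time grows under fit_a than fit_b."""
    return FITS[fit_a][0] / FITS[fit_b][0]


def days_to_reach(n_records: float, records_per_day: float) -> float:
    """Days of accumulation needed to reach a given database size."""
    return n_records / records_per_day


if __name__ == "__main__":
    for n in (1e6, 2e6, 5e6):
        print(f"{n:.0e} records: {predicted_time('fig5_server1_filtered', n):.4f} s")
    print(f"slope ratio: {slope_ratio('fig6_server2_full', 'fig4_server1_full'):.1f}")
    # CSAR's at most 500 records/day, times the factor of 10 assumed for the NGS.
    print(f"days to 5 million records: {days_to_reach(5e6, 500 * 10):.0f}")
```

Running it reproduces the 0.064 s and 0.071 s quoted for 1 and 2 million records (0.0635 s and 0.0705 s before rounding), predicts about 0.09 s at 5 million records, and gives the factor of 15 between the Server 2 and Server 1 slopes. At 5000 records per day, a database of 5 million records would take about 1000 days (roughly 2.7 years) to accumulate.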

We also simulated the effects of multiple clients accessing the service. The purpose of this test was to verify the operation of simultaneous readers and writers operating on the RUS. We have successfully used 10 insertion clients operating with 2 extraction clients and 2 deletion clients. The insertion clients are as used in the insertion time tests. The extraction client queries the RUS for 10 records by rus:RUSId, with values chosen at random. The deletion client deletes 10 records per request by specific rus:RUSId, with values chosen at random. These tests showed that the RUS was able to function in this situation; no locking problems or deadlocks were observed.
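The shape of this multi-client test can be reproduced in outline with a small thread-based harness. In the sketch below the RUS is replaced by a hypothetical in-memory `LockedStore` guarded by a single lock, so it exercises the harness (10 insertion, 2 extraction and 2 deletion clients, 10 random RUS ids per request) rather than the real service; the insertion clients are simplified, and a join timeout serves as a crude deadlock detector. All names are our own.

```python
import random
import threading


class LockedStore:
    """Hypothetical in-memory stand-in for the RUS, guarded by one lock."""

    def __init__(self):
        self._lock = threading.Lock()
        self._records = {}
        self._next_id = 0

    def insert(self, record):
        with self._lock:
            rus_id = self._next_id
            self._next_id += 1
            self._records[rus_id] = record
            return rus_id

    def extract(self, rus_ids):
        with self._lock:
            return [self._records[i] for i in rus_ids if i in self._records]

    def delete(self, rus_ids):
        with self._lock:
            return sum(self._records.pop(i, None) is not None for i in rus_ids)

    def issued_ids(self):
        with self._lock:
            return self._next_id


def run_mixed_load(store, inserters=10, extractors=2, deleters=2,
                   requests=50, timeout=30.0):
    """Run concurrent clients; return (errors raised, threads still alive)."""
    errors = []

    def guarded(fn, *args):
        try:
            fn(*args)
        except Exception as exc:  # collect failures instead of losing them
            errors.append(exc)

    def insert_client(k):
        for j in range(requests):
            store.insert({"GlobalJobId": f"client{k}.{j}"})

    def random_id_client(operation, seed):
        rng = random.Random(seed)
        for _ in range(requests):
            upper = max(store.issued_ids(), 1)
            # 10 randomly chosen RUS ids per request, as in the test above.
            operation([rng.randrange(upper) for _ in range(10)])

    threads = [threading.Thread(target=guarded, args=(insert_client, k))
               for k in range(inserters)]
    threads += [threading.Thread(target=guarded, args=(random_id_client, store.extract, s))
                for s in range(extractors)]
    threads += [threading.Thread(target=guarded, args=(random_id_client, store.delete, 100 + s))
                for s in range(deleters)]
    for t in threads:
        t.start()
    for t in threads:
        t.join(timeout)
    # A thread still alive after its timeout suggests deadlock or livelock.
    stuck = [t for t in threads if t.is_alive()]
    return errors, stuck
```

Against a real deployment, `LockedStore` would be replaced by a client that calls the RUS operations, and the pass criterion stays the same: no errors and no client left waiting.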

5. Conclusions and future work

We have described a Resource Usage Service implemented according to the specifications of relevant GGF working groups. We have shown how this can be an important part of any Grid where resources need to be accounted for and exchanged between different sites or organisations. It can also be utilised as a component in a market for computational services.

The implementation showed how certain stateful aspects of the original specification could be delegated to the underlying database, producing a web service that is stateless and therefore less dependent on changes in standards. An important part of the analysis of this paper is to show that these standards can be realised in an implementation that can scale to a Grid the size of the NGS while being hosted on a modest-size dual-processor server. This is important because the servers hosting Grid services should be much smaller than the productive resources of the Grid. Particularly encouraging is the linear scaling of insertion time with database size, indicating that extension to larger Grids is feasible.

Further research will include analysis of the functionality and scaling of the services of the MCS as they are composed, since in important cases the behaviour of the composite services shows dependencies and delays that cannot be observed from the services operating in stand-alone mode. This raises issues of implementation of the Web Services, such as whether the services share servers or have dedicated servers. There are also important considerations of archival and retrieval of historical data, which may be important in studying longer-term behaviour and scaling in Computational Grids.

Acknowledgements

The work described here was funded via the Markets for Computational Services project as part of the DTI/EPSRC funding awarded to the e-Science North-West Centre (ESNW) for industrially-linked projects.

References

[1] J. Ainsworth, J. MacLaren, J. Brooke, “Implementing a Secure, Service Oriented Accounting System for Computational Economies”, Proceedings of CCGrid 2005, Cardiff, UK.

[2] Markets for Computational Services Project website. http://www.lesc.ic.ac.uk/markets/

[3] A. Barmouta and R. Buyya, “GridBank: A Grid Accounting Services Architecture (GASA) for Distributed Systems Sharing and Integration”, in Workshop on Internet Computing and E-Commerce, Proceedings of the 17th Annual International Parallel and Distributed Processing Symposium (IPDPS 2003), IEEE Computer Society Press, USA, April 22-26, 2003, Nice, France.

[4] J. Brooke, D. Fellows, J. MacLaren, “Resource Brokering: the EuroGrid/GRIP approach”, in Proceedings of the UK e-Science All Hands Meeting, Nottingham, UK, Sep. 2004.

[5] S. Newhouse and J. MacLaren, “Resource Usage Service RUS”, Global Grid Forum Resource Usage Service Working Group draft-ggf-rus-service-4. Available online at https://forge.gridforum.org/projects/ruswg/document/draft-ggf-rus-service-4-public/en/1

[6] R. Mach et al., “Usage Record – XML Format”, Global Grid Forum Usage Record Working Group draft version 12. Available online at http://www.psc.edu/~lfm/Grid/UR-WG/URWGSchema.12.doc

[7] Apache Xindice XML database. http://xml.apache.org/xindice/

[8] “XML:DB API” draft specification, September 2001. Available online at http://xmldborg.sourceforge.net/xapi/xapi-draft.html

[9] “XML Path Language (XPath) Version 1.0”, W3C Recommendation 16 November 1999. Available online at http://www.w3.org/TR/xpath

[10] “XUpdate” working draft, September 2000. Available online at http://xmldborg.sourceforge.net/xupdate/xupdate-wd.html

[11] “JSR-000101 Java API for XML-Based RPC Specification 1.1”. Available online at http://java.sun.com/xml/jaxrpc/index.jsp

[12] Computational Services for Academic Research website. http://www.csar.cfs.ac.uk

[13] The UK National Grid Service website. http://www.ngs.ac.uk