advertisement

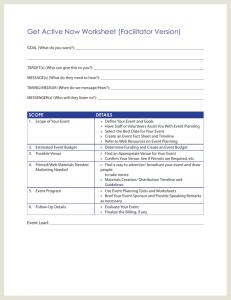

Integrating with the Access Grid: Experiences and Issues

Chris Greenhalgh (a), Alex Irune (a), Anthony Steed (b), Steve North (c)

a. School of Computer Science & IT, University of Nottingham
b. Department of Computer Science, UCL
c. Department of Computer Science, University of Glasgow

Abstract

The Access Grid supports collaboration between geographically distributed groups by allowing each group’s local “node” to connect to one of several shared “virtual venues”. We have explored ways of extending the Access Grid Toolkit version 2, specifically to integrate a collaborative mobile pollution monitoring and visualisation application, and to allow immersive virtual reality interfaces to be used as nodes. In this paper we report our experiences and evaluate the available integration approaches, with particular reference to application sharing. These approaches are based on new node services interacting with venue media streams; toolkit-supported shared applications; and external applications coordinated via service URLs or shared data files.

1. Introduction

The Access Grid [1] supports collaboration between geographically distributed groups by allowing each group’s local “node” to connect to one of several shared “virtual venues”. Each node is typically a meeting room with audio-visual facilities. All of the nodes connected to a single virtual venue are interconnected by a combination of streaming audio, video, text and shared applications to facilitate collaboration. The EPSRC-funded project “Advanced Grid Interfaces for Environmental e-science in the Lab and in the Field” has been exploring areas of e-science involving significant field-based activity, including environmental monitoring in the Antarctic [2] and urban pollution in London [3]. In this project we have also explored ways of integrating with the Access Grid, in particular the Access Grid Toolkit version 2 (currently version 2.3).
Specifically, we have worked to (1) integrate a collaborative mobile pollution monitoring and visualisation application, and (2) allow immersive virtual reality interfaces (e.g. CAVE™ and RealityCentre™ type devices) to be used as nodes. In this paper we report our experiences and evaluate the available integration approaches, with particular reference to application sharing in its many possible forms [4]. In section 2 we review the main ways in which additional shared applications or alternative interfaces can be integrated with Access Grid version 2. In section 3 we present our alternative Access Grid interfaces for immersive virtual reality displays, integrated with our collaborative pollution monitoring application.

2. Access Grid Toolkit Extension

2.1. Media streams and node services

[Figure 1: Joining a venue and its streams]

The main software processes and applications making up a typical node and virtual venue are shown in figure 1. Each venue holds information about its audio and video streams (multicast by default). On joining the venue, the client uses this information to start appropriate “node services” to handle those streams. New node services can also be added to a node, allowing other applications to access or contribute to the same media streams. The Access Grid Toolkit’s node services for video and audio are simple wrapper applications for versions of the well-known VIC and RAT multicast conferencing tools, respectively. These wrappers, like the vast majority of the Toolkit in version 2, are implemented in the Python scripting language.
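As an illustration of what a node service does with a venue’s stream information, the following minimal Python sketch turns a list of stream descriptions into command lines for the wrapped tools, and works out which services would need restarting when the node moves to a different venue. `StreamDescription`, `TOOLS`, `service_commands` and `diff_streams` are hypothetical names for illustration only, not the Access Grid Toolkit’s own API, and the sketch assumes both tools accept `-t ttl` followed by `address/port`, as the classic vic and rat command lines do.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class StreamDescription:
    """Hypothetical, simplified record of one venue media stream."""
    media_type: str  # e.g. "video" or "audio"
    address: str     # multicast group address
    port: int
    ttl: int = 127


# Hypothetical mapping from media type to the conferencing tool a service wraps.
TOOLS: Dict[str, str] = {"video": "vic", "audio": "rat"}


def service_commands(streams: List[StreamDescription]) -> List[List[str]]:
    """Build one tool command line per stream that a local service can handle."""
    commands = []
    for s in streams:
        tool = TOOLS.get(s.media_type)
        if tool is None:
            continue  # no node service registered for this media type
        # Assumed command form: <tool> -t <ttl> <address>/<port>
        commands.append([tool, "-t", str(s.ttl), f"{s.address}/{s.port}"])
    return commands


def diff_streams(
    old: List[StreamDescription], new: List[StreamDescription]
) -> Tuple[List[StreamDescription], List[StreamDescription]]:
    """When the node moves venue, return (streams to stop, streams to start)."""
    old_set, new_set = set(old), set(new)
    to_stop = [s for s in old if s not in new_set]
    to_start = [s for s in new if s not in old_set]
    return to_stop, to_start
```

Leaving streams that are unchanged between venues running, rather than restarting every tool, is just one design choice such a service could make.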
The Toolkit’s use of non-standard SOAP over GSI (the Globus security “standard”) protocols currently makes interoperability with other languages and toolkits problematic. To allow immersive VR interfaces to be used as nodes we have created an embeddable library version of the VIC video tool (see section 3). We have also created a new node service, based on the existing VIC wrapper application, which obtains current stream information for our own (non-Python) applications in the local node, allowing them to track changes of virtual venue.

We and other projects have also demonstrated capturing an application’s 2D graphical output (e.g. rendered visualisations) and generating a video stream in the appropriate multicast session, e.g. using a custom Chromium [5] Stream Processing Unit. Of course, this does not in itself allow remote input to the application. However, it does allow any node to view the application output without requiring additional software, and it copes reasonably well with highly dynamic views, although it is less appropriate for standard 2D GUIs or high resolutions.

2.2. Toolkit-supported shared applications

[Figure 2: Running a shared application]

The Access Grid Toolkit has direct support for “shared applications”, which are logically associated with a particular virtual venue as shown in figure 2. Standard examples included with version 2.3 are a shared web browser, a PowerPoint™ presentation viewer and a question-queueing application. An instance of the shared application runs at each node, and the instances coordinate via (simple) shared state in the venue service plus asynchronous events distributed via an associated event bus.

VNC, which shares the final 2D screen appearance of any application using a VNC server, is a common method for sharing applications. It allows almost any application to be shared with no special support from the application itself. However, video may not work correctly in some cases, and applications with very dynamic displays (e.g. rapid animation) may not distribute well. Input to the application, at the level of mouse and keyboard input, may be limited to the single site hosting the application itself, or open to all sites, but typically without management or remote awareness. Although not part of the 2.3 release, a VNC client shared application is present in the code-base.

Creating other shared applications is complicated by the same language and interoperability issues noted in relation to node services; in addition, the protocol used to communicate with the event bus is proprietary. However, a tutorial and supporting code make developing a new (Python) application relatively simple, provided that appropriate libraries and facilities are available.

2.3. Shared data and services

[Figure 3: Sharing data]

The Access Grid Toolkit also supports sharing of data in the form of service URLs or files (figure 3). These can be published via a venue or a node, and downloaded by other nodes, which can then view them if they have a suitable local application installed (determined by MIME type match). Distributing a data file or document provides a straightforward way to independently view the same information, such as a web page or image. Custom viewers can also be registered using an OS-specific MIME-type registration process, e.g. allowing data-sets or 3D models to be viewed, provided the node has installed the appropriate viewing application in advance.

Alternatively, a service URL or configuration file can be used (via a custom MIME type) to start up an application which directly supports distributed collaboration. In this case any coordination depends on the application itself, independent of the Access Grid’s normal facilities. For example, the project’s pollution monitoring application uses the EQUIP distributed data-space for coordination between application instances. Note, however, that this raises additional complications for firewalls and security.

3. Immersive Access Grid Use

3.1. Supporting Immersive Interfaces

Previously, when we have needed to support immersive interfaces such as CAVEs™ and RealityCentres™ [6], we have written custom renderers using libraries such as VRJuggler or CAVElib. Although designed to be easy to use, these libraries impose a particular architecture on the application developer. On this occasion, because we had existing client applications, we decided instead to explore the use of the Chromium OpenGL streaming package [5]. In particular we wished to explore using a Java3D¹ renderer that could be embedded into the existing Java software, and then to use Chromium to send the resulting OpenGL calls over the network to dedicated display machines.

We developed a relatively simple Java3D-based client component for the “eGS” (e-Science George Square) system, a synchronous collaborative pollution monitoring application which uses the EQUIP shared data-space platform for peer-to-peer coordination (see also [6]). This 3D client maintains a graphical scene including simple representations of each user, the area map, historical pollution data and recent pollution samples.
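To make the idea of distributing one application’s OpenGL stream to several display machines more concrete, here is a toy Python sketch of the sort-first strategy used by Chromium’s tilesort SPU: each batch of geometry is sent only to the display servers whose screen tile its screen-space bounding box overlaps. This is not Chromium code; `Rect`, `sort_to_servers`, the three-projector tile layout and the batch names are all hypothetical, and a real SPU works on transformed OpenGL command streams rather than named rectangles.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Rect:
    """Axis-aligned screen rectangle in pixels; x1 and y1 are exclusive."""
    x0: float
    y0: float
    x1: float
    y1: float

    def overlaps(self, other: "Rect") -> bool:
        return (self.x0 < other.x1 and other.x0 < self.x1 and
                self.y0 < other.y1 and other.y0 < self.y1)


def sort_to_servers(batches: Dict[str, Rect],
                    tiles: Dict[str, Rect]) -> Dict[str, List[str]]:
    """Map each display server to the batches whose bounding box hits its tile."""
    routing: Dict[str, List[str]] = {server: [] for server in tiles}
    for name, bbox in batches.items():
        for server, tile in tiles.items():
            if bbox.overlaps(tile):
                routing[server].append(name)
    return routing


# Hypothetical layout: three side-by-side projectors, each 1024x768 pixels.
tiles = {
    "left": Rect(0, 0, 1024, 768),
    "centre": Rect(1024, 0, 2048, 768),
    "right": Rect(2048, 0, 3072, 768),
}
batches = {
    "map": Rect(0, 0, 3072, 768),          # spans the whole display
    "avatar": Rect(1100, 200, 1300, 400),  # entirely on the centre tile
    "sample": Rect(900, 100, 1100, 300),   # straddles left and centre
}
routing = sort_to_servers(batches, tiles)
print(routing)
```

Batches that straddle tiles are sent to every overlapping server, so a full-width map costs bandwidth on all three links while a small avatar costs only one.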
We also developed Chromium plug-ins (Stream Processing Units) to handle issues specific to our immersive interfaces: geometry correction and edge blending in a curved-screen interface such as a RealityCentre™, and head tracking in a CAVE™-like interface.

3.2. Access Grid-VR

We have developed two 3D Access Grid video clients, one in C++ using OpenGL, and one in Java using Java3D (and hence OpenGL). These clients use our embedded version of VIC described in section 2.1 to display the video streams as live textures on objects within the 3D scene. Because the video streams are all consistently positioned within the 3D scene, they can be viewed in a CAVE™ and Reality Centre™-like immersive interface with the same subjective consistency from the user’s perspective. The current clients display the incoming video streams in an arc in front of the user, with the size and position of each image dynamically adjusted as video sources join and leave the session. Figure 4 shows the Java3D Access Grid-VR client in use (our Reality Centre is currently shared with a driving simulator!).

¹ Java3D uses OpenGL for rendering, except on Windows where a DirectX renderer is also available.

[Figure 4: Access Grid-VR in use]

Collaborative audio and video from the node are provided by a standard Access Grid client running on another machine. With the normal Access Grid video client it is necessary to place and size video windows by hand across the virtual desktop. Our AG-VR clients, however, automatically size and organise the video streams. Unlike the basic auto-place facility in the normal client, we use information in the RTP source descriptions to identify video streams likely to come from the same site (node) and, where possible, their spatial order (left, centre, right). We also provide a concise interface that allows users to selectively hide streams and sites, and to adjust their display order.
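The automatic arrangement described above can be sketched as two steps: group and order the video sources using their RTP source descriptions, then place one image per source on an arc in front of the viewer. This Python sketch is illustrative only. The grouping rule (host part of the SDES CNAME, which RFC 3550 suggests has the form user@host) and the position keywords looked for in the SDES NAME are our assumptions, not the AG-VR clients’ actual heuristics, and `VideoSource`, `order_sources` and `arc_layout` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical hint words in an SDES NAME suggesting camera position.
POSITION_ORDER = {"left": 0, "centre": 1, "center": 1, "right": 2}


@dataclass
class VideoSource:
    cname: str      # RTP SDES CNAME, conventionally "user@host"
    name: str = ""  # RTP SDES NAME, free text


def site_of(src: VideoSource) -> str:
    # Assumption: sources sharing a CNAME host part come from the same node.
    return src.cname.split("@")[-1].lower()


def position_of(src: VideoSource) -> int:
    for word in src.name.lower().split():
        if word in POSITION_ORDER:
            return POSITION_ORDER[word]
    return 1  # no hint: treat as centre


def order_sources(sources: List[VideoSource]) -> List[VideoSource]:
    """Group sources by site, then order each site's cameras left to right."""
    return sorted(sources, key=lambda s: (site_of(s), position_of(s)))


def arc_layout(n: int, radius: float = 3.0, span_deg: float = 120.0,
               fill: float = 0.9) -> List[Tuple[float, float, float, float]]:
    """Place n images on a horizontal arc around a viewer at the origin.

    Returns (x, z, angle_deg, width) per image, left to right; the viewer looks
    down -z and angle_deg is each image's bearing from straight ahead.
    """
    if n <= 0:
        return []
    span = math.radians(span_deg)
    step = span / n  # angular slot per image
    # Chord length of one slot, shrunk by `fill` to leave gaps between images.
    width = 2.0 * radius * math.sin(step / 2.0) * fill
    layout = []
    for i in range(n):
        theta = -span / 2.0 + step * (i + 0.5)  # centre of slot i
        layout.append((radius * math.sin(theta), -radius * math.cos(theta),
                       math.degrees(theta), width))
    return layout
```

Recomputing the layout whenever a source joins, leaves or is hidden keeps every image the same size, which matches the dynamic resizing described above; a real client might instead weight slots per site.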
It is possible to use these applications directly with Chromium to drive a multi-projector display. However, the performance is very poor, because the full video texture typically changes on every frame and so must be resent each time. To allow Chromium to be used more efficiently we have created another SPU which incorporates the VIC module and replaces a small fake texture image sent by the client application (which contains the URI of the appropriate video stream) with the latest image available in the local VIC module. The final arrangement of software components used to drive the Reality Centre interface is shown in figure 5 (excluding eGS’ own communication and coordination elements).

[Figure 5: Using Chromium to drive an immersive display including AG video]

3.3. Immersive Application Sharing

Finally, we have also integrated the Java3D AG-VR client with the eGS system to show how this approach allows immersive 3D graphics to be combined with distributed collaboration on the Access Grid. As well as the standard audio and video communication provided by the Access Grid, the peer-to-peer coordination facilities of eGS coordinate the 2D and 3D map and pollution views of the distributed participants. To support this over the wide-area networks typical of Access Grid use, a discovery rendezvous process is run on an agreed machine to bootstrap the EQUIP peer discovery process. Figure 6 shows the eGS system being viewed in the UCL ReaCTor with integrated Access Grid video streams (and audio).

[Figure 6: A user inside the UCL ReaCTor facility using the collaborative application]

Acknowledgements

This work was supported by EPSRC Grant GR/R81985/01 “Advanced Grid Interfaces for Environmental e-science in the Lab and in the Field”, and by the EQUATOR Interdisciplinary Research Collaboration (EPSRC Grant GR/N15986/01).

References

[1] The Access Grid Project, http://www.accessgrid.org/ (verified 2005-03-15).
[2] Steve Benford, Neil Crout, John Crowe, Stefan Egglestone, Malcom Foster, Chris Greenhalgh, Alastair Hampshire, Barrie Hayes-Gill, Jan Humble, Alex Irune, Johanna Laybourn-Parry, Ben Palethorpe, Timothy Reid, Mark Sumner, “e-Science from the Antarctic to the GRID”, Proceedings of UK e-Science All Hands Meeting 2003, 2-4 September, Nottingham, UK.
[3] Anthony Steed, Salvatore Spinello, Ben Croxford, Chris Greenhalgh, “e-Science in the Streets: Urban Pollution Monitoring”, Proceedings of UK e-Science All Hands Meeting 2003, 2-4 September, Nottingham, UK.
[4] Clarence A. Ellis, Simon J. Gibbs, Gail Rein, “Groupware: some issues and experiences”, Communications of the ACM, 34(1), 1991, pp. 39-58, ACM Press, ISSN 0001-0782.
[5] Greg Humphreys, Mike Houston, Yi-Ren Ng, Randall Frank, Sean Ahern, Peter Kirchner, James T. Klosowski, “Chromium: A Stream Processing Framework for Interactive Rendering on Clusters”, ACM Transactions on Graphics (TOG), Proceedings of the 29th Annual Conference on Computer Graphics and Interactive Techniques, 21(3), pp. 693-702, 2002.
[6] Chris Greenhalgh, “EQUIP: An Extensible Platform for Distributed Collaboration”, Proceedings of the Workshop on Advanced Collaborative Technologies (WACE) 2002, Edinburgh, Scotland.