Research Journal of Applied Sciences, Engineering and Technology 4(14): 2024-2029,... ISSN: 2040-7467

advertisement

Research Journal of Applied Sciences, Engineering and Technology 4(14): 2024-2029, 2012

ISSN: 2040-7467

© Maxwell Scientific Organization, 2012

Submitted: October 15, 2011

Accepted: November 25, 2011

Published: July 15, 2012

Optimal Penalty Functions Based on MCMC for Testing Homogeneity of

Mixture Models

1

Rahman Farnoosh, 2Morteza Ebrahimi and 3Arezoo Hajirajabi

Department of Mathematics, South Tehran Branch, Islamic Azad University, Tehran, Iran

2

Department of Mathematics, Karaj Branch, Islamic Azad University, Karaj, Iran

3

School of Mathematics, Iran University of Science and Technology, Narmak, Tehran 16846, Iran

1

Abstract: This study is intended to provide an estimation of penalty function for testing homogeneity of

mixture models based on Markov chain Monte Carlo simulation. The penalty function is considered as a

parametric function and parameter of determinative shape of the penalty function in conjunction with

parameters of mixture models are estimated by a Bayesian approach. Different mixture of uniform distribution

are used as prior. Some simulation examples are perform to confirm the efficiency of the present work in

comparison with the previous approaches.

Key words: Bayesian analysis, expectation-maximizationtest, markov chain monte carlo simulation, mixture

distributions, modified likelihood ratio test

INTRODUCTION

There have been numerous applications of finite

mixture models in various branches of science and

engineering such as medicine, biology and astronomy.

They have been used when the statistical population is

heterogeneous and contains several subpopulation. The

main problem in application of finite mixture models is

estimation of parameters. To date various methods have

been proposed for estimating the parameters of finite

mixture models. Some of the most importantones are

Bayesian method, maximum likelihood method,

minimum-distance method and method of moments

(Lindsay and Basak, 1993; Diebolt and Robert, 1994;

Lavine and West, 1992). After estimating the parameters

of finite mixture models a particular statistical problem of

interest is whether the data are from a mixture of two

distribution or a single distribution. The problem is called

testing of homogeneity in these models. At the beginig for

testing homogeneity may be the Likelihood Ratio Test

(LRT) that is the most extensively used method for

hypothesis testing problem, is employed. The LRT has a

chi-squared null limiting distribution under the standard

regularity condition. Since the mixture models don’t have

regularity conditions therefore limiting distribution of

LRT isvery complex (Liu and Shao, 2003). For overcome

the above mentioned weakness of LRT method the

Modified Likelihood Ratio Test (MLRT) has been

introduced by Chen (1998), Chen and Chen (2001) and

Chen et al. (2004). In MLRT a penalty is added to the

Log-likelihood function and as a result a simple and

smoot manner is established to the problem. But

dependence on establishment of several regularity

conditions and chosen penalty function are the

mainlimitation of MLRT. Li et al. (2008) has been

proposed Expectation-Maximization (EM) test based on

another form of penalty function that suggested

previously by Chen et al. (2004). This test is independent

of some necessary conditions for MLRT. But both of the

MLRT and EM tests are based on penalized likelihood

function. Therefore the efficiency of these tests is

influenced by the shape of the chosen penalty function.

Hence none of the these tests is generally optimal. To

remove the above mentioned disadvantage, in the present

study we consider the penalty function as a parametric

function and employ Metropolis-Hastings sampling as a

MCMC method for estimation parameters of mixture

models and parameter of determinative shape of the

penalty function. To our best knowledge the parametric

penalty function has not been studied before.

Furthermore, according to latest information from the

research works it is believed that the MCMC estimation

of penalty function based on different priors to testing

homogeneity of mixture model has been investigated for

the first time in the present study.

METHODOLOGY

The finite mixture models: A mixture distribution is a

convex combination of standard distributions fj :

∑ kj =1 p j f j ( y), ∑ kj =1 p j = 1

(1)

Corresponding Author: Rahman Farnoosh, Department of Mathematics, South Tehran Branch, Islamic Azad University, Tehran,

Iran

2024

Res. J. Appl. Sci. Eng. Technol., 4(14): 2024-2029, 2012

In most cases, the fj s are from a parametric family, with

unknown parameter 2 j leading to the parametric mixture

model:

∑ kj = 1 p j f ( y θ j ),

(2)

∑ kj = 1 p j = 1

where, 2 j , 1 and 1 is compact subset of real line. When

a sample y = (y1,...,yn) of mixture distribution is observed,

since it is not known tahteach observation is belongto the

what subpopulation, so obtained observations are called

incomplete data. In this case likelihood function and loglikelihood function of incomplete data is as follows,

respectively:

(

) ∏in= 1∑ kj = 1 p j f j ( yi θ j )

l(θ , P y ) = ∑ in= 1 log(∑ kj = 1 p j f j ( yi θ j ))

L θ, P y =

where, θ = (θ1 ,...,θ k ) and p = (p1,...,pk). Incomplete data

can be converted to complete data by considering a set of

indicator variables z = (z1,…, zn) and zi = (zi1,…, zik)

Therefor it is determinedthat what subpopulation

includewhich observations:

yi*zij = 1:f(yi*2j),

i = 1, ..., n, j = 1, ... k

l n ( p,θ1 ,θ2 ) =

(

( )

)

(

{

The LRT and the MLRT:

Suppose Y1,…, Yn is a random sample of mixture

distribution:

(

)

(4)

where 2 j , 1, j = 1, 2 and 1 is compact subset of real

line. We wish to test:

H0: p(1-p)(21!22) = 0

H1: p(1-p)(21!22) … 0

(

)

(

Rn = 2 log ln p$ ,θ$1,θ$2 − log l n p$ ,θ$0 ,θ$0

)}

Large value of this statistic leads to rejecting of null

hypothesis. Chen and Chen (2001) showed that two

sources of non-regularity are exist which complicate the

asymptotic null distribution of the LRT. One of these

sources is that the hypothesis lies on the boundary of

parameter space p = 0 or p = 1 and other is the mixture

model that is not identifiable under null model therefore

p = 0 or p = 1 and 21 = 22 are equivalent.For overcoming

the boundary problem and non-identifiability, Chen and

Chen (2001) proposed a penalty function in terms of p as

T(p) add to LRT statistic such that:

lim

p→ 0 or 1

T ( p) = −∞ ,arg max T ( p) = 0.5

(6)

p∈ [ 0, 1]

Tl n ( p,θ1,θ2 ) = l n ( p,θ1 ,θ2 ) + T ( p)

Use of this penalty function lead to the fitted value of

p under the modified likelihood that is bounded away

from 0 or 1. Chen and Chen (2001) showed if some

regularity conditions hold on density kernel then

asymptotic null distribution of MLRT statistic is the

mixture of the χ12 andχ 02 with the same weights, i.e.,

Zij

) ∑ in=1∑ kj =1 zij ⎧⎨⎩ log p j + log⎛⎜⎝ f j ( yi θ j )⎞⎟⎠ ⎫⎬⎭

( )

)

In this case penalized Log-likelihood function is as

follows:

(3)

lc θ , P y , z =

pf Y y θ1 (1 − P) f Y y θ 2

(

p$ ,θ$1 ,θ$2

If θ$0 and

are maximization of the

likelihood function under null and alternative hypothesis,

respectively, then the statistic that obtained from LRT is

as follow:

The likelihood function and Log-likelihood function

of complete data which is usually more useful for doing

inference is as follows, respectively:

⎛

⎞

Lc θ , P y , z = ∏ in= 1 ∏ kj = 1 ⎜⎝ p j f j yi θ j ⎟⎠

∑ in=1 log{ pf ( y θ1) + (1 − p) f ( y θ2 )}

0.5χ12 + 0.5χ 02

where, χ 02 is a degenerate distribution with all its mass at

0.

Parameters estimation based on EM algorithm: EM

algorithmis the most popular method for finding the

estimations of likelihood maximum. This algorithm is

consist of two steps E and M. In E-step conditional

expectation of complete data provide observational data

and unknown parameters, i.e.,

where, null hypothesis means homogeneity of population

and alternative hypothesis means consisting population

from two heterogeneous subpopulation as (1). Loglikelihood function can be written as follow:

( ) substitute

f (yθ )

pj f j yθ j

(5)

∑

k

j =1 p j

j

j

in zij and in M-step the value of parameters which

maximize log-likelihood function of complete data is

compute. Then E and M steps repeat until a stop condition

and reaching to convergence.

2025

Res. J. Appl. Sci. Eng. Technol., 4(14): 2024-2029, 2012

SupposeY1,…,Yn is a random sample of mixture

distribution (4), then log-likelihood function of complete

data is as follows:

∑ in=1[zi{log( p) + log( f ( yi θ1))}

+ (1 − zi ){log(1 − p) + log( f ( yi θ2 ))}⎤⎥

⎦

∑

p(t )

lcn (θ , p) =

(7)

In E-step, mathematical expectation is computed as

follow:

zi(1t ) = E ⎜⎛ zi yi;θ ( t −1) p ( t −1) ⎟⎞

⎝

⎠

=

(

)

+

−

1

p

f ( yi θ

) (

p ( t − 1) f yi θ1 ( t −1)

(

p ( t − 1) f yi θ1 ( t −1)

( t − 1)

1

( t − 1)

)

, i = 1, 2,..., n

(8)

∑ in=1

∑ in=1{ zi(1t ) log f ( yi θ1) )}

θ2( t ) = arg max

∑ in=1{(1 − zi(1t ) ) log( f ( yi θ2 ))}

θ1 ∈Θ

and

θ 2 ∈Θ

Now MLE is obtained by iteration of steps until

convergence of algorithm. Estimation of p depends on

choosing penalty function. Chen and Chen

(2001)proposed a penalty function as follows:

T ( p) = C log(4 p(1 − p))

(

)

and

n

(t )

∑ i =1 zi1 + c

2C + n

∑

(

)

2

) = log(4 p(1 − p))

Optimal proposed penalty function: In the present study

the following penalty function that is able to overcome the

disadvantages of the penalty functions (9) and (10) is

proposed:

(

)

g (h, p) = C log 1 − 1 − 2 p h , 0 < h ≤ 2

(10)

These test function, (9) and (10), hold in condition

(6). It is simply shown that by using penalty functions (9)

and (10), the value of p in M-step from (t+1)-th iteration

of EM algorithm is obtained as follow respectively:

p(t ) =

(

log 1 − 1 − 2 p ≤ log 1 − 1 − 2 p

(9)

where, C is a positive constant. Li et al. (2008) also used

a penalty function as the following form:

T ( p) = C * log 1 − 1 − 2 p

∑

For p = 0.5 the above mentioned inequality convert to

equality (1 − 1 − 2 p ) ≈ − 1 − 2 p . . So penalty functions (9) and

(10) are almost equivalent for values of p that are close to

0.5, but for values of p that are close to 0 or 1, a

considerable difference exist between these two penalty

function. The penalty function that suggested by Li et al.

(2008) has advantages and increase the efficiency of LRT,

but there is no reason that it can remove the problem of

optimality of these penalty functions.

zi(1t )

n

θ1( t ) = arg max

∑

The choice of penalty function: However the limiting

distribution of the MLRT does not depend on the specific

form of T(p), but the precision of the approximation and

its power do. A modified test can obtained from the

penalty function (9) and solve the problem of LRT. Since

the penalty function (9) puts too much penalty on the

mixing proportion when it is close to 0 or 1thenin spite of

clear observation of mixture distribution, MLRT statistic

can't reject null hypothesis. Hence a more reasonable

penalty function need such that power of MLRT increases

even mixing proportion is close to 0 or 1. Therefore the

penalty function (10) has been proposed that is holds in

the following relation:

In M-step, by putting th value of (8) in log-likelihood

function of complete data and maximizing this function

with respect to model parameters, we have:

p( t ) =

∑

(t )

(t )

n

n

⎧

i = 1 zi1 + C *

i = 1 zi1

⎪ min{

< 0.5

, 0.5}

⎪

n+ C*

n

=⎨

(t )

(t )

(t )

n

n

n

⎪

i = 1 zi1

i = 1 zi1

i = 1 zi1

> 0.5

0.5, max{

, 0.5

⎪ 0.5

n

n+ C*

n

⎩

(11)

It is clear that the penalty functions (9) and (10) are

special case of penalty function (11) and they are obtained

from (11) by substituting h = 1 and h = 2, respectively.

How to choose the value of h effects on the shape of

penalty function and consequently on the obtained

inferences. In classical approaches the choice of another

value for h leads to complexity of penalty function (11),

that it also would lead to difficulty of maximization in Mstep of EM algorithm. Due to difficulty of classical

approach in determining parameters of model and penalty

function, these parameters will be estimated in paradigm

of Bayesian approach. Therefore at the bigining suitable

prior distributions are considered for given parameters

and then the estimation of these parameters will be

computed as posterior. Range of parameter h in penalty

function (11) is interval (0, 2] but because the values of h

2026

Res. J. Appl. Sci. Eng. Technol., 4(14): 2024-2029, 2012

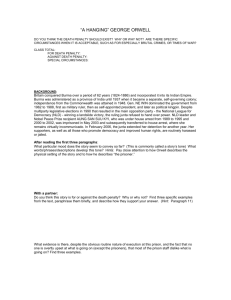

Fig. 1: Comparison of four penalty functions

Table 1: Mixture models that a data set is simulated from them

Model

p

µ2

µ1

1

2

3

4

0.05

0.10

0.25

0.5

-2.179

-1.500

-0.866

-0.500

MCMC algorithm: Suppose (Y1,…Yn) is a random

sample from a mixture pN (:1,1)+(1-p) (:2, 1) with an

unknown mixing proportion P = (p1 = p, p2 = 1-p), p

therefore for the penalized likelihood function we can

write:

0.115

0.167

0.289

0.500

∏i =1∏ j =1⎛⎜⎝ p j f ( yi µ j )⎞⎟⎠

2

2

h

n

∝ pn1(1 − p) 2 ∏ ∏ e − 0.5( yi − µ j ) (1 − 1 − 2 p )

j =1

i , zij = 1

Lc ( µ , Ρ , h z , y ) =

Table 2: Mean square error of bayesian and classic estimators by using

U(0, 1) as prior distribution for h

Parameters of model

------------------------------------------------Model

Estimator

h$

p$

µ$

µ$

1

1

2

3

n

−

n1

n2

( y1 − µ1 )2 − 2 ( y2 − µ 2 )2

2

e

θ$ML

2.5230

0.0680

0.0570

-

θ$

B

0.2430

0.0045

0.0044

0.0094

θ$ ML

0.1505

0.0210

0.01670

-

θ$

0.0031

0.0002

0.0025

0.1943

ML

0.3212

0.0021

0.0610

-

B

0.0081

0.0006

0.0022

0.0290

ML

0.00021

0.0380

0

-

π ( µ , Ρ , h) ∝ e

B

0.0004

0.0260

0.0009

0.0165

π µ , Ρ , h y , z = L µ , Ρ , h z , y π ( µ , Ρ , h)

B

θ$

θ$

4

= pn1 (1 − p) 2 e

2

θ$

θ$

zij

2

n

(1 − 1 − 2 p )

h

where, : = (:1, :2) and nj (for j = 1 and 2) are the number

of observations associated to the j components. Now, by

considering the normal prior distribution N (a, 1/b), a ,

Randb > 0, for both :1 and :2, prior distribution (a1, a2)

for p and finally by considering prior distribution U (0, 1)

and aU (0, 0.5)+(1 – a)U(0.5, 1) for h,we obtain:

(

in interval (0, 1) may further improve the power of MLRT

in this study the uniform distribution has been used as

prior distribution for p, in order to holding impartiality

between null and alternative hypothesis. The Uniform and

mixture of two uniform distributions have been

considered as prior distribution for h. In the next section

we are going to demonstrate MCMC algorithm that is

used in the present study for estimation of penalty

function.

)

∝e−

e−

−

b

( µ1 − a )

2

c

(

×e

−

b

( µ 2 − a ) a1 −1

2

p

)

(1 − p)a2 −1(1 − 1 − 2 p h)

n1

b

n

( y − µ )2 e − ( µ1 − a ) 2 e − 2 ( y2 − µ2 ) 2

2 1 1

2

2

b

h

( µ2 − a ) 2 pn1+ α 1− 1 (1 − p) n2 + α 2 −1 (1 − 1 − 2 p )

2

Bayesian analysis of mixture models has many

difficulties because of its natural complexity (Diebolt and

Robert, 1994). In this case posterior distribution dosn't has

a closed form hence for doing inference, MCMC

algorithm facilitates sampling of posterior distribution.

2027

Res. J. Appl. Sci. Eng. Technol., 4(14): 2024-2029, 2012

Table 3: Values and Mean square error for bayesian and classic estimators by using 0.25U (0,0.5)+0.75U (0.5,1) as prior distribution for h

Parameters of model

-------------------------------------------------------------------------------------------------------------Model

Estimator

h$

p$

µ$ 2

µ$ 1

1

-1.1262(1.1104)

0.3013(0.0348)

0.2194(0.0289)

θ$

ML

2

θ$B

1.9087(0.0763)

0.1003(0.0002)

0.0372(0.0058)

θ$ ML

θ$

0.5419(0.9290)

0.3861(0.0488)

0.4885(0.1518)

-

1.3100(0.0362)

0.0778(0.0095)

0.0896(0.0031)

0.6002(0.1005)

B

3

4

0.5445(0.0126)

θ$ ML

0.3870(0.2299)

0.5022(0.0456)

0.4976(0.0614)

-

θ$B

0.7564(0.0122)

0.3153(0.0007)

0.2497(0.0013)

0.7373(0.0185)

θ$ ML

0.2310(0.0725)

0.2444(0.0655)

0.5(0)

-

θ$B

0.3671(0.0175)

0.3908(0.0119)

0.4961(0.0007)

0.5555(0.0111)

Table 4: Values and Mean square error for bayesian and classic estimators by using 0.75U(0,0.5)+0.25U(0.5,1) as prior distribution for h

Parameters of model

-------------------------------------------------------------------------------------------------------------Model

Estimator

h$

p$

µ$ 2

µ$ 1

$

1

-1.6041(0.3314)

0.1871(0.0053)

0.2010(0.0230)

θ

ML

θ$B

-2.2611(0.0082)

0.0970(0.0002)

0.0584(0.0052)

0.4302(0.0034)

2

θ$ ML

θ$

-1.9715(0.0984)

0.2922(0.0162)

0.1881(0.0086)

-

-1.6471(0.0226)

0.2157(0.0023)

0.0979(0.0032)

0.3919(0.0039)

3

θ$ ML

-0.3041(0.3164)

0.6712(0.0332)

0.4978(0.0615)

-

θ$B

-0.7539(0.0127)

0.3521(0.0040)

0.2371(0.0017)

0.7787(0.0597)

B

4

θ$ ML

-0.3205(0.0341)

0.4122(0.0079)

0.4253(0.0063)

-

θ$B

-0.4044(0.0092)

0.5178(0.0003)

0.4631(0.0026)

0.2117(0.0168)

distribution.Typical use of MCMC sampling can only

approximate the target distribution. Two generation

mechanism for production such Markov chains are Gibbs

and Metropolis-Hastings. Since the Gibbs sampler may

fail to escape the attraction of the local mode (Marin

et al., 2005) a standard alternative i.e., MetropolisHastings is used for sampling from posterior distribution.

This algorithm uses a proposal density which depends on

the current state. In the following the general MetropolisHastings sampling is introduced:

Initialization: Choose P(0) and 2(0), h(0)

Stept: For t = 1, 2, ....

(

( t − 1) ( t − 1) ( t − 1)

~ ~ ~

,Ρ

,h

C Generate (θ , Ρ , h ) from q θ , Ρ , h θ

C Compute

r=

(

)(

~ ~

~

~

π θ ,~

p , h y q θ ( t − 1) , P( t − 1) , h( t − 1) θ , ~

p, h

⎛

⎝

π ⎜ θ ( t −1) , P( t −1) , h

( t − 1) ⎞ ⎛ ~ ~ ~ ( t − 1) ( t − 1) ( t − 1) ⎞

⎟

y⎟ q ⎜⎝ θ , p , h θ

,P

, h

⎠

⎠

C Generateu: u-U[0, 1]

if r < u then (2(t), p(t), h(t)) =

)

)

where q is proposal distribution that often is considered as

the random walk Metropolis-Hastings (Marin et al.,

2005).

SIMULATION STUDY AND DISCUSSION

Figure 1 exhibits the graph of two penalty functions

(9) and (10) for c* =1 and penalty function with Bayesian

estimation for h = 0.7373 and h = 0.3913. It is seen that

penalty function (9) in the point p = 0.5 is almost smooth

and puts no penalty while penalty function (10) and

penalty function with h = 0.7373 and h = 0.3913 put more

less penalty for values that are close to 0 or 1. In fact the

purpose of this section is to compare estimation of

parameters using the penalty function of (10) and penalty

function that obtained from Bayesian estimation. The

simulation experiment was conducted under normal

kernel with a known variance 1. The mean value for the

null distribution and alternative distributions is 0 and the

variance for the alternative models is set to be 1.25 times

greater than the variance under null model. Therefore we

can consider:

EHo(y) = 0, VarH0(y) = 1

(θ~, ~p , h~)

EH1 (Y) = p:1+(1!p):2

Else (2(t), p(t), h(t)) = (2(t!1), p(t!1), h(t!1))

2028

Res. J. Appl. Sci. Eng. Technol., 4(14): 2024-2029, 2012

and

REFERENCES

VarH1(y) = EH1(y2)!E2H1(y) = p(1!p)(:1!:2)2+1

Table 1 present four alternative models under these

conditions. The sample size n = 200 is simulated from

these models. Table 2, 3 and 4 present comparison

between classical and bayesian estimation of mixture

model parameters. The distributions U(0,1),

0.75U(0,0.5)+0.25U(0.5,1) and 0.25U(0,0.5)+

0.75U(0.5,1) are used as the prior distribution for h in

Table 2, 3 and 4, respectively. The simulation result show

that when p is close to 0 or 1 the Mean Square Error

(MSE) of bayesian estimator is less than one in classical

estimator that obtained via EM algorithm.

CONCLUSION

In This study an optimal penalty function in

comparison to well known penalty functions for testing

homogeneity of mixture models is proposed and

successfully Bayesian approach is employed to estimation

parameter of penalty function and parameters of mixture

models. Simulation experiments show that Bayesian

approach is better than the classical approach both in

estimation of model parameters and in determining of

optimal penalty function in position that mixture models

goes to non-identifiability.

Chen, J., 1998. Penalized likelihood ratio test for finite

mixture models with multinomial observations. Can.

J. Stat., 26: 583-599.

Chen, H. and J. Chen, 2001. The likelihood ratio test for

homogeneity in the finite mixture models. Can. J.

Stat., 29: 201-215.

Chen, H., J. Chen and J.D. Kalbfleisch, 2004. Testing for

a finite mixture model with two components. J. R.

Stat. Soc. B., 66: 95-115.

Diebolt, J. and C.P. Robert, 1994. Estimation of finite

mixture distributions through Bayesian sampling. J.

R. Stat. Soc. B., 56: 363-375.

Lavine, M. and M. West, 1992. A bayesian method for

classification and discrimination. Can. J. Stat., 20:

451-461.

Li, P., J. Chen and P. Marriott, 2008. Non-finite Fisher

information and homo-geneity: The EM approach.

Biometr., 96: 411-426.

Lindsay, B.G. and E. Basak, 1993. Multivariate normal

mixtures: A fast consistent method of moments. J.

Ame. Stat. Ass., 88: 468-476.

Liu, H.B. and X.M. Shao, 2003. Reconstruction of

january to april mean temperature in the qinling

mountain from 1789 to 1992 using tree ring

chronologies. J. Appl. Meteorol. Sci., 14: 188-196.

Marin, J.M., K. Mengerson and C. Robert, 2005.

Bayesian modeling and inference on mixture of

distributions. Handbook Stat., 25: 459-507.

2029