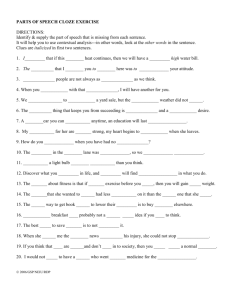

advertisement

International Journal of Application or Innovation in Engineering & Management (IJAIEM)
Web Site: www.ijaiem.org Email: editor@ijaiem.org
Volume 3, Issue 3, March 2014 ISSN 2319 - 4847

An Applied Research of Rasch GSP for Evaluating Difficulty of Test Questions

Tian-Wei Sheu, Phung-Tuyen Nguyen, Duc-Hieu Pham, Phuoc-Hai Nguyen and Nagai Masatake
Graduate Institute of Educational Measurement and Statistics, National Taichung University of Education, Taichung, Taiwan

ABSTRACT
Educational assessment usually focuses on determining how well students are learning, and it has become an integral part of the effort to improve education. An assessment method should therefore be simple, understandable and easy to apply, while remaining objective and accurate. The purpose of this paper is to propose a new application of Rasch GSP for evaluating the difficulty of questions in educational testing. The method combines the advantages of the Rasch model and the Grey Student-Problem (GSP) chart, evaluating questions on the basis of statistics and an ordering by priority criteria. To check the rationality of the method, its experimental results were compared with results from the BILOG-MG 3 software, which performs the same task based on Item Response Theory (IRT). The results show that the proposed method is simple and easy to apply. In particular, it is well suited to small samples and is also applicable to large samples. This new application gives teachers and education managers a more convenient way to carry out the evaluation process.

Keywords: assessment method, Rasch GSP, GSP, small sample, large sample.

1. INTRODUCTION
Assessment plays an integral role in teaching and learning. Because it is used in classrooms to help students learn, it must be transformed in two fundamental ways: the content and character of assessments must be significantly improved, and the gathering and use of assessment information must become part of the ongoing learning process [1]. Whether within a single class or across a whole school, students' academic achievement is evaluated frequently so that timely feedback can serve teaching, which requires evaluation results that are obtained quickly yet remain accurate and objective. The construction and design of highly effective assessment models and methods is therefore of considerable interest. An assessment method should be accurate and easy to apply, but also objective, valid, reliable and practicable enough to meet the needs of teaching [2].

In the classroom, the Student-Problem chart (S-P chart) is an evaluation method that has been used for many years to evaluate students' learning results through tests. The S-P chart was invented by Takahiro Sato in 1969 and helps teachers diagnose abnormal performance. Its main purpose is to obtain diagnostic data for each student, on the basis of which teachers can give each student better academic advice [3]. In 1982, Deng proposed grey system theory, in which grey relational analysis is an effective mathematical tool. Grey relational analysis measures the degree of similarity or difference between two sequences based on the grade of relationship between them [4]. To overcome the weakness of the S-P chart, which can only process dichotomous data, Nagai proposed the GSP chart in 2010; it combines the S-P chart with grey system theory to analyze S-P chart data more specifically.
With GSP chart analysis, the uncertain factors in a study can be analyzed clearly [5]. In 1960, Rasch introduced a model for analyzing test data, where a test broadly refers to an assessment of an examinee's level of ability in a particular domain, such as math or reading, or to a survey of an examinee's behaviors or attitudes toward something [6]. The aim of this model is to measure each examinee's level of the latent trait that underlies his or her scores on the items of a test, so it is suitable for analyzing large data sets. The concepts of item response theory (IRT) were known in the 1950s and 1960s, but IRT did not become widely used until the late 1970s and 1980s; three of its pioneers, Lord, Rasch and Lazarsfeld, pursued parallel research independently. The purpose of IRT is to provide a framework for evaluating how well assessments, and the individual items on them, work. The most common application of IRT is in education, where psychometricians use it to develop and design exams, build item banks for exams, and equate the difficulties of items across successive versions of exams [7]. In practice, IRT parameters are estimated by computer programs because of the large number of parameters that must be estimated; one of the most popular programs is BILOG-MG [8]. Applying the view of the Rasch model to the GSP chart was a creative suggestion made by Nagai in 2010, and the resulting theory has become a method for judging uncertain factors [9]. Rasch GSP makes problem analysis more specific and clear: it shows the status of each subject completely and provides results that can be used in digital curriculum design and in developing reference indices [10].
The S-P chart analysis method has been widely used for many years. However, it is suitable only for small samples, and it evaluates students and questions only at a general level, without a specific ordering of question difficulty. The BILOG-MG software, by contrast, processes data based on IRT and gives evaluation results with specific question difficulties, but the data sets that satisfy its assumptions are always large. It was hypothesized that an assessment method could be constructed that applies not only to small samples but also to large data sets. The current study proposes a new application of Rasch GSP that evaluates the difficulty of the questions in a test and can be applied to both small and large samples. Rasch GSP is implemented as a non-parametric statistical method for small samples, and its success has been demonstrated in several studies [10, 11]; this study proposes a new application of it. To show its features, the study applies it in educational testing to a large sample, with data collected from a 46-question test taken by more than 1,000 students. The evaluation results are compared with those processed by the BILOG-MG 3 software to determine the rationality of the method.

2. BASIC THEORY
This study proposes a new application of the Rasch GSP method to evaluate the difficulty of questions, and its experimental results are compared with the results of BILOG-MG 3. The basic theories needed are therefore introduced as follows.

2.1 Rasch model
The Rasch model is named after the Danish mathematician Georg Rasch. The model shows what should be expected in responses to items if measurement is to be achieved [12].
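In its usual dichotomous form, the Rasch model gives the probability of a correct answer as $P(x = 1 \mid \theta, b) = e^{\theta - b} / (1 + e^{\theta - b})$, where $\theta$ is the student's ability and $b$ is the item's difficulty, both measured in logits. The minimal sketch below evaluates this standard form. The function name `rasch_probability` and the three item difficulties are illustrative choices and are not taken from the paper.

```python
import math


def rasch_probability(theta: float, b: float) -> float:
    """Probability of a correct response under the dichotomous Rasch model.

    theta: student ability (logits); b: item difficulty (logits).
    Only the difference theta - b matters.
    """
    return 1.0 / (1.0 + math.exp(-(theta - b)))


if __name__ == "__main__":
    # Three illustrative items (easy, medium, hard), in the spirit of
    # Figure 1's three ICCs; these difficulties are hypothetical.
    difficulties = [-1.0, 0.0, 1.0]
    for theta in [-2.0, -1.0, 0.0, 1.0, 2.0]:
        row = [rasch_probability(theta, b) for b in difficulties]
        print(f"theta={theta:+.1f}: " + "  ".join(f"{p:.3f}" for p in row))
```

Each printed column traces one item characteristic curve. A student whose ability equals an item's difficulty answers it correctly with probability 0.5.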
The model assumes that the probability of a given respondent affirming an item is a logistic function of the relative distance between the item location and the respondent location on a linear scale. Georg Rasch first announced this model for analyzing answerers' responses in order to obtain an objective interval scale that measures an answerer's latent trait [13]. In the Rasch model, the probability that a student answers correctly is a logistic function of the difference between the student's ability and the item difficulty [14]. The relation between the latent trait (θ) and the probability of a correct response is described by an item characteristic curve (ICC). The Rasch model has a descriptive function and a predictive function. In its descriptive function, the model clearly explains the relationship between student ability and item difficulty, the differences between students, and the differences between items. In its predictive function, the model predicts the probability that a student with a specified ability answers a specified item correctly [13].

Figure 1: ICC for three different items in the Rasch model

2.2 Student-Problem Chart
The S-P chart is a method that analyzes, processes and arranges data in a defined order. It is very useful for diagnosing students' learning states and question quality [15, 16].
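In the usual S-P chart convention, the "defined order" places students (rows) in descending order of total score and problems (columns) in descending order of the number of students answering them correctly. The small sketch below produces that arrangement from a 0/1 response matrix. The helper names `sp_chart_order` and `arrange` are hypothetical, and ties are simply left in their original order.

```python
def sp_chart_order(x):
    """Return (row_order, col_order) that arrange a 0/1 matrix as an S-P chart.

    x: list of m rows (students), each a list of n 0/1 answers (problems).
    Students are sorted by total score, highest first; problems by the number
    of correct answers, highest first. sorted() is stable, so ties keep the
    original order.
    """
    m, n = len(x), len(x[0])
    row_totals = [sum(row) for row in x]
    col_totals = [sum(x[i][j] for i in range(m)) for j in range(n)]
    row_order = sorted(range(m), key=lambda i: -row_totals[i])
    col_order = sorted(range(n), key=lambda j: -col_totals[j])
    return row_order, col_order


def arrange(x, row_order, col_order):
    """Reorder the rows and columns of x according to the given orders."""
    return [[x[i][j] for j in col_order] for i in row_order]


if __name__ == "__main__":
    x = [[0, 1, 0], [1, 1, 1], [0, 1, 1]]
    rows, cols = sp_chart_order(x)
    for row in arrange(x, rows, cols):
        print(row)
```

For this toy matrix the arranged chart is the staircase pattern `[1, 1, 1]`, `[1, 1, 0]`, `[1, 0, 0]`. Departures from such a staircase are what the caution indices of an S-P chart are designed to flag.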
Definition 1: S-P chart matrix
Let $X = [x_{ij}]_{m \times n}$ be the student response matrix, called the S-P chart matrix, where $i = 1, 2, \ldots, m$ is the index of the student, $j = 1, 2, \ldots, n$ is the index of the question, $m, n \in \mathbb{N}$, and

$$x_{ij} = \begin{cases} 0, & \text{student } i \text{ gives a wrong answer to problem } j \\ 1, & \text{student } i \text{ gives a correct answer to problem } j \end{cases} \qquad (1)$$

Caution Index for Student (CS):

$$CS_i = 1 - \frac{\sum_{j=1}^{n} (x_{ij})(x_{\cdot j}) - (x_{i\cdot})(\bar{x})}{\sum_{j=1}^{l} (x_{\cdot j}) - (x_{i\cdot})(\bar{x})}, \quad \text{where } \bar{x} = \frac{1}{n}\sum_{j=1}^{n} x_{\cdot j} \text{ and } l = x_{i\cdot} = \sum_{j=1}^{n} x_{ij} \qquad (2)$$

Caution Index for Problem (CP):

$$CP_j = 1 - \frac{\sum_{i=1}^{m} (x_{ij})(x_{i\cdot}) - (x_{\cdot j})(\bar{x}')}{\sum_{i=1}^{l'} (x_{i\cdot}) - (x_{\cdot j})(\bar{x}')}, \quad \text{where } \bar{x}' = \frac{1}{m}\sum_{i=1}^{m} x_{i\cdot} \text{ and } l' = x_{\cdot j} = \sum_{i=1}^{m} x_{ij} \qquad (3)$$

The partial sums in the denominators run over the first $l$ problems and the first $l'$ students of the S-P chart, whose rows are sorted in descending order of $x_{i\cdot}$ and whose columns are sorted in descending order of $x_{\cdot j}$.

Students are classified and diagnosed based on their CS value and the proportion of problems they answer correctly. Similarly, problems are classified based on their CP value and the proportion of students answering them correctly.

2.3 Grey Relational Analysis (GRA)
In grey system theory, GRA is an effective mathematical tool for handling uncertain, multiple, discrete and incomplete information. This study uses the localized grey relational grade proposed by Nagai [17].
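The caution indices (2) and (3) of the S-P chart can be computed directly from a 0/1 response matrix. The sketch below makes three assumptions. `caution_indices` is a hypothetical helper. Both fractions are multiplied through by $n$ (or $m$) so that the arithmetic stays in exact integers. An index is set to 0 whenever its denominator vanishes, for example for an all-correct or all-wrong pattern, which is a convention the text does not specify.

```python
def caution_indices(x):
    """Caution indices CS_i (students) and CP_j (problems) following (2) and (3).

    x: list of m rows (students) of n 0/1 answers. The partial sums in the
    denominators use the largest totals first, i.e. the S-P chart order.
    Assumed convention: an index is 0.0 when its denominator is 0.
    """
    m, n = len(x), len(x[0])
    r = [sum(row) for row in x]                             # x_{i.}: student totals
    c = [sum(x[i][j] for i in range(m)) for j in range(n)]  # x_{.j}: problem totals
    R, C = sum(r), sum(c)                                   # m * x-bar' and n * x-bar
    r_sorted = sorted(r, reverse=True)
    c_sorted = sorted(c, reverse=True)

    cs = []
    for i in range(m):
        # (2) multiplied through by n: n * x-bar = C
        num = n * sum(x[i][j] * c[j] for j in range(n)) - r[i] * C
        den = n * sum(c_sorted[:r[i]]) - r[i] * C
        cs.append(0.0 if den == 0 else 1.0 - num / den)

    cp = []
    for j in range(n):
        # (3) multiplied through by m: m * x-bar' = R
        num = m * sum(x[i][j] * r[i] for i in range(m)) - c[j] * R
        den = m * sum(r_sorted[:c[j]]) - c[j] * R
        cp.append(0.0 if den == 0 else 1.0 - num / den)
    return cs, cp


if __name__ == "__main__":
    x = [[1, 1, 1, 1], [1, 1, 1, 0], [1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1]]
    cs, cp = caution_indices(x)
    print("CS:", cs)
    print("CP:", [round(v, 3) for v in cp])
```

In the example, the first four students follow the staircase pattern and receive CS = 0. The last student misses the two most-answered problems yet answers the other two, and receives CS = 2.0, which marks the pattern as unusual.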
The GRA procedure is as follows.

2.3.1 Establishment of original vectors
Definition 2: Basic vectors in GRA
The reference vector $x_0$ and the inspected vectors of original data $x_i$ are established as follows:

$$\begin{aligned} x_0 &= (x_0(1), x_0(2), \ldots, x_0(j), \ldots, x_0(n)) \\ x_1 &= (x_1(1), x_1(2), \ldots, x_1(j), \ldots, x_1(n)) \\ x_2 &= (x_2(1), x_2(2), \ldots, x_2(j), \ldots, x_2(n)) \\ &\;\;\vdots \\ x_i &= (x_i(1), x_i(2), \ldots, x_i(j), \ldots, x_i(n)) \\ &\;\;\vdots \\ x_m &= (x_m(1), x_m(2), \ldots, x_m(j), \ldots, x_m(n)) \end{aligned} \qquad i = 1, 2, \ldots, m;\; j = 1, 2, \ldots, n;\; m, n \in \mathbb{N} \qquad (4)$$

Let $X = [x_i(j)] = [x_{ij}]_{m \times n}$ represent the original data in the S-P chart. If $S_i$ is the sequence of answer states of the $i$-th student over all questions and $P_j$ is the sequence of answer states of all students for the $j$-th question, then

$$S_i = (x_i(1), x_i(2), \ldots, x_i(j), \ldots, x_i(n)), \qquad P_j = (x_1(j), x_2(j), \ldots, x_i(j), \ldots, x_m(j)) \qquad (5)$$

The S-P chart data given in formula (5) are used in GRA.

2.3.2 Calculation of GRA
In the original formula, $x_0$ is the reference sequence of the localized grey relational grade and the $x_i$ are the inspected sequences. The established sequences must satisfy three conditions: non-dimensionality, scaling and polarization. Grey relational generation can be done in three ways: larger-the-better (the larger the value, the better), smaller-the-better (the smaller the value, the better) and nominal-the-better (the expected goal lies between the maximum and the minimum).

Definition 3: Localized grey relational grade (LGRG)
The localized grey relational grade is defined as

$$\Gamma_{0i} = \Gamma(x_0(j), x_i(j)) = \frac{\Delta_{\max} - \Delta_{0i}}{\Delta_{\max} - \Delta_{\min}} \qquad (6)$$

where $\Delta_{\max}$ and $\Delta_{\min}$ are the maximum and minimum values of $\Delta_{0i}$, respectively, and $\Delta_{0i}$ is the absolute distance between $x_0$ and $x_i$:

$$\Delta_{0i} = \lVert x_0 - x_i \rVert_{\rho} = \left( \sum_{j=1}^{n} \lvert x_0(j) - x_i(j) \rvert^{\rho} \right)^{1/\rho} \qquad (7)$$

$\Delta_{0i}$ is called the Minkowski distance. This study uses $\rho = 2$, so $\Delta_{0i}$ is the Euclidean distance. When $\Gamma_{0i}$ is close to 1, $x_0$ and $x_i$ are highly correlated. In contrast, when $\Gamma_{0i}$ is close to 0, the relationship between $x_0$ and $x_i$ is weak.
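Equations (6) and (7) translate directly into code. The sketch below uses $\rho = 2$ as in the text. The reference sequence $x_0$ is not specified here, so `gamma_values` assumes an all-zero reference purely for illustration. With that reference, and since the distances are Euclidean, a problem answered correctly by fewer students gets a larger $GP_j$. The names `minkowski`, `localized_grg` and `gamma_values` are hypothetical, as is returning 1.0 for every sequence when all distances are equal, a case in which (6) is undefined.

```python
def minkowski(u, v, rho=2.0):
    """Delta_{0i} in (7): Minkowski distance of order rho (rho = 2 is Euclidean)."""
    return sum(abs(a - b) ** rho for a, b in zip(u, v)) ** (1.0 / rho)


def localized_grg(x0, xs, rho=2.0):
    """Gamma_{0i} in (6) for every inspected sequence in xs against reference x0."""
    deltas = [minkowski(x0, xi, rho) for xi in xs]
    d_max, d_min = max(deltas), min(deltas)
    if d_max == d_min:
        # (6) is undefined when all distances coincide; this sketch returns 1.0.
        return [1.0] * len(xs)
    return [(d_max - d) / (d_max - d_min) for d in deltas]


def gamma_values(x, rho=2.0):
    """GS_i over the rows S_i and GP_j over the columns P_j of a 0/1 matrix.

    Assumption for illustration only: the reference x0 is the all-zero vector.
    """
    m, n = len(x), len(x[0])
    cols = [[x[i][j] for i in range(m)] for j in range(n)]
    gs = localized_grg([0] * n, x, rho)
    gp = localized_grg([0] * m, cols, rho)
    return gs, gp


if __name__ == "__main__":
    gs, gp = gamma_values([[1, 1, 1], [1, 1, 0], [1, 0, 0]])
    print("GS:", [round(v, 3) for v in gs])
    print("GP:", [round(v, 3) for v in gp])
```

On binary data, questions with the same number of correct answers receive identical Gamma values. The CP-based tie-break in the methodology below is needed to separate such questions.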
Grey relational ordinal: the overall decision is made by comparing the grey relational grades $\Gamma_{0i}$. Through this ordering, different causes can be identified and the most important influence can be found, which then becomes the relational standard in the system.

2.4 GSP chart and Rasch GSP
The GSP chart combines GRA with the S-P chart and was developed to overcome the weaknesses of the S-P chart. The GSP chart makes the analysis more concrete and accurate, and it also allows the uncertain factors in a study to be analyzed [18]. Its layout is shown in Table 1.

Definition 4: Gamma value
In the GSP chart, $GS_i$ is the localized grey relational grade of the $i$-th student and $GP_j$ is the localized grey relational grade of the $j$-th problem. Both are called Gamma values. Specifically, $GS$ is the Gamma value for students and $GP$ is the Gamma value for problems:

$$GS_i = \Gamma_{0i} = \frac{\Delta_{\max} - \Delta_{0i}}{\Delta_{\max} - \Delta_{\min}}, \quad i = 1, 2, \ldots, m; \qquad GP_j = \Gamma_{0j} = \frac{\Delta_{\max} - \Delta_{0j}}{\Delta_{\max} - \Delta_{\min}}, \quad j = 1, 2, \ldots, n \qquad (8)$$

Table 1: GSP chart

Nagai applied the view of the Rasch model to the GSP chart to propose the Rasch GSP method. The method uses logistic regression to analyze the relationship between two sets of data: the order values of the students (or problems) and their localized grey relational grades. The purpose is to find a function that represents the characteristics of the entire data set [11]. This function is called the Rasch GSP function, and its graph is called the Rasch GSP graph.

Definition 5: Rasch GSP function
Let

$$y = f(x) \qquad (9)$$

be the three-parameter logistic regression function, where $\alpha, \beta, \gamma \in \mathbb{R}$ are the regression coefficients. When $x$ is the order of student ability or the order of item difficulty and $y$ is the localized grey relational grade, $y = f(x)$ is called the Rasch GSP function.
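The section does not spell out the parameterization of (9). The sketch below therefore assumes the common three-parameter logistic $f(x) = \gamma / (1 + e^{-\alpha(x - \beta)})$ and fits it to (order, Gamma value) points by Levenberg-Marquardt least squares in plain Python. The functional form, the starting values and the names `rasch_gsp_curve`, `fit_rasch_gsp` and `_solve3` are all assumptions for illustration. The paper's own curves were produced with its MATLAB Program for Rasch GSP.

```python
import math


def rasch_gsp_curve(x, alpha, beta, gamma):
    """Assumed form of (9): gamma / (1 + exp(-alpha * (x - beta)))."""
    z = alpha * (x - beta)
    if z >= 0:  # numerically stable logistic
        s = 1.0 / (1.0 + math.exp(-z))
    else:
        e = math.exp(z)
        s = e / (1.0 + e)
    return gamma * s


def _solve3(a, b):
    """Solve the 3x3 system a @ p = b by Gaussian elimination with partial pivoting."""
    m = [list(a[i]) + [b[i]] for i in range(3)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for k in range(col, 4):
                m[r][k] -= f * m[col][k]
    p = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        p[r] = (m[r][3] - sum(m[r][k] * p[k] for k in range(r + 1, 3))) / m[r][r]
    return p


def fit_rasch_gsp(xs, ys, iters=200):
    """Least-squares (alpha, beta, gamma) by Levenberg-Marquardt; xs must be sorted."""
    def sse(p):
        return sum((y - rasch_gsp_curve(x, *p)) ** 2 for x, y in zip(xs, ys))

    # Starting values: plateau near max(y), midpoint at mean(x), and a slope
    # from the overall rise (a logistic's steepest slope is gamma * alpha / 4).
    g0 = max(ys)
    slope = (ys[-1] - ys[0]) / (xs[-1] - xs[0])
    p = [4.0 * slope / g0, sum(xs) / len(xs), g0]
    cur, lam = sse(p), 1e-3
    for _ in range(iters):
        alpha, beta, gamma = p
        jtj = [[0.0] * 3 for _ in range(3)]
        jtr = [0.0] * 3
        for x, y in zip(xs, ys):
            s = rasch_gsp_curve(x, alpha, beta, 1.0)
            ds = s * (1.0 - s)
            jac = [gamma * ds * (x - beta), -gamma * ds * alpha, s]  # df/d(alpha, beta, gamma)
            res = y - gamma * s
            for i in range(3):
                jtr[i] += jac[i] * res
                for k in range(3):
                    jtj[i][k] += jac[i] * jac[k]
        # Marquardt damping: scale the diagonal of J^T J by (1 + lam)
        damped = [[jtj[i][k] * (1.0 + lam if i == k else 1.0) for k in range(3)] for i in range(3)]
        try:
            step = _solve3(damped, jtr)
        except ZeroDivisionError:
            lam *= 10.0
            continue
        trial = [p[i] + step[i] for i in range(3)]
        new = sse(trial)
        if new < cur:
            p, cur, lam = trial, new, lam * 0.3
        else:
            lam *= 10.0
            if lam > 1e10:  # no further improvement possible
                break
    return p
```

For example, `fit_rasch_gsp(list(range(1, 23)), gamma_points)` would return the three assumed coefficients for 22 ordered questions. Here `gamma_points` stands for a hypothetical list of ordered Gamma values.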
If $x$ is the order of student ability and $y$ is the LGRG of students (LGRG-S), then $y = f(x)$ is called the Rasch GSP function for students. Similarly, if $x$ is the order of item difficulty and $y$ is the LGRG of problems (LGRG-P), then $y = f(x)$ is called the Rasch GSP function for problems.

2.5 Item Response Theory with BILOG-MG software
IRT has become one of the most popular scoring frameworks for measurement data. IRT models are frequently used in computerized adaptive testing, cognitively diagnostic assessment and test equating. One of the programs that serves this purpose is BILOG-MG, which has proven particularly useful and reliable in many applications over recent decades. To be fitted with IRT models estimated in BILOG-MG, experimental data have to satisfy three assumptions: local independence, monotonicity and unidimensionality [19]. When BILOG-MG estimates IRT model parameters, the degree of bias and the estimation error depend on factors such as the number of parameters, the number of examinees and the test length. As a general guideline, for tests with between 15 and 50 items, at least about 250 examinees are required for the one-parameter logistic model, at least about 500 for the two-parameter logistic model, and possibly as many as 1,000 for the three-parameter logistic model [20].

3. METHODOLOGY
In this paper, three terms are used with the same meaning: problem = question = item. This study applies the Rasch GSP method in a new way to make it effective for assessing academic achievement. The process is presented in the diagram in Figure 2.
Figure 2: Diagram of the new application of the Rasch GSP method (Raw data → Analyze data by GSP chart → Rearrange data by CS, CP → Apply regression method using the Rasch GSP curve → Determine the final Gamma values)

The main purpose of the study is to evaluate the difficulty of questions, so the data are processed by the following algorithm.

Algorithm for processing data to evaluate the difficulty of the questions in a test:
Step 1: Calculate and arrange the data with GSP. The original data are processed and arranged according to the rules of the GSP chart, and the results are presented in the format of Table 1 (Section 2.4).
Step 2: Order the data by Gamma value. The questions are arranged in ascending order of their Gamma values (GP).
Step 3: Break ties by the caution index for problems (CP). Questions with the same Gamma value are rearranged in descending order of CP.
Step 4: Apply logistic regression to the Gamma values arranged in the steps above. The Rasch GSP method is applied, and the Rasch GSP curve is plotted for the entire data set.
Step 5: Assign new Gamma values to all the questions. Each question corresponds to a point on the Rasch GSP curve, and the y-coordinate of that point is the question's new Gamma value. The difficulty of each question is given by its new Gamma value.

4. EXPERIMENT, RESULTS, AND DISCUSSION
4.1 Experimental design
The study performed two experiments to test how well the Rasch GSP method assesses question difficulty. Experiment 1 administered a 22-question math test to 37 students in Taichung, Taiwan, and the test had a Cronbach's alpha of 0.884. The data were analyzed with the MATLAB toolbox for Rasch GSP using the method described above, and the results were compared with results based on Classical Test Theory. To demonstrate the strengths of the Rasch GSP method, the research then conducted an experiment with a large data set. Experiment 2 administered a 46-question English test to 1,119 students, also in Taichung, and this test also had a high Cronbach's alpha of 0.833. The data satisfied the assumptions of IRT and the requirements for estimating IRT model parameters in BILOG-MG. The data were analyzed both with the Rasch GSP method and with the BILOG-MG 3 software, and the two sets of results were compared.

4.2 Results
The result of the Rasch GSP analysis in experiment 1 (Figure 3) is the Rasch GSP curve for problems described in Step 4 of the methodology.

Figure 3: Rasch GSP curve for problems in experiment 1

To obtain the difficulty of each question, a coordinate method was applied. The question difficulties were computed and exported by a program written in MATLAB (Program for Rasch GSP) and are presented in ascending order in Table 2. This result was compared with the result calculated by Classical Test Theory (Table 3). The questions highlighted in the two tables are those whose difficulties occupy the same position in the ordering under both methods.
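Steps 2, 3 and 5 of the algorithm amount to a two-key sort followed by reading values off a curve. The sketch below assumes that the GP and CP values from Step 1 are already available. Step 4's fitted Rasch GSP function is passed in as an arbitrary callable, so the regression itself is left out. The names `order_questions`, `regression_points` and `new_gamma_values` are hypothetical.

```python
def order_questions(gp, cp):
    """Steps 2-3: question indices by GP ascending, ties broken by CP descending."""
    return sorted(range(len(gp)), key=lambda j: (gp[j], -cp[j]))


def regression_points(order, gp):
    """Input for Step 4: (position, Gamma value) pairs, positions starting at 1."""
    return [(k, gp[j]) for k, j in enumerate(order, start=1)]


def new_gamma_values(order, curve):
    """Step 5: the question at position k receives curve(k) as its new Gamma value.

    `curve` stands in for the fitted Rasch GSP function (any callable here).
    """
    return {j: curve(k) for k, j in enumerate(order, start=1)}


if __name__ == "__main__":
    gp = [0.5, 0.2, 0.5, 0.9]   # questions 0 and 2 are tied on GP
    cp = [0.1, 0.0, 0.4, 0.2]   # question 2 has the larger CP, so it comes first
    order = order_questions(gp, cp)
    print(order)                                   # [1, 2, 0, 3]
    print(regression_points(order, gp))
    print(new_gamma_values(order, lambda k: k / 10))
```

Because every position maps to a distinct point on a strictly increasing curve, questions that were tied on GP end up with distinct new Gamma values. This is how Table 2 separates questions that share a proportion of correct answers.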
Table 2: Evaluation result for question difficulty by the proposed method in experiment 1

| Question | 9 | 11 | 8 | 1 | 6 | 19 | 2 | 5 | 21 | 7 | 16 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Difficulty | 0.153 | 0.176 | 0.201 | 0.229 | 0.261 | 0.295 | 0.331 | 0.370 | 0.412 | 0.445 | 0.449 |
| Question | 10 | 15 | 14 | 12 | 17 | 4 | 20 | 18 | 13 | 22 | 3 |
| Difficulty | 0.544 | 0.589 | 0.634 | 0.677 | 0.719 | 0.759 | 0.796 | 0.831 | 0.863 | 0.892 | 0.919 |

Table 3: Evaluation result for question difficulty by Classical Test Theory in experiment 1

| Question | 9 | 11 | 8 | 1 | 6 | 19 | 2 | 5 | 21 | 7 | 16 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| $D_j$ | 0.865 | 0.838 | 0.784 | 0.757 | 0.757 | 0.730 | 0.730 | 0.730 | 0.676 | 0.649 | 0.649 |
| Question | 10 | 15 | 14 | 12 | 17 | 4 | 20 | 18 | 13 | 22 | 3 |
| $D_j$ | 0.622 | 0.622 | 0.595 | 0.568 | 0.541 | 0.541 | 0.514 | 0.486 | 0.459 | 0.405 | 0.351 |

In Table 3, the difficulty index $D_j$ of the $j$-th question is calculated as

$$D_j = \frac{n_j}{N}$$

where $n_j$ is the number of students who answered the $j$-th question correctly and $N$ is the number of students taking the test. The larger $D_j$ is, the easier the question, so the questions are arranged in descending order of $D_j$.

In experiment 2, Rasch GSP analysis was applied (Figure 4). The question difficulties were output by the Program for Rasch GSP and arranged in ascending order (Table 4).

Figure 4: Rasch GSP curve for problems in experiment 2

Figure 5: Evaluation results for questions processed by BILOG-MG 3 in experiment 2

The difficulty estimates produced by BILOG-MG 3 are presented in Figure 5, in which a and b are the two item parameters estimated by Item Response Theory.
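The ties in Table 3 follow directly from $D_j = n_j / N$. With $N = 37$, questions 1 and 6 both have $D_j = 0.757$, which means 28 correct answers each. The short sketch below computes $D_j$ for a 0/1 response matrix and lists the groups of questions that $D_j$ alone cannot order. The helper names `classical_difficulty` and `tied_groups` are hypothetical.

```python
from collections import defaultdict


def classical_difficulty(x):
    """D_j = n_j / N for each question j of a 0/1 matrix (rows = students)."""
    N = len(x)
    return [sum(row[j] for row in x) / N for j in range(len(x[0]))]


def tied_groups(d):
    """Groups of question indices that share the same D_j value."""
    groups = defaultdict(list)
    for j, v in enumerate(d):
        groups[v].append(j)
    return [g for g in groups.values() if len(g) > 1]


if __name__ == "__main__":
    x = [[1, 1, 0], [1, 0, 0], [0, 1, 1], [1, 1, 0]]
    d = classical_difficulty(x)
    print(d)               # [0.75, 0.75, 0.25]
    print(tied_groups(d))  # [[0, 1]]
```

Equal counts $n_j$ always produce identical floating-point values of $n_j / N$, so grouping by the value is safe here.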
The question difficulties, represented by the parameter b, were also arranged in ascending order (Table 5) for comparison with the results of the proposed method. Question number 1 corresponds to item 01, question number 2 to item 02, and so on.

Table 4: Evaluation results for question difficulty by the proposed method in experiment 2

| Question | 5 | 22 | 28 | 36 | 20 | 2 | 9 | 16 | 29 | 19 | 17 | 31 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Difficulty | 0.156 | 0.165 | 0.174 | 0.184 | 0.194 | 0.205 | 0.216 | 0.227 | 0.239 | 0.252 | 0.265 | 0.278 |
| Question | 6 | 24 | 23 | 21 | 18 | 33 | 25 | 40 | 30 | 41 | 26 | 39 |
| Difficulty | 0.292 | 0.307 | 0.322 | 0.338 | 0.354 | 0.370 | 0.388 | 0.405 | 0.423 | 0.442 | 0.461 | 0.480 |
| Question | 42 | 4 | 34 | 35 | 44 | 1 | 45 | 43 | 15 | 32 | 13 | 14 |
| Difficulty | 0.499 | 0.520 | 0.540 | 0.561 | 0.581 | 0.603 | 0.624 | 0.645 | 0.667 | 0.688 | 0.710 | 0.731 |
| Question | 10 | 3 | 27 | 38 | 12 | 7 | 11 | 46 | 37 | 8 | | |
| Difficulty | 0.753 | 0.774 | 0.796 | 0.817 | 0.837 | 0.858 | 0.878 | 0.898 | 0.918 | 0.937 | | |

Table 5: Evaluation results for question difficulty from BILOG-MG 3 in experiment 2

| Question | 5 | 22 | 28 | 36 | 20 | 2 | 9 | 16 | 29 | 19 | 17 | 31 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Difficulty (b) | -4.141 | -3.99 | -2.934 | -2.896 | -2.813 | -2.647 | -2.626 | -2.576 | -2.536 | -2.243 | -1.992 | -1.971 |
| Question | 6 | 24 | 23 | 21 | 18 | 33 | 25 | 40 | 30 | 41 | 26 | 39 |
| Difficulty (b) | -1.936 | -1.882 | -1.796 | -1.758 | -1.665 | -1.641 | -1.558 | -1.305 | -1.305 | -1.264 | -1.088 | -0.902 |
| Question | 42 | 4 | 34 | 35 | 44 | 1 | 45 | 43 | 15 | 32 | 13 | 14 |
| Difficulty (b) | -0.879 | -0.838 | -0.793 | -0.792 | -0.729 | -0.668 | -0.663 | -0.628 | -0.615 | -0.468 | -0.186 | -0.157 |
| Question | 10 | 3 | 27 | 38 | 12 | 7 | 11 | 46 | 37 | 8 | | |
| Difficulty (b) | 0.113 | 0.130 | 0.201 | 0.247 | 0.310 | 0.784 | 1.427 | 1.450 | 1.782 | 2.070 | | |

4.3 Discussion
The proposed method evaluated question difficulty in both tests and assigned a difficulty value to each question.
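The paper describes the two experiment 2 orderings as completely similar but does not report a rank statistic. As an illustration only, the sketch below computes Spearman's rank correlation between the Table 4 and Table 5 values, using average ranks for ties. The function names are hypothetical. The data are transcribed from the two tables, which list the questions in the same column order.

```python
def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman_rho(a, b):
    """Spearman's rank correlation as the Pearson correlation of average ranks."""
    ra, rb = average_ranks(a), average_ranks(b)
    n = len(ra)
    ma, mb = sum(ra) / n, sum(rb) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(ra, rb))
    var_a = sum((x - ma) ** 2 for x in ra)
    var_b = sum((y - mb) ** 2 for y in rb)
    return cov / (var_a * var_b) ** 0.5


# Experiment 2: question numbers in the column order shared by Tables 4 and 5.
questions = [5, 22, 28, 36, 20, 2, 9, 16, 29, 19, 17, 31,
             6, 24, 23, 21, 18, 33, 25, 40, 30, 41, 26, 39,
             42, 4, 34, 35, 44, 1, 45, 43, 15, 32, 13, 14,
             10, 3, 27, 38, 12, 7, 11, 46, 37, 8]
rasch_gsp = [0.156, 0.165, 0.174, 0.184, 0.194, 0.205, 0.216, 0.227, 0.239, 0.252, 0.265, 0.278,
             0.292, 0.307, 0.322, 0.338, 0.354, 0.370, 0.388, 0.405, 0.423, 0.442, 0.461, 0.480,
             0.499, 0.520, 0.540, 0.561, 0.581, 0.603, 0.624, 0.645, 0.667, 0.688, 0.710, 0.731,
             0.753, 0.774, 0.796, 0.817, 0.837, 0.858, 0.878, 0.898, 0.918, 0.937]
bilog_b = [-4.141, -3.99, -2.934, -2.896, -2.813, -2.647, -2.626, -2.576, -2.536, -2.243, -1.992, -1.971,
           -1.936, -1.882, -1.796, -1.758, -1.665, -1.641, -1.558, -1.305, -1.305, -1.264, -1.088, -0.902,
           -0.879, -0.838, -0.793, -0.792, -0.729, -0.668, -0.663, -0.628, -0.615, -0.468, -0.186, -0.157,
           0.113, 0.130, 0.201, 0.247, 0.310, 0.784, 1.427, 1.450, 1.782, 2.070]

rho = spearman_rho(rasch_gsp, bilog_b)
print(f"Spearman rho = {rho:.5f}")
```

Questions 40 and 30 share b = -1.305 in Table 5, whereas the proposed method separates them. For this reason ρ comes out at about 0.99997 rather than exactly 1.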
In experiment 1, with a small sample, the orderings of question difficulty produced by the proposed method and by Classical Test Theory agreed at the highlighted positions, covering 11 questions (50%). At these positions the questions' difficulties are all distinct, so both methods arrange them in a logical order. At the positions that are not highlighted, the proposed method clearly distinguishes the difficulties of the questions, so it also arranges them in a logical order. Classical Test Theory, by contrast, does not order these questions clearly: questions 1 and 6; questions 19, 2 and 5; questions 7 and 16; questions 10 and 15; and questions 17 and 4 all have identical difficulty values within their groups. This weakness arises because Classical Test Theory applies no priority criterion. Questions with the same proportion of correct answers always receive the same difficulty, even though the abilities of the students who answered them differ. This shows the advantage of the proposed method, which also takes the quality and reliability of the questions into account. In experiment 2, with a large sample, the two methods ordered the questions by difficulty in exactly the same way. This supports the rationality and reliability of the proposed method and shows that it can handle large samples. The main difference is that the estimated difficulty distances between corresponding pairs of questions are not the same under the two methods, because the two methods use different difficulty scales. This is also a limitation of the proposed method.

5. CONCLUSION
Starting from the assumption that an educational assessment method could be built that applies to both small and large samples, the present study proposed a new evaluation method based on a new application of Rasch GSP for evaluating question difficulty. The contributions of this study are as follows:
1. The study developed an assessment method that not only works well for small samples but also handles large samples.
2. The evaluation results of the proposed method were compared with those of previous methods; the agreement is relatively high and shows the advantages of the new method.
3. The proposed method is simple, easy to understand and easy to apply, and its results are accurate and objective.
In summary, with these features, the new application of Rasch GSP makes it more convenient for teachers and education managers to perform evaluation in the teaching process, leaving them more time to construct better multiple-choice questions.

References
[1] L. A. Shepard, "The role of classroom assessment in teaching and learning," 2000.
[2] D. Pulakos, "Selection assessment methods," SHRM Foundation's Effective Practice Guidelines, Society for Human Resource Management, Alexandria, VA, p. 55, 2005.
[3] M. N. Yu, Educational Testing and Assessment, 3rd ed. Taiwan: Psychology Publisher, 2011.
[4] K. L. Wen, C. Chao, H. Chang, S. Chen, and H. Wen, Grey System Theory and Applications. Taipei: Wu-Nan Book Inc., 2009.
[5] T. W. Sheu, C. P. Tsai, J. W. Tzeng, D. H. Pham, H. J. Chiang, C. L. Chang, et al., "An Improved Teaching Strategies Proposal Based on Students' Learning Misconceptions," International Journal of Kansei Information, vol. 4, pp. 1-12, 2013.
[6] Karabatsos, "The Rasch model, additive conjoint measurement, and new models of probabilistic measurement theory," Journal of Applied Measurement, vol. 2, pp. 389-423, 2001.
[7] R. K. Hambleton, H. Swaminathan, and H. J. Rogers, Fundamentals of Item Response Theory. Sage, 1991.
[8] M. Du Toit, IRT from SSI: BILOG-MG, MULTILOG, PARSCALE, TESTFACT. Scientific Software International, 2003.
[9] T. W. Sheu, J. W. Tzeng, J. C. Liang, B. T. Wang, and M. Nagai, "The Use of Rasch Model GSP Chart and Grey Structural Model Analysis for Vocational Education and Training Courses: Taking Enterprise Ethics and Professional Ethics Courses as an Example," Journal of Educational Research and Development, vol. 8, no. 4, pp. 53-80, 2012.
[10] J. W. Tzeng, T. W. Sheu, J. C. Liang, B. T. Wang, and M. Nagai, "A new proposal based on Rasch model GSP chart and grey structural model with analysis and discussion," International Journal of Advancements in Computing Technology, pp. 111-121, 2012.
[11] J. W. Tzeng, T. W. Sheu, J. C. Liang, B. T. Wang, and M. Nagai, "A New Proposal Based on Rasch Model GSP Chart and Grey Structural Model with Analysis and Discussion," International Journal of Advancements in Computing Technology, vol. 4, pp. 111-121, 2012.
[12] G. Rasch, Probabilistic Models for Some Intelligence and Attainment Tests. Chicago: University of Chicago Press, 1960.
[13] W. C. Wang, "Rasch Measurement Theory and Application in Education and Psychology," Journal of Education & Psychology, vol. 27, pp. 637-694, 2004.
[14] B. Baker, The Basics of Item Response Theory, 2nd ed. United States of America: ERIC Clearinghouse on Assessment and Evaluation, 2001.
[15] Y. C. Ho, "An Experimental Study of the Effects of Mastery Learning Combined with the Diagnosis of Microcomputerized S-P Chart Analysis on Students' Learning," Educational Psychology, vol. 22, pp. 191-214, 1989.
[16] S. C. You and M. N. Yu, "The Relationships among Indices of Diagnostic Assessments Knowledge Structures and S-P Chart Analysis," Education and Psychology, vol. 29, pp. 183-208, 2006.
[17] Yamaguchi, G.-D. Li, and M. Nagai, "Verification of effectiveness for grey relational analysis models," Journal of Grey System, vol. 10, pp. 169-181, 2007.
[18] T. W. Sheu, T. L. Chen, J. W. Tzeng, C. P. Tsai, H. J. Chiang, C. L. Chang, et al., "Applying Misconception Domain and Structural Analysis to Explore the Effects of the Remedial Teaching," Journal of Grey System, vol. 16, pp. 17-34, 2013.
[19] A. Rupp, "Item response modeling with BILOG-MG and MULTILOG for Windows," International Journal of Testing, vol. 3, pp. 365-384, 2003.
[20] L. Hulin, R. I. Lissak, and F. Drasgow, "Recovery of two- and three-parameter logistic item characteristic curves: A Monte Carlo study," Applied Psychological Measurement, vol. 6, pp. 249-260, 1982.
Appendices

Table 6: Response of students in experiment 1

Student codes: 11201 11202 11203 11204 11205 11206 11207 11208 11209 11210 11211 11212 11213 11214 11215 11216 11217 11218 11219 11220 11221 11222 11223 11224 11225 11226 11227 11228 11229 11230 11231 11232 11233 11234 11235 11236 11237

Students' responses (1 = correct, 0 = wrong): 1 1 1 0 1 1 1 1 1 1 1 0 0 1 1 0 1 1 0 1 1 1 0 1 1 0 1 1 1 1 1 0 1 0 1 1 1 1 1 1 0 0 1 1 1 1 1 0 0 1 1 0 1 1 1 0 1 0 1 1 1 1 0 1 0 1 1 1 1 1 0 1 1 1 0 1 1 0 1 1 1 1 1 0 1 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 0 0 0 1 0 1 1 1 1 0 0 0 1 0 0 1 1 1 1 0 0 0 1 0 0 0 1 1 0 0 1 0 1 1 1 1 0 0 1 0 1 1 1 0 1 1 0 1 0 1 0 1 1 0 1 1 1 0 1 1 1 0 0 1 1 1 1 1 1 1 0 1 1 1 1 1 0 1 1 1 1 1 1 0 0 1 1 1 1 1 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 0 1 1 0 0 1 0 1 1 1 1 1 0 0 0 1 1 0 1 1 1 0 0 1 1 1 1 1 0 0 1 1 0 1 1 1 1 1 0 1 0 0 1 0 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 0 1 1 1 1 1 0 1 1 1 1 1 0 0 0 1 1 1 0 1 1 0 1 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 1 1 0 1 1 1 0 1 1 1 1 1 1 0 1 1 1 0 1 0 0 1 1 1 0 0 1 0 0 0 1 0 1 1 1 1 0 1 1 0 1 1 0 1 1 0 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 0 1 1 1 0 1 1 1 1 1 1 0 1 1 1 0 1 1 1 1 0 1 1 1 1 1 0 1 1 1 0 1 1 0 0 1 1 0 1 1 0 0 0 1 1 0 1 1 0 1 1 1 0 1 1 0 0 0 0 1 0 1 1 0 1 1 1 1 1 0 0 0 1 1 0 1 0 0 1 0 1 0 1 1 1 0 1 1 0 0 1 1 0 0 0 0 1 0 0 1 0 0 1 0 0 1 0 0 0 1 1 0 1 1 1 1 1 1 0 1 1 1 0 0 1 1 0 1 1 0 0 0 0 1 1 1 0 0 1 1 1 1 1 1 0 0 1 1 1 0 1 0 0 1 1 0 1 0 1 1 1 1 1 0 1 0 0 1 0 1 1 0 1 0 0 1 1 1 0 1 0 0 0 1 1 1 0 1 0 0 0 1 1 0 0 1 0 1 1 1 0 1 1 1 1 1 1 1 1 1 1 0 1 1 1 1 1 1 0 0 1 1 1 0 0 0 0 0 1 0 0 1 1 0 1 0 1 0 1 1 1 1 0 1 1 0 0 0 0 1 1 1 0 1 0 1 0 1 1 0 0 0 0 0 0 1 0 1 0 1 0 1 1 0 1 1 1 0 0 0 0 1 1 0 1 0 1 1 1 1 1 0 0 1 1 1 0 1 1 1 0 0 1 0 1 1 1 0 1 1 1 0 1 1 1 1 1 1 1 1 0 1 0 1 1 1 0 1 0 0 0 1 1 0 0 1 0 0 0 0 1 1 1 1 1 0 0 0 1 1 1 0 0 1 0 0 1 1 1 0 1 1 1 1 1 0 0 0 1 1 1 1 1 0 1 1 1 1 1 0 1 0 1 1 1 1 1 0 1 0 1 0 1 1 0 0 0 1 1 1 0 1 0 0 1 1 1 0 0 0 0 1 1 0 0 1 1 0 0 0 1 0 0 0 0 0 1 1 1 0 0 0 0 0 1 1 1

Number of correct answers (in student-code order): 13 22 10 4 9 20 22 10 14 17 9 8 11 18 10 16 17 19 4 14 18 17 7 19 17 8 13 11 13 20 13 11 16 0 21 21 21

AUTHORS
Tian-Wei Sheu received the Ph.D. degree in Mathematics from National Osaka University, Japan, in 1990. He is the Dean of the College of Education and a professor at the Graduate Institute of Educational Measurement, National Taichung University, Taichung, Taiwan. His studies focus on IRT, educational measurement and e-learning, among other areas. He is the director of TKIA (Taiwan Kansei Information Association).

Phung-Tuyen Nguyen is currently a Ph.D. candidate in the Graduate Institute of Educational Measurement and Statistics, National Taichung University, Taiwan. He received a Master's degree in Physics in 2003 from Hanoi University of Education, Vietnam. His research interests focus on item response theory, grey system theory and educational measurement.

Duc-Hieu Pham received a Master's degree in Education from Hanoi Pedagogical University No. 2, Vietnam, in 2009. He works as a lecturer in the Primary Education Faculty of Hanoi Pedagogical University No. 2, Vietnam. He is currently a Ph.D. candidate in the Graduate Institute of Educational Measurement and Statistics, National Taichung University, Taiwan. His research interests include grey system theory, educational measurement and primary education.

Phuoc-Hai Nguyen received a Master's degree in Biology from Hanoi University of Education, Vietnam, in 2006. He is currently a Ph.D. candidate in the Graduate Institute of Educational Measurement and Statistics, National Taichung University, Taiwan.
His research interests include biology, item response theory, grey system theory, ordering theory and educational measurement.

Masatake Nagai received his Master's degree in Engineering from Toukai University, Japan, in 1969. He worked at Oki Electric Industry Co., Ltd. for 18 years, mainly on the design and development of ME systems, communication network systems and OA systems. He was also a researcher (Dr. Matsuo research) at Tohoku University while working toward his Ph.D. in Engineering. From 1989, he worked in the Department of Science and Engineering at Teikyo University, first as an assistant professor and eventually as a professor of engineering. He is now a chair professor at the Graduate Institute of Educational Measurement, National Taichung University, Taiwan. His research interests include approximation, strategy system engineering, information communication network technology, agents, kansei information processing, grey system theory and engineering applications. He is a regular member of IEICE.