Taylor Polynomials with Error Term

The functions $e^x$, $\sin x$, $1/\sqrt{1-x}$, etc. are nice functions, but they are not as nice as polynomials.

Specifically polynomials can be evaluated completely based on multiplication, subtraction and

addition (realizing that integer powers are just multiple multiplications). Thus when you build

your computer, if you teach it how to multiply, subtract and add, your computer can also evaluate

polynomials. These other functions are not that simple (even division creates many problems). The

way that computers evaluate the more complex functions is to approximate them by polynomials.
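As a small illustration of the claim that a polynomial needs nothing beyond multiplication and addition, here is a minimal sketch of nested (Horner) evaluation. The function name `horner` and the sample coefficients are assumptions made for this example; they are not part of the notes.

```python
def horner(coeffs, x):
    """Evaluate c0 + c1*x + ... + cn*x**n using only + and *.

    coeffs lists the coefficients from the constant term upward.
    """
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result


# 2 - x + 3x^2 evaluated at x = 2 gives 2 - 2 + 12 = 12.
value = horner([2, -1, 3], 2.0)
```

Each pass through the loop costs one multiplication and one addition, so a degree $n$ polynomial takes $n$ of each.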

There are many other applications where it is useful to have a polynomial approximation to

a function. Generally, polynomials are just easier to use. In this section we will show you how

to obtain a polynomial approximation of a function. The approximation will include the error

term—extremely important since we must know that our approximation is a sufficiently good

approximation—how good depends on our application. The main tool that we will use is integration by parts. Specifically, we will use integration by parts in the form

$$\int_a^b F'G\,dt = [FG]_a^b - \int_a^b FG'\,dt.$$

If you are not particularly familiar with this form of integration by parts, you should review exactly why this form is the same as the $\int u\,dv$ form of integration by parts.

We consider the function $f$ and desire to find a polynomial approximation of $f$ near $x = 0$. We begin by noting that $\int_0^x f'(t)\,dt = f(x) - f(0)$, or

$$f(x) = f(0) + \int_0^x f'(t)\,dt. \tag{1}$$

If we write expression (1) as $f(x) = T_0(x) + R_0(x)$ where $T_0(x) = f(0)$ and $R_0(x) = \int_0^x f'(t)\,dt$, then $T_0$ would be referred to as the zero order Taylor polynomial of the function $f$ about $x = 0$ and $R_0$ would be the zero order error term. This is of course the trivial case, and generally $T_0$ would not be a very good approximation of $f$.

We obtain the next order of approximation by integrating $\int_0^x f'(t)\,dt$ by parts. We let $G = f'$, $F' = 1$. Then $G' = f''$ and $F = t - x$. You should note the last step carefully. The dummy variable in the integral $\int_0^x f'(t)\,dt$ is $t$. Hence, if you were to integrate by parts without being especially clever (or even sneaky), you would say that $F = t$. However, there is no special reason that you could not use $F = t + 1$ or $F = t + \pi$ instead. The only requirement is that the derivative of $F$ must be 1. Since the integration (and hence the differentiation) is with respect to $t$, $x$ is a constant with respect to this operation (no different from 1 or $\pi$), and since we want it, it is perfectly OK to set $F = t - x$. Then application of integration by parts gives

$$\begin{aligned}
\int_0^x f'(t)\,dt &= \int_0^x 1\cdot f'(t)\,dt = \int_0^x F'G\,dt = [FG]_0^x - \int_0^x FG'\,dt \\
&= \Big[(t-x)\,f'(t)\Big]_{t=0}^{t=x} - \int_{t=0}^{t=x} (t-x)\,f''(t)\,dt \\
&= 0 - (-x)\,f'(0) - \int_0^x (t-x)\,f''(t)\,dt = x f'(0) - \int_0^x (t-x)\,f''(t)\,dt.
\end{aligned}$$

If we plug this result into (1), we get

$$f(x) = f(0) + x f'(0) - \int_0^x (t-x)\,f''(t)\,dt. \tag{2}$$

Expression (2) can be written as $f(x) = T_1(x) + R_1(x)$ where $T_1(x) = f(0) + x f'(0)$ is the first order Taylor polynomial of $f$ at $x = 0$ and $R_1(x) = -\int_0^x (t-x)\,f''(t)\,dt$ is the first order error term. At this time it is not clear that $T_1$ is a better approximation of $f$ than $T_0$. We must be patient.

Note: In the computation above we used the notation t = x and t = 0 on the integral and the

evaluation bracket. This was done to try to emphasize the fact that the variable being substituted

and the dummy variable in the integral is t.

We continue by again integrating the integral in expression (2) by parts. We let $G = f''$, $F' = (t - x)$, get $G' = f'''$ and $F = \frac{1}{2}(t-x)^2$, and eventually get

$$f(x) = f(0) + x f'(0) + \frac{1}{2}x^2 f''(0) + \frac{1}{2}\int_0^x (t-x)^2 f'''(t)\,dt. \tag{3}$$

And it should not be surprising that we write this as $f(x) = T_2(x) + R_2(x)$ where $T_2$ and $R_2$ are the second order Taylor polynomial of $f$ at $x = 0$ and error term, respectively.

By now it is possible to start seeing how and why $T_2$ approximates $f$. The approximation is going to be a good approximation (in this case because 2 is still a small number, a relatively good approximation) for values of $x$ near zero, i.e. for small values of $x$. If $x$ is small and $t$ is between $0$ and $x$, $t - x$ will be small. And $(t-x)^2$ will be smaller yet. Thus if $f'''$ is small, or at least bounded, then for small values of $x$, $R_2(x)$ will be small and $f(x) \cong T_2(x)$, where the approximation is only as good as $R_2(x)$ is small.

Homework 1:

Verify expression (3).

Homework 2: By integrating the integral in (3) by parts, derive the expression f (x) = T3 (x) +

R3 (x) with the appropriate definitions of T3 and R3 .

After you do HW2 we say etc. (or, if we want to be more rigorous, we use mathematical induction) and obtain (remember that $j! = 1\cdot 2\cdots j$ and that $f^{(n)}$ denotes the $n$-th derivative of $f$, i.e. $f$ followed by $n$ primes):

$$f(x) = \sum_{j=0}^{n} \frac{1}{j!}\,x^j f^{(j)}(0) + (-1)^n \frac{1}{n!}\int_0^x (t-x)^n f^{(n+1)}(t)\,dt = T_n(x) + R_n(x) \tag{4}$$

where $T_n$ is the $n$-th order Taylor polynomial of $f$ at $x = 0$ and $R_n$ is the $n$-th order error term, i.e.

$$T_n(x) = \sum_{j=0}^{n} \frac{1}{j!}\,x^j f^{(j)}(0) \tag{5}$$

$$R_n(x) = (-1)^n \frac{1}{n!}\int_0^x (t-x)^n f^{(n+1)}(t)\,dt \tag{6}$$
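The decomposition $f(x) = T_n(x) + R_n(x)$ can be checked numerically. The sketch below is only an illustration: it assumes the test function $f(x) = e^x$ (chosen because every derivative is again $e^x$), approximates the integral in the error term with a composite Simpson rule, and uses the hypothetical helper names `taylor_poly`, `simpson` and `remainder`.

```python
import math


def taylor_poly(derivs_at_0, x):
    """T_n(x) = sum_j x**j * f^(j)(0) / j!, given [f(0), f'(0), ..., f^(n)(0)]."""
    return sum(d * x**j / math.factorial(j) for j, d in enumerate(derivs_at_0))


def simpson(g, a, b, panels=2000):
    """Composite Simpson rule for the integral of g from a to b (panels even)."""
    h = (b - a) / panels
    total = g(a) + g(b)
    for i in range(1, panels):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


def remainder(next_deriv, n, x):
    """R_n(x) = (-1)**n / n! * integral from 0 to x of (t - x)**n * f^(n+1)(t) dt."""
    integral = simpson(lambda t: (t - x) ** n * next_deriv(t), 0.0, x)
    return (-1) ** n * integral / math.factorial(n)


# Test function f(x) = e^x: every derivative is e^x, so f^(j)(0) = 1.
n, x = 3, 0.8
approx = taylor_poly([1.0] * (n + 1), x) + remainder(math.exp, n, x)
gap = abs(approx - math.exp(x))  # should be at the level of rounding error
```

The same helpers work for negative $x$, since Simpson's rule handles an interval traversed from right to left by using a negative step.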

You might have noticed that every time we said that something was the Taylor polynomial, we

added the expression “of f at x = 0” or “of f about x = 0”. The reason that we keep telling you

that it is the Taylor polynomial at x = 0 is because we are expanding the function about x = 0 (that

is why it depends on f and the derivatives of f evaluated at x = 0). In the next section we will

expand functions about points other than zero.

We now consider the following example.

Example 1.1 Consider the function f (x) = sin x on the interval [−π, π]. Compute the first, third,

fourth and sixth order Taylor polynomial approximations of f. Plot f and the four polynomials on

the same graph.

Looking at equation (5), we realize that we need $f$ and a bunch of its derivatives evaluated at $x = 0$. To accomplish this, we construct the following table.

| $n$ | $f^{(n)}(x)$ | $f^{(n)}(0)$ |
|---|---|---|
| 0 | $\sin x$ | $0$ |
| 1 | $\cos x$ | $1$ |
| 2 | $-\sin x$ | $0$ |
| 3 | $-\cos x$ | $-1$ |
| 4 | $\sin x$ | $0$ |
| 5 | $\cos x$ | $1$ |
| 6 | $-\sin x$ | $0$ |
| 7 | $-\cos x$ | $-1$ |

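Using equation (5), it is then easy to see that

$$\begin{aligned}
T_1(x) &= 0 + \frac{1}{1!}x = x \\
T_2(x) &= 0 + \frac{1}{1!}x + \frac{1}{2!}\cdot 0 = x \\
T_3(x) &= 0 + \frac{1}{1!}x + \frac{1}{2!}\cdot 0 - \frac{1}{3!}x^3 = x - \frac{1}{6}x^3 \\
T_4(x) &= 0 + \frac{1}{1!}x + \frac{1}{2!}\cdot 0 - \frac{1}{3!}x^3 + \frac{1}{4!}\cdot 0 = x - \frac{1}{6}x^3 \\
T_6(x) &= 0 + \frac{1}{1!}x + \frac{1}{2!}\cdot 0 - \frac{1}{3!}x^3 + \frac{1}{4!}\cdot 0 + \frac{1}{5!}x^5 + \frac{1}{6!}\cdot 0 = x - \frac{1}{6}x^3 + \frac{1}{120}x^5
\end{aligned}$$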
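The coefficients above can be reproduced with exact rational arithmetic. This is a minimal sketch that assumes only the four-step cycle of derivative values read off the table; the names `SIN_DERIVS_AT_0` and `sin_taylor_coeffs` are made up for the example.

```python
from fractions import Fraction
from math import factorial

# Derivatives of sin at 0 repeat with period four: 0, 1, 0, -1 (see the table).
SIN_DERIVS_AT_0 = [0, 1, 0, -1]


def sin_taylor_coeffs(n):
    """Exact coefficients f^(j)(0)/j! of T_n for f = sin, for j = 0..n."""
    return [Fraction(SIN_DERIVS_AT_0[j % 4], factorial(j)) for j in range(n + 1)]


t6 = sin_taylor_coeffs(6)
# t6 == [0, 1, 0, -1/6, 0, 1/120, 0]
```

Because the coefficient of $x^2$ is zero, the list for $T_2$ is the list for $T_1$ with a zero appended, which is why the two polynomials coincide.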

Just for the fun of it (since we accidentally included the 7th derivative and haven't used it for anything), we can use equation (6) to see that

$$R_6(x) = (-1)^6 \frac{1}{6!}\int_0^x (t-x)^6 f^{(7)}(t)\,dt = -\frac{1}{720}\int_0^x (t-x)^6 \cos t\,dt. \tag{7}$$

Note 1: We notice that even coefficients (coefficients of even powers of x) are all zero. Only odd

powers of x are included in the expansion. We want to emphasize that this is not always the case.

This happens in this case because the sine function is an odd function so the Taylor polynomial

approximating the sine function will also be an odd function—and the way that a Taylor polynomial

is an odd function is by containing only odd powers of x. The reason that this happens is whenever

a function is an odd function about zero, then all of the even derivatives evaluated at zero will be

zero. Can you prove that?

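Note 2: (Hint: This note is not easy, but it may be useful.) If we consider the expression for $R_6$ given in (7), we see that for $x \ge 0$

$$\begin{aligned}
|R_6(x)| &= \frac{1}{720}\left|\int_0^x (t-x)^6 \cos t\,dt\right| \le \frac{1}{720}\int_0^x (t-x)^6\,|\cos t|\,dt \\
&\le \frac{1}{720}\int_0^x (t-x)^6\,dt \qquad \text{because } |\cos t| \le 1 \\
&= \frac{1}{720\cdot 7}\,|x|^7.
\end{aligned}$$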

The first inequality is due to a common property of integrals that apparently is not included in our text: if $f$ is integrable, then $\left|\int_a^b f(t)\,dt\right| \le \int_a^b |f(t)|\,dt$. If we assume that both $f$ and $|f|$ are integrable, then the property follows from Property 7, page 347 (since $-|f(t)| \le f(t) \le |f(t)|$). The last equality follows if you consider the integral carefully, using the fact that $x \ge 0$ implies that $x \ge t$. Of course the absolute value sign in the last expression is not needed here; we want it there because the end result is true when $x$ is either positive or negative. When $x \le 0$, we see that

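$$\begin{aligned}
|R_6(x)| &= \frac{1}{720}\left|\int_0^x (t-x)^6 \cos t\,dt\right| = \frac{1}{720}\left|\int_x^0 (t-x)^6 \cos t\,dt\right| \le \frac{1}{720}\int_x^0 (t-x)^6\,|\cos t|\,dt \\
&\le \frac{1}{720}\int_x^0 (t-x)^6\,dt = \frac{1}{720\cdot 7}\,|x|^7.
\end{aligned}$$

(Remember that when $x \le 0$, $|x| = -x$.)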

Hence in either case we have $|R_6(x)| \le \frac{1}{720\cdot 7}|x|^7$. If we want to consider $x \in [-.5, .5]$, then $|R_6(x)| \le \frac{1}{720\cdot 7}|.5|^7 \le 1.550099206\cdot 10^{-6}$. Thus using $T_6$ as an approximation of the sine function is a very good approximation if we limit $x$ to be in the interval $[-.5, .5]$.
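To see how conservative this estimate is, one can compare it with the observed error on a grid of sample points. The sketch below assumes a uniform grid of 1001 points on $[-.5, .5]$ (an arbitrary choice) and relies on the library `math.sin` as the reference value; the helper names are made up for the example.

```python
import math


def t6(x):
    """T_6(x) = x - x^3/6 + x^5/120 for f = sin."""
    return x - x**3 / 6 + x**5 / 120


def bound(x):
    """Right-hand side of the estimate |R_6(x)| <= |x|^7 / (720 * 7)."""
    return abs(x) ** 7 / (720 * 7)


# Sample [-0.5, 0.5] on a uniform grid (the grid size is an arbitrary choice).
xs = [-0.5 + k / 1000 for k in range(1001)]
worst_error = max(abs(math.sin(x) - t6(x)) for x in xs)
worst_bound = bound(0.5)
```

On this grid `worst_error` stays below `worst_bound`, as the inequality requires. A grid check like this is only a sanity test and does not replace the analysis.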

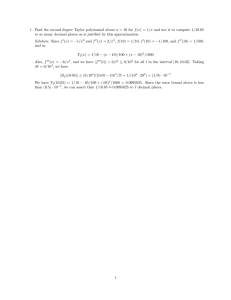

One of the ways that makes it reasonably easy to see what is happening is to plot the Taylor

polynomials and the function. (You should understand that the plot given below is very nice but

it does not take the place of the analysis done above. The results of the plot are only as good as

the plotter.) On the plot given in Figure 1 you see that there are four functions plotted: y = sin x,

y = T1 (x) (which is identical to y = T2 (x)) y = T3 (x) (= T4 (x)) and y = T6 (x).

It looks as if $T_1$ is a pretty good approximation to $y = \sin x$ near $x = 0$ (which shouldn't surprise us, since $y = T_1(x)$ represents the tangent to the curve $y = \sin x$ at $x = 0$). It should be pretty clear that $y = T_3(x)$ is a better approximation to $y = \sin x$ than $y = T_1(x)$ and that $y = T_6(x)$ is better than $y = T_3(x)$. If you look carefully (and have a good eye), it appears that $T_6$ is a good approximation well beyond the $[-.5, .5]$ interval analyzed above. You must remember that there are inequalities involved in the analysis above, which means that the results might be better than we computed them to be.

Figure 1: Taylor polynomial approximations for y = sin x

Example 1.2 Consider the function $f(x) = e^{3x}$ on the interval $[-2, 2]$. Compute the third, fourth, sixth and eighth order Taylor polynomial approximations of $f$. Plot $f$ and the four polynomials on the same graph.

We begin by constructing the following table.

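| $n$ | $f^{(n)}(x)$ | $f^{(n)}(0)$ |
|---|---|---|
| 0 | $e^{3x}$ | $1$ |
| 1 | $3e^{3x}$ | $3$ |
| 2 | $9e^{3x}$ | $9$ |
| 3 | $27e^{3x}$ | $27$ |
| 4 | $81e^{3x}$ | $81$ |
| 5 | $243e^{3x}$ | $243$ |
| 6 | $729e^{3x}$ | $729$ |
| 7 | $2187e^{3x}$ | $2187$ |
| 8 | $3^8 e^{3x}$ | $3^8$ |
| 9 | $3^9 e^{3x}$ | $3^9$ |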

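Using equation (5) we see that

$$\begin{aligned}
T_3(x) &= \frac{1}{0!} + \frac{3}{1!}x + \frac{3^2}{2!}x^2 + \frac{3^3}{3!}x^3 = 1 + 3x + \frac{9}{2}x^2 + \frac{9}{2}x^3 = \sum_{k=0}^{3}\frac{3^k}{k!}x^k \\[4pt]
T_4(x) &= \frac{1}{0!} + \frac{3}{1!}x + \frac{3^2}{2!}x^2 + \frac{3^3}{3!}x^3 + \frac{3^4}{4!}x^4 = 1 + 3x + \frac{9}{2}x^2 + \frac{9}{2}x^3 + \frac{27}{8}x^4 = \sum_{k=0}^{4}\frac{3^k}{k!}x^k \\[4pt]
T_6(x) &= \frac{1}{0!} + \frac{3}{1!}x + \frac{3^2}{2!}x^2 + \frac{3^3}{3!}x^3 + \frac{3^4}{4!}x^4 + \frac{3^5}{5!}x^5 + \frac{3^6}{6!}x^6 \\
&= 1 + 3x + \frac{9}{2}x^2 + \frac{9}{2}x^3 + \frac{27}{8}x^4 + \frac{81}{40}x^5 + \frac{81}{80}x^6 = \sum_{k=0}^{6}\frac{3^k}{k!}x^k \\[4pt]
T_8(x) &= \frac{1}{0!} + \frac{3}{1!}x + \frac{3^2}{2!}x^2 + \frac{3^3}{3!}x^3 + \frac{3^4}{4!}x^4 + \frac{3^5}{5!}x^5 + \frac{3^6}{6!}x^6 + \frac{3^7}{7!}x^7 + \frac{3^8}{8!}x^8 \\
&= 1 + 3x + \frac{9}{2}x^2 + \frac{9}{2}x^3 + \frac{27}{8}x^4 + \frac{81}{40}x^5 + \frac{81}{80}x^6 + \frac{243}{560}x^7 + \frac{729}{4480}x^8 = \sum_{k=0}^{8}\frac{3^k}{k!}x^k
\end{aligned}$$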
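The reduced fractions in these polynomials are easy to get wrong by hand. A short sketch with exact rational arithmetic reproduces them; the function name `exp3_taylor_coeffs` is an assumption made for this example.

```python
from fractions import Fraction
from math import factorial


def exp3_taylor_coeffs(n):
    """Exact coefficients 3^k / k! of T_n for f(x) = e^(3x), for k = 0..n."""
    return [Fraction(3**k, factorial(k)) for k in range(n + 1)]


coeffs = exp3_taylor_coeffs(8)
# The last two entries reduce to 243/560 and 729/4480.
```

`Fraction` reduces each quotient automatically, so the list can be compared term by term with the expressions for $T_3$, $T_4$, $T_6$ and $T_8$.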

We see that it is not much fun to calculate Taylor polynomials for very large $n$ (at least I didn't enjoy it). In the lab we shall see that these polynomials can be easily computed using Maple or Matlab, and for much larger values of $n$ than 8. In Figure 2 we see that they all do well in approximating $e^{3x}$ near $x = 0$. We also note that they all do poorly for $x$ less than about $-1.5$. And, as you'd hopefully expect by now, $T_8$ does better than the others in general.

Figure 2: Taylor polynomial approximations for $y = e^{3x}$

Note 1: As a part of the calculation we see that even though $3^k$ grows fast, eventually $k!$ grows faster. This can be seen using the techniques of Section 7.6.

Note 2: We see that by equation (6)

$$R_8(x) = (-1)^8 \frac{1}{8!}\int_0^x (t-x)^8 f^{(9)}(t)\,dt = \frac{1}{40320}\int_0^x (t-x)^8\, 3^9 e^{3t}\,dt. \tag{8}$$

If we now bound the error term as we did in Example 1.1, for $x \in [-2, 2]$ we get

$$|R_8(x)| = \frac{1}{40320}\left|\int_0^x (t-x)^8\, 3^9 e^{3t}\,dt\right| \le \frac{3^9 e^6}{40320}\left|\int_0^x (t-x)^8\,dt\right| = \frac{3^9 e^6}{40320}\,\frac{|x|^9}{9}. \tag{9}$$

It should be noted that the $e^6$ term is in the last expression because we bounded the function $e^{3t}$ on $[-2, 2]$ by $e^6$. If we apply inequality (9) on the entire interval $[-2, 2]$, setting $|x| = 2$, we see that $|R_8(x)| \le 11203.8$, which is not very good. If we looked at the plots given in Figure 2, we knew that the approximation would not be good but we wouldn't think it would be this bad. We must remember that this is an inequality. If we look at the picture very carefully, the difference between $T_8$ and $e^{3t}$ is about 17.2, and it is true that $17.2 \le 11203.8$. Even on the interval $[-1.5, 1.5]$, where by the plots in Figure 2 we see that $T_8$ is clearly a pretty good approximation of $e^{3t}$, we find that $|R_8(x)| \le 841.2$ (and we could have done a little better if we had bounded $e^{3t}$ by $e^{4.5}$ on $[-1.5, 1.5]$ instead of $e^6$, and gotten 187.7 instead). Again the emphasis is that the result we get from (9) is an inequality. If we set $x = .5$, we get $|R_8(x)| \le 0.043$, which is not great but is reasonably nice.
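The gap between the bound and the true error can be tabulated directly. The following sketch takes the constant $1/8!$ from equation (6), so the bound is $3^9 \cdot \sup e^{3t} \cdot |x|^9 / (8! \cdot 9)$; the helper names `t8` and `r8_bound` and the sample points are assumptions made for this example.

```python
import math


def t8(x):
    """T_8(x) = sum_{k=0}^{8} (3x)^k / k! for f(x) = e^(3x)."""
    return sum((3 * x) ** k / math.factorial(k) for k in range(9))


def r8_bound(x, sup_exp):
    """3^9 * sup_exp * |x|^9 / (8! * 9), where sup_exp bounds e^(3t) on the interval."""
    return 3**9 * sup_exp * abs(x) ** 9 / (math.factorial(8) * 9)


for x in (-2.0, -1.5, 0.5, 1.5, 2.0):
    actual = abs(math.exp(3 * x) - t8(x))
    print(f"x = {x:5.1f}  actual error = {actual:10.4f}  bound = {r8_bound(x, math.exp(6)):10.4f}")
```

Passing a smaller `sup_exp`, such as `math.exp(4.5)` for the interval $[-1.5, 1.5]$, tightens the bound without changing the argument.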

Homework 3: Compute the fifth order Taylor polynomial of the function $\frac{1}{1-x}$ at $x = 0$.

Homework 4: Compute the third order Taylor polynomial of the function $2 - x + 3x^2 - 2x^3 + x^4$ at $x = 0$. Compute $R_3$ and explain how you know it is correct.

More Taylor Polynomials with Error Term

We emphasized in the last section (and we saw it in the plots) that our approximations were approximations near $x = 0$. In this section we develop the analogous results for approximations of a function $f$ near $x = a$ for an arbitrary $a$. We mimic what we did in the previous section, but we start by considering $\int_a^x f'(t)\,dt = f(x) - f(a)$, or

$$f(x) = f(a) + \int_a^x f'(t)\,dt. \tag{10}$$

Of course this gives us the zeroth order Taylor polynomial of $f$ about $x = a$ and the associated zeroth order error term. We again integrate the integral in (10) by parts, setting $G = f'$ and $F' = 1$. We get $G' = f''$, $F = t - x$ (again the $t - x$ instead of just the $t$, where $x$ is just a constant with respect to this integration), and

$$\begin{aligned}
\int_a^x f'(t)\,dt &= \Big[(t-x)\,f'(t)\Big]_{t=a}^{t=x} - \int_a^x (t-x)\,f''(t)\,dt \\
&= 0 - (a-x)\,f'(a) - \int_a^x (t-x)\,f''(t)\,dt = (x-a)\,f'(a) - \int_a^x (t-x)\,f''(t)\,dt.
\end{aligned}$$

Thus we have

$$f(x) = f(a) + (x-a)\,f'(a) - \int_a^x (t-x)\,f''(t)\,dt = T_1(x) + R_1(x) \tag{11}$$

where $T_1(x) = f(a) + (x-a)\,f'(a)$ is the first order Taylor polynomial of $f$ about $x = a$ and $R_1(x) = -\int_a^x (t-x)\,f''(t)\,dt$ is the first order error term.

Hopefully by now it should be easy to compute

$$f(x) = f(a) + (x-a)\,f'(a) + \frac{1}{2}(x-a)^2 f''(a) + \frac{1}{2}\int_a^x (t-x)^2 f'''(t)\,dt = T_2(x) + R_2(x), \tag{12}$$

the second order Taylor polynomial of $f$ about $x = a$ and the second order error term, and in general

$$f(x) = \sum_{j=0}^{n}\frac{1}{j!}\,f^{(j)}(a)(x-a)^j + \frac{(-1)^n}{n!}\int_a^x (t-x)^n f^{(n+1)}(t)\,dt = T_n(x) + R_n(x) \tag{13}$$

where

$$T_n(x) = \sum_{j=0}^{n}\frac{1}{j!}\,f^{(j)}(a)(x-a)^j \tag{14}$$

is the $n$-th order Taylor polynomial about $x = a$ and

$$R_n(x) = \frac{(-1)^n}{n!}\int_a^x (t-x)^n f^{(n+1)}(t)\,dt \tag{15}$$

is the $n$-th order error term.

Homework 5: Verify expression (13). (Verify that the form of the Taylor polynomial given in (13) matches that given in (12). Verify by taking one more step of integration by parts that there will be a $j!$ in the denominator. Verify that the $(-1)^n$ term in $R_n$ appears to be correct.)

We next see that computing Taylor polynomials about x = a is very much the same as computing Taylor polynomials about x = 0.

Example 2.1 Compute the third and fourth order Taylor polynomials of $f(x) = \frac{1}{x+1}$ about $x = 2$. Plot $f$ and the two polynomials on the same graph.

Again we construct the table of derivatives as follows.

| $n$ | $f^{(n)}(x)$ | $f^{(n)}(2)$ |
|---|---|---|
| 0 | $(x+1)^{-1}$ | $3^{-1}$ |
| 1 | $-(x+1)^{-2}$ | $-3^{-2}$ |
| 2 | $2!\,(x+1)^{-3}$ | $2!\cdot 3^{-3}$ |
| 3 | $-3!\,(x+1)^{-4}$ | $-3!\cdot 3^{-4}$ |
| 4 | $4!\,(x+1)^{-5}$ | $4!\cdot 3^{-5}$ |
| 5 | $-5!\,(x+1)^{-6}$ | $-5!\cdot 3^{-6}$ |

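Applying equation (14) we see that

$$\begin{aligned}
T_3(x) &= \sum_{j=0}^{3}\frac{1}{j!}(x-a)^j f^{(j)}(a) \\
&= \frac{1}{0!}(x-2)^0 f(2) + \frac{1}{1!}(x-2)\,f'(2) + \frac{1}{2!}(x-2)^2 f''(2) + \frac{1}{3!}(x-2)^3 f'''(2) \\
&= \frac{1}{0!}(x-2)^0\,\frac{1}{3} + \frac{1}{1!}(x-2)\left(-\frac{1}{3^2}\right) + \frac{1}{2!}(x-2)^2\,\frac{2!}{3^3} + \frac{1}{3!}(x-2)^3\left(-\frac{3!}{3^4}\right) \\
&= \frac{1}{3} - \frac{1}{9}(x-2) + \frac{1}{27}(x-2)^2 - \frac{1}{81}(x-2)^3
\end{aligned}$$

and

$$\begin{aligned}
T_4(x) &= T_3(x) + \frac{1}{4!}(x-2)^4 f^{(4)}(2) \\
&= \frac{1}{3} - \frac{1}{9}(x-2) + \frac{1}{27}(x-2)^2 - \frac{1}{81}(x-2)^3 + \frac{1}{243}(x-2)^4.
\end{aligned}$$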
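The pattern in the table, $f^{(j)}(2) = (-1)^j\, j!\, 3^{-(j+1)}$, makes the coefficients easy to generate exactly. The sketch below assumes that closed form (read off the table rather than derived in general) and uses made-up helper names.

```python
from fractions import Fraction
from math import factorial


def deriv_at_2(j):
    """f^(j)(2) for f(x) = 1/(x+1): (-1)^j * j! * 3^(-(j+1)), as in the table."""
    return Fraction((-1) ** j * factorial(j), 3 ** (j + 1))


def taylor_coeffs_about_2(n):
    """Coefficients of (x - 2)^j in T_n, i.e. f^(j)(2) / j! for j = 0..n."""
    return [deriv_at_2(j) / factorial(j) for j in range(n + 1)]


def evaluate(coeffs, x, a=2):
    """Evaluate sum_j coeffs[j] * (x - a)^j."""
    return sum(c * (x - a) ** j for j, c in enumerate(coeffs))


t4 = taylor_coeffs_about_2(4)
# t4 == [1/3, -1/9, 1/27, -1/81, 1/243]
```

Evaluating at $x = 2$ returns $1/3 = f(2)$, as it must, since every term except the first vanishes at the expansion point.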

Below in Figure 3 we see that both T3 and T4 approximate f well near x = 2. Careful inspection

also shows that T4 approximates f better than T3 .

If we treated this problem as we did Examples 1.1 and 1.2, we would next bound the error terms associated with $T_3$ and $T_4$ and, hence, have an analytic bound on the error of approximation. Instead we shall state and prove (sort of) the following theorem.

Theorem 1.1 (Taylor Inequality) Suppose the function $f$ and the first $n + 1$ derivatives of $f$ are integrable. Suppose that $M$ is such that $\max_{|x-a| \le d}\left|f^{(n+1)}(x)\right| \le M$. Then for $|x - a| \le d$,

$$|R_n(x)| \le \frac{M}{(n+1)!}\,|x-a|^{n+1}.$$

Figure 3: Taylor polynomial approximations for $y = \frac{1}{x+1}$

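We treat this result just as we bounded the error terms in Examples 1.1 and 1.2. We see that

$$\begin{aligned}
|R_n(x)| &= \left|\frac{(-1)^n}{n!}\int_a^x (t-x)^n f^{(n+1)}(t)\,dt\right| \le \frac{1}{n!}\left|\int_a^x \left|(t-x)^n\right|\,\left|f^{(n+1)}(t)\right|\,dt\right| \\
&\le \frac{1}{n!}\left|\int_a^x M\left|(t-x)^n\right|\,dt\right| = \frac{M}{n!}\,\frac{|x-a|^{n+1}}{n+1} = \frac{M}{(n+1)!}\,|x-a|^{n+1}.
\end{aligned}$$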

Notice that in the right hand side of the first inequality we have taken the absolute value inside of the integral and outside of the integral. The absolute value that is outside of the integral is necessary to cover the case when $x < a$. Of course the second inequality is due to the assumed bound on the absolute value of $f^{(n+1)}$. And finally, the final result is obtained by integrating $|(t-x)^n|$. To not allow the absolute value inside of the integral to bother us, it is easiest to perform the integral twice, once assuming that $x > a$ and once assuming that $x < a$.

We can then return to the example considered in Example 2.1.

Example 2.2: Use the Taylor Inequality to bound the error due to the approximation of $f(x) = \frac{1}{x+1}$ by $T_3$ and $T_4$ on the interval $[1.5, 2.5]$.

To emphasize what we are doing we write $R_3(x) = f(x) - T_3(x)$, note that $f^{(4)}(x) = 4!/(x+1)^5$ and $\left|f^{(4)}(x)\right| \le 4!/2.5^5$ (evaluate the function at $x = 1.5$) and apply Taylor's Inequality to get

$$|R_3(x)| \le \frac{4!/2.5^5}{4!}\,|x-2|^4 \le \frac{.5^4}{2.5^5} = 0.00064.$$

Thus we see that for $x \in [1.5, 2.5]$, $|f(x) - T_3(x)| \le 0.00064$, which is not a bad approximation. Repeating this process for $n = 4$, we get

$$|R_4(x)| \le \frac{5!/2.5^6}{5!}\,|x-2|^5 \le \frac{.5^5}{2.5^6} = 0.000128.$$

We should emphasize that in the case when $n = 3$ we get the bound $M = 4!/2.5^5$, and when $n = 4$ we get $M = 5!/2.5^6$. These bounds are obtained by evaluating $\left|f^{(4)}(x)\right|$ and $\left|f^{(5)}(x)\right|$ at $x = 1.5$. The easiest way to see that the maximums of $\left|f^{(4)}(x)\right|$ and $\left|f^{(5)}(x)\right|$ occur at $x = 1.5$ is to graph the functions on the interval $[1.5, 2.5]$. It should be clear that the maximum values of the absolute values of these functions occur at $x = 1.5$.
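The two bounds can be compared against the observed error on $[1.5, 2.5]$. This sketch assumes the closed form $(-1)^j (x-2)^j / 3^{j+1}$ for the terms of $T_n$ (from Example 2.1), a uniform grid of 1001 points, and the hypothetical helper names `taylor_about_2` and `taylor_bound`.

```python
import math


def f(x):
    return 1 / (x + 1)


def taylor_about_2(n, x):
    """T_n(x) = sum_{j=0}^{n} (-1)^j (x - 2)^j / 3^(j+1) for f(x) = 1/(x+1)."""
    return sum((-1) ** j * (x - 2) ** j / 3 ** (j + 1) for j in range(n + 1))


def taylor_bound(n, d=0.5, left=1.5):
    """M * d^(n+1) / (n+1)!, with M = (n+1)! / (left + 1)^(n+2)."""
    m = math.factorial(n + 1) / (left + 1) ** (n + 2)
    return m * d ** (n + 1) / math.factorial(n + 1)


xs = [1.5 + k / 1000 for k in range(1001)]  # uniform grid on [1.5, 2.5]
worst = {n: max(abs(f(x) - taylor_about_2(n, x)) for x in xs) for n in (3, 4)}
```

Here `taylor_bound(3)` and `taylor_bound(4)` give 0.00064 and 0.000128 up to rounding, and the sampled errors in `worst` stay below them.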

Homework 6: Evaluate the fourth order Taylor approximation about $x = -1$ of the function $f(x) = e^{3x}$ on the interval $[-1.5, -0.5]$ and use the Taylor Inequality to determine a bound for the error of the approximation.

Homework 7: Evaluate the fifth order Taylor approximation about $x = \pi$ of the function $f(x) = \cos x$ on the interval $[\pi/3, 5\pi/3]$ and use the Taylor Inequality to determine a bound for the error of the approximation. (Hint: It is sometimes difficult to find the maximum of some functions on some intervals. For something like the sine and cosine functions, using 1 as the maximum of the absolute value is usually a good enough bound of the function on any interval.)

Motivation of Series

In the last three sections we have seen that it is possible to approximate functions by polynomials. We have seen that the approximation might be quite good on some intervals and not so good on other intervals. We have seen that the quality of the approximation is determined by the error term, and that error term is a pain to deal with. From what we have seen, we would guess that higher order polynomials do better: if $T_8$ is a pretty good approximation of $f(x) = e^{3x}$, then how well might $T_{100}$ (we first wrote out the expression for $T_{100}$ but then decided that we would not waste the space; you can see what it looks like in the lab) approximate $e^{3x}$? We do know that the hundred and first derivative is $f^{(101)}(x) = 3^{101} e^{3x}$, so on $[-5, 5]$

$$|R_{100}| \le \frac{3^{101} e^{15}}{101!}\,5^{101} \approx 2.1150\cdot 10^{-35}.$$
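The size of this bound is hard to check by hand because both the numerator and $101!$ are astronomically large. A minimal sketch that works with logarithms (using the standard identity $\ln(101!) = \texttt{lgamma}(102)$) avoids any overflow; the variable names are made up for the example.

```python
import math

# log of 3^101 * e^15 * 5^101 / 101!, using lgamma(102) = log(101!).
log_bound = 101 * math.log(3) + 15 + 101 * math.log(5) - math.lgamma(102)
bound = math.exp(log_bound)
```

The result lands near $2.1 \cdot 10^{-35}$, in agreement with the value quoted above.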

We’d have to agree that this result is very good. We clearly could do pretty well on a larger

interval. But obviously T100 is not equal to f . There are times when we want to replace f by the

“polynomial”. We can’t do that with T100 . There are times when it is not good enough to have an

approximation. The obvious approach is to use a larger order yet. If we liked

$$f(x) \cong T_{100}(x) = \sum_{k=0}^{100}\frac{3^k}{k!}\,x^k,$$

how about

$$\sum_{k=0}^{\infty}\frac{3^k}{k!}\,x^k\,? \tag{16}$$

Before we can consider how or if this might work or be useful, we must decide what it means

or if it can mean anything logical. Easier yet, we might consider whether (16) makes any sense for

a fixed value of x, say x = 1, i.e. does it make any sense to write

$$\sum_{k=0}^{\infty}\frac{3^k}{k!}\,1^k = 1 + 3 + \frac{9}{2} + \frac{9}{2} + \frac{27}{8} + \cdots\,? \tag{17}$$
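Before any theory, it can help to simply watch the partial sums of (17). The sketch below is only numerical evidence, not a proof of convergence; it compares the partial sums with $e^3$, which is the value one would hope for if the sum does represent $e^{3x}$ at $x = 1$. The function name `partial_sum` is an assumption made for this example.

```python
import math


def partial_sum(n, x=1.0):
    """S_n = sum_{k=0}^{n} 3^k x^k / k!, the value T_n(x) for f(x) = e^(3x)."""
    return sum((3 * x) ** k / math.factorial(k) for k in range(n + 1))


for n in (2, 4, 8, 16, 32):
    print(n, partial_sum(n), math.exp(3) - partial_sum(n))
```

The differences in the last column shrink rapidly as $n$ grows, which suggests (but does not establish) that the infinite sum makes sense.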

In the next sections we show you how to decide whether or not an expression such as (17)

makes any sense. We will decide when infinite sums such as (17) converge (sum to some number)

or do not converge. After we understand what we mean by convergence (sums) of expressions like

(17), we return to infinite sums of functions such as is given in (16). Functions given in the form

of (16) have been and are still very useful in many areas of mathematics.
