Mathematics 369 The Jordan Canonical Form A. Hulpke While S

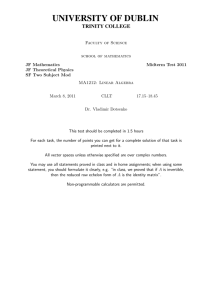

advertisement

Mathematics 369

The Jordan Canonical Form

A. Hulpke

While Schur's theorem permits us to transform a matrix into upper triangular form, we can in fact do even better if we do not insist on an orthogonal transformation matrix.

Our setup is that we have $V = \mathbb{C}^n$ and a matrix $A \in \mathbb{C}^{n\times n}$ that represents a linear transformation $L\colon V \to V$ via the standard basis $S$ of $V$: $A = {}_S[L]_S$.

We first want to show that it is possible to modify the basis such that we get a block diagonal matrix with blocks for each eigenvalue:

Theorem 1 (“special version of Maschke's theorem”) Given $L\colon V \to V$, there is a basis $B$ of $V$ such that

$$
{}_B[L]_B = \begin{pmatrix}
A_1 & 0 & \cdots & 0 \\
0 & A_2 & \ddots & \vdots \\
\vdots & \ddots & \ddots & 0 \\
0 & \cdots & 0 & A_l
\end{pmatrix}
$$

is in block form, with each $A_i$ in upper triangular form with the same value on the diagonal.

Proof: Let us assume that by Schur's theorem we have a basis $F = \{f_1, \ldots, f_n\}$ for $L$ which is chosen in a way such that the diagonal entries have the eigenvalues clustered together.

Suppose that $f_a, \ldots, f_b$ are the basis vectors that correspond to the diagonal entry $\lambda$ and that $f_c$ ($c > b$) is a basis vector corresponding to a different diagonal eigenvalue $\mu$. Suppose that $L(f_c) = \sum_{i=1}^{c} d_i f_i$ with $d_c = \mu$. We are interested in the coefficients $d_i$ for $a \le i \le b$. Our aim is to change the basis such that $d_i = 0$ for every $a \le i \le b$.

If this is not already the case, there is a largest $j$ with $a \le j \le b$ such that $d_j \ne 0$. We set

$$
v = f_c - \frac{d_j}{\lambda-\mu}\, f_j
$$

and note that $L(f_j) = \lambda f_j + \sum_{i=1}^{j-1} e_i f_i$ because ${}_F[L]_F$ is upper triangular.

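Then

$$
\begin{aligned}
L(v) &= L(f_c) - \frac{d_j}{\lambda-\mu} L(f_j)
      = \sum_{i=1}^{c} d_i f_i - \frac{d_j}{\lambda-\mu}\Bigl(\lambda f_j + \sum_{i=1}^{j-1} e_i f_i\Bigr) \\
     &= \sum_{i=1}^{j-1}\Bigl(d_i - \frac{d_j}{\lambda-\mu}\, e_i\Bigr) f_i
        + \underbrace{\Bigl(d_j - \frac{d_j}{\lambda-\mu}\,\lambda\Bigr)}_{=\,-\frac{d_j\mu}{\lambda-\mu}} f_j
        + \sum_{i=j+1}^{c-1} d_i f_i + \mu f_c \\
     &= \sum_{i=1}^{j-1}\Bigl(d_i - \frac{d_j}{\lambda-\mu}\, e_i\Bigr) f_i
        + \sum_{i=j+1}^{c-1} d_i f_i
        + \mu \underbrace{\Bigl(f_c - \frac{d_j}{\lambda-\mu}\, f_j\Bigr)}_{=\,v}
\end{aligned}
$$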

Thus we can replace $f_c$ by $v$ and obtain a new basis $\hat F$ such that ${}_{\hat F}[L]_{\hat F}$ is upper triangular, but in this basis $L(\hat f_c)$ does not involve $f_j$.

If we compare ${}_F[L]_F$ and ${}_{\hat F}[L]_{\hat F}$, the $c$-th column differs only in rows $i \le j$, and the entry in the $j$-th row has been set to 0. Furthermore, as the images of the basis vectors at positions $< c$ do not involve $f_c$, all prior columns of the matrix are not affected.

We can repeat this process for all entries at positions $i \le b$ (always taking the largest remaining $j$ first) and thus clean out the top of the $c$-th column.

We then iterate to the next, $(c+1)$-th, column. We can clean out the top entries of this column without disturbing any previous columns.

By processing all columns in this way, we can build a basis $B$ that achieves the claimed block structure for ${}_B[L]_B$.
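The cleaning step of this proof can be tried out on a small matrix. The following is only a minimal sketch and not part of the original notes: the function name `clean_column`, the 0-based indices and the 3×3 sample matrix are assumptions made for illustration. Replacing $f_c$ by $v = f_c + s f_j$ with $s = -d_j/(\lambda-\mu)$ amounts to conjugating with $P = I + sE_{jc}$, which is one column operation followed by one row operation.

```python
from fractions import Fraction

def clean_column(t, a, b, c):
    """Zero rows a..b (inclusive, 0-based) of column c of the upper
    triangular matrix t, using base changes f_c -> f_c - d_j/(lam-mu) f_j.
    Assumes t[j][j] != t[c][c] for all a <= j <= b."""
    n = len(t)
    t = [[Fraction(x) for x in row] for row in t]
    for j in range(b, a - 1, -1):        # largest j first, as in the proof
        lam, mu, d = t[j][j], t[c][c], t[j][c]
        if d == 0:
            continue
        s = -d / (lam - mu)              # v = f_c + s * f_j
        for i in range(n):               # T P: column c += s * column j
            t[i][c] += s * t[i][j]
        for k in range(n):               # P^{-1} (T P): row j -= s * row c
            t[j][k] -= s * t[c][k]
    return t

# Hypothetical example: eigenvalue 2 in positions 0..1, eigenvalue 3 in position 2.
T = [[2, 1, 5],
     [0, 2, 7],
     [0, 0, 3]]
cleaned = clean_column(T, a=0, b=1, c=2)
print([[int(x) for x in row] for row in cleaned])
# [[2, 1, 0], [0, 2, 0], [0, 0, 3]]
```

The result is block diagonal with one upper triangular block per eigenvalue, as claimed in Theorem 1.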

The Jordan Canonical form

(Camille Jordan, 1838-1922, http://turnbull.dcs.st-and.ac.uk/~history/Mathematicians/Jordan.html)

Let us assume now that we have found (for example by following Theorem 1) a basis $B$ such that ${}_B[L]_B$ is block diagonal, with every block upper triangular with one eigenvalue $\lambda_i$ on the diagonal. If the block starts at position $k$ and goes to position $l$, we say that the basis vectors $b_k$ up to $b_l$ belong to this block. Our next aim is to describe the subspace spanned by these basis vectors in terms of the transformation $L$:

Because the characteristic polynomial of $L$ is $\prod_i (x-\lambda_i)^{a_{\lambda_i}}$, the block for an eigenvalue $\lambda$ has size exactly $l-k+1 = a_\lambda$. We now consider only one such eigenvalue, which we denote simply by $\lambda$:

Then note that $b_k$ must be an eigenvector of $L$ for eigenvalue $\lambda$, thus $b_k \in \ker(L-\lambda\,\mathrm{id})$. Similarly $L(b_{k+1}) = \lambda b_{k+1} + a_{k,k+1} b_k$ and thus $b_{k+1} \in \ker(L-\lambda\,\mathrm{id})^2$. By the same argument $b_{k+j} \in \ker(L-\lambda\,\mathrm{id})^{j+1}$. In particular $\mathrm{Span}(b_k,\ldots,b_l) = \ker(L-\lambda\,\mathrm{id})^{a_\lambda}$.

Definition: The generalized eigenspace of $L$ for eigenvalue $\lambda$ is $\ker(L-\lambda\,\mathrm{id})^{a_\lambda}$.

We thus could recover the space (and thus the block structure given by Theorem 1) by calculating the kernel of $(L-\lambda\,\mathrm{id})^{a_\lambda}$. Furthermore this indicates that the sequence of subspaces $\ker(L-\lambda\,\mathrm{id}) \le \ker(L-\lambda\,\mathrm{id})^2 \le \cdots \le \ker(L-\lambda\,\mathrm{id})^{a_\lambda}$ is of interest. To simplify notation we set $M = L-\lambda\,\mathrm{id}$ and $K^i = \ker(M^i)$. We note that $K^i$ is $M$-invariant and thus $L$-invariant.

Our aim is to use this kernel sequence to find a “nice” basis for the generalized eigenspace $K^{a_\lambda}$. By concatenating the obtained “nice” bases for all generalized eigenspaces we will obtain a “nice” basis for $V$.

As $K^i \le K^{i+1}$ we see that the dimensions of the $K^i$ cannot decrease. Because all dimensions are finite, this sequence of dimensions has to become stationary (at $a_\lambda$) for some $i$. This situation is reached once $\dim K^i = \dim K^{i+1}$, because then $K^i = K^{i+1}$ and

$$
\begin{aligned}
K^{i+2} &= \{v \mid M^{i+2}(v) = 0\} = \{v \mid M(v) \in \ker M^{i+1} = K^{i+1} = K^i\} \\
        &= \{v \mid M^{i+1}(v) = 0\} = K^{i+1} = K^i
\end{aligned}
$$

and so forth.
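The stabilisation of the kernel sequence can be observed on a concrete matrix. The sketch below is an illustration only: the helper names `rank`, `mat_mul` and `kernel_dims` and the 3×3 sample matrix are assumptions, not taken from the notes. It computes $\dim K^i = n - \operatorname{rank}(M^i)$ in exact rational arithmetic and stops as soon as two consecutive dimensions agree.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix (list of rows) by exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for col in range(len(m[0]) if m else 0):
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
    return r

def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def kernel_dims(a, lam):
    """[dim K^1, dim K^2, ...] for M = a - lam*I, up to the first index
    at which the sequence becomes stationary."""
    n = len(a)
    m = [[a[i][j] - (lam if i == j else 0) for j in range(n)] for i in range(n)]
    dims, power = [], m
    while True:
        d = n - rank(power)
        if dims and d == dims[-1]:
            return dims
        dims.append(d)
        power = mat_mul(power, m)

# Hypothetical sample: eigenvalue 5 with kernel dimensions 2 and 3.
print(kernel_dims([[5, 1, 0], [0, 5, 0], [0, 0, 5]], 5))
# [2, 3]
```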

The following lemma, which is the core of the argument, shows that we can choose bases for $K^i$ in a particularly nice way.

Lemma: Suppose that for $j \ge 1$ a basis for $K^{j-1}$ is extended by the vectors $v_1,\ldots,v_k \in K^j$ to form a basis of $K^j$. Then:

a) $M^{j-1}(v_1),\ldots,M^{j-1}(v_k) \in K^1$ are linearly independent.

b) The vectors

$$
\begin{array}{c}
M^{j-1}(v_1), \ldots, M^{j-1}(v_k), \\
M^{j-2}(v_1), \ldots, M^{j-2}(v_k), \\
\vdots \\
M(v_1), \ldots, M(v_k), \\
v_1, \ldots, v_k
\end{array}
$$

are linearly independent.

Proof: a) Suppose that $0 = \sum_{i=1}^{k} c_i M^{j-1}(v_i) = M^{j-1}\bigl(\sum_{i=1}^{k} c_i v_i\bigr)$. Then $\sum_{i=1}^{k} c_i v_i \in \ker M^{j-1} = K^{j-1}$. This would contradict that the $v_i$ extend a basis of $K^{j-1}$ to a basis of $K^j$, unless $c_i = 0$ for all $i$.

b) We assume the result of a) and use induction over $j$. If $j = 1$ we have that $M^{j-1} = \mathrm{id}$ and the statement is trivial. Thus assume now that $j > 1$. If

$$
\begin{aligned}
0 ={}& c_{j-1,1} M^{j-1}(v_1) + \cdots + c_{j-1,k} M^{j-1}(v_k) \\
     &+ c_{j-2,1} M^{j-2}(v_1) + \cdots + c_{j-2,k} M^{j-2}(v_k) \\
     &+ \cdots \\
     &+ c_{1,1} M(v_1) + \cdots + c_{1,k} M(v_k) \\
     &+ c_{0,1} v_1 + \cdots + c_{0,k} v_k
\end{aligned}
\tag{$*$}
$$

with coefficients $c_{i,i'} \in F$, we apply $M$ and obtain (using that $M^j(v_i) = 0$ as $v_i \in K^j$) that

$$
\begin{aligned}
0 ={}& c_{j-2,1} M^{j-2}(M(v_1)) + \cdots + c_{j-2,k} M^{j-2}(M(v_k)) \\
     &+ \cdots \\
     &+ c_{1,1} M(M(v_1)) + \cdots + c_{1,k} M(M(v_k)) \\
     &+ c_{0,1} M(v_1) + \cdots + c_{0,k} M(v_k).
\end{aligned}
$$

We now apply induction to the vectors $M(v_1),\ldots,M(v_k) \in K^{j-1}$ (by the argument of a) they are linearly independent modulo $K^{j-2}$, so they can be taken as part of an extension of a basis of $K^{j-2}$ to a basis of $K^{j-1}$) and deduce that $c_{i,i'} = 0$ for $i \le j-2$. Thus $(*)$ simplifies to

$$
0 = c_{j-1,1} M^{j-1}(v_1) + \cdots + c_{j-1,k} M^{j-1}(v_k)
$$

and by the linear independence of these vectors, shown in a), we get that $c_{i,i'} = 0$ for all $i, i'$, which proves the claimed linear independence.

Let $v$ be one of the vectors $v_i$ from this lemma. Then the set $C = \{\ldots, M^2(v), M(v), v\}$ (this is finite because $M^i(v) = 0$ for large enough $i$) must be linearly independent. The subspace $W = \mathrm{Span}(C)$ is clearly $M$-invariant (it is $M$-cyclic) and thus is $L$-invariant. We calculate that

$$
{}_C\bigl[L|_W\bigr]_C = \begin{pmatrix}
\lambda & 1 & 0 & \cdots & 0 \\
0 & \lambda & 1 & \ddots & \vdots \\
\vdots & \ddots & \ddots & \ddots & 0 \\
0 & \cdots & 0 & \lambda & 1 \\
0 & \cdots & \cdots & 0 & \lambda
\end{pmatrix}.
$$

We call a matrix of this form a Jordan block.
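To make the shape of such a block concrete, here is a small sketch (the function names `jordan_block` and `nilpotency_index` are illustrative assumptions). It builds a Jordan block and confirms that $J - \lambda I$ is nilpotent with index equal to the block size, which mirrors the fact that the chain $v, M(v), M^2(v), \ldots$ has exactly as many nonzero members as the block has rows.

```python
def jordan_block(lam, size):
    """size x size matrix with lam on the diagonal and 1 directly above it."""
    return [[lam if j == i else 1 if j == i + 1 else 0
             for j in range(size)] for i in range(size)]

def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def nilpotency_index(block, lam):
    """Smallest r >= 1 with (block - lam*I)^r = 0."""
    n = len(block)
    m = [[block[i][j] - (lam if i == j else 0) for j in range(n)]
         for i in range(n)]
    power = m
    for r in range(1, n + 1):
        if all(x == 0 for row in power for x in row):
            return r
        power = mat_mul(power, m)
    raise ValueError("block - lam*I is not nilpotent")

J = jordan_block(2, 3)
print(J)                       # [[2, 1, 0], [0, 2, 1], [0, 0, 2]]
print(nilpotency_index(J, 2))  # 3
```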

We got $W$ from one single vector $v$. If we consider all the vectors $v_i$ given in the preceding lemma, we get a series of subspaces whose bases we can concatenate to obtain a larger linearly independent set.

If we consider them in the sequence

$$
\{\ldots, M^2(v_1), M(v_1), v_1, \;\ldots, M^2(v_2), M(v_2), v_2, \;\ldots, M^2(v_k), M(v_k), v_k\},
$$

they span an $L$-invariant subspace $U$ such that $\bigl[L|_U\bigr]$ is block diagonal with Jordan blocks along the diagonal.

The last remaining question is whether this subspace $U$ is equal to $K^{a_\lambda}$. This is not necessarily the case; in fact we might have that $K^i \not\le U$ if $\dim K^i - \dim K^{i-1} > k$. In such a case we can extend the basis by picking further vectors in $K^i$ which are not in $K^{i-1}$. These vectors (and their images) again generate $L$-invariant subspaces, and yield further, smaller, Jordan blocks.

If we continue this process to obtain a basis for $K^{a_\lambda}$ for every eigenvalue $\lambda$, we thus get the following statement:

Theorem: There exists a basis $B$ such that ${}_B[L]_B$ is block diagonal with Jordan blocks along the diagonal. The total number of occurrences of $\lambda$ on the diagonal is equal to $a_\lambda$. The number of Jordan blocks for $\lambda$ of size $\ge i$ is equal to $\dim K^i - \dim K^{i-1}$.

If $L$ is given by a matrix $A$, the matrix (similar to $A$) described in this theorem is called the Jordan Canonical form of $A$. (See below for uniqueness.)
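The counting statement of the theorem translates directly into arithmetic on the kernel dimensions. As a minimal sketch (the function name `block_sizes` and the input convention are assumptions for illustration): with $e_i = \dim K^i$ and $f_i = e_i - e_{i-1}$, the number of blocks of size exactly $i$ is $f_i - f_{i+1}$.

```python
def block_sizes(dims):
    """Jordan block sizes for one eigenvalue, largest first.

    dims = [e_1, e_2, ..., e_s] with e_i = dim K^i, listed at least up to
    the point where the sequence becomes stationary (e_0 = 0 is implied).
    """
    e = [0] + list(dims)
    # f[i - 1] holds f_i = e_i - e_{i-1}; a trailing 0 stands for f_{s+1}.
    f = [e[i] - e[i - 1] for i in range(1, len(e))] + [0]
    sizes = []
    for i in range(len(dims), 0, -1):
        sizes += [i] * (f[i - 1] - f[i])   # blocks of size exactly i
    return sizes

print(block_sizes([2, 4, 5]))  # [3, 2]
print(block_sizes([3]))        # [1, 1, 1]
```

The first call uses kernel dimensions 2, 4, 5 and yields one block of size 3 and one of size 2; the second describes a diagonalizable situation with three blocks of size 1.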

Computing the Jordan Canonical Form

Let us first look at the case of a concrete matrix $A$. We want to find such a basis $B$ and the Jordan Canonical form of $A$. This process essentially involves finding suitable vectors in the kernels $K^i$ and arranging them in the right way.

The only issue is bookkeeping of the vectors, to decide whether new basis vectors are just obtained as images or have to be chosen from a suitable kernel to start a new Jordan block.

(1) Determine the eigenvalues $\lambda_k$ of the matrix $A$ (for example as roots of the characteristic polynomial). For each eigenvalue $\lambda$ perform the following calculation (which gives a basis of the generalized eigenspace of $\lambda$; the whole basis will be obtained by concatenating the bases obtained for the different $\lambda_k$).

Again we write $K^i = \ker(A - \lambda\cdot I)^i$.

(2) Calculate $e_i = \dim K^i$ until the sequence becomes stationary. (The largest $e_i$ is the dimension of the generalized eigenspace.)

(3) Let $f_i = e_i - e_{i-1}$. Then $f_i$ gives the number of Jordan blocks that have size at least $i$. (As long as we only want to know the Jordan form, we thus could stop here.)

We now build a basis in sequence of descending $i$. Let $B = [\,]$ and $i = \max\{i \mid f_i > 0\}$.

(4) (Continue growing the existing Jordan blocks) For each vector list $(s_1,\ldots,s_m)$ in $B$, append the image $(A - \lambda\cdot I)\cdot s_m$ of its last element to the list.

(5) (Start new Jordan blocks of size $i$) If $f_i - f_{i+1} = m > 0$ (then the images of the vectors obtained so far do not span $K^i$), let $W = \mathrm{Span}(K^{i-1}, s_1,\ldots,s_k)$, where the $s_j$ run through the elements in all the lists obtained so far. Extend a basis of $W$ by $m$ vectors $b_1,\ldots,b_m \in K^i \setminus K^{i-1}$ that are linearly independent modulo $W$, so that $K^i \le \mathrm{Span}(W, b_1,\ldots,b_m)$.

The probability is high (why?) that any $m$ linearly independent basis vectors of $K^i$ fulfill this property. To verify it, choose a basis for $K^{i-1}$, append the $s_j$ and then append the $b_i$. Then show that the resulting list is linearly independent.

(The generic method would be to extend a basis of $W$ by vectors from a basis of $K^i$ and take the vectors by which the basis got extended.)

(6) For each such vector $b_i$ add a list $[b_i]$ to $B$.

(7) If the number of vectors in the lists in $B$ is smaller than the maximal $e_i$, then decrement $i$ and go to step (4).

(8) Concatenate the reverses of the lists in $B$. This is the part of the basis corresponding to eigenvalue $\lambda$.
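Steps (2) to (7) for a single eigenvalue can be sketched in code as follows. This is one possible reading of the procedure, not the author's implementation: all function names are assumptions, arithmetic is exact over the rationals (so the eigenvalue must be rational), and new chain starters in step (5) are chosen greedily from a nullspace basis of $K^i$, in the spirit of the "generic method" mentioned above.

```python
from fractions import Fraction

def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def mat_vec(a, v):
    return [sum(x * y for x, y in zip(row, v)) for row in a]

def rref(rows):
    """Reduced row echelon form and list of pivot columns."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for col in range(len(m[0])):
        p = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        m[r] = [x / m[r][col] for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][col] != 0:
                m[i] = [x - m[i][col] * y for x, y in zip(m[i], m[r])]
        pivots.append(col)
        r += 1
    return m, pivots

def rank(rows):
    return len(rref(rows)[1]) if rows else 0

def nullspace(m):
    """Basis of {v : m v = 0}, one basis vector per free column."""
    red, pivots = rref(m)
    n = len(m[0])
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        v = [Fraction(0)] * n
        v[free] = Fraction(1)
        for row, p in zip(red, pivots):
            v[p] = -row[free]
        basis.append(v)
    return basis

def jordan_chains(a, lam):
    """Lists [b, M b, M^2 b, ...] with M = a - lam*I, one list per block."""
    n = len(a)
    m = [[Fraction(a[i][j]) - (lam if i == j else 0) for j in range(n)]
         for i in range(n)]
    kernels, power = [[]], m              # kernels[i] is a basis of K^i
    while True:                           # step (2)
        ker = nullspace(power)
        if len(ker) == len(kernels[-1]):
            break
        kernels.append(ker)
        power = mat_mul(power, m)
    chains = []
    for i in range(len(kernels) - 1, 0, -1):
        for chain in chains:              # step (4): grow existing chains
            chain.append(mat_vec(m, chain[-1]))
        span = kernels[i - 1] + [v for chain in chains for v in chain]
        for cand in kernels[i]:           # steps (5), (6): new chains
            if rank(span + [cand]) > rank(span):
                span.append(cand)
                chains.append([cand])
    return chains

# Hypothetical 3x3 sample with eigenvalue 2: one block of size 2, one of size 1.
print([len(c) for c in jordan_chains([[2, 1, 0], [0, 2, 0], [0, 0, 2]], 2)])
# [2, 1]
```

Step (8) then corresponds to reversing each returned list and concatenating the results; the columns of the base change matrix are these vectors in that order.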

For example, let

$$
A := \begin{pmatrix}
59 & -224 & 511 & -214 & 4 \\
16 & -61 & 139 & -58 & 1 \\
6 & -24 & 51 & -20 & 0 \\
13 & -52 & 110 & -43 & 0 \\
-4 & 12 & -38 & 20 & -1
\end{pmatrix}.
$$

Its characteristic polynomial is $(x-1)^5$. We get the following nullspace dimensions and their differences:

$$
\begin{array}{c|ccccc}
i & 0 & 1 & 2 & 3 & 4 \\ \hline
e_i = \dim K^i & 0 & 2 & 4 & 5 & 5 \\
f_i = e_i - e_{i-1} & - & 2 & 2 & 1 & 0
\end{array}
$$

At this point we already know the shape of the Jordan Canonical form of $A$: there are 2 blocks of size 1 or larger, 2 blocks of size 2 or larger, and 1 block of size 3 or larger, i.e. one block of size 2 and one block of size 3. Let us nevertheless compute the explicit base change:

We start at $i = 3$ and set $B = [\,]$. We have that $K^3 = \mathbb{R}^5$ and

$$
K^2 = \mathrm{Span}\bigl((4,1,0,0,0)^T,\ (-15/2,0,1,0,0)^T,\ (3,0,0,1,0)^T,\ (0,0,0,0,1)^T\bigr).
$$

As $f_3 = 1$ we need to find only one basis vector and pick $b_1 := (1,0,0,0,0)^T$ as first basis vector (an almost random choice; we only have to make sure it is not contained in $K^2$, which is easy to verify) and add the list $[b_1]$ to $B$.

In step $i = 2$ we first compute the image $b_2 := (A-1)b_1 = (58,16,6,13,-4)^T$ and add it to the list.

Furthermore, as $f_2 > f_3$, we have to get another basis vector in $K^2$, but not in the span of $K^1$ and $b_2$. We pick $b_3 = (4,1,0,0,0)^T$ from the spanning set of $K^2$ and verify that it indeed fulfills the conditions. We thus have $B = [[b_1,b_2],[b_3]]$.

In step $i = 1$ we now compute images again: $b_4 := (A-1)b_2 = (48,12,4,10,0)^T$ and (from the second list) $b_5 := (A-1)b_3 = (8,2,0,0,-4)^T$.

As $f_1 = f_2$ no new vectors are added.

As a result we get $B = [[b_1,b_2,b_4],[b_3,b_5]]$.

Finally we concatenate the reversed basis vector lists and get the new basis $(b_4,b_2,b_1,b_5,b_3)$.

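We thus have the base change matrix

$$
S := \begin{pmatrix}
48 & 58 & 1 & 8 & 4 \\
12 & 16 & 0 & 2 & 1 \\
4 & 6 & 0 & 0 & 0 \\
10 & 13 & 0 & 0 & 0 \\
0 & -4 & 0 & -4 & 0
\end{pmatrix}.
$$

It is easily verified that

$$
S^{-1}AS = \begin{pmatrix}
1 & 1 & 0 & 0 & 0 \\
0 & 1 & 1 & 0 & 0 \\
0 & 0 & 1 & 0 & 0 \\
0 & 0 & 0 & 1 & 1 \\
0 & 0 & 0 & 0 & 1
\end{pmatrix}.
$$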
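This verification can be done without inverting $S$, since for invertible $S$ the identity $S^{-1}AS = J$ is equivalent to $AS = SJ$. A minimal sketch in integer arithmetic follows; the helper names `mat_mul` and `det` are illustrative assumptions, and the matrices are copied from the example.

```python
A = [[59, -224, 511, -214, 4],
     [16, -61, 139, -58, 1],
     [6, -24, 51, -20, 0],
     [13, -52, 110, -43, 0],
     [-4, 12, -38, 20, -1]]

# Columns are b4, b2, b1, b5, b3 from the example.
S = [[48, 58, 1, 8, 4],
     [12, 16, 0, 2, 1],
     [4, 6, 0, 0, 0],
     [10, 13, 0, 0, 0],
     [0, -4, 0, -4, 0]]

# Jordan blocks of size 3 and 2 for eigenvalue 1.
J = [[1, 1, 0, 0, 0],
     [0, 1, 1, 0, 0],
     [0, 0, 1, 0, 0],
     [0, 0, 0, 1, 1],
     [0, 0, 0, 0, 1]]

def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def det(m):
    """Determinant by Laplace expansion along the first row (fine for 5x5)."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))

print(mat_mul(A, S) == mat_mul(S, J))  # True
print(det(S) != 0)                     # True, so S is invertible
```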

Uniqueness and Similarity

If we arrange the bases of different M-cyclic subspaces in a different way, we rearrange

the order of Jordan blocks. We therefore make the following convention for the sake of

uniqueness:

• The eigenvalues are arranged in ascending order

• Within each eigenvalue, Jordan blocks are arranged in descending size.

The resulting matrix is called the Jordan Canonical form of A and is denoted by J(A).

Lemma: Let $A, B \in F^{n\times n}$. Then $A$ and $B$ are similar if and only if $J(A) = J(B)$.

Proof: Since the Jordan Canonical form is obtained from a base change operation, we know that $A$ and $J(A)$ are similar. We thus see that if $A$ and $B$ have the same Jordan canonical form, then $A$ and $B$ must be similar.

Vice versa, suppose that $A$ and $B$ represent the same linear transformation $L$ with respect to different bases. The Jordan canonical form is determined uniquely by the dimensions $\dim\ker(L - \lambda\cdot\mathrm{id})^i$ (these numbers determine the numbers and sizes of the Jordan blocks). These dimensions are independent of the choice of basis.

The (a priori very hard) question on whether two matrices are similar can therefore be

answered by computing the Jordan Canonical form.
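Since the Jordan Canonical form is determined by the dimensions $\dim\ker(A - \lambda I)^i$, a similarity test can compare these numbers directly. The following is a sketch under stated assumptions, not a general-purpose routine: the names `kernel_profile` and `similar` are hypothetical, the caller must supply a list containing all eigenvalues of both matrices, and those eigenvalues must be rational so that exact arithmetic applies.

```python
from fractions import Fraction

def rank(rows):
    """Rank by exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for col in range(len(m[0]) if m else 0):
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
    return r

def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def kernel_profile(a, lam):
    """[dim ker (a - lam*I)^i for i = 1, ..., n]."""
    n = len(a)
    m = [[a[i][j] - (lam if i == j else 0) for j in range(n)] for i in range(n)]
    dims, power = [], m
    for _ in range(n):
        dims.append(n - rank(power))
        power = mat_mul(power, m)
    return dims

def similar(a, b, eigenvalues):
    """Compare kernel dimensions; assumes `eigenvalues` lists every
    eigenvalue of both a and b."""
    return len(a) == len(b) and all(
        kernel_profile(a, lam) == kernel_profile(b, lam) for lam in eigenvalues)

# Three hypothetical matrices with characteristic polynomial (x - 1)^2.
A = [[1, 1], [0, 1]]
B = [[1, 0], [0, 1]]
C = [[1, 0], [5, 1]]
print(similar(A, B, [1]))  # False
print(similar(A, C, [1]))  # True
```

The first pair shares its characteristic polynomial but differs in $\dim\ker(A - I)$, so the matrices are not similar; the second pair has identical kernel dimensions.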