Software Pipelining Software Pipelining of Loops (1)

advertisement

DF00100 Advanced Compiler Construction

Software Pipelining of Loops (1)

TDDC86 Compiler Optimizations and Code Generation

Software Pipelining

Literature:

C. Kessler, “Compiling for VLIW DSPs”, 2009, Section 7.2 (handed out)

ALSU2e Section 10.5

Muchnick Section 17.4

Allan et al.: Software Pipelining. ACM Computing Surveys 27(3), 1995

Christoph Kessler, IDA,

Linköpings universitet, 2014.

C. Kessler, IDA, Linköpings universitet.

2

TDDC86 Compiler Optimizations and Code Generation

Software Pipelining of Loops (2)

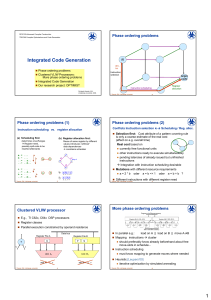

Introduction

Software Pipelining (Modulo Scheduling)

Overlap instructions in loops from different iterations

Kernel length II (initiation interval) ~ Throughput

Goal: Faster execution of entire loop

Better resource utilization,

Increase Instruction Level Parallelism,

also in the presence of loop-carried dependences

Prologue

Kernel, steady state

II

Epilogue

C. Kessler, IDA, Linköpings universitet.

3

TDDC86 Compiler Optimizations and Code Generation

C. Kessler, IDA, Linköpings universitet.

4

TDDC86 Compiler Optimizations and Code Generation

6

TDDC86 Compiler Optimizations and Code Generation

Lower Bound for MII

Definitions

Stage count (SC) = makespan for 1 iteration in terms of kernel length

Initiation Interval (II)

SC

Minimum Initiation Interval (MII)

Depends on

Data

dependence cycles (loop carried), RecMII

(registers, functional units), ResMII

Resources

MII = max( ResMII, RecMII)

ResMII = maxU ceil(NU / P),

II

NU

– Number of instructions for resource (functional unit) U

in the body

P

– Number of functional units

C. Kessler, IDA, Linköpings universitet.

5

TDDC86 Compiler Optimizations and Code Generation

C. Kessler, IDA, Linköpings universitet.

1

Calculating the Lower Bound for MII

Modulo Scheduling

max ( ResMII, RecMII )

Modulo scheduling:

Filling the Modulo Reservation Table,

one instruction by another

Heuristics

example

ASAP, As Soon As Possible

ALAP, As Late As Possible

HRMS

Swing Modulo Scheduling

Optimally

C. Kessler, IDA, Linköpings universitet.

TDDC86 Compiler Optimizations and Code Generation

7

Modulo Scheduling

Integer Linear Programming

C. Kessler, IDA, Linköpings universitet.

[Eriksson’09]

8

TDDC86 Compiler Optimizations and Code Generation

Modulo Scheduling Heuristics

Example:

Hypernode Reduction Modulo Scheduling (HRMS)

C. Kessler, IDA, Linköpings universitet.

TDDC86 Compiler Optimizations and Code Generation

9

HRMS – Motivation

Forward order / ASAP

A

Latency = 2

for all operations

v1

A

E

II = 2

C. Kessler, IDA, Linköpings universitet.

v5

Backward order/ALAP

v1

A

v2

v2

B

v1

B v2

E

D

C

v4

v5

F

v6

G

4 ALUs.

TDDC86 Compiler Optimizations and Code Generation

Schedule only nodes that have

Some nodes in the DAG are scheduled too early and

some too late

II = 2

10

Hypernode Reduction Approach

Problem with simple ASAP or ALAP heuristics:

Example:

C. Kessler, IDA, Linköpings universitet.

Only predecessors already scheduled or

Only successors already scheduled or

None of them,

but not both predecessors and successors.

B

Ensures low register pressure by scheduling nodes

v4

C

v4 v5

D

E

D

as close as possible to their relatives.

v6

v6

F

F

C

G

G

v1v2 v4 v5 v6

Ai Bi-1 Ci-2 Di-2

Ei Fi-3 Gi-4

v1 v2 v4 v5

Ai Bi-1Ci-4

Gi-4 Fi-3 Ei-2 Di-2

11

Code Generation

MaxLive = 7TDDC86 Compiler Optimizations andMaxLive

=7

C. Kessler, IDA, Linköpings universitet.

12

TDDC86 Compiler Optimizations and Code Generation

2

HRMS – Solution

Pre-Ordering Step

Two stage algorithm

Select initial node v

1.

•

2.

Pre order the nodes of the DAG

the hypernode H {v}

Reduce nodes to the hypernode iteratively

By using a reduction algorithm

Schedule according to the order given in step 1.

Remove iteratively edges and nodes in the DAG

(= reducing the DAG)

and add them to H

In each reduction step, append to list of ordered set of nodes

Similar to list scheduling / topological sorting, but now in both

directions – forward and backward along edges incident to H

C. Kessler, IDA, Linköpings universitet.

13

TDDC86 Compiler Optimizations and Code Generation

Select initial node; H {Initial node};

List = < Initial node >;

While (Pred(H) nonempty or Succ(H) nonempty) do

V’ = Pred(H);

V’ = Search_All_Paths( V’,G );

G’ = Hypernode_Reduction( V’, G, H );

L’ = Sort_PALA(G’);

// ALAP with inverted order

List = Concatenate ( List, L’ )

V’ = Succ(h);

V’ = Search_AllPaths(V’,G);

G’ = Hypernode_Reduction(V’,G,h);

L’ = Sort_ASAP(G’);

List = Concatenate ( List, L’ );

end while

return List;

15

TDDC86 Compiler Optimizations and Code Generation

14

HRMS – Example

Function pre_ordering( G )

C. Kessler, IDA, Linköpings universitet.

C. Kessler, IDA, Linköpings universitet.

Start with initial

hypernode H = { A }:

Original dependence graph

of one loop iteration:

A

B

C

H

D

G

E

F

H

G

E

J

F

H

I

G

D

E

H

F

I

E

H

J

F

J

F

F

I

E

I

H

B

F

I

E

J

B

D

B

D

H

J

B

H

B

C

I

H ”eats” successor

node C:

H

H

List = { A,C,G,H,D,J,I,E,B,F } Pred nodes to be scheduled ALAP (D,I,E,B,F),

Succ nodes scheduled ASAP (A,C,G,H,J)

TDDC86 Compiler Optimizations and Code Generation

C. Kessler, IDA, Linköpings universitet.

16

TDDC86 Compiler Optimizations and Code Generation

Pre-Ordering with circular dependencies

The Scheduling Step

Circular dependences from loop carried dependences.

Places operations in the order given by the pre-ordering step

Solution:

Different strategy depending on neighbors

Reduce complete path causing cycle to the Hypernode

How to deal with several connected cycles in DAG?

(See details in the paper, skipped).

If operation has

Only predecessors in partial schedule -> ASAP

Only successors in partial schedule -> ALAP

Both predecessors and successors in partial schedule

Scan from ASAP schedule time towards ALAP time.

(If

C. Kessler, IDA, Linköpings universitet.

17

TDDC86 Compiler Optimizations and Code Generation

no slot found, II++ and reschedule)

C. Kessler, IDA, Linköpings universitet.

18

TDDC86 Compiler Optimizations and Code Generation

3

HRMS – Example (cont.)

HRMS – Results

Resulting HRMS schedule:

Perfect Club Benchmark

A, B, C, D, E, F, G

where for E: ALAP, for others ASAP

v1

A

II = 2

v2

A

C

97.4 % of the loops gave optimal II

Works better than Slack scheduling and FRLC scheduling

(references in the paper)

About same performance as SPILP (optimal algorithm

using Integer Linear Programming, ILP) but lower

computational complexity

B

v1

B v2

E

D

C

v4

v5

F

v6

G

4 ALUs.

Latency = 2

for all operations

Comparison with other algorithms in the paper

v4

D

v5

E

v6

F

G

II = 2

Ai Bi-1Ci-2 Di-2

Fi-3 Ei-2 Gi-4

v1 v2 v4 v5 v6

MaxLive = 6

C. Kessler, IDA, Linköpings universitet.

19

TDDC86 Compiler Optimizations and Code Generation

HRMS – Conclusion

C. Kessler, IDA, Linköpings universitet.

20

TDDC86 Compiler Optimizations and Code Generation

Modulo Scheduling with Recurrences?

Works well for loops with high register pressure

Low time complexity

Tested on large benchmark suite.

Reference:

J. Llosa, M. Valero, E. Ayguadé and A. Gonzáles:

Hypernode Resource Modulo Scheduling.

Proc. 28th ACM/IEEE Int. Symposium on Microarchitecture,

pp. 350-360, IEEE Computer Society Press, 1995

C. Kessler, IDA, Linköpings universitet.

21

TDDC86 Compiler Optimizations and Code Generation

Modulo Scheduling and Register Allocation

C. Kessler, IDA, Linköpings universitet.

22

TDDC86 Compiler Optimizations and Code Generation

Modulo Scheduling and Register Allocation

Software Pipelining tends to increase register pressure

Live ranges may span over several iterations

May lead to (more) register spill

C. Kessler, IDA, Linköpings universitet.

23

TDDC86 Compiler Optimizations and Code Generation

Introduces new problems

Should we spill or increase II ?

How to choose variables to spill ?

Integrated software pipelining (later)

C. Kessler, IDA, Linköpings universitet.

24

TDDC86 Compiler Optimizations and Code Generation

4

Register Allocation

for Modulo-Scheduled Loops

Modulo Scheduling for Loops

We call a live range self-overlapping if it is longer than II

Example:

Needs > 1 physical register

Hard to address properly

without HW support

at target level

s = 0.0;

t = a[0]*b[0];

for ( i=1; i<N; i++)

{

s = s + t;

t = a[i] * b[i];

}

s = s + t;

Modulo Variable Expansion

Unroll the kernel and rename symbolic registers

until no self-overlapping live ranges remain

t

s

+1

Given:

VLIW-Processor with 3 units:

- Adder (Latency 1),

- Multiplier/MAC (Latency 3),

- Memory access unit (Latency 3)

by live range splitting (inserting copy operations before

modulo scheduling) [Stotzer,Leiss LCTES-2009]

Hardware support: Rotating Register Files

Iteration Control Pointer points to window in cyclic loop

file, advanced by25hardwareTDDC86

loop

control

Compiler

Optimizations and Code Generation

C. Kessler, IDA, register

Linköpings universitet.

Modulo Scheduling for Loops

can benefit from integration with instruction selection

s = 0.0;

t = a[0]*b[0];

for ( i=1; i<N; i++)

{

s = s + t;

t = a[i] * b[i];

}

s = s + t;

Loop-carried data dep.,

Distance +1

Simplified IR:

t

s

*

+

s

(loop control omitted for simplicity,

alternatively, ZOL)

MAC

+1

t

INDIR

INDIR

+

+

Kernel

Given:

(i=4,…,N-1):

VLIW-Processor with 3 units:

ADD

- Adder (Latency 1),

t

a+ii

- Multiplier/MAC (Latency 3),

- Memory access unit (Latency 3)

t+1

b+ii

ld

add

INDIR

+

+

Kernel

(i=4,…,N-1):

t

C. Kessler, IDA, Linköpings universitet.

Mem

MUL

s+t i-3 a[i]*b[i] i-2 ld b[i] i-1

t+1

a+i i

t+2

b+i i

26

add

b

i

ADD

ld

II = 3

ld a[i] i

TDDC86 Compiler Optimizations and Code Generation

Summary

Software Pipelining / Modulo-Scheduling

Software Pipelining: Move operations across iteration boundaries

Simplest technique: Modulo scheduling

= Fill modulo reservation table

Better resource utilization,

more ILP,

also in the presence of loop-carried data dependences

In general, higher register need, maybe longer code

b

i

a

INDIR

t

Example:

*

+

s

(loop control omitted for simplicity,

alternatively, ZOL)

mul

add

a

A-priori avoidance of self-overlapping live ranges

Loop-carried data dep.,

Distance +1

Simplified IR:

Heuristics e.g. HRMS, Swing Modulo Scheduling, …

Optimal methods e.g. Integer Linear Programming

MUL

mac i-3

Mem

ld b[i] i-1

ld a[i] i

II = 2

(Problem is NP-complete like acyclic scheduling)

Self-overlapping live ranges need special treatment

Loop unrolling can leverage additional optimization potential

Up to now, only at target code level,

C. Kessler, IDA, Linköpings universitet.

27

TDDC86 Compiler Optimizations and Code Generation

hardly integrated (sometimes with

registerTDDC86

allocation)

Compiler Optimizations and Code Generation

28

C. Kessler, IDA, Linköpings universitet.

5