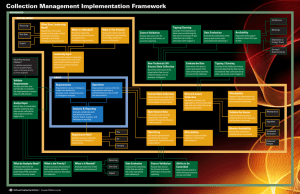

Implementation Framework – Collection Management

Troy Townsend
Troy Mattern
Jay McAllister
September 2013

CARNEGIE MELLON UNIVERSITY | SOFTWARE ENGINEERING INSTITUTE

Copyright 2013 Carnegie Mellon University

This material is based upon work funded and supported by ODNI under Contract No. FA8721-05-C-0003 with Carnegie Mellon University for the operation of the Software Engineering Institute, a federally funded research and development center sponsored by the United States Department of Defense.

Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of ODNI or the United States Department of Defense.

NO WARRANTY. THIS CARNEGIE MELLON UNIVERSITY AND SOFTWARE ENGINEERING INSTITUTE MATERIAL IS FURNISHED ON AN “AS-IS” BASIS. CARNEGIE MELLON UNIVERSITY MAKES NO WARRANTIES OF ANY KIND, EITHER EXPRESSED OR IMPLIED, AS TO ANY MATTER INCLUDING, BUT NOT LIMITED TO, WARRANTY OF FITNESS FOR PURPOSE OR MERCHANTABILITY, EXCLUSIVITY, OR RESULTS OBTAINED FROM USE OF THE MATERIAL. CARNEGIE MELLON UNIVERSITY DOES NOT MAKE ANY WARRANTY OF ANY KIND WITH RESPECT TO FREEDOM FROM PATENT, TRADEMARK, OR COPYRIGHT INFRINGEMENT.

This material has been approved for public release and unlimited distribution except as restricted below.

Internal use:* Permission to reproduce this material and to prepare derivative works from this material for internal use is granted, provided the copyright and “No Warranty” statements are included with all reproductions and derivative works.

External use:* This material may be reproduced in its entirety, without modification, and freely distributed in written or electronic form without requesting formal permission. Permission is required for any other external and/or commercial use. Requests for permission should be directed to the Software Engineering Institute at permission@sei.cmu.edu.
* These restrictions do not apply to U.S. government entities.

DM-0000620

Background

The Software Engineering Institute (SEI) Emerging Technology Center at Carnegie Mellon University studied the state of cyber intelligence across government, industry, and academia to advance the analytical capabilities of organizations by using best practices to implement solutions for shared challenges. The study, known as the Cyber Intelligence Tradecraft Project (CITP), defined cyber intelligence as the acquisition and analysis of information to identify, track, and predict cyber capabilities, intentions, and activities to offer courses of action that enhance decision making.

One of the most prevalent challenges observed in the CITP was that organizations were inundated with data. One company noted that it subscribed to multiple feeds from third party intelligence vendors, only to discover that the vendors largely reported the same information. Further compounding the problem, the same information would be reported by multiple government sources a couple of days later. Other organizations collected information without necessarily understanding why or what to do with it; an organization surveyed in CITP was capturing passive domain name system (DNS) information, but deleted it before any analysis was performed because it took up too much storage space.

These examples highlight the necessity for a process to organize and manage data gathering efforts. Knowing where to get information and what type of information is needed ensures that key stakeholders, most importantly leadership, are provided the information needed to enhance their decision making. In government and military intelligence organizations, this process is called collection management.

Throughout the course of the CITP research, it became apparent that commercial organizations were incorporating some facets of collection management into their cyber intelligence processes. Most borrowed the government terminology and identified it as collection management, although some did use variations of this term (prioritization management or requirements management). For the purposes of this framework, we will use the term collection management, and define it as the process of overseeing data gathering efforts to satisfy the intelligence requirements of key stakeholders.

Three core aspects lie at the heart of the collection management process: a requirement, the actual data gathering, and analysis of the data to answer the requirement. As organizations become more advanced in their implementation of this process, more factors need to be considered. This implementation framework divides these factors into three levels: basic, established, and advanced.

Not every organization needs to employ an advanced collection management process – it will be up to individual organizations to assess what their needs are and pick the right mix of factors for themselves. What this framework provides is a method for adding rigor to the three facets of collection management through the combination of best practices in use by CITP participants.

Implementation

Basic Collection Management: Organizations looking to operate a basic collection management program should develop the three core pillars of collection management: requirements, collection operations, and analysis.

Collection Management in Practice
A CITP participant in the IT sector assigns one senior analyst to run collection management specifically for their open source intelligence.
The analyst looks for duplication of data, tracks sources to ensure they are still relevant, and feeds data to the various business units (such as product development) based on each unit’s specific needs.

At the basic level, a requirements process requires the organization to have a mechanism in place to collect and document the intelligence requirements of its stakeholders. Traditional stakeholders include leadership, business units or operational units, network security, and intelligence analysts. The mechanism to collect these requirements can be formal or informal (e-mail submission or captured in weekly meetings), but stakeholders need to know how to request information.

Collection operations is the process of taking requirements and marrying them up to available data. For a basic collection operations process, the key is for the organization to understand its access to data sources and the location of its knowledge gaps. Acknowledging the existence of these gaps and understanding where they are is as important as knowing what data can be obtained internally and ensuring the most relevant data is being made available for analysis.

Analysis and reporting is the process of taking the collected data and turning it into intelligence by fusing sources, adding context, and providing analysis. The resulting report aids the decision making process for leadership and other stakeholders.

Indicators of Success
• Stakeholders in the organization know who to go to for intelligence needs and how to submit their requests
• The organization understands what data is available and that some requirements cannot be met using existing sources
• The organization has identified duplicative or irrelevant data sources
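One way to spot duplicative sources, such as vendor feeds that largely report the same information, is to measure how much the indicators from each pair of feeds overlap. The sketch below is a minimal illustration, not a method described by CITP participants. The feed names, the indicator values, the `find_duplicative_feeds` helper, and the 0.8 threshold are all assumptions chosen for the example.

```python
from itertools import combinations


def jaccard(a: set, b: set) -> float:
    """Share of indicators two feeds have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_duplicative_feeds(feeds: dict, threshold: float = 0.8) -> list:
    """Return (feed_a, feed_b, overlap) for feed pairs at or above the threshold.

    The threshold is an assumed cut-off; an organization would tune it.
    """
    pairs = []
    # Sort by feed name so the output order is stable.
    for (name_a, items_a), (name_b, items_b) in combinations(sorted(feeds.items()), 2):
        overlap = jaccard(items_a, items_b)
        if overlap >= threshold:
            pairs.append((name_a, name_b, round(overlap, 2)))
    return pairs


# Hypothetical feeds and indicators, for illustration only.
feeds = {
    "vendor_a": {"198.51.100.7", "bad.example", "203.0.113.9", "evil.example"},
    "vendor_b": {"198.51.100.7", "bad.example", "203.0.113.9", "evil.example", "192.0.2.44"},
    "internal_ids": {"192.0.2.44", "10.0.0.5"},
}

for feed_a, feed_b, overlap in find_duplicative_feeds(feeds):
    print(f"{feed_a} and {feed_b} overlap by {overlap:.0%}")
```

A high overlap does not by itself mean a feed should be dropped. One of the two may report earlier or add context, which is a judgment the collection manager still has to make.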
Established Collection Management: Organizations that operate a more sophisticated collection management process build off the capabilities established in the basic process. Adding rigor to the requirements process and assessing the quality of the source data facilitates answering the right questions with the best available data.

Collection Management in Practice
A CITP participant in the financial sector works closely with leadership to help determine priorities. The CIO often lets the Threat Intelligence branch know what keeps him up at night, and the Threat Intelligence branch has worked closely with senior officers in risk management, drawing out their questions, to formalize intelligence requirements.

As stakeholders learn where to go for their intelligence needs, the likely outgrowth is an abundance of requests that can overwhelm the intelligence analysis and reporting capacity of the organization. Established collection management programs realize that not all requests are created equal; some requests have a higher priority than others.

At this intermediate stage of development, the first step is to separate and prioritize leadership’s requirements from the rest of the requirements. Depending on the organization, the definition of “leadership” will fluctuate, from C-level officers to directors of security, business unit management, or directors of federal agencies. The key is to identify what level of leadership receives this priority status and designate their requests as Priority Intelligence Requirements (PIRs).

PIRs should be evaluated for two factors: what leadership needs and when they need the information. Determining what leadership needs entails identifying the information that leadership requires in order to support critical decision making. Once captured, it is important to determine when this information is needed. Are these one-time requests from leadership?
Are they standing requirements? Is the requirement so critical that once there is data to support an answer, it must be reported urgently? Armed with the knowledge of what information is required and when it is needed, an overall priority can be assigned to the PIR vis-à-vis the other PIRs in the system. This priority aids in determining what resources are needed in the collection operations process.

Evolving collection operations from the basic level requires assessing and managing the previously identified data sources. Collection managers at this level should ensure there are enough data sources to provide redundancy (if needed) and corroboration of data. This can be accomplished through a simple matrix that maps PIRs to the sources of available data. The matrix is an easy way to visualize if there are sources available to answer the PIRs and if there are multiple sources that, when correlated, provide a higher level of confidence in the data. The matrix also shows gaps in sources. These gaps can be outsourced to third party intelligence service providers or used as justification to field new hardware, personnel, or tools to collect the information. When complete, the matrix will show internal data sources, open sources, and third party sources.

The internal data sources mostly will be network-based, such as intrusion detection system (IDS) logs, proxy logs, host-based logs, netflow, etc. The key considerations for the network-based data are affordability, availability, and ensuring the data being collected is valid. Organizations should ensure that the cost of collecting the data is justified. Similarly, if additional network insight is required to answer a PIR, the same calculus can be applied to justify the purchase. If a sensor is in place, it is important to monitor its availability.
Planning for system maintenance, downtimes, upgrades, and unscheduled outages will help collection managers prepare for periods when information will not be available and other sources may be needed. Lastly, the collection manager should validate the reliability of the system and ensure that it is collecting the right data.

Open source and third party data fill out the data source matrix. The key consideration here is whether outsourcing is affordable and makes sense. Is the information that is being outsourced to a third party also freely available in open source? If so, does the organization have the personnel to collect and analyze it?

Advancing analysis and reporting from the basic level simply requires the analyst to identify if the requirements are being met with the data that is being collected. In many cases, the requirements are partially answered, so additional or continued collection is necessary. In other cases, the data provided did not address the PIRs, so the collection manager has to re-evaluate which sources of data are best suited to answer them. When requirements are met, the task can be closed out. Ideally, organizations will track which PIRs have been closed so that if the issue re-emerges in the future, the organization has data already available and knows where to get new information if required.
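The PIR-to-source matrix described for the established level can be kept in something as simple as a spreadsheet. The sketch below is one hypothetical way to hold it in code. It assumes illustrative PIR identifiers, questions, and source names, and made-up labels ("gap", "single source", "corroborated"); the framework itself prescribes none of these.

```python
from dataclasses import dataclass, field


@dataclass
class PIR:
    pir_id: str
    question: str
    priority: int                       # 1 = highest priority (assumed convention)
    sources: set = field(default_factory=set)


def coverage_report(pirs: list) -> dict:
    """Label each PIR by how many sources can answer it, highest priority first."""
    report = {}
    for pir in sorted(pirs, key=lambda p: p.priority):
        if not pir.sources:
            report[pir.pir_id] = "gap"              # candidate for outsourcing or new tools
        elif len(pir.sources) == 1:
            report[pir.pir_id] = "single source"    # no redundancy or corroboration
        else:
            report[pir.pir_id] = "corroborated"
    return report


def render_matrix(pirs: list, all_sources: list) -> str:
    """Render the matrix as text: one row per PIR, one column per source."""
    rows = ["PIR".ljust(8) + "".join(s.ljust(14) for s in all_sources)]
    for pir in sorted(pirs, key=lambda p: p.priority):
        cells = "".join(("X" if s in pir.sources else "-").ljust(14) for s in all_sources)
        rows.append(pir.pir_id.ljust(8) + cells)
    return "\n".join(rows)


# Hypothetical PIRs and sources, for illustration only.
all_sources = ["ids_logs", "proxy_logs", "open_source", "vendor_feed"]
pirs = [
    PIR("PIR-2", "Is customer data being staged for exfiltration?", 2, {"proxy_logs"}),
    PIR("PIR-1", "Which actors are targeting our sector?", 1, {"ids_logs", "vendor_feed"}),
    PIR("PIR-3", "Are suppliers being used as an entry point?", 3),
]

print(render_matrix(pirs, all_sources))
print(coverage_report(pirs))
```

Sorting by priority puts the most important unanswered requirements at the top, which is where a gap most clearly justifies new collection resources.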
Indicators of Success
• The organization tracks satisfied requirements so data/sources can be re-used if the same issue arises again
• The organization maintains an updated matrix depicting sources of data from internal, open source, and third party sources
• The organization can validate and justify the costs of third party intelligence providers/consortiums
• The organization maintains a prioritized list of leadership’s intelligence requirements

Advanced Collection Management: Organizations looking to field advanced collection management processes will invest additional resources in managing data sources and prioritizing intelligence requirements from multiple stakeholders, including analysts themselves.

With PIRs from leadership defined and prioritized, the next step is to incorporate the needs of other stakeholders. One set of requirements commonly overlooked in the commercial sector was that of the analysts. Organizations operating a more sophisticated collection management process must take into account the PIRs of the intelligence analysts, and apply the same standards of organization and prioritization as PIRs from other stakeholders. Analysts must assess the priority of their requirements, as well as the urgency with which they are needed.

With PIRs being generated and prioritized for all the organization’s stakeholders, it is important to validate the requirements on a regular basis. Even if a requirement is still valid, its priority may have changed over time. Validating the requirements helps ensure that the data collection is focused on the most relevant needs of the stakeholders.

Collection Management in Practice
A CITP participant in the IT sector aligns its analysts’ intelligence requirements with the company’s five year strategic plan. This allows them to stay proactive and relevant to the business units as the company’s goals evolve.
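Regular validation of requirements can be supported by a small register that records who asked, the current priority, the timing, and when the requirement was last reviewed. The sketch below is a hypothetical illustration. The field names, the 90-day review interval, and the sample requirements are assumptions, not part of the framework.

```python
from dataclasses import dataclass, replace
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class Requirement:
    req_id: str
    stakeholder: str        # e.g. "leadership" or "analyst"
    priority: int           # 1 = highest priority (assumed convention)
    timing: str             # "one-time", "recurring", or "urgent"
    last_validated: date


def due_for_validation(reqs: list, today: date, max_age_days: int = 90) -> list:
    """Return IDs of requirements not reviewed within the assumed interval."""
    cutoff = today - timedelta(days=max_age_days)
    return [r.req_id for r in reqs if r.last_validated < cutoff]


def revalidate(req: Requirement, today: date, still_valid: bool,
               new_priority: Optional[int] = None) -> Optional[Requirement]:
    """Return the refreshed requirement, or None if it is no longer valid.

    A still-valid requirement may keep its priority or be given a new one.
    """
    if not still_valid:
        return None
    priority = req.priority if new_priority is None else new_priority
    return replace(req, priority=priority, last_validated=today)


# Hypothetical requirements, for illustration only.
today = date(2013, 9, 10)
reqs = [
    Requirement("PIR-1", "leadership", 1, "recurring", date(2013, 5, 1)),
    Requirement("AIR-7", "analyst", 3, "one-time", date(2013, 8, 20)),
]

print(due_for_validation(reqs, today))
print(revalidate(reqs[0], today, still_valid=True, new_priority=2))
```

Holding analyst and leadership requirements in the same structure reflects the point that both are organized and prioritized to the same standard.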
The other process that evolves is collection operations. The first big step from the previous capability is validating third party collection and evaluating their provided data. Information coming from third party providers usually can be tied to a discrete collection capability that can be validated to confirm it has the right level of access and assess its history of accurate reporting. This ties closely to evaluating the data that comes from third party providers. Just as with an organization’s own data collection, the usefulness of the third party data needs to be evaluated, which helps justify the costs to obtain it. Lastly, organizations should determine if the third party source can be directly controlled. If so, it can be integrated into the organization’s own collection matrix and adjusted as needed.

Organizations adopting an advanced collection management process must look at data sources beyond network data. These sources of information include data from business units, physical security, or even human sources. As with all other data gathering, the sources need to be validated and the information needs to be evaluated to ensure that accurate and actionable information is being provided to the analysts. Tracking the availability of these information sources can be difficult, but certain events may be recurring that are easier to monitor, such as conferences, meetings, or overseas business travel.

The last concept to consider is tipping and queuing. This is the idea that collecting information from the data sources can be improved by coordinating activities between multiple sensors, or if certain events cause the collection to begin or end. If configured correctly, tipping and queuing makes the collection apparatus more refined, and provides a smaller set of highly relevant data for analysts to work with in their daily operations.

Indicators of Success
• More cost effective data gathering
• Greater understanding by the analysts/data collectors as to what information is actually needed
• Improved and more efficient coordination with third party intelligence providers

Figure: Collection Management Implementation Framework (09.10.2013). The diagram arranges the elements below across three levels (Basic, Established, Advanced) and three pillars (Requirements, Operations, Analysis & Reporting).

Requirements
• Requirements: Organizations receive intelligence needs from leadership, business units, network security, or intelligence analysts
• Leadership Input: Separate the needs of leadership from the needs of the rest of the organization and focus special attention to answering these requirements
• What Does Leadership Need? Identify the information needed to help make critical decisions (commonly referred to as Priority Intelligence Requirements)
• When is it Needed? (Recurring, One-time, Urgent) Determine reporting timeliness and recurrence for high priority intelligence requirements
• What Is The Priority? Assess the relative priority of intelligence requirements, which helps determine resource allocation
• Analyst Input: Not all data is immediately used for reporting; data also can be collected to help analysts build the bigger story
• What do Analysts Need? Analysts determine the information needed to answer leadership’s PIRs and other business/security needs
• What is the Priority? Analysts assess the priority of their requirements, which in turn drives resource allocation for collection
• When is It Needed? (Recurring, One-time, Urgent) Analysts determine if their requirement is time bound
• Validate Requirements: Accept, prioritize, and periodically re-evaluate the requirements to ensure they are still relevant
• What Priority Does it Retain? A validated requirement may be assigned higher, lower or the same priority as it was originally

Operations
• Operations: Organizations receive collection requirements and look at available data sources to fulfill the requirements
• Internal Data Collection: These sources are under direct control of the organization and can be tasked to collect data
• Network-based Collection: Hardware, software, log aggregators, and the associated data and meta-data that can be collected from them
• Affordability: Assess if the organization can afford to collect, process, and store network-based data
• Validate the Sensor: Confirm that the system is collecting the correct data and that the data is accurate
• Monitor Availability (Maintenance, Upgrades, Scheduled Downtime, Unplanned Outages): Ensure data collection is available when it is needed
• Open Source Data: This is data external to the organization, but widely available through the Internet or other sources
• Third Party: Information gathered and provided by an external organization, typically through a subscription
• Third Party Affordability: Assess if outsourced data collection is financially advantageous
• Non-Technical (All-Source) Data Collection: Data from other parts of the organization that are not network-based, such as business intelligence
• Source Validation: Assess data source for its level of access and history of accurate reporting
• Data Evaluation: Evaluate the usefulness of the data as well as the costs associated with obtaining it
• Availability (Conference, Meetings, Employees on Foreign Travel, Surveys/Questionnaires): Keep track of the opportunities to collect this type of data
• Tipping/Queuing: Consider if the likelihood of these sources obtaining the data can be increased if data collection starts after a particular event triggers it
• Ability to be Controlled: Determine the extent to which third party source can be controlled

Analysis & Reporting
• Analysis & Reporting: Analysts collaborate with operations to receive data for fusion, analysis, and intelligence reporting
• Evaluate the Data: Determine if the data is useful and assess if the value of the data warrants the cost of the collection
• Requirement Met? (Yes, No, Partially) Analysts assess if collected data answers intelligence requirements
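The tipping and queuing concept, in which certain events cause collection to begin or end, can be pictured as a small set of event-to-action rules. The sketch below is a hypothetical illustration only. The `Collector` and `TipRouter` classes, the event names, and the packet capture example are assumptions and do not describe any particular sensor product or CITP participant.

```python
from dataclasses import dataclass, field


@dataclass
class Collector:
    """A stand-in for any data source that can be switched on or off."""
    name: str
    active: bool = False
    history: list = field(default_factory=list)

    def start(self, reason: str) -> None:
        self.active = True
        self.history.append(f"start ({reason})")

    def stop(self, reason: str) -> None:
        self.active = False
        self.history.append(f"stop ({reason})")


class TipRouter:
    """Maps an event reported by one sensor to actions on other collectors."""

    def __init__(self) -> None:
        self._rules = {}    # event type -> list of (collector, action)

    def add_rule(self, event_type: str, collector: Collector, action: str) -> None:
        if action not in ("start", "stop"):
            raise ValueError("action must be 'start' or 'stop'")
        self._rules.setdefault(event_type, []).append((collector, action))

    def tip(self, event_type: str) -> None:
        """Apply every rule registered for this event; unknown events are ignored."""
        for collector, action in self._rules.get(event_type, []):
            if action == "start":
                collector.start(event_type)
            else:
                collector.stop(event_type)


# Hypothetical rule set: an IDS alert tips full packet capture on,
# and closing the alert turns it off again.
packet_capture = Collector("full_packet_capture")
router = TipRouter()
router.add_rule("ids_alert", packet_capture, "start")
router.add_rule("alert_closed", packet_capture, "stop")

router.tip("ids_alert")
print(packet_capture.active, packet_capture.history)
router.tip("alert_closed")
print(packet_capture.active, packet_capture.history)
```

Because the expensive collector only runs between the triggering and closing events, analysts receive a smaller and more relevant data set than continuous capture would produce.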