Risk analysis

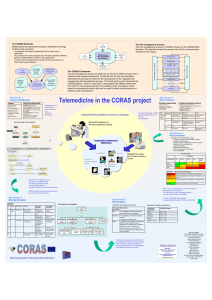

Marcus Bendtsen
Department of Computer and Information Science (IDA)
Division for Database and Information Techniques (ADIT)

Risk
• risk = consequence * probability
• This is the classical definition that we will use in this lecture, but each of the factors can be decomposed:
  • Probability is a combination of the probability of a vulnerability and the probability of a threat.
  • Consequence can be of different types: money, goodwill, etc.

Example: Every row in our database is worth $0.01 when it is protected. There are 10 000 rows in the database. The probability that somebody can steal our database is 0.5, thus the risk is: ($0.01 * 10000) * 0.5 = $50. If somebody is selling protection for $100, then we would lose money by buying the protection, but if somebody is willing to sell protection at $30, then it may be worth buying, since it costs $20 less than the $50 risk it addresses.

Risk analysis
• Risk analysis is a process of finding and quantifying threats using the aforementioned equation (risk = consequence * probability).
• The challenge is doing this in an organised manner, so that as many threats as possible are found and the quantification is as correct as possible (a quantitative risk measurement is not always possible; sometimes a qualitative one is necessary).
• It is not possible to find all threats, since no single individual or group has complete and clear insight into all parts of a system.

Some attacks
• An attack is the realisation of a threat.
• Automated attacks
  • Worms and viruses
  • Target low-hanging fruit
  • Extremely common
  • You have likely been exposed to these, but you may not be aware.
• Targeted attacks
  • Aimed at specific targets
  • Performed with a specific aim
  • Uncommon

Some attackers
• Curious attackers
• Ideological attackers
• For-profit attackers
• Corporate attackers
• Insiders
• Terrorists
• Nation states
The type of attacker we deal with affects the risk analysis as well.
Some attackers
• Curious attackers
  • Computers were new, wanted to learn for fun.
• Ideological attackers
  • Defacing governments or businesses.
• For-profit attackers
  • Make money from breaking into systems. Target systems that have value for them or their clients.
• Corporate attackers, terrorists and nation states
  • More exotic, significant resources. Most of the time not detected. Consequences can be disastrous, luckily very rare.
• The type of attacker affects risk analysis.
  • Protecting against automated attacks is very different from protecting against nation states.
  • Motivation, risk aversion, capabilities, patience, etc.

Some purposes
• Break into systems
  • To steal information
  • To manipulate information
  • To use resources
• Take control over systems
  • To perform new attacks
  • To manipulate systems
• Disrupt service (Denial of Service)
  • To extort target
  • To discredit target
  • To facilitate other attacks

Risk analysis - difficulties
• Risk analysis is difficult.
• Sometimes it is not hard to find the correct consequences; one may know the consequence if a threat is realised.
• It is however very hard to estimate the probability:
  • A system can be targeted by attackers that test the same attack on millions of systems, or by somebody that is specifically targeting the system.
  • The probabilities for success are very different.
• Estimating incorrect probabilities can lead to one threat being judged as high risk, so that resources are put towards mitigating it, while in reality another threat had a higher risk (and was not mitigated).

Risk analysis methods in general
• The analysis needs to be constrained to a certain part of the system:
  • Not all details can be assessed in one single analysis; attempting this often leads to a type of “analysis paralysis”.
  • Another type of “analysis paralysis” comes from iterating the risk analysis indefinitely.
• Depth of analysis needs to be constrained: Are you only going to consider the programs running on a system, or are you also going to look at the source code of the programs?
• Qualitative or quantitative? Will you be using real numbers to quantify the risk equation or are you going to use qualitative values such as “high-mid-low”?

Risk analysis methods in general
• There exist many methods for risk analysis.
• A common problem is that they expect the analyst to find all threats, vulnerabilities, etc.
• This will lead to subjective opinions being part of the analysis: different people will weigh the consequence and/or the probability of a threat differently.
• However, there is no closed-form mathematical formula to solve the problem, so we must resort to heuristic methods, even though they are not guaranteed to be globally optimal.
• Some methods use “brainstorming”, such that the analysis is done by more than one person. The motivation is that you find more threats this way, however there are group dynamic issues (for instance, the one that speaks the loudest gets their opinion through).

Why bother?
Just because a problem doesn’t have a solution, doesn’t mean that it isn’t a problem. - Timothy Geithner (sort of, I didn’t look up the exact quote)

Risk analysis methods – This lecture
• In this lecture we will look at three different risk analysis methods:
  • CORAS
  • Information Security Risk Analysis Method (ISRAM)
  • Attack Trees

CORAS
• CORAS defines a language to model threats and risks.
• CORAS consists of 7 steps, where every step moves towards a quantification of the risks. (Sometimes CORAS is defined with 8 steps, but that is the 7-step method with an additional step that we skip.)
• F. den Braber, I. Hogganvik, M. S. Lund, K. Stølen, F. Vraalsen, "Model-based security analysis in seven steps - a guided tour to the CORAS method". This is the CORAS that we use in this course, and the CORAS you should know.
CORAS – Step 1
• Customers = Those who own the system that is to be analysed.
• Security experts = Those who perform the risk analysis (can be consultants or in-house).
• The initial meeting between the experts and customers is concerned with defining the scope (constraints).
• It must become clear which assets are to be protected.
• The boundaries of the analysis (depth and width) must be clearly defined, i.e. which parts of the system should be considered, and how deeply.

CORAS – Step 1
[Figure: Fig 2, picture of the target.]
A “low-tech” picture of the system is drawn at the initial meeting in step 1. In this drawing it is ok to include parts of the system that should not be subject to the analysis. For instance, in this case the connection to the database should not be part of the analysis, yet it is in this picture for completeness. In the picture we see that speech and other data from the examination of a patient is streamed over a dedicated network.

CORAS – Step 2
• The system is formally defined using UML by the security experts (class, collaboration, activity).
• The experts also produce a CORAS asset diagram.
• Direct assets and indirect assets:
  • Indirect assets are assets that are hurt due to a direct asset being hurt.
  • Arrows are drawn to show how damage to an asset affects other assets.
• A new meeting is set up with experts and customers where the experts show the diagrams and the customers can make amendments.

CORAS – Step 2
[Figure: Fig 3, class diagram showing a conceptual view of the target (medical equipment, cardiologist terminal, GP terminal, dedicated connection, cardiologist, GP).]
[Figure: class diagram and collaboration diagram. The collaboration diagram illustrates the physical communication lines (medical equipment, cardiologist terminal, GP terminal, firewall, database, dedicated connection, Internet).]

The asset diagram shows the client (the stakeholder that is initiating and paying for the analysis) and its four assets: ‘Health records’, ‘Provision of telecardiology service’, ‘Patient’s health’ and ‘Public’s trust in system’. Because trust and health are difficult to measure, especially in a technical setting like this, the analysis leader makes a distinction between direct and indirect assets. He explains direct assets as assets that may be harmed directly by an unwanted incident, while the indirect assets are only harmed if one of the direct assets is harmed first.

After agreeing on the assets, a high-level analysis is conducted together with the participants. The short brainstorming identifies the most important threats and vulnerabilities without going into great detail. In this case the client is worried about hackers, eavesdroppers, system failure and whether the security mechanisms are sufficient.

CORAS – Step 2
[Figure: Fig 5, activity diagram describing the parallel processes of the GP and the cardiologist (log on, retrieve health record, establish connection, acknowledge connection, connect medical equipment, open health record, examine patient, review examination, update health record, close connection, log out).]
[Figure: Fig 6, asset diagram (not part of UML). Client: Ministry of Health. Direct assets: health records, provision of telecardiology service. Indirect assets: patient’s health, public trust in system.]

Step 2 summary
Tasks:
• the target as understood by the analysts is presented,
• the assets are identified,
• a high-level analysis is conducted.
People that should be present:
• security analysis leader (required),
• security analysis secretary (required),
• representatives of the client: decision makers (required), technical expertise (required), users (optional).
Modelling guidelines for asset diagrams:
• draw a region that logically or physically represents the target of analysis,
• place the direct assets within the region,
• place the indirect assets outside the region (indirect assets are harmed as a consequence of a direct asset being harmed first),
• indicate with arrows which assets may affect other assets,
• assets may be ranked according to their importance,
• if the analysis has more than one client, the clients should be associated with their assets.

CORAS – Step 2
• Once the diagrams have been accepted by the customer, a brainstorming session is performed (with both customers and experts).
• Here, it is important to identify what threats the clients are worried about, e.g. that an external person sees or hears something that is private, etc.
• These are not necessarily the most important threats, but they are a good starting point for the experts' in-depth analysis.
• The brainstorming leads to a risk table (below).

CORAS – Step 2
Table 1: High-level risk table. The first column holds the threat (deliberate, accidental or non-human), the second the threat scenario, unwanted incident and asset, and the third the vulnerability.

| Who/what causes it? | How? What is the incident? What does it harm? | What makes it possible? |
|---|---|---|
| Hacker | Breaks into the system and steals health records | Insufficient security |
| Employee | Sloppiness compromises confidentiality of health records | Insufficient training |
| Eavesdropper | Eavesdropping on dedicated connection | Insufficient protection of connection |
| System failure | System goes down during examination | Unstable connection/immature technology |
| Employee | Sloppiness compromises integrity of health record | Prose-based health records (i.e. natural language) |
| Network failure | Transmission problems compromise integrity of medical data | Unstable connection/immature technology |
| Employee | Health records leak out by accident, which compromises their confidentiality and damages the trust in the system | Possibility of irregular handling of health records |

Step 3: approval. The last of the preparatory steps is the approval step. The approval is often conducted as a separate meeting, but may also take place via e-mail. The main goal is to finalise the documentation and characterisation of target and assets.

A risk is the potential for an unwanted incident to have an impact upon objectives (assets) [4], or in other words to reduce the value of at least one of the identified assets. Often the client accepts some risks that are not judged to be critical rather than eliminating or reducing them.

CORAS – Step 3
• The last step of preparation.
• At the end of this step there are several documents that must be present and agreed upon by both customers and experts.
• Four more documents are authored:
  • Sorting of assets (which assets are most important)
  • Consequence scales (sometimes several scales are needed depending on the assets; it is easy to put numerical values on some assets and hard or impossible on others)
  • Probability scales (time: years, weeks, hours, etc. or probabilities: 10%, 20%, 1%)
  • Risk evaluation matrix
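As one illustration of a probability scale, the sketch below encodes the five-level likelihood scale used in the CORAS example (Table 4, shown further on). The interval bounds are read from that table's "incidents per 10 years" annotations; the names `LIKELIHOOD_SCALE` and `likelihood_level` are hypothetical and not part of CORAS.

```python
# Inclusive upper bound of incidents per ten years for each level, read from
# the "x-y: 10y" annotations of the likelihood scale. None = no upper bound.
# (Hypothetical names; CORAS itself does not prescribe any code.)
LIKELIHOOD_SCALE = [
    ("rare", 1),        # 0-1 incidents per 10 years
    ("unlikely", 5),    # 2-5
    ("possible", 20),   # 6-20
    ("likely", 49),     # 21-49
    ("certain", None),  # 50 or more
]


def likelihood_level(incidents_per_ten_years: int) -> str:
    """Map an expected incident count per ten years to a likelihood level."""
    if incidents_per_ten_years < 0:
        raise ValueError("incident count cannot be negative")
    for level, upper in LIKELIHOOD_SCALE:
        if upper is None or incidents_per_ten_years <= upper:
            return level
    raise AssertionError("unreachable: the last level has no upper bound")


if __name__ == "__main__":
    for count in (0, 3, 12, 30, 75):
        print(count, "->", likelihood_level(count))
```

Keeping the scale as data rather than as nested conditions makes it easy to swap in a different scale when the customer and experts agree on other intervals.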
CORAS – Step 3
Sorting of assets

Table 2: Asset table.

| Asset | Importance | Type |
|---|---|---|
| Health records | 2 | Direct asset |
| Provision of telecardiology service | 3 | Direct asset |
| Public’s trust in system | (Scoped out) | Indirect asset |
| Patient’s health | 1 | Indirect asset |

Consequence scales (may need more than one)

Table 3: Consequence scale for ‘health records’.

| Consequence value | Description |
|---|---|
| Catastrophic | 1000+ health records (HRs) are affected |
| Major | 100-1000 HRs are affected |
| Moderate | 10-100 HRs are affected |
| Minor | 1-10 HRs are affected |
| Insignificant | No HR is affected |

Probability scales (may need more than one)

Table 4: Likelihood scale. The notation "50-*: 10y" is short for 50 or more incidents per 10 years, equivalent to 5 or more incidents per year.

| Likelihood value | Description |
|---|---|
| Certain | Five times or more per year (50-*: 10y = 5-*: 1y) |
| Likely | Two to five times per year (21-49: 10y = 2.1-4.9: 1y) |
| Possible | Once a year (6-20: 10y = 0.6-2: 1y) |
| Unlikely | Less than once per year (2-5: 10y = 0.2-0.5: 1y) |
| Rare | Less than once per ten years (0-1: 10y = 0-0.1: 1y) |

Because assets of different types are involved, they make separate consequence scales for each of the direct assets. Table 3 shows the consequence scale defined for the asset ‘Health records’ in terms of number of health records affected. If feasible, the consequence description for an asset may include more than one measure, e.g. ‘major’ could be the number of disclosed health records, or the number of deleted records, etc.

Table 4 gives the likelihood scale defined for the target as such. The intervals follow a rule of thumb: the lowest incident likelihood ‘rare’ is set to be a maximum of one occurrence during the target’s lifetime; the remaining intervals have an increasing number of expected events until the maximum possible number of incidents per year is reached. By using the same scale for all scenarios and incidents, it is possible to extract combined likelihood values as shown later in the risk estimation step.

After completing this task for all assets the analysts and the participants have the framework and vocabulary they need to start identifying threats (a potential cause of an unwanted incident [5]), vulnerabilities (weaknesses which can be exploited by one or more threats [5]), unwanted incidents and risks, and can move on to the next step.

Step 3 summary
Tasks:
• the client approves target descriptions and asset descriptions,
• the assets should be ranked according to importance,
• consequence scales must be set for each asset within the scope of the analysis,
• a likelihood scale must be defined,
• the client must decide risk evaluation criteria for each asset within the scope of the analysis.
Participants:
• the same as in the previous meeting, but, since this step sets the boundaries for the further analysis, it is important that the relevant decision-makers are present.

CORAS – Step 3
Risk evaluation matrix
The security analysis leader marks the cells in the matrix as ‘acceptable’ or ‘must be evaluated’. The resulting risk evaluation matrix is shown in Table 5. The participants decide to let this matrix cover the other assets as well.

Table 5: Risk evaluation matrix (rows: frequency, columns: consequence).

| Frequency | Insignificant | Minor | Moderate | Major | Catastrophic |
|---|---|---|---|---|---|
| Rare | Acceptable | Acceptable | Acceptable | Acceptable | Must be evaluated |
| Unlikely | Acceptable | Acceptable | Acceptable | Must be evaluated | Must be evaluated |
| Possible | Acceptable | Acceptable | Must be evaluated | Must be evaluated | Must be evaluated |
| Likely | Acceptable | Must be evaluated | Must be evaluated | Must be evaluated | Must be evaluated |
| Certain | Must be evaluated | Must be evaluated | Must be evaluated | Must be evaluated | Must be evaluated |

BT Technology Journal • Vol 25 No 1

Must decide which risks have to be mitigated, and which risks can be ignored.

CORAS – Step 4
• Risk identification by structured brainstorming (only experts).
• A thorough walkthrough of the system that is to be analysed.
• People have different backgrounds and competences. (Does not necessarily have to be only IT-people.)
• The group will find more threats than a single person would find.
• Documented using the CORAS security risk modelling language.

[Figure: Fig 7, symbols from the CORAS risk modelling language: logical or physical region, threat (accidental), threat (deliberate), threat (non-human), threat scenario, treatment scenario, unwanted incident, asset, stakeholder, vulnerability, and, or, risk.]

CORAS – Step 4
• All documents created during steps 1, 2 and 3 are used as input to the brainstorming session.
• One of the experts has prepared threat scenario diagrams.
• These initial documents are based on the threats that were pointed out by the customers in step 2.
• These documents are updated and expanded during the session.

It is essential that information gathered during this session is documented in a simple and comprehensive way. The analysis leader uses the target models from Step 2 (Figs 2, 3, 4 and 5) as input to the brainstorming session.
The system also allows for irregular handling of health records where an employee may accidentally cause a leakage of records. A confidentiality or integrity breach may harm the health record in the sense that it is no longer secret nor correct. In the utmost consequence a faulty health record may affect the patient’s health.

CORAS – Step 4
[Figure: Fig 8, initial threat diagram: accidental actions. Slide caption: initial threat diagram for human mistakes. Columns: vulnerability, threat scenario, incident, direct asset, indirect asset. Threat: employee. Vulnerabilities: insufficient training, prose-based health records, possibility of irregular handling of health records. Incidents: compromises confidentiality of health records, compromises integrity of health records. Assets: health records (direct), patient’s health (indirect).]
BT Technology Journal • Vol 25 No 1 • January 2007

CORAS – Step 4
[Figure: Fig 9, initial threat diagram: deliberate actions. Slide caption: initial threat diagram for human attacks. Threats: hacker, eavesdropper. Vulnerabilities: insufficient security, insufficient protection of connection. Scenarios and incidents: breaks into system, steals health records, eavesdropping on dedicated connection, compromises confidentiality of data transmitted.]

CORAS – Step 4
[Figure: Fig 10, initial threat diagram: non-human threats. Slide caption: initial threat diagram for “non-human” threats. Threats: system failure, network error. Vulnerabilities: immature technology, unstable connection. Scenarios and incidents: system goes down during examination, examination disrupted, transmission problems, compromises integrity of medical data.]

In the threat diagram describing deliberate harmful actions caused by humans, the participants have identified two main threats: hacker and eavesdropper (Fig 9). A hacker may exploit insufficient security mechanisms to break into the system and steal health records. The diagram of threats caused by employees’ accidental actions (Fig 8) receives much attention among the participants and develops into Fig 11. Due to space limitations, the other two threat diagrams are not explored further.

CORAS – Step 4
[Figure: Fig 11, final threat diagram: accidental actions. Slide caption: updated and expanded threat diagram for human mistakes after the session. Threats: GP, IT personnel. Vulnerabilities: possibility of irregular handling of health records, insufficient training, prose-based health records, no input validation, insufficient access control, lack of competence. Scenarios and incidents: health records sent to unauthorised people, health record copies stored on local computer, wrong input in health record, misconfiguration of system, slow system, compromises confidentiality of health records, compromises integrity of health records, patient is given wrong diagnosis, unable to set diagnosis due to slow system.]

CORAS – Step 5
• During another session (a workshop) the consequence and probability of every threat are estimated.
• Using the predefined scales from step 3:
  • Every participant of the workshop gives their probability and consequence estimate for every threat.
  • A consensus for the estimates is found.
• The estimated values are used together with the risk evaluation matrix to decide if the risk is worth analysing further (and finding mitigations) or if the risk should be accepted.
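The lookup in the last bullet can be sketched as a small table. The cell values below are transcribed from the risk evaluation matrix of Step 3 (Table 5); the names `RISK_MATRIX` and `evaluate_risk` are hypothetical, and this is only a minimal sketch of the decision, not part of the CORAS method itself.

```python
ACCEPTABLE = "acceptable"
EVALUATE = "must be evaluated"

# Column order of every row in RISK_MATRIX.
CONSEQUENCES = ["insignificant", "minor", "moderate", "major", "catastrophic"]

# One row per likelihood level, transcribed from Table 5.
RISK_MATRIX = {
    "rare":     [ACCEPTABLE, ACCEPTABLE, ACCEPTABLE, ACCEPTABLE, EVALUATE],
    "unlikely": [ACCEPTABLE, ACCEPTABLE, ACCEPTABLE, EVALUATE, EVALUATE],
    "possible": [ACCEPTABLE, ACCEPTABLE, EVALUATE, EVALUATE, EVALUATE],
    "likely":   [ACCEPTABLE, EVALUATE, EVALUATE, EVALUATE, EVALUATE],
    "certain":  [EVALUATE, EVALUATE, EVALUATE, EVALUATE, EVALUATE],
}


def evaluate_risk(likelihood: str, consequence: str) -> str:
    """Return the matrix cell for an agreed likelihood/consequence pair."""
    column = CONSEQUENCES.index(consequence)  # ValueError on unknown level
    return RISK_MATRIX[likelihood][column]    # KeyError on unknown level


if __name__ == "__main__":
    # CC1 in the example is estimated as moderate / rare.
    print("CC1:", evaluate_risk("rare", "moderate"))
```

The matrix is stored explicitly rather than computed, because the customer decides the cell values and they need not follow any formula.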
In this workshop it is especially important to include people with the competence needed to estimate realistic likelihoods and consequences, meaning that technical expertise, users and decision makers must be included.

‘Health records sent to unauthorised people’ and ‘Health record copies stored on local computer’ can both lead to ‘Compromises confidentiality of health records’. Table 6 shows how the combined likelihood is estimated. The technique is informal. For a more precise calculation, fault tree analysis (FTA) [6] may be used. The combined estimates should be validated. In the example, the participants reject the suggested estimate for the confidentiality of health records, arguing that the likelihood is less than ‘unlikely’.

Be careful!

CORAS – Step 5
[Figure: Fig 12, threat diagram with likelihood and consequence estimates. Likelihoods: health records sent to unauthorised people [rare], health record copies stored on local computer [unlikely], compromises confidentiality of health records [rare], wrong input in health record [possible], compromises integrity of health records [possible], misconfiguration of system [possible], slow system [possible], patient is given wrong diagnosis [unlikely], unable to set diagnosis due to slow system [likely]. Consequence labels on the arrows to the assets: moderate, major, catastrophic.]

From the threat diagram, the analysis secretary extracts five risks. ‘Compromising the confidentiality of health records’ (CC1) may affect health records. ‘Compromising the integrity of health records’ may also harm health records (CI1), in addition to patient’s health if it contributes to a faulty diagnosis (PR1). Finally, ‘slow system’ may slow down an examination (SS2) and harm the patient’s health (SS1). Only CC1 is within acceptable risk levels, the rest need further evaluation. Table 7 shows the risks placed in the risk evaluation matrix.

CORAS – Step 6
• Risk evaluation
• Extract risks from the unwanted incidents (compromises confidentiality of health records: CC1 = moderate / rare).
• Place the risks in the risk evaluation matrix (defined earlier):
  [Table 7: Risk evaluation matrix with risks. CC1 is placed at moderate / rare, which is outside the area that was previously defined as important. PR1, CI1, SS2 and SS1 fall in the ‘must be evaluated’ area.]
• The customer must accept the matrix, and they may ask the experts to reconsider certain risk evaluations.
• A final diagram of the threats and the evaluated risks is presented.

The analysis leader gives the participants an opportunity to adjust likelihood and consequence estimates, and risk acceptance levels, to make sure that the results reflect reality as much as possible.

CORAS – Step 6
[Figure: Fig 13, risk overview. CC1 compromises confidentiality of health records [acceptable] (will not be mitigated); CI1 compromises integrity of health records [unacceptable]; PR1 patient is given wrong diagnosis [unacceptable]; SS2 unable to set diagnosis due to slow system [unacceptable]; SS1 slow system [unacceptable]. Threats: GP, IT personnel. Assets: health records, patient’s health, provision of telecardiology service.]

CORAS – Step 7
• All risks that fall into the grey area should be mitigated.
• In a new workshop, mechanisms are agreed upon that lower either the probability or the consequence (or both) of a risk until it is acceptable.
• Some mechanisms can be more expensive than others, and therefore “cost-benefit” is partially weighed in.
• In the end, a plan that includes the mitigations is presented to the customers.

CORAS – Step 7
[Table 7: Risk evaluation matrix with risks, as on the Step 6 slide.]
We need to mitigate this. How?
There are two options: reduce the consequence (move the risk to the left) or reduce the probability (move it upwards).

The participants request an overview of the risks. They want to know who, or what, is initiating them and which assets they harm. The analysis secretary models the risks with their associated risk values in a risk diagram according to the guidelines (see summary).
CORAS – Step 7
[Figure: threat diagram for risks CI1, PR1, SS1 and SS2 with the treatments ‘extend training programme (1 - 2 days)’ and ‘revise access lists’ added.]

CORAS - Summary
• At first glance the method may feel a bit overwhelming, however once you have read and used it a couple of times it is quite straightforward.
• It is definitely a time- and resource-consuming method, and not all projects will benefit enough from CORAS to justify this cost.
• The strength lies in the constant connection with the customer and the use of brainstorming and workshops (where many voices and opinions can be heard).

Information Security Risk Analysis Method
ISRAM

ISRAM - Introduction
• Focuses on one threat and tries to estimate the risk for this specific threat:
  • The risk that a computer on a network gets infected by a virus.
• Uses a specially crafted survey that is sent to users and experts.
• The answers to the survey estimate the risk of the threat (using probability and consequence).

ISRAM – Step 1 and 2
• Step 1 – Identify the threat of interest: virus infection.
• Step 2 – Identify the factors that influence the probability and the consequence of the threat, and weigh these factors.
ISRAM – Step 2

| Probability factors | Weight | Consequence factors | Weight |
|---|---|---|---|
| The type of attachments in emails | 3 | Backup of files | 3 |
| Number of emails received per day | 1 | Physical location of files | 2 |
| Number of downloaded files per day | 1 | Dependency on applications | 1 |
| The source of USB-drives | 2 | | |

• The number of factors for probability does not have to be the same as for consequence.
• More weights can be used, but it is hard to discern the difference between 3 and 4 on a 10 grade scale.
• The meaning of the weights is not strictly defined.

| Weight | Explanation |
|---|---|
| 3 | The factor has a direct effect |
| 2 | The factor has some effect |
| 1 | The factor has an indirect effect |

ISRAM – Step 3
• Step 3 – Convert factors to questions, create response options and give each option a score.

| Question | A | B | C | D |
|---|---|---|---|---|
| How many emails do you receive per day? | 0-10 (1) | 11-30 (2) | 31-40 (3) | 41+ (4) |
| Where do you get USB-drives from? | From the company (0) | Bring them from home (4) | | |
| How often do you backup your files? | Every day (1) | Every week (2) | Never (4) | |

• The scores for the options are in parentheses (they are removed when the survey is sent).
• The questions regarding probability and consequence are in the same survey.
• The possible scores for options are 0 through 4.

ISRAM – Step 4
• Calculate the minimum and the maximum number of points that the questions regarding probability can give.
• Calculate the minimum and the maximum number of points that the questions regarding consequence can give.
• Create intervals (bins) such that scores can be translated to a scale of 1 to 5.
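The three bullets above can be sketched in a few lines. This is a minimal sketch under stated assumptions: the helper names `point_range` and `to_scale` are hypothetical, the two questions are the email and USB questions from Step 3 with their weights from Step 2, and equal-width bins are an assumption (the probability intervals on the next slide, 29 to 128 points, happen to be equal-width, but the consequence intervals are not).

```python
def point_range(questions):
    """Return (min, max) weighted points for a list of (weight, option_scores)."""
    lowest = sum(weight * min(scores) for weight, scores in questions)
    highest = sum(weight * max(scores) for weight, scores in questions)
    return lowest, highest


def to_scale(points, lowest, highest, levels=5):
    """Translate a point total to 1..levels using equal-width bins (assumption)."""
    if not lowest <= points <= highest:
        raise ValueError("points outside the possible range")
    width = highest - lowest + 1          # number of distinct point totals
    return (points - lowest) * levels // width + 1


if __name__ == "__main__":
    # (weight, option scores) for two of the probability questions:
    # emails per day (weight 1) and source of USB-drives (weight 2).
    probability_questions = [(1, [1, 2, 3, 4]), (2, [0, 4])]
    print(point_range(probability_questions))
    # Using the 29..128 range of the probability table on the next slide:
    for total in (29, 48, 49, 94, 128):
        print(total, "->", to_scale(total, 29, 128))
```

When the bins are not meant to be equal-width, an explicit list of interval bounds is the safer representation.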
| Points | Qualitative scale (probability) | Quantitative scale |
|---|---|---|
| 29-48 | Very low probability | 1 |
| 49-68 | Low probability | 2 |
| 69-88 | Medium probability | 3 |
| 89-108 | High probability | 4 |
| 109-128 | Very high probability | 5 |

| Points | Qualitative scale (consequence) | Quantitative scale |
|---|---|---|
| 47-68 | Negligible consequence | 1 |
| 69-90 | Small consequence | 2 |
| 91-111 | Increased consequence | 3 |
| 112-133 | Serious consequence | 4 |
| 134-160 | Very serious consequence | 5 |

ISRAM – Step 4
• Create the final risk quantification table.

Risk = Probability x Consequence

| Probability \ Consequence | 1: Negligible | 2: Small | 3: Increased | 4: Serious | 5: Very serious |
|---|---|---|---|---|---|
| 1: Very low | 1: Very low | 2: Very low | 3: Very low | 4: Low | 5: Low |
| 2: Low | 2: Very low | 4: Low | 6: Low | 8: Medium | 10: Medium |
| 3: Medium | 3: Very low | 6: Low | 9: Medium | 12: Medium | 15: High |
| 4: High | 4: Low | 8: Medium | 12: Medium | 16: High | 20: Very high |
| 5: Very high | 5: Low | 10: Medium | 15: High | 20: Very high | 25: Very high |

ISRAM – Step 5 and 6
• Step 5 – Complete the survey. It can be sent to users of the computers that are the subject of the analysis, and/or to other experts.
• Step 6 – Use an equation to calculate a value that represents risk (based on the factors for probability and consequence). Look up the result of the equation in the risk evaluation table to get the final risk estimation.

ISRAM – Step 6

$$
\text{Risk} = \left( \frac{\sum_{n=1}^{N} T_s\left( \sum_{i=1}^{I} \alpha_i s_{i,n} \right)}{N} \right) \left( \frac{\sum_{n=1}^{N} T_k\left( \sum_{j=1}^{J} \beta_j k_{j,n} \right)}{N} \right)
$$

• $N$ = number of respondents
• $I$ = number of questions regarding probability
• $J$ = number of questions regarding consequence
• $\alpha_i$ = the weight given to probability question $i$
• $s_{i,n}$ = score for the option that respondent $n$ chose for probability question $i$
• $\beta_j$ = the weight given to consequence question $j$
• $k_{j,n}$ = score for the option that respondent $n$ chose for consequence question $j$
• $T_s$ = a function that translates an integer to the probability scale 1 through 5
• $T_k$ = a function that translates an integer to the consequence scale 1 through 5
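Read as the product of the mean translated probability score and the mean translated consequence score, the Step 6 equation can be sketched in Python as follows. This is a minimal sketch, not a reference implementation: the function names, the bin boundaries and the two respondents' answers are hypothetical and chosen only to keep the arithmetic easy to follow. The weights reuse the Step 2 tables.

```python
def translate(points, bins):
    """T_s / T_k: map a weighted point total to a level on the 1-5 scale."""
    for low, high, level in bins:
        if low <= points <= high:
            return level
    raise ValueError(f"{points} points is outside the binned range")


def weighted_sum(weights, scores):
    """Inner sum of the equation: sum of weight * chosen option score."""
    if len(weights) != len(scores):
        raise ValueError("one score is needed per weighted question")
    return sum(w * s for w, s in zip(weights, scores))


def isram_risk(prob_scores, cons_scores, alpha, beta, prob_bins, cons_bins):
    """Mean translated probability times mean translated consequence.

    prob_scores[n] and cons_scores[n] hold respondent n's option scores.
    """
    n = len(prob_scores)
    if n == 0 or n != len(cons_scores):
        raise ValueError("need the same, non-zero number of respondents")
    mean_prob = sum(translate(weighted_sum(alpha, s), prob_bins) for s in prob_scores) / n
    mean_cons = sum(translate(weighted_sum(beta, k), cons_bins) for k in cons_scores) / n
    return mean_prob * mean_cons


# Weights from the Step 2 tables.
alpha = [3, 1, 1, 2]   # probability questions
beta = [3, 2, 1]       # consequence questions

# Hypothetical bins as (low, high, level); a real survey derives these in Step 4.
prob_bins = [(0, 5, 1), (6, 11, 2), (12, 17, 3), (18, 23, 4), (24, 28, 5)]
cons_bins = [(0, 4, 1), (5, 9, 2), (10, 14, 3), (15, 19, 4), (20, 24, 5)]

# Hypothetical option scores for two respondents.
prob_scores = [[4, 2, 1, 4], [2, 3, 2, 0]]   # weighted sums 23 and 11
cons_scores = [[4, 1, 2], [2, 2, 1]]         # weighted sums 16 and 11

print(isram_risk(prob_scores, cons_scores, alpha, beta, prob_bins, cons_bins))
```

Note that the translation to the 1-5 scale happens per respondent, before averaging, so the means (and therefore the risk value) need not be integers.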
Example with two respondents:

| Respondent | Sum of probability questions | T_s | Sum of consequence questions | T_k |
|---|---|---|---|---|
| 1 | 94 | 4 | 103 | 3 |
| 2 | 74 | 3 | 136 | 5 |
| Mean | | 3.5 | | 4 |

Risk = 3.5 * 4 = 14, which is between medium and high risk, but closer to high risk.

ISRAM – Step 7
• Step 7 – Evaluation of results
  • The final risk estimation is the important outcome of the method.
  • The estimation can be used to decide if new policies should be made or new mechanisms should be introduced.
  • However, at the same time a lot of information has been gathered about the use of the systems analysed.
  • For instance, it may be possible to get an idea of how often users update their software, if they are using administrative accounts properly, etc.
  • This extra information is valuable when deciding between mitigations.

ISRAM - Summary
• ISRAM is useful when you want to estimate the risk for one specific threat.
• It only requires one person to administer the analysis (if there already are respondents), although it can be advantageous for more people to help with the choice of factors and weights.
• The outcome of the analysis is very dependent on the factors identified and the weights chosen. You cannot get answers to questions you did not ask.

ATTACK TREES

Attack trees
• Represent attacks against the system in a tree structure, with the goal as the root node and the different ways of achieving that goal as leaf nodes.
Attack Trees
[Figure: attack tree for the goal "Open Safe". Nodes: Pick lock, Learn combo, Cut open, Install improperly, Find written combo, Get combo from target, Threaten, Blackmail, Eavesdrop, Bribe, Listen to conversation, Get target to state combo.]
[Figure: the same tree with an "and" marker joining Listen to conversation and Get target to state combo.]
[Figure: the same tree with every node marked P or I (presumably possible / impossible).]
[Figure: the same tree with a cost on every node: Open Safe $10, Pick lock $30, Learn combo $20, Threaten $60, Cut open $10, Blackmail $100, Eavesdrop $60, Bribe $20, Listen to conversation $20, Get target to state combo $40. The values $20, $75 and $100 belong to the remaining nodes (Get combo from target, Find written combo, Install improperly); the extracted text does not show which is which.]

Attack Trees
• We can annotate the attack tree with many different kinds of Boolean and continuous values:
  • "Legal" versus "Illegal"
  • "Requires special equipment" versus "No special equipment"
  • Probability of success, likelihood of attack, etc.
• Once we have annotated the tree we can query it:
  • Which attacks cost less than $10?
  • Legal attacks that cost more than $50?
  • Would it be worth paying a person $80 so they are less susceptible to bribes? (In reality you also need to consider the probability of success.)

Attack Trees
• First you identify possible attack goals.
• Each goal forms a separate tree.
• Add all attacks you can think of to the tree.
• Expand the attacks as if they were goals, downwards in the tree.
• Let somebody else look at your tree, get comments from experts, iterate and re-iterate.
• Keep your trees updated and use them to make security decisions throughout the software life cycle.
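Annotating a tree and then querying it can be sketched in Python as below. This is an illustrative sketch under several assumptions: the `Node` class and helper names are hypothetical, the tree structure and leaf costs follow the "Open Safe" example as far as it can be read from the slides (the costs for Find written combo and Install improperly are assumed to be $75 and $100), an OR node is satisfied by any one child, and an AND node needs all of its children, so its cost is the sum of their costs.

```python
from dataclasses import dataclass, field
from itertools import product


@dataclass
class Node:
    name: str
    kind: str = "leaf"            # "leaf", "or" or "and"
    cost: int = 0                 # only meaningful for leaves
    children: list = field(default_factory=list)


def attacks(node):
    """Return every concrete attack as (list of leaf names, total cost)."""
    if node.kind == "leaf":
        return [([node.name], node.cost)]
    per_child = [attacks(child) for child in node.children]
    if node.kind == "or":
        # Any single child's attack achieves the goal.
        return [attack for child_attacks in per_child for attack in child_attacks]
    # "and": pick one attack per child and combine them.
    combined = []
    for combo in product(*per_child):
        names = [name for leaves, _ in combo for name in leaves]
        combined.append((names, sum(cost for _, cost in combo)))
    return combined


def cheapest(node):
    """Return the attack with the lowest total cost."""
    return min(attacks(node), key=lambda attack: attack[1])


def leaf(name, cost):
    return Node(name, cost=cost)


eavesdrop = Node("Eavesdrop", "and", children=[
    leaf("Listen to conversation", 20),
    leaf("Get target to state combo", 40),
])
get_combo = Node("Get combo from target", "or", children=[
    leaf("Threaten", 60), leaf("Blackmail", 100), eavesdrop, leaf("Bribe", 20),
])
learn_combo = Node("Learn combo", "or", children=[
    leaf("Find written combo", 75),   # assumed cost
    get_combo,
])
open_safe = Node("Open Safe", "or", children=[
    leaf("Pick lock", 30), learn_combo, leaf("Cut open", 10),
    leaf("Install improperly", 100),  # assumed cost
])

# Query: which attacks cost less than $50?
for names, cost in attacks(open_safe):
    if cost < 50:
        print(f"${cost}: {' + '.join(names)}")

print("Cheapest:", cheapest(open_safe))
```

Other annotations (legal or illegal, special equipment, probability of success) could be added as further fields on `Node` and combined in the same way; how a value propagates through AND and OR nodes depends on the annotation.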
Risk analysis - Summary
Risk analysis is a cornerstone:
• Development of new software may require a risk analysis prior to defining requirements and once the software has been developed.
• Expansion of a company to a new office may require a risk analysis of physical security and new business continuity planning.
• Changing the topology of a network system may require a risk analysis of how to break down the system into different security levels.
• …

Risk analysis - Summary
• Many methods exist; you need to choose one that fits the current situation and the available resources.
• CORAS, ISRAM and Attack Trees all have their advantages and disadvantages.
• Risk analysis is hard, really hard, and a successful analysis is dependent on the experts.
• Limiting the analysis, getting help from others and being organised are important common factors for any successful risk analysis.

www.liu.se