Abstracting and Counting Synchronizing Processes Zeinab Ganjei, Ahmed Rezine


Abstracting and Counting Synchronizing Processes

Zeinab Ganjei, Ahmed Rezine*, Petru Eles, and Zebo Peng

Linköping University, Sweden

Abstract. We address the problem of automatically establishing synchronization dependent correctness (e.g. due to using barriers or ensuring absence of deadlocks) of programs generating an arbitrary number of

concurrent processes and manipulating variables ranging over an infinite

domain. This is beyond the capabilities of current automatic verification

techniques. For this purpose, we define an original logic that mixes variables referring to the number of processes satisfying certain properties

and variables directly manipulated by the concurrent processes. We then

combine existing works on counter, predicate, and constrained monotonic

abstraction and build an original nested counter example based refinement scheme for establishing correctness (expressed as non reachability

of configurations satisfying formulas in our logic). We have implemented

a tool (Pacman, for predicated constrained monotonic abstraction) and

used it to perform parameterized verification for several programs whose

correctness crucially depends on precisely capturing the number of processes synchronizing using shared variables.

Key words: parameterized verification, counting logic, barrier synchronization, deadlock freedom, multithreaded programs, counter abstraction, predicate abstraction, constrained monotonic abstraction

1 Introduction

We address the problem of automatic and parameterized verification for concurrent multithreaded programs. We focus on synchronization related correctness

as in the usage of barriers or integer shared variables for counting the number of

processes at different stages of the computation. Such synchronizations orchestrate the different phases of the executions of possibly arbitrarily many processes

spawned during runs of multithreaded programs. Correctness is stated in terms

of a new counting logic that we introduce. The counting logic makes it possible to express statements about program variables and variables counting the

number of processes satisfying some properties on the program variables. Such

statements can capture both individual properties, such as assertion violations,

and global properties such as deadlocks or relations between the numbers of

processes satisfying certain properties.

* In part supported by the 12.04 CENIIT project.


Synchronization among concurrent processes is central to the correctness of

many shared memory based concurrent programs. This is particularly true in

certain applications such as scientific computing where a number of processes,

parameterized by the size of the problem or the number of cores, is spawned

in order to perform heavy computations in phases. For this reason, when not

implemented individually using shared variables, constructs such as (dynamic)

barriers are made available in mainstream libraries and programming languages

such as Pthreads, java.util.concurrent or OpenMP.

Automatically taking into account the different phases through which arbitrarily many processes can pass is beyond the capabilities of current automatic verification techniques. Indeed, and as an example, handling programs with barriers

of arbitrary sizes is a non trivial task even in the case where all processes only

manipulate boolean variables. To enforce the correct behaviour of a barrier, a

verification procedure needs to capture relations between the number of processes satisfying certain properties, for instance that there are no processes that

are not waiting for the barrier before any process can cross it. This amounts to

testing that the number of processes at certain locations is zero. Checking violations of program assertions is then tantamount to checking state reachability of

a counter machine where counters track the number of processes satisfying predicates such as being at some program location. No sound verification techniques

can therefore be complete for such systems.

Our approach to get around this problem builds on the following observation.

In case there are no tests for the number of processes satisfying certain properties

(e.g. being in specific programs locations for barriers), symmetric boolean concurrent programs can be exactly encoded as counter machines without tests, i.e.,

essentially vector addition systems (VASS). For such systems, state reachability

can be decided using a backwards exploration that only manipulates sets that

are upward closed with respect to the component wise ordering [2, 7]. The approach is exact because of monotonicity of the induced transition system (more

processes can fire more transitions since there are no tests on the numbers of

processes). Termination is guaranteed by well quasi ordering of the component

wise ordering on the natural numbers. The induced transition system is no longer monotonic in the presence of tests on the number of processes. The idea in monotonic abstraction [10] is to modify the semantics of the entailed tests (e.g., tests

for zero for barriers), such that processes not satisfying the tests are removed

(e.g., tests for zero are replaced by resets). This results in a monotonic overapproximation of the original transition system and spurious traces are possible.

This is also true for verification approaches that generate (broadcast) concurrent

boolean programs as abstractions of concurrent programs manipulating integer

variables. Such boolean approximations are monotonic even when the original

program (before abstraction) can encode tests on the number of processes and

is therefore not monotonic. Indeed, having more processes while respecting important relations between their numbers and certain variables in the original program does not necessarily allow more transitions to fire (whereas the abstracted programs produced by such approaches do allow it).
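The backward exploration mentioned above for counter machines without tests can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure implemented in Pacman: control states are omitted (a plain vector addition system), and the names `coverable`, `deltas` and `leq` are ours. The set of predecessors is represented by the minimal elements of an upward closed set, and termination relies on the well quasi ordering of the component wise ordering.

```python
from collections import deque

def leq(u, v):
    """Component wise ordering on counter valuations."""
    return all(a <= b for a, b in zip(u, v))

def coverable(init, target, deltas):
    """Return True if some configuration >= target is reachable from init.

    deltas is a list of integer vectors. A transition d can fire from c
    whenever c + d stays non-negative (there are no tests on counters).
    """
    basis = [tuple(target)]      # minimal elements of an upward closed set
    work = deque(basis)
    while work:
        u = work.popleft()
        for d in deltas:
            # Least configuration that can fire d and land above u.
            p = tuple(max(ui - di, 0) for ui, di in zip(u, d))
            if any(leq(b, p) for b in basis):
                continue         # p is already represented by the basis
            basis = [b for b in basis if not leq(p, b)] + [p]
            work.append(p)
    return any(leq(b, tuple(init)) for b in basis)
```

With a single transition moving one process from a first location to a second one, two processes at the second location can be covered from two initial processes but not from one.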


Our approach consists of two nested counterexample-guided abstraction refinement loops. We summarize our contributions in the following points.

1. We propose an original counting logic that makes it possible to express statements about program variables and about the number of processes satisfying certain predicates on the program variables.

2. We implement the outer loop by leveraging existing symmetric predicate abstraction techniques. We encode the resulting boolean programs in terms of a monotonic counter machine where reachability of configurations satisfying a counting property from our logic is captured as a state reachability problem.

3. We explain how to strengthen the counter machine using counting invariants, i.e., properties from our logic that hold on all runs. These can be automatically generated using classical thread modular analysis techniques.

4. We leverage existing constrained monotonic abstraction techniques to implement the inner loop and to address the state reachability problem.

5. We have implemented both loops, together with automatic counting invariant generation, in a prototype (Pacman) that allowed us to automatically establish or refute counting properties such as deadlock freedom and assertions. All programs we report on may generate arbitrarily many processes.

Related work. Several works consider parameterized verification for concurrent

programs. In [9] the authors use counter abstraction and truncate the counters to

obtain a finite state system. Environment abstraction [4] combines predicate and

counter abstraction. Both [9, 4] can require considerable interaction and human

ingenuity to find the right predicates. The works in [8, 1] explore finite instances

and automatically check for cutoff conditions. Except for checking larger instances, it is unclear how to refine entailed abstractions. The closest works to

ours are [10, 3, 5]. We introduced (constrained) monotonic abstraction in [10, 3].

Monotonic abstraction was not combined with predicate abstraction, nor did it

explicitly target counting properties or dynamic barrier based synchronization.

In [5], the authors propose a predicate abstraction framework for concurrent

multithreaded programs. As explained earlier such abstractions cannot exclude

behaviours forbidden by synchronization mechanisms such as barriers. In our

work, we build on [5] in order to handle shared and local integer variables. To

the best of our knowledge, our work is the first automatic verification approach

that specifically targets parameterized correctness of programs involving constructs that keep track of the number of processes (e.g., using barriers).

Outline. We start by illustrating our approach using an example in Sec. 2 and

introduce some preliminaries in Sec. 3. We then define concurrent programs and

describe our counting logic in Sec. 4. Next, we explain the different phases of

our nested loop in Sec. 5 and report on our experimental results in Sec. 6. We

finally conclude in Sec. 7.


2 A Motivating Example

Consider the concurrent program described in Fig. 1. In this example, a main process spawns (transition t1) an arbitrary number (count) of proc processes (at location proc@lcent). All processes share four integer variables (namely max, prev, wait and count) and a single boolean variable proceed. Initially, the variables wait and count are 0 while proceed is false. The other variables may assume any value belonging to their respective domains. Each proc process possesses a local integer variable val that can only be read or written by its owner. Each proc process assigns to max the value of its local variable val (which may be any integer value) in case the latter is larger than the former. Transitions t6 and t7 essentially implement a barrier in the sense that all proc processes must have reached proc@lc3 in order for any of them to move to location proc@lc4. After the barrier, the max value should be larger than or equal to any previous local val value stored in the shared prev (i.e., prev ≤ max should hold). Violation of this assertion can be captured with the counting predicate (introduced in Sec. 4) (proc@lc4 ∧ ¬(prev ≤ max))# ≥ 1, stating that the number of processes at location proc@lc4 and witnessing that prev > max is larger than or equal to 1.

    int max, prev, wait, count := ∗, ∗, 0, 0
    bool proceed := ff

    main :
      t1 : lcent ▸ lcent : count := count + 1; spawn(proc)
      t2 : lcent ▸ lc1 : proceed := tt
      ...

    proc :
      int val := ∗
      t3 : lcent ▸ lc1 : prev := val
      t4 : lc1 ▸ lc2 : max ≥ val
      t5 : lc1 ▸ lc2 : max < val; max := val
      t6 : lc2 ▸ lc3 : wait := wait + 1
      t7 : lc3 ▸ lc4 : proceed ∧ (wait = count)
      t8 : lc4 ▸ ...

    A possible run:

      (3, 7, 0, 0, ff) {(main@lcent)}
        −t1→
      (3, 7, 0, 1, ff) {(main@lcent)(proc@lcent, 9)}
        −t1→
      (3, 7, 0, 2, ff) {(main@lcent)(proc@lcent, 9)^2}
        −t3→
      (3, 9, 0, 2, ff) {(main@lc1)(proc@lcent, 9)(proc@lc1, 9)}
        −t2→
      ...

Fig. 1. The max example (left) and a possible run (right). The assertion (proc@lc4 ∧ ¬(prev ≤ max))# ≥ 1 cannot be violated when starting with a single main process.

A possible run of the concurrent program is depicted to the right of Fig. 1.

Observe that we write (proc@lcent, 9)^2 to mean that there are two proc processes at location lcent s.t. their local val variables are both equal to 9. In other words, we use

counter abstraction since the processes are symmetric, i.e., processes running

the same lines of code with equal local variables are interchangeable and we do

not need to differentiate them. The initial configuration of this run is given in

terms of the values of max, prev, wait, count and proceed, here (3, 7, 0, 0, ff),

and of the main process being at location lcent . The main process starts by

incrementing the count variable and by spawning a proc process twice.


The assertion (proc@lc4 ∧ ¬(prev ≤ max))# ≥ 1 is never violated under

any run starting from a single main process. In order to establish this fact, any

verification procedure needs to take into account the barrier in t7 in addition to

the two sources of infiniteness; namely, the infinite domain of the shared and local variables and the number of procs that may participate in the run. Until now, the closest works to ours deal with these two sources of infiniteness separately

and cannot capture facts that relate them, namely, the values of the program

variables and the number of generated processes. Any sound analysis that does

not take into account that the count variable holds the number of spawned proc

processes and that wait represents the number of proc processes at locations

lc3 or later will not be able to discard scenarios where a proc process executes

prev := val although one of them is at proc@lc4 . Such an analysis will therefore

fail to show that prev ≤ max each time a process is at line proc@lc4 .
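The role of the barrier can be checked on small instances with a brute-force exploration. The sketch below is only an illustration and is not the verification approach of this paper: it assumes a three-value stand-in for the integer domain (`DOMAIN`) and a bound on the number of spawned processes (`MAX_PROCS`), both hypothetical, and the `barrier` flag is ours. It uses the same counter-abstraction idea as the run of Fig. 1 by storing the proc processes as a sorted tuple.

```python
from collections import deque

DOMAIN = (0, 1, 2)   # assumption: small finite stand-in for the integers
MAX_PROCS = 2        # assumption: bound on the number of spawned procs

def successors(state, barrier):
    main, mx, prev, wait, count, proceed, procs = state
    if main == "ent":
        if len(procs) < MAX_PROCS:                  # t1: count++ ; spawn
            for val in DOMAIN:
                spawned = tuple(sorted(procs + (("ent", val),)))
                yield (main, mx, prev, wait, count + 1, proceed, spawned)
        yield ("lc1", mx, prev, wait, count, True, procs)   # t2
    for i, (loc, val) in enumerate(procs):
        rest = procs[:i] + procs[i + 1:]

        def step(new_loc, mx=mx, prev=prev, wait=wait):
            moved = tuple(sorted(rest + ((new_loc, val),)))
            return (main, mx, prev, wait, count, proceed, moved)

        if loc == "ent":
            yield step("lc1", prev=val)             # t3: prev := val
        elif loc == "lc1":
            if mx >= val:
                yield step("lc2")                   # t4
            else:
                yield step("lc2", mx=val)           # t5: max := val
        elif loc == "lc2":
            yield step("lc3", wait=wait + 1)        # t6
        elif loc == "lc3":
            if not barrier or (proceed and wait == count):
                yield step("lc4")                   # t7

def assertion_can_fail(barrier=True):
    """Search for a reachable state with a proc at lc4 and prev > max."""
    init = [("ent", mx, prev, 0, 0, False, ())
            for mx in DOMAIN for prev in DOMAIN]
    seen, work = set(init), deque(init)
    while work:
        state = work.popleft()
        _, mx, prev, _, _, _, procs = state
        if any(loc == "lc4" and prev > mx for loc, _ in procs):
            return True
        for nxt in successors(state, barrier):
            if nxt not in seen:
                seen.add(nxt)
                work.append(nxt)
    return False
```

Under these bounds the assertion holds with the guard of t7 in place and fails once that guard is dropped, which is the behaviour the paragraph above attributes to analyses that ignore the relation between wait, count and the number of processes.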

Our original nested CEGAR loop, called Predicated Constrained Monotonic

Abstraction and depicted in Fig. 2, systematically leverages simple facts that

relate numbers of processes to the variables manipulated in the program. This

allows us to verify or refute safety properties (e.g., assertions, deadlock freedom)

depending on complex behaviors induced by constructs such as dynamic barriers.

We illustrate our approach in the remainder of this section on the max example of Fig. 1.

Fig. 2. Predicated Constrained Monotonic Abstraction

From concurrent programs to boolean concurrent programs. We build on the recent predicate abstraction techniques for concurrent programs. Such techniques

would discard all variables and predicates and only keep the control flow together with the spawn and join statements. This leads to a number of counter

example guided abstraction refinement steps (the outer CEGAR loop in Fig.

2) that require the addition of new predicates. Our implementation adds the


predicates proceed, prev ≤ val, prev ≤ max, wait ≤ count, count ≤ wait. It is

worth noticing that all variables of the obtained concurrent program are finite

state (in fact booleans). Hence, one would need a finite number of counters in

order to faithfully capture the behavior of the abstracted program using counter

abstraction. In addition, some of the transitions of the program, such as t3 where

a shared variable is updated, are abstracted using a broadcast transition.

From concurrent boolean programs to counter machines. Given a concurrent

boolean program, we generate a counter machine that essentially boils down to

a vector addition system with transfers. Each counter in the machine counts the

number of processes at some location and with some local variables combination.

One state in the counter machine represents reaching a configuration satisfying

the counting property we want to verify. The other states correspond to the

possible combinations of the global variables. The transfers represent broadcast

transitions. Such a machine cannot relate the number of processes in certain

locations (for instance the number of spawned processes proc so far) to the

predicates that are valid at certain states (for instance that count = wait). In

order to remedy this, we make use of counting invariants that relate

program variables, count and wait in the following invariants, to the number of

processes at certain locations.

\[
count = (proc@lc_{ent})^{\#} + \sum_{i \geq 1} (proc@lc_i)^{\#}
\qquad\qquad
wait = \sum_{i \geq 3} (proc@lc_i)^{\#}
\]

We automatically generate such invariants using a simple thread modular analysis that tracks the number of processes at each location. Given such counting

invariants, we constrain the counter machine and generate a more precise machine for which state reachability may now be undecidable.
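To make the two invariants concrete, the following sketch evaluates them on a single configuration. The encoding is an assumption made for illustration: proc locations are integers, with 0 standing for lcent and i for lci, and the function name is ours.

```python
def counting_invariants_hold(count, wait, proc_locations):
    """Check count = (proc@lcent)# + sum_{i>=1} (proc@lci)# and
    wait = sum_{i>=3} (proc@lci)# on one configuration.

    proc_locations lists the location index of every proc process,
    with 0 standing for lcent (a hypothetical encoding).
    """
    at_entry = sum(1 for loc in proc_locations if loc == 0)
    past_entry = sum(1 for loc in proc_locations if loc >= 1)
    at_or_past_barrier = sum(1 for loc in proc_locations if loc >= 3)
    return count == at_entry + past_entry and wait == at_or_past_barrier
```

A counter machine state claiming count = wait = 2 while one of the two processes is still at lc1 contradicts the second invariant, so the constrained machine can discard it.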

Constrained monotonic abstraction. We monotonically abstract the resulting

counter machine in order to answer the state reachability problem. Spurious

traces are now possible. For instance, we remove processes violating the constraint imposed by the barrier in Fig.1. This example illustrates a situation

where such approximations yield false positives. To see this, suppose two processes are spawned and proceed is set to tt. A first process gets to lc3 and waits.

The second process moves to lc1 . Removing the second process opens the barrier for the first process. However, the assertion can now be violated because

the removed process did not have time to update the variable max. Constrained

monotonic abstraction eliminates spurious traces by refining the preorder used

in monotonic abstraction. For the example of Fig.1, if the number of processes

at lc1 is zero, then closing upwards will not alter this fact. By doing so, the process that was removed in forward at lc1 is not allowed to be there to start with,

and the assertion is automatically established for any number of processes. The

inner loop of our approach can automatically add more elaborate refinements

such as comparing the number of processes at different locations. Exact traces of

the counter machine are sent to the next step and unreachability of the control

location establishes safety of the concurrent program.
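The difference between the exact barrier test and its monotonic abstraction can be sketched on location counters. This is an illustration under assumptions: the guard of t7 is read, via the counting invariants, as a zero test on the locations before lc3, and the names `cross_exact`, `cross_monotonic` and `BEFORE_BARRIER` are ours.

```python
BEFORE_BARRIER = ("ent", "lc1", "lc2")   # locations reached before waiting

def cross_exact(counters):
    """Exact semantics: one process crosses only if nobody is behind."""
    if counters["lc3"] >= 1 and all(counters[l] == 0 for l in BEFORE_BARRIER):
        return {**counters, "lc3": counters["lc3"] - 1,
                "lc4": counters["lc4"] + 1}
    return None                           # transition is disabled

def cross_monotonic(counters):
    """Monotonic abstraction: the zero test is replaced by a reset, i.e.
    processes violating the test are removed from the configuration."""
    if counters["lc3"] < 1:
        return None
    out = {**counters, "lc3": counters["lc3"] - 1, "lc4": counters["lc4"] + 1}
    for loc in BEFORE_BARRIER:
        out[loc] = 0
    return out
```

With one process at lc1 and one at lc3, the exact transition is disabled while the abstract one removes the process at lc1 and lets the other cross, which is the spurious step described in the paragraph above.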


Trace Simulation. Traces obtained in the counter machine are real traces as far

as the concurrent boolean program is concerned. Those traces can be simulated

on the original program to find new predicates (e.g., using Craig interpolation)

and use them in the next iteration of the outer loop.

3 Preliminaries

We write N to mean the set of natural numbers and Z to mean the one of integer

numbers. We write k to mean a constant in Z and b to mean a boolean value in

{tt, ff}. We use v, s, l, c, a to mean integer variables and ṽ, s̃, l̃ to mean boolean

variables. We let V, S, L, C and A (resp. Ṽ , S̃ and L̃) denote sets of integer variables (resp. sets of boolean variables). We let ∼ be an element in {<, ≤, =, ≥, >}.

An expression e (resp. predicate π) belonging to the set exprsOf(V ) (resp.

predsOf(Ṽ , E)) of arithmetic expressions (resp. boolean predicates) over integer

variables V (resp. boolean variables Ṽ and arithmetic expressions E) is defined

as follows.

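\[
\begin{array}{rcll}
e & ::= & k \mid v \mid (e + e) \mid (e - e) \mid k\,e & \quad v \in V \\
\pi & ::= & b \mid \tilde{v} \mid (e \sim e) \mid \neg\pi \mid \pi \wedge \pi \mid \pi \vee \pi & \quad \tilde{v} \in \tilde{V},\ e \in E
\end{array}
\]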

We write vars(e) to mean all variables v appearing in e, and vars(π) to mean

all variables ṽ and v appearing in π or in e in π. We also write atoms(π) (the

set of atomic predicates) to mean all boolean variables ṽ and all comparisons

(e ∼ e) appearing in π. We use the letters σ, η, θ, ν (resp. σ̃, η̃, ν̃) to mean mappings from sets of variables to Z (resp. B). Given n mappings ν_i : V_i → Z such that V_i ∩ V_j = ∅ for each i, j : 1 ≤ i ≠ j ≤ n, and an expression e ∈ exprsOf(V), we write val_{ν_1,...,ν_n}(e) to mean the expression obtained by replacing each occurrence of a variable v appearing in some V_i by the corresponding ν_i(v). In a similar manner, we write val_{ν,ν̃,...}(π) to mean the predicate obtained by replacing the occurrences of integer and boolean variables as stated by the mappings ν, ν̃, etc. Given a mapping ν : V → Z and a set subst = {v_i ← k_i | 1 ≤ i ≤ n} where variables v_1, ..., v_n are pairwise different, we write ν[subst] to mean the mapping ν′ such that ν′(v_i) = k_i for each 1 ≤ i ≤ n and ν′(v) = ν(v) otherwise. We abuse notation and write ν[{v_i ← v_i′ | 1 ≤ i ≤ n}], for ν : V → Z where variables v_1, ..., v_n are in V and pairwise different, to mean the mapping ν′ : (V \ {v_i | 1 ≤ i ≤ n}) ∪ {v_i′ | 1 ≤ i ≤ n} → Z such that ν′(v′) = ν(v) for each v ∈ {v_i | 1 ≤ i ≤ n} and ν′(v) = ν(v) otherwise. We define ν̃[{ṽ_i ← b_i | 1 ≤ i ≤ n}] and ν̃[{ṽ_i ← ṽ_i′ | 1 ≤ i ≤ n}] in a similar manner.
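The two bracket notations on mappings can be illustrated with dictionaries. This is a small sketch with hypothetical function names (`subst`, `rename`), where a mapping ν is a Python dict from variable names to values.

```python
def subst(nu, assignments):
    """nu[{v_i <- k_i}]: override the listed variables, keep the others."""
    out = dict(nu)
    out.update(assignments)
    return out

def rename(nu, renaming):
    """nu[{v_i <- v_i'}]: move the value of each v_i to the fresh name v_i',
    which changes the domain of the mapping."""
    return {renaming.get(v, v): k for v, k in nu.items()}
```

Both functions return a new dictionary and leave `nu` unchanged, matching the functional reading of ν′ in the text.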

A multiset m over a set X is a mapping X → Z. We write x ∈ m to mean m(x) ≥ 1. The size |m| of a multiset m is Σ_{x∈X} m(x). We sometimes view a multiset m as a sequence x_1, x_2, ..., x_{|m|} where each element x appears m(x) times. We write x ⊕ m to mean the multiset m′ such that m′(y) equals m(y) + 1 if x = y and m(y) otherwise.
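These multiset operations map directly onto Python's `collections.Counter`. The helper names below (`member`, `size`, `add`) are ours and only serve as an illustration.

```python
from collections import Counter

def member(x, m):
    """x ∈ m, i.e. m(x) >= 1."""
    return m[x] >= 1

def size(m):
    """|m|, the sum of all multiplicities."""
    return sum(m.values())

def add(x, m):
    """x ⊕ m: a new multiset with one more occurrence of x."""
    out = Counter(m)
    out[x] += 1
    return out
```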


4 Concurrent Programs and Counting Logic

To simplify the presentation, we assume a concurrent program (or program for short) to consist of a single non-recursive procedure manipulating integer variables. Arguments and return values are passed using shared variables. Programs where arbitrarily many processes run a finite number of procedures can be encoded by having the processes choose a procedure at the beginning.

Syntax. A procedure in a program (S, L, T) is given in terms of a set T of transitions (lc_1 ▸ lc_1′ : stmt_1), (lc_2 ▸ lc_2′ : stmt_2), ... operating on two finite sets of integer variables, namely a set S of shared variables (denoted s_1, s_2, ...) and a set L of local variables (denoted l_1, l_2, ...). Each transition (lc ▸ lc′ : stmt) involves two locations lc and lc′ and a statement stmt. We write Loc to mean the set of all locations appearing in T. We always distinguish two locations, namely an entry location lcent and an exit location lcext. In any transition, location lcext may appear as the source location only if the destination is also lcext (i.e., a sink location). Program syntax is given in terms of pairwise different v_1, ..., v_n in S ∪ L, e_1, ..., e_n in exprsOf(S ∪ L) and π in predsOf(exprsOf(S ∪ L)).

    prog ::= (s := (k | ∗))* proc : (l := (k | ∗))* (lc ▸ lc : stmt)+
    stmt ::= spawn | join | π | v_1, ..., v_n := e_1, ..., e_n | stmt; stmt

Semantics. Initially, a single process starts executing the procedure with both

local and shared variables initialized as stated in their definitions. Executions

might involve an arbitrary number of spawned processes. The execution of any

process (whether initial or spawned with the statement spawn) starts at the

entry location lcent . Any process at an exit point lcext can be eliminated by

a process executing a join statement. An assume π statement blocks if the

predicate π over local and shared variables does not evaluate to true. Each

transition is executed atomically without interruption from other processes.

More formally, a configuration is given in terms of a pair (σ, m) where the

shared state σ : S → N is a mapping that associates a natural number to each

variable in S. An initial shared state (written σinit ) is a mapping that complies

with the initial constraints for the shared variables. The multiset m contains

process configurations, i.e., pairs (lc, η) where the location lc belongs to Loc and

the process state η : L → N maps each local variable to a natural number.

We also write ηinit to mean an initial process state. An initial multiset (written minit) maps all (lc, η) to 0 except for a single (lcent, ηinit) mapped to 1. We introduce a relation ⇝^{stmt}_P in order to define the semantics of statements (Fig. 3). We write (σ, η, m) ⇝^{stmt}_P (σ′, η′, m′), where σ, σ′ are shared states, η, η′ are process states, and m, m′ are multisets of process configurations, in order to mean that a process at process state η, when the shared state is σ and the other process configurations are represented by m, can execute the statement stmt and take the program to a configuration where the process is at state η′, the shared state is σ′ and the configurations of the other processes are captured by m′. For instance, a process can always execute a join if there is another process at location lcext (rule join). A process executing a multiple assignment atomically updates shared and local variables values according to the values taken by the expressions of the assignment before the execution (rule assign).

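\[
\frac{(\sigma,\eta,m) \overset{stmt}{\leadsto}_P (\sigma',\eta',m')}
     {(\sigma,(lc,\eta)\oplus m) \xrightarrow{(lc \,\blacktriangleright\, lc' : stmt)}_P (\sigma',(lc',\eta')\oplus m')} : \text{trans}
\]
\[
\frac{(\sigma,\eta,m) \overset{stmt}{\leadsto}_P (\sigma',\eta',m') \qquad (\sigma',\eta',m') \overset{stmt'}{\leadsto}_P (\sigma'',\eta'',m'')}
     {(\sigma,\eta,m) \overset{stmt;stmt'}{\leadsto}_P (\sigma'',\eta'',m'')} : \text{seq}
\]
\[
\frac{val_{\sigma,\eta}(\pi)}
     {(\sigma,\eta,m) \overset{\pi}{\leadsto}_P (\sigma,\eta,m)} : \text{assume}
\qquad
\frac{m = (lc_{ext},\eta') \oplus m'}
     {(\sigma,\eta,m) \overset{join}{\leadsto}_P (\sigma,\eta,m')} : \text{join}
\]
\[
\frac{m' = (lc_{ent},\eta_{init}) \oplus m}
     {(\sigma,\eta,m) \overset{spawn}{\leadsto}_P (\sigma,\eta,m')} : \text{spawn}
\]
\[
\frac{subst_A = \{ v_i \leftarrow val_{\sigma,\eta}(e_i) \mid v_i \in A \} \text{ for } A \in \{S, L\}}
     {(\sigma,\eta,m) \overset{v_1,\ldots,v_n := e_1,\ldots,e_n}{\leadsto}_P (\sigma[subst_S],\eta[subst_L],m)} : \text{assign}
\]

Fig. 3. Semantics of concurrent programs.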

We write (σ, m) −→_P (σ′, m′) if (σ, m) −t→_P (σ′, m′) for a transition t. A P run ρ is a sequence (σ_0, m_0), t_1, ..., t_n, (σ_n, m_n). The run is P feasible if (σ_i, m_i) −t_{i+1}→_P (σ_{i+1}, m_{i+1}) for each i : 0 ≤ i < n and σ_0 and m_0 are initial. We say that a configuration (σ, m) is reachable if there is a feasible P run (σ_0, m_0), t_1, ..., t_n, (σ_n, m_n) s.t. (σ, m) = (σ_n, m_n).

Counting Logic. We use @Loc to mean the set {@lc | lc ∈ Loc} of boolean variables. Intuitively, @lc evaluates to tt exactly when the process evaluating it

is at location lc. We associate a counting variable (π)# to each predicate π in

predsOf(@Loc, exprsOf(S ∪ L)). Intuitively, in a given program configuration,

the variable (π)# counts the number of processes for which the predicate

π holds.

We let Ω mean the set {(π)# | π ∈ predsOf(@Loc, exprsOf(S ∪ L))} of counting variables. A counting predicate is a predicate in predsOf(@Loc, exprsOf(S ∪ Ω)). Elements in exprsOf(S ∪ L) and predsOf(@Loc, exprsOf(S ∪ L)) are evaluated wrt. a shared configuration σ and a process configuration (lc, η). For instance, val_{σ,(lc,η)}(v) is σ(v) if v ∈ S and η(v) if v ∈ L, and val_{σ,(lc,η)}(@lc′) = (lc = lc′). We abuse notation and write val_{σ,m}(ω) to mean the evaluation of the counting predicate ω wrt. a configuration (σ, m). More precisely, val_{σ,m}((π)#) = Σ_{(lc,η) s.t. val_{σ,(lc,η)}(π)} m((lc, η)) and val_{σ,m}(v) = σ(v) for v ∈ S.

Our counting logic is quite expressive as we can capture assertion violations

and deadlocks. For location lc, we let enabled(lc) in predsOf(exprsOf(S ∪ L))

define when a process can fire some transition from lc. We capture the violation

of an assert(π) at some location lc and the deadlock configurations using the

following two counting predicates.

\[
\omega_{assert} = (@lc \wedge \neg\pi)^{\#} \geq 1
\qquad\qquad
\omega_{deadlock} = \bigwedge_{lc \in Loc} (@lc \wedge enabled(lc))^{\#} = 0
\]
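Evaluating counting variables and the two predicates above on a concrete configuration can be sketched as follows. The representation is an assumption made for illustration: the shared state and each process state are dictionaries, the multiset of process configurations is a list of (location, local state) pairs, and all function names are ours.

```python
def count(pred, shared, procs):
    """Value of the counting variable (pred)# in configuration (shared, procs)."""
    return sum(1 for loc, local in procs if pred(shared, loc, local))

def omega_assert(shared, procs, lc, pi):
    """(@lc ∧ ¬pi)# >= 1: some process at lc violates the assertion pi."""
    return count(lambda s, loc, l: loc == lc and not pi(s, l),
                 shared, procs) >= 1

def omega_deadlock(shared, procs, locations, enabled):
    """For every location lc, (@lc ∧ enabled(lc))# = 0."""
    return all(
        count(lambda s, loc, l, lc=lc: loc == lc and enabled(lc, s, l),
              shared, procs) == 0
        for lc in locations
    )
```

For instance, with the max example and only proc processes considered, two processes waiting at lc3 while proceed is false form a deadlock configuration in this sketch.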

5 Relating layers of abstractions

We formally describe in the following the four steps involved in our predicated

constrained monotonic abstraction approach (see Fig. 2).

5.1 Predicate abstraction

Given a program P = (S, L, T ) and a number of predicates Π on the variables

S ∪ L, we leverage existing techniques (such as [5]) in order to generate an abstraction in the form of a boolean program P̃ = (S̃, L̃, T̃) where all shared

and local variables take boolean values. To achieve this, Π is partitioned into

three sets Πshr , Πloc and Πmix . Predicates in Πshr only mention variables in S

and those in Πloc only mention variables in L. Predicates in Πmix mention both

shared and local variables of P . A one to one mapping associates a predicate

origPredOf(ṽ) in Πshr (resp. Πmix ∪ Πloc ) to each ṽ in S̃ (resp. L̃).

In addition, there are as many transitions in T as in T̃. For each (lc ▸ lc′ : stmt) in T there is a corresponding (lc ▸ lc′ : abstOf(stmt)) with the same source and destination locations lc, lc′, but with an abstracted statement abstOf(stmt) that may operate on the variables S̃ ∪ L̃. For instance, the statement (count := count + 1) in Fig. 1 is abstracted with the multiple assignment:

    wait_leq_count,        choose(wait_leq_count, ff),
    count_leq_wait    :=   choose(¬wait_leq_count ∧ count_leq_wait, wait_leq_count)        (1)

The value of the variable count_leq_wait after execution of the multiple assignment (1) is tt if ¬wait_leq_count ∧ count_leq_wait holds, ff if wait_leq_count holds, and is equal to a non-deterministically chosen boolean value otherwise.
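The reading of choose given above, and the soundness of the abstraction of count := count + 1, can be checked by brute force on a small range of integers. In this sketch, `choose` returns the set of boolean values the abstract variable may take; the function names and the tested range are assumptions made for illustration.

```python
def choose(pos, neg):
    """Allowed results: tt if pos holds, ff if neg holds, any value otherwise."""
    if pos:
        return {True}
    if neg:
        return {False}
    return {True, False}

def abstract_increment(wait_leq_count, count_leq_wait):
    """Allowed new values of (wait_leq_count, count_leq_wait)
    for the abstraction of count := count + 1."""
    return (
        choose(wait_leq_count, False),
        choose((not wait_leq_count) and count_leq_wait, wait_leq_count),
    )

def sound_on(values):
    """Every concrete step must be allowed by the abstract assignment."""
    for wait in values:
        for count in values:
            new_w, new_c = abstract_increment(wait <= count, count <= wait)
            if (wait <= count + 1) not in new_w:
                return False
            if (count + 1 <= wait) not in new_c:
                return False
    return True
```

The check only samples a finite range, so it illustrates the abstraction rather than proving it sound.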

In addition, abstracted statements can mention the local variables of passive processes, i.e., processes other than the one executing the transition. For this, we make use of the variables L̃p = {l̃p | l̃ in L̃} where each l̃p denotes the local variable l̃ of passive processes. For instance, the statement prev := val in Fig. 1 is abstracted with the multiple assignment (2). Here, the local variable prev_leq_val of each process other than the one executing the statement (written prev_leq_val_p) is separately updated. This corresponds to a broadcast where the local variables of all passive processes need to be updated.

    prev_leq_val,          tt,
    prev_leq_max,     :=   choose(¬prev_leq_val ∧ prev_leq_max, prev_leq_val ∧ ¬prev_leq_max),
    prev_leq_val_p         choose(¬prev_leq_val ∧ prev_leq_val_p, prev_leq_val ∧ ¬prev_leq_val_p)        (2)
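As we read assignment (2), the abstraction of prev := val sets prev_leq_val to tt and derives the new values of prev_leq_max and of the passive copy prev_leq_val_p from the old predicate values. The sketch below checks this reading by brute force; it is an illustration under that assumption, with hypothetical function names and a small sampled range.

```python
def choose(pos, neg):
    """Allowed results: tt if pos holds, ff if neg holds, any value otherwise."""
    if pos:
        return {True}
    if neg:
        return {False}
    return {True, False}

def abstract_prev_assign(prev_leq_val, prev_leq_max, prev_leq_val_p):
    """Allowed new values of (prev_leq_val, prev_leq_max, prev_leq_val_p)."""
    return (
        {True},
        choose(not prev_leq_val and prev_leq_max,
               prev_leq_val and not prev_leq_max),
        choose(not prev_leq_val and prev_leq_val_p,
               prev_leq_val and not prev_leq_val_p),
    )

def sound_on(values):
    """Compare with the concrete effect of prev := val on sampled integers."""
    for prev in values:
        for val in values:
            for mx in values:
                for val_p in values:
                    a, b, c = abstract_prev_assign(
                        prev <= val, prev <= mx, prev <= val_p)
                    new_prev = val
                    if (new_prev <= val) not in a:
                        return False
                    if (new_prev <= mx) not in b:
                        return False
                    if (new_prev <= val_p) not in c:
                        return False
    return True
```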

Syntax and semantics of boolean programs. The syntax of boolean programs is described below. Variables ṽ_1, ..., ṽ_n are in S̃ ∪ L̃ ∪ L̃p. Predicate π is in predsOf(S̃ ∪ L̃), and predicates π_1, ..., π_n are in predsOf(S̃ ∪ L̃ ∪ L̃p). We further require for the multiple assignment that if ṽ_i ∈ S̃ ∪ L̃ then vars(π_i) ⊆ S̃ ∪ L̃.

    prog ::= (s̃ := (tt | ff | ∗))* proc : (l̃ := (tt | ff | ∗))* (lc ▸ lc : stmt)+
    stmt ::= spawn | join | π | ṽ_1, ..., ṽ_n := π_1, ..., π_n | stmt; stmt

Apart from the fact that all variables are now boolean, the main difference between Fig. 4 and Fig. 3 is the assign statement. For this, we write (σ̃, η̃, η̃p) ↦^{ṽ_1,...,ṽ_n := π_1,...,π_n}_P̃ (σ̃′, η̃′, η̃p′) to mean that η̃p′ is obtained in the following way. First, we change the domain of η̃p from L̃ to L̃p and obtain the mapping η̃p,1 = η̃p[{l̃ ← l̃p | l̃ ∈ L̃}], then we let η̃p,2 = η̃p,1[{ṽ_i ← val_{σ̃,η̃,η̃p,1}(π_i) | ṽ_i ∈ L̃p in lhs of the assignment}]. Finally, we obtain η̃p′ = η̃p,2[{l̃p ← l̃ | l̃ ∈ L̃}]. This step corresponds to a broadcast. We write (σ̃, m̃) −→_P̃ (σ̃′, m̃′) if (σ̃, m̃) −t̃→_P̃ (σ̃′, m̃′) for some t̃. A P̃ run ρ̃ is a sequence (σ̃_0, m̃_0), t̃_1, ..., t̃_n, (σ̃_n, m̃_n). The run is feasible if (σ̃_i, m̃_i) −t̃_{i+1}→_P̃ (σ̃_{i+1}, m̃_{i+1}) for each i : 0 ≤ i < n and both σ̃_0 and m̃_0 are initial.
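The three renaming steps used to update a passive process can be sketched with dictionaries. This is an illustration under assumptions: passive copies are named by appending the suffix `_p`, right-hand sides are given as already-resolved Python functions of (σ̃, η̃, η̃p,1) instead of choose expressions, and the function name is ours.

```python
def passive_update(sigma, eta, eta_p, assignment):
    """Return the new local state of one passive process.

    assignment maps left-hand-side names to functions of
    (sigma, eta, eta_p1); only names ending in '_p' concern passive
    processes (a hypothetical naming convention).
    """
    # Step 1: change the domain from local names to their passive copies.
    eta_p1 = {name + "_p": value for name, value in eta_p.items()}
    # Step 2: simultaneous update of the passive copies on the lhs,
    # with every right-hand side evaluated in the pre-state.
    eta_p2 = dict(eta_p1)
    for lhs, rhs in assignment.items():
        if lhs in eta_p1:
            eta_p2[lhs] = rhs(sigma, eta, eta_p1)
    # Step 3: rename the passive copies back to the local names.
    return {name[:-2]: value for name, value in eta_p2.items()}
```

Applying this function to every passive process in the multiset gives the broadcast effect of the assign rule.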

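\[
\frac{(\tilde\sigma,\tilde\eta,\tilde m) \overset{stmt}{\leadsto}_{\tilde P} (\tilde\sigma',\tilde\eta',\tilde m')}
     {(\tilde\sigma,(lc,\tilde\eta)\oplus \tilde m) \xrightarrow{(lc \,\blacktriangleright\, lc' : stmt)}_{\tilde P} (\tilde\sigma',(lc',\tilde\eta')\oplus \tilde m')} : \text{trans}
\qquad
\frac{val_{\tilde\sigma,\tilde\eta}(\pi)}
     {(\tilde\sigma,\tilde\eta,\tilde m) \overset{\pi}{\leadsto}_{\tilde P} (\tilde\sigma,\tilde\eta,\tilde m)} : \text{assume}
\]
\[
\frac{(\tilde\sigma,\tilde\eta,\tilde m) \overset{stmt}{\leadsto}_{\tilde P} (\tilde\sigma',\tilde\eta',\tilde m') \text{ and } (\tilde\sigma',\tilde\eta',\tilde m') \overset{stmt'}{\leadsto}_{\tilde P} (\tilde\sigma'',\tilde\eta'',\tilde m'')}
     {(\tilde\sigma,\tilde\eta,\tilde m) \overset{stmt;stmt'}{\leadsto}_{\tilde P} (\tilde\sigma'',\tilde\eta'',\tilde m'')} : \text{sequence}
\]
\[
\frac{\tilde m' = (lc_{ent},\tilde\eta_{init}) \oplus \tilde m}
     {(\tilde\sigma,\tilde\eta,\tilde m) \overset{spawn}{\leadsto}_{\tilde P} (\tilde\sigma,\tilde\eta,\tilde m')} : \text{spawn}
\qquad
\frac{\tilde m = (lc_{ext},\tilde\eta') \oplus \tilde m'}
     {(\tilde\sigma,\tilde\eta,\tilde m) \overset{join}{\leadsto}_{\tilde P} (\tilde\sigma,\tilde\eta,\tilde m')} : \text{join}
\]
\[
\frac{\begin{array}{c}
\tilde\sigma' = \tilde\sigma[\{\tilde v_i \leftarrow val_{\tilde\sigma,\tilde\eta}(\pi_i) \mid \tilde v_i \in \tilde S\}]
\qquad
\tilde\eta' = \tilde\eta[\{\tilde v_i \leftarrow val_{\tilde\sigma,\tilde\eta}(\pi_i) \mid \tilde v_i \in \tilde L\}] \\
h : \tilde m \to \tilde m' \text{ a bijection with } h((lc_p,\tilde\eta_p)) = (lc_p,\tilde\eta_p') \\
\text{for some } \tilde\eta_p' \text{ s.t. } (\tilde\sigma,\tilde\eta,\tilde\eta_p) \overset{\tilde v_1,\ldots,\tilde v_n := \pi_1,\ldots,\pi_n}{\mapsto}_{\tilde P} (\tilde\sigma',\tilde\eta',\tilde\eta_p')
\end{array}}
     {(\tilde\sigma,\tilde\eta,\tilde m) \overset{\tilde v_1,\ldots,\tilde v_n := \pi_1,\ldots,\pi_n}{\leadsto}_{\tilde P} (\tilde\sigma',\tilde\eta',\tilde m')} : \text{assign}
\]

Fig. 4. Semantics of boolean concurrent programs.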

Relation between P and P̃. Given a shared configuration σ̃, we write origPredOf(σ̃) to mean the predicate ⋀_{s̃∈S̃} (σ̃(s̃) ⇔ origPredOf(s̃)). In a similar manner, we write origPredOf(η̃) to mean the predicate ⋀_{l̃∈L̃} (η̃(l̃) ⇔ origPredOf(l̃)). Observe that vars(origPredOf(σ̃)) ⊆ S and vars(origPredOf(η̃)) ⊆ S ∪ L. We abuse notation and write val_σ(σ̃) (resp. val_η(η̃)) to mean that val_σ(origPredOf(σ̃)) (resp. val_η(origPredOf(η̃))) holds. We also write val_{σ̃,η̃}(π), for a boolean combination π of predicates in Π, to mean the predicate obtained by replacing each π′ in Πmix ∪ Πloc (resp. Πshr) with η̃(ṽ) (resp. σ̃(ṽ)) where origPredOf(ṽ) = π′. We let val_m(m̃) mean there is a bijection h : |m| → |m̃| s.t. we can associate to each (lc, η)_i in m an (lc, η̃)_{h(i)} in m̃ such that val_η(η̃) for each i : 1 ≤ i ≤ |m|. To each P̃ configuration (σ̃, m̃) corresponds a set γ((σ̃, m̃)) = {(σ, m) | val_σ(σ̃) ∧ val_m(m̃)}, and to each P configuration (σ, m) a singleton α((σ, m)) = {(σ̃, m̃) | val_σ(σ̃) ∧ val_m(m̃)}. We initialize the P̃ variables s.t. for each σinit, minit there are σ̃init, m̃init s.t. α((σinit, minit)) = {(σ̃init, m̃init)}. To each P̃ run ρ̃ = (σ̃_0, m̃_0), t̃_1, ..., t̃_n, (σ̃_n, m̃_n) corresponds a set of P runs γ(ρ̃) = {(σ_0, m_0), t_1, ..., t_n, (σ_n, m_n) | (σ_i, m_i) ∈ γ((σ̃_i, m̃_i)), t̃_i = abstOf(t_i)}, and to each P run ρ = (σ_0, m_0), t_1, ..., t_n, (σ_n, m_n) corresponds a set of P̃ runs α(ρ) defined as {α((σ_0, m_0)), t̃_1, ..., t̃_n, α((σ_n, m_n)) | t̃_i = abstOf(t_i)}.

Definition 1 (predicate abstraction). Let P = (S, L, T) be a program and P̃ = (S̃, L̃, T̃) be its abstraction wrt. Π as described in this Section. The abstraction is said to be effective and sound if P̃ can be effectively computed and to each feasible P run ρ corresponds a non empty set α(ρ) of feasible P̃ runs.
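The abstraction function α on single configurations can be illustrated with a small sketch. It is only an illustration under simplifying assumptions: predicates are given as Python functions, and the names `abstract_config`, `shared_preds` and `local_preds` are hypothetical and do not come from the Pacman tool.

```python
from collections import Counter

def abstract_config(sigma, procs, shared_preds, local_preds):
    """Map a concrete configuration (sigma, m) to a boolean one.

    sigma        -- dict: shared variable -> value
    procs        -- list of (location, eta), eta a dict of local values
    shared_preds -- dict: name -> predicate over sigma
    local_preds  -- dict: name -> predicate over (sigma, eta)
    """
    # One boolean per shared predicate.
    sigma_abs = tuple(sorted((n, p(sigma)) for n, p in shared_preds.items()))
    # A multiset of (location, boolean valuation) pairs, one per process.
    m_abs = Counter(
        (lc, tuple(sorted((n, p(sigma, eta)) for n, p in local_preds.items())))
        for lc, eta in procs
    )
    return sigma_abs, m_abs

# Hypothetical example: one shared predicate, one local predicate.
sigma = {"wait": 1, "count": 2}
procs = [("lc1", {"x": 1}), ("lc1", {"x": 5}), ("lc2", {"x": 0})]
shared_preds = {"wait_le_count": lambda s: s["wait"] <= s["count"]}
local_preds = {"x_pos": lambda s, e: e["x"] > 0}
sigma_abs, m_abs = abstract_config(sigma, procs, shared_preds, local_preds)
```

Processes that agree on their location and on all predicates collapse into the same multiset element, which is what the counters of Sect. 5.2 later count.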

5.2

Translation into an extended counter machine

Assume a program P = (S, L, T), a set Π ⊆ predsOf(exprsOf(S ∪ L)) of predicates and two counting predicates in predsOf(@Loc, exprsOf(S ∪ Ω)): an invariant ωinv and a target ωtrgt. We write P̃ = (S̃, L̃, T̃) to mean the boolean abstraction of P wrt. Π ∪ atoms(ωtrgt) ∪ atoms(ωinv). Intuitively, this step results in the state reachability problem of an extended counter machine $M_{P,\Pi,\omega_{inv},\omega_{trgt}}$ that captures reachability of P̃ configurations (abstracting ωtrgt P configurations) with P̃ runs that are strengthened wrt. the P counting invariant ωinv.

An extended counter machine M is a tuple (Q, C, ∆, QInit , ΘInit , qtrgt ) where

Q is a finite set of states, C is a finite set of counters (i.e., variables ranging over

N), ∆ is a finite set of transitions, QInit ⊆ Q is a set of initial states, ΘInit is an

initial set of counters valuations, (i.e., mappings from C to N) and qtrgt is a state

in Q. A transition δ in ∆ is of the form [q : op : q 0 ] where the operation op is either

the identity operation nop, a guarded command grd ⇒ cmd, or a sequential

composition of operations. We use a set A of auxiliary variables ranging over

N. These are meant to be existentially quantified when firing the transitions as

explained in Fig. 5. A guard grd is a predicate in predsOf(exprsOf(A ∪ C)) and

a command cmd is a multiple assignment c1 , . . . , cn := e1 , . . . , en that involves

e1 , . . . en in exprsOf(A ∪ C) and pairwise different c1 , . . . cn .

A machine configuration is a pair (q, θ) where q is a state in Q and θ is a

mapping C → N. Semantics are given in Fig. 5. A configuration (q, θ) is initial

if q ∈ QInit and θ ∈ ΘInit. An M run ρM is a sequence (q0, θ0); δ1; … (qn, θn). It is feasible if (q0, θ0) is initial and $(q_i, \theta_i) \xrightarrow{\delta_{i+1}}_M (q_{i+1}, \theta_{i+1})$ for i : 0 ≤ i < n. We write $\rightarrow_M$ to mean $\bigcup_{\delta \in \Delta} \xrightarrow{\delta}_M$. The machine state reachability problem is to decide whether there is an M feasible run (q0, θ0); δ1; … (qn, θn) s.t. qn = qtrgt.

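$$\frac{\delta = [q : op : q'] \ \text{and}\ \theta \overset{op}{\leadsto}_M \theta'}{(q, \theta) \xrightarrow{\delta}_M (q', \theta')} : \text{transition}$$

$$\frac{}{\theta \overset{nop}{\leadsto}_M \theta} : \text{nop}$$

$$\frac{\theta \overset{op}{\leadsto}_M \theta' \ \text{and}\ \theta' \overset{op'}{\leadsto}_M \theta''}{\theta \overset{op;op'}{\leadsto}_M \theta''} : \text{seq}$$

$$\frac{\exists A.\ val_\theta(grd) \wedge \forall i : 1 \leq i \leq n.\ \theta'(c_i) = val_\theta(e_i)}{\theta \overset{grd \Rightarrow cmd}{\leadsto}_M \theta'} : \text{gcmd}$$

Fig. 5. Semantics of an extended counter machine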
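The rules of Fig. 5 can be read operationally. The sketch below fires a sequence of operations on a counter valuation. It is a simplified illustration and not the authors' implementation: it ignores the existentially quantified auxiliary variables in A, and the function name `fire` and the encoding of operations as Python pairs are assumptions made for the example.

```python
def fire(theta, ops):
    """Apply a sequential composition of operations to a valuation.

    Each op is None (nop) or a pair (guard, assignments): guard is a
    predicate over the valuation, assignments maps counters to
    expressions evaluated on the valuation *before* the command
    (multiple assignment). Returns the new valuation, or None if a
    guard fails or a counter would become negative.
    """
    for op in ops:
        if op is None:          # rule nop
            continue
        guard, assignments = op
        if not guard(theta):    # rule gcmd: the guard must hold
            return None
        new_vals = {c: e(theta) for c, e in assignments.items()}
        if any(v < 0 for v in new_vals.values()):
            return None         # counters range over N
        theta = {**theta, **new_vals}
    return theta

# Moving one process from counter "a" to counter "b":
# a >= 1; a--; b++
move = [
    (lambda t: t["a"] >= 1, {"a": lambda t: t["a"] - 1}),
    (lambda t: True, {"b": lambda t: t["b"] + 1}),
]
after = fire({"a": 1, "b": 0}, move)
```

Calling `fire(after, move)` a second time returns `None` because the guard a ≥ 1 no longer holds.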

Translation. We describe a machine (Q, C, ∆, QInit , ΘInit , qtrgt ) that captures

the behaviour of the program P̃ and encodes reaching abstractions of P configurations satisfying ωtrgt in terms of a machine state reachability problem. Each

state in Q is either the target state qtrgt or is associated to a shared configuration

σ̃. We write qσ̃ to make the association explicit. There is a one to one mapping

that associates a process configuration (lc, η̃) to each counter c(lc,η̃) in C. Transitions are generated as described in Fig. 7. We associate a machine configuration encM((σ̃, m̃)) = (qσ̃, θ) to each program configuration (σ̃, m̃). Intuitively, states in Q encode values of the shared variables while each counter c(lc,η̃) counts the number of processes at location lc and satisfying η̃. In other words θ(c(lc,η̃)) = m̃((lc, η̃)) for each (lc, η̃). The encoding of a P̃ run ρP̃ = (σ̃0, m̃0); t̃1; … (σ̃n, m̃n) is the set {(q0, θ0); δ1 …; (qn, θn) | (qi, θi) = encM((σ̃i, m̃i)) and δi ∈ ∆t̃i}. The machine encodes the behaviour of the boolean program as specified in Lem. 1.

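Lemma 1 (monotonic translation). Any P̃ configuration (σ̃, m̃) such that $\omega_{trgt}\big[\text{origPredOf}(\tilde s)/\tilde\sigma(\tilde s)\big]\Big[(\pi)^{\#} \big/ \sum_{\{(lc,\tilde\eta) \mid val_{(lc,\tilde\eta)}(\pi)\}} \tilde m((lc, \tilde\eta))\Big]$ holds is P̃ reachable iff qtrgt is M reachable.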

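Let us write $\theta \preceq \theta'$ if $\theta(c) \leq \theta'(c)$ for each c ∈ C. Observe that all machine transitions of Fig. 7 are monotonic in that if $(q_1, \theta_1) \xrightarrow{\delta}_M (q_2, \theta_2)$ for some δ ∈ ∆, and if $\theta_1 \preceq \theta_3$, then there is a $\theta_4$ such that $\theta_2 \preceq \theta_4$ and $(q_1, \theta_3) \xrightarrow{\delta}_M (q_2, \theta_4)$. Combined with the fact that $\preceq$ is a well quasi ordering over $\mathbb{N}^{|C|}$, we get that: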

Lemma 2 (monotonic decidability). State reachability of any monotonic

translation is decidable.
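Decidability rests on representing upward closed sets of valuations by their finitely many minimal elements. A minimal sketch of these operations, assuming valuations are Python dicts over the same counters (the helper names are hypothetical):

```python
def leq(theta1, theta2):
    """Component-wise order: theta1 ⪯ theta2."""
    return all(theta1[c] <= theta2[c] for c in theta1)

def minimal(elements):
    """Keep only the ⪯-minimal valuations of a finite collection."""
    result = []
    for t in elements:
        if any(leq(u, t) for u in result):
            continue  # t is already covered by a smaller element
        # Drop elements that t makes redundant, then add t.
        result = [u for u in result if not leq(t, u)] + [t]
    return result

def in_upward_closure(theta, generators):
    """Membership in the upward closure of the generators."""
    return any(leq(g, theta) for g in generators)

gens = minimal([{"a": 1, "b": 0}, {"a": 2, "b": 0}, {"a": 0, "b": 3}])
```

Here `gens` keeps the two incomparable valuations and drops `{"a": 2, "b": 0}`, which lies above `{"a": 1, "b": 0}`.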

However, monotonic translations correspond to coarse over-approximations that are incapable of dealing with statements of our counting logic (Sec. 4). Intuitively, bad configurations (such as those where a deadlock occurs) are no longer upward closed wrt. $\preceq$. This loss of precision puts the verification of such properties out of the reach of techniques solely based on monotonic translations. To regain some of the lost precision, we constrain the runs using counting invariants.

$$\frac{[q_{\tilde\sigma} : op : q_{\tilde\sigma'}] \in \Delta \qquad strong_{\omega_{inv}}(\tilde\sigma) = \exists S.\ \text{origPredOf}(\tilde\sigma) \wedge \omega_{inv}\Big[(\pi)^{\#} \big/ \sum_{\{(lc,\tilde\eta) \mid val_{(lc,\tilde\eta)}(\pi)\}} c_{(lc,\tilde\eta)}\Big]}{[q_{\tilde\sigma} : strong_{\omega_{inv}}(\tilde\sigma);\ op;\ strong_{\omega_{inv}}(\tilde\sigma') : q_{\tilde\sigma'}] \in \Delta'} : \text{strengthen}$$

Fig. 6. Strengthening of counter transitions given a counting invariant ωinv.

Lemma 3 (strengthened soundness). Any feasible P run ρP has a P̃ feasible

run ρP̃ in α (ρP ) with an M feasible run in encM (ρP̃ ), where M is any machine

strengthened as described in Fig. 7 and Fig. 6.

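$$\frac{lc \mathrel{I} lc' : stmt \ \text{and}\ [(\tilde\sigma, \tilde\eta) : op : (\tilde\sigma', \tilde\eta')]_{stmt}}{(q_{\tilde\sigma} : c_{(lc,\tilde\eta)} \geq 1;\ (c_{(lc,\tilde\eta)})^{--};\ op;\ (c_{(lc',\tilde\eta')})^{++} : q_{\tilde\sigma'}) \in \Delta_{(lc \mathrel{I} lc' : stmt)}} : \text{transition}$$

$$\frac{lc \mathrel{I} lc' : stmt \ \text{and}\ [(\tilde\sigma, \tilde\eta) : op : (\tilde\sigma', \tilde\eta')]_{stmt}}{\Big(q_{\tilde\sigma} : \omega_{trgt}[\tilde s/\tilde\sigma(\tilde s)]\Big[(\pi)^{\#} \big/ \sum_{(lc,\tilde\eta) \models \pi} c_{(lc,\tilde\eta)}\Big] : q_{trgt}\Big) \in \Delta_{trgt}} : \text{target}$$

$$\frac{val_{\tilde\sigma,\tilde\eta}(\pi)}{[(\tilde\sigma, \tilde\eta) : nop : (\tilde\sigma, \tilde\eta)]_{\pi}} : \text{assume}$$

$$\frac{}{\big[(\tilde\sigma, \tilde\eta) : (c_{(lc_{ent},\tilde\eta_{init})})^{++} : (\tilde\sigma, \tilde\eta)\big]_{spawn}} : \text{spawn}$$

$$\frac{[(\tilde\sigma, \tilde\eta) : op : (\tilde\sigma', \tilde\eta')]_{stmt} \ \text{and}\ [(\tilde\sigma', \tilde\eta') : op' : (\tilde\sigma'', \tilde\eta'')]_{stmt'}}{[(\tilde\sigma, \tilde\eta) : op; op' : (\tilde\sigma'', \tilde\eta'')]_{stmt;stmt'}} : \text{sequence}$$

$$\frac{}{\big[(\tilde\sigma, \tilde\eta) : c_{(lc_{ext},\tilde\eta')} \geq 1;\ (c_{(lc_{ext},\tilde\eta')})^{--} : (\tilde\sigma, \tilde\eta)\big]_{join}} : \text{join}$$

$$\frac{\begin{array}{c}
\tilde\sigma' = \tilde\sigma\big[\{\tilde v_i \leftarrow val_{\tilde\sigma,\tilde\eta}(\pi_i) \mid \tilde v_i \in \tilde S\}\big] \qquad \tilde\eta' = \tilde\eta\big[\{\tilde v_i \leftarrow val_{\tilde\sigma,\tilde\eta}(\pi_i) \mid \tilde v_i \in \tilde L\}\big] \\
E = \big\{a_{(lc,\tilde\eta_p),(lc,\tilde\eta_p')} \mid (\tilde\sigma, \tilde\eta, \tilde\eta_p) \xrightarrow{\tilde v_1,\ldots,\tilde v_n := \pi_1,\ldots,\pi_n}_M (\tilde\sigma', \tilde\eta', \tilde\eta_p'),\ a \in A\big\} \\
Dom_E = \big\{(lc, \tilde\eta_p) \mid a_{(lc,\tilde\eta_p),(lc,\tilde\eta_p')} \in E\big\} \qquad Img_E = \big\{(lc, \tilde\eta_p') \mid a_{(lc,\tilde\eta_p),(lc,\tilde\eta_p')} \in E\big\}
\end{array}}{\Big[(\tilde\sigma, \tilde\eta) : \big\{c_{(d)} = \textstyle\sum_{i \in Img_E} a_{d,i} \mid d \in Dom_E\big\} \cup \big\{c_{(i)} := \textstyle\sum_{d \in Dom_E} a_{d,i} \mid i \in Img_E\big\} : (\tilde\sigma', \tilde\eta')\Big]_{\tilde v_1,\ldots,\tilde v_n := \pi_1,\ldots,\pi_n}} : \text{assign}$$

Fig. 7. Translation of the transitions of a boolean program (S̃, L̃, T̃), given a counting target ωtrgt, to the transitions $\Delta = \bigcup_{t \in \tilde T} \Delta_t \cup \Delta_{trgt}$ of a counter machine.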

The resulting machine is not monotonic in general and we can encode the

state reachability of a two counter machine.

Lemma 4 (strengthened undecidability). State reachability is in general

undecidable after strengthening.

5.3

Constrained monotonic abstraction and preorder refinement

This step addresses the state reachability problem for an extended counter machine M = (Q, C, ∆, QInit , ΘInit , qtrgt ). As stated in Lem. 4, this problem is

in general undecidable for strengthened translations. The idea here [10] is to force monotonicity with respect to a well-quasi ordering $\preceq$ on the set of its configurations. This is apparent at line 7 of the classical working list algorithm Alg. 1. We start with the natural component wise preorder $\theta \preceq \theta'$ defined as $\bigwedge_{c \in C} \theta(c) \leq \theta'(c)$. Intuitively, $\theta \preceq \theta'$ holds if $\theta'$ can be obtained by "adding more processes to" $\theta$. The algorithm requires that we can compute membership (line 5), upward closure (line 7), minimal elements (line 7) and entailment (lines 9, 13, 15) wrt. the preorder $\preceq$, and predecessor computations of an upward closed set (line 7).

If no run is found, then not reachable is returned. Otherwise a run is obtained and simulated on M. If the run is possible, it is sent to the fourth step of our approach (described in Sect. 5.4). Otherwise, the upward closure step Up((q, θ)) responsible for the spurious trace is identified and an interpolant I (with vars(I) ⊆ C) is used to refine the preorder as follows: $\preceq_{i+1} := \{(\theta, \theta') \mid \theta \preceq_i \theta' \wedge (\theta \models I \Leftrightarrow \theta' \models I)\}$. Although stronger, the new preorder is again a well quasi ordering and the trace is guaranteed to be eliminated in the next round. We refer the reader to [3] for more details.
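The refinement step can be sketched as a higher-order function that takes the current preorder and an interpolant and returns the stronger preorder. This is only an illustration: preorders and interpolants are modelled as Python predicates over dict valuations, and the interpolant `c == 0` is a made-up example rather than one computed by an interpolating solver.

```python
def leq(theta1, theta2):
    """Natural component-wise preorder on counter valuations."""
    return all(theta1[c] <= theta2[c] for c in theta1)

def refine(preorder, interpolant):
    """Build the refined preorder from the current one and an interpolant I.

    Two valuations stay related only if they were related before and
    they agree on the truth value of I.
    """
    def refined(theta1, theta2):
        return preorder(theta1, theta2) and (
            interpolant(theta1) == interpolant(theta2)
        )
    return refined

# Hypothetical interpolant separating "no process counted by c" from the rest.
refined_leq = refine(leq, lambda t: t["c"] == 0)
```

With this refinement, `{"c": 0}` and `{"c": 2}` are no longer related, so the upward closure of a valuation with c = 0 no longer adds processes to c.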

Lemma 5 (CMA [3]). All steps involved in Alg. 1 are effectively computable

and each instantiation of Alg. 1 is sound and terminates given the preorder is a

well quasi ordering.
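The working list scheme of Alg. 1 can be sketched for a restricted class of machines. The sketch below makes several assumptions that are not in the paper: transitions only decrement and increment counters by fixed vectors (no guards over auxiliary variables), the preorder is the plain component-wise one, a single initial configuration is given, and only a yes/no answer is returned instead of a run. All names are hypothetical.

```python
from collections import deque

def leq(t1, t2):
    """Component-wise order on valuations given as tuples."""
    return all(a <= b for a, b in zip(t1, t2))

def min_pre(theta, dec, inc):
    """Minimal valuation whose successor (after -dec, +inc) covers theta."""
    return tuple(d + max(0, t - i) for t, d, i in zip(theta, dec, inc))

def coverable(transitions, init, target):
    """Backward search: can init reach the upward closure of target?

    transitions -- list of (src_state, dec, inc, dst_state)
    init, target -- pairs (state, valuation tuple)
    """
    working, visited = deque([target]), []
    while working:
        q, theta = working.popleft()
        visited.append((q, theta))
        if q == init[0] and leq(theta, init[1]):
            return True
        for src, dec, inc, dst in transitions:
            if dst != q:
                continue
            pred = min_pre(theta, dec, inc)
            # Skip predecessors already covered by a smaller element.
            if any(p == src and leq(t, pred)
                   for p, t in list(working) + visited):
                continue
            # Drop elements subsumed by the new, smaller predecessor.
            working = deque(c for c in working
                            if not (c[0] == src and leq(pred, c[1])))
            visited = [c for c in visited
                       if not (c[0] == src and leq(pred, c[1]))]
            working.append((src, pred))
    return False

# Toy lock: counters are (waiting, critical); q0 = lock free, q1 = lock taken.
lock = [("q0", (1, 0), (0, 1), "q1"),   # enter the critical section
        ("q1", (0, 1), (1, 0), "q0")]   # leave the critical section
one_inside = coverable(lock, ("q0", (3, 0)), ("q1", (0, 1)))
two_inside = coverable(lock, ("q0", (3, 0)), ("q1", (0, 2)))
```

In this toy example one process can enter the critical section, while two processes inside it at the same time are not coverable; termination of the loop relies on the order being a well quasi ordering.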

5.4

Simulation on the original concurrent program

A given run of the extended counter machine (Q, C, ∆, QInit , ΘInit , qtrgt ) is simulated by this step on the original concurrent program P = (S, L, T ). This is

possible because to each step of the counter machine run corresponds a unique

and concrete transition of P . This step is classical in counter example guided

abstraction refinement approaches. In our case, we need to differentiate the variables belonging to different processes during the simulation. As usual in such

frameworks, if the trace turns out to be possible then we have captured a concrete run of P that violates an assertion and we report it. Otherwise, we deduce

predicates that make the run infeasible and send them to step 1 (Sect. 5.1).

Theorem 1 (predicated constrained monotonic abstraction). Assume an effective and sound predicate abstraction. If the constrained monotonic abstraction step returns not reachable, then no configuration satisfying ωtrgt is reachable in P. If a P run is returned by the simulation step, then it reaches a configuration where ωtrgt holds. Every iteration of the outer loop terminates given the inner loop terminates. Every iteration of the inner loop terminates.

input : A machine (Q, C, ∆, QInit, ΘInit, qtrgt) and a preorder ⪯
output: not reachable or a run (q1, θ1); δ1; (q2, θ2); δ2; … δn; (qtrgt, θ)
 1  Working := ∪e∈Min(N^|C|) {((qtrgt, e), (qtrgt, e))}, Visited := {};
 2  while Working ≠ {} do
 3      ((q, θ), ρ) = pick a member from Working;
 4      Visited ∪= {((q, θ), ρ)};
 5      if (q, θ) ∈ QInit × ΘInit then return ρ;
 6      foreach δ ∈ ∆ do
 7          pre = Min(Preδ(Up((q, θ))));
 8          foreach (q′, θ′) ∈ pre do
 9              if θ″ ⪯ θ′ for some ((q′, θ″), _) in Working ∪ Visited then
10                  continue;
11              else
12                  foreach ((q′, θ″), _) ∈ Working do
13                      if θ′ ⪯ θ″ then Working = Working \ {((q′, θ″), _)};
14                  foreach ((q′, θ″), _) ∈ Visited do
15                      if θ′ ⪯ θ″ then Visited = Visited \ {((q′, θ″), _)};
16                  Working ∪= {((q′, θ′), (q′, θ′); δ; ρ)}
17  return not reachable;

Algorithm 1: Constrained monotonic abstraction

Notice that there is no general guarantee that we establish or refute the safety

property. For instance, it may be the case that one of the loops does not terminate (although each one of their iterations does) or that we need to add predicates relating local variables of two different processes (something the predicate

abstraction framework we use cannot express).

6

Experimental results

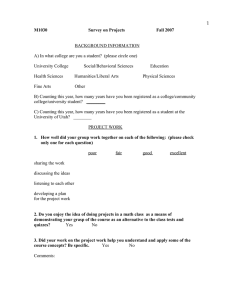

We report on experiments with our prototype Pacman (for predicated constrained monotonic abstraction). We have conducted our experiments on an Intel Xeon 2.67GHz processor with 8GB of RAM. To the best of our knowledge, the reported examples which need refinement of monotonic abstraction's preorder cannot be verified by previous techniques; either because the examples require stronger orderings than the usual preorder, or because they involve counting target predicates that are not expressed in terms of violations of program assertions.

All predicate abstraction predicates and counting invariants have been derived automatically. For the counting invariants, we implemented a thread modular analysis operating on the polyhedra numerical domain. This took less than 11 seconds for all the examples we report here. For each example, we report on the number of transitions and variables both in P and in the resulting counter machine. We also state the number of refinement steps and predicates automatically obtained in both refinement loops. We made use of several optimizations. For instance, we discarded boolean mappings corresponding to unsatisfiable combinations of predicates, and we used automatically generated invariants (such as (wait ≤ count) ∧ (wait ≥ 0) for the max example in Fig. 1) to filter the state space. Such heuristics dramatically help our state space exploration algorithms.

We report on experiments checking assertion violations in Tab. 1 and deadlock freedom in Tab. 2. For both cases we consider correct and buggy (by removing the barriers for instance) programs. Pacman establishes correctness and exhibits faulty runs as expected. The tuples under the P column respectively refer to the number of variables, procedures and transitions in the original program. The tuples under the ECM column refer to the number of counters, states and transitions in the extended counter machine.

Table 1. Checking assertion violation with Pacman

| example | P | ECM | outer loop num. | outer loop preds. | inner loop num. | inner loop preds. | time(s) | output |
|---|---|---|---|---|---|---|---|---|
| max | 5:2:8 | 18:16:104 | 4 | 5 | 6 | 2 | 192 | correct |
| max-bug | 5:2:8 | 18:8:55 | 3 | 4 | 5 | 2 | 106 | trace |
| max-nobar | 5:2:8 | 18:4:51 | 3 | 3 | 3 | 0 | 24 | trace |
| readers-writers | 3:3:10 | 9:64:121 | 5 | 6 | 5 | 0 | 38 | correct |
| readers-writers-bug | 3:3:10 | 9:7:77 | 3 | 3 | 3 | 0 | 11 | trace |
| parent-child | 2:3:10 | 9:16:48 | 3 | 4 | 5 | 2 | 73 | correct |
| parent-child-nobar | 2:3:10 | 9:1:16 | 2 | 1 | 2 | 0 | 3 | trace |
| simp-bar | 5:2:9 | 8:16:123 | 3 | 3 | 5 | 2 | 93 | correct |
| simp-nobar | 5:2:9 | 8:7:67 | 3 | 2 | 3 | 0 | 13 | trace |
| dynamic-barrier | 5:2:8 | 8:8:44 | 3 | 3 | 3 | 0 | 8 | correct |
| dynamic-barrier-bug | 5:2:8 | 8:1:14 | 2 | 1 | 2 | 0 | 3 | trace |
| as-many | 3:2:6 | 8:4:33 | 3 | 2 | 6 | 3 | 62 | correct |
| as-many-bug | 3:2:6 | 8:1:9 | 2 | 1 | 2 | 0 | 2 | trace |

Table 2. Checking deadlock with Pacman

| example | P | ECM | outer loop num. | outer loop preds. | inner loop num. | inner loop preds. | time(s) | output |
|---|---|---|---|---|---|---|---|---|
| bar-bug-no.1 | 4:2:7 | 7:16:66 | 4 | 4 | 6 | 2 | 27 | trace |
| bar-bug-no.2 | 4:3:8 | 9:16:95 | 4 | 3 | 4 | 0 | 33 | trace |
| bar-bug-no.3 | 3:2:6 | 6:16:78 | 5 | 4 | 6 | 1 | 21 | trace |
| correct-bar | 4:2:7 | 7:16:62 | 4 | 4 | 6 | 2 | 18 | correct |
| ddlck bar-loop | 4:2:10 | 8:8:63 | 3 | 2 | 3 | 0 | 16 | trace |
| no-ddlck bar-loop | 4:2:9 | 7:16:78 | 4 | 3 | 4 | 0 | 19 | correct |

7

Conclusions and Future Work

We have presented a technique, predicated constrained monotonic abstraction, for the automated verification of concurrent programs whose correctness depends on synchronization between arbitrarily many processes, for example by means of barriers implemented using integer counters and tests. We have introduced a new logic and an iterative method based on a combination of predicate, counter and monotonic abstraction. Our prototype implementation gave encouraging results and managed to automatically establish or refute program assertions and deadlock freedom. To the best of our knowledge, this is beyond the capabilities of current automatic verification techniques. Our current priority is to improve scalability by leveraging techniques such as cartesian and lazy abstraction, partial order reduction, or combining forward and backward explorations. We also aim to generalize to richer variable types.

References

1. P. Abdulla, F. Haziza, and L. Holík. All for the price of few. In R. Giacobazzi, J. Berdine, and I. Mastroeni, editors, Verification, Model Checking, and Abstract Interpretation, volume 7737 of Lecture Notes in Computer Science, pages 476–495. Springer Berlin Heidelberg, 2013.

2. P. A. Abdulla, K. Čerāns, B. Jonsson, and Y.-K. Tsay. Algorithmic analysis of programs with well quasi-ordered domains. Information and Computation, 160:109–127, 2000.

3. P. A. Abdulla, Y.-F. Chen, G. Delzanno, F. Haziza, C.-D. Hong, and A. Rezine. Constrained monotonic abstraction: A CEGAR for parameterized verification. In Proc. CONCUR 2010, 21st Int. Conf. on Concurrency Theory, pages 86–101, 2010.

4. E. Clarke, M. Talupur, and H. Veith. Environment abstraction for parameterized verification. In Proc. VMCAI '06, 7th Int. Conf. on Verification, Model Checking, and Abstract Interpretation, volume 3855 of Lecture Notes in Computer Science, pages 126–141, 2006.

5. A. F. Donaldson, A. Kaiser, D. Kroening, M. Tautschnig, and T. Wahl. Counterexample-guided abstraction refinement for symmetric concurrent programs. Formal Methods in System Design, 41(1):25–44, 2012.

6. A. Downey. The Little Book of SEMAPHORES (2nd Edition): The Ins and Outs of Concurrency Control and Common Mistakes. Createspace Independent Pub, 2009.

7. A. Finkel and P. Schnoebelen. Well-structured transition systems everywhere! Theoretical Computer Science, 256(1-2):63–92, 2001.

8. A. Kaiser, D. Kroening, and T. Wahl. Dynamic cutoff detection in parameterized concurrent programs. In Proceedings of CAV, volume 6174 of LNCS, pages 654–659. Springer, 2010.

9. A. Pnueli, J. Xu, and L. Zuck. Liveness with (0,1,infinity)-counter abstraction. In Proc. 14th Int. Conf. on Computer Aided Verification, volume 2404 of Lecture Notes in Computer Science, 2002.

10. A. Rezine. Parameterized Systems: Generalizing and Simplifying Automatic Verification. PhD thesis, Uppsala University, 2008.

A

Appendix

In this section the examples of Sec.6 are demonstrated. For simplicity the property which is going to be checked in the input program is reformulated as a

statement that goes to lcerr which denotes the error location.

A.1

Readers and Writers

int readcount := 0

bool lock := tt, writing := ff

main :

lcent I lcent : spawn(writer)

lcent I lcent : readcount = 0 ∧ lock; spawn(reader); readcount := readcount + 1

lcent I lcent : readcount != 0; spawn(reader); readcount := readcount + 1

reader :

lcent I lcerr : writing

lcent I lcext : readcount = 1; readcount := readcount − 1; lock := tt

lcent I lcext : readcount != 1; readcount := readcount − 1

writer :

lcent I lc1 : lock; lock := ff

lc1 I lc2 : writing := tt

lc2 I lc3 : writing := ff

lc3 I lcext : lock := tt

Fig. 8. The readers and writers example.

The readers and writers problem is a classical problem. In this problem there is a resource which is shared between several processes. There are two types of processes: readers, which only read from the resource, and writers, which read from and write to it. At any time there can either exist several readers or only one writer. Readers and writers cannot exist at the same time.

In Fig. 8 a solution to the readers and writers problem with preference to readers is shown. In this approach readers wait until there is no writer in the critical section and then get the lock that protects that section. We simulate a lock with a boolean variable lock. Considering the fact that in our model the transitions are atomic, such a simulation is sound. When a writer wants to access the critical section, it first waits for the lock and then gets it (by setting it to ff). Before starting writing, a writer sets a flag writing that we check later on in a reader process. At the end a writer unsets writing and frees lock.

An arbitrary number of reader processes can also be spawned. The number of readers is kept track of by the variable readcount. When the first reader is going to be spawned (i.e. readcount = 0), flag lock must hold. readcount is incremented after spawning each reader. Whenever a reader starts execution, it checks flag writing and goes to error if it is set, because this shows that a writer is writing to the shared resource at the same time. When a reader wants to exit, it decrements readcount. The last reader frees the lock.

In this example we need a counting invariant to capture the relation between the number of readers, i.e. readcount, and the number of processes in different locations of process reader.

A.2

Parent and Child

int i := 0

bool allocated := ff

main :

lcent I lcent : spawn(parent); i := i + 1

lcent I lcent : join(parent); i := i − 1

parent :

lcent I lc1 : allocated := tt

lc1 I lc2 : spawn(child)

lc2 I lc3 : join(child)

lc3 I lcext : i = 1; allocated := ff

lc1 I lc3 : tt

child :

lcent I lcext : allocated

lcent I lcerr : ¬allocated

Fig. 9. The Parent and Child example.

In the example of Fig.9 a sample nested spawn/join is demonstrated. In

this example two types of processes exist. One is parent which is spawned by

main and the other one is called child which is spawned by parent. The shared

variable i is initially 0 and is incremented and decremented respectively when

a parent process is spawned and joined. A parent process first sets the shared

flag allocated and then either spawns and joins a child process or just moves

from lc1 to lc3 without doing anything. The parent that sees i = 1 unsets the

flag allocated. A child process goes to error if allocated is not set. This example

is error free because one can see that allocated is unset when only one parent

exists and that parent has already joined its child or did not spawn any child,

i.e. no child exists. Such relation between number of child and parent processes

as well as variable i can only be captured by appropriate counting invariants

and predicate abstraction is incapable of that.

A.3

Simple Barrier

int wait := 0, count := 0

bool enough := ff, flag := ∗, barrierOpen := ff

main :

lcent I lc1 : ¬enough; spawn(proc); count := count + 1

lc1 I lcent : enough := ff

lc1 I lcent : enough := tt

proc :

lcent I lc1 : flag := tt

lc1 I lc2 : flag := ff

lc2 I lc3 : wait := wait + 1

lc3 I lc4 : (enough ∧ wait = count); barrierOpen := tt; wait := wait − 1

lc3 I lc4 : barrierOpen; wait := wait − 1

lc4 I lcerr : flag

Fig. 10. Simple Barrier example.

In the example of Fig. 10 a simple application of a barrier is shown. The main process spawns an arbitrary number of procs and increments a shared variable count that is initially zero and counts the number of procs in the program before shared flag enough is set. Each proc first sets and then unsets shared flag flag. The statements in lc2 to lc4 simulate a barrier. Each proc first increments a shared variable wait which is initially zero. Then the first proc that finds out that the condition (enough ∧ wait = count) holds sets a shared flag barrierOpen and goes to lc4. Other procs that want to traverse the barrier can take the transition lc3 I lc4 : barrierOpen. After the barrier a proc goes to error if flag is set. One can see that the error state is not reachable in this program because all procs have to unset flag before any of them can traverse the barrier. To prove that this example is error free, it must be shown that the barrier implementation does not let any process be in locations lcent, lc1 or lc2 when there are processes after the barrier, i.e. in locations lc4 and lcerr. Proving such a property requires that the relation between the number of processes in program locations and the variables wait and count be kept. This is possible when we use counting invariants as introduced in this paper.
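The argument above can be checked on small instances by explicit state exploration. The sketch below is not the parameterized technique of this paper: it enumerates the states of the program of Fig. 10 for a bounded number of procs, treating each transition as atomic. The function name, the bound `max_procs`, and the `with_barrier=False` variant (which lets procs pass lc3 unconditionally and is not necessarily the simp-nobar benchmark) are assumptions made for illustration.

```python
def error_reachable(max_procs, with_barrier=True):
    """Can some proc take lc4 -> lcerr with at most max_procs procs?"""
    # State: (main location, enough, flag, barrierOpen, wait, count, procs)
    # flag is initially nondeterministic, so both values are initial.
    init = [("ent", False, f, False, 0, 0, ()) for f in (False, True)]
    seen, stack = set(init), list(init)
    while stack:
        main, enough, flag, is_open, wait, count, procs = stack.pop()
        succs = []
        if main == "ent" and not enough and count < max_procs:
            succs.append(("1", enough, flag, is_open, wait, count + 1,
                          tuple(sorted(procs + ("ent",)))))
        if main == "1":
            for new_enough in (False, True):
                succs.append(("ent", new_enough, flag, is_open, wait,
                              count, procs))
        for i, lc in enumerate(procs):
            rest = procs[:i] + procs[i + 1:]

            def step(new_lc, flag=flag, is_open=is_open, wait=wait):
                succs.append((main, enough, flag, is_open, wait, count,
                              tuple(sorted(rest + (new_lc,)))))

            if lc == "ent":
                step("1", flag=True)
            elif lc == "1":
                step("2", flag=False)
            elif lc == "2":
                step("3", wait=wait + 1)
            elif lc == "3":
                if not with_barrier:
                    step("4", wait=wait - 1)
                else:
                    if enough and wait == count:
                        step("4", is_open=True, wait=wait - 1)
                    if is_open:
                        step("4", wait=wait - 1)
            elif lc == "4" and flag:
                return True  # guard of lc4 -> lcerr is enabled
        for s in succs:
            if s not in seen:
                seen.add(s)
                stack.append(s)
    return False
```

For instance, `error_reachable(3)` explores all interleavings with up to three procs. Such a bounded check says nothing about larger numbers of procs, which is what the counting invariants are needed for.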

A.4

Dynamic Barrier

In a dynamic barrier the number of processes that have to wait at a barrier can change. The way we implemented barriers in this paper makes it easy to capture characteristics of such barriers. In the example of Fig. 11 the variables corresponding to the barrier, i.e. count and wait, are respectively set to N and 0 in main's first statement. Then procs are spawned as long as the counter i is not equal to N, which denotes the total number of procs in the system. Each created proc decrements count and by doing so it decrements the number of processes that have to wait at the barrier. In this example the barrier is in lc2 of main and can be traversed as usual when wait = count holds and no more procs are going to be spawned, i.e. i = N. Then main can non-deterministically join a proc or set flag done if no more procs exist.

int N := ∗, wait := ∗, count := ∗, i := 0

bool done := ff

main :

lcent I lc1 : count, wait := N, 0

lc1 I lc1 : i != N; spawn(proc); i := i + 1

lc2 I lc3 : i = N ∧ wait = count

lc3 I lc3 : join(proc); i := i − 1

lc3 I lc4 : i = 0; done := tt

proc :

lcent I lcext : count := count − 1

lcent I lcerr : done

Fig. 11. Dynamic barrier

A.5

As Many

int count1 := 0, count2 := 0

bool enough := ff

main :

lcent I lc1 : spawn(proc1); count1 := count1 + 1

lc1 I lcent : spawn(proc2); count2 := count2 + 1

lcent I lc2 : enough := tt

proc1 :

lcent I lc1 : enough

lc1 I lcerr : count1 ≠ count2

proc2 :

lcent I lc1 : enough

Fig. 12. As Many

In the example of Fig.12 process main spawns as many processes proc1 as

proc2 and it increments their corresponding counters count1 and count2 accordingly. At some point main sets flag enough and does not spawn any other

processes. Processes in proc1 and proc2 start execution after enough is set. A

process in proc1 goes to error location if count1 ≠ count2. One can see that error

is not reachable because the numbers of processes in the two groups are the same

and respective counter variables are initially zero and are incremented with each

spawn to represent the number of processes. To verify this example obviously the

relation between count1, count2 and number of processes in different locations

of proc1 and proc2 must be captured.

A.6

Barriers causing deadlock

int wait := 0, count := 0, open := 0

bool proceed := ff

main :

lcent I lcent : spawn(proc); count := count + 1

lcent I lc1 : proceed := tt

proc :

lcent I lc1 : wait := wait + 1

lc1 I lc2 : proceed ∧ wait = count; open := open + 1

lc1 I lc2 : proceed ∧ wait ≠ count

lc2 I lc3 : open > 0; open := open − 1

lc2 I lcerr : open = 0 ∧ (proc@lcent)# = 0 ∧ (proc@lc1)# = 0

Fig. 13. Buggy Barrier No.1

In Fig. 13 a buggy implementation of a barrier is demonstrated. This example is based on an example in [6]. The barrier implementation in the book is based on semaphores and in our example the shared variable open, which is initialized to zero, plays the role of a semaphore. A buggy barrier is implemented in program locations lcent to lc3. First process main spawns a number of proc processes, increments the shared variable count which is supposed to count the number of procs, and at the end sets flag proceed. A proc increments shared variable wait, which is meant to count the number of procs accumulated at the barrier. procs must wait for the flag proceed to be set before they can proceed to lc2. Each proc that finds out that condition proceed ∧ wait = count holds increments open. This lets another process which is waiting at lc2 take the transition lc2 I lc3, i.e. traverse the barrier. A deadlock can occur in this implementation when one or more processes are waiting for the condition open > 0 to hold, but there is no process left at lcent or lc1 of proc which may eventually increment open. In this case a process goes to the error state.

int wait := 0, count := 0

bool proceed := ff

main :

lcent I lcent : spawn(proc1); count := count + 1

lcent I lcent : spawn(proc2)

lcent I lcext : proceed := tt

proc1 :

lcent I lc1 : wait := wait + 1

lc1 I lc2 : proceed ∧ wait = count

lc1 I lcerr : proceed ∧ wait ≠ count ∧ (proc1@lcent)# = 0

proc2 :

lcent I lc1 : wait > 0; wait := wait − 1

Fig. 14. Buggy Barrier No.2

In Fig. 14 another buggy implementation of a barrier is demonstrated which makes deadlock possible. Process main non-deterministically either spawns a proc1 and increments count, or spawns a proc2, or sets flag proceed. proc1 contains a barrier. Each process in proc1 increments wait and then waits at lc1 for the barrier condition to hold. A proc2 decrements wait if wait > 0. A deadlock happens when at least one proc2 decrements wait, which causes the condition in lc1 I lc2 of proc1 to never hold. We check for a deadlock situation in lc1 I lcerr of proc1, which corresponds to the situation where proceed holds and wait ≠ count, so the barrier condition does not hold, but there exists no process in lcent of proc1 that can increment wait.

int wait := 0, count := 0

bool proceed := ff

main :

lcent I lcent : spawn(proc); count := count + 1

lcent I lc1 : proceed := tt

proc :

lcent I lc1 : wait := wait + 1

lcent I lc1 : wait > 0; wait := wait − 1

lc1 I lc2 : proceed ∧ wait = count

lc1 I lcerr : proceed ∧ wait ≠ count ∧ (proc@lcent)# = 0

Fig. 15. Buggy Barrier No.3

The buggy implementation of a barrier in Fig. 15 is similar to Fig. 14, except that this time the proc itself may decrement wait and thus make the barrier condition proceed ∧ wait = count never hold. A deadlock situation is detected in the same way as in Fig. 14.

int wait := 0, count := 0, open := 0

bool proceed := ff

main :

lcent I lcent : spawn(proc); count := count + 1

lcent I lc1 : proceed := tt

proc :

lcent I lc1 : wait := wait + 1

lc1 I lc2 : proceed ∧ wait = count; open := open + 1

lc1 I lc2 : proceed ∧ wait ≠ count

lc2 I lc3 : open >= 1

lc3 I lc4 : wait := wait − 1

lc4 I lcerr : wait = 0 ∧ open = 0

lc4 I lcent : wait = 0 ∧ open >= 1; open := open − 1

lc4 I lcent : wait ≠ 0

Fig. 16. Buggy Barrier in Loop

The example in Fig. 16 is based on an example in [6]. It demonstrates a buggy implementation of a reusable barrier. Reusable barriers are needed when a barrier is inside a loop. In Fig. 16 the loop is formed by backward edges from lc4 to lcent. Process main spawns proc and increments count accordingly. Program locations lcent to lc3 in proc correspond to the barrier implementation and are similar to the example in Fig. 13, and the other transitions make the barrier ready to be reused in the next loop iteration. The example is buggy first because deadlock is possible and second because a process can continue to the next loop iteration while others are still in previous iterations. Deadlock will happen when processes are not able to proceed from lc4 because wait = 0 but open = 0, thus they can never take any of the lc4 I lcent edges. For detecting such a deadlock scenario it is essential to capture the relation between shared variables count and wait and the number of procs in different locations.