Document 13075106

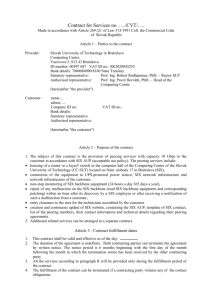
