C4 Software Technology Reference Guide - A Prototype

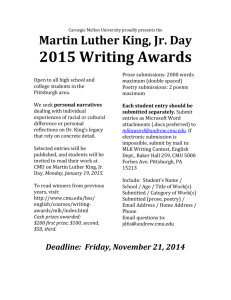
