Tucker Chambers CS105 Project 12/10/2010 Data Mining for Insights Into Movie-Advertisement Placement

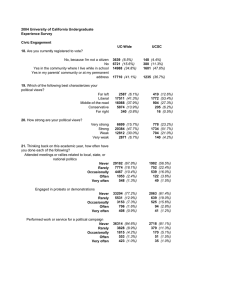

advertisement

Tucker Chambers CS105 Project 12/10/2010 Data Mining for Insights Into Movie-Advertisement Placement I. INTRODUCTION For my project I performed data-mining techniques on a dataset from the perspective a movie producer to discover the best places to put movie ads. I analyzed a several attributes regarding media habits (radio, internet, TV, newspaper, etc.) and their relation to the frequency that people go to the movies. By analyzing this data it could hypothetically enable a movie producer to discover the types of media exposure that predict frequent movie-going, which would allow more effective and efficient ad placement. To make my analysis, I used Weka, a python program, and multiple data graphics. II. DATASET DESCRIPTION The dataset I analyzed is the Fast Food dataset from STARS website. It can be obtained at the following URL: http://stars.ac.uk/showSubject1.php?subjectID=5. This dataset was originally designed to analyze the factors that may affect the amount of fast food that people eat per month, such as exposure to different kinds of media and TV watching habits. I didn’t find this data arrangement to be particularly interesting, so I modified the data to analyze the factors which affect how often people attend movies. III. DATA PREPARATION 1. Manual Modifications: I began by making several manual modifications to the original Fast Food dataset. It was necessary to make several high-level changes to the layout and structure of the database that were not feasible to include in my automated data preparation process. Removed attributes that only applied to original Fast Food dataset: All fast food / month, First mentioned, Last bought, Peer influence, Brand importance Removed Household income. Although this attribute may have been useful and predictive, I removed it for two reasons: i. From the perspective a movie producer, when ads are placed you do not necessarily know the income of those people which are exposed to the ads, so it isn’t helpful to the goals of my project if this is predictive. ii. This attribute had a very high number of missing values; including income would have forced me to sacrifice a large portion of my data by removing such rows Removed Watch TV Cur Aff. I am not sure what type of show Cur Aff refers to. It might be “current affairs,” but even so I’m not sure what type of show would qualify as a “current affairs” show. There are no explanations of the attributes on the source website, so there’s no way to know. I don’t want to include anything which is ambiguous or confusing as that would make it difficult for me to properly analyze my results. Removed Watch morning TV for similar reasons. Does this refer to watching TV in the morning hours, watching a morning news show, or watching morning talk shows? This is unclear and could signify a number of different things. Again, I don’t want to include anything that is ambiguous. Removed the ID attribute. This is simply a numeric identifier of the row and would be in no way predictive of movie-going – it would only confuse my algorithms Shifted the Go to movies to the last column since it is my output See Appendix A for a list of the remaining attributes 2. Automated Data Preparation: I used a Python program to remove lines with missing or problematic data. There were many problematic lines, and it would have been tedious and timeconsuming to remove them manually, so it made sense to automate this process. All lines with empty or unknown values were removed (the program searched for any instances of “*”, “Don’t know”, “Unknown” and zero-length strings) All apostrophes were replaced with nothing, as this is problematic in Weka Replaced all instances of “Not at all” with “Never” to make it easier to handle After this process was completed, there were 385 lines remaining. See Appendix B for the Python data preparation program that was used 3. Data-Mining Preparation: The final step in the preparation was to prepare the dataset for datamining in Weka. After ensuring that my dataset was in a CSV file, I loaded the data into Weka and performed the following: Randomized the data using Weka’s randomize algorithm – this ensures a good balance across the data so that the results are not lopsided once I split off the test examples. Split the data into training and test examples. I used a 75/25 split (i.e. I used about 75% of the data for training examples, and about 25% for test examples). This resulted in 290 training examples and 95 test examples. IV. DATA ANALYSIS The goal of the analysis was to develop a model that accurately predicts the movie-going behavior of people based on media exposure and several demographic attributes. This analysis could potentially be used by movie producers to determine the most effective types of media, places, and demographic groups to advertise their movies to. To find the best model, I explored a number of different classification-learning algorithms. The following tables summarize the results of each algorithm on the training and test data: Algorithm PART OneR NBTree JRip J48 Training Data Accuracy 87.93% 67.93% 78.28% 68.97% 77.24% Test Data Accuracy 54.74% 58.95% 62.11% 64.21% 65.26% PART o Explanation of Algorithm: This algorithm creates a decision list using the separate-andconquer strategy. The decision list is often very long and complicated, which can lead to high accuracy on training data, but low accuracy on new examples. o Analysis: On the training data PART created a very long and complicated decision list based on many attributes which led to overfitting. The model created by PART was highly accurate on the test data (87.93%), but due to overfitting it was the most inaccurate on the test examples (54.74%). OneR o Explanation of Algorithm: This algorithm finds the one attribute that makes the fewest prediction errors (i.e. the attribute that on its own is the most predictive) and uses only that one attribute to predict the output. o Analysis: On the training data OneR found that the attribute with the least errors was (interestingly enough) Pass billboards. This model predicted that if you pass billboards regularly or occasionally, you will see movies occasionally, and if you never pass billboards, you will never see movies. This model had 67.93% accuracy on the test data and 58.95% accuracy on the training data. NBTree o Explanation of Algorithm: This algorithm generates a decision tree using the Naive Bayes classifiers at the each of the leaves. o Analysis: On the training data, the model generated by NBTree had a high accuracy of 78.28%, but on the test data it performed less well (probably due to overfitting) with an accuracy of 62.11%. JRip o Explanation of Algorithm: This algorithm implements a propositional rule learner, and then executes the Repeated Incremental Pruning to Produce Error Reduction (RIPPER). Basically JRip creates a long and complicated set of accurate rules, and then “prunes” a number of rules from the list to prevent overfitting and improve accuracy and previously unseen examples. o Analysis: The JRip algorithm produced a surprisingly simple model with two rules: if Age = 55-70 and Watch TV cooking = Yes then Go to movies=Never else Go to movies=Occasionally This model was 68.97% accurate on the test data, and 64.21% accurate on the training data. Of all of the algorithms, JRip lost the least accuracy when moving from training to test examples. Unfortunately the test accuracy was still too low to be chosen. J48 o Explanation of Algorithm: Like JRip, this model generates a decision tree, and then prunes it to prevent overfitting. However, compared with JRip, J48 seems to do much less pruning (i.e. the final tree is more complicated) since J48 ended up with 33 leaves, and JRip ended up with just 2 rules. o Analysis: The pruned tree produced by J48 had 33 leaves, with an overall size of 48. I found that the best leaves (i.e. the attributes with the highest goodness score) chosen by this algorithm were very interesting. The first two leaves chosen were Internet and Education, following by Watch TV music and Pass billboards. This model had 77.24% accuracy on the training set, and 65.26% accuracy on the test set. This was the highest accuracy on the test examples. See Appendix C for the full decision tree. V. RESULTS In my analysis of the data I used models produced by the PART, OneR, NBTree, JRip, and J48 algorithms. The specific analyses of these algorithms are described above, so I have provided a brief, general summary of all five algorithms, and then an in depth-discussion of the chosen algorithm J48. Algorithm Results The OneR algorithm produced a model that was far too simple (OneR is only based on one rule) to generalize well with unseen examples. The Pass billboards attribute on its own is not able to accurately predict unseen test examples. The PART and NBTree models both used overly complicated and precise decisions lists/trees that worked well on the training data but did not generalize well on the test data. Both of these models seemed to overfit to the training data, and thus were much less accurate on the test data. The JRip and J48 algorithms both produced decision lists/trees and then pruned them to prevent overfitting. Of all the models, these two lost the least accuracy when moving from the training set to the test set (most likely due to this careful pruning that prevents overcomplicating). However, in the end the J48 algorithm ended up being the most accurate on the test set by a small margin, with an accuracy of 65.26% J48 Results and Implementation Since the J48 algorithm had the highest accuracy on the test data, it is the best classificationlearning algorithm to use for the purposes of this project. According to this model, the best attributes (i.e. highest goodness score) to use to predict moving going include internet usage, followed by type of education, and then Watch TV-Music, Pass billboards, and hours of TV watched on weekdays/weekends (see graphic below and Appendix C for the full tree). For the most part, the fact that these were chosen as the best attributes does make some intuitive sense in the real world. For example, the data suggests that internet users are much more likely to see movies than non-users. If you use the internet this may suggest that you are willing and able to expose yourself to various types of media such as movies. However, several of the chosen attributes chosen are quite interesting and do not make much intuitive sense. For example, the data suggests that your exposure to music television and billboards are highly decisive factors in whether you see movies. There is no clear explanation for this. Furthermore, it is surprising that age and sex are not even present in the J48 tree – it would have made intuitive sense if these factors predicted movie-going (it’s a common conception that the young see more movies). To illustrate the potential usefulness of this algorithm in predicting movie-going, I have created several graphical representations for some of the most useful attributes according to the J48 model. With these graphics, you can see how powerful some of these attributes are for movie-going predictions. Internet Usage – Movie Frequency: This suggests that internet usage predicts at least occasional movie-going, while non-users do not go to movies as often. This could potentially inform a movieproducer that the internet is an effective medium for advertising a movie. Hours Watching TV – Movie Frequency: This suggests that the more TV that someone watches, the more likely they are to see movies (at least occasionally), while those who watch less TV (or none at all) are less likely to see movies. This could potentially inform a movie-producer that television is an effective medium for movie advertising, especially for those who watch TV frequently. Education – Movie Frequency: This suggests that those who have only completed Secondary education and/or Tech-college are more likely to see movies at least occasionally, while those who have completed graduate or post-graduate work may be less likely to see movies. This could inform a movieproducer that it may be more effective to direct advertising towards movie-goers with less education. Pass Billboards – Movie Frequency: This suggests that those who pass billboards could be more likely to see movies (at least occasionally) than those who do not pass billboards. This could inform a movie producer that billboard ads may be effective to reach consumers who occasionally go to see movies. VI. CONCLUSION Classification learning algorithms can be a very useful tool in many applications such as movie advertisements. In this project I analyzed a dataset of attributes regarding movie-goers and determined the best algorithm to predict movie-going behavior. In my analysis I determined that the pruned decision-list algorithm J48 creates the most accurate and effective model for predicting whether someone goes to movies regularly, occasionally, or never. Further, through results of the J48 algorithm, and through the use of visuals, I discovered that internet usage, hours watching TV, education and billboard exposure may be predictive factors for people that go to movies at least occasionally. Datamining projects such as this could be invaluable in applications such as movie advertising, as they allow companies to use their limited resources to reach consumers in the most effective way possible. The key point to remember that no model is perfect, and that these models and predictive attributes are merely suggestions – in real life there is always variation beyond what is predicted. Yet in the expensive world of movie-making, every dollar counts, and a suggestion is better than nothing. APPENDIX A: Dataset Attributes All attributes are nominal AGE SEX INTERNET REGION EDUCATION READ NEWSPAPER PASS BILLBOARDS LISTEN TO RADIO WATCH TV NEWS WATCH TV SOAPS WATCH TV SPORT WATCH TV CHAT WATCH TV QUIZ WATCH TV DRAMA WATCH TV GARDEN WATCH TV COOKING WATCH TV COMEDY WATCH TV DOCUMENTARY WATCH TV FILMS WATCH TV REALITY WATCH TV MUSIC TV HRS WEEKDAY TV HRS WEEKEND GO TO MOVIES (15-17, 18-24, 25-35, 36-54, 55-70) (Male/Female) (User/Non-user) (North/South/Midlands) (Secondary/Tech college/Graduate/Post-graduate) (Regularly/Occasionally/Never) (Regularly/Occasionally/Never) (Regularly/Occasionally/Never) (Yes/No) (Yes/No) (Yes/No) (Yes/No) (Yes/No) (Yes/No) (Yes/No) (Yes/No) (Yes/No) (Yes/No) (Yes/No) (Yes/No) (Yes/No) (Under 1, 1 to 2, 3 to 5, 5 to 10, Over 10) (Under 1, 1 to 2, 3 to 5, 5 to 10, Over 10) (Regularly/Occasionally/Never) APPENDIX B: Data Preparation Program # # # # # perpareData.py Tucker Chambers (tuckerc@bu.edu) CS105 Final Project 12/10/2010 Prepares a dataset for data-mining in Weka import string inFileName = "FastFood.csv" outFileName = "GoToMovies.csv" # This is the original Fast Food dataset food_file = open(inFileName, 'r') # This will be the new movies dataset movie_file = open(outFileName, 'w') for line in food_file: testLine = string.replace(line.lower(), " ", "") # Exclude lines with missing data if not (len(testLine) == 0 or "*" in testLine or "don'tknow" in testLine): # Remove apostrophies that cause errors line = string.replace(line, "'", "") # Replace "not at all" with "never" -- easier to use line = string.replace(line, "Not at all", "Never") # Write the modified line to movies file movie_file.write(line) food_file.close() movie_file.close() print "Data preparation complete.", outFileName, "is ready for analysis." APPENDIX C: J48 Pruned Tree Internet = User: Occasionally (184.0/52.0) Internet = Non-user | Education = Secondary | | Watch TV music = No | | | TV hrs weekend = 3 to 5: Never (12.0/4.0) | | | TV hrs weekend = 5 to 10 | | | | Region = North | | | | | Watch TV comedy = Yes: Occasionally (4.0) | | | | | Watch TV comedy = No: Never (3.0) | | | | Region = Midlands: Occasionally (4.0) | | | | Region = South: Never (5.0/2.0) | | | TV hrs weekend = 1 to 2: Never (2.0) | | | TV hrs weekend = 2 to 3: Occasionally (5.0) | | | TV hrs weekend = Over 10: Never (3.0/1.0) | | | TV hrs weekend = Under 1: Never (1.0) | | Watch TV music = Yes | | | Watch TV documentary = Yes | | | | Watch TV garden = Yes: Occasionally (14.0) | | | | Watch TV garden = No | | | | | Watch TV drama = Yes: Occasionally (3.0) | | | | | Watch TV drama = No: Regularly (3.0) | | | Watch TV documentary = No | | | | Watch TV garden = Yes: Never (2.0) | | | | Watch TV garden = No | | | | | Listen to radio = Regularly: Occasionally (3.0) | | | | | Listen to radio = Never: Never (2.0) | | | | | Listen to radio = Occasionally: Occasionally (6.0/1.0) | Education = Graduate: Occasionally (1.0) | Education = Tech college | | Pass billboards = Regularly | | | Watch TV reality = No: Occasionally (12.0/2.0) | | | Watch TV reality = Yes: Regularly (6.0/2.0) | | Pass billboards = Occasionally | | | TV hrs weekend = 3 to 5: Occasionally (3.0) | | | TV hrs weekend = 5 to 10 | | | | TV hrs weekday = 5 to 10: Never (4.0/1.0) | | | | TV hrs weekday = 3 to 5: Occasionally (0.0) | | | | TV hrs weekday = 2 to 3: Occasionally (0.0) | | | | TV hrs weekday = 1 to 2: Occasionally (0.0) | | | | TV hrs weekday = Over 10: Occasionally (2.0) | | | | TV hrs weekday = Under 1: Occasionally (0.0) | | | TV hrs weekend = 1 to 2: Occasionally (0.0) | | | TV hrs weekend = 2 to 3: Occasionally (0.0) | | | TV hrs weekend = Over 10: Occasionally (0.0) | | | TV hrs weekend = Under 1: Occasionally (0.0) | | Pass billboards = Never: Occasionally (2.0/1.0) | Education = Postgraduate: Never (4.0) Number of Leaves: 33 Size of the tree: 48