ITERATIVE METHODS AND REGULARIZATION IN THE DESIGN OF FAST ALGORITHMS


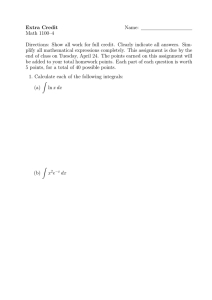

A unified framework for optimization and online learning beyond Multiplicative Weight Updates

Lorenzo Orecchia, MIT Math

Talk Outline: A Tale of Two Halves

PART 1: REGULARIZATION AND ITERATIVE TECHNIQUES FOR ONLINE LEARNING
• Online Linear Optimization
• Online Linear Optimization over Simplex and Multiplicative Weight Updates (MWUs)
• A Regularization Framework to generalize MWUs: Follow the Regularized Leader
MESSAGE: REGULARIZATION IS A POWERFUL ALGORITHMIC TECHNIQUE
Optimization: Regularized Updates. Online Learning: Multiplicative Weight Updates (MWUs).

PART 2: NON-SMOOTH OPTIMIZATION AND FAST ALGORITHMS FOR MAXFLOW
• Non-smooth vs Smooth Convex Optimization
• Non-smooth Convex Optimization reduces to Online Linear Optimization
• Application: Understanding Undirected Maxflow algorithms based on MWUs
MESSAGE: FASTEST ALGORITHMS REQUIRE PRIMAL-DUAL APPROACH

TOC Applications of MWUs

Fast Algorithms for solving specific LPs and SDPs:
• Maximum Flow problems [PST], [GK], [F], [CKMST]
• Covering-packing problems [PST]
• Oblivious routing [R], [M]
Fast Approximation Algorithms based on LP and SDP relaxations:
• Maxcut [AK]
• Graph Partitioning Problems [AK], [S], [OSV]
Proof Technique:
• Hardcore Lemma [BHK]
• QIP = PSPACE [W]
• Derandomization [Y]
… and more

Machine Learning meets Optimization meets TCS

These techniques have been rediscovered multiple times in different fields: Machine Learning, Convex Optimization, TCS.
Three surveys emphasizing the different viewpoints and literatures:
1) ML: Prediction, Learning, and Games by Cesa-Bianchi and Lugosi
2) Optimization: Lectures on Modern Convex Optimization by Ben-Tal and Nemirovski
3) TCS: The Multiplicative Weights Update Method: a Meta-Algorithm and Applications by Arora, Hazan and Kale

REGULARIZATION 101

What is Regularization?

Regularization is a fundamental technique in optimization. Adding a regularizer $F$ with parameter $\lambda > 0$ turns an OPTIMIZATION PROBLEM into a WELL-BEHAVED OPTIMIZATION PROBLEM:
• Stable optimum
• Unique optimal solution
• Smoothness conditions
• …
Benefits of Regularization in Learning and Statistics:
• Prevents overfitting
• Increases stability
• Decreases sensitivity to random noise

Example: Regularization Helps Stability

Consider a convex set $S \subset \mathbb{R}^n$ and a linear optimization problem:
$$f(c) = \arg\min_{x \in S} c^T x$$
The optimal solution $f(c)$ may be very unstable under perturbation of $c$:
$$\|c' - c\| \le \delta \quad \text{and} \quad \|f(c') - f(c)\| \gg \delta$$

Now consider the same convex set $S \subset \mathbb{R}^n$ and a regularized linear optimization problem
$$f(c) = \arg\min_{x \in S} c^T x + F(x)$$
where $F$ is $\sigma$-strongly convex. Then:
$$\|c' - c\| \le \delta \quad \text{implies} \quad \|f(c') - f(c)\| \le \frac{\delta}{\sigma}$$
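The stability example above can be checked numerically. The following is a minimal sketch, assuming $S$ is the probability simplex and $F$ is the negative entropy (which is 1-strongly convex w.r.t. the $\ell_1$ norm, so $\sigma = 1$); the helper names and the two cost vectors are illustrative choices, not part of the talk.

```python
import math

def linear_argmin_simplex(c):
    """Unregularized problem: argmin over the simplex of c^T x is a vertex."""
    best = min(range(len(c)), key=lambda i: c[i])
    return [1.0 if i == best else 0.0 for i in range(len(c))]

def entropy_regularized_argmin(c):
    """argmin over the simplex of c^T x + sum_i x_i log x_i, which is softmax(-c)."""
    shift = min(c)  # shifting c does not change the minimizer; it avoids overflow
    weights = [math.exp(-(ci - shift)) for ci in c]
    total = sum(weights)
    return [w / total for w in weights]

def l1_distance(x, y):
    return sum(abs(a - b) for a, b in zip(x, y))

# Two cost vectors that differ by delta = 1e-3 in the l_infinity norm (assumed example).
c = [0.0, 1e-3]
c_perturbed = [1e-3, 0.0]
delta = max(abs(a - b) for a, b in zip(c, c_perturbed))

# Without regularization the optimum jumps from one vertex to the other.
unstable_move = l1_distance(linear_argmin_simplex(c),
                            linear_argmin_simplex(c_perturbed))
# With the entropy regularizer the optimum moves by at most delta / sigma = delta.
stable_move = l1_distance(entropy_regularized_argmin(c),
                          entropy_regularized_argmin(c_perturbed))
print(unstable_move, stable_move, delta)
```

In this sketch the perturbation is measured in $\ell_\infty$ and the movement of the optimum in $\ell_1$, the dual pair that matches the simplex setting used later in the talk.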
ONLINE LINEAR OPTIMIZATION AND MULTIPLICATIVE WEIGHT UPDATES

Online Linear Minimization

SETUP: Convex set $X \subseteq \mathbb{R}^n$, generic norm $\|\cdot\|$, repeated game over $T$ rounds. At round $t$:
• ALGORITHM plays the current solution $x^{(t)} \in X$.
• ADVERSARY reveals the current linear objective, a loss vector $\ell^{(t)} \in \mathbb{R}^n$ with $\|\ell^{(t)}\|_* \le \rho$.
• The algorithm's loss is $\ell^{(t)T} x^{(t)}$.
• ALGORITHM moves to the updated solution $x^{(t+1)} \in X$, and the ADVERSARY picks a new loss vector $\ell^{(t+1)} \in \mathbb{R}^n$ with $\|\ell^{(t+1)}\|_* \le \rho$.

GOAL: update $x^{(t)}$ to minimize regret
$$\frac{1}{T} \sum_{t=1}^{T} \ell^{(t)T} x^{(t)} \;-\; \min_{x \in X} \frac{1}{T} \sum_{t=1}^{T} \ell^{(t)T} x$$
The first term is the average algorithm's loss $\hat{L}$; the second is the a posteriori optimum $L^\star$.

Simplex Case: Learning with Experts

SETUP: Simplex $X \subseteq \mathbb{R}^n$ under the $\ell_1$ norm. At round $t$:
• ALGORITHM plays $p^{(t)}$, a distribution over dimensions, i.e. experts.
• ADVERSARY reveals the experts' losses $\ell^{(t)}$ with $\|\ell^{(t)}\|_\infty \le \rho$.
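For the simplex case, the regret in the GOAL above is easy to evaluate, because a linear function over the simplex is minimized at a vertex, i.e. at a single best expert. A small sketch under that observation; the function name `average_regret` and the toy data are assumptions made for illustration.

```python
def average_regret(plays, losses):
    """Average regret (L_hat - L_star) of a sequence of distributions over experts.

    plays[t]  : distribution p^(t) over the n experts
    losses[t] : loss vector l^(t) revealed at round t
    """
    T = len(losses)
    n = len(losses[0])
    # Average loss of the algorithm: (1/T) * sum_t <l^(t), p^(t)>.
    algorithm_loss = sum(
        sum(p_i * l_i for p_i, l_i in zip(p, l)) for p, l in zip(plays, losses)
    ) / T
    # A posteriori optimum: the best single expert in hindsight.
    best_fixed = min(sum(l[i] for l in losses) for i in range(n)) / T
    return algorithm_loss - best_fixed

# Toy example: always play the uniform distribution over two experts.
toy_losses = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
toy_plays = [[0.5, 0.5]] * 3
toy_regret = average_regret(toy_plays, toy_losses)
print(toy_regret)
```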
Algorithm's loss at round $t$, for the distribution $p^{(t)}$ over experts and the experts' losses $\ell^{(t)}$ with $\|\ell^{(t)}\|_\infty \le \rho$:
$$\mathbb{E}_{i \leftarrow p^{(t)}}\left[\ell_i^{(t)}\right] = p^{(t)T} \ell^{(t)}$$
The algorithm then updates its distribution to $p^{(t+1)}$.

Simplex Case: Multiplicative Weight Updates

MULTIPLICATIVE WEIGHT UPDATE
Weights: $w_i^{(t+1)} = (1 - \epsilon)^{\ell_i^{(t)}} \, w_i^{(t)}$, with $w^{(1)} = \vec{1}$
Distribution: $p_i^{(t+1)} = \dfrac{w_i^{(t+1)}}{\sum_{j=1}^{n} w_j^{(t+1)}}$
The parameter $\epsilon \in (0, 1)$ ranges from CONSERVATIVE (near 0) to AGGRESSIVE (near 1).

MWUs: Unraveling the Update

$$p_i^{(t+1)} \propto w_i^{(t+1)} = (1 - \epsilon)^{\ell_i^{(t)}} \cdot w_i^{(t)} = (1 - \epsilon)^{\sum_{s \le t} \ell_i^{(s)}}$$
The WEIGHT of expert $i$ is exponentially small in its CUMULATIVE LOSS $\sum_{s \le t} \ell_i^{(s)}$.

MWUs: Regret Bound

Update: $p_i^{(t+1)} \propto w_i^{(t+1)} = (1 - \epsilon)^{\ell_i^{(t)}} \cdot w_i^{(t)}$
For $\epsilon < \frac{1}{2}$ and $\|\ell^{(t)}\|_\infty \le \rho$:
$$\hat{L} - L^\star \le \frac{\rho \log n}{\epsilon T} + \rho \epsilon$$
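The update and its regret bound can be sketched in a few lines. This is an illustrative implementation, assuming losses in $[0, 1]$ (so $\rho = 1$) and a randomly generated loss sequence; the function name, the seed, and the problem sizes are assumptions, and an adversarial sequence would satisfy the same bound.

```python
import math
import random

def multiplicative_weights(losses, eps):
    """Run MWU with w_i <- (1 - eps)^{l_i} * w_i; return the algorithm's total loss.

    Assumes every loss l_i lies in [0, 1].
    """
    n = len(losses[0])
    weights = [1.0] * n                      # w^(1) is the all-ones vector
    total_loss = 0.0
    for loss in losses:
        norm = sum(weights)
        p = [w / norm for w in weights]      # current distribution over experts
        total_loss += sum(p_i * l_i for p_i, l_i in zip(p, loss))
        weights = [w * (1.0 - eps) ** l_i for w, l_i in zip(weights, loss)]
    return total_loss

rng = random.Random(0)                       # fixed seed, for reproducibility only
n, T, eps = 8, 2000, 0.05
losses = [[rng.random() for _ in range(n)] for _ in range(T)]

average_loss = multiplicative_weights(losses, eps) / T               # L_hat
best_expert = min(sum(l[i] for l in losses) for i in range(n)) / T   # L_star
bound = math.log(n) / (eps * T) + eps        # rho*log(n)/(eps*T) + rho*eps with rho = 1
print(average_loss - best_expert, bound)
```

The first term of `bound` shrinks as `T` grows, while the second is fixed by `eps`, which is why `eps` is usually tuned to the horizon.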
In the MWU regret bound, the left-hand side $\hat{L} - L^\star$ is the algorithm's regret; on the right-hand side, $\frac{\rho \log n}{\epsilon T}$ is the start-up penalty and $\rho \epsilon$ is the penalty for being greedy.

ONLINE LINEAR OPTIMIZATION BEYOND MWUs: A REGULARIZATION FRAMEWORK

MWUs: Proof Sketch of Regret Bound

Update: $p_i^{(t+1)} \propto w_i^{(t+1)} = (1 - \epsilon)^{\sum_{s=1}^{t} \ell_i^{(s)}}$
• Proof is a potential function argument:
$$\Phi^{(t+1)} = \log_{1-\epsilon} \sum_{i=1}^{n} w_i^{(t+1)}$$
• Potential function bounds loss of best expert:
$$\Phi^{(t+1)} \le \min_{i=1}^{n} \log_{1-\epsilon} w_i^{(t+1)} = \min_{i=1}^{n} \left( \sum_{s=1}^{t} \ell_i^{(s)} \right)$$
• Potential function is related to algorithm's performance:
$$\Phi^{(t+1)} - \Phi^{(t)} \ge \ell^{(t)T} p^{(t)} - \epsilon$$

DOES THIS PROOF TECHNIQUE GENERALIZE BEYOND THE SIMPLEX CASE?

Designing a Regularized Update

GOAL: Design an update and its potential function analysis
QUESTION: Choice of potential function?
DESIDERATA:
1) lower bounds best expert's loss
2) tracks algorithm's performance
Attempt 1 – FOLLOW THE LEADER:
Cumulative loss: $L^{(t)} = \sum_{s=1}^{t} \ell^{(s)}$
Update (pick best current solution): $x^{(t+1)} = \arg\min_{x \in X} x^T L^{(t)}$
Potential (current best loss): $\Phi^{(t+1)} = \min_{x \in X} x^T L^{(t)}$
Fails if the best expert changes drastically.
How to make the update more stable?

Regularized Update: Definition

Attempt 2 – FOLLOW THE REGULARIZED LEADER:
$$x^{(t+1)} = \arg\min_{x \in X} x^T L^{(t)} + \eta \cdot F(x)$$
$$\Phi^{(t+1)} = \min_{x \in X} x^T L^{(t)} + \eta \cdot F(x)$$
Properties of Regularizer $F(x)$:
1. Convex, differentiable
2. $\sigma$-strongly convex w.r.t. the norm
Parameter $\eta \ge 0$, TBD
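The failure mode of Follow the Leader, and the effect of adding a regularizer, can be seen on a standard two-expert example. This is a sketch under stated assumptions: the loss sequence is a textbook-style construction, not one from the talk, and the regularized variant uses the negative entropy, for which the update has the closed form $p_i \propto e^{-L_i/\eta}$.

```python
import math

def follow_the_leader(cumulative):
    """FTL on the simplex: all mass on the current best expert (ties -> lowest index)."""
    best = min(range(len(cumulative)), key=lambda i: cumulative[i])
    return [1.0 if i == best else 0.0 for i in range(len(cumulative))]

def follow_the_regularized_leader(cumulative, eta):
    """FTRL with negative-entropy regularizer: p_i proportional to exp(-L_i / eta)."""
    shift = min(cumulative)
    weights = [math.exp(-(L - shift) / eta) for L in cumulative]
    total = sum(weights)
    return [w / total for w in weights]

def run(update, losses):
    """Play update(L^(t-1)) at round t; return (algorithm's total loss, best expert's loss)."""
    cumulative = [0.0] * len(losses[0])
    total = 0.0
    for loss in losses:
        x = update(cumulative)
        total += sum(x_i * l_i for x_i, l_i in zip(x, loss))
        cumulative = [c + l for c, l in zip(cumulative, loss)]
    return total, min(cumulative)

# Assumed adversarial sequence: after round 1 the leader flips every round,
# and the adversary always charges the expert that FTL just picked.
T = 100
losses = [[0.5, 0.0]] + [[0.0, 1.0] if t % 2 == 0 else [1.0, 0.0]
                         for t in range(2, T + 1)]

eta = math.sqrt(T / (2.0 * math.log(2)))   # balances the two terms of the FTRL bound
ftl_loss, best = run(follow_the_leader, losses)
ftrl_loss, _ = run(lambda L: follow_the_regularized_leader(L, eta), losses)
print(ftl_loss, ftrl_loss, best)
```

On this sequence FTL pays almost 1 per round while the best expert pays about 1/2, so its regret grows linearly in `T`; the regularized update stays close to the uniform distribution and its regret stays small.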
The two properties of the regularizer $F$ (convex and differentiable; $\sigma$-strongly convex w.r.t. the norm) are actually sufficient to get a regret bound.

Regularized Update: Analysis

Follow the Regularized Leader uses the potential $\Phi^{(t+1)} = \min_{x \in X} x^T L^{(t)} + \eta \cdot F(x)$, with $\eta \ge 0$ TBD.
Desideratum 1 (lower bounds best expert's loss) holds up to a regularization error:
$$\Phi^{(t+1)} \le \min_{x \in X} L^{(t)T} x + \eta \cdot \max_{x \in X} F(x)$$
Desideratum 2 (tracks algorithm's performance)?

Tracking the Algorithm: Proof by Picture

[Figure: the curves $f^{(t)}(x)$ and $f^{(t+1)}(x)$, their minimizers $x^{(t)}$ and $x^{(t+1)}$, their minimum values $\Phi^{(t)}$ and $\Phi^{(t+1)}$, and the gap $\ell^{(t)T} x^{(t)}$ between the two curves at $x^{(t)}$.]

Define: $f^{(t+1)}(x) = x^T L^{(t)} + \eta \cdot F(x)$, so that $\Phi^{(t+1)} = f^{(t+1)}(x^{(t+1)})$.
Notice: $f^{(t+1)}(x) - f^{(t)}(x) = \ell^{(t)T} x$, where $\ell^{(t)}$ is the latest loss vector.
Compare $\ell^{(t)T} x^{(t)}$ and $\Phi^{(t+1)} - \Phi^{(t)}$:
$$\Phi^{(t+1)} - \Phi^{(t)} = f^{(t+1)}(x^{(t+1)}) - f^{(t+1)}(x^{(t)}) + \ell^{(t)T} x^{(t)}$$
Want: $f^{(t+1)}(x^{(t)}) \approx f^{(t+1)}(x^{(t+1)})$

Regularization in Action

REGULARIZATION: $f^{(t+1)}(x) = L^{(t)T} x + \eta \cdot F(x)$ is $(\eta \cdot \sigma)$-strongly convex, which gives a quadratic lower bound to $f^{(t+1)}$, and
$$\|\nabla f^{(t+1)} - \nabla f^{(t)}\|_* = \|\ell^{(t)}\|_*$$
STABILITY:
$$\|x^{(t+1)} - x^{(t)}\| \le \frac{\|\ell^{(t)}\|_*}{\eta \cdot \sigma}$$

Analysis: Progress in One Iteration

$$\Phi^{(t+1)} - \Phi^{(t)} = f^{(t+1)}(x^{(t+1)}) - f^{(t+1)}(x^{(t)}) + \ell^{(t)T} x^{(t)}$$
Since $\nabla f^{(t+1)}(x^{(t)}) = \ell^{(t)}$ and $f^{(t+1)}$ is $(\eta \cdot \sigma)$-strongly convex:
$$f^{(t+1)}(x^{(t+1)}) - f^{(t+1)}(x^{(t)}) \ge \ell^{(t)T} (x^{(t+1)} - x^{(t)}) + \frac{\eta \cdot \sigma}{2} \|x^{(t+1)} - x^{(t)}\|^2$$
$$\ge -\|\ell^{(t)}\|_* \|x^{(t+1)} - x^{(t)}\| + \frac{\eta \cdot \sigma}{2} \|x^{(t+1)} - x^{(t)}\|^2 \ge -\frac{\|\ell^{(t)}\|_*^2}{2 \eta \cdot \sigma}$$
The last step minimizes the quadratic in $\|x^{(t+1)} - x^{(t)}\|$, whose minimum is attained at $\|\ell^{(t)}\|_* / (\eta \cdot \sigma)$.

Completing the Analysis

Progress in one iteration (regret at iteration $t$):
$$\Phi^{(t+1)} - \Phi^{(t)} \ge \ell^{(t)T} x^{(t)} - \frac{\|\ell^{(t)}\|_*^2}{2 \sigma \eta}$$
Telescopic sum:
$$\Phi^{(T+1)} \ge \sum_{t=1}^{T} \ell^{(t)T} x^{(t)} + \Phi^{(1)} - T \cdot \frac{\max_t \|\ell^{(t)}\|_*^2}{2 \eta \cdot \sigma}$$
Final regret bound, with regularizer $F$ and $\|\ell^{(t)}\|_* \le \rho$:
$$\frac{1}{T} \left( \sum_{t=1}^{T} \ell^{(t)T} x^{(t)} - \min_{x \in X} \sum_{t=1}^{T} \ell^{(t)T} x \right) \le \frac{\eta}{T} \cdot \left( \max_{x \in X} F(x) - \min_{x \in X} F(x) \right) + \frac{\rho^2}{2 \sigma \eta}$$
The first term is the start-up penalty; the second is the penalty for being greedy.
SAME TYPE OF BOUND AS FOR MWUs

Reinterpreting MWUs

Potential function (SOFT-MAX):
$$\Phi^{(t+1)} = \min_{p \ge 0,\ \sum_i p_i = 1} p^T L^{(t)} + \eta \cdot \sum_{i=1}^{n} p_i \log p_i$$
Regularizer: $F(p) = \sum_{i=1}^{n} p_i \log p_i$ is negative entropy.
$F(p)$ is 1-strongly-convex w.r.t. $\|\cdot\|_1$.
Update:
$$p^{(t+1)} = \arg\min_{p \ge 0,\ \sum_i p_i = 1} p^T L^{(t)} + \eta \cdot \sum_{i=1}^{n} p_i \log p_i$$
$$p_i^{(t+1)} = \frac{e^{-\frac{1}{\eta} L_i^{(t)}}}{\sum_{j=1}^{n} e^{-\frac{1}{\eta} L_j^{(t)}}} = \frac{(1 - \epsilon)^{L_i^{(t)}}}{\sum_{j=1}^{n} (1 - \epsilon)^{L_j^{(t)}}}$$

Beyond MWUs: which regularizer?

Regret bound, optimizing over $\eta$:
$$\frac{1}{T} \left( \sum_{t=1}^{T} \ell^{(t)T} x^{(t)} - \min_{x \in X} \sum_{t=1}^{T} \ell^{(t)T} x \right) \le \frac{\rho \sqrt{2 \cdot \left( \max_{x \in X} F(x) - \min_{x \in X} F(x) \right)}}{\sqrt{\sigma T}}$$
Best choice of regularizer and norm minimizes
$$\frac{\max_t \|\ell^{(t)}\|_*^2 \cdot \left( \max_{x \in X} F(x) - \min_{x \in X} F(x) \right)}{\sigma}$$
Negative entropy with $\ell_1$-norm is approximately optimal for simplex.
QUESTION: are other regularizers ever useful?

Different Regularizers in Algorithm Design

QUESTION 1: Are other regularizers, besides entropy, ever useful? YES!
Applications: Graph Partitioning and Random Walks
Spectral algorithms for balanced separator running in time $\tilde{O}(m)$.
Uses random-walk framework and SDP MWUs.
Different walks correspond to different regularizers for the eigenvector problem:
• SDP MWU: $F(X) = \mathrm{Tr}(X \log X)$, Heat Kernel Random Walk
• $p$-norm, $1 \le p \le \infty$: $F(X) = \mathrm{Tr}(X^p)$, Lazy Random Walk
• NEW REGULARIZER: $F(X) = \mathrm{Tr}(X^{1/2})$, Personalized PageRank
[Mahoney, Orecchia, Vishnoi 2011], [Orecchia, Sachdeva, Vishnoi 2012]
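Returning to the "Reinterpreting MWUs" slide: the identity between the entropy-regularized update and the multiplicative weights distribution can be checked numerically. A minimal sketch, assuming the correspondence $1 - \epsilon = e^{-1/\eta}$ (read off from the two closed forms); the cumulative loss vector and the function names are made up for illustration.

```python
import math

def mwu_distribution(cumulative, eps):
    """MWU: p_i proportional to (1 - eps)^{L_i}."""
    weights = [(1.0 - eps) ** L for L in cumulative]
    total = sum(weights)
    return [w / total for w in weights]

def entropy_ftrl_distribution(cumulative, eta):
    """Closed form of argmin_p <p, L> + eta * sum_i p_i log p_i over the simplex."""
    shift = min(cumulative)
    weights = [math.exp(-(L - shift) / eta) for L in cumulative]
    total = sum(weights)
    return [w / total for w in weights]

def ftrl_objective(p, cumulative, eta):
    """<p, L> + eta * negative entropy of p (with 0 log 0 = 0)."""
    linear = sum(p_i * L for p_i, L in zip(p, cumulative))
    entropy_term = sum(p_i * math.log(p_i) for p_i in p if p_i > 0.0)
    return linear + eta * entropy_term

cumulative_losses = [3.0, 1.5, 4.25, 0.5]   # assumed example values of L^(t)
eta = 2.0
eps = 1.0 - math.exp(-1.0 / eta)            # so that (1 - eps) = exp(-1 / eta)

p_mwu = mwu_distribution(cumulative_losses, eps)
p_ftrl = entropy_ftrl_distribution(cumulative_losses, eta)
uniform = [0.25] * 4
print(p_mwu, p_ftrl)
```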
Applications: Sparsification
• $\epsilon$-spectral-sparsifiers with $O\left(\frac{n \log n}{\epsilon^2}\right)$ edges. Uses a matrix concentration bound equivalent to SDP MWUs [Spielman, Srivastava 2008]
• $\epsilon$-spectral-sparsifiers with $O\left(\frac{n}{\epsilon^2}\right)$ edges. Can be interpreted as a different regularizer: $F(X) = \mathrm{Tr}(X^{1/2})$ [Batson, Spielman, Srivastava 2009]
Many more in Online Learning: Bandit Online Learning [AHR], …

NON-SMOOTH CONVEX OPTIMIZATION REDUCES TO ONLINE LINEAR OPTIMIZATION

Convex Optimization Setup

$$\min_{x \in X} f(x)$$
$f$ convex, differentiable; $X \subseteq \mathbb{R}^n$ closed, convex set.

NON-SMOOTH: $\forall x \in X,\ \|\nabla f(x)\|_* \le \rho$ ($f$ is $\rho$-Lipschitz continuous).
NO GRADIENT STEP GUARANTEE, ONLY DUAL GUARANTEE.

SMOOTH: $\forall x, y \in X,\ \|\nabla f(y) - \nabla f(x)\|_* \le L \|y - x\|$ ($f$ has $L$-Lipschitz continuous gradient).
Gradient step is guaranteed to decrease function value:
$$f(x^{(t+1)}) \le f(x^{(t)}) - \frac{\|\nabla f(x^{(t)})\|_*^2}{2L}$$

Non-Smooth Setup: Dual Approach

$\min_{x \in X} f(x)$ with $f$ convex, differentiable; $X \subseteq \mathbb{R}^n$ closed, convex set; $\forall x \in X,\ \|\nabla f(x)\|_* \le \rho$ ($\rho$-Lipschitz continuous).
APPROACH: Each iterate solution provides a lower bound and an upper bound on the optimum $f(x^*)$:
LOWER: $f(x^*) \ge f(x^{(t)}) + \nabla f(x^{(t)})^T (x^* - x^{(t)})$
UPPER: $f(x^{(t)}) \ge f(x^*)$
CAN WEAKEN DIFFERENTIABILITY ASSUMPTION: SUBGRADIENTS SUFFICE

Take a convex combination of both the upper bounds and the lower bounds with weights $\gamma_t$:
UPPER:
$$\frac{1}{\sum_{t=1}^{T} \gamma_t} \left( \sum_{t=1}^{T} \gamma_t f(x^{(t)}) \right) \ge f(x^*)$$
LOWER:
$$f(x^*) \ge \frac{1}{\sum_{t=1}^{T} \gamma_t} \left[ \sum_{t=1}^{T} \gamma_t \left( f(x^{(t)}) + \nabla f(x^{(t)})^T (x^* - x^{(t)}) \right) \right]$$
HOW TO UPDATE ITERATES? HOW TO CHOOSE WEIGHTS?
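The upper and lower bounds above can be computed explicitly on a one-dimensional toy problem. This sketch assumes uniform weights, the non-smooth function $f(x) = |x - 0.3|$ on $X = [0, 1]$, and an arbitrary list of iterates; since $x^*$ is unknown in general, the lower bound is made computable by minimizing the averaged linearization over all of $X$. All names and values are illustrative.

```python
def f(x):
    """A non-smooth convex function on X = [0, 1] with optimum f(0.3) = 0."""
    return abs(x - 0.3)

def subgradient(x):
    """One valid subgradient of f at x."""
    if x > 0.3:
        return 1.0
    if x < 0.3:
        return -1.0
    return 0.0

def primal_dual_bounds(iterates, lo=0.0, hi=1.0):
    """Return (lower, upper) bounds on min f over [lo, hi] from the given iterates."""
    T = len(iterates)
    # UPPER: the average of f over the iterates is at least f(x*).
    upper = sum(f(x) for x in iterates) / T
    # LOWER: average the linearizations f(x_t) + g_t * (x - x_t); the result is a
    # linear function intercept + slope * x lying below f, so its minimum over
    # the interval (attained at an endpoint) is at most f(x*).
    slope = sum(subgradient(x) for x in iterates) / T
    intercept = sum(f(x) - subgradient(x) * x for x in iterates) / T
    lower = min(intercept + slope * lo, intercept + slope * hi)
    return lower, upper

lower, upper = primal_dual_bounds([0.0, 0.9, 0.5, 0.2])
print(lower, upper)
```

The difference `upper - lower` is a duality gap that can be computed without knowing the optimum.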
Reduction to Online Linear Minimization

Fix the weights $\gamma_t$ to be uniform for simplicity. Subtracting the LOWER bound from the UPPER bound gives the
DUALITY GAP:
$$\frac{1}{T} \sum_{t=1}^{T} \left[ f(x^{(t)}) - f(x^*) \right] \le \frac{1}{T} \cdot \sum_{t=1}^{T} -\nabla f(x^{(t)})^T (x^* - x^{(t)})$$
Each term on the right-hand side is a LINEAR FUNCTION of $x^*$.

ONLINE SETUP:
• ALGORITHM plays $x^{(t)} \in X$.
• ADVERSARY responds with $\ell^{(t)} = \nabla f(x^{(t)})$: the loss vector is the gradient.
Recall that by assumption: $\|\ell^{(t)}\|_* = \|\nabla f(x^{(t)})\|_* \le \rho$
$$\frac{1}{T} \cdot \sum_{t=1}^{T} -\nabla f(x^{(t)})^T (x^* - x^{(t)}) = \frac{1}{T} \cdot \sum_{t=1}^{T} \ell^{(t)T} (x^{(t)} - x^*) \le \text{REGRET}$$
The middle expression is the average regret against $x^*$, which is at most the regret against the best fixed $x \in X$.

Final Bound

RESULTING ALGORITHM: MIRROR DESCENT
Error bound with $\sigma$-strongly-convex regularizer $F$:
$$\epsilon_{MD} \le \frac{\rho \sqrt{2 \cdot \left( \max_{x \in X} F(x) - \min_{x \in X} F(x) \right)}}{\sqrt{\sigma} \sqrt{T}}$$
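The reduction can be run end to end on a small non-smooth problem over the simplex. The sketch below makes several assumptions: it uses the Follow-the-Regularized-Leader form of the method with the negative-entropy regularizer (sometimes called dual averaging or lazy mirror descent), sets $\eta = \sqrt{T / (2 \log n)}$ for $\rho = \sigma = 1$, and minimizes $f(p) = \max_j (p^T M)_j$ for a hypothetical $3 \times 3$ matrix $M$ chosen so that the optimum value $0.5$ is known.

```python
import math

# Assumed test instance: M - 0.5 is skew-symmetric, so min_p max_j (p^T M)_j = 0.5,
# attained at the uniform distribution.
M = [[0.5, 1.0, 0.0],
     [0.0, 0.5, 1.0],
     [1.0, 0.0, 0.5]]

def f(p):
    """Non-smooth convex objective f(p) = max_j sum_i p_i * M[i][j]."""
    return max(sum(p[i] * M[i][j] for i in range(3)) for j in range(3))

def subgradient(p):
    """The column of M attaining the max is a subgradient; its entries lie in [0, 1]."""
    best_j = max(range(3), key=lambda j: sum(p[i] * M[i][j] for i in range(3)))
    return [M[i][best_j] for i in range(3)]

def entropy_mirror_descent(subgradient_oracle, n, T):
    """Play p^(t) proportional to exp(-L^(t-1)/eta), with loss vector l^(t) = subgradient."""
    eta = math.sqrt(T / (2.0 * math.log(n)))
    cumulative = [0.0] * n
    average = [0.0] * n
    for _ in range(T):
        shift = min(cumulative)
        weights = [math.exp(-(L - shift) / eta) for L in cumulative]
        total = sum(weights)
        p = [w / total for w in weights]
        average = [a + p_i / T for a, p_i in zip(average, p)]
        loss = subgradient_oracle(p)
        cumulative = [L + l_i for L, l_i in zip(cumulative, loss)]
    return average            # uniform-weight average of the iterates

T = 2000
p_avg = entropy_mirror_descent(subgradient, 3, T)
gap = f(p_avg) - 0.5                           # error of the averaged iterate
bound = math.sqrt(2.0 * math.log(3) / T)       # rho * sqrt(2 log n) / sqrt(T), rho = 1
print(gap, bound)
```

By convexity, the objective at the averaged iterate is at most the average objective value, which is why the error of `p_avg` is controlled by the average regret.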
MIRROR DESCENT error bound: ASYMPTOTICALLY OPTIMAL BY INFORMATION COMPLEXITY LOWER BOUND

Non-Smooth Optimization over Simplex

RESULTING ALGORITHM: MIRROR DESCENT OVER SIMPLEX = MWU
Regularizer $F$ is negative entropy, with $\|\nabla f(x^{(t)})\|_\infty \le \rho$:
$$\epsilon_{MD} \le \frac{\rho \sqrt{2 \cdot \log n}}{\sqrt{T}}$$

APPLICATIONS IN ALGORITHM DESIGN

Warm-up Example: Linear Programming

LP Feasibility problem: $A \in \mathbb{R}^{m \times n}$; $\exists?\, x \in X : b - Ax \ge 0$
• $x \in X$: easy constraints, maintained feasible
• $b - Ax \ge 0$: hard constraints, require fixing
Convert into a non-smooth optimization problem over the simplex:
$$\min_{p \in \Delta_m} \max_{x \in X} p^T (b - Ax)$$
Non-differentiable objective (best response to dual solution $p$):
$$f(p) = \max_{x \in X} p^T (b - Ax)$$
Admits subgradients, for all $p$:
$$x_p : p^T (b - A x_p) \ge 0; \qquad (b - A x_p) \in \partial f(p)$$
Subgradient is slack in constraints.
If we can pick $x_p$ such that $\|b - A x_p\|_\infty \le \rho$, then
$$\epsilon_{MD} \le \frac{\rho \sqrt{2 \cdot \log m}}{\sqrt{T}}, \qquad T \le \frac{2 \cdot \rho^2 \cdot \log m}{\epsilon^2}$$

MWU and s-t Maxflow

Maximum flow feasibility for value $F$ over undirected graph $G$ with incidence matrix $B$:
$$\forall e \in E,\ \frac{F \cdot |f_e|}{c_e} \le 1; \qquad B^T f = e_s - e_t \ \text{(will enforce this)}$$
Turn into a non-smooth minimization problem over the simplex:
$$f(p) = \min_{B^T f = e_s - e_t} \sum_{e \in E} p_e \cdot \left( \frac{F \cdot |f_e|}{c_e} - 1 \right)$$
Best response $f_p$ is the shortest s-t path with lengths $p_e / c_e$.
For any $p$, if $f_p$ has length $> 1$, there is no subgradient, i.e. the problem is infeasible. Otherwise, the following is a subgradient:
$$\partial f(p)_e = \frac{F \cdot |(f_p)_e|}{c_e} - 1$$
Unfortunately, width can be large:
$$\|\partial f(p)\|_\infty \le \frac{F}{c_{\min}}, \qquad T = O\left( \frac{F \log n}{\epsilon^2 c_{\min}} \right) \quad \text{[PST 91]}$$

Width Reduction: make primal nicer

NEED PRIMAL ARGUMENT
PROBLEM: The bound above is optimal for this specific formulation: $\|\partial f(p)\|_\infty \le \frac{F}{c_{\min}}$
SOLUTION: Regularize primal:
$$f(p) = \min_{B^T f = e_s - e_t} \sum_{e \in E} \frac{F \cdot |f_e|}{c_e} \left( p_e + \frac{\epsilon}{m} \right) - 1$$
REGULARIZATION ERROR: $\epsilon F$
NEW WIDTH: $\|\partial f(p)\|_\infty \le \frac{m}{\epsilon}$
ITERATION BOUND: $T = O\left( \frac{m \log n}{\epsilon^2} \right)$ [GK 98]

Electrical Flow Approach [CKMST]

A different formulation yields the basis for the CKMST algorithm:
$$\forall e \in E,\ \frac{F \cdot f_e^2}{c_e^2} \le 1; \qquad B^T f = e_s - e_t \ \text{(will enforce this)}$$
Non-smooth optimization problem:
$$f(p) = \min_{B^T f = e_s - e_t} \sum_{e \in E} p_e \cdot \left( \frac{F \cdot f_e^2}{c_e^2} - 1 \right)$$
Best response is the electrical flow $f_p$.
Original width: $\|\partial f(p)\|_\infty \le m$
Regularize primal:
$$f(p) = \min_{B^T f = e_s - e_t} \sum_{e \in E} \frac{F \cdot f_e^2}{c_e^2} \left( p_e + \frac{\epsilon}{m} \right) - 1, \qquad \|\partial f(p)\|_\infty \le \sqrt{\frac{m}{\epsilon}}$$

Conclusion: Take-away messages

• Regularization is a powerful tool for the design of fast algorithms.
• Most iterative algorithms can be understood as regularized updates: MWUs, Width Reduction, Interior Point, Gradient descent, ...
• These methods perform well in practice. Regularization also helps eliminate noise.
• ULTIMATE GOAL: Development of a library of iterative methods for fast graph algorithms. Regularization plays a fundamental role in this effort.

THE END – THANK YOU