LEARNING MARGINAL AMP CHAIN GRAPHS UNDER FAITHFULNESS REVISITED

advertisement

LEARNING MARGINAL AMP CHAIN GRAPHS UNDER

FAITHFULNESS REVISITED

JOSE M. PEÑA

ADIT, IDA

LINKÖPING UNIVERSITY, SWEDEN

JOSE.M.PENA@LIU.SE

MANUEL GÓMEZ-OLMEDO

DEPT. COMPUTER SCIENCE AND ARTIFICIAL INTELLIGENCE

UNIVERSITY OF GRANADA, SPAIN

MGOMEZ@DECSAI.UGR.ES

Abstract. Marginal AMP chain graphs are a recently introduced family of models that is

based on graphs that may have undirected, directed and bidirected edges. They unify and

generalize the AMP and the multivariate regression interpretations of chain graphs. In this

paper, we present a constraint based algorithm for learning a marginal AMP chain graph from

a probability distribution which is faithful to it. We show that the marginal AMP chain graph

returned by our algorithm is a distinguished member of its Markov equivalence class. We

also show that our algorithm performs well in practice. Finally, we show that the extension

of Meek’s conjecture to marginal AMP chain graphs does not hold, which compromises the

development of efficient and correct score+search learning algorithms under assumptions

weaker than faithfulness.

1. Introduction

Chain graphs (CGs) are graphs with possibly directed and undirected edges, and no semidirected cycle. They have been extensively studied as a formalism to represent independence

models, because they can model symmetric and asymmetric relationships between the random variables of interest. However, there are three different interpretations of CGs as independence models: The Lauritzen-Wermuth-Frydenberg (LWF) interpretation (Lauritzen,

1996), the multivariate regression (MVR) interpretation (Cox and Wermuth, 1996), and the

Andersson-Madigan-Perlman (AMP) interpretation (Andersson et al., 2001). It is worth mentioning that no interpretation subsumes another: There are many independence models that

can be represented by a CG under one interpretation but that cannot be represented by any

CG under the other interpretations (Andersson et al., 2001; Sonntag and Peña, 2015). Moreover, although MVR CGs were originally represented using dashed directed and undirected

edges, we like other authors prefer to represent them using solid directed and bidirected edges.

Recently, a new family of models has been proposed to unify and generalize the AMP and

MVR interpretations of CGs (Peña, 2014b). This new family, named marginal AMP (MAMP)

CGs, is based on graphs that may have undirected, directed and bidirected edges. This paper

complements that by Peña (2014b) by presenting an algorithm for learning a MAMP CG

from a probability distribution which is faithful to it. Our algorithm is constraint based and

builds upon those developed by Sonntag and Peña (2012) and Peña (2014a) for learning,

respectively, MVR and AMP CGs under the faithfulness assumption. It is worth mentioning

that there also exist algorithms for learning LWF CGs under the faithfulness assumption (Ma

et al., 2008; Studený, 1997) and under the milder composition property assumption (Peña

et al., 2014). In this paper, we also show that the extension of Meek’s conjecture to MAMP

Date: pgm2014jmpfollowup6.tex, 09:50, 08/10/15.

1

2

CGs does not hold, which compromises the development of efficient and correct score+search

learning algorithms under assumptions weaker than faithfulness.

Finally, we should mention that this paper is an extended version of that by Peña (2014c).

The extension consists in that the learning algorithm presented in that paper has been modified so that it returns a distinguished member of a Markov equivalence class of MAMP CGs,

rather than just a member of the class. As a consequence, the proof of correctness of the

algorithm has changed significantly. Moreover, the algorithm has been implemented and

evaluated. This paper reports the results of the evaluation for the first time.

The rest of this paper is organized as follows. We start with some preliminaries in Section

2. Then, we introduce MAMP CGs in Section 3, followed by the algorithm for learning them

in Section 4. In that section, we also include a review of other learning algorithms that are

related to ours. We report the experimental results in Section 5. We close the paper with

some discussion in Section 6. All the proofs appear in an appendix at the end of the paper.

2. Preliminaries

In this section, we introduce some concepts of models based on graphs, i.e. graphical

models. Most of these concepts have a unique definition in the literature. However, a few

concepts have more than one and we opt for the most suitable in this work. All the graphs

and probability distributions in this paper are defined over a finite set V . All the graphs in

this paper are simple, i.e. they contain at most one edge between any pair of nodes. The

elements of V are not distinguished from singletons.

If a graph G contains an undirected, directed or bidirected edge between two nodes V1 and

V2 , then we write that V1 − V2 , V1 → V2 or V1 ↔ V2 is in G. We represent with a circle, such

as in V1 ⊸

← V2 or V1 ⊸

⊸ V2 , that the end of an edge is unspecified, i.e. it may be an arrowhead

or nothing. If the edge is of the form V1 ⊸

← V2 , then we say it has an arrowhead at V2 . If

the edge is of the form V1 → V2 , then we say that it has an arrowtail at V1 . The parents of

a set of nodes X of G is the set paG (X) = {V1 ∣V1 → V2 is in G, V1 ∉ X and V2 ∈ X}. The

children of X is the set chG (X) = {V1 ∣V1 ← V2 is in G, V1 ∉ X and V2 ∈ X}. The neighbors

of X is the set neG (X) = {V1 ∣V1 − V2 is in G, V1 ∉ X and V2 ∈ X}. The spouses of X is

the set spG (X) = {V1 ∣V1 ↔ V2 is in G, V1 ∉ X and V2 ∈ X}. The adjacents of X is the set

adG (X) = neG (X) ∪ paG (X) ∪ chG (X) ∪ spG (X). A route between a node V1 and a node

Vn in G is a sequence of (not necessarily distinct) nodes V1 , . . . , Vn such that Vi ∈ adG (Vi+1 )

for all 1 ≤ i < n. If the nodes in the route are all distinct, then the route is called a path.

The length of a route is the number of (not necessarily distinct) edges in the route, e.g. the

length of the route V1 , . . . , Vn is n − 1. A route is called descending if Vi → Vi+1 , Vi − Vi+1 or

Vi ↔ Vi+1 is in G for all 1 ≤ i < n. A route is called strictly descending if Vi → Vi+1 is in G

for all 1 ≤ i < n. The descendants of a set of nodes X of G is the set deG (X) = {Vn ∣ there

is a descending route from V1 to Vn in G, V1 ∈ X and Vn ∉ X}. The strict ascendants of X

is the set sanG (X) = {V1 ∣ there is a strictly descending route from V1 to Vn in G, V1 ∉ X

and Vn ∈ X}. A route V1 , . . . , Vn in G is called a cycle if Vn = V1 . Moreover, it is called a

semidirected cycle if Vn = V1 , V1 → V2 is in G and Vi → Vi+1 , Vi ↔ Vi+1 or Vi − Vi+1 is in G for

all 1 < i < n. A cycle has a chord if two non-consecutive nodes of the cycle are adjacent in G.

The subgraph of G induced by a set of nodes X is the graph over X that has all and only

the edges in G whose both ends are in X. Moreover, a triplex ({A, C}, B) in G is an induced

subgraph of the form A ⊸

←B←

⊸ C, A ⊸

← B − C or A − B ←

⊸ C.

A directed and acyclic graph (DAG) is a graph with only directed edges and without

semidirected cycles. An AMP chain graph (AMP CG) is a graph whose every edge is directed

or undirected such that it has no semidirected cycles. A MVR chain graph (MVR CG) is

a graph whose every edge is directed or bidirected such that it has no semidirected cycles.

Clearly, DAGs are a special case of AMP and MVR CGs: DAGs are AMP CGs without

undirected edges, and DAGs are MVR CGs without bidirected edges. We now recall the

3

semantics of AMP and MVR CGs. A node B in a path ρ in an AMP CG G is called a triplex

node in ρ if A → B ← C, A → B − C, or A − B ← C is a subpath of ρ. Moreover, ρ is said to

be Z-open with Z ⊆ V when

● every triplex node in ρ is in Z ∪ sanG (Z), and

● every non-triplex node B in ρ is outside Z, unless A − B − C is a subpath of ρ and

paG (B) ∖ Z ≠ ∅.

A node B in a path ρ in an MVR CG G is called a triplex node in ρ if A ⊸

←B←

⊸ C is a

subpath of ρ. Moreover, ρ is said to be Z-open with Z ⊆ V when

● every triplex node in ρ is in Z ∪ sanG (Z), and

● every non-triplex node B in ρ is outside Z.

Let X, Y and Z denote three disjoint subsets of V . When there is no Z-open path in an

AMP or MVR CG G between a node in X and a node in Y , we say that X is separated

from Y given Z in G and denote it as X ⊥ G Y ∣Z. The independence model represented by G,

denoted as I(G), is the set of separations X ⊥ G Y ∣Z. In general, I(G) is different depending

on whether G is an AMP or MVR CG. However, it is the same when G is a DAG.

3. MAMP CGs

In this section, we review marginal AMP (MAMP) CGs. We refer the reader to the work by

Peña (2014b) for more details. Specifically, a graph G containing possibly directed, bidirected

and undirected edges is a MAMP CG if

C1. G has no semidirected cycle,

C2. G has no cycle V1 , . . . , Vn = V1 such that V1 ↔ V2 is in G and Vi − Vi+1 is in G for all

1 < i < n, and

C3. if V1 − V2 − V3 is in G and spG (V2 ) ≠ ∅, then V1 − V3 is in G too.

The semantics of MAMP CGs is as follows. A node B in a path ρ in a MAMP CG G is

called a triplex node in ρ if A ⊸

←B ←

⊸ C, A ⊸

← B − C, or A − B ←

⊸ C is a subpath of ρ.

Moreover, ρ is said to be Z-open with Z ⊆ V when

● every triplex node in ρ is in Z ∪ sanG (Z), and

● every non-triplex node B in ρ is outside Z, unless A − B − C is a subpath of ρ and

spG (B) ≠ ∅ or paG (B) ∖ Z ≠ ∅.

Let X, Y and Z denote three disjoint subsets of V . When there is no Z-open path in G

between a node in X and a node in Y , we say that X is separated from Y given Z in G

and denote it as X ⊥ G Y ∣Z. The independence model represented by G, denoted as I(G), is

the set of separations X ⊥ G Y ∣Z. We denote by X ⊥ p Y ∣Z (respectively X ⊥/ p Y ∣Z) that X is

independent (respectively dependent) of Y given Z in a probability distribution p. We say

that p is faithful to G when X ⊥ p Y ∣Z if and only if X ⊥ G Y ∣Z for all X, Y and Z disjoint

subsets of V . We say that two MAMP CGs are Markov equivalent if they represent the same

independence model. We also say that two MAMP CGs are triplex equivalent if they have

the same adjacencies and the same triplexes. Two MAMP CGs are Markov equivalent if and

only if they are triplex equivalent (Peña, 2014b, Theorem 7).

Clearly, AMP and MVR CGs are special cases of MAMP CGs: AMP CGs are MAMP CGs

without bidirected edges, and MVR CGs are MAMP CGs without undirected edges. Then,

the union of AMP and MVR CGs is a subfamily of MAMP CGs. The following example

shows that it is actually a proper subfamily.

Example 1. The independence model represented by the MAMP CG G below cannot be

represented by any AMP or MVR CG.

A

B

C

D

E

4

To see it, assume to the contrary that it can be represented by an AMP CG H. Note that

H is a MAMP CG too. Then, G and H must have the same triplexes. Then, H must have

triplexes ({A, D}, B) and ({A, C}, B) but no triplex ({C, D}, B). So, C − B − D must be in

H. Moreover, H must have a triplex ({B, E}, C). So, C ← E must be in H. However, this

implies that H does not have a triplex ({C, D}, E), which is a contradiction because G has

such a triplex. To see that no MVR CG can represent the independence model represented

by G, simply note that no MVR CG can have triplexes ({A, D}, B) and ({A, C}, B) but no

triplex ({C, D}, B).

Finally, it is worth mentioning that MAMP CGs are not the first family of models to

be based on graphs that may contain undirected, directed and bidirected edges. Other such

families are summary graphs after replacing the dashed undirected edges with bidirected edges

(Cox and Wermuth, 1996), MC graphs (Koster, 2002), maximal ancestral graphs (Richardson

and Spirtes, 2002), and loopless mixed graphs (Sadeghi and Lauritzen, 2014). However, the

separation criteria for these families are identical to that of MVR CGs. Then, MVR CGs are

a subfamily of these families but AMP CGs are not. For further details, see also the works by

Richardson and Spirtes (2002, p. 1025) and Sadeghi and Lauritzen (2014, Sections 4.1-4.3).

Therefore, MAMP CGs are the only graphical models in the literature that generalize both

AMP and MVR CGs.

4. Algorithm for Learning MAMP CGs

In this section, we present our algorithm for learning a MAMP CG from a probability

distribution which is faithful to it. Prior to that, we describe how we represent a class of

Markov equivalent MAMP CGs, because that is the output of the algorithm. In the works

by Andersson et al. (1997), Andersson and Perlman (2006), Meek (1995), Sonntag and Peña

(2015) and Sonntag et al. (2015), the authors define the unique representant of a class of

Markov equivalent DAGs, AMP CGs and MVR CGs as the graph H such that (i) H has the

same adjacencies as every member of the class, and (ii) H has an arrowhead at an edge end

if and only if there is a member of the class with an arrowhead at that edge end and there

is no member of the class with a arrowtail at that edge end. Clearly, this definition can also

be used to construct a unique representant of a class of Markov equivalent MAMP CGs. We

call the unique representant of a class of Markov equivalent DAGs, AMP CGs, MVR CGs or

MAMP CGs the essential graph (EG) of the class. We show below that the EG of a class of

Markov equivalent MAMP CGs is always a member of the class. The EG of a class of Markov

equivalent AMP CGs also has this desirable feature (Andersson and Perlman, 2006, Theorem

3.2), but the EG of a class of Markov equivalent DAGs or MVR CGs does not (Andersson et

al., 1997; Sonntag et al., 2015).

Now, we present our algorithm for learning MAMP CGs under the faithfulness assumption.

The algorithm can be seen in Table 1. Note that the algorithm returns the EG of a class of

Markov equivalent MAMP CGs. The correctness of the algorithm is proven in the appendix.

Our algorithm builds upon those developed by Meek (1995), Peña (2014a), Sonntag and

Peña (2012) and Spirtes et al. (1993) for learning DAGs, AMP CGs and MVR CGs under

the faithfulness assumption. Like theirs, our algorithm consists of two phases: The first

phase (lines 1-8) aims at learning adjacencies, whereas the second phase (lines 9-17) aims at

directing some of the adjacencies learnt. Specifically, the first phase declares that two nodes

are adjacent if and only if they are not separated by any set of nodes. Note that the algorithm

does not test every possible separator (see line 5). Note also that the separators tested are

tested in increasing order of size (see lines 2, 5 and 8). The second phase consists of two

steps. In the first step (lines 9-11), the ends of some of the edges learnt in the first phase are

blocked according to the rules R1-R4 in Table 2. A block is represented by a perpendicular

line at the edge end such as in z or z

x, and it means that the edge cannot be a directed edge

5

Table 1. Algorithm for learning MAMP CGs.

Input: A probability distribution p that is faithful to an unknown MAMP CG G.

Output: The EG H of the Markov equivalence class of G.

1 Let H denote the complete undirected graph

2 Set l = 0

3 Repeat while l ≤ ∣V ∣ − 2

4

For each ordered pair of nodes A and B in H st A ∈ adH (B) and

∣[adH (A) ∪ adH (adH (A))] ∖ {A, B}∣ ≥ l

5

If there is some S ⊆ [adH (A) ∪ adH (adH (A))] ∖ {A, B} st ∣S∣ = l and A⊥ p B∣S then

6

Set SAB = SBA = S

7

Remove the edge A − B from H

8

Set l = l + 1

9 Apply the rules R1-R4 to H while possible

10 Replace every edge A − B in every cycle in H that is of length greater than three,

chordless, and without blocks with A z

xB

11 Apply the rules R2-R4 to H while possible

12 Replace every edge A z B in H with A → B

13 Replace every edge A z

x B in H with A ↔ B

14 Replace every induced subgraph A ↔ B ↔ C in H st B ∈ SAC with A − B − C

15 If H has an induced subgraph A

B

C

16

Replace the edge A ↔ B in H with A − B

17

Go to line 15

18 Return H

then

Table 2. Rules R1-R4.

R1

A

B

∧ B ∉ SAC

C

⇒

A

B

C

R2

A

B

∧ B ∈ SAC

C

⇒

A

B

C

R3

A

...

B

⇒

A

...

B

C

R4

A

C

B

D

∧ A ∈ SCD

⇒

A

B

D

6

Table 3. Algorithm for learning AMP CGs presented by Peña (2014a).

Input: A probability distribution p that is faithful to an unknown AMP CG G.

Output: The EG CG H of the Markov equivalence class of G.

1 Let H denote the complete undirected graph

2 Set l = 0

3 Repeat while l ≤ ∣V ∣ − 2

4

For each ordered pair of nodes A and B in H st A ∈ adH (B) and

∣[adH (A) ∪ adH (adH (A))] ∖ {A, B}∣ ≥ l

5

If there is some S ⊆ [adH (A) ∪ adH (adH (A))] ∖ {A, B} st ∣S∣ = l and A⊥ p B∣S then

6

Set SAB = SBA = S

7

Remove the edge A − B from H

8

Set l = l + 1

9 Apply the rules R1-R4 to H while possible

10 Replace every edge A − B in every cycle in H that is of length greater than three,

chordless, and without blocks with A z

xB

11 Apply the rules R2-R4 to H while possible

12 Replace every edge A z B in H with A → B

13 Replace every edge A z

x B in H with A − B

14 Return H

pointing in the direction of the block. Note that z

x does not mean that the edge must be

undirected: It means that the edge cannot be a directed edge in either direction and, thus,

it must be a bidirected or undirected edge. In the second step (lines 12-17), some edges get

directed. Specifically, the edges with exactly one unblocked end get directed in the direction

of the unblocked end (see line 12). The rest of the edges get bidirected (see line 13), unless

this produces a false triplex (see line 14) or violates the constraint C2 (see lines 15-17). Note

that only cycles of length three are checked for the violation of the constraint C2.

The rules R1-R4 in Table 2 work as follows: If the conditions in the antecedent of a rule

are satisfied, then the modifications in the consequent of the rule are applied. Note that

the ends of some of the edges in the rules are labeled with a circle such as in z

⊸ or ⊸

⊸.

The circle represents an unspecified end, i.e. a block or nothing. The modifications in the

consequents of the rules consist in adding some blocks. Note that only the blocks that appear

in the consequents are added, i.e. the circled ends do not get modified. The conditions in the

antecedents of R1, R2 and R4 consist of an induced subgraph of H and the fact that some of

its nodes are or are not in some separators found in line 6. The condition in the antecedent

of R3 consists of just an induced subgraph of H. Specifically, the antecedent says that there

is a cycle in H whose edges have certain blocks. Note that the cycle must be chordless.

4.1. Related Algorithms. In this section, we review two algorithms for learning AMP CGs

and DAGs that can be seen as particular cases of the algorithm presented above.

4.1.1. Algorithm for Learning AMP CGs. Peña (2014a) presents an algorithm for learning

AMP CGs under the faithfulness assumption. We show next that that algorithm coincides

with the algorithm for learning MAMP CGs in Table 1 when G is an AMP CG. Specifically,

if G is an AMP CG then it only has directed and undirected edges and, thus, any edge A z

xB

in H corresponds to an edge A − B in G. Therefore, line 13 in Table 1 should be modified

accordingly. After this modification, lines 14-17 do not make sense and, thus, they can be

removed. The resulting algorithm can be seen in Table 3. This is exactly the algorithm for

7

Table 4. Rules R1’-R4’.

R1’

A

B

∧ B ∉ SAC

C

⇒

A

B

C

R2’

A

B

∧ B ∈ SAC

C

⇒

A

B

C

R3’

A

...

B

⇒

A

...

B

C

A

R4’

C

⇒

B

A

D

∧ A ∈ SCD

B

D

Table 5. Rules R3” and R4”.

R3”

A

B

C

⇒

A

B

C

C

R4”

A

C

B

D

∧ A ∈ SCD

⇒

A

B

D

learning AMP CGs presented by Peña (2014a), except for lines 10-11. Adding these lines

ensures that the output is the EG of the Markov equivalence class of G and not just a CG in

class (Sonntag and Peña, 2015, Theorem 11).

4.1.2. Algorithm for Learning DAGs. Meek (1995) presents an algorithm for learning DAGs

under the faithfulness assumption. We show next that that algorithm coincides with the

algorithm for learning AMP CGs in Table 3 when G is a DAG.

Firstly, the set of nodes [adH (A) ∪ adH (adH (A))] ∖ {A, B} is considered in lines 4 and 5

so as to guarantee that G and H have the same adjacencies after line 8, as proven in Lemma

1 in the appendix. However, if G is a DAG then it only has directed edges and, thus, the

proof of the lemma simplifies so that it suffices to consider adH (A) ∖ {B} in lines 4 and 5 to

guarantee that G and H have the same adjacencies after line 8. Thus, if G is a DAG then

we can replace [adH (A) ∪ adH (adH (A))] ∖ {A, B} in lines 4 and 5 with adH (A) ∖ {B}.

8

Table 6. Algorithm for learning DAGs presented by Meek (1995).

Input: A probability distribution p that is faithful to an unknown DAG G.

Output: The EG CG H of the Markov equivalence class of G.

1 Let H denote the complete undirected graph

2 Set l = 0

3 Repeat while l ≤ ∣V ∣ − 2

4

For each ordered pair of nodes A and B in H st A ∈ adH (B) and

∣adH (A) ∖ {B}∣ ≥ l

5

If there is some S ⊆ adH (A) ∖ {B} st ∣S∣ = l and A⊥ p B∣S then

6

Set SAB = SBA = S

7

Remove the edge A − B from H

8

Set l = l + 1

9 Apply the rules R1’, R2’, R3” and R4” to H while possible

10 Return H

Secondly, if G is a DAG then it only has directed edges and, thus, H cannot have any edge

Az

x B. Therefore, lines 10, 11 and 13 in Table 3 do not make sense and, thus, they can be

removed. Moreover, if H cannot have any edge A z

x B, then any edge A z

⊸ B in the rules

R1-R4 in Table 2 can be replaced by A z B. For the same reason, any modification of an

edge A ⊸

⊸ B into A z

⊸ B in the rules can be replaced by a modification of an edge A − B

into A z B. These observations together with line 12 in Table 3 imply that the rules R1-R4

can be rewritten as the rules R1’-R4’ in Table 4. After this rewriting, line 12 in Table 3 is

not needed anymore and, thus, it can be removed.

Finally, if G is a DAG, then the rule R3’ in Table 4 does not need to be applied to

cycles of length greater than three. To see it, assume that the rule is applied to the cycle

A → V1 → ⋯ → Vn → B − A in H with n > 1. Recall that the cycle must be chordless. Then,

the rule modifies the edge A − B in H into A → B. This implies that G has an induced

subgraph A → B ← Vn , i.e. G has a triplex ({A, Vn }, B). Clearly, B ∉ SAVn . Then, the edge

A − B in H would have been modified into A → B by the rule R1’ anyway. Therefore, the

rule R3’ does not need to be applied to cycles of length greater than three and, thus, it can

be replaced by the rule R3” in Table 5. Likewise, the rule R4’ in Table 4 can be replaced by

the rule R4” in Table 5. To see it, note that A ∈ SCD implies that G has an induced subgraph

C → A → D, C ← A ← D or C ← A → D. Therefore, if R4’ can be applied but R4” cannot,

then H must have an induced subgraph A → C → B − A or A → D → B − A. Then, the edge

A−B gets modified into A → B by R3”. The resulting algorithm can be seen in Table 6. This

is exactly the algorithm for learning DAGs presented by Meek (1995), except for the names

of the rules.

5. Experiments

In this section, we report the performance of our algorithm on samples drawn from probability distributions that are faithful to MAMP CGs and DAGs. For the latter, we also

report the performance of Meek’s algorithm. We implemented both the algorithms in R. We

implemented the algorithms as they appear in Tables 1 and Table 6 and, thus, we did not

implement any conflict resolution technique such as those discussed by Ramsey et al. (2006),

Cano et al. (2008), and Colombo and Maathuis (2014). The implementation will be made

publicly available. To obtain sample versions of the algorithms, we replaced A ⊥ p B∣S in line

9

G

H

A

B

C

D

E

F

A

A

B

B

C

D

D

LCE

C

SC D

SD F

E

E

F

F

LEF

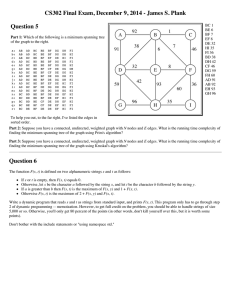

Figure 1. Example of the transformation of a MAMP CG into a DAG.

5 with a hypothesis test. Specifically, we used the default test implemented by the function

ci.test of the R package bnlearn1 with the default significance level 0.05.

5.1. Experiments with MAMP CGs. Since MAMP CGs have not been considered in

practice before, we artificially generated three MAMP CGs for the experiments. To produce

the artificial CG 1, we first produced a graph with 15 nodes and 25 edges such that 20 %

of the edges were directed, 20 % undirected and 60 % bidirected. The edges were generated

uniformly. After removing some edges to satisfy the constraints C1-2 and adding some others

to satisfy the constraint C3, the graph contained 5 directed edges, 4 undirected edges, and

8 bidirected edges. This is what we call artificial CG 1. To produce the artificial CG 2, we

repeated this process but this time 20 % of the initial 25 edges were directed, 60 % undirected

and 20 % bidirected which, after enforcing the constraints C1-3, resulted in the artificial CG

2 having 3 directed edges, 24 undirected edges, and 5 bidirected edges. Finally, we produced

the artificial CG 3 by repeating the process with 60 % of the initial 25 edges being directed,

20 % undirected and 20 % bidirected which, after enforcing the constraints C1-3, resulted in

the artificial CG 3 having 7 directed edges, 4 undirected edges, and 6 bidirected edges.

Since there is no known parameterization of MAMP CGs, in order to sample the artificial

CGs, we first transformed them into DAGs and then sampled these DAGs under marginalization and conditioning as indicated by Peña (2014b). The transformation of a MAMP CG

G into a DAG H is illustrated in Figure 1. First, every node X in G gets a new parent X

representing an error term, which by definition is never observed. Then, every undirected

edge X − Y in G is replaced by X → SX Y ← Y where SX Y denotes a selection bias node,

i.e. a node that is always observed. Finally, every bidirected edge X ↔ Y in G is replaced

by X ← LXY → Y where LXY denotes a latent node, i.e. a node that is never observed.

We parameterized each of the DAGs corresponding to the artificial CGs as follows. All the

nodes represented continuous random variables. Each node was equal to a linear combination

of its parents. The coefficients of the linear combinations were all 1 except in the case of the

selection bias nodes, where one was 1 and the other -1. Parentless nodes followed a Gaussian

probability distribution with mean 0 and standard deviation 1. All this together defined a

Gaussian probability distribution p(V, , L, S) where , L and S denote the error nodes, latent

nodes and selection bias nodes in the DAG (Peña, 2014b). Note that p(V, , L, S) was most

likely to be faithful to the DAG (Spirtes et al., 1993). Then, p(V ∣S) was most likely to be

faithful to the artificial CG corresponding to the DAG (Peña, 2014b). To sample p(V ∣S)

and so obtain the desired sample for our experiments, we used the function cpdist of the R

1www.bnlearn.com

10

Table 7. Results for the artificial CG 1 (15 nodes and 5 directed edges, 4

undirected edges, and 8 bidirected edges).

size

RA

PA

Our algorithm

RT

PT

500

0.72 ± 0.03

0.98 ± 0.04

0.37 ± 0.06

0.90 ± 0.17

1000

0.76 ± 0.03

0.96 ± 0.05

0.45 ± 0.07

0.91 ± 0.14

5000

0.83 ± 0.02

0.98 ± 0.04

0.58 ± 0.04

0.91 ± 0.13

10000

0.84 ± 0.03

0.99 ± 0.03

0.60 ± 0.06

0.96 ± 0.08

50000

0.89 ± 0.04

0.96 ± 0.04

0.67 ± 0.08

0.82 ± 0.12

Table 8. Results for the artificial CG 2 (15 nodes and 3 directed edges, 24

undirected edges, and 5 bidirected edges).

size

RA

PA

Our algorithm

RT

PT

500

0.32 ± 0.04

0.96 ± 0.04

0.13 ± 0.03

0.88 ± 0.12

1000

0.36 ± 0.03

0.99 ± 0.03

0.13 ± 0.02

0.86 ± 0.18

5000

0.45 ± 0.04

0.98 ± 0.03

0.19 ± 0.06

0.80 ± 0.17

10000

0.49 ± 0.05

0.97 ± 0.04

0.25 ± 0.06

0.66 ± 0.14

50000

0.69 ± 0.05

0.99 ± 0.02

0.47 ± 0.06

0.57 ± 0.07

Table 9. Results for the artificial CG 3 (15 nodes and 7 directed edges, 4

undirected edges, and 6 bidirected edges).

size

RA

PA

Our algorithm

RT

PT

500

0.78 ± 0.05

0.99 ± 0.03

0.35 ± 0.08

0.94 ± 0.14

1000

0.82 ± 0.02

1.00 ± 0.00

0.41 ± 0.03

0.99 ± 0.05

5000

0.83 ± 0.03

0.99 ± 0.02

0.42 ± 0.08

0.96 ± 0.11

10000

0.84 ± 0.02

1.00 ± 0.00

0.44 ± 0.07

0.99 ± 0.06

50000

0.92 ± 0.04

1.00 ± 0.02

0.69 ± 0.13

0.98 ± 0.07

package bnlearn, which generates a sample from a probability distribution via probabilistic

logic sampling and then discards the instances in the sample that do not comply with the

evidence provided. In our case, the evidence consisted in instantiating the selections bias

nodes. In particular, we instantiated all the selection bias nodes to the interval [−0.2, 0.2].

This worked fine for the artificial CGs 1 and 3 since they contain only 4 selection bias nodes.

However, it did not work for the artificial CG 2 as it contains 24 selection bias nodes and,

thus, the evidence was so unlikely that most samples were discarded. Therefore, for this

network, we instantiated the selection bias nodes to the interval [−0.9, 0.9]. This means that

the dependencies due to undirected edges are weaker in this case.

From each artificial CG, we obtained 30 samples of size 500, 1000, 5000, 10000 and 50000

as described above. For each sampled size, we ran our algorithm on the corresponding 30

samples and, then, computed the average precision and recall between the adjacencies in the

sampled and learnt graphs, as well as between the triplexes in the sampled and learnt graphs.

We denote the former two measures as PA and RA, and the latter two as PT and RT. We

chose these measures because it is the adjacencies and triplexes what determines the Markov

equivalence class of the MAMP CG learnt (recall Section 3).

Tables 7-9 show the results of our experiments. Broadly speaking, the results are rather

good. The results are worse for the artificial CG 2. This is not surprising since, as explained

above, its undirected edges were weaker. We are currently working on a parameterization for

MAMP CGs that will enable us to sample them directly and solve this problem.

5.2. Experiments with DAGs. In this section, we compare the performance of our algorithm (Table 1) and that by Meek (1995) (Table 6) on samples from probability distributions

that are faithful to DAGs. Therefore, the learning data is tailored to Meek’s algorithm rather

than to ours. Of course, both algorithms should perform similarly on the large sample limit,

11

because DAGs are a subfamily of MAMP CGs. We are however interested in their relative

performance for medium size samples. Since our algorithm tests in line 5 all the separators

that Meek’s algorithm tests and many more, we expect that our algorithm drops more edges

in line 7 and, thus, the edges retained are more likely to be true positive. Therefore, we

expect that our algorithm shows lower recall but higher precision between the adjacencies

in the sampled and learnt graphs. We also expect that our algorithm shows lower recall

between the triplexes in the sampled and learnt graphs because, as discussed before, the

edges involved in a true triplex are more likely to be dropped in our algorithm. Likewise, we

expect that our algorithm shows lower precision between the triplexes in the sampled and

learnt graphs, because dropping a true edge may create a false positive triplex, and this is

more likely to happen in our algorithm. In this section, we try to elucidate the extend of

this decrease in the performance of our algorithm compared to Meek’s. Another way to look

at this question is by noting that, despite the sampled graph belongs to the search spaces

considered by both algorithms, our algorithm considers a much bigger search space, which

implies a larger risk of ending in a suboptimal solution. For instance, the ratio of the numbers

of independence models representable by an AMP or MVR CG and those representable by a

DAG is approximately 7 for 8 nodes, 26 for 11 nodes, and 1672 for 20 nodes (Sonntag and

Peña, 2015). Note that the ratio of the numbers of independence models representable by a

MAMP CG and those representable by a DAG is much larger than the figures given (recall

Example 1). In this section, we try to elucidate the effects that this larger search space has

on the performance of our algorithm.

In the experiments, we considered the following Bayesian networks: Asia (8 nodes and

8 edges), Sachs (11 nodes and 17 edges), Child (20 nodes and 25 edges), Insurance (27

nodes and 52 edges), Mildew (35 nodes and 46 edges), Alarm (37 nodes and 46 edges) and

Barley (48 nodes and 84 edges). All the networks were obtained from the repository at

www.bnlearn.com. All the nodes in these networks represent discrete random variables.

From each network considered, we obtained 30 samples of size 500, 1000, 5000, 10000 and

50000 with the help of the function cpdist of the R package bnlearn. For each sampled size,

we ran our and Meek’s algorithms on the corresponding 30 samples and, then, computed the

average precision and recall between the adjacencies in the sampled and learnt graphs, as

well as between the triplexes in the sampled and learnt graphs. We denote the former two

measures as PA and RA, and the latter two as PT and RT. We chose these measures because

it is the adjacencies and triplexes what determines the Markov equivalence class of the DAG

or MAMP CG learnt (recall Section 3).

Tables 10-16 show the results of our experiments. The entries in bold font correspond to

the cases where the difference in the performance of the algorithms is significant according

to Student’s t-test. For the test, we used the function t.test available in R with a 95 %

confidence interval and parameters alt="two.sided" and var.equal=TRUE. Broadly speaking, the results confirm our expectation of our algorithm scoring lower RA, RT and PT, and

higher PA. However, there are quite a few cases where the results depart from the expectation

and that indicate that our algorithm performs better than expected, specially when it comes

to PT:

● For the Asia network, our algorithm scores comparable or better RT and PT for all

the sample sizes.

● Note that the Sachs network has no triplex and hence the 0.00 ± 0.00 in RT and PT

scored by both algorithms.

● For the Child network, our algorithm scores better RT for the sample size 50000, and

comparable or better PT for the sample sizes 1000-50000.

● For the Insurance network, our algorithm scores comparable RT for the sample sizes

500-5000, and better PT for all the sample sizes.

12

Table 10. Results for the Asia network (8 nodes and 8 directed edges).

size

RA

PA

Our algorithm

RT

PT

RA

PA

Meek’s algorithm

RT

PT

500

0.50 ± 0.00

0.97 ± 0.07

0.25 ± 0.25

0.42 ± 0.46

0.54 ± 0.06

0.84 ± 0.14

0.22 ± 0.25

0.21 ± 0.27

1000

0.52 ± 0.04

0.96 ± 0.08

0.35 ± 0.23

0.63 ± 0.45

0.58 ± 0.06

0.85 ± 0.16

0.37 ± 0.22

0.42 ± 0.33

5000

0.50 ± 0.00

0.99 ± 0.05

0.50 ± 0.00

0.89 ± 0.23

0.67 ± 0.07

0.93 ± 0.10

0.37 ± 0.22

0.51 ± 0.40

10000

0.50 ± 0.00

0.99 ± 0.04

0.50 ± 0.00

0.95 ± 0.15

0.69 ± 0.06

0.93 ± 0.09

0.30 ± 0.25

0.57 ± 0.49

50000

0.62 ± 0.05

0.99 ± 0.03

0.50 ± 0.00

0.90 ± 0.24

0.75 ± 0.00

0.95 ± 0.08

0.43 ± 0.17

0.72 ± 0.37

Table 11. Results for the Sachs network (11 nodes and 17 directed edges).

size

RA

PA

Our algorithm

RT

PT

RA

PA

Meek’s algorithm

RT

PT

500

0.46 ± 0.02

1.00 ± 0.00

0.00 ± 0.00

0.00 ± 0.00

0.73 ± 0.05

0.95 ± 0.05

0.00 ± 0.00

0.00 ± 0.00

1000

0.53 ± 0.04

1.00 ± 0.00

0.00 ± 0.00

0.00 ± 0.00

0.83 ± 0.04

0.99 ± 0.04

0.00 ± 0.00

0.00 ± 0.00

5000

0.65 ± 0.01

1.00 ± 0.00

0.00 ± 0.00

0.00 ± 0.00

0.90 ± 0.04

0.99 ± 0.03

0.00 ± 0.00

0.00 ± 0.00

10000

0.74 ± 0.13

1.00 ± 0.00

0.00 ± 0.00

0.00 ± 0.00

0.88 ± 0.01

1.00 ± 0.02

0.00 ± 0.00

0.00 ± 0.00

50000

0.84 ± 0.03

1.00 ± 0.00

0.00 ± 0.00

0.00 ± 0.00

0.94 ± 0.00

1.00 ± 0.01

0.00 ± 0.00

0.00 ± 0.00

Table 12. Results for the Child network (20 nodes and 25 directed edges).

size

RA

PA

Our algorithm

RT

PT

RA

PA

Meek’s algorithm

RT

PT

500

0.37 ± 0.03

0.98 ± 0.05

0.03 ± 0.08

0.08 ± 0.19

0.72 ± 0.04

0.88 ± 0.06

0.31 ± 0.17

0.20 ± 0.12

1000

0.42 ± 0.03

0.99 ± 0.02

0.18 ± 0.10

0.40 ± 0.23

0.75 ± 0.04

0.89 ± 0.07

0.59 ± 0.20

0.40 ± 0.17

5000

0.55 ± 0.03

1.00 ± 0.00

0.22 ± 0.08

0.49 ± 0.28

0.92 ± 0.00

0.91 ± 0.05

0.77 ± 0.16

0.38 ± 0.12

10000

0.60 ± 0.02

1.00 ± 0.00

0.60 ± 0.00

0.80 ± 0.17

0.92 ± 0.01

0.91 ± 0.05

0.89 ± 0.15

0.52 ± 0.19

50000

0.69 ± 0.02

1.00 ± 0.00

1.00 ± 0.00

0.89 ± 0.19

0.95 ± 0.02

0.87 ± 0.05

0.94 ± 0.12

0.52 ± 0.15

● For the Mildew network, our algorithm scores better RT for the sample size 5000, and

better PT for all the sample sizes.

● For the Alarm network, our algorithm scores better PT for all the sample sizes.

● For the Barley network, our algorithm scores comparable RT for the sample sizes 500

and 50000, and comparable or better PT for all the sample sizes.

Roughly speaking, one could say that our algorithm scores better precision (PA and PT),

whereas Meek’s algorithm scores better recall (RA and RT). Since we do not have any reason

to deem any of the four performance measures in our experiments (i.e. RA, PA, RT and PT)

more important than the others, we can conclude that our algorithm performs relatively well

compared to Meek’s, although Meek’s algorithm is tailored to learning the models sampled

in the experiments whereas our algorithm is tailored to learning much more general models.

Based on our experiments, we therefore recommend to use Meek’s algorithm if it is known

that the model sampled is a DAG. However, if it is known that the model sampled is a MAMP

CG but it is not known whether it is a DAG, then we recommend to use our algorithm: It

13

Table 13. Results for the Insurance network (27 nodes and 52 directed edges).

size

RA

PA

Our algorithm

RT

PT

RA

PA

Meek’s algorithm

RT

PT

500

0.27 ± 0.01

0.96 ± 0.04

0.06 ± 0.03

0.48 ± 0.22

0.41 ± 0.02

0.78 ± 0.05

0.07 ± 0.05

0.15 ± 0.11

1000

0.31 ± 0.02

0.99 ± 0.02

0.13 ± 0.03

0.69 ± 0.18

0.48 ± 0.02

0.80 ± 0.05

0.13 ± 0.06

0.19 ± 0.07

5000

0.38 ± 0.01

1.00 ± 0.00

0.22 ± 0.02

0.72 ± 0.03

0.63 ± 0.02

0.82 ± 0.05

0.24 ± 0.09

0.26 ± 0.11

10000

0.40 ± 0.01

1.00 ± 0.00

0.25 ± 0.00

0.74 ± 0.03

0.69 ± 0.02

0.86 ± 0.05

0.40 ± 0.07

0.41 ± 0.13

50000

0.47 ± 0.01

1.00 ± 0.00

0.28 ± 0.03

0.76 ± 0.04

0.76 ± 0.01

0.86 ± 0.03

0.40 ± 0.07

0.35 ± 0.12

Table 14. Results for the Mildew network (35 nodes and 46 directed edges).

size

RA

PA

Our algorithm

RT

PT

RA

PA

Meek’s algorithm

RT

PT

500

0.07 ± 0.03

0.74 ± 0.11

0.02 ± 0.02

0.46 ± 0.49

0.18 ± 0.03

0.32 ± 0.05

0.04 ± 0.03

0.04 ± 0.03

1000

0.10 ± 0.02

0.78 ± 0.06

0.03 ± 0.02

0.66 ± 0.44

0.16 ± 0.02

0.27 ± 0.04

0.05 ± 0.03

0.03 ± 0.02

5000

0.23 ± 0.02

0.91 ± 0.02

0.08 ± 0.01

0.98 ± 0.08

0.27 ± 0.01

0.42 ± 0.03

0.07 ± 0.02

0.04 ± 0.01

10000

0.33 ± 0.00

0.94 ± 0.00

0.11 ± 0.00

1.00 ± 0.00

0.41 ± 0.00

0.56 ± 0.02

0.12 ± 0.02

0.09 ± 0.02

50000

0.46 ± 0.01

0.96 ± 0.01

0.22 ± 0.01

0.80 ± 0.02

0.59 ± 0.01

0.58 ± 0.01

0.24 ± 0.03

0.16 ± 0.03

Table 15. Results for the Alarm network (37 nodes and 46 directed edges).

size

RA

PA

Our algorithm

RT

PT

RA

PA

Meek’s algorithm

RT

PT

500

0.37 ± 0.03

1.00 ± 0.01

0.11 ± 0.05

0.71 ± 0.19

0.66 ± 0.03

0.83 ± 0.04

0.25 ± 0.06

0.41 ± 0.11

1000

0.45 ± 0.03

1.00 ± 0.00

0.18 ± 0.04

0.74 ± 0.13

0.76 ± 0.02

0.85 ± 0.03

0.31 ± 0.05

0.44 ± 0.09

5000

0.57 ± 0.02

1.00 ± 0.00

0.26 ± 0.04

0.89 ± 0.11

0.91 ± 0.03

0.85 ± 0.04

0.51 ± 0.09

0.56 ± 0.12

10000

0.65 ± 0.03

1.00 ± 0.00

0.34 ± 0.03

0.98 ± 0.04

0.94 ± 0.02

0.84 ± 0.03

0.56 ± 0.13

0.54 ± 0.14

50000

0.71 ± 0.01

1.00 ± 0.00

0.39 ± 0.04

0.99 ± 0.05

0.96 ± 0.01

0.80 ± 0.04

0.50 ± 0.14

0.41 ± 0.14

Table 16. Results for the Barley network (48 nodes and 84 edges).

size

RA

PA

Our algorithm

RT

PT

RA

PA

Meek’s algorithm

RT

PT

500

0.07 ± 0.01

0.75 ± 0.03

0.00 ± 0.00

0.00 ± 0.00

0.19 ± 0.02

0.48 ± 0.05

0.01 ± 0.01

0.02 ± 0.03

1000

0.09 ± 0.01

0.72 ± 0.04

0.02 ± 0.01

0.62 ± 0.39

0.26 ± 0.03

0.53 ± 0.05

0.03 ± 0.02

0.08 ± 0.04

5000

0.23 ± 0.01

0.84 ± 0.03

0.04 ± 0.01

0.65 ± 0.18

0.38 ± 0.01

0.65 ± 0.03

0.08 ± 0.01

0.20 ± 0.03

10000

0.23 ± 0.01

0.82 ± 0.03

0.04 ± 0.00

0.65 ± 0.09

0.39 ± 0.02

0.61 ± 0.03

0.06 ± 0.02

0.13 ± 0.03

50000

0.34 ± 0.00

0.99 ± 0.03

0.12 ± 0.01

0.60 ± 0.09

0.50 ± 0.01

0.65 ± 0.03

0.12 ± 0.03

0.16 ± 0.05

14

guarantees reasonable performance if the model sampled is really a DAG while it accommodates the possibility that the model sampled is a more general MAMP CG. We believe that

this is an interesting trade-off.

6. Discussion

MAMP CGs are a recently introduced family of models that is based on graphs that may

have undirected, directed and bidirected edges. They unify and generalize AMP and MVR

CGs. In this paper, we have presented an algorithm for learning a MAMP CG from a

probability distribution p which is faithful to it. We have also proved that the algorithm is

correct. The algorithm consists of two phases: The first phase aims at learning adjacencies,

whereas the second phase aims at directing some of the adjacencies learnt by applying some

rules. It is worth mentioning that, whereas the rules R1, R2 and R4 only involve three or four

nodes, the rule R3 may involve more. Unfortunately, we have not succeeded so far in proving

the correctness of our algorithm with a simpler R3. Note that the output of our algorithm

would be the same. The only benefit might be a decrease in running time. Finally, we have

implemented and evaluated our algorithm. The evaluation has shown that our algorithm

performs well.

6.1. A Note on the Faithfulness Assumption. The correctness of our algorithm relies

upon the assumption that p is faithful to some MAMP CG. This is a strong requirement that

we would like to weaken, e.g. by replacing it with the milder assumption that p satisfies the

composition property. Specifically, p satisfies the composition property when X ⊥ p Y ∣Z ∧ X ⊥

p W ∣Z ⇒ X ⊥ p Y ∪ W ∣Z for all X, Y , Z and W pairwise disjoint subsets of V . Note that if p

is a Gaussian distribution, then it satisfies the composition property regardless of whether it

is faithful or not to some MAMP CG (Studený, 2005, Corollary 2.4).

When assuming faithfulness is not reasonable, the correctness of a learning algorithm may

be redefined as follows. Given a MAMP CG G, we say that p is Markovian with respect to

G when X ⊥ p Y ∣Z if X ⊥ G Y ∣Z for all X, Y and Z pairwise disjoint subsets of V . We say that

a learning algorithm is correct when it returns a MAMP CG H such that p is Markovian

with respect to H and p is not Markovian with respect to any MAMP CG F such that

I(H) ⊂ I(F ).

Correct algorithms for learning DAGs and LWF CGs under the composition property

assumption exist (Chickering and Meek, 2002; Nielsen et al., 2003; Peña et al., 2014). The

way in which these algorithms proceed (i.e. score+search based approach) is rather different

from that of the algorithm presented in this paper (i.e. constraint based approach). In a

nutshell, they can be seen as consisting of two phases: A first phase that starts from the

empty graph H and adds single edges to it until p is Markovian with respect to H, and a

second phase that removes single edges from H until p is Markovian with respect to H and

p is not Markovian with respect to any graph F such that I(H) ⊂ I(F ). The success of the

first phase is guaranteed by the composition property assumption, whereas the success of the

second phase is guaranteed by the so-called Meek’s conjecture (Meek, 1997). Specifically,

given two DAGs F and H such that I(H) ⊆ I(F ), Meek’s conjecture states that we can

transform F into H by a sequence of operations such that, after each operation, F is a DAG

and I(H) ⊆ I(F ). The operations consist in adding a single edge to F , or replacing F with

a triplex equivalent DAG. Meek’s conjecture was proven to be true by Chickering (2002,

Theorem 4). The extension of Meek’s conjecture to LWF CGs was proven to be true by Peña

et al. (2014, Theorem 1). The extension of Meek’s conjecture to AMP and MVR CGs was

proven to be false by Peña (2014a, Example 1) and Sonntag and Peña (2015), respectively.

Unfortunately, the extension of Meek’s conjecture to MAMP CGs does not hold either, as

the following example illustrates.

15

Example 2. The MAMP CGs F and H below show that the extension of Meek’s conjecture

to MAMP CGs does not hold.

A

B

A

B

A

B

C

D

E C

D

E C

D

E

F

H

F′

We can describe I(F ) and I(H) by listing all the separators between any pair of distinct

nodes. We indicate whether the separators correspond to F or H with a superscript. Specifically,

F

F

F

F

● SAD

= SBE

= SCD

= SDE

= ∅,

F

● SAB = {∅, {C}, {D}, {E}, {C, D}, {C, E}},

F

● SAC

= {∅, {B}, {E}, {B, E}},

F

● SAE

= {∅, {B}, {C}, {B, C}},

F

● SBC = {∅, {A}, {D}, {A, D}, {A, D, E}},

F

● SBD

= {∅, {A}, {C}, {A, C}}, and

F

● SCE = {{A, D}, {A, B, D}}.

Likewise,

H

H

H

H

H

● SAD

= SBD

= SBE

= SCD

= SDE

= ∅,

H

● SAB = {∅, {C}, {E}, {C, E}},

H

● SAC

= {∅, {B}, {E}, {B, E}},

H

● SAE = {∅, {B}, {C}, {B, C}},

H

● SBC

= {{A, D}, {A, D, E}}, and

H

● SCE = {{A, D}, {A, B, D}}.

H

F

Then, I(H) ⊆ I(F ) because SXY

⊆ SXY

for all X, Y ∈ {A, B, C, D, E} with X ≠ Y . Moreover, the MAMP CG F ′ above is the only MAMP CG that is triplex equivalent to F , whereas

there is no MAMP CG that is triplex equivalent to H. Obviously, one cannot transform F or

F ′ into H by adding a single edge.

While the example above compromises the development of score+search learning algorithms

that are correct and efficient under the composition property assumption, it is not clear to us

whether it also does it for constraint based algorithms. This is something we plan to study.

Acknowledgments

We thank the Reviewers and Editors for their comments, which have helped us to improve

this work. We thank Dag Sonntag for pointing out a mistake in the experimental setting of

an earlier version of this paper. The first author is supported by the Center for Industrial

Information Technology (CENIIT) and a so-called career contract at Linköping University,

and by the Swedish Research Council (ref. 2010-4808). The second author is supported by

the Spanish Ministry of Economy and Competitiveness under project TIN2013-46638-C3-2-P

and by the European Regional Development Fund (FEDER).

Appendix: Proof of Correctness

This appendix is devoted to prove that the algorithm for learning MAMP CGs in Table 1

is correct. We start by proving some auxiliary results.

Lemma 1. After line 8, G and H have the same adjacencies.

Proof. Consider any pair of nodes A and B in G. If A ∈ adG (B), then A ⊥/ p B∣S for all

S ⊆ V ∖ {A, B} by the faithfulness assumption. Consequently, A ∈ adH (B) at all times.

On the other hand, if A ∉ adG (B), then A⊥ p B∣paG (A) or A⊥ p B∣neG (A) ∪ paG (A ∪ neG (A))

(Peña, 2014b, Theorem 5). Note that, as shown before, neG (A)∪paG (A∪neG (A)) ⊆ [adH (A)∪

16

adH (adH (A))]∖{A, B} at all times. Therefore, there exists some S in line 5 such that A⊥ p B∣S

and, thus, the edge A − B will be removed from H in line 7. Consequently, A ∉ adH (B) after

line 8.

Lemma 2. The rules R1-R4 block the end of an edge only if the edge is not a directed edge

in G pointing in the direction of the block.

Proof. According to the antecedent of R1, G has a triplex ({A, C}, B). Then, G has an

induced subgraph of the form A ⊸

←B←

⊸ C, A ⊸

← B − C or A − B ←

⊸ C. In either case, the

consequent of R1 holds.

According to the antecedent of R2, (i) G does not have a triplex ({A, C}, B), (ii) A ⊸

←B

or A − B is in G, (iii) B ∈ adG (C), and (iv) A ∉ adG (C). Then, B → C or B − C is in G. In

either case, the consequent of R2 holds.

According to the antecedent of R3, (i) G has a path from A to B with no directed edge

pointing in the direction of A, and (ii) A ∈ adG (B). Then, A ← B cannot be in G because G

has no semidirected cycle. Then, the consequent of R3 holds.

According to the antecedent of R4, neither B → C nor B → D are in G. Assume to the

contrary that A ← B is in G. Then, G must have an induced subgraph of one of the following

forms:

C

A

C

B

D

A

C

B

D

A

C

B

D

A

B

D

because, otherwise, G has a semidirected cycle. However, either case contradicts that

A ∈ SCD .

Lemma 3. After line 11, H has a block at the end of an edge only if the edge is not a directed

edge in G pointing in the direction of the block.

Proof. In Lemma 2, we have proved that any of the rules R1-R4 blocks the end of an edge

only if the edge is not a directed edge in G pointing in the direction of the block. Of course,

for this to hold, every block in the antecedent of the rule must be on the end of an edge that

is not a directed edge in G pointing in the direction of the block. This implies that, after line

9, H has a block at the end of an edge only if the edge is not a directed edge in G pointing

in the direction of the block, because H has no blocks before line 9. However, to prove that

this result also holds after line 11, we have to prove that line 10 blocks the end of an edge in

H only if the edge is not a directed edge in G pointing in the direction of the block. To do

so, consider any cycle ρH in H that is of length greater than three, chordless, and without

blocks. Let ρG denote the cycle in G corresponding to the sequence of nodes in ρH . Note

that no edge in ρH can be directed or bidirected in ρG because, otherwise, a subroute of the

form A ⊸

←B←

⊸ C or A ⊸

← B − C exists in ρG since G has no directed cycle. This implies

that G contains a triplex ({A, C}, B) because A and C cannot be adjacent in G since ρG is

chordless, which implies that A z

⊸B⊸

z C is in H by R1 in line 9, which contradicts that ρH

has no blocks. Therefore, every edge in ρH is undirected in ρG and, thus, line 10 blocks the

end of an edge in H only if the edge is not a directed edge in G pointing in the direction of

the block.

Lemma 4. After line 11, H does not have any induced subgraph of the form

A

B

C

.

Proof. Assume to the contrary that the lemma does not hold. We interpret the execution

of lines 9-11 as a sequence of block addings and, for the rest of the proof, one particular

sequence of these block addings is fixed. Fixing this sequence is a crucial point upon which

some important later steps of the proof are based. Since there may be several induced

subgraphs of H of the form under study after lines 9-11, let us consider any of the induced

17

subgraphs A B C that appear firstly during execution of lines 9-11 and fix it for the

rest of the proof. Now, consider the following cases.

Case 0: Assume that A z

x B is in H due to line 10. Then, after line 10, H had

an induced subgraph of one of the following forms, where possible additional edges

between C and internal nodes of the route A z

x ... z

x D are not shown:

A

B

C

A

...

B

C

...

D

case 0.1

D

case 0.2

Note that C cannot belong to the route A z

⊸ ... z

⊸ D because, otherwise, the cycle

Az

⊸ ... z

⊸Dz

⊸ B ⊸ A would not have been chordless.

Case 0.1: If B ∉ SCD then B ⊸

z C is in H by R1, else B z

⊸ C is in H by R2.

Either case is a contradiction.

Case 0.2: Recall from line 10 that the cycle A z

x ... z

xDz

xBz

x A is of length

greater than three and chordless, which implies that there is no edge between A

and D in H. Thus, if C ∉ SAD then A z

⊸ C is in H by R1, else B ⊸

z C is in H

by R4. Either case is a contradiction.

Case 1: Assume that A z

⊸ B is in H due to R1. Then, after R1 was applied to A ⊸

⊸ B,

H had an induced subgraph of one of the following forms:

A

B

C

A

D

case 1.1

B

C

D

case 1.2

Case 1.1: If B ∉ SCD then B ⊸

z C is in H by R1, else B z

⊸ C is in H by R2.

Either case is a contradiction.

Case 1.2: If C ∉ SAD then A z

⊸ C is in H by R1, else B ⊸

z C is in H by R4.

Either case is a contradiction.

Case 2: Assume that A z

⊸ B is in H due to R2. Then, after R2 was applied to A ⊸

⊸ B,

H had an induced subgraph of one of the following forms:

A

B

D

C

A

B

D

case 2.1

C

A

B

D

case 2.2

C

A

B

C

D

case 2.3

case 2.4

Case 2.1: If A ∉ SCD then A ⊸

z C is in H by R1, else A z

⊸ C is in H by R2. Either

case is a contradiction.

Case 2.2: Note that D A C cannot be an induced subgraph of H after lines

9-11 because, otherwise, it would contradict the assumption that A B C

is one of the firstly induced subgraph of that form that appeared during the

execution of lines 9-11. Then, A z

⊸ C, A x C, D ⊸

z C or D z C must be in H

after lines 9-11. However, either of the first two cases is a contradiction. The third

case can be reduced to Case 2.3 as follows. The fourth case can be reduced to

Case 2.4 similarly. The third case implies that the block at C in D ⊸

z C is added

at some moment in the execution of lines 9-11. This moment must happen later

than immediately after adding the block at A in A z

⊸ B, because immediately

after adding this block the situation is the one depicted by the above figure for

Case 2.2. Then, when the block at C in D ⊸

z C is added, the situation is the one

depicted by the above figure for Case 2.3.

18

Case 2.3: Assume that the situation of this case occurs at some moment in the

z C is in H by R3 after lines 9-11, which is a

execution of lines 9-11. Then, A ⊸

contradiction.

Case 2.4: Assume that the situation of this case occurs at some moment in the

execution of lines 9-11. If C ∉ SBD then B z

⊸ C is in H by R1 after lines 9-11,

else B ⊸

z C is in H by R2 after lines 9-11. Either case is a contradiction.

Case 3: Assume that A z

⊸ B is in H due to R3. Then, after R3 was applied to A ⊸

⊸ B,

H had a subgraph of one of the following forms, where possible additional edges

between C and internal nodes of the route A z

⊸ ... z

⊸ D are not shown:

A

B

...

D

case 3.1

C

A

B

...

D

case 3.2

C

A

B

...

D

case 3.3

C

A

B

C

...

D

case 3.4

Note that C cannot belong to the route A z

⊸ ... z

⊸ D because, otherwise, R3 could

not have been applied since the cycle A z

⊸ ... z

⊸Dz

⊸ B ⊸ A would not have been

chordless.

Case 3.1: If B ∉ SCD then B ⊸

z C is in H by R1, else B z

⊸ C is in H by R2.

Either case is a contradiction.

Case 3.2: Note that D B C cannot be an induced subgraph of H after lines

9-11 because, otherwise, it would contradict the assumption that A B C

is one of the firstly induced subgraph of that form that appeared during the

execution of lines 9-11. Then, B z

⊸ C, B x C, D ⊸

z C or D z C must be in H

after lines 9-11. However, either of the first two cases is a contradiction. The third

case can be reduced to Case 3.3 as follows. The fourth case can be reduced to

Case 3.4 similarly. The third case implies that the block at C in D ⊸

z C is added

at some moment in the execution of lines 9-11. This moment must happen later

than immediately after adding the block at A in A z

⊸ B, because immediately

after adding this block the situation is the one depicted by the above figure for

Case 3.2. Then, when the block at C in D ⊸

z C is added, the situation is the one

depicted by the above figure for Case 3.3.

Case 3.3: Assume that the situation of this case occurs at some moment in the

execution of lines 9-11. Then, B ⊸

z C is in H by R3 after lines 9-11, which is a

contradiction.

Case 3.4: Assume that the situation of this case occurs at some moment in the

execution of lines 9-11. Note that C is not adjacent to any node of the route A z

⊸

... z

⊸ D besides A and D. To see it, assume to the contrary that C is adjacent

to some nodes E1 , . . . , En ≠ A, D of the route A z

⊸ ... z

⊸ D. Assume without

loss of generality that Ei is closer to A in the route than Ei+1 for all 1 ≤ i < n.

Now, note that En z

⊸ C must be in H by R3 after lines 9-11. This implies that

En−1 z

⊸ C must be in H by R3 after lines 9-11. By repeated application of this

argument, we can conclude that E1 z

⊸ C must be in H after lines 9-11 and, thus,

Az

⊸ C must be in H by R3 after lines 9-11, which is a contradiction. Therefore,

if C is not adjacent to any node of the route A z

⊸ ... z

⊸ D besides A and D,

then the cycle A z

⊸ ... z

⊸ D z C − A is chordless and, thus, A z

⊸ C must be in

H by R3 after lines 9-11, which is a contradiction.

⊸ B,

Case 4: Assume that A z

⊸ B is in H due to R4. Then, after R4 was applied to A ⊸

H had an induced subgraph of one of the following forms:

19

A

B

C

A

B

C

A

B

C

A

B

C

D

D

D

D

E

case 4.1

E

case 4.2

E

case 4.3

E

case 4.4

Cases 4.1-4.3: If B ∉ SCD or B ∉ SCE then B ⊸

z C is in H by R1, else B z

⊸ C is

in H by R2. Either case is a contradiction.

Case 4.4: Assume that C ∈ SDE . Then, B ⊸

z C is in H by R4, which is a contradiction. On the other hand, assume that C ∉ SDE . Then, it follows from applying

R1 that H has an induced subgraph of the form

A

B

C

D

E

Note that A ∈ SDE because, otherwise, R4 would not have been applied. Then,

Az

⊸ C is in H by R4, which is a contradiction.

Lemma 5. After line 11, every chordless cycle ρ ∶ V1 , . . . , Vn = V1 in H that has an edge

Vi z Vi+1 also has an edge Vj x Vj+1 .

Proof. Assume for a contradiction that ρ is of the length three such that V1 z V2 occur

and neither V2 x V3 nor V1 z V3 occur. Note that V2 z

x V3 cannot occur either because,

otherwise, V1 z

⊸ V3 must occur by R3. Since V1 z V3 contradicts the assumption, then

V1 z

x V3 must occur. However, this implies that V1 z

x V2 must occur by R3, which contradicts

the assumption. Similarly, V1 z

x V3 cannot occur either. Then, ρ is of one of the following

forms:

V1

V2

V3

V1

V2

V3

V1

V2

V3

The first form is impossible by Lemma 4. The second form is impossible because, otherwise,

V2 ⊸

z V3 would occur by R3. The third form is impossible because, otherwise, V1 z V3 would

be occur by R3. Thus, the lemma holds for cycles of length three.

Assume for a contradiction that ρ is of length greater than three and has an edge Vi z Vi+1

but no edge Vj x Vj+1 . Note that if Vl z

⊸ Vl+1 ⊸

⊸ Vl+2 is a subroute of ρ, then either Vl+1 z

⊸ Vl+2

or Vl+1 x Vl+2 is in ρ by R1 and R2. Since ρ has no edge Vj x Vj+1 , Vl+1 z

⊸ Vl+2 is in ρ. By

repeated application of this reasoning together with the fact that ρ has an edge Vi z Vi+1 , we

can conclude that every edge in ρ is Vk z

⊸ Vk+1 . Then, by repeated application of R3, observe

that every edge in ρ is Vk z

x Vk+1 , which contradicts the assumption.

Lemma 6. If H has an induced subgraph of the form

induced subgraph must actually be of the form

A

B

A

C

B

,

after line 11, then the

C

A

B

Proof. Lemmas 4 and 5 together with R3 rule out any other possibility.

C

or

A

B

C

.

Lemma 7. All the undirected edges in H at line 18 that are of the form z

x after line 11 are

undirected edges in G.

Proof. The undirected edges in H at line 18 that are of the form z

x after line 11 are those

added to H in lines 14 and 16. We first prove that the undirected edges added to H in line

20

14 are undirected edges in G. Any undirected edges A − B and B − C added to H in line 14

imply that H has an induced subgraph A z

xBz

x C with B ∈ SAC after line 11, which implies

that (i) A and B as well as B and C are adjacent in G whereas A and C are not adjacent in

G by Lemma 1, and (ii) G has no directed edge between A and B or B and C by Lemma 3.

Then, A − B − C must be in G because B ∈ SAC .

We now prove that the undirected edges added to H in line 16 are undirected edges in G.

As shown in the paragraph above, the result holds after having executed line 16 zero times.

Assume as induction hypothesis that the result also holds after having executed line 16 n

times. When line 16 is executed for the (n + 1)-th time, H has an induced subgraph of the

form A B C . This implies that H has an induced subgraph of the form A B C

after line 11, which implies that the induced subgraph is actually of the form A B C

by Lemma 6. Then, the undirected edges B − C and A − C have been added to H in previous

executions of lines 14 and 16. Then, B − C and A − C are in G by the induction hypothesis

and, thus, A − B must be in G too due to the constraint C2. Consequently, the desired result

holds after having executed line 16 n + 1 times.

Lemma 8. At line 18, G and H have the same triplexes.

Proof. We first prove that any triplex in H at line 18 is in G. Assume to the contrary that

H at line 18 has a triplex ({A, C}, B) that is not in G. This is possible if and only if H has

an induced subgraph of one of the following forms after line 11:

A

B

C

A

B

C

A

B

C

A

B

C

A

B

C

Note that the induced subgraphs above together with Lemma 1 imply that A is adjacent

to B in G, B is adjacent to C in G, and A is not adjacent to C in G. This together with

the assumption made above that G has no triplex ({A, C}, B) implies that B ∈ SAC . Now,

note that the first and third induced subgraphs above are impossible because, otherwise,

A⊸

z B would be in H by R2. Likewise, the second and fourth induced subgraphs above are

impossible because, otherwise, B z

⊸ C would be in H by R2. Now, note that any triplex that

is added to H in line 13 due to the fifth induced subgraph above is removed from H in line

14 because, as shown above, B ∈ SAC . Finally, note that no triplex is added to H in lines

15-17.

We now prove that any triplex ({A, C}, B) in G is in H at line 18. Note that B ∉ SAC .

Consider the following cases.

Case 1: Assume that the triplex in G is of the form A → B ⊸

⊸ C (respectively A ⊸

⊸

B ← C). Then, after line 11, A z B ⊸

z C (respectively A z

⊸ B x C) is in H by

Lemmas 1 and 3. Then, the triplex is added to H in lines 12-13. Moreover, the

triplex added is of the form A → B ⊸

⊸ C (respectively A ⊸

⊸ B ← C) and, thus, it

does not get removed from H in lines 14-17, because all these lines do is replacing

bidirected edges in H with undirected edges.

⊸ C or A ⊸

⊸ B ↔ C.

Case 2: Assume that the triplex in G is of the form A ↔ B ⊸

Then, after line 11, A z

⊸B⊸

z C is in H by Lemmas 1 and 3. Then, the triplex is

added to H in lines 12-13. Moreover, the triplex cannot get removed from H in lines

14-17. To see it, consider the following cases.

Case 2.1: Assume that the subgraph A z

⊸B⊸

z C in H after line 11 is actually of

the form A z B ⊸

z C or A z

⊸ B x C. Then, the triplex does not get removed

from H in lines 14-17, because all these lines do is replacing bidirected edges in

H with undirected edges.

Case 2.2: Assume that the subgraph A z

⊸B⊸

z C in H after line 11 is actually of

the form A z

xBz

x C. Note that all lines 14-17 do is replacing bidirected edges

in H with undirected edges. Therefore, the triplex gets removed from H only if

21

A − B − C is in H at line 18. However, this implies that A − B − C is in G by

Lemma 7, which is a contradiction.

It is worth noting that one may think that Lemma 4 implies that H does not have any

induced subgraph of the form A B C after line 14 and, thus, that lines 15-17 are not

needed. However, this is wrong as the following example illustrates.

Example 3. The MAMP CG G below shows that lines 15-17 are necessary.

A

C

D

B

F

E

G

I

A

C

D

B

F

E

H after line 11

I

A

C

D

B

F

E

I

H after line 14

We are now ready prove the correctness of our algorithm.

Theorem 1. At line 18, H is a MAMP CG that is Markov equivalent to G.

Proof. First, note that Lemmas 1 and 8 imply that H at line 18 has the same adjacencies

and triplexes as G.

Now, we show that H at line 18 satisfies the constraint C1. Lemma 5 implies that H has no

semidirected chordless cycle after line 13. This implies that H has no semidirected chordless

cycle at line 18, because all lines 14-17 do is replacing bidirected edges in H with undirected

edges. To see that this in turn implies that H has no semidirected cycle at line 18, assume

to the contrary that H has no semidirected chordless cycle but it has a semidirected cycle

ρ ∶ V1 , . . . , Vn = V1 with a chord between Vi and Vj with i < j. Then, divide ρ into the cycles

ρL ∶ V1 , . . . , Vi , Vj , . . . , Vn = V1 and ρR ∶ Vi , . . . , Vj , Vi . Note that ρL or ρR is a semidirected

cycle. Then, H has a semidirected cycle that is shorter than ρ. By repeated application

of this reasoning, we can conclude that H has a semidirected chordless cycle, which is a

contradiction.

Now, we show that H at line 18 satisfies the constraint C2. Assume to the contrary that H

has a cycle ρ ∶ V1 , . . . , Vn = V1 such that V1 ↔ V2 is in H and Vi − Vi+1 is in H for all 1 < i < n.

Note that ρ must be of length greater than three by lines 15-17, i.e. n > 3. Note also that

that V1 ↔ V2 is in H at line 18 implies that V1 z

x V2 is in H at line 11. Consider the following

cases.

Case 1: Assume that V1 and V3 are not adjacent in H at line 18. Then, V2 z

⊸ V3 or

V2 ⊸

z V3 is in H after line 11 by R1 or R2. In fact, V2 z

x V3 must be in H after line

11 because, otherwise, V2 → V3 or V2 ← V3 is in H at line 18, which is a contradiction.

Case 2: Assume that V1 and V3 are adjacent in H at line 18. That V2 − V3 is in H at

line 18 implies that V1 z

x V2 z

x V3 or V1 z

x V2 − V3 is in H at line 11. In fact, V2 z

x V3

must be in H after line 11 by Lemma 6.

In either case above, V2 z

x V3 is in H after line 11 and, thus, V2 −V3 is in G by Lemma 7. By

repeated application of this argument, we can conclude that Vi − Vi+1 is in G for all 2 < i < n,

which implies that V1 − V2 is also in G by the constraints C1 and C2. This implies that V1

and V3 are adjacent in G because, otherwise, G and H have not the same triplexes, which

contradicts Lemma 8. Then, V1 and V3 are adjacent in H by Lemma 1. In fact, V1 ↔ V3 must

be in H because, otherwise, H has a cycle of length three that violates the constraint C1 or

C2 which, as shown above, is a contradiction. Then, H has a cycle that violates the constraint

C2 and that is shorter than ρ, namely V1 , V3 , . . . , Vn = V1 . By repeated application of this

reasoning, we can conclude that H has a cycle of length three that violates the constraint C2

which, as shown above, is a contradiction.

Finally, we show that H at line 18 satisfies the constraint C3. Assume to the contrary

that, at line 18, V1 − V2 − V3 is in H, V2 ↔ V4 is in H, but V1 − V3 is not in H. We show below

22

that G (respectively H at line 18) has the graph to the left (respectively right) below as an

induced subgraph.

V4

V1

V2

V4

V3

V1

V2

V3

That V1 − V2 − V3 is in H at line 18 but V1 − V3 is not implies that V1 and V3 cannot be

adjacent in H because, otherwise, H violates the constraint C1 or C2 which, as shown above,

is a contradiction. This implies that V1 and V3 are not adjacent in G either by Lemma 1.

Consider the following cases.

Case 1: Assume that V1 and V4 are not adjacent in H at line 18. That V2 ↔ V4 is in

H at line 18 implies that V2 z

x V4 is in H after line 11 and, thus, that V1 z

⊸ V2 or

V1 ⊸

z V2 is in H after line 11 by R1 or R2. In fact, V1 z

x V2 must be in H after line

11 because, otherwise, V1 → V2 or V1 ← V2 is in H at line 18, which is a contradiction.

Then, V1 − V2 is in G by Lemma 7.

Case 2: Assume that V1 and V4 are adjacent in H at line 18. That V1 − V2 ↔ V4 is in

H at line 18 implies that V1 z

x V2 z

x V4 or V1 − V2 z

x V4 is in H after line 11. In fact,

V1 z

x V2 must be in H after line 11 by Lemma 6. Then, V1 − V2 is in G by Lemma 7.

In either case above, V1 − V2 is in G. Likewise, V2 − V3 is in G. That V2 ↔ V4 is in H at line

18 implies that V2 z

x V4 is in H after line 11, which implies that V2 − V4 or V2 ↔ V4 is in G by

Lemmas 1 and 3. In fact, V2 − V4 must be in G because, otherwise, G violates the constraint

C3 since, as shown above, V1 − V2 − V3 is in G but V1 − V3 is not. Finally, note that V1 and V4

as well as V3 and V4 must be adjacent in G and H because, otherwise, H at line 18 does not

have the same triplexes as G, which contradicts Lemma 8. Specifically, V1 − V4 − V3 must be

in G and V1 ↔ V4 ↔ V3 must be in H because, otherwise, G or H violates the constraint C1

or C2 which, as shown above, is a contradiction.

However, that G (respectively H at line 18) has the graph to the left (respectively right)

above as an induced subgraph implies that H has a triplex ({V1 , V3 }, V4 ) that G has not,

which contradicts Lemma 8. Then, V1 and V3 must be adjacent in H which, as shown above,

is a contradiction.

Theorem 2. At line 18, H is the EG of the Markov equivalence class of G.

Proof. Let K denote the graph that contains all and only the edges in H at line 18 that have

a block in H after line 11, and let U denote the graph that contains the rest of the edges in

H at line 18. Note that every edge in K is undirected, directed or bidirected, whereas every

edge in U is undirected. Note also that the edges in U correspond to the edges without blocks

in H after line 11. Therefore, U has no cycle of length greater than three that is chordless

by line 10. In other words, U is chordal. Then, we can orient all the edges in U without

creating triplexes nor directed cycles by using, for instance, the maximum cardinality search

algorithm (Koller and Friedman, 2009, p. 312). Consider any such orientation of the edges