SLMS Pseudonymisation Rules


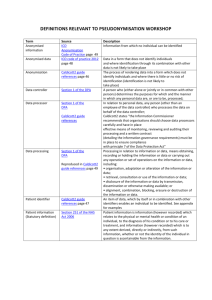

LONDON’S GLOBAL UNIVERSITY

1.0 Document Information
Document Name: SLMS-IG14 Pseudonymisation Rules
Author: Shane Murphy
Issue Date: 02/08/2013
Approved by: Chair of SLMS IGSG
Next review: Three years

2.0 Document History
Version 0.1, 17/06/2013: First draft for discussion
Version 0.2, 28/06/2013: Revision with comments from IDHS Steering Group members
Version 0.3, 23/07/2013: Incorporated revisions from Alice Garrett and Shane Murphy
Version 1.0, 02/08/2013: Approved by Chair of SLMS IGSG

SLMS-IG14 Pseudonymisation Rules v1.0 Page 1 of 22

Contents
1. Introduction
1.1. Identifying Data
2. External Disclosure and Publication
3. Data Storage
4. Rules for Applying Pseudonym Creation Techniques Within the SLMS
10.1 Re-identification as Part of an Exceptional Event
5. Data Transfer and Access
Appendix 1: Pseudonymisation, Anonymisation and UK law
Appendix 2: De-identification techniques
Appendix 3: Pseudonym Creation Techniques
Appendix 4: Applicability of Department of Health NHS Guidance
Appendix 5: Safe Haven Requirements
Appendix 6: Privacy Impact Assessment
Appendix 7: Glossary

1. Introduction
Research projects within the SLMS receive information from NHS partners and other third-party organisations and must satisfy NHS requirements, UK law and other information governance requirements that ensure sensitive personal data is safeguarded. This document sets out rules for protecting sensitive information when it is pseudonymised by research studies within the SLMS. These rules are based upon best practice and ISO/TS 25237:2008 [1]; the intention is to facilitate efficient management of pseudonymised information while ensuring the security and confidentiality of that data.

The UK laws governing personal identifiable data are the Common Law Duty of Confidentiality, the Data Protection Act 1998 and the Human Rights Act 1998. These laws require organisations to protect the confidentiality of data to prevent identification of individuals; an overview of how they are currently interpreted is contained in Appendix 1. Conformance to the NHS Information Governance (IG) Toolkit, Department of Health and UK legislation requirements is documented in Appendix 4.

The central objective for using pseudonymisation within the SLMS is to mitigate legal, reputational and financial risk by preventing the unauthorised or accidental disclosure of personal data about a healthcare data subject.

1.1. Identifying Data
There is a need to distinguish between identifying and non-identifying data because the use of identifying data for purposes not associated with direct healthcare provision requires the explicit consent of the individual.
Consequently, the publication of identifying data is generally prohibited under UK law, and the data must be manipulated to turn identifying data into non-identifying data. The table below shows the hierarchy of identifying and non-identifying data when published, reflecting the degree to which the confidentiality and privacy of individuals is protected.

Table 1: Hierarchy of identifying and non-identifying data
Safe as possible: Non-identifying data (anonymous)
• Fully anonymous (effectively anonymised) data
• Coded anonymous (pseudonymised) data
Moderately safe: Identifying data (personal data)
• Coded but coding insecure or aggregation level too low
Unsafe: Identifying data (personal data)
• Indirectly identifiable data: not coded but aggregation too low
• Directly identifiable data

[1] See ‘SLMS-IG14 Health Informatics - Pseudonymisation Overview’ for full details.

To be as safe as possible, non-identifying data must be effectively anonymised. Pseudonymised data is more prone to a re-identification attack because it retains quasi-identifiers. The guidance contained in this document explains the risks associated with the use of pseudonymisation and anonymisation techniques and why disclosure and access controls are effective.

2. External Disclosure and Publication
Data released into the public domain should contain little or no identifying data to ensure that nothing of a confidential nature is revealed. For this reason it is recommended that Principal Investigators (PIs) endeavour to effectively anonymise data where possible. A significant number of data fields have been identified as sensitive because of their potential to identify individual research subjects. The data fields in Table 2 below have been highlighted within the NHS as holding sensitive data that may potentially be used to re-identify individuals.
Within the SLMS, staff must ensure that the measures detailed below are followed before these data fields are disclosed to external parties. PIs must consider:
• The context of disclosure to a wider audience
• The need to control that disclosure through the use of contracts and data sharing agreements (where possible, contracts should restrict the audience for research data to those with a need to know and enforce legal obligations that limit usage).

Privacy Impact Assessments (PIAs) should be considered for the publication of any research data; they assist PIs in assessing re-identification risks and introducing mitigation measures.[2] The PI should undertake the PIA at the commencement of the study.

Principal Investigators should be conscious of the need to restrict access to datasets for staff and external parties on a ‘need to know’ basis, and can use system-based access controls such as those applied in the Identifiable Data Handling System (IDHS). The end goal of pseudonymisation is to ensure de-identification of the identifiable data, such that the data is no longer personal data and is therefore no longer subject to the Data Protection Act 1998.

Table 2: Pseudonymisation for sensitive data fields
Data field | One-off and repeated events
Patient name | No name data items supplied
Patient address | No address data items supplied
Patient Date of Birth (DOB) | Replace with age band or age in years
Patient postcode | Postcode sector and other derivations*
Patient NHS Number | Pseudonymised with consistent values; different values for different purposes for the same user (for one-off events the data should be pseudonymised)
Patient ethnic category | Only supply if relevant to the study
SUS PbR spell identifier | Data should be pseudonymised
Local Patient identifier | Pseudonymise the data or do not display it
Hospital Spell number | Pseudonymise the data or do not display it
Patient Unique booking reference number | Data should be pseudonymised
Patient Social Service Client identifier | Pseudonymise the data or do not display it
Any other unique identifier | Data should be pseudonymised
Date of Death | Truncate to month and year

*Caution is to be exercised with derivations to avoid identification of individuals. The default output should be at Lower Layer Super Output Area (LSOA) level.

Caution must also be exercised with rare diagnostic codes in pseudonymised data sets, as these may allow identity to be inferred.[3]

[2] Further guidance and advice can be provided by the IG Lead. A copy of the PIA with model answers is contained in Appendix 6.
[3] Further details on pseudonym creation techniques are included in Appendix 3.

3. Data Storage
Identifiable data (e.g. name, NHS number) shall be stored in the IDHS safe haven environment or equivalent.[4] The pseudonymised data and non-identifiable data (payload) should be stored separately from the identifiable data, on different servers.

[4] See ‘SLMS-IG04 Data Handling Guidance for Principal Investigators’.

4. Rules for Applying Pseudonym Creation Techniques Within the SLMS
The rules for utilising pseudonymisation techniques within the SLMS are as follows:
1. Where no lawful justification exists for processing identifying data, non-identifying data must be used; this is the justification for the use of pseudonymisation techniques.[5]
2. Each data field has a different base for its pseudonym (e.g. with encryption, key 1 for name and key 2 for address), so that it is not possible to deduce the values of one field from another if a pseudonym is compromised.
3. Pseudonyms used in place of NHS Numbers shall be of a reasonable length and formatted to ensure readability.
4. In performing pseudonymisation, care should be taken to replicate field lengths (e.g. pseudonyms for NHS numbers will be 10 characters but will not consist purely of digits, to avoid confusion with genuine NHS numbers).
5. Pseudonyms generated from a hash shall be seeded.
6. Pseudonymisation must not be performed ‘on the fly’; it should be carried out prior to any user access in order to prevent errors and lessen the risk of identifiable data being displayed.
7. Pseudonyms for external use must be generated using a different hash seed, so that their values differ from the internal pseudonyms. This ensures that internal pseudonyms are not compromised.
8. To preserve confidentiality, data provided to external organisations must apply a distinct pseudonymisation to each data set generated for a specific purpose, so that data cannot be linked across data sets. A consistent pseudonym may be required for a specific purpose where separate data sets are provided over a period of time and the recipient needs to link them to create records that can be reviewed several times over that period.
9. The concept of the minimum necessary data set also applies to pseudonymised data.
10. Re-identification requests, and access to data in the clear, must be fully logged and an approval trail maintained. The procedure set out in 10.1 must be followed; it conforms to the requirements of ISO/TS 25237:2008.[6]
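As an illustration only, Rules 2, 4, 5, 7 and 8 can be combined in a short Python sketch. It assumes HMAC-SHA256 as the ‘seeded’ hash (the rules do not prescribe an algorithm) and renders the result in Base 36, the presentation format Appendix 3 attributes to the NHS SUS. The function name make_pseudonym, the field and purpose labels and the seed values are hypothetical; in practice the seeds would be held securely within the safe haven, and values should be consistently formatted before they are hashed.

```python
import hashlib
import hmac

BASE36 = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"
LETTERS = BASE36[10:]  # "A".."Z"


def make_pseudonym(value: str, field: str, purpose: str, seed: bytes,
                   length: int = 10) -> str:
    """Derive a repeatable, non-numeric pseudonym for one field value.

    Hypothetical helper: HMAC-SHA256 stands in for the seeded hash of
    Rule 5; the SLMS rules do not mandate a particular algorithm.
    """
    # Rules 2 and 8: derive a separate key per field and per purpose, so
    # pseudonyms for different fields or data sets are unrelated.
    key = hmac.new(seed, f"{field}|{purpose}".encode("utf-8"),
                   hashlib.sha256).digest()
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).digest()
    n = int.from_bytes(digest, "big")

    # Rule 4: the first character is always a letter, so the pseudonym keeps
    # the 10-character length of an NHS number but cannot be mistaken for
    # a genuine one. The remaining characters are Base 36 digits.
    n, r = divmod(n, len(LETTERS))
    chars = [LETTERS[r]]
    for _ in range(length - 1):
        n, r = divmod(n, len(BASE36))
        chars.append(BASE36[r])
    return "".join(chars)


if __name__ == "__main__":
    internal_seed = b"hypothetical-internal-seed"
    external_seed = b"hypothetical-external-seed"  # Rule 7: separate seed
    print(make_pseudonym("9999999999", "nhs_number", "internal", internal_seed))
    print(make_pseudonym("9999999999", "nhs_number", "release-A", external_seed))
```

Deriving a per-field, per-purpose key means that a compromised pseudonym for one field or data set reveals nothing about another, while a separate seed for external releases keeps internal pseudonyms unexposed.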
[5] Section 251 of the NHS Act 2006 can permit, with authorisation from the Health Research Authority (HRA), the use of identifying data for health research.
[6] For further information see ‘SLMS-IG14 Health Informatics - Pseudonymisation Overview’.

10.1 Re-identification as Part of an Exceptional Event
The following shall apply to this re-identification procedure:
• All exceptional event requests for re-identification shall be logged and must include as a minimum the following information:
o Date/time of the request;
o Name of staff requesting;
o Name of authorising member of staff;
o Justification of the re-identification;
o Confirmation from the requestor that the re-identified data will be used for the research purpose, and details of any disclosure and the party involved;
o Date and time of the re-identification;
o Security arrangements for the re-identified data; and
o Retention and destruction (date and time) arrangements for the re-identified data.
• The re-identification process shall be carried out in a secure manner by the PI, or a trusted member of staff, and all necessary precautions must be taken to limit access to information that connects identifiers and pseudonyms.
• All due care shall be taken to ensure the integrity and completeness of the re-identified data, especially if such data are to be used for diagnosis or treatment.
• The re-identified data must include details of its origin and a caveat that the data may be incomplete, as it was derived from a clinical research database or data warehouse.
• The re-identified data will remain the responsibility of the PI, who will continue to act in the capacity of data controller under the Data Protection Act 1998. The exception will be in circumstances where re-identified data has been disclosed to a third party with appropriate legal authority.
• The PI should seek legal advice from UCL’s Data Protection Officer (DPO) prior to any disclosure.
If the disclosure is authorised, the DPO will provide suitable legal advice to limit the use of the re-identified data to an authorised purpose and to ensure that the third party assumes all the responsibilities of a data controller as specified in the Data Protection Act 1998.
• Pseudonymised data should be afforded the same security and access controls as required for sensitive personal data.
• Compliance with Care Record Guarantee pledges shall be required.
• IDHS or an equivalent system will provide, as a minimum, an audit log of:
o User ID and identification of the dataset;
o Date and time of access; and
o Query or access process undertaken.
• Pseudonymisation of personal data requires the highest standards of security and confidentiality in everyday operational use, system design and implementation. PIs must be mindful of the research exemption in Section 33 of the Data Protection Act 1998, which can only be applied to personal data that is used solely for research purposes and not to influence decision making in respect of individuals. The exemption is therefore not applicable to pseudonymised or effectively anonymised data, as there is no impact upon an individual.

5. Data Transfer and Access
To conform with Safe Haven requirements,[7] all data access and transfers must be conducted in a secure manner following the relevant Standard Operating Procedures.[8] All transfers and access should be subject to contractual and data sharing obligations.
When transferring and granting access to data, the PI must undertake a Privacy Impact Assessment (PIA) to ensure that disclosure risks are suitably assessed.[9]

[7] Described in Appendix 5.
[8] Detailed Standard Operating Procedures for the IDHS can be found on the intranet page.
[9] See Appendix 6 for the Privacy Impact Assessment (PIA) procedure.

Appendix 1: Pseudonymisation, Anonymisation and UK law
The following points should be considered when using identifying data that may need to be converted into non-identifying data:
• Data can be identifying or non-identifying depending on the context in which that data is used.
• Data published externally will be subject to attack from its audience. The greater the potential value of identifying personal data, the greater the risk of confidentiality being compromised.
• Disclosure of data into a controlled domain is more manageable and carries a reduced risk compared with external publication.
• Data released into the public domain should contain little or no identifying data to ensure that nothing of a confidential nature is revealed.
• Assessments must be undertaken to identify the likelihood of additional information being available to potential attackers to reveal identity.
• Reasonable efforts must be made to make a fair risk assessment.
• The records of deceased persons should be treated exactly the same as those of living individuals for pseudonymisation and anonymisation purposes.

Appendix 2: De-identification techniques
De-identification of identifiable data can be accomplished by using a variety of the following techniques:
o Not displaying sensitive data items;
o Use of pseudonyms on a one-off basis;
o Use of pseudonyms on a consistent basis;
o Use of derivations as indicated in Table 2 above for DOB and postcode; and
o Use of data in a non-sensitive disclosure context, e.g. use of NHS number with a non-NHS recipient.
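The derivations in Table 2 and the postcode formatting advice in Appendix 3 are simple enough to sketch in Python. These helpers are hypothetical, and the 5-year age band width is an assumption rather than an SLMS requirement; mapping postcodes to Super Output Areas would need an external lookup and is not shown.

```python
from datetime import date


def normalise_postcode(raw: str) -> str:
    """Remove every blank and upper-case, e.g. 'wc1e  6bt' -> 'WC1E6BT'."""
    return "".join(raw.split()).upper()


def postcode_sector(raw: str) -> str:
    """Outward code plus the first character of the inward code."""
    pc = normalise_postcode(raw)
    # UK inward codes are always three characters (digit + two letters).
    return f"{pc[:-3]} {pc[-3]}"


def age_in_years(dob: date, on: date) -> int:
    # Subtract one if the birthday has not yet been reached in that year.
    had_birthday = (on.month, on.day) >= (dob.month, dob.day)
    return on.year - dob.year - (0 if had_birthday else 1)


def age_band(dob: date, on: date, width: int = 5) -> str:
    """Replace a DOB with an age band; the 5-year width is an assumption."""
    lower = age_in_years(dob, on) // width * width
    return f"{lower}-{lower + width - 1}"


def truncate_to_month(d: date) -> str:
    """Date of death truncated to month and year, e.g. '08/2013'."""
    return d.strftime("%m/%Y")


if __name__ == "__main__":
    print(postcode_sector("WC1E 6BT"))                       # WC1E 6
    print(age_band(date(1980, 8, 3), date(2013, 8, 2)))      # 30-34
    print(truncate_to_month(date(2013, 8, 2)))               # 08/2013
```

Normalising the postcode first means the ‘two separate fields’ case works too: normalise_postcode("WC1E" + "6BT") gives the same WC1E6BT as the other formats.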
Appendix 3: Pseudonym Creation Techniques
Pseudonyms in general have the following characteristics:
• Reversibility: pseudonyms are either reversible or irreversible.
• Replicability: data items may have a consistent and repeatable pseudonym assigned across various studies and also when they appear in different records.
When creating a pseudonym, consideration must be given to its format (see Rules 3 and 4 in Section 4) and whether this will be appropriate for its intended use. Detailed below are methods that should be adopted when using a surrogate field as a pseudonym. A surrogate can be, for example, a replacement for the NHS number.

The creation of different types of pseudonyms:
Irreversible pseudonyms are created through the application of a cryptographic hash function. These are one-way functions that take a string of any length as input and produce a fixed-length hash value (or digest). Hash functions are available in both SQL Server (from SQL Server 2005) and Oracle (obfuscation toolkit).

Reversible pseudonyms: the creation of a reversible pseudonym generally involves the maintenance of a secure lookup table which holds the source data and which is linked to less sensitive data elements by a surrogate key. The key is randomised in some way to ensure that there is no relationship between its value and the clear text. The lookup table can either be created to include all potential values, when the range of potential values is bounded (as is the case for date of birth, for example), or updated when new values are found in the data. Access to the lookup table must be limited to authorised users. The source text in the secure table may be encrypted to provide a further layer of security if required.
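A minimal sketch of the lookup-table approach, assuming an in-memory Python class (LookupPseudonymiser, hypothetical) in place of the secured database table a real safe haven would use. Surrogate keys come from the standard secrets module, so there is no relationship between a key and its clear text; the authorised flag is a placeholder for a real access control check.

```python
import secrets
from typing import Iterable


class LookupPseudonymiser:
    """Reversible pseudonyms held in a lookup table (illustrative sketch)."""

    def __init__(self) -> None:
        self._by_clear: dict[str, str] = {}
        self._by_pseudonym: dict[str, str] = {}

    def pseudonymise(self, clear: str) -> str:
        # Consistent: the same clear value always maps to the same key.
        if clear in self._by_clear:
            return self._by_clear[clear]
        # Random surrogate key with no relationship to the clear text;
        # draw again until the key is unused so every entry is unique.
        key = secrets.token_hex(8)
        while key in self._by_pseudonym:
            key = secrets.token_hex(8)
        self._by_clear[clear] = key
        self._by_pseudonym[key] = clear
        return key

    def prepopulate(self, values: Iterable[str]) -> None:
        # For a bounded range (e.g. dates of birth) the whole table can be
        # built up front instead of growing as new values appear.
        for value in values:
            self.pseudonymise(value)

    def reidentify(self, key: str, authorised: bool) -> str:
        # Access to the table must be limited to authorised users; the flag
        # is a hypothetical stand-in for a real access control check.
        if not authorised:
            raise PermissionError("re-identification not authorised")
        return self._by_pseudonym[key]
```

Because the keys are random rather than derived from the data, pre-populating the table in date order does not leak that order into the pseudonyms.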
Reversible pseudonyms (alternative): in principle, an alternative approach to creating a reversible pseudonym is to apply encryption, the pseudonym being the cipher text, while access to the clear text is by decryption. However, the encryption functions available in commercial systems such as SQL Server and Oracle may be inappropriate as a mechanism to create reversible pseudonyms, because they are implemented so that a given plain text generates a different encryption each time, which prevents consistent pseudonyms.

Formatting issues can be handled to some degree through data transformation; for example, the NHS SUS reduces the length of a pseudonym on presentation by expressing it in Base 36 (a positional numeral system using the Arabic digits 0-9 and the Latin letters A-Z).

Random Generated Values
This approach uses a set of randomly generated values to operate against the surrogate key. The mechanisms available for creating suitable surrogate fields and keys include:
• sequential (random element introduced by the sequence in which data items, e.g. NHS Numbers, arise)
• random sampling without replacement
• cryptographic hash function, such as SQL Server’s use of MD5 or SHA1 and Oracle’s DBMS_CRYPTO package
• specific system functions for surrogate creation, such as SQL Server identity columns and Oracle SEQUENCE objects
• adding or subtracting a consistent random number generated from the above
Any or all of the above may be combined to provide an effective solution in accordance with the Pseudonymisation Rules set out in this document.

A more direct concern arises when a hash is used to pseudonymise a bounded set of numbers where the set is relatively small.
• For example, there are only just over 40,000 days between 1900 and 2010; if it is known that these have been pseudonymised by the simple application of a hash function, then creating a look-up table that breaks the pseudonymisation is a simple matter.
• One approach, which has been successfully trialled by one NHS Trust, involves building a table of 75,000 entries, creating a key by sampling without replacement in the range 0 to 40472, and assigning successive dates from 1880 to each new entry as it occurs. The key was then hashed to provide a pseudonym of consistent form with other pseudonyms applied to the set.
• More generally, hashed values should always be ‘seeded’ with a local constant value. This localises the pseudonymisation derived from the hash to hashes that use the same seed and algorithm, and provides another layer of security.
It is vital that data is consistently formatted before pseudonymisation to maintain the ability of pseudonyms to link data.
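The bounded-set problem can be demonstrated in Python. The first function shows how easily a plain, unseeded SHA-256 hash of a date is reversed by trying every day in the range; the second follows the spirit of the NHS Trust approach described above by giving each date a key drawn by sampling without replacement and then hashing that key, not the date, with a local seed. Both function names are hypothetical, and the Trust’s exact table size and date range are not reproduced.

```python
import hashlib
import hmac
import random
from datetime import date, timedelta
from typing import Optional


def break_unseeded_date_hash(digest: str, start: date,
                             end: date) -> Optional[date]:
    """Show why a plain hash of a date is weak: try every day in range."""
    day = start
    while day <= end:
        if hashlib.sha256(day.isoformat().encode()).hexdigest() == digest:
            return day
        day += timedelta(days=1)
    return None


def build_date_pseudonyms(start: date, end: date, seed: bytes,
                          rng: random.Random) -> dict[date, str]:
    """Give each date a random key (sampling without replacement), then
    hash the key, not the date, with a local seed."""
    n_days = (end - start).days + 1
    keys = rng.sample(range(n_days), n_days)  # a random permutation
    table = {}
    for offset, key in enumerate(keys):
        day = start + timedelta(days=offset)
        table[day] = hmac.new(seed, str(key).encode(),
                              hashlib.sha256).hexdigest()
    return table
```

In production the rng argument should be random.SystemRandom(), because the default generator in Python’s random module is not suitable for security purposes; a seeded random.Random is useful only for reproducible tests.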
Consistent formatting is particularly important for postcodes, where data may have been provided in several different formats (_ denotes a blank):
Formatting | Outcome
A single blank separating the outbound and inbound postcode | WC1E_6BT
As two separate fields | outbound postcode WC1E; inbound postcode 6BT
With no blank | WC1E6BT
With some other number of intervening blanks | WC1E__6BT
The simple solution is to remove any blanks for consistency, giving WC1E6BT.

means of de-identification Techniques used in creating pseudonyms Rules for applying pseudonymisation techniques Log of identifying access to identifiable data Implementing de-identification in systems and organisations Use of data must be effectively anonymised –Organisations should proactively seek ways of minimising the use of personal data –Presence of rare diagnostic codes in pseudonymised data sets and inference of identity –Effectively anonymised data is not personal data –combinations of techniques applied to the data and controls on the recipient will provide effectively anonymised data Technical processes to de-identify data e.g. aggregating data, pseudonymisation; banding; stripping out removing person identifiers. –Reversible and Irreversible techniques. scope of paper limited to new safe haven and business process aspects of the implementation to support secondary uses (research).
Safe havens Identification of sensitive personal data fields Medical research purposes are out of scope DH NHS Guidance

Appendix 4: Applicability of Department of Health NHS Guidance

DH NHS Guidance Implementation Guidance on Local NHS Data Usage and Governance for Secondary Uses Pseudonymisation Implementation Project (PIP) Reference Paper 1 Guidance on Terminology Pseudonymisation Implementation Project (PIP) Reference Paper 3 Guidance on De-identification ISO/TS 25237:2008 Health Informatics Pseudonymisation Section 3.3.5 Table 2 Section 3.2.5 Section 3.2.7 Section 3.2.3 Section 2.3.5 Section 2.3.8 DH NHS Relevant Section Section 1 Table 2, Section 3 and Appendix 3 Section 3 Table 2, Section 3 Section 3 Section 3 Section 3 Relevant Section in this Document or other SLMS Evidence Not applicable Section 1 and Rule 9 SLMS-IG14 Health Informatics - Section 3 and Appendix 3 Appendix 3 Section 5 Rule 10 Section 4, Data Storage Section 6 and Appendix 5 External disclosure and publication Appendix 3 Relevant sections covered in this document as indicated below. Section 1 Section 2.2 and 3.2 Figure 1 Section 2.4 Section 4 All DH The Caldicott Guardian Manual 2010 NHS Care Record Guarantee for England DH NHS 2010/11 Operating Framework Data Protection Act 1998; Human Rights Act 1998 and common law of confidentiality ICO – Anonymisation: managing data protection risk code of practice Pledges in respect of confidentiality and audit trail information for access to personal records can be provided.
Ensure that relevant staff are aware of and trained to use anonymised or pseudonymised data Ensure appropriate changes are made to processes, systems and security mechanisms in order to facilitate the use of de-identified data in place of patient identifiable data UK Legislation Context of disclosure wider audience and restricted audience for research data Disclosed anonymised data is not personal data under the Data Protection Act 1998 DPA Research exemption Privacy Impact Assessments – risk assessment of research disclosures n/a page 7 page 44 Pseudonymisation Overview SLMS consider the guidance is not applicable currently. Matter to be kept under review by IGSG Rule 10 Provision of IG induction Training IDHS Service including Pseudonymisation IDHS Service and associated SOPs; Pseudonymisation Rules; Pseudonymisation Plan SLMS-IG14 Health Informatics – Pseudonymisation Overview Section 1 Rule 12, Appendix 1. Section 3 Rule 12 Rule 12 Section 3

Appendix 5: Safe Haven Requirements
What is a safe haven?
A set of procedures to ensure the confidentiality and security of identifiable data, regardless of its location.
Why is it necessary?
To comply with the following legislation and obligations for healthcare research:
• Data Protection Act 1998
• Common law duty of confidentiality
• NHS Act 2006
• Caldicott recommendations
• NHS IG Toolkit
• ISO27001 – International standard for Information Security Management Systems
Staff Obligations
All SLMS staff are expected to conform with the following general requirements:
Email
• Identifiable data, if sent by email, must be encrypted (use 7-Zip).
• Personal data must not be included in the subject line of an email.
• Email disclaimers should be included in the footer of the electronic communication.
IDHS Safe Haven
Identifiable data should ideally be stored on the SLMS IDHS system, a secure environment that hosts the relevant tools and resources required for analysis.
PIs, as the Data Controller, are responsible for allocating user access rights on an internal and external basis.
• All IDHS data transfers shall be through the Managed File Transfer service.
• Services must be disabled if there is no operational need for them.
• All IDHS servers should be hardened using current industry best practice, e.g. the Windows IIS standard.
• Audit logs should be enabled in respect of access to identifiable data.
• Users should be presented with a WYSIWYG screen after the log-on process to prevent curiosity surfing.
• Functionality to copy identifiable data within systems must be prevented to guard against data leakage and breaches of confidentiality. Data cannot be copied or removed and sent outside of the IDHS safe haven.
• IDHS user IDs shall be subject to a leavers and transferees procedure to delete redundant IDs and ensure that all users’ rights are current.
• Role-based access controls and separation of duties should be used, in line with security best practice.
• Access to identifiable data is through approved SLMS user IDs. Privileges on the system should be granted as and when necessary, and separate user IDs allocated for their use.
• Lock computer screens when not at your desk using a password-enabled screensaver.
Physical location and security
• Take precautions against unauthorised access to premises. Challenge persons who do not have a UCL identity badge. Do not place yourself at risk and, if necessary, contact UCL Security on Ext 222 or telephone 020 7679 2108.
• Utilise a clear desk and screen policy. Any confidential papers must be locked away at the end of the working day.
• Be aware of social engineering techniques, e.g. individuals tailgating in swipe card access locations.
Incoming post
• Should not be left unattended in areas that may be accessed by unauthorised individuals or members of the public.
• Post should be opened and kept in a secure environment.
• Post can only be collected by a UCL member of staff showing their UCL identity badge. The name on the badge should be the same as that on the postal packet.
Outgoing post
• All confidential material should be sent in envelopes marked “Private & Confidential – Open by Addressee Only”.
• Confidential material must be addressed to a person.
• Envelopes should be of robust quality and the contents securely sealed inside before despatch.
Fax machines
• Fax machines that receive identifiable data should be located in a secure environment, such that unauthorised staff or members of the public cannot casually view documentation.
• Sending identifiable data by fax is one of the least secure methods, and you should consider a more secure one.
• If faxes are to be sent to a particular number on a regular basis, you should program the number into the fax machine memory.
• Fax cover sheets with a disclaimer must be used when sending identifiable data.
• Contact the recipient prior to sending the fax so that they collect it immediately, and then confirm with the recipient that the document has been received.

Appendix 6: Privacy Impact Assessment
Privacy Impact Assessment (PIA) Screening Questions
Scope: This document provides guidance for evaluating whether a study runs the risk of breaching the Data Protection Act 1998. The evaluation depends on sufficient information about the research study having been collected. The evaluation process involves answering a set of 11 questions about key characteristics of the study and the system that the study will use or deliver. The answers to the questions need to be considered as a whole, in order to decide whether the overall impact, and the related risk, warrant investment in the study. The 11 questions about key study characteristics are numbered below.
Guidance on the interpretation of each question is provided in plain text, and a ‘model’ answer is given in square brackets. Responses should be inserted into the sections in square brackets. If required, further guidance can be provided by the IG Lead.

Name of Study: [Research Study Name]

Technology
1) Does the study apply new or additional information technologies that have substantial potential for privacy intrusion?
[Model answer: There is no increase in privacy intrusion because appropriate controls and countermeasures are in use. Pseudonymisation and anonymisation techniques have been considered and used to anonymise the data to prevent re-identification. Assessments of potential re-identification from publication/disclosure have been undertaken in accordance with guidance on the ‘motivated intruder’ test (section 3 of Anonymisation: managing data protection risk code of practice, from the Information Commissioner’s Office; a summary is contained in Additional Guidance below). The level of risk associated with publication/disclosure is deemed acceptable.]
Examples may include, but are not limited to: smart cards, radio frequency identification (RFID) tags, biometrics, locator technologies (including mobile phone location, applications of global positioning systems (GPS) and intelligent transportation systems), visual surveillance, digital image and video recording, profiling, data mining, and logging of electronic traffic.

Identity
2) Does the study involve new identifiers, re-use of existing identifiers, or intrusive identification, identity authentication or identity management processes?
[Model answer: A key objective of the study is to maintain the confidentiality and security of healthcare research data subjects. The study has obtained level 2 compliance for the IG Toolkit v11. Any new identifiers used are designed to de-identify the individual. No intrusive identification of individuals has been instigated. Users of the system are required to provide identification.]
Examples of relevant study features include a digital signature initiative, a multi-purpose identifier, interviews and the presentation of identity documents as part of a registration scheme, and an intrusive identifier such as biometrics. All schemes of this nature have considerable potential for privacy impact and give rise to substantial public concern and hence study risk.

3) Does the study have the effect of denying anonymity and pseudonymity, or converting transactions that could previously be conducted anonymously or pseudonymously into identified transactions?
[Model answer: There is no inclination to deny anonymity; indeed the converse applies. The approach to de-identification for this healthcare research study is identified in section 7 below. Transmissions are conducted using https and AES 256-bit encryption (7-Zip) to safeguard confidentiality.]
Some healthcare research functions cannot be effectively performed without access to the patient’s identity. On the other hand, many others do not require identity. An important aspect of privacy protection is sustaining the right to interact with organisations without declaring one’s identity.

Multiple organisations
4) Does the study involve multiple organisations, whether they are government agencies (e.g. in “joined up government” initiatives) or private sector organisations (e.g. as outsourced service providers or as “business partners”)?
[Model answer: Suitable measures compliant with NHS requirements and ISO27001 have been taken to provide effective privacy protection using secure servers to EAL4 requirements and robust access control features, AES 256-bit encryption and https connections. PII is processed using this technology with other selected and IGSoC approved healthcare organisations. The involvement of multiple organisations is therefore not considered to be detrimental to the privacy of individuals.]
Schemes of this nature often involve the breakdown of personal data silos and identity silos, and may raise questions about how to comply with data protection legislation. This breakdown can be desirable in research to take advantage of rich data sources in health and social care to support current government initiatives. However, data silos and identity silos are long-standing, and have in many cases provided effective privacy protection. Particular care is therefore needed in preparing a business case that justifies the privacy invasions of studies involving multiple organisations. Compensatory protection measures should be considered.

Data
5) Does the study involve new or significantly changed handling of personal data that is of particular concern to individuals?
[Model answer: There is no significant change in the manner in which personal data concerning the patient’s health condition is handled, as confidentiality and security are of primary concern for the study. All healthcare research subjects are provided with informed consent in line with the 1st Principle of the Data Protection Act 1998 and UK law.]
The Data Protection Act 1998, at section 2, identifies a number of categories of sensitive personal data that require special care. These include racial and ethnic origin, political opinions, religious beliefs, trade union membership, health conditions, sexual life, offences and court proceedings. There are other categories of personal data that may give rise to concerns, including financial data, particular data about vulnerable individuals, and data which can enable identity theft. Further important examples apply in particular circumstances. The addresses and phone numbers of a small proportion of the population need to be suppressed, at least at particular times in their lives, because such persons at risk may suffer physical harm if they are found.

6) Does the study involve new or significantly changed handling of a considerable amount of personal data about each individual in the database?
[Model answer: Informed consent is provided to the healthcare research subjects and they are aware of the processing and disclosures of their personal data. The processing is in pursuit of healthcare research to which they have knowingly consented.]
Examples include intensive data processing such as welfare administration, healthcare, consumer credit, and consumer marketing based on intensive profiles.

7) Does the study involve new or significantly changed handling of personal data about a large number of individuals?
[Model answer: There is no significant change in the handling of personal data that would involve a privacy risk. The use of de-identification techniques (anonymisation and/or pseudonymisation; indicate the techniques used) is considered to be secure given the advice from the Department of Health and NHS.]
Any data processing of this nature is attractive to organisations and individuals seeking to locate people, or to build or enhance profiles of them.

8) Does the study involve new or significantly changed consolidation, inter-linking, cross referencing or matching of personal data from multiple sources?
[Model answer: The PII is processed in accordance with data quality standards (advise what these are) and data quality and confidentiality audits are performed on a routine basis.]
This is an especially important factor. Issues arise in relation to
Exemptions and exceptions data quality, the potential for duplicated records, mismatched data fields, and the retention of data with potential for damage and harm to individuals. 9) Does the study relate to data processing which is in anyway exempt from legislative privacy protections? Examples include law enforcement and national security information systems and also other schemes where some or all of the privacy protections have been negated by legislative exemptions or exceptions. Section 251 exemption details should be included. 10) Does the study's justification include significant contributions to public security measures? Measures to address concerns about critical infrastructure, wellbeing and the physical safety of the population usually have a substantial impact on privacy. Yet there have been tendencies in recent years not to give privacy its due weight. This has resulted in tensions with privacy interests, and creates the risk of public opposition. 11) Does the study involve systematic disclosure of personal data to, or access by, third parties that are not subject to comparable privacy regulation? Disclosure may arise through various mechanisms such as sale, exchange, unprotected publication in hard-copy or electronically-accessible form, or outsourcing of aspects of the data handling to sub-contractors. Page 19 of 22 Third parties may not be subject to comparable privacy regulation because they are not subject to the provisions of the Data Protection Act or other relevant statutory provisions, such as where they are in a foreign jurisdiction. Concern may also arise in the case of organisations within the UK which are subsidiaries of organisations headquartered outside the UK. SLMS-IG14 Pseudonymisation Rules v1.0 [Model answer: All necessary legislative privacy requirements have been suitably considered and implemented in line with the IG Toolkit version 11, at level 2 compliance.] 
[Model answer: There are no public security measures associated with this study. Alternatively you will need to provide a cogent argument in respect of any public security measures being in the public interest] [Model answer: The study takes its obligations under the Data Protection Act 1998 seriously and is also compliant with IG Toolkit v11 level 2. Any contractors employed by the SLMS sign a contract that requires an appropriate level of confidentiality. There is no systematic disclosure of personal data and the potential for access by third parties not subject to privacy regulation is not considered to be a risk.] Conclusion The 11 key questions in relation to the PIA have been answered objectively. Stakeholders such as the [NHS, GPs, healthcare research subjects and the external partners] could be involved in the PIA, but in view of the clarity of the study documentation and goals this is not considered to be necessary. Page 20 of 22 No major risks have been identified and consequently it is considered that there are no significant privacy risks in relation to this healthcare study. Consequently the intended publication/disclosure of the study material is an acceptable risk. [AN Other] Principal Investigator SLMS-IG14 Pseudonymisation Rules v1.0 Appendix 6: Privacy Impact Assessment Privacy Impact Assessment (PIA) Screening Questions Scope: This document provides guidance for evaluating whether a study runs the risk of breaching the Data Protection Act 1998. The evaluation depends on sufficient information about the research study having been collected. The evaluation process involves answering a set of 11 questions about key characteristics of the study and the system that the study will use or deliver. The answers to the questions need to be considered as a whole, in order to decide whether the overall impact, and the related risk, warrant investment in the study. The 11 questions about key study characteristics are shown below in bold. 
Additional Guidance

Finding facts early
The key characteristics addressed here represent significant risk factors for the study, and their seriousness should not be downplayed. It should also be remembered that the later problems are addressed, the higher the cost of overcoming them.

Things to consider
It is important to appreciate that the various stakeholder groups may have different perspectives on these factors. If the analysis is undertaken solely from the viewpoint of the organisation itself, risks are likely to be overlooked. It is therefore recommended that stakeholder perspectives are also considered as each question is answered.
In relation to the individuals affected by the study, the focus needs to be more precise than simply citizens or residents generally, or the population as a whole. To ensure a full understanding of the segments of the population that have an interest in, or are affected by, the study, the stakeholder analysis undertaken as part of the preparation step may need to be refined. For example, there are often different impacts and implications for people living in remote locations, the educationally disadvantaged, itinerants, people whose first language is not English, and ethnic and religious minorities.

Applying the criteria
Once each of the 11 questions has been answered individually, the set of answers needs to be considered as a whole in order to decide whether the study continues and whether it publishes and/or discloses the resulting study material.

Summary of the ‘motivated intruder’ test
It is sensible to begin this test for re-identification from established fact (information that is publicly available, such as the electoral register) and recorded fact (information available through the study, such as gender, postcode, date of birth or age band).
1) Perform a web search to establish whether a combination of postcode and date of birth can identify individuals from the study.
2) If names or ethnicity codes are used, search the archives of national or local newspapers to see whether there is any association with crime.
3) Check the electoral register, local library resources and other publicly available resources (council offices, register offices and church records) to see whether an individual from the study can be identified.
4) Use social network sites such as Facebook and Twitter to see whether anonymised data can be linked to a user.
You can also consider prior knowledge held by individuals that may have implications for re-identification.
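Before the web search in step 1, it can help to know which study records are unique on the quasi-identifiers an intruder would try to match. The Python sketch below is one way to do this, assuming the study extract is available as a list of records: it counts how many records share each postcode, date of birth and gender combination and flags any combination shared by fewer than k records (a k-anonymity style check). The record layout, the fictitious data, the threshold k and the generalisation rules (outward postcode, birth decade) are hypothetical choices for illustration, not SLMS rules, and the check supplements rather than replaces the searches listed above.

```python
from collections import Counter
from datetime import date

# Hypothetical study extract holding only the "recorded facts" a motivated
# intruder might try to match against public sources. Postcodes are fictitious.
RECORDS = [
    {"study_id": "S001", "postcode": "AA1 1AA", "dob": date(1961, 3, 14), "gender": "F"},
    {"study_id": "S002", "postcode": "AA1 1AA", "dob": date(1961, 3, 14), "gender": "F"},
    {"study_id": "S003", "postcode": "BB2 2BB", "dob": date(1975, 7, 2), "gender": "M"},
    {"study_id": "S004", "postcode": "BB2 3CC", "dob": date(1978, 11, 30), "gender": "M"},
]


def quasi_key(record, generalise=False):
    """Return the combination of quasi-identifiers an intruder could match on.

    With generalise=True the postcode is reduced to its outward code (the part
    before the space) and the date of birth to its decade, one common way of
    lowering uniqueness (illustrative only).
    """
    if not generalise:
        return (record["postcode"], record["dob"], record["gender"])
    outward_code = record["postcode"].split()[0]
    birth_decade = record["dob"].year // 10 * 10
    return (outward_code, birth_decade, record["gender"])


def at_risk(records, k, generalise=False):
    """List study IDs whose quasi-identifier combination is shared by fewer than k records."""
    counts = Counter(quasi_key(r, generalise) for r in records)
    return [r["study_id"] for r in records if counts[quasi_key(r, generalise)] < k]


if __name__ == "__main__":
    print(at_risk(RECORDS, k=2))                   # -> ['S003', 'S004']
    print(at_risk(RECORDS, k=2, generalise=True))  # -> []
```

Records flagged with exact values but not after generalisation suggest that releasing only outward codes and birth decades (or age bands) would reduce the risk of singling people out; the remaining steps of the test still need to be carried out.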
What is the likelihood that an individual with such prior knowledge would have access to the disclosed or published information? Bear in mind that, for much of the data, those with prior knowledge would be healthcare professionals, who are bound by codes of confidentiality and requirements for ethical conduct. When prior knowledge is considered as a potential aid to re-identification, the scenario must be assessed as plausible and reasonable.

Appendix 7: Glossary

Anonymisation: Process that renders data into a format that does not identify the individual and where identification is unlikely to take place.
Common law duty of confidentiality: Follow this link
Data Protection Act 1998: Follow this link
Effective anonymisation: No reasonable chance that identity can be inferred from the data in the context in which it is being used. Without Section 251 approval this should be the de facto standard for research data.
Human Rights Act 1998: Follow this link
Identifying data: The same meaning as personal data, but extended to apply to dead, as well as living, people.
IDHS safe haven environment: The Identifiable Data Handling Service, which provides a secure infrastructure for the storage of identifiable data.
ISO/TS 25237:2008: International technical specification for pseudonymisation of personal health information.
Lower Layer Super Output Area (LSOA) level: An Office for National Statistics (ONS) small-area geography designed to improve the reporting of small area statistics and to provide consistency in population size. The typical mean population is 1,500 and the minimum is 1,000.
Non-identifying data: Data that are not “identifying data” (see definition above). Non-identifying data are always also non-personal data.
Non-personal data: Data that are not “personal data”. Non-personal data may still be identifying in relation to the deceased (see the definitions of “identifying data” and “personal data”).
Personal data: Data which relate to a living individual who can be identified – (a) from those data, or (b) from those data and other information which is in the possession of, or is likely to come into the possession of, the data controller, and includes any expression of opinion about the individual and any indication of the intentions of the data controller or any other person in respect of the individual (Source: Data Protection Act 1998).
Pseudonym: A personal identifier that is different from the normally used personal identifier.
Pseudonymisation: The process of distinguishing individuals in a dataset by using a unique identifier that does not reveal their true identity. NOTE: Pseudonymisation can be either reversible or irreversible. If the data is pseudonymised with the intention of reversing the pseudonymisation, it remains personal data under the Data Protection Act.
Re-identification: The process of discovering the identity of individuals from a data set by using additional relevant information.
Re-identified data: Data that has been effectively re-identified, thereby rendering it identifying data.
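The glossary's note that pseudonymisation can be either reversible or irreversible can be illustrated with a minimal Python sketch. It is not the SLMS or IDHS implementation; the key handling, the 16-character pseudonym length, the example identifier and all function and class names are hypothetical. The first approach derives a pseudonym with a keyed hash (HMAC-SHA256): the key holder can still link a known identifier to its pseudonym, but without the key a pseudonym cannot be recomputed or inverted, and destroying the key makes the mapping effectively irreversible. The second approach issues random pseudonyms and keeps a lookup table, which is reversible by design.

```python
import hashlib
import hmac
import secrets


def keyed_pseudonym(identifier: str, key: bytes) -> str:
    """Derive a stable pseudonym from an identifier using HMAC-SHA256.

    The same identifier and key always give the same pseudonym, so records can
    still be linked across extracts. The digest is truncated to 16 hex
    characters (64 bits), which is an illustrative choice.
    """
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


class ReversiblePseudonymiser:
    """Random pseudonyms plus a lookup table retained inside the safe haven.

    Because the table allows the pseudonymisation to be reversed, this is the
    reversible case described in the glossary.
    """

    def __init__(self) -> None:
        self._to_pseudonym: dict[str, str] = {}
        self._to_identifier: dict[str, str] = {}

    def pseudonymise(self, identifier: str) -> str:
        if identifier not in self._to_pseudonym:
            pseudonym = secrets.token_hex(8)  # 16 random hex characters
            while pseudonym in self._to_identifier:  # regenerate on the (unlikely) collision
                pseudonym = secrets.token_hex(8)
            self._to_pseudonym[identifier] = pseudonym
            self._to_identifier[pseudonym] = identifier
        return self._to_pseudonym[identifier]

    def reidentify(self, pseudonym: str) -> str:
        return self._to_identifier[pseudonym]


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # hypothetical key, held only within the safe haven
    nhs_number = "1234567890"      # hypothetical identifier
    print(keyed_pseudonym(nhs_number, key) == keyed_pseudonym(nhs_number, key))  # True
    table = ReversiblePseudonymiser()
    pseudonym = table.pseudonymise(nhs_number)
    print(table.reidentify(pseudonym) == nhs_number)  # True
```

The choice between the two depends on whether the study needs to re-identify subjects, for example as part of an exceptional event; if it does not, the keyed-hash approach with the key destroyed after processing avoids keeping a re-identification table at all.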