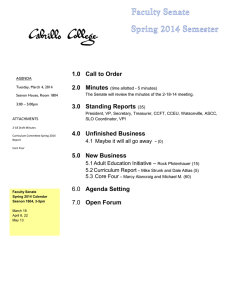


TEXT MINING

STATISTICAL MODELING OF TEXTUAL DATA

LECTURE 2

Mattias Villani

Division of Statistics

Dept. of Computer and Information Science

Linköping University

MATTIAS VILLANI (STATISTICS, LIU)

TEXT MINING

1 / 19

OVERVIEW

- Text classification
- Regularization
- R's tm package (demo) [TMPackageDemo.R]


SUPERVISED CLASSIFICATION

- Predict the class label $s \in S$ using a set of features.
- Feature = Explanatory variable = Predictor = Covariate
- Binary classification: $s \in \{0, 1\}$
  - Movie reviews: S = {pos, neg}
  - E-mail spam: S = {Spam, Ham}
  - Bankruptcy: S = {Not bankrupt, Bankrupt}
- Multi-class classification: $s \in \{1, 2, \ldots, K\}$
  - Topic categorization of web pages: S = {'News', 'Sports', 'Entertainment'}
  - POS-tagging: S = {VB, JJ, NN, ..., DT}

SUPERVISED CLASSIFICATION, CONT.

- Example data:
  - Larry Wall, born in British Columbia, Canada, is the original creator of the programming language Perl. Born in 1956, Larry went to ...
  - Bjarne Stroustrup is a 62-years old computer scientist ...

| Person | Income | Age | Single | Payment remarks | Bankrupt |
|--------|--------|-----|--------|-----------------|----------|
| Larry  | 10     | 58  | Yes    | Yes             | Yes      |
| Bjarne | 15     | 62  | No     | Yes             | No       |
| ⋮      | ⋮      | ⋮   | ⋮      | ⋮               | ⋮        |
| Guido  | 27     | 56  | No     | No              | No       |

- Classification: construct prediction machine

  Features → Class label

- More generally:

  Features → Pr(Class label | Features)


FEATURES FROM TEXT DOCUMENTS

- Any quantity computed from a document can be used as a feature:
  - Presence/absence of individual words
  - Number of times an individual word is used
  - Presence/absence of pairs of words
  - Presence/absence of individual bigrams
  - Lexical diversity
  - Word counts
  - Number of web links from document, possibly weighted by PageRank.
  - etc.

| Document | has('ball') | has('EU') | has('political_arena') | wordlen | Lex. Div. | Topic  |
|----------|-------------|-----------|------------------------|---------|-----------|--------|
| Article1 | Yes         | No        | No                     | 4.1     | 5.4       | Sports |
| Article2 | No          | No        | No                     | 6.5     | 13.4      | Sports |
| ⋮        | ⋮           | ⋮         | ⋮                      | ⋮       | ⋮         | ⋮      |
| ArticleN | No          | No        | Yes                    | 7.4     | 11.1      | News   |

- Constructing clever discriminating features is the name of the game!
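A minimal sketch of how features like those in the table might be computed from raw text. The tokenizer, the function names and the definition of lexical diversity (tokens per distinct word) are assumptions made for illustration; the slides do not specify them.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into word tokens (a deliberately crude tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())

def has_word(tokens, word):
    """Presence/absence of an individual word."""
    return word.lower() in tokens

def has_bigram(tokens, first, second):
    """Presence/absence of a bigram, i.e. two words directly after each other."""
    return (first.lower(), second.lower()) in zip(tokens, tokens[1:])

def mean_word_length(tokens):
    """Average number of characters per token."""
    return sum(len(t) for t in tokens) / len(tokens) if tokens else 0.0

def lexical_diversity(tokens):
    """Tokens per distinct word (assumed definition, not taken from the slides)."""
    return len(tokens) / len(set(tokens)) if tokens else 0.0

def extract_features(text):
    """Map one document to a feature dictionary (one row of the feature table)."""
    tokens = tokenize(text)
    return {
        "has('ball')": has_word(tokens, "ball"),
        "has('EU')": has_word(tokens, "EU"),
        "has('political_arena')": has_bigram(tokens, "political", "arena"),
        "wordlen": mean_word_length(tokens),
        "lexdiv": lexical_diversity(tokens),
    }

if __name__ == "__main__":
    print(extract_features("The ball hit the post and the ball went out."))
```

Each document becomes one row of covariates; the class label (e.g. Topic) is attached separately when building the training set.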

SUPERVISED LEARNING FOR CLASSIFICATION


THE BAYESIAN CLASSIFIER

- Bayesian classification

  $$\operatorname*{argmax}_{s \in S} \; p(s \mid \mathbf{x})$$

  where $\mathbf{x} = (x_1, \ldots, x_n)$ is a feature vector.
- By Bayes' theorem

  $$p(s \mid \mathbf{x}) = \frac{p(\mathbf{x} \mid s)\, p(s)}{p(\mathbf{x})} \propto p(\mathbf{x} \mid s)\, p(s)$$

- Bayesian classification

  $$\operatorname*{argmax}_{s \in S} \; p(\mathbf{x} \mid s)\, p(s)$$

- $p(s)$ can be easily estimated from training data by relative frequencies.
- Even with binary features [has(word)] the outcome space of $p(\mathbf{x} \mid s)$ is huge (= data are sparse).


NAIVE BAYES

- Naive Bayes (NB): features are assumed independent (conditionally on the class)

  $$p(\mathbf{x} \mid s) = \prod_{j=1}^{n} p(x_j \mid s)$$

- Naive Bayes solution

  $$\operatorname*{argmax}_{s \in S} \left[ \prod_{j=1}^{n} p(x_j \mid s) \right] p(s)$$

- With binary features, $p(x_j \mid s)$ can be easily estimated by

  $$\hat{p}(x_j \mid s) = \frac{C(x_j, s)}{C(s)}$$

- Example: $s$ = news, $x_j$ = has('ball')

  $$\hat{p}(\text{has(ball)} \mid \text{news}) = \frac{\text{Number of news articles containing the word 'ball'}}{\text{Number of news articles}}$$
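The count-based estimator and the argmax rule above can be sketched as follows. This is an illustration under stated assumptions, not the lecture's implementation: the function names and toy data are hypothetical, and the `alpha` smoothing term is an addition to avoid taking the log of zero (setting `alpha=0` gives the plain relative frequency $C(x_j, s)/C(s)$).

```python
import math
from collections import Counter, defaultdict

def train_nb(X, y, alpha=1.0):
    """Estimate p(s) and p(x_j = True | s) from binary feature dicts X and labels y.

    alpha is an add-alpha smoothing constant (an assumption, not from the slides).
    """
    class_counts = Counter(y)                      # C(s)
    feature_names = sorted(X[0])
    true_counts = defaultdict(Counter)             # true_counts[s][j] = C(x_j = True, s)
    for features, s in zip(X, y):
        for j in feature_names:
            if features[j]:
                true_counts[s][j] += 1
    prior = {s: c / len(y) for s, c in class_counts.items()}
    cond = {
        s: {j: (true_counts[s][j] + alpha) / (class_counts[s] + 2 * alpha)
            for j in feature_names}
        for s in class_counts
    }
    return prior, cond

def predict_nb(prior, cond, features):
    """Return the class maximizing log p(s) + sum_j log p(x_j | s), plus all scores."""
    scores = {}
    for s in prior:
        log_score = math.log(prior[s])
        for j, p_true in cond[s].items():
            log_score += math.log(p_true if features[j] else 1.0 - p_true)
        scores[s] = log_score
    return max(scores, key=scores.get), scores

if __name__ == "__main__":
    # Hypothetical toy training set.
    X = [{"ball": True, "EU": False}, {"ball": True, "EU": False},
         {"ball": False, "EU": True}, {"ball": False, "EU": True}]
    y = ["sports", "sports", "news", "news"]
    prior, cond = train_nb(X, y)
    print(predict_nb(prior, cond, {"ball": True, "EU": False})[0])
```

Working with log-probabilities avoids numerical underflow when the product runs over thousands of word features.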

NAIVE BAYES, CONT.

- Continuous features (e.g. lexical diversity) can be handled by:
  - Replacing the continuous feature with several binary features ($1 \le \text{lexDiv} < 2$, $2 \le \text{lexDiv} \le 10$ and $\text{lexDiv} > 10$)
  - Estimating $p(x_j \mid s)$ by a density estimator (e.g. kernel estimator)
- Finding the most discriminatory features. Sort from largest to smallest

  $$\frac{p(x_j \mid s = \text{pos})}{p(x_j \mid s = \text{neg})} \quad \text{for } j = 1, \ldots, n.$$

- Problem with NB: features are seldom independent ⇒ double-counting the evidence of individual features.
  - Extreme example: what happens with naive Bayes if you duplicate a feature?
- Advantages of NB: simple and fast, yet often surprisingly accurate classifications.
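One way to answer the duplicate-feature question numerically: under naive Bayes the posterior odds are the prior odds times the product of per-feature likelihood ratios, so a duplicated feature contributes its ratio twice. The function name and the numbers below are hypothetical and chosen only for illustration.

```python
def nb_posterior_pos(prior_pos, likelihood_ratios):
    """Pr(pos | x) under naive Bayes.

    likelihood_ratios holds p(x_j | pos) / p(x_j | neg) for each observed feature.
    """
    odds = prior_pos / (1.0 - prior_pos)   # prior odds
    for ratio in likelihood_ratios:
        odds *= ratio                      # each feature multiplies the odds
    return odds / (1.0 + odds)

if __name__ == "__main__":
    once = nb_posterior_pos(0.5, [3.0])        # feature counted once
    twice = nb_posterior_pos(0.5, [3.0, 3.0])  # same feature duplicated
    print(once, twice)
```

With a likelihood ratio of 3 and equal priors, the posterior moves from 0.75 to 0.9 although the duplicate carries no new information.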


MULTINOMIAL REGRESSION

- Logistic regression (Maximum Entropy/MaxEnt):

  $$p(s = 1 \mid \mathbf{x}) = \frac{\exp(\mathbf{x}'\beta)}{1 + \exp(\mathbf{x}'\beta)}$$

- Classification rule: Choose $s = 0$ if $p(s = 1 \mid \mathbf{x}) < 0.5$, otherwise choose $s = 1$.
  - ... at least when the consequences of different choices of $s$ are the same. Loss/Utility function.
- Multinomial regression for multi-class data with $K$ classes

  $$p(s = s_j \mid \mathbf{x}) = \frac{\exp(\mathbf{x}'\beta_j)}{\sum_{k=1}^{K} \exp(\mathbf{x}'\beta_k)}$$

- Classification

  $$\operatorname*{argmax}_{s \in \{s_1, \ldots, s_K\}} \; p(s \mid \mathbf{x})$$

- Classification with text data is like any multi-class regression problem ... but with hundreds or thousands of covariates! Wide data.
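The multinomial probabilities and the argmax rule can be sketched as below. The coefficient values and function names are hypothetical; subtracting the maximum score before exponentiating is a standard numerical safeguard that leaves the probabilities unchanged.

```python
import math

def class_probabilities(x, betas):
    """p(s = s_j | x) = exp(x'beta_j) / sum_k exp(x'beta_k).

    x: list of covariate values; betas: dict mapping class -> coefficient list.
    """
    scores = {s: sum(xi * bi for xi, bi in zip(x, b)) for s, b in betas.items()}
    top = max(scores.values())  # subtracting the max avoids overflow in exp
    exps = {s: math.exp(v - top) for s, v in scores.items()}
    total = sum(exps.values())
    return {s: e / total for s, e in exps.items()}

def classify(x, betas):
    """Choose the class with the largest predicted probability."""
    probs = class_probabilities(x, betas)
    return max(probs, key=probs.get)

if __name__ == "__main__":
    # x = (intercept, has('ball'), has('EU')); coefficients are made up.
    betas = {
        "News": [0.0, 0.0, 0.0],
        "Sports": [0.0, 2.0, -1.0],
        "Entertainment": [0.0, 0.5, 0.0],
    }
    x = [1.0, 1.0, 0.0]
    print(class_probabilities(x, betas), classify(x, betas))
```

With two classes and one coefficient vector fixed at zero, the formula reduces to the logistic regression probability above.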


REGULARIZATION - VARIABLE SELECTION

- Select a subset of the covariates.
- Old school: Forward and backward selection.
- New school: Bayesian variable selection.
  - For each $\beta_i$ introduce a binary indicator $I_i$ such that
    - $I_i = 1$ if the covariate is in the model, that is $\beta_i \neq 0$
    - $I_i = 0$ if the covariate is not in the model, that is $\beta_i = 0$
  - Use Markov Chain Monte Carlo (MCMC) simulation to approximate $\Pr(I_i = 1 \mid \text{Data})$ for each $i$.
- Example S = {News, Sports}. Pr(News | x).

|                                 | has('ball') | has('EU') | has('political_arena') | wordlen | Lex. Div. |
|---------------------------------|-------------|-----------|------------------------|---------|-----------|
| $\Pr(I_i = 1 \mid \text{Data})$ | 0.2         | 0.90      | 0.99                   | 0.01    | 0.85      |


REGULARIZATION - SHRINKAGE

- Keep all covariates, but shrink their $\beta$-coefficients toward zero.
- Penalized likelihood

  $$L_{Ridge}(\beta) = \text{LogLik}(\beta) - \lambda \beta'\beta$$

  where $\lambda$ is the penalty parameter.
- Maximize $L_{Ridge}(\beta)$ with respect to $\beta$. Trade-off of fit ($\text{LogLik}(\beta)$) against complexity penalty $\beta'\beta$.
- Ridge regression if regression is linear.
- The penalty can be motivated as a Bayesian prior $\beta_i \overset{iid}{\sim} N(0, \lambda^{-1})$.
- $\lambda$ can be estimated by cross-validation or Bayesian methods.
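A minimal sketch of maximizing the ridge-penalized log-likelihood for logistic regression by plain gradient ascent. The optimizer, step size, iteration count and toy data are assumptions chosen for illustration; in practice a dedicated routine would be used.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def ridge_objective(beta, X, y, lam):
    """L_Ridge(beta) = LogLik(beta) - lam * beta'beta for logistic regression."""
    loglik = 0.0
    for x, s in zip(X, y):
        p = sigmoid(sum(b * xi for b, xi in zip(beta, x)))
        loglik += math.log(p) if s == 1 else math.log(1.0 - p)
    return loglik - lam * sum(b * b for b in beta)

def fit_ridge(X, y, lam, step=0.01, iters=5000):
    """Gradient ascent on L_Ridge, starting from beta = 0 (assumed settings)."""
    beta = [0.0] * len(X[0])
    for _ in range(iters):
        grad = [-2.0 * lam * b for b in beta]        # gradient of the penalty
        for x, s in zip(X, y):
            p = sigmoid(sum(b * xi for b, xi in zip(beta, x)))
            for j, xi in enumerate(x):
                grad[j] += (s - p) * xi              # gradient of LogLik
        beta = [b + step * g for b, g in zip(beta, grad)]
    return beta

if __name__ == "__main__":
    # Hypothetical data: covariates (has('ball'), has('EU')), s = 1 for Sports.
    X = [[1, 0], [1, 0], [1, 1], [0, 1], [0, 1], [0, 0]]
    y = [1, 1, 1, 0, 0, 0]
    for lam in (0.1, 10.0):
        print(lam, fit_ridge(X, y, lam))
```

A larger $\lambda$ pulls the estimated coefficients closer to zero, which is the shrinkage effect described above.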

LASSO - SHRINKAGE AND VARIABLE SELECTION

- Replace the Ridge penalty

  $$L_{Ridge}(\beta) = \text{LogLik}(\beta) - \lambda \sum_{j=1}^{n} \beta_j^2$$

  by

  $$L_{Lasso}(\beta) = \text{LogLik}(\beta) - \lambda \sum_{j=1}^{n} |\beta_j|$$

- The $\beta$ that maximizes $L_{Lasso}(\beta)$ is called the Lasso estimator.
- Some parameters are shrunk exactly to zero ⇒ Lasso does both shrinkage AND variable selection.
- Lasso penalty is equivalent to a double exponential prior

  $$p(\beta_i) = \frac{\lambda}{2} \exp\left(-\lambda\, |\beta_i - 0|\right)$$
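To see why Lasso gives exact zeros while Ridge does not, consider a single coefficient with the quadratic log-likelihood $-\tfrac{1}{2}(\beta - z)^2$, where $z$ is the unpenalized estimate. This simplified setting and the function names are assumptions for illustration. The Ridge maximizer is then $z / (1 + 2\lambda)$, while the Lasso maximizer is the soft-thresholded value $\text{sign}(z)\max(|z| - \lambda, 0)$.

```python
def ridge_1d(z, lam):
    """Maximizer of -0.5 * (b - z)**2 - lam * b**2: proportional shrinkage."""
    return z / (1.0 + 2.0 * lam)

def lasso_1d(z, lam):
    """Maximizer of -0.5 * (b - z)**2 - lam * abs(b): soft-thresholding."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0  # small estimates are set exactly to zero

if __name__ == "__main__":
    for z in (3.0, 0.4, -1.5):
        print(z, ridge_1d(z, 0.5), lasso_1d(z, 0.5))
```

With $\lambda = 0.5$, the unpenalized estimate 0.4 becomes 0.2 under Ridge but exactly 0 under Lasso, so the corresponding covariate drops out of the model.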

SUPPORT VECTOR MACHINES

- One of the best classifiers around.
- Finds the line (hyperplane) in covariate space that maximally separates the two classes.
- When the points are not linearly separable: add a slack variable $\xi_i \geq 0$ for each observation. Allow misclassification, but make it costly.
- Non-linear separating curves can be obtained by basis expansion (think about adding $x^2$, $x^3$ and so on).
- The kernel trick makes it possible to handle many covariates.
- Drawback: not so easily extended to multi-class.
- svm function in R-package e1071 [or nltk.classify.svm]
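The role of the slack variables can be sketched with the standard soft-margin formulation, which the slides do not spell out: with labels $y_i \in \{-1, +1\}$, the slack is $\xi_i = \max(0, 1 - y_i(\mathbf{w}'\mathbf{x}_i + b))$ and the quantity to minimize is $\tfrac{1}{2}\mathbf{w}'\mathbf{w} + C \sum_i \xi_i$. The names `w`, `b` and `C` and the data below are assumptions for illustration.

```python
def slacks(w, b, X, y):
    """Slack xi_i = max(0, 1 - y_i * (w'x_i + b)) for labels y_i in {-1, +1}."""
    return [
        max(0.0, 1.0 - yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b))
        for xi, yi in zip(X, y)
    ]

def soft_margin_objective(w, b, X, y, C=1.0):
    """0.5 * w'w + C * sum of slacks; C sets how costly margin violations are."""
    return 0.5 * sum(wj * wj for wj in w) + C * sum(slacks(w, b, X, y))

if __name__ == "__main__":
    # Hypothetical separating line x1 = 0 and four observations.
    w, b = [1.0, 0.0], 0.0
    X = [[2.0, 0.0], [0.5, 1.0], [-2.0, 0.0], [0.5, -1.0]]
    y = [1, 1, -1, -1]
    print(slacks(w, b, X, y), soft_margin_objective(w, b, X, y))
```

A slack of zero means the point is on the correct side with full margin, a slack between 0 and 1 means it is inside the margin, and a slack above 1 means it is misclassified.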

LINEAR SVMS

[Figure: candidate separating lines H1, H2 and H3 in the (X1, X2) covariate plane.]

REGRESSION TREES AND RANDOM FOREST

- Binary partitioning tree.
- At each internal node decide:
  - Which covariate to split on
  - Where to split the covariate ($X_j < c$. Trivial for binary covariates)
- The optimal splitting variables and split-points are chosen to minimize the misclassification rate (or other similar measures).
- Random forest (RF) predicts using an average of many small trees.
- Each tree in RF is grown on a random subset of variables. This makes it possible to handle many covariates, and the trees can be grown in parallel.
- Advantage of RF: better predictions than single trees.
- RF is harder to interpret, but provides variable importance scores.
- R packages: tree and rpart (trees), randomForest (RF).
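The decision made at a single internal node can be sketched as an exhaustive search over covariates and cut points. This is a simplified illustration with hypothetical data and function names, not how the R packages listed above are implemented; candidate cuts are taken as midpoints between consecutive distinct values.

```python
from collections import Counter

def misclassified(labels):
    """Number of items that do not belong to the majority class of the group."""
    return len(labels) - max(Counter(labels).values()) if labels else 0

def best_split(X, y):
    """Return (error_rate, covariate_index, cut) for the rule X_j < cut
    that minimizes the misclassification rate, or None if no split exists."""
    best = None
    n = len(y)
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        for low, high in zip(values, values[1:]):
            cut = (low + high) / 2.0
            left = [s for row, s in zip(X, y) if row[j] < cut]
            right = [s for row, s in zip(X, y) if row[j] >= cut]
            error = (misclassified(left) + misclassified(right)) / n
            if best is None or error < best[0]:
                best = (error, j, cut)
    return best

if __name__ == "__main__":
    # Hypothetical covariates: (has('ball') coded 0/1, lexical diversity).
    X = [[1, 5.4], [1, 13.4], [0, 11.1], [0, 6.0]]
    y = ["Sports", "Sports", "News", "News"]
    print(best_split(X, y))
```

A full tree applies the same search recursively to the left and right groups; a random forest repeats this on random subsets of the covariates and averages the resulting trees.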


REGRESSION TREES


EVALUATING A CLASSIFIER: ACCURACY AND ERROR

- Confusion matrix:

| Decision \ Truth | Spam | Not Spam |
|------------------|------|----------|
| Spam             | tp   | fp       |
| Not Spam         | fn   | tn       |

  tp = true positive, fp = false positive

  fn = false negative, tn = true negative
- Accuracy is the proportion of correctly classified items

  $$\text{Accuracy} = \frac{tp + tn}{tp + tn + fn + fp}$$

- Error is the proportion of wrongly classified items

  $$\text{Error} = 1 - \text{Accuracy}$$

- Accuracy is problematic when tn is large. High accuracy can then be obtained by not acting at all!
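A small numerical illustration of the last point, using hypothetical counts: 1000 e-mails of which 10 are spam, and a classifier that never flags anything.

```python
def accuracy(tp, fp, fn, tn):
    """Proportion of correctly classified items."""
    return (tp + tn) / (tp + tn + fn + fp)

def error(tp, fp, fn, tn):
    """Proportion of wrongly classified items."""
    return 1.0 - accuracy(tp, fp, fn, tn)

if __name__ == "__main__":
    # "Not acting at all": every e-mail is labelled Not Spam.
    tp, fp, fn, tn = 0, 0, 10, 990
    print(accuracy(tp, fp, fn, tn), error(tp, fp, fn, tn))
```

The accuracy is 0.99 even though not a single spam message was caught, which motivates measures that ignore tn.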

EVALUATING A CLASSIFIER: THE F-MEASURE

- Confusion matrix:

| Choice \ Truth | Spam | Good |
|----------------|------|------|
| Spam           | tp   | fp   |
| Good           | fn   | tn   |

- Precision = proportion of selected items that the system got right

  $$\text{Precision} = \frac{tp}{tp + fp}$$

- Recall = proportion of spam that the system classified as spam

  $$\text{Recall} = \frac{tp}{tp + fn}$$

- F-measure is a trade-off between Precision and Recall (harmonic mean):

  $$F = \frac{1}{\alpha \dfrac{1}{\text{Precision}} + (1 - \alpha) \dfrac{1}{\text{Recall}}}$$
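The three measures can be computed directly from the confusion-matrix counts. The counts below are hypothetical, and the sketch does not guard against empty denominators (e.g. a system that selects nothing).

```python
def precision(tp, fp):
    """Proportion of selected items that the system got right."""
    return tp / (tp + fp)

def recall(tp, fn):
    """Proportion of true spam that the system classified as spam."""
    return tp / (tp + fn)

def f_measure(p, r, alpha=0.5):
    """Weighted harmonic mean of precision p and recall r."""
    return 1.0 / (alpha / p + (1.0 - alpha) / r)

if __name__ == "__main__":
    tp, fp, fn = 8, 2, 8   # hypothetical counts
    p, r = precision(tp, fp), recall(tp, fn)
    print(p, r, f_measure(p, r))
```

With $\alpha = 0.5$ the formula reduces to $2 \cdot \text{Precision} \cdot \text{Recall} / (\text{Precision} + \text{Recall})$; values of $\alpha$ closer to 1 put more weight on Precision.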