Lectures on Stochastic Analysis Autumn 2012 version Xue-Mei Li The University of Warwick

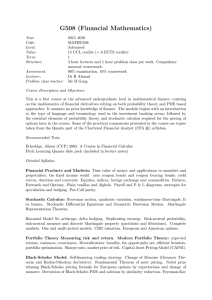

advertisement

Lectures on Stochastic Analysis

Autumn 2012 version

Xue-Mei Li

The University of Warwick

Typeset: April 9, 2013

Contents

1 Prologue
  1.1 What do we cover in this course and why?
  1.2 Exams
  1.3 References

2 Preliminaries
  2.1 Basics
    2.1.1 Sets
    2.1.2 Measurable Spaces
    2.1.3 The Monotone Class Theorem
    2.1.4 Measures
    2.1.5 Measurable Functions
  2.2 Integration with respect to a measure
  2.3 Lp spaces
  2.4 Notions of convergence of Functions
  2.5 Convergence Theorems
    2.5.1 Uniform Integrability
  2.6 Pushed Forward Measures, Distributions of Random Variables
  2.7 Lebesgue Integrals
  2.8 Total variation

3 Lectures
  3.1 Lectures 1-2
  3.2 Notation
  3.3 The Wiener Spaces
  3.4 Lecture 3. The Pushed forward Measure
  3.5 Basics of Stochastic Processes
  3.6 Brownian Motion
  3.7 Lecture 4-5
    3.7.1 Finite Dimensional Distributions
    3.7.2 Gaussian Measures on Rd
  3.8 Kolmogorov’s Continuity Theorem
    3.8.1 The existence of the Wiener Measure and Brownian Motion
    3.8.2 Lecture 6. Sample Properties of Brownian Motions
    3.8.3 The Wiener measure does not charge the space of finite energy*
  3.9 Product-σ-algebras
    3.9.1 The Borel σ-algebra on the Wiener Space
    3.9.2 Kolmogorov’s extension Theorem

4 Conditional Expectations
  4.1 Preliminaries
  4.2 Lecture 7-8: Conditional Expectations
  4.3 Properties of conditional expectation
  4.4 Conditioning on a Random Variable
  4.5 Regular Conditional Probabilities
  4.6 Lecture 9: Regular Conditional Distribution and Disintegration
    4.6.1 Lecture 9: Conditional Expectation as Orthogonal Projection
    4.6.2 Uniform Integrability of conditional expectations

5 Martingales
    5.0.3 Lecture 10: Introduction
  5.1 Lecture 11: Overview
  5.2 Lecture 12: Stopping Times
    5.2.1 Extra Reading
    5.2.2 Lecture 13: Stopped Processes
  5.3 Lecture 14: The Martingale Convergence Theorem
  5.4 Lecture 15: The Optional Stopping Theorem
  5.5 Martingale Inequalities (I)
  5.6 Lecture 16: Local Martingales

6 The quadratic Variation Process
  6.1 Lecture 18-20: The Basic Theorem
  6.2 Martingale Inequalities (II)

7 Stochastic Integration
  7.1 Lecture 21: Integration
  7.2 Lecture 21: Stochastic Integration
    7.2.1 Stochastic Integral: Characterization
    7.2.2 Properties of Integrals
    7.2.3 Lecture 22: Stochastic Integration (2)
  7.3 Localization
  7.4 Properties of Stochastic Integrals
  7.5 Itô Formula
  7.6 Lévy’s Martingale Characterization Theorem

8 Stochastic Differential Equations
    8.0.1 Stochastic processes defined up to a random time
  8.1 Lecture 24. Stochastic Differential Equations
  8.2 The definition
  8.3 Examples
    8.3.1 Pathwise Uniqueness and Uniqueness in Law
    8.3.2 Maximal solution and Explosion Time
    8.3.3 Strong and Weak Solutions
    8.3.4 The Yamada-Watanabe Theorem
    8.3.5 Strong Completeness, flow
    8.3.6 Markov Process and its semi-group
    8.3.7 The semi-group associated to the SDE
    8.3.8 The Infinitesimal Generator for the SDE
  8.4 Existence and Uniqueness Theorems
    8.4.1 Gronwall’s Lemma
    8.4.2 Main Theorems
    8.4.3 Global Lipschitz case
    8.4.4 Locally Lipschitz Continuous Case
    8.4.5 Non-explosion
  8.5 Girsanov Theorem
  8.6 Summary
  8.7 Glossary
Chapter 1

Prologue

The objective of the course is to understand and to learn the Theory of Martingales,

and basics of Stochastic Differential Equations (SDEs). We will also study relevant

topics on Brownian motions and on Markov processes. We cover basic theories

of martingales, of Brownian motions, of stochastic integration, and of stochastic

differential equations. We offer topics on stochastic flows and on the geometry of

stochastic differential equations.

1.1 What do we cover in this course and why?

What are Brownian motions? They result from summing many small influential factors (central limit theorem) over a time interval [0, t], t ≥ 0. So they have Gaussian laws that change with time t.

What are martingales? A stochastic process is a martingale if, roughly speaking, the conditioned average value at a future time t given its value at current time

s is its value at s. On average you expect to see what is already statistically known.

Continuous martingales and local martingales can be represented as stochastic integrals with respect to a Brownian motion by the Integral Representation Theorem

(The Clark-Ocone formula from Malliavin calculus gives an explicit representation).

What are Markov processes? The conditional average of the future value of a

Markov process given knowledge of its past up to now is the same as the conditional average of the future value of the Markov process given knowledge on its

present status only.

The Dubins-Schwarz Theorem says that a continuous martingale is a time change of a Brownian motion, i.e. a Brownian motion run at a random clock. The random clock is the quadratic variation of the martingale. The time change may not be Markovian and hence a martingale as a stochastic process may not have the Markov property. Markov processes relate to second order parabolic differential equations.

The following selected topics give guidance on what we intend to work on. We will cover some topics in depth.

• Brownian Motion. The definition(s), Lévy’s Characterization theorem, the

martingale property, the Markov Property, properties of sample paths.

• Stochastic integration with respect to Brownian motions and with respect to

semi-martingales.

• Rudiments of the Wiener Space.

• Diffusion Processes. Infinitesimal generators, Markov semigroups, Construction by SDEs.

• SDEs. Strong and weak solutions of SDEs, pathwise uniqueness and uniqueness in law of solutions, existence of a local solution, existence of a global solution, the infinitesimal generator and associated semi-groups, the Markov property and the Cocycle property, the non-explosion problem, the flow problem, and Girsanov Transform for SDEs.

• Martingale Theory. Martingales, local martingales, semi-martingales, change of measures and Girsanov Transform.

• Itô’s formula.

Prerequisites: Measure Theory and Theory of Integration (MA359) or advanced probability theory. Good working skills in Analysis (MA244 Analysis III) and Metric Spaces (MA222). Knowledge of Functional Analysis (MA3G7, MA3G8) would be helpful. The following course would be especially helpful: Brownian Motion (MA4F7).

Leads to: This module leads to Topics in Stochastic Analysis (MA595), Stochastic Partial Differential Equations.

Related to: This module is related to Markov Processes and Percolation Theory (MA3H2), Quantum Mechanics: Basic Principles and Probabilistic Methods (MA4A7), and Stochastic Partial Differential Equations.

1.2 Exams

This module is assessed by one single exam (at the beginning of term 3). I hand

out a problem sheet each week. The best way to review for the exam is to work on

the problems.

1.3 References

For a comprehensive study of martingales we refer to “Continuous Martingales and

Brownian Motion” by D. Revuz and M. Yor [23]. An enjoyable introduction to martingales is the book “Probability with Martingales” by D. Williams [29].

For further reading on Brownian motion see M. Yor’s recent books, e.g. [30], also [18] by R. Mansuy and M. Yor, and [19] by P. Mörters and Y. Peres.

For an overall reference for stochastic differential equations, we refer to “Stochastic differential equations and diffusion processes, second edition” by N. Ikeda and

S. Watanabe [13]. The small book [16] by H. Kunita is nice to read. There are two

lovely books by A. Friedman “Stochastic differential equations and applications”

[9, 10], and “Stochastic differential equations ” by I. Gihman and A.V. Skorohod

[11]. Another book that is good for working out examples is “Stochastic stability

of differential equations” by R. Z. Khasminskii [12]. Two books that are good for

beginners are “Stochastic Differential Equations” by B. Øksendal [20] and

“Brownian Motion and Stochastic Calculus” by I. Karatzas and S.E. Shreve [15].

The book by Øksendal has 6 editions. I like edition three and edition four: they

are neat and compact. For further studies there are “Diffusions, Markov Processes

and Martingales” by C. Rogers and D. Williams [25, 24]. Another lovely reference

book is “Foundations of Modern Probability” by Kallenberg [14]. It would work

great as a reference book. For SDEs driven by space time martingales see “Stochastic Flows and Stochastic Differential Equations” by H. Kunita [17]. For SDEs on

manifolds see “Stochastic differential equations on manifolds” by K. D. Elworthy

[4]. For work from the point of view of random dynamics see “Random Dynamical

systems” by L. Arnold [1] and “Random perturbations of dynamical systems” by

M. I. Freidlin and A.D. Wentzell. For further work on the geometry of SDEs have

a look at the books “On the geometry of diffusion operators and stochastic flows”

[7] and “The geometry of filtering” [5] by K. D. Elworthy, Y. LeJan and X.-M. Li.

For a theory on Markov processes and especially the treatment of the Martingale

problem see “Multidimensional diffusion processes” by D. Stroock and S. R.S.

Varadhan [27]. There are a number of nice and slim books by the two authors, see

D.W. Stroock [26] and S. R.S. Varadhan [28].

If you wish to review the theory of integration, try Royden’s book “Real Analysis”. It is easy to read and useful as a reference. For further study on measures see

“Real Analysis” by Folland [8]. Have a read of “Probability measures on metric

spaces” by Parthasarathy [21] for a deep theory on measures. The books “Measure

Theory, vol 1&2” by Bogachev [3] are quite useful. For some aspects of measures on

the Wiener space see “Convergence of Probability Measures” by Billingsley [2].

Chapter 2

Preliminaries

2.1 Basics

2.1.1 Sets

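[De Morgan’s laws.] For any index set I,

$$\Big(\bigcap_{\alpha\in I} E_\alpha\Big)^c = \bigcup_{\alpha\in I} E_\alpha^c, \qquad \Big(\bigcup_{\alpha\in I} E_\alpha\Big)^c = \bigcap_{\alpha\in I} E_\alpha^c.$$

If {An} is a sequence of sets define

$$\limsup_{n\to\infty} A_n = \bigcap_{m=1}^\infty \bigcup_{k=m}^\infty A_k, \qquad \liminf_{n\to\infty} A_n = \bigcup_{m=1}^\infty \bigcap_{k=m}^\infty A_k.$$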

2.1.2 Measurable Spaces

Definition 2.1.1 Let Ω be a set. A σ-algebra F on Ω is a collection of subsets of Ω satisfying the following conditions:

(1) ∅ ∈ F.

(2) F is closed under complement: if A ∈ F then Ac ∈ F.

(3) F is closed under countable unions: if An ∈ F for all n, then $\bigcup_{n=1}^\infty A_n \in F$.

The pair (Ω, F) is a measurable space and elements of F are measurable sets.

Definition 2.1.2 Let G be a collection of subsets of Ω. The σ-algebra generated

by G is the smallest σ-algebra that contains G. It is denoted by σ(G).
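On a finite set Ω the generated σ-algebra can be computed by brute force, which may help to make the definition concrete. The following is a minimal sketch, assuming Ω is finite; the function name `generated_sigma_algebra` and the example sets are illustrative and not part of the notes.

```python
from itertools import combinations

def generated_sigma_algebra(omega, generators):
    """Return sigma(G) on a finite set omega as a set of frozensets."""
    omega = frozenset(omega)
    family = {frozenset(), omega} | {frozenset(g) for g in generators}
    changed = True
    while changed:
        changed = False
        current = list(family)
        # Close under complement.
        for a in current:
            c = omega - a
            if c not in family:
                family.add(c)
                changed = True
        # Close under pairwise union (sufficient on a finite set).
        for a, b in combinations(current, 2):
            u = a | b
            if u not in family:
                family.add(u)
                changed = True
    return family

omega = {1, 2, 3, 4}
sigma = generated_sigma_algebra(omega, [{1}, {1, 2}])
print(sorted(sorted(s) for s in sigma))
```

In this example the atoms are {1}, {2} and {3, 4}, so the generated σ-algebra consists of all unions of these three sets.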


Definition 2.1.3 Let (X, d) be a metric space with metric d. Denote by Ba(x) the open ball centred at x with radius a. The Borel σ-algebra, denoted by B(X), is defined to be

$$\sigma(\{B_a(x) : x \in X,\ a > 0\}).$$

Elements of B(X) are Borel sets.

If not otherwise mentioned, this is the σ-algebra we will use. Single points, and therefore countable subsets, of a metric space are always measurable since $\{x_0\} = \bigcap_n \{x : d(x, x_0) < \frac{1}{n}\}$.

2.1.3 The Monotone Class Theorem

Definition 2.1.4 Let Ω be a set. A non-empty family of subsets G of Ω is called a monotone class if limits of monotone sequences in G belong to G:

1. $\bigcup_{n=1}^\infty A_n \in G$ if An is an increasing sequence in G.

2. $\bigcap_{n=1}^\infty A_n \in G$ if An is a decreasing sequence in G.

The finite additive property together with the monotone class property is equivalent to the σ-additive property.

A σ-algebra is a monotone class. The intersection of monotone classes containing a common element is a monotone class.

Theorem 2.1.5 (Monotone Class Theorem) If A is an algebra, then the smallest monotone class m(A) of sets containing A is the σ-algebra σ(A) generated by A.

Definition 2.1.6 A collection of subsets A is a π-system if A, B ∈ A implies that A ∩ B ∈ A.

The intersection of π-systems is a π-system. Note that some people may insist that the empty set ∅ is in a π-system.

Definition 2.1.7 A family B of subsets of a set Ω is a σ-additive class, also called a Dynkin system, if

1. Ω ∈ B.

2. If B1 ⊂ B2, B1, B2 ∈ B then B2 \ B1 ∈ B.

3. If An is an increasing sequence in B then $\bigcup_{n=1}^\infty A_n \in B$.

Note that properties 1 and 2 imply that a σ-additive class is closed under taking complements. A σ-additive class is a monotone class. The intersection of any number of σ-additive classes is a σ-additive class.

Theorem 2.1.8 (Dynkin’s Lemma) If A is a π system then the σ-additive class

(Dynkin system) generated by A is the σ-algebra generated by A.

2.1.4 Measures

Definition 2.1.9 Let (Ω, F) be a measurable space. A measure µ is a function, µ : F → [0, ∞], with the following properties:

1. µ(∅) = 0.

2. Let {Aj} be a pairwise disjoint sequence from F, that is Ai ∩ Aj = ∅ whenever i ≠ j. Then

$$\mu\Big(\bigcup_{i=1}^\infty A_i\Big) = \sum_{i=1}^\infty \mu(A_i).$$

Definition 2.1.10 The triple (Ω, F, µ) is called a measure space. The measure µ is finite if µ(Ω) < ∞. It is a probability measure if µ(Ω) = 1. If µ(Ω) = 1, the triple (Ω, F, µ) is called a probability space. The measure is σ-finite if there are measurable sets $\{A_n\}_{n=1}^\infty$ of finite measure such that $\Omega = \bigcup_{n=1}^\infty A_n$. A measure on a Borel σ-algebra B(Ω) is called a Borel measure.

Property (2) is called the σ-additive property, which implies that if An is a non-decreasing sequence of measurable sets A1 ⊂ A2 ⊂ . . . ,

$$\lim_{n\to\infty} \mu(A_n) = \mu\Big(\bigcup_{n=1}^\infty A_n\Big). \tag{2.1}$$

Similarly if An is a non-increasing sequence of measurable sets A1 ⊃ A2 ⊃ . . . with µ(A1) < ∞,

$$\lim_{n\to\infty} \mu(A_n) = \mu\Big(\bigcap_{n=1}^\infty A_n\Big).$$

Definition 2.1.11 Consider the measure space (Ω, F, P ). We say that F is complete if any subset of a measurable set of null measure is in F.

Standard Assumption. We assume that the measure is a probability measure

and that the σ-algebra is complete.

In this case if f = g almost surely, that is they agree on a set of full measure,

then they are both measurable or both not measurable.

Remark 2.1.12 A measure on F is determined by its value on a generating set of

F that is a π system.

2.1.5 Measurable Functions

Definition 2.1.13 Given measurable spaces (Ω, F) and (S, B), a function f :

Ω → S is said to be measurable if f −1 (B) = {ω : f (ω) ∈ B} belongs to F

for each B ∈ B. Measurable functions are known as random variables, especially

if S = R.

Let (Ω, F) be a measurable space.

Example 2.1.14 The indicator function of a set A is

$$1_A(\omega) = \begin{cases} 1, & \text{if } \omega \in A, \\ 0, & \text{if } \omega \notin A, \end{cases}$$

a real valued function. The function 1A is measurable if and only if A ∈ F.

Example 2.1.15 A function f : E → R that only takes a finite number of values has the following form,

$$f(x) = \sum_{j=1}^n a_j 1_{A_j}(x),$$

where aj are the values that f takes and Aj = {x : f(x) = aj}. Then f is measurable if and only if the Aj are measurable sets.

Definition 2.1.16 A simple function is of the form

$$f(x) = \sum_{j=1}^n a_j 1_{A_j}(x),$$

where aj ∈ R and Aj are measurable sets.

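If f : X → Y is a function, A is a subset of Y and {Eα, α ∈ I} is a collection of subsets of Y, then

$$f^{-1}(A^c) = (f^{-1}(A))^c, \qquad f^{-1}\Big(\bigcap_{\alpha\in I} E_\alpha\Big) = \bigcap_{\alpha\in I} f^{-1}(E_\alpha), \qquad f^{-1}\Big(\bigcup_{\alpha\in I} E_\alpha\Big) = \bigcup_{\alpha\in I} f^{-1}(E_\alpha).$$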

Proposition 2.1.17

• Let f1 : (Ω, F) → (E1, A1) and f2 : (Ω, F) → (E2, A2) be measurable functions. Define the product σ-algebra

$$\mathcal{A}_1 \otimes \mathcal{A}_2 = \sigma\{A_1 \times A_2 : A_1 \in \mathcal{A}_1,\ A_2 \in \mathcal{A}_2\}.$$

Then h = (f1, f2) : (Ω, F) → (E1 × E2, A1 ⊗ A2) is measurable.

• If f : (X1, B1) → (X2, B2) and g : (X2, B2) → (X3, B3) are measurable functions then so is g ◦ f : (X1, B1) → (X3, B3).

• If f, g, fn : (X, B) → (R, B(R)) are measurable functions, then the following functions are measurable: f + g, f g, $\max(f, g) = \frac{(f+g)+|f-g|}{2}$, $f^+ = \max(f, 0)$, $f^-$, $\limsup f_n$, $\liminf f_n$, $\sup_{n\ge 1} f_n$, $\inf_{n\ge 1} f_n$, and $\lim_{n\to\infty} f_n$ (when the limit exists).

Proposition 2.1.18 If f : E → [0, ∞] is a positive measurable function, there is a

sequence {fn } of simple functions such that

0 ≤ f1 ≤ f2 ≤ · · · ≤ fn ≤ · · · ≤ f,

and fn → f pointwise. Furthermore fn → f uniformly on any set on which f is

bounded. Here both E and [0, ∞] are equipped with the relevant Borel σ-algebras.

Theorem 2.1.19

1. For every measurable function f, there is a sequence of simple functions fn such that for every x,

$$\lim_{n\to\infty} f_n(x) = f(x).$$

If f is bounded, fn can be chosen to be uniformly bounded.

2. If f : E → [0, ∞] is a positive measurable function, there is a sequence {fn} of simple functions such that

0 ≤ f1 ≤ f2 ≤ · · · ≤ fn ≤ · · · ≤ f,

and fn → f pointwise. Furthermore fn → f uniformly on any set on which f is bounded. Here both E and [0, ∞] are equipped with the relevant Borel σ-algebras.
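One standard way to obtain such an increasing sequence is the dyadic construction fn = min(⌊2ⁿ f⌋ / 2ⁿ, n). The notes do not spell out a construction, so the sketch below is an assumption about how the approximation can be realised; the name `dyadic_approx` and the test function x² are illustrative.

```python
import math

def dyadic_approx(f, n):
    """n-th simple-function approximation of a non-negative function f."""
    def f_n(x):
        value = f(x)
        # Round down to the dyadic grid of mesh 2**-n, then cap at n.
        return min(math.floor(value * 2**n) / 2**n, n)
    return f_n

f = lambda x: x * x
xs = [k / 10 for k in range(0, 31)]      # sample points in [0, 3]
for n in (1, 2, 4, 8):
    f_n = dyadic_approx(f, n)
    # Largest error on the region where f is bounded by n.
    err = max(f(x) - f_n(x) for x in xs if f(x) <= n)
    print(n, err)
```

Each fn takes finitely many values, fn ≤ fn+1 ≤ f, and on the set {f ≤ n} the error is at most 2⁻ⁿ, which gives the uniform convergence on sets where f is bounded.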

Proposition 2.1.20 Let (X, B(X)) and (Y, B(Y)) be metric spaces with their Borel σ-algebras. If f : X → Y is continuous then it is Borel measurable.

2.2 Integration with respect to a measure

Let (E, F, µ) be a measure space. Let

$$\mathcal{E} = \Big\{ f(x) = \sum_{j=1}^n a_j 1_{A_j}(x) : A_j \in \mathcal{F},\ a_j \in \mathbb{R} \Big\}$$

be the set of (measurable) simple functions. We define the integral of $f = \sum_{j=1}^n a_j 1_{A_j}$ with respect to µ as below:

$$\int_{x\in E} f\, d\mu(x) = \sum_{j=1}^n a_j\, \mu(A_j).$$

If aj, j = 1, . . . , n are distinct values, then Aj = f⁻¹({aj}).

Definition 2.2.1 Let f : E → [0, ∞] be a positive measurable function. Define

$$\int f\, d\mu = \sup_{g \in \mathcal{E} :\, g \le f} \int g\, d\mu.$$

Definition 2.2.2 Let f : E → R be a general measurable function. Write $f = f^+ - f^-$. If both $\int f^+ d\mu$ and $\int f^- d\mu$ are finite, we say that f is integrable with respect to µ and the integral is defined to be:

$$\int_E f\, d\mu = \int_E f^+\, d\mu - \int_E f^-\, d\mu.$$

The set of all integrable functions is denoted by L1(µ), elements of which are said to be L1 functions.

If A is a measurable set, we denote by any of the following the same integral:

$$\int_A f\, d\mu, \qquad \int_A f(x)\, \mu(dx), \qquad \int_E 1_A(x) f(x)\, d\mu(x).$$

Proposition 2.2.3 Assume that f, g ∈ L1(µ).

1. If f ∈ L1(µ) then |f| ∈ L1(µ), and

$$\Big|\int f\, d\mu\Big| \le \int |f|\, d\mu.$$

2. If a, b ∈ R, then af + bg ∈ L1(µ) and

$$\int (af + bg)\, d\mu = a \int f\, d\mu + b \int g\, d\mu.$$

3. If f ≤ g, then

$$\int f\, d\mu \le \int g\, d\mu.$$

4. If A is a measurable set, define $\int_A f\, d\mu = \int 1_A f\, d\mu$. Then

$$\int_A f\, d\mu = \int_A g\, d\mu$$

for all measurable sets A implies that f = g almost surely.

5. If f ≥ 0 and $\int f\, d\mu = 0$ then f = 0 a.e.

2.3 Lp spaces

Let (E, B, µ) be a measure space. Two functions f, g : E → R are equivalent if f = g almost surely. For 1 ≤ p < ∞, we define the Lp space:

$$L^p(E, \mathcal{B}, \mu) = \Big\{ f : E \to \mathbb{R} : f \text{ measurable},\ \int_E |f(x)|^p\, d\mu(x) < \infty \Big\}.$$

Define $\|f\|_{L^p} = \big(\int_E |f(x)|^p\, d\mu(x)\big)^{1/p}$. Let L∞ be the set of essentially bounded measurable functions with $\|f\|_{L^\infty} = \inf\{a : \mu(|f(x)| > a) = 0\}$. Then the Lp spaces are Banach spaces. The notations Lp(E, B), Lp(E) or Lp may be used for simplicity.

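Proposition 2.3.1 Hölder’s inequality (Cauchy-Schwarz if p = q = 2):

$$\int |fg|\, d\mu \le \Big(\int |f|^p\, d\mu\Big)^{\frac{1}{p}} \Big(\int |g|^q\, d\mu\Big)^{\frac{1}{q}}, \qquad \frac{1}{p} + \frac{1}{q} = 1.$$

Minkowski inequality: for p ≥ 1,

$$\|f + g\|_{L^p} \le \|f\|_{L^p} + \|g\|_{L^p}.$$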
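Both inequalities can be checked numerically on a finite measure space, where integrals are weighted sums. This is only a sanity check under assumed data: the weights, the functions f and g, and the exponent p = 3 are arbitrary choices made for illustration.

```python
import random

def lp_norm(values, weights, p):
    """(sum_i |v_i|^p w_i)^(1/p): the L^p norm for the measure with masses w_i."""
    return sum(abs(v) ** p * w for v, w in zip(values, weights)) ** (1.0 / p)

random.seed(0)
n = 50
weights = [random.random() for _ in range(n)]       # masses mu({i}) >= 0
f = [random.uniform(-1, 1) for _ in range(n)]
g = [random.uniform(-1, 1) for _ in range(n)]

p = 3.0
q = p / (p - 1.0)                                    # conjugate exponent, 1/p + 1/q = 1

# Hoelder: integral of |fg| against the product of the L^p and L^q norms.
lhs_holder = sum(abs(a * b) * w for a, b, w in zip(f, g, weights))
rhs_holder = lp_norm(f, weights, p) * lp_norm(g, weights, q)

# Minkowski: triangle inequality for the L^p norm.
lhs_mink = lp_norm([a + b for a, b in zip(f, g)], weights, p)
rhs_mink = lp_norm(f, weights, p) + lp_norm(g, weights, p)

print(lhs_holder <= rhs_holder, lhs_mink <= rhs_mink)
```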

Suppose that µ is a finite measure. Then by Hölder’s inequality, $L^q \subset L^p$ for p < q.

A function φ : R → R is convex if $\phi(\sum_i p_i x_i) \le \sum_i p_i \phi(x_i)$ where pi are real positive numbers such that $\sum_i p_i = 1$. If φ is twice differentiable, φ is convex if and only if φ′′ ≥ 0. Examples of convex functions are: $\phi(x) = |x|^p$ for p > 1, $\phi(x) = e^x$.

Theorem 2.3.2 (Jensen’s Inequality) If φ is a convex function then for all integrable functions f and µ a probability measure

$$\phi\Big(\int f\, d\mu\Big) \le \int \phi(f)\, d\mu.$$

Proposition 2.3.3 (Chebyshev’s inequality) If X is an Lp random variable then for a > 0,

$$P(|X| \ge a) \le \frac{1}{a^p}\, E|X|^p.$$
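Jensen’s and Chebyshev’s inequalities can be verified exactly on a small probability space. The sketch below assumes X is the outcome of a fair die, takes φ(x) = x² for Jensen, and p = 2, a = 5 for Chebyshev; these choices are illustrative only.

```python
from fractions import Fraction

outcomes = range(1, 7)                        # a fair die
prob = {x: Fraction(1, 6) for x in outcomes}

def expect(h):
    """E[h(X)] for the die X."""
    return sum(h(x) * prob[x] for x in outcomes)

# Jensen with phi(x) = x^2: phi(E X) <= E phi(X).
jensen_lhs = expect(lambda x: x) ** 2
jensen_rhs = expect(lambda x: x * x)

# Chebyshev with p = 2 and a = 5: P(|X| >= a) <= E|X|^p / a^p.
a, p = 5, 2
tail = sum(prob[x] for x in outcomes if abs(x) >= a)
bound = expect(lambda x: abs(x) ** p) / Fraction(a) ** p

print(jensen_lhs, jensen_rhs, tail, bound)
```

Here φ(EX) = 49/4 ≤ 91/6 = Eφ(X), and P(X ≥ 5) = 1/3 ≤ 91/150.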

Theorem 2.3.4 If f ∈ L1, then for any ε > 0 there is a simple function φ such that $\int |f - \phi|\, d\mu < \varepsilon$.

Theorem 2.3.5 (The monotone convergence theorem) If fn is a non-decreasing sequence converging to f almost surely, and $f_n^- \le g$ where g is integrable, then

$$\int \lim_{n\to\infty} f_n\, d\mu = \lim_{n\to\infty} \int f_n\, d\mu.$$

Proof This is shown when fn ≥ 0. Otherwise take gn = fn + g. Then gn ≥ 0 and {gn} is a non-decreasing sequence.

Theorem 2.3.6 (Dominated Convergence Theorem) If |fn| ≤ g where g is an integrable function, and fn → f a.e., then f is integrable and

$$\lim_{n\to\infty} \int f_n\, d\mu = \int \lim_{n\to\infty} f_n\, d\mu.$$

Theorem 2.3.7 (Fatou’s lemma) If fn is bounded below by an integrable function then

$$\int \liminf_{n\to\infty} f_n\, d\mu \le \liminf_{n\to\infty} \int f_n\, d\mu.$$

2.4 Notions of convergence of Functions

Definition 2.4.1 Let (E, B, µ) be a measure space. Let fn be a sequence of measurable functions.

• fn converges to f almost surely if there is a set Ω0 with µ(Ω0) = 0 such that

$$\lim_{n\to\infty} f_n(\omega) = f(\omega), \qquad \forall \omega \notin \Omega_0.$$

• The sequence fn is said to converge to f in measure if for any δ > 0

$$\lim_{n\to\infty} \mu(|f_n - f| > \delta) = 0.$$

• fn converges to f in Lp if

$$\int |f_n - f|^p\, d\mu \to 0.$$

Proposition 2.4.2 Let An be measurable sets. The indicator functions 1An → 0

in probability if and only if µ(An ) → 0.

2.5 Convergence Theorems

Proposition 2.5.1

1. If fn converges to f in Lp, p ≥ 1, it converges to f in measure.

2. If fn converges to f in measure there is a subsequence of fn which converges almost surely.

3. fn converges to f in measure if and only if every subsequence has a further subsequence that converges to f a.s.
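A classical illustration, not taken from the notes, is the "typewriter" sequence on [0, 1] with Lebesgue measure: indicators of dyadic intervals that sweep across [0, 1] again and again. It converges to 0 in measure but at no point, while the subsequence indexed by n = 2ᵐ converges to 0 almost surely. The sketch below assumes this sequence; the helper names are illustrative.

```python
def typewriter_interval(n):
    """Interval [k/2^m, (k+1)/2^m] where n = 2^m + k, 0 <= k < 2^m, n >= 1."""
    m = n.bit_length() - 1
    k = n - 2**m
    return k / 2**m, (k + 1) / 2**m

def f(n, x):
    """Indicator function of the n-th typewriter interval."""
    left, right = typewriter_interval(n)
    return 1.0 if left <= x <= right else 0.0

x = 0.3
# Indices n <= 1024 at which f_n(x) = 1: one per dyadic level, so they never stop.
hits = [n for n in range(1, 1025) if f(n, x) == 1.0]
# Lebesgue measure of {f_n > delta} for 0 < delta < 1 is the interval length.
lengths = [typewriter_interval(n)[1] - typewriter_interval(n)[0]
           for n in (1, 2, 4, 8, 1024)]
print(lengths)
print(hits)
```

The interval lengths tend to 0, which is convergence in measure, yet every dyadic level contains an index n with fn(x) = 1, so fn(x) does not converge.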

Theorem 2.5.2 (Egoroff’s Theorem*) Suppose that µ(E) < ∞. Let fn be a sequence of functions converging to f almost surely. Then for any ε > 0 there is a set $E_\varepsilon \subset E$ with $\mu(E_\varepsilon^c) \le \varepsilon$ such that fn converges to f uniformly on $E_\varepsilon$. In particular fn converges to f in measure.

2.5.1 Uniform Integrability

Let (Ω, F, µ) be a measure space.

Definition 2.5.3 A family of real-valued functions (fα, α ∈ I), where I is an index set, is uniformly integrable (u.i.) if

$$\lim_{C\to\infty} \sup_{\alpha} \int_{\{|f_\alpha| \ge C\}} |f_\alpha|\, d\mu = 0.$$

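Proposition 2.5.4 Suppose that $\sup_\alpha E|f_\alpha|^p < \infty$ for some p > 1. Then the family (fα, α ∈ I) is uniformly integrable.

Proof This follows from

$$\sup_\alpha \int_{\{|f_\alpha| \ge C\}} |f_\alpha|\, d\mu \le \frac{1}{C^{p-1}} \sup_\alpha \int_{\{|f_\alpha| \ge C\}} |f_\alpha|^p\, d\mu \to 0 \quad \text{as } C \to \infty.$$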
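The role of the exponent p > 1 can be seen on two explicit families on [0, 1] with Lebesgue measure, chosen here for illustration and not taken from the notes: fn = n·1_[0,1/n] is bounded in L1 but not uniformly integrable, while gn = √n·1_[0,1/n] is bounded in L2 and hence uniformly integrable. The sketch only looks at the first N members of each family, which is an assumption of the numerical check.

```python
import math

def tail_integral(height, width, C):
    """Integral of h*1_[0,width] over the set where it is >= C (Lebesgue measure, C > 0)."""
    return height * width if height >= C else 0.0

def sup_tail(family, C):
    """Supremum over the (finite) family of the tail integrals at level C."""
    return max(tail_integral(h, w, C) for h, w in family)

N = 10_000
f = [(n, 1.0 / n) for n in range(1, N + 1)]                # f_n = n 1_[0,1/n]
g = [(math.sqrt(n), 1.0 / n) for n in range(1, N + 1)]     # g_n = sqrt(n) 1_[0,1/n]

for C in (2.0, 10.0, 50.0):
    print(C, sup_tail(f, C), sup_tail(g, C))
```

For the family f the tail integrals stay near 1 however large C is, whereas for g they are bounded by 1/C, in line with the estimate in the proof with p = 2.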

Definition 2.5.5 A family {fα} is uniformly absolutely continuous, if for any ε > 0 there is a δ > 0 such that

$$\sup_{\alpha\in I} \int_A |f_\alpha|\, d\mu < \varepsilon, \qquad \forall A \in \mathcal{F} \text{ with } \mu(A) < \delta.$$

Lemma 2.5.6 Assume that µ(Ω) < ∞ and f ∈ L1. Given any ε > 0 there is δ > 0 such that if µ(A) < δ then $\int_A |f|\, d\mu < \varepsilon$.

Suppose that the measure µ is finite. The lemma above shows that an L1 function is (uniformly) absolutely continuous. The proposition below shows that it is uniformly integrable.

Proposition 2.5.7 Let µ be a finite measure. The family (fα, α ∈ I) is uniformly integrable if and only if the following two conditions hold:

1. (L1 boundedness): $\sup_{\alpha\in I} \int |f_\alpha|\, d\mu < \infty$.

2. The family fα is uniformly absolutely continuous.

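Proof We first assume that (fα, α ∈ I) is uniformly integrable. For any 0 < ε < 1, take C such that

$$\sup_\alpha \int_{\{|f_\alpha| \ge C\}} |f_\alpha|\, d\mu \le \varepsilon/2.$$

Then, since µ(Ω) < ∞, we have L1 boundedness,

$$\sup_\alpha \int |f_\alpha|\, d\mu \le \sup_\alpha \int_{\{|f_\alpha| \ge C\}} |f_\alpha|\, d\mu + \sup_\alpha \int_{\{|f_\alpha| < C\}} |f_\alpha|\, d\mu \le \frac{\varepsilon}{2} + C\mu(\Omega) < \infty.$$

Also

$$\begin{aligned}
\sup_\alpha \int_A |f_\alpha|\, d\mu &\le \sup_\alpha \int_{A \cap \{|f_\alpha| \ge C\}} |f_\alpha|\, d\mu + \sup_\alpha \int_{A \cap \{|f_\alpha| < C\}} |f_\alpha|\, d\mu \\
&\le \sup_\alpha \int_{\{|f_\alpha| \ge C\}} |f_\alpha|\, d\mu + \sup_\alpha \int_{A \cap \{|f_\alpha| < C\}} C\, d\mu \\
&\le \frac{\varepsilon}{2} + C\mu(A),
\end{aligned}$$

which is less than ε as soon as µ(A) < ε/(2C). Thus $\sup_\alpha \int_A |f_\alpha|\, d\mu \to 0$ as µ(A) → 0 (uniform absolute continuity).

We next prove the converse. Assume that (fα) is uniformly absolutely continuous and is L1 bounded. Then

$$\sup_\alpha \mu(|f_\alpha| \ge C) \le \frac{1}{C} \sup_\alpha \int |f_\alpha|\, d\mu$$

is small when C is large. By uniform absolute continuity, choosing C large we see that $\sup_\alpha \int_{\{|f_\alpha| \ge C\}} |f_\alpha|\, d\mu$ is as small as we want.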

Proposition 2.5.8 Let fn, f ∈ L1.

a) Suppose that fn → f in measure and fn is uniformly integrable. Then $\int |f_n - f|\, d\mu \to 0$.

b) Suppose that $\int |f_n - f|\, d\mu \to 0$ and µ(Ω) < ∞. Then fn → f in measure and fn is uniformly integrable.

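Proof Part a). Assume that {fn} is uniformly integrable. Then for any ε > 0 we may choose C > 1 such that

$$\sup_n \int_{\{|f_n| \ge C\}} |f_n|\, d\mu + \int_{\{|f| \ge C\}} |f|\, d\mu < \varepsilon.$$

Since fn → f in measure, we may choose N such that if n > N,

$$\mu(|f_n - f| > \varepsilon) < \frac{\varepsilon}{2C}.$$

It follows that, for n > N,

$$\begin{aligned}
\int |f_n - f|\, d\mu &\le \int_{\{|f_n - f| \le \varepsilon\}} |f_n - f|\, d\mu + \Big( \int_{\{|f_n - f| > \varepsilon\} \cap \{|f_n| \ge C\}} + \int_{\{|f_n - f| > \varepsilon\} \cap \{|f_n| \le C\}} \Big) |f_n|\, d\mu \\
&\quad + \Big( \int_{\{|f_n - f| > \varepsilon\} \cap \{|f| \ge C\}} + \int_{\{|f_n - f| > \varepsilon\} \cap \{|f| \le C\}} \Big) |f|\, d\mu \\
&\le \varepsilon + 2\varepsilon + 2 \int_{\{|f_n - f| > \varepsilon\}} C\, d\mu \\
&\le 3\varepsilon + 2C\mu(\{|f_n - f| > \varepsilon\}) \le 4\varepsilon.
\end{aligned}$$

Here the first term is bounded by εµ(Ω) = ε because µ is a probability measure (Standard Assumption). Hence $\int |f_n - f|\, d\mu \to 0$. Finally $|\int f_n\, d\mu - \int f\, d\mu| \le \int |f_n - f|\, d\mu \to 0$.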

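Proof of part b). The convergence in measure follows from

$$\mu(|f_n - f| > \varepsilon) \le \frac{1}{\varepsilon} \int |f_n - f|\, d\mu \to 0.$$

For the uniform integrability, we observe that

$$\int_A |f_n|\, d\mu \le \int_\Omega |f_n - f|\, d\mu + \int_A |f|\, d\mu.$$

Take A = Ω to see that {fn} is L1 bounded. By Lemma 2.5.6, for any ε > 0, there is δ > 0 such that if µ(A) < δ, then $\int_A |f|\, d\mu < \varepsilon$. This means that

$$\int_A |f_n|\, d\mu \le \int_\Omega |f_n - f|\, d\mu + \varepsilon,$$

where the first term on the right is smaller than ε for all large n; the finitely many remaining fn are handled by applying Lemma 2.5.6 to each of them. Hence {fn} is uniformly absolutely continuous. Apply Proposition 2.5.7 to conclude the uniform integrability.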

2.6 Pushed Forward Measures, Distributions of Random Variables

Let (Ω, F, ν) be a measure space and (S, B) a measurable space. Let f : Ω → S be a measurable function. The pushed forward measure f∗(ν) on (S, B) is defined as follows:

$$f_*(\nu)(B) = \nu\{x : f(x) \in B\}, \qquad B \in \mathcal{B}.$$

Lemma 2.6.1 Let α : S → R be a bounded Borel measurable function. Then

$$\int_\Omega \alpha \circ f\, d\nu = \int_S \alpha\, d(f_*(\nu)). \tag{2.2}$$

Let (Ω, F, P) be a probability space. Let X : Ω → S be a measurable function. Let α : S → R be a bounded measurable function. Define

$$E\alpha(X) = \int_\Omega \alpha \circ X\, dP.$$

The measure X∗(P) on S is called the probability distribution or the probability law of X. Let us denote this measure by µX,

$$\mu_X(B) = P(\omega : X(\omega) \in B).$$

The change of variable formula says that

$$E\alpha(X) = \int_S \alpha(y)\, d\mu_X(y).$$

If S = R, then µX((−∞, a]) = P(ω : X(ω) ≤ a). In particular,

$$EX = \int_S y\, d\mu_X(y), \qquad \mathrm{var}(X) = \int_S (y - EX)^2\, d\mu_X(y).$$
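On a finite probability space the change of variable formula is a regrouping of a finite sum, which can be checked exactly. The sketch below assumes Ω is the set of outcomes of two fair dice, X is their sum and α(y) = y²; all of these are illustrative choices.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

omega = list(product(range(1, 7), repeat=2))      # outcomes of two fair dice
P = {w: Fraction(1, 36) for w in omega}

def X(w):
    """The random variable: sum of the two dice."""
    return w[0] + w[1]

# Pushed forward measure mu_X, stored through its point masses mu_X({y}).
mu_X = defaultdict(Fraction)
for w in omega:
    mu_X[X(w)] += P[w]

alpha = lambda y: y * y
lhs = sum(alpha(X(w)) * P[w] for w in omega)       # integral of alpha(X) over Omega
rhs = sum(alpha(y) * m for y, m in mu_X.items())   # integral of alpha over S against mu_X
print(lhs, rhs, mu_X[7])
```

Both sides equal 329/6, and the law of X gives mass 1/6 to the value 7.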

If X1, . . . , Xn are real valued random variables, then (X1, . . . , Xn) is an Rn-valued random variable. The joint distribution of X1, . . . , Xn is the measure on Rn induced by (X1, . . . , Xn).

2.7 Lebesgue Integrals

Definition 2.7.1 Let (Ω, F, P ) be a measure space. We say that F is complete if

any subset of a measurable set of null measure is in F.

The sets in the completion of the Borel σ-algebra are said to be Lebesgue measurable. The Lebesgue measure L : B(R) → [0, ∞] is determined by

$$L(A) = \inf\Big\{ \sum_j (b_j - a_j) : \bigcup_{j=1}^\infty (a_j, b_j) \supset A \Big\}, \qquad A \in \mathcal{B}(\mathbb{R}).$$

The measure L of an interval is the length of the interval. The integrals developed in the last section for functions f : R → R are Lebesgue integrals.

Proposition 2.7.2 A bounded function f : [a, b] → R is Riemann integrable if and only if the set of discontinuities of f has Lebesgue measure zero.

Proposition 2.7.3 If a bounded function f : [a, b] → R is Riemann integrable, then it is Lebesgue measurable and Lebesgue integrable. Furthermore, the integrals have the common value:

$$\int_{[a,b]} f(x)\, d\mu = \int_a^b f(x)\, dx.$$

Let F be a right continuous increasing function. There is a unique Borel measure on R, called the Lebesgue-Stieltjes measure associated to F, such that

$$\mu_F((a, b]) = F(b) - F(a).$$

Furthermore

$$\mu_F(A) = \inf\Big\{ \sum_j (F(b_j) - F(a_j)) : \bigcup_{j=1}^\infty (a_j, b_j) \supset A \Big\}.$$

It is customary to write $\int h\, dF$ for the integral $\int h\, d\mu_F$.

If F = f − g where f, g are right continuous increasing functions, define

µF = µf − µg . The measure µF is not necessarily positive valued. It is a signed

measure.

Definition 2.7.4 Let f, g : [a, b] → R be bounded functions. We say f is Riemann-Stieltjes integrable with respect to g if there is a number l such that for all ε > 0, there is δ > 0 such that for all partitions

$$\Delta : a = t_0 < t_1 < \dots < t_n = b$$

with mesh $|\Delta| := \max_{1 \le i \le n} (t_i - t_{i-1}) < \delta$,

$$\Big| \sum_{j=0}^{n-1} f(t_j^*)\, [g(t_{j+1}) - g(t_j)] - l \Big| < \varepsilon.$$

Here $t_j^*$ is any point in $[t_j, t_{j+1}]$. If so we define l to be the Riemann-Stieltjes integral of f with respect to g and this is denoted by $\int_a^b f(t)\, dg(t)$.
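The approximating sums in this definition are easy to compute. The sketch below assumes the illustrative choice f(t) = t and g(t) = t² on [0, 1], for which the Riemann-Stieltjes integral equals ∫ 2t² dt = 2/3; the function name and the `tag` parameter (which picks the point t*_j in each subinterval) are hypothetical.

```python
def riemann_stieltjes_sum(f, g, partition, tag=lambda left, right: left):
    """Sum of f(t_j*) [g(t_{j+1}) - g(t_j)] over a partition; tag chooses t_j*."""
    total = 0.0
    for left, right in zip(partition, partition[1:]):
        total += f(tag(left, right)) * (g(right) - g(left))
    return total

f = lambda t: t
g = lambda t: t * t
for n in (10, 100, 1000):
    partition = [j / n for j in range(n + 1)]     # uniform partition, mesh 1/n
    left_sum = riemann_stieltjes_sum(f, g, partition)
    right_sum = riemann_stieltjes_sum(f, g, partition, tag=lambda l, r: r)
    print(n, left_sum, right_sum)
```

As the mesh shrinks, the sums with left and right tags squeeze the value 2/3 from below and above, whichever tags are chosen.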

Take a function F that is absolutely continuous on [a, b]. Then

$$F(x) = F(a) + \int_a^x g(t)\, dt,$$

with g Lebesgue integrable and F differentiable at almost every point. Furthermore F′ = g almost everywhere. In this case

$$\int_a^b h(x)\, \mu_F(dx) = \int_a^b h(x) F'(x)\, dx.$$

A right continuous increasing function F on [0, ∞) has finite total variation on every bounded interval. We may assume that F(0) = 0. The measure µF is absolutely continuous with respect to the Lebesgue measure if and only if F is absolutely continuous.

2.8 Total variation

I will relate this to semi-martingales later.

Definition 2.8.1 Denote by P([a, b]) the set of all partitions ∆ : a = t0 < t1 < · · · < tn = b of [a, b]. The total variation of a function g on [a, b] is defined by

$$g_{TV}([a, b]) = \sup_{\Delta \in P([a,b])} \sum_{j} |g(t_j) - g(t_{j-1})|.$$

If $g_{TV}([a, b])$ is finite we say g is of bounded variation on [a, b].
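As an illustration of Definition 2.8.1, the sum over a single partition is always a lower bound for the total variation, and for a piecewise monotone function a partition containing the turning points attains it. The sketch below is an added example under that assumption; the function name `variation_over_partition` and the choice g = sin on [0, 2π], whose total variation is 4, are not from the notes.

```python
import math


def variation_over_partition(g, partition):
    """Sum of |g(t_j) - g(t_{j-1})| over one partition: a lower bound for the total variation."""
    return sum(abs(g(t1) - g(t0)) for t0, t1 in zip(partition, partition[1:]))


# Uniform grid on [0, 2*pi]; it contains (up to rounding) the turning points pi/2 and 3*pi/2.
grid = [2.0 * math.pi * i / 4000 for i in range(4001)]
tv_sin = variation_over_partition(math.sin, grid)
print(tv_sin)  # close to 4: sin rises by 1, falls by 2, rises by 1
```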

If f : R → R is increasing then it has left and right limits at every point and there are only countably many points of discontinuity. Furthermore f is differentiable almost everywhere.

Let f be a function. Define $f^+(x) = \max(f(x), 0)$ to be its positive part and $f^-(x) = -\min(f(x), 0)$ to be the absolute value of its negative part. Note that $|f(t_{i+1}) - f(t_i)| = [f(t_{i+1}) - f(t_i)]^+ + [f(t_{i+1}) - f(t_i)]^-$, hence the name ‘total variation’.

Proposition 2.8.2 A real valued function on the interval [a, b] is of bounded variation if and only if it is the difference of two monotone functions. Such a function is differentiable almost everywhere with respect to the Lebesgue measure.

Proposition 2.8.3 If f is an increasing function then its derivative exists almost everywhere. Furthermore f′ is Borel measurable.

Example 2.8.4 Let f be a real valued integrable function defined on [a, b].

1. Its indefinite integral $\int_a^x f(t)\,dt$ is of bounded variation and continuous.

2. The derivative of its indefinite integral equals f almost everywhere.


Chapter 3

Lectures

3.1 Lectures 1-2

The objective of the course is that, by the end, we should be able to understand and work with martingales and with stochastic differential equations.

Here is a stochastic differential equation (SDE) of Markovian type on $\mathbb R^d$ in differential form:

$$dx_t = \sum_{i=1}^m \sigma_i(t, x_t)\,dB_t^i + b(t, x_t)\,dt. \tag{3.1}$$

Here $(B_t^1, \dots, B_t^m)$ is an $\mathbb R^m$-valued “Brownian Motion” on a “filtered probability space” $(\Omega, \mathcal F, \mathcal F_t, P)$. Each $x_t$ is a function from the probability space Ω to $\mathbb R^d$. We assume that each $\sigma_i : \mathbb R_+ \times \mathbb R^d \to \mathbb R^d$ and $b : \mathbb R_+ \times \mathbb R^d \to \mathbb R^d$ are Borel measurable. Suitable conditions will be introduced to ensure that there is a ‘solution’ and that “uniqueness of solutions” holds. If $\sigma_i \equiv 0$ for all i, this is an ordinary differential equation: $\dot x_t = b(t, x_t)$. Please review the results concerning ODEs, in particular the existence and uniqueness theorem which you have learnt.

The integral form of the SDE (3.1), given below, is more instructive:

$$x_t = x_0 + \sum_{i=1}^m \int_0^t \sigma_i(s, x_s)\,dB_s^i + \int_0^t b(s, x_s)\,ds.$$

We will soon define “stochastic integrals”. The notation $\int_0^t \sigma_i(s, x_s)\,dB_s^i$ denotes the stochastic integral, Itô integral in this case, of $\sigma_i(s, x_s)$ with respect to the one dimensional Brownian motion $(B_s^i)$.

Let $(f_t)$ be a suitable stochastic process, $f_t : \Omega \to \mathbb R$, and $(B_t)$ a Brownian motion. The “stochastic integral $\int_0^t f_s\,dB_s$” is a “local martingale”. We will need to study martingales, local martingales and semi-martingales. By the “integral representation theorem for martingales”, all “sample continuous” local martingales are stochastic integrals of the form above. The Clark-Ocone formula, a popular formula in Malliavin calculus, gives an explicit formula for the integrand. The $L^2$ chaos expansion takes this further.

You might want to ask why we study SDEs. We will be able to answer this question better later in the course and at the end of it. For the moment just believe that SDEs are popular and useful.

3.2 Notation

In this section we fix the notation.

Let (Ω, F) be a measurable space: Ω is a set and F is a σ-algebra of subsets of Ω. By “F is a σ-algebra” we mean that: ∅ ∈ F, the complement $A^c$ of a set A from F is in F, and the union $\cup_{i=1}^\infty A_i$ of a sequence of sets $A_i \in F$ is in F.

A signed measure µ on the measurable space is a function from F to R such that µ(∅) = 0 and $\mu(\cup_{n=1}^\infty A_n) = \sum_{n=1}^\infty \mu(A_n)$ if $\{A_n\}_{n=1}^\infty$ is pairwise disjoint. Unless otherwise stated, by a measure we mean one that takes non-negative values, $\mu : F \to \mathbb R_+$. The measure is said to be finite if µ(Ω) < ∞ and it is a probability measure if µ(Ω) = 1. A non-zero finite measure can always be normalised to a probability measure: $\tilde\mu(A) := \mu(A)/\mu(\Omega)$. A measure is σ-finite if there is a countable cover of Ω by measurable sets of finite measure.

In this paragraph we briefly review metric spaces. The restriction of the distance d to a subset A of a metric space is a distance function on A. A subset of a metric space is complete if any Cauchy sequence in it converges to a point in the set. A closed subset of a complete metric space is complete. A metric space is separable if it has a countable dense set. A product metric on the product of $(X_1, d_1)$ and $(X_2, d_2)$ is $\max(d_1, d_2)$.

Definition 3.2.1

• A collection T of subsets of a set X is a topology if ∅ ∈ T, X ∈ T, and T is closed under the union of any number of sets and under the intersection of a finite number of sets. Sets from T are called open sets. Complements of open sets are closed sets.

• The Borel σ-algebra of a topological space is the smallest σ-algebra that contains all open sets and is denoted by B(X). Elements of the Borel σ-algebra are Borel sets.

Borel sets include: open sets, closed sets, countable unions and intersections of closed sets.

Example 3.2.2 Let (X, d) be a metric space. The collection of open sets defined by the distance defines the metric topology on X. The metric topology is determined by open balls. The following are examples of metric spaces: [0, 1], $\mathbb R^n$, Banach spaces such as $L^p((\Omega, \mu); \mathbb R)$, Hilbert spaces, and finite dimensional manifolds.

In this course we are concerned only with metric spaces that are separable and complete. Single points $\{x\} = \cap_{n=1}^\infty B(x, \frac1n)$ are Borel sets.

Definition 3.2.3

• Let $(X_1, F_1)$ and $(X_2, F_2)$ be measurable spaces. A function $f : X_1 \to X_2$ is measurable if the pre-image of a measurable set is measurable: $f^{-1}(A) \in F_1$ if $A \in F_2$.

• Let $(X_1, \tau_1)$ and $(X_2, \tau_2)$ be topological spaces. A function $f : X_1 \to X_2$ is continuous if the pre-image of an open set is open: $f^{-1}(U) \in \tau_1$ if $U \in \tau_2$.

Proposition 3.2.4 All continuous maps are Borel measurable.

Proof For i = 1, 2, let $(X_i, \tau_i)$ be topological spaces and $B_i$ the Borel σ-algebras. Let $f : X_1 \to X_2$ be a continuous function. Let

$$f^{-1}(B_2) = \{f^{-1}(A) : A \in B_2\}, \qquad f^{-1}(\tau_2) = \{f^{-1}(A) : A \in \tau_2\}.$$

Then $f^{-1}(B_2)$ is a σ-algebra and $f^{-1}(\tau_2) \subset \tau_1 \subset B_1$ by the continuity of f. It is also easy to show that $\sigma(f^{-1}(\tau_2)) = f^{-1}(B_2)$. Hence $f^{-1}(B_2) \subset B_1$, that is, f is Borel measurable.

Definition 3.2.5 Two complete separable metric spaces are measurably isomorphic if there is a bijection φ such that both φ and $\varphi^{-1}$ are measurable.

Definition 3.2.6 The space ([0, 1], B([0, 1])), and also those isomorphic to it, is a standard Borel space.

Let (X, F) be a measurable space. A measurable set E inherits a σ-algebra by restriction:

{E ∩ A : A ∈ F}.

Theorem 3.2.7 (Theorem 2.13, [21]) Let $X_i$, i = 1, 2, be complete separable metric spaces, and $E_i \subset X_i$ Borel sets. Then $E_1$ and $E_2$, with the restricted Borel σ-algebras, are measurably isomorphic if and only if they have the same cardinality.

The cardinality of $[0, 1]^{\mathbb Z}$ is the same as that of the continuum. The cardinality of $C([0, 1]; \mathbb R^d)$ is that of the continuum. This is because continuous functions are determined by their values at rational numbers (a countable set), and hence the cardinality of the set of continuous functions is at most that of $\mathbb R^{\mathbb Q}$, a countable product of copies of R. The cardinality of $L^p(\mathbb R; \mathbb R)$ is that of the continuum. First we consider $L^p$ spaces of functions from [2πn, 2π(n + 1)] → R. The Fourier series of a function in $L^p$ converges to it in $L^p$. Hence the function is determined by a countable number of quantities (its Fourier coefficients) and the sines and cosines with respect to which the expansion is made.

For non-Borel σ-algebras, a more general concept exists. The moral of this story is: “Most” probability spaces without atoms are “measurably isomorphic” to the standard Borel space. All “reasonable” measure spaces that are rich enough to support a Brownian motion are standard Borel spaces.

3.3 The Wiener Spaces

Define $W^d \equiv C([0, 1]; \mathbb R^d) := \{\omega : [0, 1] \to \mathbb R^d \text{ continuous}\}$ and

$$W_0^d \equiv C_0([0, 1]; \mathbb R^d) := \{\omega : [0, 1] \to \mathbb R^d \text{ continuous},\ \omega(0) = 0\}.$$

Then $W^d$, with the uniform norm

$$\|\omega\| = \sup_{0 \le t \le 1} |\omega(t)|,$$

is a separable Banach space. Furthermore $W_0^d$ is a closed subspace. With appropriate modification the discussion below applies to both $W^d$ and $W_0^d$. The cardinality of both spaces is that of the continuum. Hence they, with their Borel σ-algebras, are standard Borel spaces.

Let $\{\omega_i\}$ be a countable dense subset of $W_0^d$ and let $\{t_k\}$ be an enumeration of Q ∩ [0, 1]. Then the topology is determined by the balls $\{\omega \in W_0^d : \|\omega - \omega_i\| < \frac1n\}$. The following sets form a base of the topology:

$$\{\omega \in W_0^d : |\omega(t_k) - \omega_i(t_k)| < \tfrac1n\} = W_0^d \cap \{\omega \in (\mathbb R^d)^{[0,1]} : |\omega(t_k) - \omega_i(t_k)| < \tfrac1n\}. \tag{3.2}$$

A cylindrical set is of the form

$$\{\omega \in W_0^d : \omega(t_1) \in A_1, \dots, \omega(t_k) \in A_k\} = \cap_{i=1}^k \{\omega(t_i) \in A_i\},$$

where $A_i \in B(\mathbb R^d)$ and $0 \le t_1 < t_2 < \dots < t_k \le 1$.

The collection Cyl of cylindrical sets generates $B(W_0^d)$, check e.g. Fubini’s theorem, and is measure determining (two finite measures on $(W_0^d, B(W_0^d))$ agreeing on Cyl will agree). In fact any π-system that generates a σ-algebra is measure determining.

Denote by $p_t$ the heat kernel on $\mathbb R^d$: for $x, y \in \mathbb R^d$,

$$p_t(x, y) = \frac{1}{(2\pi t)^{d/2}}\, e^{-\frac{|x-y|^2}{2t}}.$$

Then $p_t(x, y)\,dy$ is a probability measure.
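A quick numerical sanity check of the last statement in dimension d = 1 can be sketched as follows. This is an added illustration, not part of the notes: the helpers `heat_kernel` and `trapezoid`, the truncation of the real line to a finite window, and the parameter values are all assumptions. The second check uses the semigroup (Chapman-Kolmogorov) identity $\int p_s(x, y)\,p_t(y, z)\,dy = p_{s+t}(x, z)$, a standard fact about Gaussian kernels that underlies the consistency of formula (3.3).

```python
import math


def heat_kernel(t, x, y):
    """One dimensional heat kernel p_t(x, y) = (2 pi t)^(-1/2) exp(-(x - y)^2 / (2 t))."""
    return math.exp(-(x - y) ** 2 / (2.0 * t)) / math.sqrt(2.0 * math.pi * t)


def trapezoid(func, lo, hi, n):
    """Composite trapezoid rule on [lo, hi] with n subintervals."""
    h = (hi - lo) / n
    inner = sum(func(lo + i * h) for i in range(1, n))
    return h * (0.5 * func(lo) + inner + 0.5 * func(hi))


# Total mass of p_t(x, .) over a window wide enough that the tails are negligible.
t, x = 0.5, 0.3
total_mass = trapezoid(lambda y: heat_kernel(t, x, y), x - 12.0, x + 12.0, 4000)

# Chapman-Kolmogorov: integrating out the intermediate point gives p_{s+t}.
s, t2, z = 0.3, 0.7, 0.4
composed = trapezoid(lambda y: heat_kernel(s, 0.0, y) * heat_kernel(t2, y, z),
                     -15.0, 15.0, 6000)
direct = heat_kernel(s + t2, 0.0, z)
print(total_mass, composed, direct)
```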

Let πt : W d → Rd be the projection, πt (ω) = ω(t).

Theorem 3.3.1 There is a unique probability measure µ on $(W_0^d, B(W_0^d))$ such that for $0 < t_1 < t_2 < \dots < t_n \le 1$ and $A_1, \dots, A_n \in B(\mathbb R^d)$,

$$\mu(\omega : \pi_{t_1}(\omega) \in A_1, \dots, \pi_{t_n}(\omega) \in A_n) = \int_{A_1} \cdots \int_{A_n} p_{t_1}(0, x_1)\,p_{t_2 - t_1}(x_1, x_2) \cdots p_{t_n - t_{n-1}}(x_{n-1}, x_n) \prod_{i=1}^n dx_i. \tag{3.3}$$

This measure is commonly known as the Wiener measure. The space $(W_0^d, B(W_0^d), \mu)$ is the Wiener space.

We will give a proof of the existence in section 3.8.1. The map $\pi_t$ as a stochastic process on the probability space $(W_0^d, B(W_0^d), \mu)$ is a Brownian motion, which we define shortly.

Note. The interval [0, 1] can be replaced by [0, T ] where T is a positive number.

3.4 Lecture 3. The Pushed forward Measure

Let (X, B) and (Y, G) be two measurable spaces and µ a measure on (X, B). A measurable map φ : X → Y induces a measure $\varphi_*\mu$ on (Y, G) such that for any C ∈ G,

$$(\varphi_*\mu)(C) = \mu(\{x : \varphi(x) \in C\}).$$

Denote $\varphi^{-1}(C) = \{x : \varphi(x) \in C\}$.

Lemma 3.4.1 Let f : Y → R be in $L^1(Y, \varphi_*\mu)$. Then

$$\int_X f(\varphi(x))\,d\mu(x) = \int_Y f(y)\,d(\varphi_*\mu)(y).$$

Idea of Proof: First take $f = \sum_{i=1}^n a_i 1_{A_i}$, then

$$\int_Y f(y)\,d(\varphi_*\mu) = \int_Y \sum_{i=1}^n a_i 1_{A_i}\,d(\varphi_*\mu) = \sum_{i=1}^n a_i\,(\varphi_*\mu)(A_i) = \sum_{i=1}^n a_i\,\mu(\varphi^{-1}(A_i)).$$

On the other hand

$$\int_X f(\varphi(x))\,d\mu(x) = \int_X \sum_{i=1}^n a_i 1_{A_i}(\varphi(x))\,d\mu(x) = \sum_{i=1}^n a_i\,\mu(\{x : \varphi(x) \in A_i\}).$$

Next take f a positive function. Take an increasing sequence of simple functions that converges to f:

$$f_n(x) = \frac{1}{2^n}[2^n f(x)] \wedge n = \sum_{k=1}^{n2^n} \frac{k}{2^n}\,1_{A_k}(x),$$

where $A_k = \{x : f(x) \in [\frac{k}{2^n}, \frac{k+1}{2^n})\}$ for $k < n2^n$ and $A_{n2^n} = \{x : f(x) \ge n\}$. Note that $[2^n f(x)] = k$ if $k \le 2^n f(x) < k + 1$.

For $f \in L^1$ consider the integrals of $f^+$ and $f^-$ separately.
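On a finite set every function is simple, so the first step of the proof can be checked exactly. The sketch below is an added illustration under assumed data: the measure µ (uniform on {0, ..., 5}), the map φ(x) = x mod 3 and the test function f(y) = y² are arbitrary choices, and `pushforward` and `integrate` are hypothetical helper names.

```python
from fractions import Fraction


def pushforward(mu, phi):
    """Pushed forward measure: (phi_* mu)({y}) = mu({x : phi(x) = y}) for a finite measure."""
    nu = {}
    for x, mass in mu.items():
        y = phi(x)
        nu[y] = nu.get(y, Fraction(0)) + mass
    return nu


def integrate(f, measure):
    """Integral of f against a measure given as a dict {point: mass}."""
    return sum(f(point) * mass for point, mass in measure.items())


mu = {x: Fraction(1, 6) for x in range(6)}   # uniform probability on {0, ..., 5}
phi = lambda x: x % 3                        # measurable map into {0, 1, 2}
f = lambda y: y * y

lhs = integrate(lambda x: f(phi(x)), mu)     # integral of f(phi(x)) d mu(x) over X
rhs = integrate(f, pushforward(mu, phi))     # integral of f(y) d(phi_* mu)(y) over Y
print(lhs, rhs)
```

Using `Fraction` keeps the arithmetic exact, so the two sides can be compared with equality rather than with a tolerance.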

Let $\varphi : U \subset \mathbb R^d \to \varphi(U) \subset \mathbb R^d$ be a diffeomorphism onto its image, and write X = U and Y = φ(U). Let dx denote the Lebesgue measure. Then $\varphi^{-1}$ induces the pushed forward measure $(\varphi^{-1})_*(dx)$, which is the pull back of the Lebesgue measure by φ. If $f : \mathbb R^d \to \mathbb R$ is any bounded measurable function,

$$\int_Y f(x)\,dx = \int_X f(\varphi(x))\,(\varphi^{-1})_*(dx).$$

On the other hand,

$$\int_Y f(x)\,dx = \int_X f(\varphi(x))\,|\det T\varphi(x)|\,dx.$$

Taking, e.g. for any Borel set A, $f = 1_{\varphi(A)}$, so that $f(\varphi(x)) = 1_A(x)$, this shows that

$$\frac{d(\varphi^{-1})_*(dx)}{dx} = |\det T\varphi(x)|.$$

3.5 Basics of Stochastic Processes

Let (Ω, F, P ) be a measure space. Assume that P (Ω) = 1. Two measurable sets

A and B are independent if P (A ∩ B) = P (A)P (B).

Definition 3.5.1

1. Let $\{F_\alpha, \alpha \in \Lambda\}$ be a family of σ-algebras. We say that $\{F_\alpha, \alpha \in \Lambda\}$ are independent if for any finite index set $\{\alpha_1, \dots, \alpha_n\} \subset \Lambda$ and any sets $A_1 \in F_{\alpha_1}, \dots, A_n \in F_{\alpha_n}$,

$$P(\cap_{i=1}^n A_i) = \prod_{i=1}^n P(A_i).$$

2. A family of random variables are mutually independent if the σ-algebras generated by them are mutually independent.

Let (Ω, F, P) be a probability space. If $X : \Omega \to \mathbb R^d$ is measurable (i.e. a random variable), then $X_*P$ is the probability distribution of X and is denoted by $\mu_X$. And

$$EX = \int_\Omega X\,dP = \int_{\mathbb R^d} y\,d\mu_X(y).$$

Let $\pi_i : \mathbb R^n \to \mathbb R$ be the projection to the ith component. The tensor σ-algebra $\otimes^n B(\mathbb R)$ is

$$\otimes^n B(\mathbb R) = \sigma\{\pi_i^{-1}(A) : A \in B(\mathbb R),\ i = 1, \dots, n\}.$$

For example $\pi_1^{-1}(A) = A \times \mathbb R \times \dots \times \mathbb R$. If each $X_i$ is an R-valued measurable function, $(X_1, \dots, X_n) : \Omega \to \mathbb R^n$ is measurable with respect to $\otimes^n B(\mathbb R)$. The joint distribution of $(X_1, \dots, X_n)$ is the measure on $\mathbb R^n$ obtained by pushing P forward by the map $(X_1, \dots, X_n)$.

Definition 3.5.2 The random variables $\{X_1, \dots, X_n\}$ are independent if

$$\mu_{(X_1, \dots, X_n)} = \mu_{X_1} \otimes \dots \otimes \mu_{X_n}.$$

Independence holds if the two measures in the identity above agree on cylindrical sets:

$$P(X_1 \in A_1, \dots, X_n \in A_n) = \prod_{i=1}^n P(X_i \in A_i), \qquad A_i \in B(\mathbb R).$$

Equivalently, for any $g_i : \mathbb R \to \mathbb R$ bounded measurable,

$$E\Big(\prod_{i=1}^n g_i(X_i)\Big) = \prod_{i=1}^n E\,g_i(X_i).$$

Let (Ω, F, P) be a probability space. Let S be a metric space which is considered to be endowed with the Borel σ-algebra B unless otherwise stated. In this course we take $S = \mathbb R^d$. Let I be a subset of R, e.g. R, [0, 1], [a, b], [a, b), and {1, 2, . . . }.

Definition 3.5.3 Let I be a set. A function X : Ω × I → S is a stochastic process if for each α ∈ I, X(·, α) : (Ω, F) → (S, B) is measurable.

The process is denoted by $(X_t, t \in I)$ or $(X_t)$ for short. A point t ∈ I is referred to as time and the stochastic process is said to be a continuous time stochastic process if I is an interval. If I = Z we have a discrete time stochastic process, and the stochastic process is denoted by $(X_n)$.

Example 3.5.4

• Take Ω = [0, 1], F = B([0, 1]), and P the Lebesgue measure. Take I = {1, 2, . . . }. Define $X_n(\omega) = \omega^n$. These are continuous functions from [0, 1] → R and hence measurable.

• Take I = [0, 3]. Let X, Y : Ω → R be two random variables. Then $X_t(\omega) = X(\omega)1_{[0, \frac12]}(t) + Y(\omega)1_{(\frac12, 3]}(t)$ is a stochastic process.

Definition 3.5.5 A stochastic process $(X_t, t \in I)$ is said to have independent increments if for any n and any $0 = t_0 < t_1 < \dots < t_n$, $t_i \in I$, the increments $\{X_{t_{i+1}} - X_{t_i}\}_{i=0}^{n-1}$ are independent.

Definition 3.5.6 A sample continuous stochastic process $(B_t : t \ge 0)$ on R is the standard Brownian motion if $B_0 = 0$ and the following holds:

1. For 0 ≤ s < t, $B_t - B_s$ is N(0, t − s) distributed.

2. $B_t$ has independent increments.

Definition 3.5.7 A stochastic process $(X_t, t \ge 0)$ with state space S is said to be sample path continuous if $t \mapsto X_t(\omega)$ is continuous for almost all ω. The terminology time continuous can also be used.

Remark: Another regularly encountered class of stochastic processes are càdlàg processes: for almost all ω, the sample path $t \mapsto X_t(\omega)$ has left limits and is right continuous for all time t. Such functions have jumps at the points of discontinuity.

Definition 3.5.8 A stochastic process $X_t$ is said to have stationary increments if the distributions of $X_t - X_s$ and $X_{t+a} - X_{s+a}$ are the same for all a > 0 and 0 ≤ s < t.

3.6 Brownian Motion

A Brownian motion models randomness with the following properties: the randomness over disjoint time intervals is independent, and its law has the Gaussian property.

Let I = [0, 1] or I = [0, ∞).

Definition 3.6.1 A stochastic process $(B_t : t \ge 0)$ on $\mathbb R^d$ is a Brownian motion with initial value x if the following hold:

1. $B_0 = x$.

2. $t \mapsto B_t(\omega)$ is continuous for almost all ω.

3. For s ≥ 0 and t > 0, $B_{t+s} - B_s$ is N(0, tI) distributed.

4. $B_t$ has independent increments, i.e. for any $0 = t_0 < t_1 < \dots < t_k$, $\{B_{t_{i+1}} - B_{t_i}\}_{i=0}^{k-1}$ is a family of independent random variables.

If $B_0 = 0$ and d = 1 this is the standard (linear) Brownian motion.
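Properties 3 and 4 suggest a simple way to sample a standard linear Brownian motion on a time grid: add independent N(0, ∆t) increments. The following is a minimal sketch of this standard discretisation, not a construction from the notes; the function name `brownian_path`, the grid size and the seeds are assumptions, and only the values at grid points are produced (the continuous path between them is not simulated).

```python
import math
import random


def brownian_path(n_steps, horizon=1.0, seed=None):
    """Values B_0, B_dt, ..., B_horizon of a standard linear Brownian motion on a uniform grid."""
    rng = random.Random(seed)
    dt = horizon / n_steps
    path = [0.0]                                  # B_0 = 0
    for _ in range(n_steps):
        # independent increment with distribution N(0, dt)
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))
    return path


# Empirical check that B_1 has mean about 0 and variance about 1.
endpoints = [brownian_path(16, horizon=1.0, seed=k)[-1] for k in range(4000)]
mean_b1 = sum(endpoints) / len(endpoints)
var_b1 = sum((b - mean_b1) ** 2 for b in endpoints) / (len(endpoints) - 1)
print(mean_b1, var_b1)
```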

Remark*: Let A be a d × d matrix and $a \in \mathbb R^d$. Then $AB_t + at$ is $N(at, AA^T t)$ distributed. Fix t > 0, observe that

$$B_t - B_0 = \sum_{i=0}^{2^n - 1} \Big(B_{\frac{i+1}{2^n}t} - B_{\frac{i}{2^n}t}\Big).$$

Assume that $\{B_{\frac{i+1}{2^n}t} - B_{\frac{i}{2^n}t}\}$ are independent identically distributed. This suggests that $B_t \sim N(0, t)$. Indeed, for each t, $B_t$ has an infinitely divisible law and $(B_t)$ is a Lévy process. The sample continuity then forces a Gaussian distribution N(at, tC) for some $a \in \mathbb R^d$ and some positive semi-definite symmetric matrix C. Up to a linear transform the process is indeed a Brownian motion.

3.7 Lecture 4-5

Proposition 3.7.1 The joint distribution of $(B_s, B_t)$, s < t, has density $p_s(0, x)\,p_{t-s}(x, y)$ with respect to the Lebesgue measure dxdy on $(\mathbb R^d)^2$.

Proof For any $A_1, A_2 \in B(\mathbb R^d)$,

$$\begin{aligned}
P(B_s \in A_1, B_t \in A_2) &= E\,1_{A_1}(B_s)1_{A_2}(B_t)\\
&= E\,1_{A_1}(B_s)1_{A_2}(B_t - B_s + B_s)\\
&= \int_{\mathbb R^d}\int_{\mathbb R^d} 1_{A_1}(x)1_{A_2}(z + x)\,p_s(0, x)\,p_{t-s}(0, z)\,dz\,dx\\
&= \int_{\mathbb R^d}\int_{\mathbb R^d} 1_{A_1}(x)1_{A_2}(y)\,p_s(0, x)\,p_{t-s}(0, y - x)\,dy\,dx\\
&= \int_{A_1 \times A_2} p_s(0, x)\,p_{t-s}(x, y)\,dy\,dx.
\end{aligned}$$

3.7.1 Finite Dimensional Distributions

Let I = [0, T]. Let $(X_t, 0 \le t \le T)$ be a stochastic process with values in a separable metric space (S, B(S)).

Definition 3.7.2 For $0 \le t_1 < t_2 < \dots < t_n$, the measurable map

$$\omega \mapsto (X_{t_1}(\omega), X_{t_2}(\omega), \dots, X_{t_n}(\omega))$$

from (Ω, F) to $(S^n, B(S^n))$ induces a Borel measure $\mu_{t_1, \dots, t_n}$ on $S^n$. These are the finite dimensional distributions of the stochastic process $(X_t)$. They are also known as marginal distributions.

For A ∈ B(S n ),

µt1 ,...,tn (A) = P (ω : (Xt1 (ω), Xt2 (ω), . . . , Xtn (ω)) ∈ A).

Exercise. Compute the finite dimensional distributions of a Brownian motion.

Let $(X_t)$ be a stochastic process. We define a map

$$X_\cdot : \Omega \to (\mathbb R^d)^{[0,T]}$$

by $X_\cdot(\omega)(t) = X_t(\omega)$. It is a measurable map. The measure $(X_\cdot)_*(P)$ is the probability distribution (also called the law) of the process. Finite dimensional distributions determine the distribution of the process.

3.7.2 Gaussian Measures on $\mathbb R^d$

Given a measure µ, its characteristic function is:

$$\hat\mu(\lambda) := \int e^{i\langle \lambda, x\rangle}\,d\mu(x), \qquad \lambda \in \mathbb R^d.$$

Two finite measures agree if their characteristic functions agree. See §3.8 (page 197), volume I of [3]. A probability measure is Gaussian if for some $a \in \mathbb R^d$ and some symmetric positive semi-definite d × d matrix C,

$$\hat\mu(\lambda) = e^{i\langle \lambda, a\rangle - \frac12\langle C\lambda, \lambda\rangle}.$$

This is denoted by N(a, C). The measure is absolutely continuous with respect to the Lebesgue measure if C is invertible. Taking the subspace spanned by the eigenvectors of C with non-zero eigenvalues, we see that the Gaussian measure is non-degenerate on this subspace. Hence there is little need to study degenerate Gaussian measures on a finite dimensional vector space.

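Let $a \in \mathbb R^d$ and C a positive definite symmetric d × d matrix. The (non-degenerate) Gaussian measure N(a, C) on $\mathbb R^d$ is defined as the measure that is absolutely continuous with respect to dx and

$$\frac{d\mu}{dx} = \frac{1}{(2\pi)^{d/2}\sqrt{\det C}}\, e^{-\frac12\langle C^{-1}(x - a),\, x - a\rangle}. \tag{3.4}$$

Take

$$C = tI_{d \times d} = \begin{pmatrix} t & 0 & \dots & 0\\ 0 & t & \dots & 0\\ & & \dots & \\ 0 & 0 & \dots & t \end{pmatrix}.$$

Then

$$\frac{d\mu}{dx} = p_t(a, x) = \frac{1}{(2\pi t)^{d/2}}\, e^{-\frac{|x - a|^2}{2t}}.$$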

Definition 3.7.3 A random variable whose distribution is Gaussian is a Gaussian

variable. A stochastic process Xt is Gaussian if its marginal distributions are

Gaussian.

Proposition 3.7.4 Let $X = (X_1, \dots, X_d) \sim N(a, C)$ where $C = (C_{kl})$ is positive definite. Then EX = a and $\mathrm{cov}(X_k, X_l) = C_{kl}$.

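Proof If C is diagonal this is easy to see. Otherwise, let A be a square root of C, $C = AA^T$. Then $\det C = (\det A)^2$ and $\langle C^{-1}\xi, \xi\rangle = |A^{-1}\xi|^2$ for any $\xi \in \mathbb R^d$. Let $\{e_j\}$ be the standard o.n.b. of $\mathbb R^d$, then

$$\begin{aligned}
EX &= \int_{\mathbb R^d} x\,\frac{1}{(2\pi)^{d/2}\sqrt{\det C}}\, e^{-\frac12\langle C^{-1}(x - a),\, x - a\rangle}\,dx\\
&= \int_{y \in \mathbb R^d} (y + a)\,\frac{1}{(2\pi)^{d/2}\sqrt{\det C}}\, e^{-\frac12\langle C^{-1}y,\, y\rangle}\,dy\\
&\overset{z = A^{-1}y}{=} \int_{z \in \mathbb R^d} (Az + a)\,\frac{1}{(2\pi)^{d/2}\sqrt{\det C}}\, e^{-\frac12|z|^2}\,|\det A|\,dz = a.
\end{aligned}$$

The key ingredient is that the measure is turned into a product of measures on each factor and Az is a linear function of z. In components, $\langle Az + a, e_k\rangle = \sum_j A_{k,j}z_j + a_k$ and

$$EX_k = E\langle X, e_k\rangle = \int_{z \in \mathbb R^d} \Big(\sum_j A_{k,j}z_j + a_k\Big)\,\frac{1}{(2\pi)^{d/2}}\, e^{-\frac12\sum_i z_i^2}\,\prod_i dz_i = a_k.$$

Similarly,

$$\begin{aligned}
\mathrm{cov}(X_k, X_l) &= E\langle X - a, e_k\rangle\langle X - a, e_l\rangle\\
&= \int_{\mathbb R^d} \langle x - a, e_k\rangle\langle x - a, e_l\rangle\,\frac{1}{(2\pi)^{d/2}\sqrt{\det C}}\, e^{-\frac12\langle C^{-1}(x - a),\, x - a\rangle}\,dx\\
&= \int_{y \in \mathbb R^d} \langle y, e_k\rangle\langle y, e_l\rangle\,\frac{1}{(2\pi)^{d/2}\sqrt{\det C}}\, e^{-\frac12\langle C^{-1}y,\, y\rangle}\,dy\\
&\overset{z = A^{-1}y}{=} \int_{z \in \mathbb R^d} \langle Az, e_k\rangle\langle Az, e_l\rangle\,\frac{1}{(2\pi)^{d/2}\sqrt{\det C}}\, e^{-\frac12|z|^2}\,|\det A|\,dz\\
&= \int_{z \in \mathbb R^d} \Big(\sum_j A_{k,j}z_j\Big)\Big(\sum_i A_{l,i}z_i\Big)\,\frac{1}{(2\pi)^{d/2}}\, e^{-\frac12|z|^2}\,dz\\
&= \sum_i A_{k,i}A_{l,i}\int_{z \in \mathbb R^d} z_i^2\,\frac{1}{(2\pi)^{d/2}}\, e^{-\frac12|z|^2}\,dz = (AA^T)_{k,l} = C_{k,l}.
\end{aligned}$$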
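The change of variables x = Az + a in the proof also gives a sampling recipe: if z has independent standard normal components, then Az + a is N(a, C) distributed whenever $AA^T = C$. The sketch below is an added illustration; it uses the lower triangular Cholesky factor as one possible choice of square root A (an assumption, any A with $AA^T = C$ would do), and the helper names, the matrix C, the vector a, the sample size and the seed are all hypothetical.

```python
import math
import random


def cholesky(C):
    """Lower triangular A with A A^T = C, for a symmetric positive definite C."""
    n = len(C)
    A = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            partial = sum(A[i][k] * A[j][k] for k in range(j))
            if i == j:
                A[i][j] = math.sqrt(C[i][i] - partial)
            else:
                A[i][j] = (C[i][j] - partial) / A[j][j]
    return A


def sample_gaussian(a, A, rng):
    """One sample of A z + a with z a vector of independent N(0, 1) variables."""
    z = [rng.gauss(0.0, 1.0) for _ in a]
    return [a[k] + sum(A[k][j] * z[j] for j in range(len(a))) for k in range(len(a))]


def empirical_mean_and_cov(samples):
    """Sample mean vector and (unbiased) sample covariance matrix."""
    n, d = len(samples), len(samples[0])
    mean = [sum(s[k] for s in samples) / n for k in range(d)]
    cov = [[sum((s[k] - mean[k]) * (s[l] - mean[l]) for s in samples) / (n - 1)
            for l in range(d)] for k in range(d)]
    return mean, cov


a = [1.0, -1.0]
C = [[2.0, 1.0], [1.0, 2.0]]
A = cholesky(C)
rng = random.Random(0)
samples = [sample_gaussian(a, A, rng) for _ in range(20000)]
mean_hat, cov_hat = empirical_mean_and_cov(samples)
print(mean_hat, cov_hat)  # should be close to a and C respectively
```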

A family of real valued random variables $\{X_1, \dots, X_k\}$ are uncorrelated if $\mathrm{cov}(X_j, X_k) = 0$ when j ≠ k. For jointly Gaussian random variables, being uncorrelated and being independent are equivalent. In fact if $C_{i,j} = 0$ when i ≠ j, C is diagonal and the Gaussian measure is a product measure. A family of stochastic processes are independent if their joint probability distribution is a product of the individual probability distributions. This can be tested with evaluation at a finite number of times or the investigation of their finite dimensional distributions.

Remark 3.7.5 A process $(B_t) = (B_t^1, \dots, B_t^d)$ is a d-dimensional Brownian motion if and only if $(B_t^1), \dots, (B_t^d)$ are independent one dimensional Brownian motions. For each t, $\{B_t^1, \dots, B_t^d\}$ are independent random variables since $C = tI_{d \times d}$ is diagonal. The independence of the stochastic processes follows from this and the independent increment property.

3.8 Kolmogorov’s Continuity Theorem

In the following definitions, the Euclidean space Rd can be replaced by a Banach

space.

Definition 3.8.1 Let α ∈ (0, 1). Let I be an interval of R. A function $f : I \to \mathbb R^d$ is Hölder continuous of exponent α if there is a constant C such that for all s, t ∈ I,

$$|f(t) - f(s)| \le C|t - s|^\alpha.$$

Definition 3.8.2 Let α ∈ (0, 1). Let I be an interval of R. A function $f : I \to \mathbb R^d$ is locally Hölder continuous of exponent α if on any compact subinterval [a, b] ⊂ I,

$$\sup_{t \ne s,\ t, s \in [a,b]} \frac{|f(t) - f(s)|}{|t - s|^\alpha} < \infty.$$

Let $T \in \mathbb R_+ \cup \{\infty\}$.

Theorem 3.8.3 (Kolmogorov’s Continuity Theorem) Let $(x_t, 0 \le t < T)$ be a stochastic process taking values in a Banach space $(E, \|\cdot\|)$. Suppose that there exist positive constants p, δ and C such that

$$E\|x_t - x_s\|^p \le C|t - s|^{1+\delta}.$$

Then there is a continuous modification of $(x_t)$, denoted as $(\tilde x_t)$, which is locally Hölder continuous with any exponent α ∈ (0, δ/p).

For a proof or a refined version of this theorem (due to Garsia-Rodemich-Rumsey 1970) see §2.1 (pages 47-51) of Stroock-Varadhan [27].

Definition 3.8.4 Two stochastic processes (Xt ) and (Yt ) on the same probability

space are modifications of each other if for each t, P (Xt = Yt ) = 1.

Here the exceptional set, $\{\omega : X_t(\omega) \ne Y_t(\omega)\}$, of zero measure may depend on t.

Definition 3.8.5 Two stochastic processes $(X_t)$ and $(Y_t)$ on the same probability space are indistinguishable if $P(X_t = Y_t, \forall t) = 1$.

Theorem 3.8.6 Let $(x_t, 0 \le t < T)$ be a real valued stochastic process such that $x_t - x_s$ is N(0, t − s) distributed. It has a continuous modification and the paths $t \mapsto x_t(\omega)$ are Hölder continuous on any interval [a, b] ⊂ [0, T) of exponent α for any $\alpha < \frac12$.

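Proof For any p > 0 and s < t,

$$\begin{aligned}
E|x_t - x_s|^p &= \frac{1}{\sqrt{2\pi(t - s)}}\int_{-\infty}^\infty |x|^p\, e^{-\frac{|x|^2}{2(t - s)}}\,dx\\
&\overset{x = \sqrt{t - s}\,z}{=} |t - s|^{\frac p2}\,\frac{1}{\sqrt{2\pi}}\int_{-\infty}^\infty |z|^p\, e^{-\frac{|z|^2}{2}}\,dz = E(|Z|^p)\,|t - s|^{\frac p2} < \infty,
\end{aligned}$$

where Z denotes an N(0, 1) random variable. Taking p > 2, Kolmogorov’s continuity criterion applies with $\delta = \frac p2 - 1$, so there is a continuous modification of the sample path. The Hölder exponent can be any $\alpha < \frac{\frac p2 - 1}{p} = \frac{p - 2}{2p}$, which can be taken arbitrarily close to $\frac12$ by taking p large.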
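To see how the admissible Hölder exponents approach 1/2, one can tabulate the bound δ/p with δ = p/2 − 1 from the proof. This is a small added illustration; the function name `kolmogorov_holder_bound` and the chosen values of p are assumptions.

```python
def kolmogorov_holder_bound(p):
    """Upper bound delta / p = (p - 2) / (2 p) on the Hoelder exponent, with delta = p / 2 - 1."""
    if p <= 2:
        raise ValueError("need p > 2 so that delta = p/2 - 1 is positive")
    delta = p / 2.0 - 1.0
    return delta / p


# The bound increases towards 1/2 as p grows.
bounds = {p: kolmogorov_holder_bound(p) for p in (4, 10, 100, 1000)}
for p, bound in bounds.items():
    print(p, bound)
```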

3.8.1 The existence of the Wiener Measure and Brownian Motion

Fix T > 0. We come back to prove Theorem 3.3.1, which states that there is a unique probability measure on $W_0^d$ such that

$$\mu(\omega : \pi_{t_1}(\omega) \in A_1, \dots, \pi_{t_n}(\omega) \in A_n) = \int_{A_1} \cdots \int_{A_n} p_{t_1}(0, x_1)\,p_{t_2 - t_1}(x_1, x_2) \cdots p_{t_n - t_{n-1}}(x_{n-1}, x_n)\,dx_n \dots dx_2\,dx_1. \tag{3.5}$$

We used $\pi_t(\omega) = \omega_t$ to denote the evaluation map at t. By Kolmogorov’s extension theorem, there is a unique probability measure $\mu_0$ on the product σ-algebra of $(\mathbb R^d)^{[0,T]}$ with these finite dimensional distributions. See §3.9.2 for detail. Treat $((\mathbb R^d)^{[0,T]}, \otimes_{[0,T]} B(\mathbb R^d), \mu_0)$ as a probability space and $\pi_t : (\mathbb R^d)^{[0,T]} \to \mathbb R^d$ as a measurable function. Since $\pi_t - \pi_s \sim N(0, (t - s)I)$, by Theorem 3.8.6 applied to each component, $(\pi_t)$ has a continuous modification and hence the set of non-continuous paths has $\mu_0$ measure zero.

The product σ-algebra $\otimes_{[0,T]} B(\mathbb R^d)$ is smaller than the Borel σ-algebra of the product topology. Since the projections $\pi_t$ are continuous, $B(W^d)$ contains the restriction to $W^d$ of the σ-algebra of the product topology. However continuous functions are determined by their values on rational numbers, and this leads to $B(W^d) = W^d \cap \otimes_{[0,T]} B(\mathbb R^d)$. However $W^d$ or $W_0^d$ is not a measurable set in the tensor σ-algebra! We refer to Revuz-Yor [23].

Corollary 3.8.7 There is a measurable map

φ : ((Rd )[0,T ] , ⊗[0,T ] B(Rd )) → (W0d , B(W0d )).

Let µ = φ∗ µ0 . Then µ satisfies (3.5). In conclusion Wiener measure exists and

Brownian motion exists. In fact πt is a Brownian motion on the Wiener space.

The map φ is constructed as below. If ω is not continuous on Q we define φ(ω) ≡ 0. Let $\Omega_0$ be the collection of such ω. It is clearly measurable. If ω is continuous on Q we define φ(ω) such that it agrees with ω on Q and is continuous. Now for all Borel sets $A_i$,

$$\{\omega : \varphi(\omega)(t_i) \in A_i, t_i \in \mathbb Q\} = \{\omega : \omega(t_i) \in A_i, t_i \in \mathbb Q\}$$

or

$$\{\omega : \varphi(\omega)(t_i) \in A_i, t_i \in \mathbb Q\} = \{\omega : \omega(t_i) \in A_i, t_i \in \mathbb Q\} \cup \Omega_0.$$

Both are measurable sets. By Theorem 3.8.6, $\mu_0(\Omega_0) = 0$. On cylindrical sets, $\varphi_*\mu_0 = \mu_0$.

3.8.2 Lecture 6. Sample Properties of Brownian Motions

We wish to define $\int_0^t f_s\,dB_s$. Could we use the theory of Lebesgue-Stieltjes integration? To make sense of $\int_a^b f_s\,dg_s$ as a Lebesgue-Stieltjes integral, g is assumed to be of finite total variation on [a, b]. Functions of finite total variation are differentiable almost everywhere. A typical Brownian path is nowhere differentiable. If $f_s$ is reasonably smooth we may overcome the problem of the differentiability of $t \mapsto B_t$ by the elementary integration by parts formula: $\int_a^b f_s\,dB_s = f_bB_b - f_aB_a - \int_a^b B_s\,df_s$. The stochastic integral $\int_0^t f_s\,dB_s$ we define later will not require such regularity of $f_s$.

Since a d-dimensional Brownian motion consists of d independent one dimensional Brownian motions, in this section we assume that $B_t$ is a one dimensional Brownian motion.

Proposition 3.8.8 Let $\Delta_n : a = t_0^n < t_1^n < \dots < t_{M_n+1}^n = b$ be a sequence of partitions of [a, b] with $|\Delta_n| \to 0$, and write

$$T_n \equiv \sum_{i=0}^{M_n} (B_{t_{i+1}^n} - B_{t_i^n})^2.$$

Then

$$\lim_{n \to \infty} E\Big(\sum_{i=0}^{M_n} (B_{t_{i+1}^n} - B_{t_i^n})^2 - (b - a)\Big)^2 = 0.$$

In particular $T_n$ converges in probability to b − a. There is a sub-sequence of partitions $\Delta_{n_k}$ such that for almost all ω, $T_{n_k} \to b - a$.

Proof

$$ET_n = \sum_{i=0}^{M_n} E(B_{t_{i+1}^n} - B_{t_i^n})^2 = \sum_{i=0}^{M_n} (t_{i+1}^n - t_i^n) = b - a.$$

Note that $B_t - B_s \overset{d}{=} \sqrt{t - s}\,B_1$ (exercise). By the independence of the increments,

$$\begin{aligned}
E(T_n - (b - a))^2 = \mathrm{var}(T_n) &= \sum_{i=0}^{M_n} \mathrm{var}\big((B_{t_{i+1}^n} - B_{t_i^n})^2\big)\\
&= \sum_{i=0}^{M_n} \mathrm{var}\big((t_{i+1}^n - t_i^n)B_1^2\big), \qquad \text{since } B_t - B_s \overset{d}{=} \sqrt{t - s}\,B_1,\\
&= \sum_{i=0}^{M_n} (t_{i+1}^n - t_i^n)^2\,\mathrm{var}(B_1^2) \le |\Delta_n|(b - a)\,\mathrm{var}(B_1^2) \to 0.
\end{aligned}$$

The first statement of the Proposition holds. Now $L^2$ convergence implies convergence in probability, and so there is a sub-sequence that converges almost surely.

Taking for example dyadic partitions, halving each interval each time, the whole sequence converges almost surely, [14].
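The statement of Proposition 3.8.8 can be observed numerically. The sketch below is an added illustration under assumptions: it uses uniform partitions, for which the increments are independent N(0, ∆t) variables, and samples a fresh set of increments for each partition instead of refining a single path, so it illustrates the convergence of the law of $T_n$ to b − a, not the almost sure statement. The function name, interval, partition sizes and seed are hypothetical.

```python
import math
import random


def quadratic_variation_sum(a, b, n_intervals, rng):
    """Sample T_n = sum of squared Brownian increments over a uniform partition of [a, b]."""
    dt = (b - a) / n_intervals
    # On a uniform partition the increments are independent N(0, dt) random variables.
    return sum(rng.gauss(0.0, math.sqrt(dt)) ** 2 for _ in range(n_intervals))


rng = random.Random(1)
T_coarse = quadratic_variation_sum(0.0, 2.0, 100, rng)
T_fine = quadratic_variation_sum(0.0, 2.0, 20000, rng)
print(T_coarse, T_fine)  # the fine value should be close to b - a = 2
```

From the proof, var($T_n$) = 2(b − a)²/n on a uniform partition with n intervals, since var($B_1^2$) = 2, which explains why the finer partition gives the tighter value.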

Definition 3.8.9 Let $(x_t, 0 \le t < \infty)$ be a stochastic process. Suppose that for any sequence $\Delta_n$ of partitions of [0, t] with $\lim_{n \to \infty} |\Delta_n| = 0$,

$$T^{(n)}(x_\cdot) := \sum_{i=0}^{M_n} (x_{t_{i+1}^n} - x_{t_i^n})^2$$

converges, in probability, to a finite limit, which we denote by $\langle x, x\rangle_t$. We say that $(x_t)$ has finite quadratic variation and $\langle x, x\rangle_t$ is its quadratic variation process.

We also denote the convergence mentioned above by

$$\lim_{n \to \infty}{}^{(P)} \sum_{i=0}^{M_n} (x_{t_{i+1}^n} - x_{t_i^n})^2 = \langle x, x\rangle_t.$$

The quadratic variation process is an increasing function of t. By Proposition 3.8.8, the Brownian motion $(B_t, t \in [0, \infty))$ has finite quadratic variation and its quadratic variation process is t. We’ll see below that a sample continuous stochastic process $(x_t, t \in [0, \infty))$ with finite quadratic variation, which is not identically zero, cannot have finite total variation over any finite time interval. This can be seen in the following proposition, where the Brownian motion $(B_t)$ can be replaced by a continuous process.

Proposition 3.8.10 For almost all ω, the Brownian path $t \mapsto B_t(\omega)$ has infinite total variation on any interval [a, b]. And $B_t(\omega)$ cannot have Hölder continuous paths of order $\alpha > \frac12$.

The total variation of a function g : [a, b] → R is:

$$g_{TV}([a, b]) = \sup_\Delta \sum_{i=0}^{n-1} |g(t_{i+1}) - g(t_i)|,$$

where $\Delta : a = t_0 < t_1 < \dots < t_n = b$ ranges through all partitions of [a, b].

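Proof Fix an ω. Since $B_t$ has almost surely continuous paths we only consider such ω with $t \mapsto B_t(\omega)$ continuous.

Suppose that $B(\omega)_{TV}([a, b]) < \infty$. For the partitions in the previous proposition such that $T_n$ converges almost surely,

$$\begin{aligned}
\sum_{i=0}^{M_n} (B_{t_{i+1}^n}(\omega) - B_{t_i^n}(\omega))^2 &\le \max_i |B_{t_{i+1}^n}(\omega) - B_{t_i^n}(\omega)| \cdot \sum_{i=0}^{M_n} |B_{t_{i+1}^n}(\omega) - B_{t_i^n}(\omega)|\\
&\le \max_i |B_{t_{i+1}^n}(\omega) - B_{t_i^n}(\omega)| \cdot B(\omega)_{TV}([a, b]) \to 0.
\end{aligned}$$

The convergence follows from the fact that $B_t(\omega)$ is uniformly continuous on [a, b]. This contradicts that $\sum_{i=0}^{M_n} (B_{t_{i+1}^n}(\omega) - B_{t_i^n}(\omega))^2$ converges to b − a.

Suppose that $|B_t(\omega) - B_s(\omega)| \le C(\omega)|t - s|^\alpha$ for all s, t ∈ [a, b], where C(ω) is a constant for each ω, for some $\alpha > \frac12$. Then

$$\begin{aligned}
\sum_{i=0}^{M_n} |B_{t_{i+1}^n}(\omega) - B_{t_i^n}(\omega)|^2 &\le C^2(\omega)\sum_{i=0}^{M_n} |t_{i+1}^n - t_i^n|^{2\alpha}\\
&\le C^2(\omega)|\Delta_n|^{2\alpha - 1}\sum_{i=0}^{M_n} (t_{i+1}^n - t_i^n)\\
&\le C^2(\omega)(b - a)|\Delta_n|^{2\alpha - 1} \to 0,
\end{aligned}$$

as 2α − 1 > 0. This contradicts Proposition 3.8.8.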
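The blow-up of the first variation can also be seen in expectation. Since $E|B_t - B_s| = \sqrt{2(t - s)/\pi}$ for a centred Gaussian increment, the expected sum of absolute increments over a uniform partition of [a, b] into n intervals is $\sqrt{2n(b - a)/\pi}$, which grows like $\sqrt n$. The sketch below is an added illustration of this computation, not an argument from the notes; the function names, partition sizes and seed are assumptions.

```python
import math
import random


def expected_first_variation(a, b, n_intervals):
    """E sum |B_{t_{i+1}} - B_{t_i}| on a uniform partition: sqrt(2 n (b - a) / pi)."""
    return math.sqrt(2.0 * n_intervals * (b - a) / math.pi)


def simulated_first_variation(a, b, n_intervals, rng):
    """One sample of the sum of absolute Brownian increments over a uniform partition."""
    dt = (b - a) / n_intervals
    return sum(abs(rng.gauss(0.0, math.sqrt(dt))) for _ in range(n_intervals))


rng = random.Random(2)
for n in (100, 10000):
    # the simulated value fluctuates around the expected one, and both grow with n
    print(n, expected_first_variation(0.0, 1.0, n),
          simulated_first_variation(0.0, 1.0, n, rng))
```

In contrast, the sum of squared increments over the same partitions stays near b − a, as in Proposition 3.8.8.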

3.8.3 The Wiener measure does not charge the space of finite energy*

Let F : R → R be an increasing function. Then F has only a countable number of points of discontinuity and F is differentiable almost everywhere. We modify the value of F at the points of discontinuity so that the modified function $\tilde F$ is right continuous. Then $F' = \tilde F'$ where they are differentiable and this holds a.e.

A right continuous increasing function induces a measure $\mu_F$ on R, which is determined by

$$\mu_F((a, b]) = F(b) - F(a).$$

It is easy to see that this is indeed a measure and the continuity of the measure on a monotone sequence of sets is assured by the right continuity of F. This is the Lebesgue-Stieltjes measure associated to F. If F : [c, d] → R is of finite total variation, with TV(F) denoting its total variation function, then both $F_1 = TV(F) + F$ and $F_2 = TV(F) - F$ are increasing and $F = \frac12(F_1 - F_2)$. If F is right continuous, TV(F), $F_1$ and $F_2$ are right continuous.

If the measure $\mu_F$ is absolutely continuous with respect to the Lebesgue measure, denote by p(x) its density. Then

$$\int f(x)\,d\mu_F(x) = \int f(x)p(x)\,dx$$

for any f bounded measurable. In particular,

$$\int_a^b p(x)\,dx = F(b) - F(a).$$

Suppose that $F \in H^1$, the space of finite energy also known as $W^{1,2}$:

$$H^1 = \Big\{F \in W_0^d : \int_0^1 |F'(s)|^2\,ds < \infty\Big\}.$$

Then the weak derivative F′ = p and

$$F(b) - F(a) = \int_a^b F'(x)\,dx.$$

By Cauchy-Schwarz, $|F(b) - F(a)| \le \sqrt{\int_a^b |F'(x)|^2\,dx}\,\sqrt{b - a}$ and F is Hölder continuous of order $\frac12$. Since almost all paths in $W_0^d$ are not Hölder continuous of order $\frac12$, the Wiener measure does not charge $H^1$: $\mu(H^1) = 0$.

3.9 Product-σ-algebras

Let I be an arbitrary index set and let $(E_\alpha, F_\alpha)$, α ∈ I, be a family of measurable spaces. The tensor σ-algebra, also called the product σ-algebra, on $E = \prod_{\alpha \in I} E_\alpha$ is defined to be

⊗α∈I Fα = σ{πα−1 (Aα ) : Aα ∈ Fα , α ∈ I}.

Here πα : E = Πα∈I Eα → Eα is the projection (also called the coordinate map).

If x = (xα , α ∈ I) is an element of E, πα (x) = xα . The tensor σ-algebra is the

smallest one such that for all α ∈ I, the mapping

πα : (E, ⊗α∈I Fα ) → (Eα , Fα )

is measurable.

For example, we make take (Eα , Fα ) = (S, B), a metric space with its Borel

sigma-algebra. We often take S = R or S = Rn .

3.9.1

The Borel σ-algebra on the Wiener Space

The set of all maps from $[0, 1]$ to $\mathbb{R}^d$ can be denoted as the product space $(\mathbb{R}^d)^{[0,1]}$. The tensor σ-algebra $\otimes_{[0,1]} \mathcal{B}(\mathbb{R}^d)$ is the smallest σ-algebra such that the projections

$$\pi_t(\sigma) = \sigma(t), \qquad t \in [0, 1],$$

are measurable. The product topology is the smallest topology such that the projections are continuous. Hence the Borel σ-algebra of the product topology contains the tensor σ-algebra.

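The Wiener space $W^d$ is the subset of $(\mathbb{R}^d)^{[0,1]}$ consisting of the continuous functions. The tensor σ-algebra of $(\mathbb{R}^d)^{[0,1]}$ is generated by sets of the form

$$\pi_t^{-1}(B), \qquad B \in \mathcal{B}(\mathbb{R}^d),\ t \in [0, 1].$$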

Note that $\mathcal{B}(W^d) \subset W^d \cap \otimes_{[0,1]} \mathcal{B}(\mathbb{R}^d)$ by (3.2). On the other hand, $\pi_t : W^d \to \mathbb{R}^d$ is continuous with respect to the uniform topology:

$$|\pi_t(\omega_1) - \pi_t(\omega_2)| \le \|\omega_1 - \omega_2\|.$$

This means that the uniform topology is finer than the product topology, so $\mathcal{B}(W^d)$ contains the Borel σ-algebra of the product topology on $(\mathbb{R}^d)^{[0,1]} \cap W^d$ and hence contains $W^d \cap \otimes_{[0,1]} \mathcal{B}(\mathbb{R}^d)$. In conclusion,

$$\mathcal{B}(W^d) = \otimes_{[0,1]} \mathcal{B}(\mathbb{R}^d) \cap W^d.$$


Any measurable set in the tensor σ-algebra is determined by a countable number of projections. Whether a function is continuous or not cannot be decided from its values at a countable number of times. Hence $W^d$ is not a measurable set in the tensor σ-algebra. (Do not confuse this with the following: once we know a function is continuous, the function is determined by its values on a countable dense set.)
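The argument can be made precise as follows. This is a sketch of the standard reasoning; the symbols $T_0$, $s$, $e_1$ and $\tilde\omega$ are notation introduced only for this remark.

```latex
% Countable determination: for every A in the tensor sigma-algebra there are
% a countable set T_0 \subset [0,1] and a set B \in \otimes_{T_0} \mathcal{B}(\mathbb{R}^d) with
\[
  A = \big\{ \omega \in (\mathbb{R}^d)^{[0,1]} : (\omega(t))_{t \in T_0} \in B \big\}.
\]
% Consequently, if \omega \in A and \tilde\omega(t) = \omega(t) for all t \in T_0,
% then \tilde\omega \in A.
%
% Suppose W^d were of this form. Take \omega \equiv 0 \in W^d, pick s \in [0,1] \setminus T_0
% (possible since [0,1] is uncountable), fix a unit vector e_1 \in \mathbb{R}^d, and set
\[
  \tilde\omega(t) =
  \begin{cases}
    e_1, & t = s, \\
    0,   & t \neq s.
  \end{cases}
\]
% Then \tilde\omega agrees with \omega on T_0, so \tilde\omega \in W^d,
% although \tilde\omega is discontinuous at s: a contradiction.
```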

3.9.2 Kolmogorov’s extension Theorem

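For each $\omega$, we may view $t \in I \mapsto X(\omega, t) \in S$ as an element of $S^I$. Define $X_\cdot : \Omega \to S^I$ to be the map sending $\omega$ to the function $t \mapsto X_t(\omega)$. Then $X_\cdot : (\Omega, \mathcal{F}) \to (S^I, \otimes_I \mathcal{B})$ is measurable if and only if each $X_t : (\Omega, \mathcal{F}) \to (S, \mathcal{B})$ is measurable.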

Definition 3.9.1 The map $X_\cdot : \Omega \to S^{[0,T]}$ induces a probability measure on $\otimes_{[0,T]} \mathcal{B}$. This is the law or the distribution $\mu_X$ of the stochastic process.

The projection map from $S^{[0,T]}$ to $S^n$ defined by

$$\pi_{t_1, \dots, t_n} : X_\cdot \in S^{[0,T]} \mapsto (X_{t_1}, \dots, X_{t_n}) \in S^n$$

induces from $\mu_X$ the marginal distribution $\mu_{t_1, \dots, t_n}$.
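To see a marginal distribution concretely, one can sample paths of a process and record their values at finitely many times. The sketch below is an assumption-laden illustration: it approximates Brownian motion on $[0, 1]$ by a Gaussian random walk with 20 steps, takes the times $(t_1, t_2) = (0.25, 0.75)$, and estimates the covariance of the two-time marginal, which for Brownian motion should be close to $\min(t_1, t_2)$. The helper names, step count, sample size and seed are arbitrary choices.

```python
import random


def sample_path(n_steps, rng):
    """Gaussian random walk on a uniform grid of [0, 1], started at 0."""
    dt = 1.0 / n_steps
    path = [0.0]
    for _ in range(n_steps):
        path.append(path[-1] + rng.gauss(0.0, dt ** 0.5))
    return path


def project(path, times):
    """The projection pi_{t_1,...,t_n}: evaluate the path at the given grid times."""
    n_steps = len(path) - 1
    return tuple(path[round(t * n_steps)] for t in times)


rng = random.Random(0)          # fixed seed so the run is reproducible
times = (0.25, 0.75)
n_paths = 20_000

# Samples from the marginal distribution mu_{t1, t2}.
samples = [project(sample_path(20, rng), times) for _ in range(n_paths)]

mean1 = sum(x for x, _ in samples) / n_paths
mean2 = sum(y for _, y in samples) / n_paths
cov12 = sum(x * y for x, y in samples) / n_paths - mean1 * mean2
var2 = sum(y * y for _, y in samples) / n_paths - mean2 ** 2

print(cov12, var2)
```

The empirical measure of `samples` is a Monte Carlo approximation of the push-forward of the law of the process under $\pi_{t_1, t_2}$.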

The question is whether the marginal distributions determine the law $\mu_X$. A cylindrical set in $\prod_{\alpha \in I} S$ is a product set each of whose factors, with possibly a finite number of exceptions, equals $S$. Let $\mathcal{E}$ denote the collection of cylindrical sets:

$$\mathcal{E} = \Big\{ \prod_{\alpha \in I} A_\alpha : A_\alpha \in \mathcal{B} \Big\},$$

where $A_\alpha = S$ except for a finite number of indices $\alpha$. Then $\sigma(\mathcal{E}) = \otimes_I \mathcal{B}$. Let $J \subset I$ be a subset of the index set and let $\pi_J : \prod_{\alpha \in I} S \to \prod_{\alpha \in J} S$ be the projection. If $J \subset K \subset I$, let $\pi_{KJ} : \prod_{\alpha \in K} S \to \prod_{\alpha \in J} S$ be the projection map. Let $\mu$ be a measure on the product space $\prod_I S$, and let $\mu_J$ be the measure induced by the projection from $\prod_I S$ to $\prod_J S$. Then $(\mu_J)$ is a projective family of measures, which means $(\pi_{KJ})_* \mu_K = \mu_J$ whenever $J \subset K$. The family of marginal distributions forms a projective family of cylindrical measures.
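The projective property is easy to verify by hand on a finite example. The sketch below is an illustration under assumptions: $S = \{0, 1\}$, $I = \{0, 1, 2\}$, and an arbitrary probability measure $\mu$ on $S^I$ with weights $1/36, \dots, 8/36$. The helper `push_forward` is a hypothetical name; it computes the image measure under a coordinate projection, and the script checks $(\pi_{KJ})_* \mu_K = \mu_J$ for $J = \{2\} \subset K = \{0, 2\}$.

```python
from fractions import Fraction
from itertools import product

S = (0, 1)       # state space
I = (0, 1, 2)    # index set

# An arbitrary probability measure on S^I, stored as {point: mass}.
weights = [1, 2, 3, 4, 5, 6, 7, 8]
mu = {x: Fraction(w, sum(weights)) for x, w in zip(product(S, repeat=len(I)), weights)}


def push_forward(measure, source_index, target_index):
    """Image of `measure` on S^source_index under the projection onto S^target_index."""
    positions = [source_index.index(a) for a in target_index]
    image = {}
    for x, mass in measure.items():
        y = tuple(x[p] for p in positions)
        image[y] = image.get(y, Fraction(0)) + mass
    return image


K = (0, 2)
J = (2,)

mu_K = push_forward(mu, I, K)          # marginal on the coordinates in K
mu_J = push_forward(mu, I, J)          # marginal on the coordinates in J
mu_KJ = push_forward(mu_K, K, J)       # (pi_KJ)_* mu_K

print(mu_J, mu_KJ == mu_J)
```

Consistency holds here because $\pi_J = \pi_{KJ} \circ \pi_K$, so pushing forward in two steps agrees with pushing forward in one.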

Definition 3.9.2 Let $E$ be a separable metric space. Let $\{\mu_{J_f}\}$ be a collection of probability measures, with $\mu_{J_f}$ defined on $\otimes_{J_f} \mathcal{B}$, where $J_f$ runs through the finite subsets of $I$. They are called cylindrical measures. This is a projective family of measures if

$$(\pi_{KJ})_* (\mu_K) = \mu_J$$

for all finite subsets $J \subset K$ of $I$.
