FINISHED TRANSCRIPT ITU - ANDREA SAKS MARCH 12, 2012

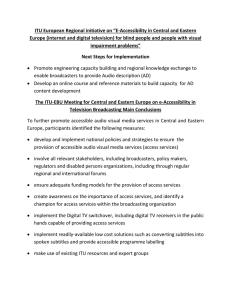
