` -regularized regression with generalized linear models

advertisement

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

`1 -regularized regression with generalized linear

models

largely based on Chapter 3 of “Statistical Learning with Sparsity”

Ioannis Kosmidis

Department of Statistical Science

University College London

i.kosmidis@ucl.ac.uk

Reading group on “Statistical Learning with Sparsity”

4 March 2016

1 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Outline

1 Generalized linear models

Model specification and estimation

Weighted lasso regression

Proximal Newton iteration

Logistic regression

Poisson log-linear models

Multinomial regression

2 Discussion

2 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Model specification and estimation

Exponential family of distributions

A random variable Y has a distribution from the exponential

family if its density/pmf is of the form

y θ − b(θ)

f (y ; θ, φ) = exp

+ c(y , φ)

φ

θ is the “natural parameter”

b(θ) is the “cumulant transform”

E(Y ; θ) = b 0 (θ); Var(Y ; θ, φ) = φb 00 (θ)

Variance is usually1 expressed in terms of the “dispersion” φ

and the mean µ = b 0 (θ) via the variance function

V (µ) = b 00 (b 0−1 (µ)).

1

e.g. in standard textbooks, like McCullagh and Nelder (1989), and family

objects in R

3 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Model specification and estimation

Generalized linear models

Data: (y1 , x1> ), . . . (yN , xN> ), where yi is the ith outcome and

xi ∈ <p is a vector of predictors

Generalized Linear Model (GLM) specification:

Random component: Y1 , . . . , YN are conditionally independent

given X1 , . . . , Xn and Yi |Xi = xi has a distribution from the

> >

exponential family with mean µ(β; xi ), where β = (β0 , β(x)

)

Systematic component: the predictors enter the model through

η(β; x) = β0 + x > β(x)

Link function: The mean is linked to the systematic component via

a link function g : C ⊂ < → < as

g (µ(β; x)) = η(β; x)

4 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Model specification and estimation

Maximum (regularized) likelihood

Let y = (y1 , . . . , yN )> and X the N × p matrix with ith row xi and

jth column gj , such that 1> gj = 0 and kgj k22 = 1

Maximum likelihood (ML) estimator β̂ (assuming that X is

of full rank and g strictly monotonic):

min {−`(β, φ; y , X )}

β

P

where `(β, φ; y , X ) = N

i=1 log f (yi ; θ(β; xi ), φ) with

θ(β; xi ) = b 0−1 (µ(β; xi ))

Maximum regularized likelihood (MRL) estimator β̂(λ):

min {−`(β, φ; y , X ) + Pλ (β)} ,

β

where the penalty Pλ (β) is either λ β(x) 1 or variants posed

by the model structure and/or the task (e.g. sum of `2 norms

of subsets of β, a.k.a. “grouped lasso” penalty)

5 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Model specification and estimation

ML via Iteratively reweighted least squares

A Taylor expansion of `(β, φ; y , X ) around an estimate β̃ gives the

log-likelihood approximation:

−

N

n

o2

1 X

w (β̃; xi ) z(β̃; yi , xi ) − η(β; xi ) + C (β̃, φ; y , X )

2φ

(1)

i=1

where

z(β; y , x) = η(β; x) + {y − µ(β; x)} /d(β; x) (“working variate”)

w (β; x) = {d(β; x)}2 /V (µ(β; x)) (“working weights”)

d(β; x) = 1/g 0 (µ(β; x))

C (β̃, φ; y , X ) collects terms that do no depend on β

Maximisation of (1) is a weighted least-squares problem with

responses z(β̃; y , xi ), predictors η(β; xi ) and weights w (β̃; xi )

6 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Model specification and estimation

Algorithm IWLS: Iteratively reweighted least squares

Input: y , X ; β(0)

Output: β̂

Iteration:

0 k ←0

1 Calculate W(k) = diag w (β(k) ; x1 ), . . . , w (β(k) , xn )

2 Calculate z(k) = (z(β(k) ; y , x1 ), . . . , z(β(k) ; y , xn ))>

−1 >

3 β(k+1) ← X > W(k) X

X W(k) z(k)

4 k ←k +1

5 Go to 1

W 1/2 X = QR can be used to simplify step 2

7 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Weighted lasso regression

Weighted lasso regression

Data:

Response vector: z = (z1 , . . . , zN )> such that

PN

i=1 zi

=0

Predictors: N × p matrix X with jth column gj , such that

1> gj = 0 and kgj k22 = 1

Weights: w1 , . . . , wn with wi > 0

Weighted lasso regression:

)

( n

2

1X >

min

wi zi − xi β + λ kβk1

β

2

i=1

8 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Weighted lasso regression

Algorithm CCD: Cyclic coordinate descent

Input: z, W , X ; β(0) , λ

Output: β̂(λ)

Iteration:

0 k ←0

1 For j = 1, . . . , p

βj,(k+1) ←

Sλ (gj> W (z − η−j,(k) ))

gj> W gj

where Sλ (a)

λ)+ and

P = sign(a)(|a| − P

p

η−j,(k) = j−1

β

g

+

t

t=1 t,(k+1)

t=j+1 βt,(k) gt with

Pb

t=a (.) = 0 if a > b

2 k ←k +1

3 Go to 1

“Pathwise coordinate descent”: Start from a value of λ for

which all coefficients are zero (e.g. λmax > maxj |gj> W z|) and

then move towards λ = 0 using “warm starts” for each CCD

9 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Proximal Newton iteration

MRL via a proximal Newton method2

n

o

min −`(β, φ; y , X ) + λ β(x) 1

β

Algorithm PN: Proximal Newton iteration

Input: y , X ; β(0) , λ

Output: β̂(λ)

Iteration:

0 k ←0

1 Update the quadratic approximation of `(β, φ, y , X ) at

β̃ := β(k) using (1) (step of the “outer loop”)

2 CCD for `1 -regularized weighted least squares (“inner loop”)

3 k ←k +1

4 Go to 1

2

see Lee et al. (2014) for details on proximal Newton-type methods and

their convergence properties

10 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Logistic regression

Logistic regression

Model: Y1 , . . . , YN are conditionally independent given X1 , . . . , XN

with Yi |Xi = xi ∼ Bernoulli(π(β, xi )) where

log

π(β, xi )

= β0 + xiT β(x)

1 − π(β, xi )

Log-likelihood:

N h oi

n

X

`(β; y , X ) =

yi β0 + xiT β(x) − log 1 + exp β0 + xiT β(x)

i=1

Penalty: Pλ (β) = λ β(x) 1

Working variate and working weight:

z(β; yi , xi ) = β0 + xi> β(x) +

yi − π(β, xi )

π(β, xi )(1 − π(β, xi ))

and w (β, xi ) = π(β, xi )(1 − π(β, xi ))

11 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Logistic regression

Logistic regression

If X has rank p, `(β; y , X ) is concave3

The ML estimate can have infinite components

If p > N − 1, then the model is over-parameterized and

regularization is required to achieve a stable solution

3

see, for example, Wedderburn (1976)

12 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Logistic regression

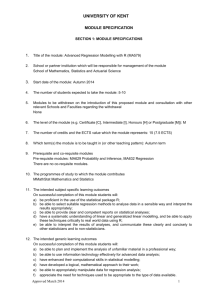

20-Newsgroups data

Document classification based on the 20-Newsgroups corpus with

feature set and class definition as in Koh et al. (2007)4

## Load the data

con <- url(paste0("https://www.jstatsoft.org/index.php/jss/article/",

"downloadSuppFile/v033i01/NewsGroup.RData"))

load(con)

## p >> N

dim(NewsGroup$x)

## [1]

11314 777811

## Feature matrix is sparse

mean(NewsGroup$x)

## [1] 0.0007721394

4

Data is available as a supplement for Friedman et al. (2010)

13 / 44

20-Newsgroups data: 1000 × 1000 block of feature matrix

1000

Row

2000

3000

4000

1000

2000

3000

Column

Dimensions: 5000 x 5000

4000

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Logistic regression

20-Newsgroups data: proximal Newton with warm starts

## Load glmnet

require("glmnet")

## Compute lasso path (alpha = 1 in glmnet arguments)

system.time(NewsFit <- glmnet(x = NewsGroup$x, y = NewsGroup$y,

family="binomial",

lambda.min.ratio = 1e-02,

thresh = 1e-05,

alpha = 1))

##

##

user

6.063

system elapsed

1.170

7.479

15 / 44

20-Newsgroups data: Solution path (λmin /λmax = 10−2 )

74

254

1076

3296

0.0

0.2

0.4

0.6

0.8

0

−2

−4

Coefficients

2

0

Fraction Deviance Explained

Dλ2 = (Devnull − Devλ )/Devnull

20-Newsgroups data: Solution path (λmin /λmax = 10−4 )

88

278

1087

3342

8103

0.0

0.2

0.4

0.6

0.8

1.0

0

−5

−10

Coefficients

5

0

Fraction Deviance Explained

Dλ2 = (Devnull − Devλ )/Devnull

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Logistic regression

Some observations

If p > N − 1, then the estimates diverge to ±∞ as λ → 0 in

order to achieve probabilities of 0 or 1

Plotting the solution path versus log(λ) or kβ̂(λ)k1 is

possible, though is not preferable to Dλ2 due to interpretation

issues in p >> N settings

18 / 44

20-Newsgroups data: Solution path (λmin /λmax = 10−2 )

74

254

1076

3296

0.0

0.2

0.4

0.6

0.8

0

−2

−4

Coefficients

2

0

Fraction Deviance Explained

20-Newsgroups data: Solution path (λmin /λmax = 10−2 )

1565

216

18

0

−6

−5

−4

−3

−2

0

−2

−4

Coefficients

2

4167

Log Lambda

20-Newsgroups data: Solution path (λmin /λmax = 10−2 )

2478

3790

4336

5066

0

500

1000

1500

2000

0

−2

−4

Coefficients

2

0

L1 Norm

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Logistic regression

Cross-validation

require("doMC")

registerDoMC(4)

## Run a 10-fold cross validation using doMC

cvNewsGroupC <- cv.glmnet(x = NewsGroup$x, y = NewsGroup$y,

family="binomial",

nfolds = 10,

lambda = NewsFit$lambda,

type.measure="class",

parallel = TRUE)

cvNewsGroupD <- cv.glmnet(x = NewsGroup$x, y = NewsGroup$y,

family="binomial",

nfolds = 10,

lambda = NewsFit$lambda,

type.measure="deviance",

parallel = TRUE)

22 / 44

Cross-validation

1.0

Binomial Deviance

0.5

●●

●●●●

●●●

●●●

●●

●●

●●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●●

●●

●●

●●

●●

●

●

●●●

●●●

●●●●

●●●●●●

●●●●●●●●●●

0.0

0.4

0.3

0.2

0.1

●●

●

●

●●●●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●●

●

●●

●●

●

●●

●●

●●

●●

●●●

●●●

●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●

0.0

Misclassification Error

0.5

1.5

5388 3800 1187 214 46 7 1

0.6

5388 3800 1187 214 46 7 1

−6

−5

−4

log(Lambda)

−3

−2

−6

−5

−4

log(Lambda)

−3

−2

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Poisson log-linear models

Poisson log-linear models

Model: Y1 , . . . , YN are conditionally independent given X1 , . . . , XN

with Yi |Xi = xi ∼ Poisson(µ(β, xi )) where

log µ(β, xi ) = β0 + xiT β(x)

Log-likelihood:

`(β; y , X ) =

N n o

X

yi β0 + xiT β(x) − exp β0 + xiT β(x)

i=1

Penalty: Pλ (β) = λ β(x) 1

Working variate and working weight:

z(β; yi , xi ) = β0 + xi> β(x) +

y − µ(β, xi )

µ(β, xi )

and w (β, xi ) = µ(β, xi )

24 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Poisson log-linear models

Poisson log-linear models

If X has rank p, `(β; y , X ) is concave5

If the intercept is not penalized (typically the case), then

direct differentiation of the penalized log-likelihood gives

N

1 X

µ(β̂(λ), xi ) = ȳ

N

i=1

When p > N − 1 and zero counts are observed then the

estimates diverge to ±∞ as λ → 0, in order to achieve means

of 0.

5

see, for example, Wedderburn (1976)

25 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Poisson log-linear models

Distribution smoothing

Data: vector of proportions r = {ri }N

i=1 such that

PN

i=1 ri

=1

Target distribution: u = {ui }N

i=1

Task: Find estimate q = {qi }N

i=1 such that

the relative entropy between q and u is as small as possible

q and r are within a given max-norm tolerance

Constrained maximum-entropy problem6 :

min

q∈<N ;qi ≥0

6

N

X

i=1

qi log

qi

ui

such that

kq − r k∞ ≤ δ;

N

X

qi = 1

i=1

see, Dubiner and Singer (2011)

26 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Poisson log-linear models

Distribution smoothing: Lagrange dual

The Lagrange-dual is an `1 -regularized Poisson log-linear

regression:

" N

#

X

max

{ri log qi (α, βi ) − qi (α, βi )} − δ kβk1

α,β

i=1

where qi (α, βi ) = ui exp{α + βi }

The

PN presence of the unpenalized intercept ensures that

i=1 qi (α̂(δ), β̂i (δ)) = 1

As δ grows, β̂i (δ) → 0 (i = 1, . . . , N) and α̂(δ) → 0.

27 / 44

Artificial example

●

●

●●● ●

target

observed

●

0.020

●●

● ●●

●

f(x)

0.010

●

● ●●

●

●●

●●● ●● ●

●● ● ●

●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●● ●●●●

−6

−4

● ● ●●●

●

●

●

●

●

● ● ●

●●● ●●●● ●●● ●●●●

● ● ●● ●

●●●●

●

● ●

● ●● ● ● ●

●●

●

●●

● ● ● ●●

0.000

●

● ●●

●●● ●● ●

●●

●●●●●●

●●●

● ● ●●●●●●●

●

−2

●

● ●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●

0

x

2

4

6

Artificial example

δ = 0.001396317

●

●

●●● ●

target

observed

estimated

●

●●

0.000

0.010

f(x)

0.020

●

●●

●●

●● ●

●●

●●● ●● ●●● ●● ●

● ● ● ●●

● ● ●●●●

●

●

●

● ● ● ● ●●

● ●●●● ●●●●

●● ●●●●

●●●●●●●●●●● ●

●●●●●●●●

●●●

●● ● ●

● ●●●●●

●● ●● ●● ●

●● ●

● ● ●●● ●●● ●

●●●●●●●●●

●

●

●

● ●●●●●●● ● ●●●●

● ●●●●●

●●●●●●●● ●

●●●●●●●●●●●●●●

●●

●●

●●●●●

●●●●●●●●●●●●●●●●●●●●●●●●●●●●● ●●●●

●

●

−6

−4

−2

●

● ●● ●●●●●

● ●●

● ● ●● ●

●●●

●●● ●●●

●

●● ●

●●

● ●

●

● ● ● ●●

●●● ● ●

● ●●●

●●

● ●● ● ● ● ●● ● ● ●●●

●

●●

●

●

●●●●

●

●

● ●

●●●

0

●

2

●●

●

●●● ●● ● ●●

●●

●●●

●●●●●●

●●

●●

● ● ●●●●●●●

●

●●●●●●●

●●●●●●●●●●●●●●

● ●●●●●●●●●●●●●●●●●●●

●●●●●●●●●

4

6

x

Basic call:

glmnet(x = Diagonal(N), y = r, offset = log(u), family = "poisson")

Artificial example

δ = 0.000457231

●

0.020

●

●

●●● ●

target

observed

estimated

●

●●

●

●

●● ● ● ●●●

● ●

●

●

●

●

● ● ●●●●●

f(x)

● ● ● ●●

●●

● ●●●● ●

●

●

● ●●●●●●●●●

● ●● ● ● ●● ●

0.010

0.000

●

●●● ●●●●

●●

●

●

●●

●●●●●

● ● ● ● ●●

● ●●●●●●●●

●

●●

●

−6

−4

−2

0

● ● ●●●

●

●●● ●● ● ●●

●●

●●

●●●

●●●●●●

●●

●

● ● ●●●●●●● ● ●●

●●●●●●

●●●●●●●●●●●

● ●●●●●●●●●●●●●●●●

●●

●●●

●●●●● ●●

● ●● ●●● ●● ● ●●●●● ●●●

●●

● ● ●●●●

●●

●●●●●●●●● ●●● ●●●●● ●●●●●●●

●●●●●●●●● ● ●●●●●●●●●●

● ● ●

●●● ●● ●

●●●●●

●●●●● ●●● ●

● ●●●●●●● ●

● ●●●●●●●●● ●●●●

●●●●●●● ●

●●

●●●●●●●●●●●●●●

●●●●●●

●●

●●

●●●●●●●●●●●●●●●●●●●●●●●●●●●●● ●●●●

●

2

4

6

x

Basic call:

glmnet(x = Diagonal(N), y = r, offset = log(u), family = "poisson")

Artificial example

δ = 0.0001497225

●

●

●●● ●

target

observed

estimated

●●●● ●

●● ●

● ●●●●● ●

● ● ● ●●● ●●

●●

● ● ● ●●●

●●● ●● ●●

●

●

●●●●● ●● ●●● ●

● ● ●●●●

●

0.010

0.000

●

●●●●● ●

f(x)

0.020

●

●

●●●●

● ●●● ●● ●

● ●●● ●●●●●●● ●●

● ● ●

●●

●

−4

−2

0

●

●●● ●●●●●●●●●

●

●●●●● ●●●●●● ●●●●●● ●●●●●●● ●●● ● ●

●

●● ●

● ● ●●●

●●●●●●

●●●●●●●●●●●● ●●●●

●●●●● ●●●●● ●●●●●

●

●

● ●

●●●●●●●●●●

●●●●

●

●

●

●●●●●●●●●●●●●●

●●●●●●

●●

●●

●●

●●●●●

●●●●●●●●●●●●●●●●●●●●●●●● ●●●●

●

−6

●

●

● ● ●●●●●●● ● ●●

●●

●●

●●●

●●●●●●●●●●●●●●

●

● ● ●●●●●●●●●●●●●●●●● ●

●●●●●●● ●

●●

●●

●●

●●

● ●●●●●●●●●●●●●●●●

●●

●●●

●●●●●●●●●

2

4

6

x

Basic call:

glmnet(x = Diagonal(N), y = r, offset = log(u), family = "poisson")

Artificial example

δ = 4.57231e−05

●●● ●

●●● ●

●

●

●● ●

●

●● ●

● ●●

● ●●

● ●● ●

● ●● ●

●

●● ●

●

●● ●

● ● ● ●●

● ●

● ●

● ● ● ●●

●

● ●● ● ● ●

● ●

● ●●●

● ●● ● ● ●

●

●●

●

●●

●

●●●●

●

●

●

●

●●

●

●●●●

●

●

●

●● ●

●

●

●● ●

●●● ●● ●

● ●● ●●● ●● ●

● ● ●

●●● ●● ●

● ●● ●●● ●● ●

● ● ●

●●●●●●

●●● ●●●● ●●● ●●●●

●●

●●●●●●

●● ●●●● ●●● ●●●●

●●

● ● ●●●●●●●

●

●●

● ● ●

●●

● ●

●● ● ● ●●●

● ● ●●●●●●●

●

●

●

● ● ●●●

●

●●●●●●●●●●●●●●●●●●●●●●●● ●●●●

● ●●●●●●●●●●●●●●●●●●●●●●●

●

●●●●●●●●●●●●●●

●●●●●●

●●

●●

●●

●●

●●

●●●

●●●●●●●●●●●●●●●●●●●●●●●● ●●●●

●

● ●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●

●

0.000

0.010

f(x)

0.020

●

target

observed

estimated

−6

−4

−2

0

2

4

6

x

Basic call:

glmnet(x = Diagonal(N), y = r, offset = log(u), family = "poisson")

Artificial example

δ = 1.497225e−05

●

0.020

●

●

●●

●●

● ●

●

●

target

observed

estimated

●

●

●

●●

●

● ●

●●

●

●

●

●

f(x)

0.010

●

●

● ●

●●

●

●

●

●

●●

●

●

●●

●●

●●

●●

● ●

●

●

● ●

●●

● ●

●

●●●●●●●●●●●●●●●●●●●

●●

●●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

● ●

●●

●●

●●

●

−6

−4

● ●

● ●

●●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●●

●●

●●

● ●

●

● ●

● ●

●

●

●

●●

● ●

●●

●●

●●

● ●

●●

●●

● ●

●●

●●

●●

●

●●

● ●

● ●● ●

●

●

●

●●

●●

●●

●

●

●

● ●

●

●

● ●

●●

● ●

● ●

● ●

●

●

●

●●

●

●

●

●

●●

●

● ●

●●

● ●

●●

●

●

0.000

●

●

●●

●●

●

●

●

●●

●●

●●

●●

●●

●

●

●●

●

●

● ●

● ●

●●

●●

●●

●●

●●

●●

●

●●

●●

●

●

●

●

−2

●

●

●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●

●●●

●●●

●●●●●●●●●●

0

2

4

6

x

Basic call:

glmnet(x = Diagonal(N), y = r, offset = log(u), family = "poisson")

Artificial example

δ = 4.902738e−06

●

0.020

●

●

●●● ●

target

observed

estimated

●

●●

● ●●

●

f(x)

0.010

●

● ●●

●

●●

●●● ●● ●

●● ● ●

●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●● ●●●●

−6

−4

● ● ●●●

●

●

●

●

●

●●● ●● ●

● ● ●

●●● ●●●● ●●● ●●●●

● ● ●● ●

●●●●

●

● ●

● ●● ● ● ●

●●

●

●●

● ● ● ●●

0.000

●

● ●●

●●●●●●

●●

● ● ●●●●●●●

●●●

−2

●

● ●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●

●

0

2

4

6

x

Basic call:

glmnet(x = Diagonal(N), y = r, offset = log(u), family = "poisson")

Artificial example

δ = 1.497225e−06

●

0.020

●

●

●●● ●

target

observed

estimated

●

●●

● ●●

●

f(x)

0.010

●

● ●●

●

●●

●●● ●● ●

●● ● ●

●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●● ●●●●

−6

−4

● ● ●●●

●

●

●

●

●

●●● ●● ●

● ● ●

●●● ●●●● ●●● ●●●●

● ● ●● ●

●●●●

●

● ●

● ●● ● ● ●

●●

●

●●

● ● ● ●●

0.000

●

● ●●

●●●●●●

●●

● ● ●●●●●●●

●●●

●

−2

●

● ●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●●

0

2

4

6

x

Basic call:

glmnet(x = Diagonal(N), y = r, offset = log(u), family = "poisson")

Artificial example (best λ from 10-fold CV with MSE loss)

δopt = 0.0001721445

●

●

●●● ●

target

observed

estimated

●●●● ●

●● ●

● ●●●●● ●

● ● ● ●●● ●●●

●

● ●

● ● ● ●●●

●●● ● ●

●

●

●●●●● ●● ●●● ●

● ● ●●●●

0.010

0.000

●

●●●●● ●

f(x)

0.020

●

●●

●●●

●●

●

●●

● ●●● ●● ●

●●●●

●

−4

−2

0

●

●●● ●●●●●●●●●

● ●●● ●●●●●●● ●● ● ● ●

●●

●

●●●

●● ●●●●● ●●●●●●● ●●●●●●● ●●● ● ●

●

●●

●●●●

●●●●●●

●●●●●●●●●●●●● ●●●●

●●●●● ●●●●● ●●●●●

●

● ●

● ● ●●

●●●●

●●●●●●●●●

●●●●●●●●●●●●●●

●●●●●●

●●

●●

●●

●●●●●

●●●●●●●●●●●●●●●●●●●●●●●● ●●●●

●

−6

●

●

● ● ●●●●●●● ● ●●

●●●●●●●●●●●●●●

●

● ● ●●●●●●●●●●●●●●●●● ●

●●●●●●● ●

●●

●●

●●

●●

● ●●●●●●●●●●●●●●●●

●●

●●●

●●●●●●●●●

2

4

6

x

Basic call:

glmnet(x = Diagonal(N), y = r, offset = log(u), family = "poisson")

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Multinomial regression

Multinomial regression

Model: The vectors {(Yi1 , . . . , YiK )}N

i=1 are conditionally

independent given X1 , . . . , XNP

, and (Yi1 , . . . , YiK )|Xi = xi has a

multinomial distribution with K

k=1 Yik = mi and kth-category

probability

exp β0,k + xiT β(x),k

(2)

π(βk , xi ) = PK

T

k=1 exp β0,k + xi β(x),k

Log-likelihood:

`(β; Y , X ) =

" K

N

X

X

i=1

yik (β0,k + xiT β(x),k )

k=1

−mi log

( K

X

exp β0,k +

xiT β(x),k

)#

k=1

Penalty: Pλ (β) = λ

PK

k=1 β(x),k 1

37 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Multinomial regression

Multinomial regression

The parameterization in (2) is not identifiable; adding

γ0 + xi> γ(x) to the ith predictors gives the same likelihood

ML can only estimate contrasts of parameters

e.g. setting βK = 0 gives the identifiable “baseline category

logit” that involves contrasts with category K

ML fit is invariant to the choice of baseline category

If X has rank p, the log-likelihood for the baseline category

logit is concave

MRL fit (using `1 -regularized likelihood) is not invariant to

the choice of baseline category

MRL estimation (using `1 -regularized likelihood) takes care of

the redundancy in (2) by an implied, coordinate-dependent

P

re-centering of β(x) (also need to set K

k=1 β0,k = 0).

38 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Multinomial regression

Maximum regularized likelihood

Partial quadratic approximation of the log-likelihood at β̃t allowing

only β0,k and β(x),k to vary:

N

n

o2

1X

−

w (β̃k , xi ) z(β̃k , yik , xi ) − β0,k − xi> β(x),k

2

(3)

i=1

+C ({β̃t }t6=k ; Y , X )

where C ({β̃t }t6=k ; Y , X ) collects terms that do not depend on β0,k

and β(x),k

z(βk , yik , xi ) = β0,k + xi> β(x),k +

yik /mi − π(βk , xi )

π(βk , xi ) {1 − π(βk , xi )}

and w (βk , xi ) = mi π(βk , xi ) {1 − π(βk , xi )}

39 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Multinomial regression

Nested loops for maximum regularized likelihood

outer : Cycle over t = {1, 2, . . . , K , 1, 2, . . .}

middle : for each t set {β̃0,t , β̃t }t6=k in the partial quadratic

approximation in (3) at the current estimates

inner : run CCD for the `1 -regularized weighted least squares

P

The penalty Pλ (β) = λ K

k=1 β(x),k 1 can select different

variables for different classes

40 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Generalized linear models

Multinomial regression

Grouped-lasso for multinomial regression

“Grouped-lasso” penalty

(G )

Pλ (β) = λ

p

X

kδj k2

j=1

where δj = (β(x),1j , . . . , β(x),Kj )>

Selects all coefficients for a particular variable to be in or out

of the model

41 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Discussion

Outline

1 Generalized linear models

2 Discussion

42 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Discussion

Other regression models

Similar procedures/algorithms can be applied in regression

settings with other objectives than the log-likelihood

e.g. Cox proportional hazards models using regularized partial

likelihood7

7

see Hastie et al. (2015, §3.5)

43 / 44

`1 -regularizion for GLMs

Ioannis Kosmidis, UCL

Discussion

References

Dubiner, M. and Y. Singer (2011). Entire relaxation path for maximum entropy

problems. In Proceedings of the Conference on Empirical Methods in Natural

Language Processing, EMNLP ’11, Stroudsburg, PA, USA, pp. 941–948.

Association for Computational Linguistics.

Friedman, J., T. Hastie, and R. Tibshirani (2010). Regularization paths for generalized

linear models via coordinate descent. Journal of Statistical Software 33 (1), 1–22.

Hastie, T., R. Tibshirani, and M. Wainwright (2015, May). Statistical Learning with

Sparsity: The Lasso and Generalizations (Chapman & Hall/CRC Monographs on

Statistics & Applied Probability). Chapman and Hall/CRC.

Koh, K., S.-J. Kim, and S. Boyd (2007, December). An interior-point method for

large-scale l1-regularized logistic regression. J. Mach. Learn. Res. 8, 1519–1555.

Lee, J. D., Y. Sun, and M. A. Saunders (2014). Proximal newton-type methods for

minimizing composite functions. SIAM Journal on Optimization 24 (3), 1420–1443.

McCullagh, P. and J. A. Nelder (1989). Generalized linear models (Second edition).

London: Chapman & Hall.

Wedderburn, R. W. M. (1976). On the existence and uniqueness of the maximum

likelihood estimates for certain generalized linear models. Biometrika 63 (1), 27–32.

44 / 44