/ ST413 - Bayesian Statistics & Decision Theory ST301 /11 2010

advertisement

ST301 / ST413 - Bayesian Statistics & Decision Theory

2010/11

Murray Pollock & Prof. Jim Smith

May 14, 2011

1

1.1

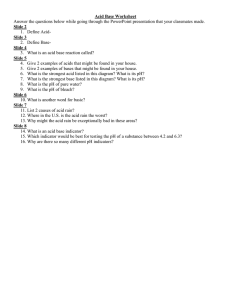

Exercise Sheet 1

Question 1

A doctor needs to diagnose which disease a patient has (I = {1, 2, 3}). She knows the patient will have one (and

only one) of these illnesses. She also knows that of the types of patients she will be asked to diagnose 80% will

have illness 1, 10% will have illness 2 and 10% will have illness 3.

To help her in her diagnosis she will observe the existence or otherwise (1 or 0) of four symptoms (X1 , X2 , X3 , X4 )

representing (respectively), high pulse rate, high blood pressure, low blood sugar and chest pains.

The probabilities of exhibiting various symptoms ({X1 = 1, X2 = 1, X3 = 1, X4 = 1}) given various diseases

are shown in Table 1:Symptom - X j

P(X1 = 1|I = i)

P(X2 = 1|I = i)

P(X3 = 1|I = i)

P(X4 = 1|I = i)

Disease - I = i

i=1 i=2 i=3

0.3

0.2

0.2

0.1

0.9

0.7

0.8

0.1

0.1

0.2

0.9

0.7

Table 1: Conditional probabilities of symptoms given disease.

You may assume the naive Bayes hypothesis1 throughout.

1.1.1

Question 1 i)

Given the doctor observes a patients’ symptoms (X1 , X2 , X3 , X4 ) to be (1, 1, 0, 1) calculate her posterior probabilities that the patient has illness 1, 2 and 3.

Using log-odds2 and noting from the question that the patient has precisely one disease (with P(I = 1) = 0.8,

P(I = 2) = 0.1 & P(I = 3) = 0.1) and furthermore noting that the patient either does or does not exhibit a

particular symptom ie - P(Xi = 1|I = j) = 1 − P(Xi = 0|I = j) ∀i, j then it is sufficient calculate for illnesses

I = {2, 3} the posterior log-odds ratio with respect to illness I = 1.

1 Naive Bayes hypothesis (see [?] §1.2.2 Defn 1.8): Assume that all symptoms are independent given the illness class {I = i} for all

possible illness classes i, 1 ≤ i ≤ n.

2 Log-odds (see [?] §1.2.3): Denoting I as an illness and Y as a vector of symptoms then given (I = k|Y = y) > 0 we have,

!

(Y = y|I = i) · (I = i)

(Y = y)

(I = i|Y = y)

=

!

(I = k|Y = y)

(Y = y|I = k) · (I = k)

(Y = y)

)

m (

Y

(Y j = y j |I = i)

(I = i)

=

·

(1)

(Y

=

y

|I

=

k)

(I

= k)

j

j

j=1

P

P

P

P

P

P

P

1

P

P

P

P

P

P

In particular for I = 2 we have,

4

X

λ j (2, 1, x j ) = log

j=1

P(X1 = 1|I = 2)

P(X2 = 1|I = 2)

P(X3 = 0|I = 2)

P(X4 = 1|I = 2)

+ log

+ log

+ log

P(X1 = 1|I = 1)

P(X2 = 1|I = 1)

P(X3 = 0|I = 1)

P(X4 = 1|I = 1)

0.2

0.9

1 − 0.1

0.9

+ log

+ log

+ log

= 4.8 to 1 decimal place.

0.3

0.1

1 − 0.8

0.2

P(I = 2)

O(2, 1) = log

P(I = 1)

0.1

= −2.1 to 1 decimal place.

= log

0.8

4

X

O(2, 1|x) =

λ j (2, 1, x j ) + O(2, 1)

= log

(3)

(4)

j=1

= 4.8 − 2.1 = 2.7 to 1 decimal place.

Similarly we have for I = 3

2.2 (to 1 dp).

P4

j=1

(5)

λ j (3, 1, x j ) = 4.3 (to 1 dp) and O(3, 1) = −2.1 (to 1 dp) implying O(3, 1|x) =

Now noting that,

3

X

P(I = i|X = x) = P(I = 1|X = x) + e2.7 · P(I = 1|X = x) + e2.2 · P(I = 1|X = x) = 1

(6)

i=1

we have,

1

= 0.04 to 2 decimal places.

1 + e2.7 + e2.2

P(I = 2|X = x) = e2.7 · P(I = 1|X = x) = 0.60 to 2 decimal places.

(8)

P(I = 3|X = x) = e · P(I = 1|X = x) = 0.36 to 2 decimal places.

(9)

P(I = 1|X = x) =

2.2

1.1.2

(7)

Question 1 ii)

Let R(D, I) denote the reduction in the patients’ life expectancy when he has disease I and is first given treatment

D. The values of R(D, I) are given in Table 2 (note here that D(i) is the best treatment for I = i where i = {1, 2, 3}).

What action minimizes the doctors expectation of R?

Using the law of total probability and noting that knowledge of the symptoms is irrelevant given knowledge

Now taking logs we have,

O(i, k|y) := log

|

(

m

P(Y j = y j |I = i) ) +

P(I = i|Y = y) ! = X

log

P(I {z

= k|Y = y)

P(Y j{z

= y j |I = k)

} j=1 |

}

posterior log-odds ratio

jth obsern log-likelihood ratio

2

P

P

m

X

(I = i)

log

=:

λ j (i, k, y j ) + O(i, k)

(I = k)

j=1

| {z }

prior log-odds ratio

(2)

Disease (I = i)

R D( j)|I = 1

R D( j)|I = 2

R D( j)|I = 3

Treatment - D( j)

j=1 j=2 j=3

1

1

2

9

8

9

9

7

3

Table 2: Expected number of years reduction in patient life expectancy R(D) given treatment D( j) first while

suffering from disease I = i.

of the illness then we have,

R D(k)|X = x) =

3

X

R(D(k), I = i|X = x)

i=1

=

3

X

R(D(k)|I = i, X = x) · P(I = i|X = x)

i=1

=

3

X

R D(k)|I = i) · P(I = i|X = x)

i=1

which using the results from Question 1 i), and considering I = 1, 2, 3 we find treatment D(3) is the best in terms

of lowest expected reduction in life expectancy (for some intuition consider the importance of prescribing D(3) in

the case where I = 3),

1.1.3

R D(1)|X = {1, 1, 0, 1}) = 1 · 0.04 + 9 · 0.6 + 9 · 0.36 = 8.68

(10)

R D(2)|X = {1, 1, 0, 1}) = 1 · 0.04 + 8 · 0.6 + 7 · 0.36 = 7.36

(11)

R D(3)|X = {1, 1, 0, 1}) = 2 · 0.04 + 9 · 0.6 + 3 · 0.36 = 6.56

(12)

Question 1 iii)

Does the doctors decision differ if the patients symptoms measurement X3 was omitted? What difference would

such an omission cause and why?

Noting the form of the log-likelihood ratio in (2) and the results of Question 1 i), the posterior probabilities

can be easily modified. In particular denoting Õ( j, i|x) as the modified log-likelihood ratio (omitting X3 ) we have,

1 − 0.1

= 1.2 to 1 decimal place.

1 − 0.8

1 − 0.1

Õ(3, 1|x) = O(3, 1|x) − λ3 (3, 1|x3 ) = 2.2 − log

= 0.7 to 1 decimal place.

1 − 0.8

Õ(2, 1|x) = O(2, 1|x) − λ3 (2, 1|x3 ) = 2.7 − log

(13)

(14)

As before note that,

3

X

P(I = i|X = x) = P(I = 1|X = x) + e1.2 · P(I = 1|X = x) + e0.7 · P(I = 1|X = x) = 1

(15)

i=1

we have,

1

= 0.16 to 2 decimal places.

1 + e1.2 + e0.7

P(I = 2|X = x) = e1.2 · P(I = 1|X = x) = 0.52 to 2 decimal places.

(17)

P(I = 3|X = x) = e · P(I = 1|X = x) = 0.32 to 2 decimal places.

(18)

P(I = 1|X = x) =

2.2

3

(16)

Furthermore, if we are to consider the prescription of some treatment as per Question 1 ii) we find,

R D(1)|X = {1, 1, 0, 1}) = 1 · 0.16 + 9 · 0.52 + 9 · 0.32 = 7.72

(19)

R D(2)|X = {1, 1, 0, 1}) = 1 · 0.16 + 8 · 0.52 + 7 · 0.32 = 6.56

(20)

R D(3)|X = {1, 1, 0, 1}) = 2 · 0.16 + 9 · 0.52 + 3 · 0.32 = 5.96

(21)

Although treatment D(3) is still the preferred treatment note that the cost of mistreatment in terms of life expectancy is now lower. In particular note that a priori it is assumed the patient has disease I = 1 which is less

threatening than the other two diseases, however the symptom X3 = 0 is inconsistent with this disease and as such

moves the assessment away from this prior (the addition of more information has provided a more informative

posterior).

2

2.1

Exercise Sheet 2

Question 1

You work for a catalogue sales agency. They have recently had designed a children’s jogging suit witch they intend

to market as sportswear and as such expect to make a profit of £30000 per month over the next year. However the

promotion team believe with probability 0.2 it could “take-off” (X = 1) as day-wear as well, if (and only if) it is

marketed as such. If X = 1 and it is marketed as day-wear then the company expect to receive an extra £100000

per month profit. However if it does not “take-off” (X = 0) and they market it as day-wear they expect to lose

£50000 per month in profits wasted by ineffective use of advertising space in the day-wear section of the catalogue

to subtract from their sportswear profits.

The company’s options are:- d1 - market the suit as sportswear only for 12 months.

- d2 - market the suit as both sportswear and day-wear for 12 months.

- d3 - survey the market quickly at the cost of £20000 and on the basis of this choose d1 or d2 . This result is

not however completely reliable giving a positive indication (Y = 1) given X = 1 with P(Y = 1|X = 1) = 0.8

and a negative result with P(Y = 0|X = 0) = 0.6.

Draw the decision tree of this problem and determine your optimal decision rule when you are trying to maximise

your expected profit over the year. What is this expected maximum profit?

From the information contained in the question the decision tree can be constructed as per Figure 1.

The unknown probabilities from Figure 1 can now be explicitly found. In particular,

p1 := P(Y = 1) = P(Y = 1|X = 0) · P(X = 0) + P(Y = 1|X = 1) · P(X = 1)

= (1 − 0.6) · (1 − 0.2) + 0.8 · 0.2 = 0.48 =: 1 − p2

P(Y = 1|X = 1) · P(X = 1) 0.8 · 0.2 1

p3 := P(X = 1|Y = 1) =

=

= =: 1 − p4

P(Y = 1)

0.48

3

P(Y = 0|X = 1) · P(X = 1) (1 − 0.8) · 0.2 1

p5 := P(X = 1|Y = 0) =

=

=

=: 1 − p6

P(Y = 0)

0.52

13

(22)

(23)

(24)

Using the probabilities calculated and making decisions based on maximizing expected pay-off then the optimal

decision can be found by backwards evaluation of the decision tree. In particular we have,

Ea = £1560000 · 0.2 − £240000 · 0.8 = £120000

(25)

Eb = max{£340000, Ec } · p1 + max{£340000, Ed } · p2

1

2

1

12 = max £340000, £1540000 · − £260000 ·

· 0.48 + max £340000, £1540000 ·

− £260000 ·

· 0.52

3

3

13

13

= £340000

(26)

4

5 £360k

d1

Da

3 £1560k

X=1 wp 0.2

/ ◦E

a

d2

X=0 wp 0.8

+

-£240k

5 £340k

d1

d3

Db

F

£1540k

;

d2

!

◦Ec

Y=1 wp p1

X=1 wp p3

X=0 wp p4

#

-£260k

◦Eb

Y=0 wp p2

5 £340k

d1

Dc

£1540k

;

d2

!

◦Ed

X=1 wp p5

X=0 wp p6

$

-£260k

Figure 1: Ex2 Q1 decision tree

5

As such we find the expected pay-offs for d1 , d2 & d3 to be £360000, £120000 & £340000 respectively and as such

d1 is the best option.

2.2

Question 2

Define the expected value of perfect information (EVPI). How can it be used to simplify a decision tree?

EVPI is the expected pay-off given perfect information about the current uncertainties minus the expected pay-off

with current uncertainties,

EV PI = Eθ max R(d, θ) − max Eθ R(d, θ)

(27)

d

d

EVPI is useful in decision trees as it provides an upper bound on the price worth paying for any experimentation,

limiting the extent of the decision tree.

Your customer insists you give him a guarantee that a piece of machinery will not be faulty for one year. As

the salesman you have the option of overhauling your machinery before delivering it to the customer (action a2 )

or not (action a1 ). The pay-offs you will receive by taking these actions when the machine is, or is not, faulty are

given in Table 3.

Action

a1

a2

1000 800

0

700

State

Not Faulty

Faulty

Table 3: Machinery guarantee pay-offs (£’s)

The machinery will work if a certain plate of metal is flat enough. You have scanning devices which will sound

an alarm if a small region of this plate is bumpy. The probabilities that any one of these scanning devices sound

an alarm given that the machine is, or is not faulty, are respectively 0.9 and 0.4.

You may decide to scan with scanning devices dn (n = 0, 1, . . . ) at the cost of £50 · n and overhaul the machine

or not depending on the number of alarms that ring. These devices will give independent readings conditional on

whether the machine is faulty or not. Prior to scanning you believe that the probability that your machine is faulty

is 0.2.

Draw a decision tree of the necessary decision sequences to find the optimal number of scanning devices to

use and how to use them to maximize your expected pay-off.

Letting F denote a fault (and F c no fault), U denote some pay-off function and noting from the question that

P(F) = 0.2 then applying (27) we have,

EV PI =

P(F c )U(a1 , F c ) + P(F)U(a2 , F) − max P(F)U(a1 , F) + P(F c )U(a1 , F c ) , P(F)U(a2 , F) + P(F c )U(a2 , F c )

= (1 − 0.2) · 1000 + 0.2 · 700 − max 0.2 · 0 + (1 − 0.2) · 1000 , 0.2 · 700 + (1 − 0.2) · 800

= 940 − 800 = 140

(28)

As a result it isn’t worth purchasing more than (b140/50c =) 2 alarms hence limiting the size of the decision tree

that has to be considered as shown in Figure 2.

In particular considering the three cases of device number (d0 , d1 , d2 ) then we find the conditional probabilities

from the decision tree to be as follows (noting the number of rings is bounded by 0 and the number of devices)

using the notation whereby A denotes an alarm activation (and Ac denotes no alarm activation) we have,

6

£(08 − 50 · a)

pF|a−b

1

◦Ea−b

E

Action a1

a devices/

◦Ea

b rings

pF c |a−b

&

£(1000 − 50 · a)

/ D

a−b

Action a2

£(700

8 − 50 · a)

pF|a−b

2

◦Ea−b

pF c |a−b

&

£(800 − 50 · a)

Figure 2: Ex2 Q1 decision tree

- In the case of d0 we have,

PF|0−0 = P(F) = 0.2

PF c |0−0 = P(F c ) = 0.8

E0 = P(b = 0|a = 0) · D0−0

= 1 · max 0.2 · 0 + 0.8 · 1000, 0.2 · 700 + 0.8 · 800 = 800

(29)

(30)

(31)

- In the case of d1 we have either 1 or 0 rings. In particular,

PF|1−0 = P(F|Ac ) =

P(Ac |F) · P(F)

0.1 · 0.2

=

= 0.04

c

P(A )

0.1 · 0.2 + 0.6 · 0.8

PF c |1−0 = P(F c |Ac ) = 0.96

PF|1−1 = P(F|A) = 0.36

PF c |1−1 = P(F c |A) = 0.64

E1 = P(b = 0|a = 1) · D1−0 + P(b = 1|a = 1) · D1−1

(32)

(33)

(34)

(35)

= (0.2 · 0.1 + 0.8 · 0.6) · D1−0 + (0.2 · 0.9 + 0.8 · 0.4) · D1−1

= 0.5 · max 0.04 · (0 − 50) + 0.96 · (1000 − 50), 0.04 · (700 − 50) + 0.96 · (800 − 50)

+ 0.5 · max 0.36 · (0 − 50) + 0.64 · (1000 − 50), 0.36 · (700 − 50) + 0.64 · (800 − 50)

= 0.5 · max(910, 746) + 0.5 · max(590, 714) = 812

7

(36)

- In the case of d2 we have either 2, 1 or 0 rings. In particular,

PF|2−0 = P(F|Ac Ac ) =

0.1 · 0.1 · 0.2

P(Ac Ac |F) · P(F)

=

= 0.0069

P(Ac Ac )

0.1 · 0.1 · 0.2 + 0.6 · 0.6 · 0.8

PF c |2−0 = P(F c |Ac Ac ) = 0.9931

PF|2−1 = P(F|Ac A) = 0.086

PF c |2−1 = P(F c |Ac A) = 0.914

PF|2−2 = P(F|AA) = 0.56

PF c |2−2 = P(F c |AA) = 0.44

P(AA) = 0.2 · 0.9 · 0.9 + 0.8 · 0.4 · 0.4 = 0.29

P(Ac A) = 0.42

P(Ac Ac ) = 0.29

D2−0

(37)

(38)

(39)

(40)

(41)

(42)

(43)

(44)

(45)

= max 0.0069 · (0 − 100) + 0.9931 · (1000 − 100), 0.0069 · (700 − 100) + 0.9931 · (800 − 100)

= max(893.1, 699.31) = 893.1

(46)

D2−1 = max 0.0086 · (0 − 100) + 0.914 · (1000 − 100), 0.0086 · (700 − 100) + 0.914 · (800 − 100)

D2−2

= max(814, 645) = 815

= max 0.56 · (0 − 100) + 0.44 · (1000 − 100), 0.56 · (700 − 100) + 0.44 · (800 − 100)

(47)

= max(340, 644) = 644

(48)

E2 = P(b = 0|a = 2) · D2−0 + P(b = 1|a = 2) · D2−1 + P(b = 2|a = 2) · D2−2

= 0.29 · 893.1 + 0.42 · 815 + 0.29 · 644 = 788

(49)

As a result the optimal choice of the number of scans is the choice which maximizes E0 , E1 , E2 which with

reference to (31), (36) and (49) we find to be £812 using a single scan (choice d1 ).

3

3.1

Exercise Sheet 3

Question 1

Given a minimum possible reward s and a maximum possible reward t describe how you might construct your

utility U(r) for a reward s ≤ r ≤ t. Given any two decisions d1 and d2 giving reward probability mass functions

p1 (r) and p2 (r) respectively and your utility function U(r) how do you decide whether to take decision d1 or d2 ?

Prefer d1 to d2 if,

Ū(d1 ) :=

X

U(r) · p1 (r) ≥

r

X

U(r) · p2 (r) =: Ū(d2 )

(50)

r

Your utility function U(r) is given by,

U(r) = 1 − exp{−cr}

c>0

(51)

You must decide between two decisions. Decision d1 will give you reward £1 with probability 3/4 and £0 with

probability 1/4. Decision d2 will give you a reward of £r with probability (1/2)r+1 (where r = 0, 1, . . . ). Prove

that you will prefer d1 to d2 if and only if the value of c in your utility function satisfies,

c ≥ log 3 − log 2

(52)

Re-arranging (50) we have d1 d2 if,

1−

X

U(r) · p1 (r) ≤ 1 −

r

0

1−

X

U(r) · p2 (r)

r

−cr

1−e

3(1 − e−c ) X e

−

≤

4

4

2r+1

r≥0

8

(53)

Letting x := e−c then d1 d2 if,

4(2 − x)−1 ≥ 1 + 3x

(3x − 2)(x − 1) ≥ 0

(54)

As a result e−c ≤ 2/3 (we are given c > 0 so e−c ≥ 1 isn’t possible), so re-arranging we have,

c ≥ log 3 − log 2

3.2

(55)

Question 2

Three investors (I1 , I2 , I3 ) each wish to invest $10000 in shareholdings. Four shares (S 1 , S 2 , S 3 , S 4 ) are available

to each person. S 1 will guarantee the shareholder exactly 8% interest over the next year. Share S 2 will pay nothing

with probability 0.1, 8% interest with probability 0.5 and 16% interest with probability 0.4. Share S 3 will pay 4%

interest with probability 0.2 and 12% with probability 0.8. Share S 4 will pay 16% interest with probability 0.8

and nothing with probability 0.2 over the next year.

Each investor must rank five portfolios (P1 , P2 , P3 , P4 , P5 ) in order of preference. Portfolio Pi (for 1 ≤ i ≤ 4)

represents the investment of $10000 in share S i . Portfolio P5 represents the investment of $5000 in share S 1 and

$5000 in share S 4 .

Investor I1 prefers P1 and P3 to P5 . Investor I2 prefers P1 to P3 and P3 to P4 . Investor I3 prefers P1 to P4

and P2 to P1 .

Find the pay-off distribution over the next year associated with each portfolio. Hence, or otherwise, identify

those investors whose preferences given above violate Axioms 8–113 and explain why. You may assume that each

investors utility is an increasing function of his pay-off in the next year.

3 As

given in the course notes we have,

- Axiom 8: Bets giving the same distribution of rewards should be considered equivalent.

- Axiom 9: If P1 , P2 and P are any three reward distributions, then for all α such that 0 < α < 1 then,

P1 - P2 ⇔ αP1 + (1 − α)P - αP2 + (1 − α)P

(56)

- Axiom 10: If P1 , P2 and P are any three reward distributions such that P1 ≺ P ≺ P2 then there exist (α, β) where 0 < α, β < 1 such

that,

P ≺ αP2 + (1 − α)P1

(57)

P βP2 + (1 − β)P1

(58)

- Axiom 11: Let P(r) be any distribution on the reward random vector R for which P{r1 ≺ R ≺ r2 } for some rewards r1 , r2 and p(r) be

U(r) − U(r1 )

its probability mass function. For each r let α(r) :=

, then P ∼ βr2 + (1 − β)r1 where,

U(r2 ) − U(r1 )

β=

r2

X

r=r1

9

α(r) · p(r)

(59)

From the question we find the distribution of the portfolios to be as follows,

n

w.p. 1

P1 ∼ $800

$1600

w.p. 0.4

$800

w.p. 0.5

P2 ∼

$0

w.p. 0.1

(

$1200

w.p. 0.8

P3 ∼

$400

w.p. 0.2

(

$1600

w.p. 0.8

P4 ∼

$0

w.p. 0.2

(

$400 + $800 = $1200

w.p. 0.8

P5 ∼

$400 + $0 = $400

w.p. 0.2

(60)

(61)

(62)

(63)

(64)

Now considering the investors we find as follows,

- I1 (P1 P5 & P3 P5 ) violates Axiom 8 (see footnote 3) as P3 P5 yet they have the same pay-off.

- I2 (P1 P3 & P3 P4 ) doesn’t violate Axioms 8-11 (see footnote 3) as, for instance, considering the

increasing utility function such that U(0) = 0, U(400) = 1, U(800) = 10, U(1200) = 11, U(1600) = 11.1

then,

U(P1 ) = 10 U(P3 ) = 11 · 0.8 + 1 · 0.2 = 9 U(P4 ) = 11.1 · 0.8 + 0 · 0.2 = 8.88

(65)

- I3 (P1 P4 & P2 P1 ) violates Axiom 9 (see footnote 3) as P2 = 0.5 · P1 + 0.5 · P4 P1 , however

P1 = 0.5 · P1 + 0.5 · P1 and yet P1 P4 .

3.3

Question 3

Table 4 gives the pay-offs in $ associated with six possible decisions (d1 , d2 , . . . , d6 ) where the outcome θ can take

one of two possible values (θ1 or θ2 ).

Outcome

θ1

θ2

d1

2

2

d2

8

2

Decision

d3 d4 d5

3

7

3

3

1

4

d6

2

6

Table 4: Decision pay-offs ($’s)

3.3.1

Question 3 i)

Find those decisions which can be Bayes decisions under a linear utility function for some values of the probability

p(θ1 ).

Specify the corresponding possible values of p(θ1 ) for each of these decisions.

Plotting the decision pay-offs (Table 3) and considering linear utility functions of the form G(d, θ1 ) = m·G(d, θ2 )+b

we find that only d2 and d6 can possibly maximise expected utility. In particular the red line in Table 3 connecting

d2 and d6 has a gradient m = −3/2.

In general some decision a will be chosen in preference to b if the expected utility of a is greater than b. Further-

10

more in the case of a linear utility function with two outcomes we have that a is preferred to b if,

E R(a) ≥ E R(b)

R(a, θ1 ) · P(θ1 ) + R(a, θ2 ) · P(1 − θ1 ) ≥ R(b, θ1 ) · P(θ1 ) + R(b, θ2 ) · P(1 − θ1 )

R(a, θ1 ) − R(b, θ1 ) · P(θ1 ) ≥ − R(a, θ2 ) − R(b, θ2 ) · P(1 − θ1 )

R(a, θ2 ) − R(b, θ2 )

1

P(θ1 )

≥−

=−

1 − P(θ1 )

m

R(a, θ1 ) − R(b, θ1 )

(66)

For our example we have that d2 will be preferred to d6 whenever,

2

P(θ1 )

1

≥−

=

1 − P(θ1 )

−3/2 3

P(θ1 ) ≥ 2/5

(67)

Figure 3: Ex3 Q3 plot of decision pay-offs

3.3.2

Question 3 ii)

Explain carefully which of the decisions (d1 , d2 , . . . , d6 ) can be Bayes decisions under some utility function (assumed strictly increasing in pay-off) and probability value 0 < p(θ) < 1.

A Bayes’ decision d∗ is one that maximizes expected utility maxd E U(d) . Decisions can only be Bayes decisions if there does not exist any other decision which can with certainty provide a pay-off at least as great as the

chosen decision for all outcomes and greater than the chosen decision for at least one outcome. More formally for

some decision di @ d j such that G(d j , θk ) ≥ G(di , θk ) ∀k and G(d j , θk ) > G(di , θk ) for some k.

Possible Bayes decisions can be found by comparing each pair of possible decisions. More intuitively, with

reference to Table 3 decisions can be eliminated by considering each decision and removing any decisions falling

in the lower left hand corner of that decision. The possible Bayes decisions are those left after this elimination

procedure. For instance, in this case we have d2 (which eliminates d1 and d4 ), d5 (which eliminates d3 ) and d6 as

our possible Bayes decisions.

The Bayes decision can only be found once the utility function is known, but will be one of the possible Bayes

decision as above. As in Question 3 ii) knowledge about the form of the utility function may further reduce the

number of possible Bayes decisions.

11

4

Exercise Sheet 4

4.1

Question 1

You are a member of a jury and you want to be rational (you want to maximize your expected utility). There are

essentially 4 combinations of outcomes and decision on which your utility can depend.

- u(0, 0) - Find the suspect is innocent and they are actually innocent.

- u(0, 1) - Find the suspect is innocent and they are actually guilty.

- u(1, 0) - Find the suspect is guilty and they are actually innocent.

- u(1, 1)- Find the suspect is guilty and they are actually guilty.

Assume that4 ,

u(0, 1) ≤ u(1, 0) < u(0, 0) = u(1, 1)

(68)

Write down your expected utility as a function of your posterior probability of guilt and the utilities given above.

If your utilities satisfy this what does this mean?

Let d0 and d1 be respectively the decision to find the suspect innocent and guilty. Furthermore set the boundary utilities as follows - u(0, 1) = 0 and u(0, 0) = u(1, 1) = 1 - and further let u(1, 0) = u for some u ∈ [0, 1].

For shorthand let G and Gc be guilty and innocent respectively, E be evidence given in the trial and denote

p = P(G) and p∗ = P(G|E).

Now we find the expected utilities associated with our decisions (d0 , d1 ) to be,

E U(d0 ) = P(G|E) · u(0, 1) + P(Gc |E) · u(0, 0)

= 0 + (1 − p∗ )

E U(d1 ) = P(G|E) · u(1, 1) + P(Gc |E) · u(1, 0)

= p∗ + (1 − p∗ ) · u

(69)

(70)

Assuming rationality then the suspect should be found guilty iff E U(d1 ) > E U(d0 ) ,

p∗ + (1 − p∗ ) · u > 1 − p∗

p∗

>1−u

1 − p∗

(71)

Prove that in this case you should decide to say guilty only if the following equality holds for some A (some

function of your prior odds of innocence and the utility values) and evaluate A explicitly,

P(Evidence|Guilty)

≥A

P(Evidence|Innocent)

(72)

This follows directly applying Bayes’ Theorem,

P(E|G) P(G|E) · P(Gc )

=

P(E|Gc ) P(Gc |E) · P(G)

p∗ · (1 − p)

(1 − p∗ ) · p

p −1

> (1 − u) ·

1− p

=

(73)

4 This can be interpreted as the worst outcome is that someone is found innocent when actually guilty and the best outcome is you determine

someones guilt or innocence correctly.

12

The decision made depends on two components - the strength of your preferences (u(0, 1)) and your assessment

of the likelihood someone is guilty as opposed to innocent.

4.2

Question 2

A clients’ utility function has three mutually utility independent attributes (m.u.i.a.) where each attribute (Xi ) can

take one of only two values, where Ui (Xi ) = 1 denotes the successful outcome of the ith attribute and Ui (Xi ) = 0

the failed outcome where Ui denotes the clients’ marginal utilities on Xi .

Letting θ x1 ,x2 ,x3 (d) denote the probability that {X1 , X2 , X3 } = {x1 , x2 , x3 } under decision d ∈ D prove that when

P3

i=1 ki = 1 then the expected utility of decision d is,

E U(d) = k1 · θ1,0,0 (d) + k2 · θ0,1,0 (d) + k3 · θ0,0,1 (d)

+ (k1 + k2 ) · θ1,1,0 (d) + (k1 + k3 ) · θ1,0,1 (d) + (k2 + k3 ) · θ0,1,1 (d) + θ1,1,1 (d)

(74)

As it is stated we have m.u.i.a. we can apply multi-attribute utility theory as developed in class (see [?] §6.3 Thm

P

6.9 for more detail). As we have 3i=1 ki = 1 then,

U(d) =

3

X

ki Ui (xi )

(75)

i=1

Noting that Ui (Xi ) has binary outcomes (it’s an indicator variable!, so E(1 x ) = P(X)) then consider,

E U(d) = E

3

X

ki Ui (xi )

i=1

=

3

X

k=1

3

X

ki · E Ui (xi ) =

ki · P(Xi = i)

k=1

= k1 · (θ1,0,0 (d) + θ1,1,0 (d) + θ1,0,1 (d) + θ1,1,1 (d))

+ k2 · (θ0,1,0 (d) + θ1,1,0 (d) + θ0,1,1 (d) + θ1,1,1 (d))

+ k3 · (θ0,0,1 (d) + θ1,0,1 (d) + θ0,1,1 (d) + θ1,1,1 (d))

= k1 · θ1,0,0 (d) + k2 · θ0,1,0 (d) + k3 · θ0,0,1 (d)

+ (k1 + k2 ) · θ1,1,0 (d) + (k1 + k3 ) · θ1,0,1 (d) + (k2 + k3 ) · θ0,1,1 (d) + θ1,1,1 (d)

and when

P3

i=1 ki

(76)

, 1 (letting si, j = ki + k j + K · ki · k j for i , j),

E U(d) = k1 · θ1,0,0 (d) + k2 · θ0,1,0 (d) + k3 · θ0,0,1 (d) + s1,2 · θ1,1,0 (d) + s1,3 · θ1,0,1 (d) + s2,3 · θ0,1,1 (d) + θ1,1,1 (d)

(77)

P

Q

Referring to the case in [?] §6.3 Thm 6.9 where 3i=1 ki , 1, constructing some K (= i (1+ki )−1) (the normalizing

constant which scales U(x) to the range [0, 1]) and denoting ri = K · ki then,

1 Y

ri · Ui (xi ) + 1 − 1

(78)

U(d) =

K i

13

Again as Ui (Xi ) has binary outcomes it’s an indicator variable and so,

3

Y

K · E U(d) = E

ri · Ui (xi ) + 1 − 1

i=1

=

3

X

i=1

=

3

X

i=1

3

X

ri · E[Ui (xi )] +

ri · r j · E[Ui (xi ) · U j (x j )] + r1 · r2 · r3 · E[U1 (X1 ) · U2 (X2 ) · U3 (X3 )]

i, j=1

ri · P(Xi = 1) +

3

X

ri · r j · P(Xi = 1, X j = 1) + r1 · r2 · r3 · P(X1 = 1, X2 = 1, X3 = 1)

i, j=1

= r1 · (θ1,0,0 (d) + θ1,1,0 (d) + θ1,0,1 (d) + θ1,1,1 (d)) + . . .

+ r1 · r2 · (θ1,1,0 (d) + θ1,1,1 (d)) + . . . + r1 · r2 · r3 · θ1,1,1 (d)

so by dividing through by K and simple re-arrangement we find our result as desired,

E U(d) = k1 · θ1,0,0 + k2 · θ0,1,0 + k3 · θ0,0,1 + s1,2 · θ1,1,0 + s1,3 · θ1,0,1 + s2,3 · θ0,1,1 + θ1,1,1

(79)

(80)

Discuss how this responds to different values of criterion weights.

Noting that si, j = ki + k j + K · ki · k j for i , j we see that as K → 0 we obtain a neutral position whereas

K < 0 down-weights and K > 0 up-weights the gain from having two successes.

4.3

Question 3

Let D x U(x) and D2x U(x) represent respectively the first and second derivatives of U(x) and assume U(x) is strictly

increasing in x ∀x.

Suppose your client tells you the following - If she is offered a choice to add to her current fortune an amount x

for certain and she finds this equivalent to a gamble which gives a reward x + h with probability α and x − h with

probability 1 − α (for some h , 0) then her value of α(h) may depend on h but does not depend on x.

Letting λ(h) =

1 − 2 · α(h)

prove that,

h · α(h)

U(x) − U(x − h) U(x + h) − 2 · U(x) + U(x − h) λ(h) ·

=

h

h2

(81)

Equivalence of the gambles implies their expected utilities are the same,

U(x) = α(h) · U(x + h) + (1 − α(h)) · U(x − h)

(82)

Hence,

U(x) − α(h) · U(x) − (1 − α(h)) · U(x − h) = α(h) · U(x + h) − α(h) · U(x)

(1 − α(h)) · (U(x) − U(x − h)) = α(h) · (U(x + h) − U(x))

(1 − 2α(h)) · (U(x) − U(x − h)) = α(h) · (U(x + h) − 2U(x) + U(x − h))

1 − 2 · α(h) U(x) − U(x − h) U(x + h) − 2U(x) + U(x − h)

·

=

h · α(h)

h

h2

U(x) − U(x − h) U(x + h) − 2 · U(x) + U(x − h) λ(h) ·

=

h

h2

(83)

Prove that her utility function U(x) must either:- be linear in the case where λ = limh→0 λ(h) = 0; or; otherwise

14

satisfy λD x U(x) = D2x U(x) and take the form U(x) = A + B exp(λx) λ , 0 (by noting that for any continuous

f (x)

differentiable function f (x) that D x log f (x) = Dfx (x)

)

In the case of linearity simply consider (83). Now if λ = limh→0 λ(h) = 0 we have for all x that,

U(x + h) − 2U(x) + U(x − h) #

2

=0

D x U(x) = lim

h→0

h2

(84)

Implying that U(x) is linear.

Considering (83) in the case where limh→0 λ(h) , 0, recalling λD x U(x) = D2x U(x) and applying the identity

in the question by letting f (x) = D x U(x) we have that if D2x U(x) , 0 then,

D x [D x U(x)] D2x U(x)

D x [log D x U(x) ] =

=

=λ

Dx U x

Dx U x

log D x U(x) = β + λx

(85)

D x U(x) = exp{β + λx}

U(x) = A + B exp{λx}

(86)

where A and B are some constants and noting that to ensure an increasing utility function B must be of the same

sign as λ. Note that this characterization is is the same for small and large values of h.

5

5.1

Exercise Sheet 5

Question 1

For the purposes of assessing probabilities given by weather forecasters you choose to use the Brier scoring rule

S 1 (a, q) where,

S 1 (a, q) = (a − q)2

(87)

You suspect however that for some λ > 0 that your clients’ utility function is not linear but is instead of the form,

U(S 1 ) = 1 − S 1λ

(88)

Prove that if this assertion is true that your client will quote q = p for all values of p ⇐⇒ λ = 1.

Prove that if λ > 1/2 and you are able to elicit the value of λ then you are able to express the clients’ true

probability p as a function of their quoted probability q. Write down this function explicitly and explain in what

sense when 1/2 < λ < 1 your client will appear overconfident and if λ > 1 under-confident in her probability

predictions.

Prove that if 0 ≤ λ ≤ 1/2 it will only be possible to determine from her quoted probability whether or not

she believes that p ≤ 1/2.

Note: a is some indicator variable representing the binary outcome of rain or no rain.

We are interested in the question - What q will the client quote when p is the true probability?

If the client is rational they will attempt to maximize their expected utility,

E(U(S 1 )) = P(a = 0) · U S 1 (0, q) + P(a = 1) · U s1 (1, q)

= (1 − p) · U(q2 ) + p · U (1 − q)2

= (1 − p) · (1 − q2λ ) + p · (1 − (1 − q)2λ )

15

(89)

Now, finding the q which maximizes (89) we have,

d

E U(S 1 ) = (1 − p) · (−2λq2λ−1 ) + p · (2λ(1 − q)2λ−1 ) = 0

dq

(90)

which holds whenever λ , 1/2 and p , 0, 1 iff,

q 2λ−1

p

=

1− p

1−q

log(p) − log(1 − p) = (2λ − 1) · [log(q) − log(1 − q)]

q 2λ−1

p=

1−q

q 2λ−1

1+

1−q

(91)

(92)

(93)

Checking the second derivative we have,

−2λ · (2λ − 1)[(1 − q)2λ−2 · p + q2λ−2 · (1 − p)]

which clearly shows the stationary point is a maximum iff λ >

q = p ⇐⇒ λ = 1.

Considering (91) note that for

1

2

1

2.

(94)

Furthermore considering (93) note that

< λ < 1 then5 ,

q −

1 > p −

2 1 2

(95)

which can be interpreted as the client will make their forecasts closer to 0 or 1 than they should be. On the other

hand if λ > 1 then,

q − 1 < p − 1 (96)

2 2

which can be interpreted as the statements will be more conservative.

Considering the case where 0 ≤ λ ≤ 21 , note that (94) will imply the stationary points will occur at either 0

or 1, and as such if p > 12 then to maximize utility then q = 1 will be chosen and if q < 12 then q = 0 will be

chosen.

5.2

Question 2

On each of 1000 consecutive days, two probability forecasters F1 and F2 state one of 6 possible outcomes which

represent the probabilities that rain will occur the next day {q(1) = 0, q(2) = 0.2, q(3) = 0.4, q(4) = 0.6, q(5) =

0.8, q(6) = 1}. The results of their selection and actual occurence of rain is given in Table 5.

5.2.1

Question 2 i)

Demonstrate which, if either, of the two forecasters are well calibrated and calculate each of their empirical Brier

scores. Who has the better score?

1

p

q

< 1, which in turn implies that as

has

1− p

1−q

p

1

1

q

been risen to a power less than 1 that

<

, meaning that q and 1 − q are further from one another, implying − q > − p. On the

1−q 1− p

2

2

p

q

q

p

other hand if p > 1/2 then

> 1, which in turn implies that as

has been risen to a power less than 1 that

>

, meaning

1− p

1−q

1−q

1− p

1

1

that q and 1 − q are closer to one another, implying q − > p −

2

2

5 To

see this note that if λ ∈

2

, 1 , then 2λ − 1 ∈ (0, 1). Now fixing λ, if p < 1/2 then

16

q1 (i)

0.0

0.2

0.4

0.6

0.8

1.0

0.0

40 − (0)

20 − (0)

10 − (0)

10 − (0)

20 − (0)

0 − (0)

0.2

20 − (0)

20 − (0)

10 − (0)

10 − (0)

20 − (0)

20 − (20)

q2 ( j)

0.4

0.6

60 − (0)

60 − (0)

60 − (0)

60 − (0)

30 − (0) 30 − (30)

30 − (0) 30 − (30)

60 − (60) 60 − (60)

60 − (60) 60 − (60)

0.8

20 − (0)

20 − (20)

10 − (10)

10 − (10)

20 − (20)

20 − (20)

1.0

0 − (0)

20 − (20)

10 − (10)

10 − (10)

20 − (20)

40 − (40)

Table 5: Forecaster rain probability selections and occurrence of rain. a − (b) represents selection of outcome

{q1 (i), q2 ( j)} a-times, and rain occurring b-times whenever this selection was made.

q2 ( j)

q1 (i)

0.0

0.2

0.4

0.6

0.8

1.0

P

0.0

40 − (0)

20 − (0)

10 − (0)

10 − (0)

20 − (0)

0 − (0)

100 − (0)

0.2

20 − (0)

20 − (0)

10 − (0)

10 − (0)

20 − (0)

20 − (20)

100 − (20)

0.4

60 − (0)

60 − (0)

30 − (0)

30 − (0)

60 − (60)

60 − (60)

300 − (120)

0.6

60 − (0)

60 − (0)

30 − (30)

30 − (30)

60 − (60)

60 − (60)

300 − (180)

0.8

20 − (0)

20 − (20)

10 − (10)

10 − (10)

20 − (20)

20 − (20)

100 − (80)

1.0

0 − (0)

20 − (20)

10 − (10)

10 − (10)

20 − (20)

40 − (40)

100 − (100)

P

200 − (0)

200 − (40)

100 − (50)

100 − (50)

200 − (160)

200 − (200)

1000 − (500)

Table 6: Marginalized Table 5.

With reference to Table 6 which contains marginal counts note that the second forecaster (F2 ) is well calibrated

ˆ = 50/100 = 0.5

ˆ = 0.6

as for every quote q = q̂. The first forecaster (F1 ) is not well calibrated as 0.4

Denoting for the ith prediction ai as the actual outcome (binary), fi as the forecasted probability and N as the

total number of predictions then the Brier score is simply,

N

1 X

(ai − fi )2

N i=1

(97)

F1 ’s Brier score is

1000

1 X

(ai − fi )2 = 200 (0/200) · (1 − 0)2 + (200/200) · (0 − 0)2

1000 i=1

+ 200 (40/200) · (1 − 0.2)2 + (160/200) · (0 − 0.2)2

+ 100 (50/100) · (1 − 0.4)2 + (50/100) · (0 − 0.4)2

+ 100 (50/100) · (1 − 0.6)2 + (50/100) · (0 − 0.6)2

+ 200 (160/200) · (1 − 0.8)2 + (40/200) · (0 − 0.8)2

+ 200 (200/200) · (1 − 1)2 + (0/200) · (0 − 1)2 /1000

= 0.116

(98)

17

F2 ’s Brier score is

1000

1 X

(ai − fi )2 = 100 (0/100) · (1 − 0)2 + (100/100) · (0 − 0)2

1000 i=1

+ 100 (20/100) · (1 − 0.2)2 + (80/100) · (0 − 0.2)2

+ 300 (120/300) · (1 − 0.4)2 + (180/300) · (0 − 0.4)2

+ 300 (180/300) · (1 − 0.6)2 + (120/300) · (0 − 0.6)2

+ 100 (80/100) · (1 − 0.8)2 + (20/100) · (0 − 0.8)2

+ 100 (100/100) · (1 − 1)2 + (0/100) · (0 − 1)2 /1000

= 0.176

(99)

F1 ’s score is better as it is lower, despite F1 being uncalibrated.

Adapt the forecasts of any uncalibrated forecaster so that their empirical Brier score improves and calculate the

extent of this improvement.

Merging q1 (i) = 0.4 and q1 (i) = 0.6 into q1 (i) = 0.5 in Table 6 will result in F1 being well calibrated and

will reduce the Brier score by 0.01 (2 · 50 · 0.62 + 2 · 50 · 0.42 − 200 · 0.52 )/200 .

5.2.2

Question 2 ii)

It is suggested to you that improvements to the forecast could be achieved by combining in some way the two

forecasts. Find a probability formula which gives an empirical Brier score of zero based on the data above.

Notice that in Table 6 jointly F1 and F2 can predict the rain with certainty. Simplifying Table 6 to Table 7

we see that if jointly F1 and F2 predict rain then with probability of 1 predict rain, otherwise predict rain with

probability of 0. The joint prediction is well calibrated and has a Brier score of 0.

q1 (i)

0.0

0.2

0.4

0.6

0.8

1.0

0.0

No Rain

No Rain

No Rain

No Rain

No Rain

No Rain

0.2

No Rain

No Rain

No Rain

No Rain

No Rain

Rain

q2 ( j)

0.4

0.6

No Rain No Rain

No Rain No Rain

No Rain

Rain

No Rain

Rain

Rain

Rain

Rain

Rain

0.8

No Rain

Rain

Rain

Rain

Rain

Rain

1.0

No Rain

Rain

Rain

Rain

Rain

Rain

Table 7: Simplified Joint Forecast.

6

6.1

Exercise Sheet 6

Recap - Relevance

- P0 (Independence) - X ⊥

⊥ Y ⇐⇒ pX,Y (x, y) = pX (x)pY (y) = pY (y)pX (x) ⇐⇒ Y ⊥

⊥X

- P1 (Functional Dependence) - For some X, Y, Z then Y ⊥

⊥ X|(Z, Y) (“knowing something”)

- P2 (Symmetry) - For some X, Y, Z then X ⊥

⊥ Y|Z ⇐⇒ pX,Y|Z (x, y|z) = pX|Z (x|z)pY|Z (y|z) = pY|Z (y|z)pX|Z (x|z) =

pY,X|Z (y, x|z) ⇐⇒ Y ⊥

⊥ X|Z

18

- P3 (Perfect Composition) - For some X, Y, Z, W then X ⊥

⊥ (Y, Z)|W ⇐⇒ X ⊥

⊥ Y|(W, Z) & X ⊥

⊥ Z|W

6.2

Question 1

One alternative to the usual definition of irrelevance might be to say Y is irrelevant for forecasting X given the

information in Z if the expectation of X depended on {Y, Z} only through it’s dependence on Z. Check whether

properties P1 and P2 hold under this definition.

Noting that as X ⊥

⊥ Y|Z ⇐⇒ pX,Y|Z (x, y|z) = pX|Z (x|z)pY|Z (y|z), we have that Bayes rule gives pX|Y,Z (x|y, z) =

pX,Y|Z (x,y|z)pZ (z)

pX|Z (x|z)pY|Z (y|z)

pX,Y,Z (x,y,z)

= pX|Z (x|z) so as a result,

pY,Z (y,z) = pY|Z (y|z)pZ (z) =

pY|Z (y|z)

E(X|Y, Z) :=

Z

x · pX|Y,Z (x|y, z) dx =

Z

x · pX|Z (x|z) dx =: E(X|Z)

(100)

In addition we have X ⊥

⊥ Y|Z ⇐⇒ Y ⊥

⊥ X|Z

Note however that considering Table 8 we have that E(Z|X, Y) = E(Z|Y) but E(X|Z, Y) , E(X|Z).

Z

0

1

−1

0

1/4

X=Y

0

1

1/2

0

0

1/4

Table 8: Outcome Table

6.3

Question 2 - Simpson’s Paradox

As the statistical meaning of “independence” is not the same as its colloquial meaning this leads to an elementary

but plausible error made by scientists is to believe that if X is independent of Y given W then X must be independent of Y. Construct a simple joint distribution over three binary random variables {X, Y, W} to show that this can’t

be deduced in general.

Consider the following joint mass function,

P(w = 0) = P(w = 1) = 1/2

P(x = 0|w = 0) = 1 − P(x = 1|w = 0) = 2/3

P(x = 0|w = 1) = 1 − P(x = 1|w = 1) = 1/3

P(y = 0|w = 1) = 1 − P(y = 1|w = 1) = 1/5

P(y = 0|w = 0) = 1 − P(y = 1|w = 0) = 4/5

Clearly we have,

P(x = 0) = P(x = 1) = P(y = 0) = P(y = 1) = 1/2

Considering the conditional joint mass function we have,

P(x = 0, y = 0, w = 0)

P(w = 0)

P(x = 0|y = 0, w = 0)P(y = 0|w = 0)P(w = 0)

=

P(w = 0)

= P(x = 0|w = 0)P(y = 0|w = 0)

P(x = 0, y = 0|w = 0) =

= 2/3 · 4/5 = 8/15

... = ...

19

As a result we have the following joint mass function,

P(x = 0, y = 0) = P(x = 0, y = 0, w = 0) + P(x = 0, y = 0, w = 1)

= P(x = 0|y = 0, w = 0)P(y = 0|w = 0)P(w = 0) + P(x = 0|y = 0, w = 1)P(y = 0|w = 1)P(w = 1)

= P(x = 0|w = 0)P(y = 0|w = 0)P(w = 0) + P(x = 0|w = 1)P(y = 0|w = 1)P(w = 1)

= 2/3 · 4/5 · 1/2 + 1/3 · 1/5 · 1/2 = 3/10

, 1/4 = P(x = 0)P(y = 0)

P(x = 0, y = 1) = . . . = 2/3 · 1/5 · 1/2 + 1/3 · 4/5 · 1/2 = 1/5 , 1/4 = P(x = 0)P(y = 1)

P(x = 1, y = 0) = . . . = 1/3 · 4/5 · 1/2 + 2/3 · 1/5 · 1/2 = 1/5 , 1/4 = P(x = 1)P(y = 0)

P(x = 1, y = 1) = . . . = 1/3 · 1/5 · 1/2 + 2/3 · 4/5 · 1/2 = 3/10 , 1/4 = P(x = 1)P(y = 1)

Exhaustive checking of each outcome shows X ⊥

⊥ Y|W but we don’t have X ⊥

⊥ Y.

Why must it be true if, in addition, either X or Y is independent of W?

We have,

X⊥

⊥ W ⇐⇒ pX,W (x, w) = pX (x)pW (w)

X⊥

⊥ Y|W ⇐⇒ pX,Y|W (x, y|w) = pX|W (x|w)pY|W (y|w)

Now,

pX,Y (x, y) =

X

pX,Y,W (x, y, w) =

W

=

X

pX,Y|W (x, y|w)pW (w)

W

X

pX|W (x|w)pY|W (y|w)pW (w)

W

=

X

pX,W (x, w)pY|W (y|w)

W

=

X

pX (x)pW (w)pY|W (y|w) = pX (x)

X

pW (w)pY|W (y|w)

W

W

= pX (x)

X

pY,W (y, w) = pX (x)pY (y) ⇐⇒ X ⊥

⊥Y

W

6.4

Question 3 - Positivity

Another axiom of irrelevance which is sometimes used is to demand that if Y is irrelevant about X once Z is known

and Z is irrelevant about X once Y is known then the pair {Y, Z} must be irrelevant to X.

By considering the case X = Y = Z show that this can’t always be deduced for the usual conditional independence

definition of irrelevance. Show however that when {X, Y, Z} have a discrete distribution and that all combinations

of these variables are possible (i.e. P(X = x, Y = y, Z = z) > 0 ∀ possible x, y, z) then this property will hold for

conditional independence.

Considering X = Y = Z we have X ⊥

⊥ X|X, however we clearly don’t have X 6⊥

⊥ (X, X) as they are equal!

As a result this can’t always be deduced.

Now considering the case where X, Y, Z are distrete and the joint density is positive for all x, y, z recall that,

X⊥

⊥ (Y, Z) ⇐⇒ pX,Y,Z (x, y, z) = pX (x)pY,Z (y, z)

(101)

Noting that X ⊥

⊥ Y|Z ⇐⇒ pX,Y|Z (x, y|z) = pX|Z (x|z)pY|Z (y|z) we have,

pX,Y,Z (x, y, z) = pX|Z (x|z)pY|Z (y|z)pZ (z)

= pX|Z (x|z)pY,Z (y, z)

20

(102)

Similarily X ⊥

⊥ Z|Y ⇐⇒ pX,Z|Y (x, z|y) = pX|Y (x|y)pZ|Y (z|y) means we have,

pX,Y,Z (x, y, z) = pX|Y (x|y)pZ|Y (z|y)pY (y)

= pX|Y (x|y)pY,Z (y, z)

(103)

Equating (101), (102) and (103) we have pX (x) = pX|Z (x|z) = pX|Y (x|y) and so,

pX,Y (x, y) = pX (x)pY (y) ⇐⇒ X ⊥

⊥Y

(104)

pX,Z (x, z) = pX (x)pZ (z) ⇐⇒ X ⊥

⊥Z

(105)

Together (104) and (105) imply X ⊥

⊥ (Y, Z).

7

7.1

Exercise Sheet 7

Question 1

A Markov chain {Xt }nt=1 has the property that ∀ i ∈ {3, . . . , n} Xi |Xi−1 ⊥

⊥ {Xt }i−2

t=1 . Representing this as an influence

6

diagram, use d-Separation to prove the following,

The DAG of this process takes the form of that in Figure 4 below,

◦X1

/ ◦X2

/ ◦Xi−2

/ ◦Xi−1

/ ◦Xi

/ ◦Xi+1

/ ◦Xi+2

/ ◦Xn−1

/ ◦Xn

Figure 4: Ex7 Q1 - DAG

The DAG in Figure 4 is clearly decomposable so we can check for irrelevance by considering seperation on the

moralized and undirected version of this graph as in Figure 5,

◦X1

◦X2

◦Xi−2

◦Xi−1

◦Xi

◦Xi+1

◦Xi+2

◦Xn−1

◦Xn

Figure 5: Ex7 Q1 - Moralized & Undirected

7.1.1

Question 1a)

R1 (Xi−1 ) ⊥

⊥ R2 (Xi−1 )|Xi−1 , where R1 (Xi−1 ) = {X1 , . . . , Xi−2 } and R2 (Xi−1 ) = {Xi , . . . , Xn } (i.e our predictions about

the future of the process depends only on the last observation).

As shown in Figure 6 R1 is clearly seperated from R2 by Xi−1 and so R1 ⊥

⊥ R2 |Xi−1 ,

◦X1

◦X2

◦Xi−2

•Xi−1

◦Xi

◦Xi+1

◦Xi+2

◦Xn−1

◦Xn

Figure 6: Ex7 Q1a

7.1.2

Question 1b)

P⊥

⊥ Xi |(Xi−1 , Xi+1 ) for 2 ≤ i ≤ n − 1 and P = {X1 , X2 , . . . , Xi−2 , Xi+2 , . . . , Xn } (i.e. given the variables preceeding

and suceeding Xi no other observation gives further relevant information about Xi ).

As shown in Figure 7 P is seperated from Xi given Xi−1 and Xi+1 so P ⊥

⊥ Xi |(Xi−1 , Xi+1 ),

6 d-Seperation Theorem: Let X, Y, Z be disjoint sets of nodes in a valid DAG - G. Then Y is irrelevant for predictions about X|Z is a valid

deduction from G iff in the undirected version of the moralized graph G[S ](m) of the ancestral DAG on the set S = {X, Y, Z}, Z seperates X

from Y.

21

◦X1

◦X2

◦Xi−2

•Xi−1

◦Xi

•Xi+1

◦Xi+2

◦Xn−1

◦Xn

Figure 7: Ex7 Q1b

7.2

Question 2

The DAG of a valid influence diagram is given in Figure 8. Use the d-Separation theorem to check the following

irrelevance statements,

◦XO 8

< ◦XO 7

◦XO 5 b

◦XO 6

◦XO 3

/ ◦X4

O

◦X1

/ ◦X2

Figure 8: Ex7 Q2 - DAG

To begin lets find the corresponding moralized undirected graph as shown in Figure 9,

i) - Moralized

ii) - Undirected

◦XO 8

8 ◦XO 7

◦X8

◦X7

◦XO 5 f

◦XO 6

◦X5

◦X6

◦XO 3

/ ◦X4

O

◦X3

◦X4

◦X1

/ ◦X2

◦X1

◦X2

Figure 9: Ex7 Q2 - Undirected moralized graph

7.2.1

Question 2a)

(X3 , X4 ) ⊥

⊥ X7 |(X5 , X6 )

As shown in Figure 10 removal of X5 and X6 results in X3 and X4 being seperated from X7 , so we can conclude

that (X3 , X4 ) ⊥

⊥ X7 |X5 , X6

7.2.2

Question 2b)

X2 ⊥

⊥ X3 |(X1 , X4 )

22

◦X8

◦X7

•X5

•X6

◦X3

◦X4

◦X1

◦X2

Figure 10: Ex7 Q2a

As shown in Figure 11 removal of X1 and X4 still leaves an edge between X2 and X3 , so we can conclude that

X2 6⊥

⊥ X3 |(X1 , X4 )

◦X8

◦X7

◦X5

◦X6

◦X3

•X4

•X1

◦X2

Figure 11: Ex7 Q2b & Q2c

7.2.3

Question 2c)

X2 ⊥

⊥ (X5 , X8 )|(X1 , X4 )

As shown in Figure 11 removal of X1 and X4 leaves X1 connected to X5 and X8 via X3 , so we can conclude

that X2 6⊥

⊥ (X5 , X8 )|(X1 , X4 )

7.3

Question 3

Let W = (X, Y, Z) have a multivariate normal distribution with covariance matrix V of full rank and write (noting

that P12 = P21 , P13 = P31 and P23 = P32 ),

P11 P12 P13

V −1 = P = P21 P22 P23

(106)

P31 P32 P33

By considering the form of the log joint density of W show that X ⊥

⊥ Y|Z ⇐⇒ P12 is the matrix of all zeros.

23

Denoting n as the dimension of w we have,

¯

1

1

T −1

exp

−

(w

−

µ

)

V

(w

−

µ

)

f (w) =

w

w

2 ¯

(2π)n/2 |V|1/2

¯

¯

n

1

1

log( f (w)) = − log(2π) − log(V) − (w − µw )T V −1 (w − µw )

2

2

2 ¯

¯

¯

−2 log( f (x, y, z)) = n log(2π) − log(P)

+ (x − µ x )T P11 (x − µ x ) + (x − µ x )T P12 (y − µy ) + (x − µ x )T P13 (z − µz )

+ (y − µy )T P21 (x − µ x ) + (y − µy )T P22 (y − µy ) + (y − µy )T P23 (z − µz )

+ (z − µz )T P31 (x − µ x ) + (z − µz )T P32 (y − µy ) + (z − µz )T P33 (z − µz )

fX,Y,Z (x, y, z)

, we have −2 log( fX,Y|Z (x, y|z)) = −2 log( fX,Y,Z (x, y, z)) + 2 log( fZ (z))

fZ (z)

and as a result we can simply split our conditional density into components which depend on z and those which

depend only on x and y,

Now as we have fX,Y|Z (x, y|z) =

−2 log( f (x, y|z)) = τ(z) + τ(x, z) + τ(y, z) + τ(x, y)

(107)

where,

τ(z) = (z − µz )T P33 (z − µz ) + 2 log( fZ (z))

τ(x, z) = (x − µ x )T P11 (x − µ x ) + (x − µ x )T P13 (z − µz ) + (z − µz )T P31 (x − µ x )

τ(y, z) = (y − µy )T P22 (y − µy ) + (y − µy )T P23 (z − µz ) + (z − µz )T P32 (y − µy )

τ(x, y) = (x − µ x )T P12 (y − µy ) + (y − µy )T P21 (x − µ x )

(108)

Recalling that we have X ⊥

⊥ Y|Z then we similarly have,

−2 log( f (x, y|z)) = −2 log( f (x|z) f (y|z)) = −2 log( f (x|z)) − 2 log( f (y|z)) = τ(z) + τ(x, z) + τ(y, z)

(109)

Equating the results we conclude that X ⊥

⊥ Y|Z ⇐⇒ τ(x, y) = 0 ⇐⇒ P12 = P21 = 0.

8

8.1

Exercise Sheet 8

Question 1

Elementary particles are emitted independently from a nuclear source. If X1 denotes the time before the first

emission, in minutes, and Xi denotes the time between the emission of the (i − 1)th and the ith particle (i =

1, 2, 3, 4, . . . ) we know that the density f (x|θ) of each Xi is given by,

(

θ exp{−θx}

x > 0, y > 0

f1 (x|θ) =

(110)

0

otherwise

When you first obtain the data you find that you have only been given the number y j of emissions in the interval

( j − 1, j]. You know, however, that from the above Y j ( j = 1, 2, . . . ) are independent with the following mass

function,

y

θ

exp{−θ}

y = 0, 1, 2, . . . θ > 0

(111)

f2 (y|θ) =

y!

0

otherwise

You take in observations and your last observation arrives exactly on the nth minute. Your prior distribution for θ

was a Gamma distribution G(α∗ , β∗ ), with density π(θ) where,

( α∗ −1

θ

exp{−β∗ θ}

θ, α, β > 0

π(θ) ∝

(112)

0

otherwise

24

Find your posterior distribution for θ,

i) using y1 , . . . , yn

ii) using x1 , . . . , xn

and show that the two analyses give identical inferences on θ.

Calculating the likelihoods of i) and ii) and noting that the sum of the particle emissions in each period will

P

produce the total number emissions ( ni=1 yi = m) and that the sum of time between emissions to some time n

P

where an emission occurs will produce the length of the total interval ( m

i=1 xi = n), we have (for the parameters

conforming to the above restrictions),

L(θ|y) =

¯

∝

L(θ|x) =

¯

=

n

Y

i=1

n

Y

i=1

m

Y

i=1

m

Y

f2 (yi |θ)

θyi exp{−θ} = θ

Pn

i=1 yi

exp{−nθ} = θm exp{−nθ}

(113)

f2 (xi |θ)

(

θ exp{−θxi } = θ exp −

m

i=1

m

X

)

xi θ = θm exp{−nθ}

(114)

i=1

Noting that the prior doesn’t contain any data then,

π(θ|x) = π(θ|y) ∝ L(θ|y)π(θ)

¯

¯

¯ ∗

= θm θ−θn θα −1 exp{−β∗ θ}

∗

= θm+α −1 exp{−θ(n + β∗ )}

(115)

Noting that as we have a density our posterior will integrate to 1 and further noting that we have the same form as

the Gamma distribution earlier we conclude that our posterior has a G(m + α∗ , n + β∗ ) distribution.

If α∗ > 1 express the posterior mode of θ as a weighted average of y and the prior mode (where the weight ρ

depends only on β∗ /n).

Denoting m1 as the posterior mode and m0 as the prior mode, and further noting that for a G(α, β) distribution

the mode m = α−1

β , we have,

m + α∗ − 1

n + β∗

m n

α∗ − 1 β∗

=

+

n n + β∗

β∗ n + β∗

m1 =

= ȳρ + m0 (1 − ρ)

25

where ρ =

n

n + β∗

(116)

8.2

Question 2

8.2.1

Question 2a)

Show that if P = {p(θ, α) : α ∈ A ⊆ Rm } is a family closed under sampling7 to the likelihood L(θ|x) then so is the

family,

(

)

k

k

X

X

Pk = p(θ, α) =

γi pi (θ, α) :

γi = 1 γi > 0 pi ∈ P 1 ≤ i ≤ k

(117)

i=1

i=1

P

Let p(θ) ∈ Pk we have p(θ) = ki=1 γi pi (θ) where pi (θ) ∈ P and denoting τ(x) as some normalizing constant

then note that by applying Bayes rule we have,

p(θ|x) = p(θ)L(θ|x)τ−1 (x) =

k

X

γi pi (θ)L(θ|x)τ−1 (x)

i=1

=

k

X

γi τi (x)pi (θ|x)τ−1 (x)

i=1

=

k

X

β∗i pi (θ|x)

where β∗i =

i=1

γi τi (x)

τ(x)

(118)

Now as pi (θ|x) ∈ P (P is closed under sampling) we have Pk is closed under sampling.

8.2.2

Question 2b)

Suppose random variables X1 , . . . , Xn constitute a random sample from a rectangular distribution (continuous

uniform distribution) with density f (x|θ) given by,

( −1

θ

0<x<θ

f (x|θ) =

(119)

0

otherwise

Letting

(

pi (θ) =

αθiα θ−(α+1)

0

θ > θi where α, θi > 0

otherwise

If a prior density of the form p(θ) of the form

(

βp1 (θ) + (1 − β)p2 (θ)

p(θ) =

0

0<β<1

otherwise

i = 1, 2

(120)

θ1 < θ2

(121)

is used, find the posterior density p(θ|x) of θ.

Defining x∗ = maxn xi then

(

L(θ|x) =

θ−n

0

θ > x∗

otherwise

(122)

Letting θi∗ = maxn (x∗ , θi ) (recall the i subsript relates to the ith prior!) Then we find our ith posterior,

(

αθiα θ−(α+1) θ−n

θ > θi∗

pi (θ|x) ∝

0

otherwise

R

(123)

7 Closed Under Sampling (See [?] §5.4 Thm 5.3): A family of prior distributions P = {p(θ|α) : α ∈ A ⊆

n } is closed under sampling for

a likelihood L(θ|x) if for any prior p(θ) ∈ P the corresponding posterior distribution p(θ|x) ∈ P

Intuition: It is convenient if the prior and posterior have the same distribution form as it typically results in more tractibility, the normalizing

constant ’for free’ and some understanding on the contributory weighting effect of the prior and the data.

Intuition in this question: Think of Pk as a richer form built through mixing.

26

Noting the form of pi (θ) above we can find the normalizing constant,

(

(α + n)θi∗(α+n) θ−(n+α+1)

θ > θi∗

pi (θ|x) =

0

otherwise

(124)

Explicitly our normalizing constant is,

τi (x) =

αθiα

(125)

(α + n)θi∗(α+n)

Now from Question 1 we have p(θ|x) = β∗1 p1 (θ|x) + (1 − β∗1 )p2 (θ|x) where β∗1 = β1 τ1 (x)τ−1 (x) and β∗2 = (1 − β∗1 ) =

β2 τ2 (x)τ(x) = (1 − β1 )τ2 (x)τ−1 (x). Cancellation gives,

h

i−1

θ1α θ1∗(α+n) β

β∗ = h

i−1

h

i−1

θ1α θ1∗(α+n) β + θ2α θ2∗(α+n) (1 − β)

(126)

Sketch the different samples {x1 , x2 , . . . , xn }.

Letting θ1 < θ2 and noting that θ1∗ and θ2∗ both depend on x∗ , we find that we have 3 cases to consider: (i)

x∗ ≤ θ1 < θ2 ; (ii) θ1 < x∗ ≤ θ2 ; (iii) θ1 < θ2 < x∗ .

Case (i) - x∗ ≤ θ1 < θ2 - Here we have θ1∗ = θ1 and θ2∗ = θ2 and as such,

β∗ =

θ1−n β

→ 1 as n → ∞

θ1−n β + θ2−n (1 − β)

(127)

Figure 12: Ex8 Q2 - Case (i)

Case (ii)- θ1 < x∗ ≤ θ2 - Here we have θ1∗ = x∗ and θ2∗ = θ2 and as such,

β∗ =

θ1α (x∗(α+n) )−1 β

θ1α (x∗(α+n) )−1 β + θ2α (θ2∗(α+n) )−1 (1 − β)

27

→ 1 as n → ∞

(128)

Figure 13: Ex8 Q2 - Case (ii)

Figure 14: Ex8 Q2 - Case (iii)

Case (iii)- θ1 < θ2 < x∗ - Here we have θ1∗ = θ2∗ = x∗ . As a result β∗ = 1/2 and p1 (θ|x) = p2 (θ|x) = p(θ|x).

As indicated in Cases (i)-(iii) we find in Figure 15 that as n increases our posterior density p(θ|x) → p1 (θ|x).

Notice that this change is noticable for even a small number of samples.

Considering the effect of β on the posterior, note that in effect we are simply weighting two densities (in both

the prior and posterior case). Figure 16 demonstrates this effect, in particular we see that as β is increased p2 (θ)

contributes more to the prior p(θ) which in turn changes the posterior p(θ|x).

8.3

Question 3

For integer (α, β) the Beta distribution Be(α, β) has density f (θ|α, β) given by

(α + β − 1)! α−1

θ (1 − θ)β−1

0<θ<1

f (θ|α, β) =

(α

− 1)!(β − 1)!

0

otherwise

(129)

A coin is tossed n times and the number of heads x is counted. You choose a prior of the form,

π(θ) = γ f (θ|k, k) + (1 − γ) f (θ|1, 1)

28

0<γ<1

(130)

Figure 15: Ex8 Q2 - Effect of varying n on posterior

where θ represents the probability of heads and where f (θ|α, β) is defined above and k is a large integer. What sort

of prior belief does this represent?

f (θ|1, 1) is essentially a flat prior on [0, 1] whereas f (θ|k, k) has a density which tightens around 1/2 as k → ∞. In

essence this prior for large k essesntially says with probability γ the coin will be fair and with probability (1 − γ)

heads could form any proportion of the total tosses.

If γ = 1/2 and the number of head observed x = 0, show that the posterior distribution of θ is also a mix

ture of two Beta distributions with posterior weights γ∗ and (1 − γ∗ ) where γ∗ = 1 + t(n) −1 and t(n) =

−1

(n + 1)−1 (n + 2k − 1)(n + 2k − 1) . . . (n + k) (2k − 1)(2k − 1) . . . (k) . Show that for k ≥ 2 then γ∗ → 0 as

n → ∞ and interpret this result.

Denoting f1 (θ) = f (θ|k, k) and f2 (θ) = f (θ|1, 1) then,

f (θ|x = 0) ∝ γ f1 (θ)L1 (θ|x = 0) + (1 − γ) f2 (θ)L2 (θ|x = 0)

= τ1 (x) f1 (θ|x = 0) + τ2 (x) f2 (θ|x = 0)

(131)

Now8 ,

n!

(2k − 1)!

θk−1 (1 − θ)k−1 ·

θ0 (1 − θ)n

(k − 1)!(k − 1)!

0!(n − 0)!

∼ Be(k, k + n)

(2 − 1)!

n!

f2 (θ|x = 0) ∝

θ1−1 (1 − θ)1−1 ·

θ0 (1 − θ)n

(1 − 1)!(1 − 1)!

0!(n − 0)!

∝ θ(1)−1 (1 − θ)(n+1)−1

f1 (θ|x = 0) ∝

∼ Be(1, 1 + n)

(132)

(133)

Estimating the normalizing constants for f1 (θ|x = 0) and f2 (θ|x = 0) is now trivial given their analytic density,

(2k − 1)!

k + (n + k) − 1)!

τ1 (x) =

(k − 1)!(k − 1)!

(k − 1)!((n + k) − 1)!

(2k − 1)!(k + n − 1)!

τ1 (x) =

(k − 1)!(2k + n − 1)!

n!

1

τ2 (x) =

=

(n + 1)! n + 1

8 Noting

that Be(θ|α, β) has pdf

(α + β − 1)! α−1

θ (1 − θ)β−1

(α − 1)!(β − 1)!

29

(134)

(135)

Figure 16: Ex8 Q2 - Effect of varying beta on posterior

We have γ∗ = (1 + t)−1 therefore,

t = (1 − γ∗ )/γ∗

(2k + n − 1)!(k − 1)!

= (n + 1)−1

(k + n − 1)!(2k − 1)!

[(2k + n − 1)(2kn − 1) . . . (k + n)]

= (n + 1)−1

[(2k − 1)(2k − 1) . . . (k)]

n n n

= (n + 1)−1

+1

+ 1 ...

+1

2k − 1

2k − 2

k

2k−1

Y

n

= (n + 1)−1

1+

→ ∞ as n → ∞

r

r=k

(136)

Hence γ∗ → 0 as n → ∞ and as such as the sequence of tails becomes larger more weight is given to the coin

being unfair.

Figure 17 illustrates that for even a moderate sequence of tails the contribution to the posterior of the infor

mative prior f2 (θ) is greatly diminished leaving only a slight ‘hump’ in the density. Note that considering the

30

posterior f2 (θ|x = 0) given the prior f2 (θ) we see that it moves only slightly and has no density for θ = 0.

Figure 17: Ex8 Q3 Illustration of posterior for large n and large k

Investigating the effect on the posterior of an increase in the parameter k of the informative prior f2 (θ) = f (θ|k, k)

in Figure 18 we see that it has the effect of changing the density and emphasising the ‘hump.’ As way of explaining

this phenomenon note that as k increases the posterior f2 (θ|x = 0) given the prior f2 (θ) becomes more concentrated

around a mode which moves progessively more modestly.

Figure 18: Ex8 Q3 Comparison of posterior for varying k

Figure 19 illustrates the changes in the posterior whenever the sequence of tails becomes larger. We find that

density is drawn from the ‘hump’ caused by the informative prior and resulting in it becoming closer to that of the

posterior f1 (θ|x = 0) given the prior f1 (θ) as indicated in the results shown in (133).

31

Figure 19: Ex8 Q3 Comparison of posterior for varying n

32