Sparse Source Localization in Presence of Co-Array Perturbations Ali Koochakzadeh Piya Pal


Sparse Source Localization in Presence of Co-Array

Perturbations

Ali Koochakzadeh

Piya Pal

Dept. of Electrical and Computer Engineering

University of Maryland, College Park

Email: alik@umd.edu

Dept. of Electrical and Computer Engineering

University of Maryland, College Park

Email: ppal@umd.edu

Abstract—New spatial sampling geometries such as nested

and coprime arrays have recently been shown to be capable

of localizing $O(M^2)$ sources using only M sensors. However,

these results are based on the assumption that the sampling

locations are exactly known and they obey the specific geometry

exactly. In contrast, this paper considers an array with perturbed

sensor locations, and studies how such perturbation affects source

localization using the co-array of a nested or coprime array. An

iterative algorithm is proposed in order to jointly estimate the

directions of arrival along with the perturbations. The directions

are recovered by solving a sparse representation problem in each

iteration. Identifiability issues are addressed by deriving the Cramér

Rao lower bound for this problem. Numerical simulations show

that our algorithm successfully recovers the

source directions in the presence of sensor location errors.¹

I. INTRODUCTION

Uniform spatial sampling geometries such as the Uniform Linear Array (ULA) have been studied for decades and are well known to be capable of resolving $O(M)$ sources using M sensors [1], [2]. Recently, new non-uniform array geometries, namely nested [3] and coprime [4] arrays, have been proposed which can dramatically increase the degrees of freedom from $O(M)$ to $O(M^2)$, making it possible to estimate $O(M^2)$ source directions. In these techniques, the vectorized form of the covariance matrix of the received signals is studied, which leads to the concept of virtual co-arrays. Two main approaches have been proposed to use these enhanced degrees of freedom to improve direction-of-arrival (DOA) estimation. The first approach consists of subspace methods, where typical subspace methods for arrays, such as MUSIC, are applied to the spatially smoothed co-array manifold [5]. In the second approach, sparsity is the key to recovering the source directions. In this case, the range of all possible directions is discretized into a grid, and the DOA estimation problem is reformulated as a sparse representation problem [6]–[9]. We review this approach in more detail in Section II-A.

The results established so far are based on an unperturbed array geometry, one in which the sensor locations exactly satisfy the geometrical constraints. Array imperfections, however, are known to severely deteriorate the performance of DOA estimation algorithms [10], [11]. This is mainly due to the strong dependence of these algorithms on the availability of an accurately known array manifold. One of the most common forms of these imperfections is uncertainty about the exact location of the sensors. To address array imperfections and suppress their effect on DOA estimation, many methods have been proposed in the literature, which are known as self-calibration methods. In these methods, the perturbations are modeled as unknown but deterministic parameters, and these parameters are estimated jointly with the directions of the sources. The methods in [11], [12], and [13] resolve the sensor location uncertainty using eigenstructure-based methods. The work in [14] proposes a unified framework to formulate the array imperfections and offers a sparse Bayesian perspective for array calibration and DOA estimation. In all of these methods, the array is assumed to be a ULA, and none of the self-calibration methods in the literature use the concept of co-arrays.

¹ This work was supported by the University of Maryland, College Park.

978-1-4673-7353-1/15/$31.00 ©2015 IEEE

In this paper, we study the effect of random perturbations on

the sensor locations and investigate if it is possible to perform

DOA estimation using a co-array in presence of perturbations.

We use the grid based model to obtain a sparse representation

and propose a sparsity-aware estimation framework to jointly

recover the DOAs and the perturbation errors using an iterative algorithm. We also address identifiability issues for this

framework by explicitly deriving the Cramér Rao bound and

studying conditions under which it exists.

The paper is organized as follows. In Sec II we formulate

our problem. Sec III establishes Cramér Rao based conditions

under which our problem is identifiable. In Sec IV, our proposed approach for jointly estimating the source directions and

perturbations is presented. Sec V elaborates the performance

of our proposed algorithm via numerical simulations. Sec VI

concludes the paper.

Notation: Throughout this paper, matrices are represented by capital bold letters and vectors by lower-case bold letters. $x_i$ denotes the ith entry of a vector x. $x_{a:b}$ represents the (Matlab-style) subvector of x starting at a and ending at b. Similarly, $X_{(a:b,c:d)}$ stands for the submatrix of X consisting of rows a through b and columns c through d. $(\cdot)^*$, $(\cdot)^T$, $(\cdot)^H$ represent the conjugate, transpose, and Hermitian transpose, respectively. ◦, ⊙, ⊗ stand for the Hadamard product, column-wise Khatri-Rao product, and Kronecker product, respectively. $\|\cdot\|_F$ denotes the matrix Frobenius norm. $\|\cdot\|_0$ and $\|\cdot\|$ denote the ℓ0 pseudo-norm and ℓ2 norm of a vector, respectively. vec(·) represents the vectorized form of a matrix. Re{·} returns the real part of a complex-valued number.

II. PROBLEM FORMULATION

We consider an array of M sensors impinged by K narrowband sources with unknown directions θ. Let $y(t) \in \mathbb{C}^M$ represent the vector of the received baseband samples on each sensor, $s(t) \in \mathbb{C}^K$ be the vector of the emitted signals from each source, and w(t) be the additive noise on each sensor, respectively, at the time snapshot t. The sources are assumed to be zero-mean, stationary and pairwise uncorrelated signals. Further, we assume that the noise vector is zero-mean, i.i.d., white with variance $\sigma_w^2$, and uncorrelated with the signal. Assume the sensors are originally designed to be at the locations given by the vector $d \in \mathbb{R}^M$; however, due to deformation of the sensor array, the sensors are actually located at d + δ, in which $\delta \in \mathbb{R}^M$ is an unknown perturbation vector. The received samples can be written as

$$y(t) = A(\theta, \delta)\, s(t) + w(t), \tag{1}$$

in which $A(\theta, \delta) = [a(\theta_1, \delta), \ldots, a(\theta_K, \delta)]$ denotes the array manifold, and $a(\theta_k, \delta) \in \mathbb{C}^M$ is the steering vector for the kth source, whose mth element is $a_m(\theta_k) = e^{j(2\pi/\lambda)(d_m + \delta_m)\sin\theta_k}$. We can further rewrite the array manifold A(θ, δ) as

$$A(\theta, \delta) = A_0(\theta) \circ P(\theta, \delta), \tag{2}$$

where $A_0(\theta)$ is the array manifold as if the sensors were located at their originally designed positions $d_m$, $P(\theta, \delta)$ is the perturbation matrix introduced by the sensor location errors $\delta_m$, and ◦ denotes the Hadamard matrix product. Furthermore, the (m, k)th components of the M × K complex-valued matrices $A_0(\theta)$ and $P(\theta, \delta)$ are $e^{j(2\pi/\lambda) d_m \sin\theta_k}$ and $e^{j(2\pi/\lambda) \delta_m \sin\theta_k}$, respectively. Given the problem setting as stated above, the goal is to jointly estimate both the vectors δ and θ by observing L snapshots of the received samples on the sensors.
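As a quick numerical check of the factorization in (2), the following minimal sketch builds the perturbed manifold and compares it with the Hadamard product of the nominal manifold and the perturbation matrix. The helper name `steering_matrix`, the choice of three source angles, and the convention of measuring positions in half-wavelengths (so that (2π/λ)d becomes πd) are assumptions made for illustration; the array and perturbation pattern are the ones listed in Section V.

```python
import numpy as np

def steering_matrix(pos, theta):
    """M x K matrix with entries exp(j*pi*pos_m*sin(theta_k)).

    Positions are in units of half a wavelength (illustrative convention).
    """
    return np.exp(1j * np.pi * np.outer(pos, np.sin(theta)))

# Nested array and perturbation pattern from Section V; alpha = 0.3.
d = np.array([0, 1, 2, 3, 7, 11, 15, 19], dtype=float)
delta = 0.3 * np.array([0, -0.25, -0.1, 0.1, 0.4, 0.35, 0.45, 0.3])
theta = np.deg2rad([-45.0, 9.0, 36.0])   # hypothetical source directions

A = steering_matrix(d + delta, theta)    # perturbed manifold A(theta, delta)
A0 = steering_matrix(d, theta)           # nominal manifold A0(theta)
P = steering_matrix(delta, theta)        # perturbation matrix P(theta, delta)

# For NumPy arrays, '*' is the elementwise (Hadamard) product.
factorization_error = np.max(np.abs(A - A0 * P))
print(factorization_error)
```

The error is at the level of floating-point rounding, since the exponent of each entry of A is simply the sum of the exponents of the corresponding entries of A0 and P.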

We compute the covariance matrix of the received signals as $R_y = E(yy^H)$. Assuming that the sources are uncorrelated, following [3] the vectorized form of the covariance matrix is

$$z = A_{KR}(\theta, \delta)\, r_{ss} + \sigma_w^2\, \mathrm{vec}(I_M), \tag{3}$$

where $A_{KR}(\theta, \delta) = A(\theta, \delta)^* \odot A(\theta, \delta)$ is the difference co-array manifold, $r_{ss} = [\sigma_1, \sigma_2, \ldots, \sigma_K]$ is the diagonal of $R_s = E(ss^H)$, the covariance matrix of the sources, $I_M$ is the M × M identity matrix, and $z = \mathrm{vec}(R_y)$. The $(m + m'M, k)$-th element of $A_{KR}(\theta, \delta)$ is given by $e^{j(2\pi/\lambda)\sin(\theta_k)(d_m + \delta_m - d_{m'} - \delta_{m'})}$. Thus each column of $A_{KR}(\theta, \delta)$ is characterized by the difference co-array:

$$S_{ca} = \{d_m - d_{m'},\ 1 \le m, m' \le M\}$$

Therefore, $A_{KR}(\theta, \delta)$ acts as a larger virtual array with sensors located at $d_m + \delta_m - (d_{m'} + \delta_{m'})$ for $1 \le m, m' \le M$. In the rest of this paper, without loss of generality, we put the first sensor at the origin. Therefore $d_1 = \delta_1 = 0$, and $\partial/\partial\delta_1 = 0$. It can be easily verified that adding a common bias to the locations of the sensors will not alter the covariance matrix $R_y$.
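The vectorization identity in (3) and the invariance of the covariance matrix to a common shift can both be checked numerically. The sketch below is only illustrative: the helper names, the source powers, the source angles and the shift of 2.5 half-wavelengths are assumptions, and the exact (infinite-snapshot) covariance is used instead of a sample estimate.

```python
import numpy as np

def steering_matrix(pos, theta):
    # Positions in half-wavelengths, so the phase is pi * pos * sin(theta).
    return np.exp(1j * np.pi * np.outer(pos, np.sin(theta)))

def khatri_rao_conj(A):
    """Column-wise Khatri-Rao product A^* (.) A: column k is kron(conj(a_k), a_k)."""
    M, K = A.shape
    return (A.conj()[:, None, :] * A[None, :, :]).reshape(M * M, K)

d = np.array([0, 1, 2, 3, 7, 11, 15, 19], dtype=float)
delta = 0.3 * np.array([0, -0.25, -0.1, 0.1, 0.4, 0.35, 0.45, 0.3])
theta = np.deg2rad([-45.0, 9.0, 36.0])   # hypothetical source directions
powers = np.array([1.0, 0.7, 0.9])       # hypothetical diagonal of R_s
noise_var = 4e-4
M = d.size

A = steering_matrix(d + delta, theta)
R_y = A @ np.diag(powers) @ A.conj().T + noise_var * np.eye(M)

# vec(.) stacks columns, i.e. column-major (Fortran) order.
z = R_y.flatten(order="F")
z_model = khatri_rao_conj(A) @ powers + noise_var * np.eye(M).flatten(order="F")
vec_error = np.max(np.abs(z - z_model))

# Shifting every sensor by the same amount leaves R_y unchanged.
A_shift = steering_matrix(d + delta + 2.5, theta)
R_shift = A_shift @ np.diag(powers) @ A_shift.conj().T + noise_var * np.eye(M)
shift_error = np.max(np.abs(R_y - R_shift))
print(vec_error, shift_error)
```

The shift invariance follows because a common displacement multiplies each column of A by a unit-modulus scalar, which cancels in $A R_s A^H$ when $R_s$ is diagonal.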

A. A Grid Based Model and Role of Co-Array

In order to estimate the directions of arrival, following the same approach as in [7], we use a grid based model. In this setting, the range of all possible directions, [−π/2, π/2], is quantized into a certain number of grid points, $N_\theta$, in order to construct a grid based array manifold $A_{grid}(\delta) \in \mathbb{C}^{M \times N_\theta}$, where each column of this matrix is a steering vector corresponding to a particular direction in the grid. The gridded co-array manifold is given by $A_{ca}(\delta) = A_{grid}(\delta)^* \odot A_{grid}(\delta)$. Clearly, this co-array manifold only depends on δ and the structure of the array. We can now rewrite (3) as

$$z = A_{ca}(\delta)\gamma + \sigma_w^2\, \mathrm{vec}(I_M),$$

where the non-zero elements of $\gamma \in \mathbb{C}^{N_\theta}$ are equal to the corresponding elements of $r_{ss}$, and $I_M$ is the M × M identity matrix. We can further suppress the effect of noise by only considering the off-diagonal elements of the covariance matrix $R_y$, or in vectorized form by removing the rows in z and $A_{ca}(\delta)$ corresponding to m − m′ = 0. Hence, we obtain

$$z_u = A^u_{ca}(\delta)\gamma$$

We assume that K is sufficiently smaller than $N_\theta$ for γ to be sparse. We elaborate on this assumption in the following sections. If the perturbations δ are given, the directions of the sources can be found by recovering the sparse support of γ. The size of the recoverable sparse support (or equivalently, the number of sources, K) fundamentally depends on the Kruskal rank of $A_{grid}(\delta)$. The support can be recovered by solving

$$\min_{\gamma \ge 0} \|\gamma\|_0 \quad \text{s.t.} \quad z_u = A^u_{ca}(\delta)\gamma.$$
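The grid based model can be illustrated with a short sketch that builds the gridded co-array manifold, removes the rows with m = m′, and confirms that the remaining equations are free of the noise term. The uniform angular grid, the helper names, the three on-grid sources and their powers are assumptions chosen for illustration, and the exact covariance is used rather than a sample estimate.

```python
import numpy as np

def steering_matrix(pos, theta):
    # Positions in half-wavelengths, so the phase is pi * pos * sin(theta).
    return np.exp(1j * np.pi * np.outer(pos, np.sin(theta)))

def khatri_rao_conj(A):
    # Column k is kron(conj(a_k), a_k), matching column-major vec(a_k a_k^H).
    M, K = A.shape
    return (A.conj()[:, None, :] * A[None, :, :]).reshape(M * M, K)

d = np.array([0, 1, 2, 3, 7, 11, 15, 19], dtype=float)
delta = 0.3 * np.array([0, -0.25, -0.1, 0.1, 0.4, 0.35, 0.45, 0.3])
M, N_theta, noise_var = d.size, 19, 4e-4

# Assumed grid: N_theta uniformly spaced angles, endpoints +-90 degrees excluded.
grid = np.deg2rad(np.linspace(-90.0, 90.0, N_theta + 2)[1:-1])

gamma = np.zeros(N_theta)
gamma[[4, 10, 13]] = [1.0, 0.7, 0.9]     # hypothetical sources at -45, 9, 36 degrees

A_grid = steering_matrix(d + delta, grid)
A_ca = khatri_rao_conj(A_grid)
R_y = A_grid @ np.diag(gamma) @ A_grid.conj().T + noise_var * np.eye(M)
z = R_y.flatten(order="F")

# Keep only the rows with m != m' (off-diagonal entries of R_y).
off_diag = ~np.eye(M, dtype=bool).flatten(order="F")
z_u, A_ca_u = z[off_diag], A_ca[off_diag]

residual_full = np.max(np.abs(z - A_ca @ gamma))      # noise term on diagonal rows
residual_off = np.max(np.abs(z_u - A_ca_u @ gamma))   # rounding error only
print(residual_full, residual_off)
```

With the exact covariance, the full residual equals the noise variance (it lives entirely on the diagonal rows), while the off-diagonal system is satisfied up to rounding.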

In Absence of Perturbation: It is shown in [15, Thm. 8] that, in the absence of perturbation (δ = 0), the size of the recoverable support of γ satisfies $|\mathrm{Supp}(\gamma)| \le M_{ca}/2$, where $M_{ca}$ is the number of distinct elements in the co-array $S_{ca}$. For a ULA with sensor spacing d, the co-array is given by

$$S_{ca}^{ULA} = \{nd,\ n = -(M-1), \ldots, M-1\}$$

and hence $M_{ca} = O(M)$, whereas for a nested array with an even number of sensors and two levels of nesting

$$S_{ca}^{Nested} = \left\{nd,\ n = -M', \ldots, M';\ M' = \frac{M}{2}\left(\frac{M}{2} + 1\right) - 1\right\}$$

and hence $M_{ca} = O(M^2)$.
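To make the difference in co-array size concrete, the sketch below counts the distinct pairwise differences for the two unperturbed 8-sensor arrays used in Section V (positions in units of half a wavelength). The helper name `coarray` and the rounding to nine decimals are illustrative choices.

```python
import numpy as np

def coarray(pos):
    """Sorted distinct pairwise differences d_m - d_m'."""
    pos = np.asarray(pos, dtype=float)
    diffs = np.subtract.outer(pos, pos).ravel()
    return np.unique(np.round(diffs, 9))

ula = np.arange(8)
nested = np.array([0, 1, 2, 3, 7, 11, 15, 19])

n_ula = coarray(ula).size        # lags -7, ..., 7
n_nested = coarray(nested).size  # lags -19, ..., 19 with no holes
print(n_ula, n_nested)
```

For these two arrays the counts are 15 and 39, so the bound $M_{ca}/2$ on the recoverable support is noticeably larger for the nested geometry with the same number of sensors.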

Perturbation and Size of Support: The presence of perturbation has a non-trivial effect on the size of the recoverable support. Of particular interest is the question: does the presence of perturbation δ reduce the size of the identifiable support from $O(M^2)$ to $O(M)$ for nested arrays? By a simple equations-versus-unknowns argument, it can be seen that for nested arrays, the number of distinct equations in the co-array model $z_u = A^u_{ca}(\delta)\gamma$ is still $O(M^2)$, whereas the number of unknowns is M + K, since we have M unknown values for the perturbation and K unknown values for the source directions. Since the number of unknowns only increases by $O(M)$, it may still be possible to identify $K = O(M^2)$ sources in the presence of perturbations. The next section presents a more formal Cramér Rao bound based study of this problem.

III. IDENTIFIABILITY AND CRAMÉR RAO BOUND

We derive the stochastic Cramér Rao bound (CRB) for the grid based model under perturbation, from which we can state some necessary conditions under which the sparse support is identifiable for the perturbed model, for a given perturbation δ. We consider the signals s(t) to be stochastic with normal distribution $N(0, R_s)$, in which $R_s$ is a diagonal matrix with a few non-zero elements corresponding to the directions on the grid where we have active sources. Assuming the noise to be zero-mean uncorrelated Gaussian with distribution $N(0, \sigma_w^2 I)$, we get

$$y(t) \sim N\left(0,\ A_{grid}(\delta) R_s A_{grid}^H(\delta) + \sigma_w^2 I\right)$$

We assume that the power of noise $\sigma_w^2$ is known. Thus, the goal is to estimate the parameter set $\psi = [\delta_{2:M}, \gamma]$, which completely characterizes the distribution of y(t), where $\gamma = \mathrm{diag}(R_s)$ and $\delta_1 = 0$.

Remarks on the derivation of CRB: There are two ways

to derive the CRB. Since the model is underdetermined

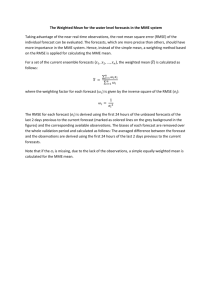

20

Nested (Ideal)

ULA (Ideal)

Nested (Pertubed)

ULA (Perturbed)

0

−20

−40

i−th singular value (dB)

(Nθ ≫ M ), it may seem that we need priors on our parameter

γ (such as sparsity, or some other form of Bayesian prior)

for the Fisher Information matrix to be invertible. However,

we will show that the FIM continues to be non singular,

i.e. the parameters continue to be identifiable without

incorporating any prior on the parameter as long as Nθ

remains smaller than a certain threshold. We also show that

beyond this threshold, the FIM necessarily becomes rank

deficient.

−60

−80

−100

−120

−140

A. CRB without Sparsity Priors

Theorem 1. The Fisher information matrix of the parameter

set ψ is given by

Jδδ Jδγ

(4)

J = JH J

δγ

γγ

0

50

100

150

i

Fig. 1. The singular values of Aca (δ) for nested and ULA arrays in perturbed

and ideal settings. M = 12, Nθ = 200, δ = 0.3 × δ 0 with δ 0 defined in

Section V.

(2:M +Nθ ,2:M +Nθ )

where

−160

−T

Jγγ = LAH

⊗ Ry −1 Aca (δ)

(5)

ca (δ) Ry

n

−1

−1 T

◦ D δ Rs AH

Jδδ = 2L Re Dδ Rs AH

grid Ry

grid Ry

T o

−1

(6)

+ Ry −1 ◦ Dδ Rs AH

Agrid Rs DH

grid Ry

δ

n

o

T

−1

−1

H

Jδγ = 2L Re Dδ Rs AH

R

A

◦

A

R

y

grid

y

grid

grid

(7)

PM ∂Agrid

in which Dδ =

i=2 ∂δ i , and we temporarily omitted

argument of δ from Agrid (δ) for the simplicity of notation.

The first row and column of the matrix in the RHS of (4) are

eliminated since we assumed that δ1 is known and equal to 0.

Proof. Jγγ is obtained similar to [16], by plugging the perturbed coarray manifold in the equations therein. The rest

of the Fisher information matrix is derived using the same

approach as in [17] (pp. 960-962). The Cramér Rao lower

bound on the parameter set ψ is then given by J−1 .

Corollary 1. A necessary condition for identifiability of the DOA estimation problem is $N_\theta = \mathrm{rank}(A_{ca}(\delta))$.

Proof. For the matrix J to be invertible, it is necessary that each diagonal block be nonsingular. (However, this is not a sufficient condition, since the Schur complement of J, $J_{\delta\delta} - J_{\delta\gamma} J_{\gamma\gamma}^{-1} J_{\delta\gamma}^H$, should also be nonsingular.) It is shown in [16] that $J_{\gamma\gamma}$ is nonsingular iff $N_\theta = \mathrm{rank}(A_{ca})$, which completes the proof.
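The rank condition of Corollary 1 can be illustrated numerically by forming the block $J_{\gamma\gamma}$ from (5) for the two unperturbed 8-sensor arrays of Section V on a 19-point grid. This is a sketch under stated assumptions: the helper names, the uniform angular grid, the three active grid points, the rank tolerance, and in particular the noise variance of 1.0 (chosen here only to keep $R_y$ well conditioned, not the value used in the experiments) are all illustrative.

```python
import numpy as np

def steering_matrix(pos, theta):
    # Positions in half-wavelengths, so the phase is pi * pos * sin(theta).
    return np.exp(1j * np.pi * np.outer(pos, np.sin(theta)))

def khatri_rao_conj(A):
    M, K = A.shape
    return (A.conj()[:, None, :] * A[None, :, :]).reshape(M * M, K)

def fisher_gamma_block(pos, grid, gamma, noise_var, L):
    """J_gg = L * A_ca^H (R_y^{-T} kron R_y^{-1}) A_ca, following (5)."""
    A = steering_matrix(pos, grid)
    R_y = A @ np.diag(gamma) @ A.conj().T + noise_var * np.eye(A.shape[0])
    R_inv = np.linalg.inv(R_y)
    A_ca = khatri_rao_conj(A)
    return L * A_ca.conj().T @ np.kron(R_inv.T, R_inv) @ A_ca

def numerical_rank(J, rel_tol=1e-9):
    s = np.linalg.svd(J, compute_uv=False)
    return int(np.sum(s > rel_tol * s[0]))

N_theta = 19
grid = np.deg2rad(np.linspace(-90.0, 90.0, N_theta + 2)[1:-1])
gamma = np.zeros(N_theta)
gamma[[4, 10, 13]] = [1.0, 0.7, 0.9]          # hypothetical active directions

ula = np.arange(8, dtype=float)
nested = np.array([0, 1, 2, 3, 7, 11, 15, 19], dtype=float)

rank_ula = numerical_rank(fisher_gamma_block(ula, grid, gamma, 1.0, 800))
rank_nested = numerical_rank(fisher_gamma_block(nested, grid, gamma, 1.0, 800))
print(rank_ula, rank_nested)
```

For the ULA the co-array has only 15 distinct lags, fewer than the 19 grid points, so $J_{\gamma\gamma}$ is rank deficient; for the nested array the 39 distinct lags are enough for the block to have full rank 19.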

Remark 1. For δ = 0, $\mathrm{rank}(A_{ca}(\delta))$ can be determined using the structure of the array. For instance, $\mathrm{rank}(A_{ca}(\delta)) = O(M)$ for a ULA, and $\mathrm{rank}(A_{ca}(\delta)) = O(M^2)$ for nested and coprime arrays. However, for non-zero δ, the rank of the co-array manifold increases and it can be as large as $M^2 - M + 1$, since the differences $\delta_i - \delta_j$ can give rise to more unique differences. This is further validated using simulation. For the ULA, the singular values of $A_{ca}(\delta)$ start decaying quickly beyond 2M, whereas, for the nested array, they decay only beyond $O(M^2)$. Figure 1 shows the singular values of $A_{ca}(\delta)$ for ULA and nested arrays in the presence of perturbation. We will investigate this interesting effect thoroughly in future work and compare the performance of these arrays in sparse support recovery.
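A rough way to reproduce the kind of comparison described in Remark 1 is to compute the singular values of $A_{ca}(\delta)$ directly. The sketch below uses the 8-sensor arrays and the perturbation pattern of Section V with α = 0.3 and a 200-point grid; note that this is not the M = 12 configuration of Figure 1, and the helper names, the uniform grid and the relative rank tolerance of 1e-9 are assumptions.

```python
import numpy as np

def steering_matrix(pos, theta):
    # Positions in half-wavelengths, so the phase is pi * pos * sin(theta).
    return np.exp(1j * np.pi * np.outer(pos, np.sin(theta)))

def khatri_rao_conj(A):
    M, K = A.shape
    return (A.conj()[:, None, :] * A[None, :, :]).reshape(M * M, K)

def coarray_singular_values(pos, grid):
    return np.linalg.svd(khatri_rao_conj(steering_matrix(pos, grid)), compute_uv=False)

def numerical_rank(s, rel_tol=1e-9):
    return int(np.sum(s > rel_tol * s[0]))

grid = np.deg2rad(np.linspace(-90.0, 90.0, 200 + 2)[1:-1])
ula = np.arange(8, dtype=float)
nested = np.array([0, 1, 2, 3, 7, 11, 15, 19], dtype=float)
delta = 0.3 * np.array([0, -0.25, -0.1, 0.1, 0.4, 0.35, 0.45, 0.3])

ranks = {
    "ula_ideal": numerical_rank(coarray_singular_values(ula, grid)),
    "ula_perturbed": numerical_rank(coarray_singular_values(ula + delta, grid)),
    "nested_ideal": numerical_rank(coarray_singular_values(nested, grid)),
    "nested_perturbed": numerical_rank(coarray_singular_values(nested + delta, grid)),
}

# Number of distinct virtual sensor positions of the perturbed nested array.
p = nested + delta
n_distinct_perturbed = np.unique(np.round(np.subtract.outer(p, p), 9)).size
print(ranks, n_distinct_perturbed)
```

In the ideal case the numerical rank equals the number of distinct lags. With this particular perturbation, the nested array has $M^2 - M + 1 = 57$ distinct virtual positions, which bounds the rank of the perturbed co-array manifold; how many singular values are counted as non-zero depends on the chosen tolerance, since the additional ones decay rather than drop abruptly.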

Notice that the sparsity constraint on the vector γ has not been used so far. The next question is how to address identifiability when $N_\theta > \mathrm{rank}(A_{ca}(\delta))$.

B. Oracle CRB

For J to be nonsingular, we need $N_\theta = \mathrm{rank}(A_{ca}(\delta))$. When $N_\theta > \mathrm{rank}(A_{ca}(\delta))$, J (computed without assuming any prior on γ) is singular, indicating that beyond this value of $N_\theta$, some form of prior on γ is necessary for identifiability. It has been shown in [16] that γ is still identifiable, as long as γ is sparse with

$$\|\gamma\|_0 \le \frac{\text{k-rank}(A_{ca}(\delta))}{2}, \tag{8}$$

where k-rank(·) represents the Kruskal rank of a matrix. Considering that γ satisfies the sparsity constraint, we can still define an oracle CRB assuming that the support of γ (the indices of the non-zero elements in γ) is known. In this case, the same expressions for the Fisher information matrix as in (4) are valid if we replace $A_{grid}$ with $A_\Omega$ and $A_{KR}$ with $A_{KR,\Omega}$, where $A_\Omega$ and $A_{KR,\Omega}$ are constructed by picking only the columns corresponding to the indices Ω from the matrices A and $A_{KR}$, respectively, and Ω is the support of γ. By inverting the Fisher information matrix, we obtain an oracle CRB for the problem of estimating the source powers jointly with the sensor location errors. Although the support of γ is not known in the actual DOA estimation problem, this oracle CRB indicates a fundamental lower bound that every estimation algorithm must obey.

IV. AN ITERATIVE ALGORITHM FOR JOINT SUPPORT AND PERTURBATION ESTIMATION

As per the model from Section II-A, in order to estimate the

directions of sources jointly with the perturbation, we need to

solve a sparse representation problem to recover the support of

γ. Since the sensor location errors δ are unknown, the matrix

Agrid (δ) is also unknown. Hence, the sparse representation

problem cannot be solved directly. To overcome this difficulty,

we use a two-stage approach to jointly estimate the sensor

location errors and solve the sparse representation problem.

In the first stage, we assume that the vector δ is fixed, and

then we solve the following sparse representation problem:

$$\tilde{\gamma} = \arg\min_{\gamma} \|A_{grid}(\delta)\gamma - z\|_F^2 \quad \text{s.t.} \quad \|\gamma\|_0 \le K. \tag{9}$$

This problem can be solved using basic sparse recovery

methods like OMP [18] or LASSO [19]. In the second stage,

the perturbations are updated assuming that the recovered

sparse vector in the first stage is fixed. Using the updated

vector δ, the first stage is again repeated until a convergence

criterion is met, which can be a certain number of iterations.

The algorithm is initialized with δ (0) = 0. A pseudocode for

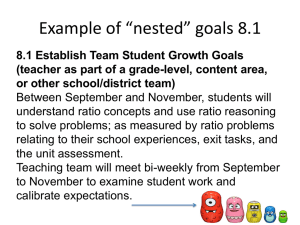

this algorithm is illustrated in Figure 2.

A. Updating the perturbation vector

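In order to update the vector δ in the second stage, we minimize the error value $E(\delta) = \|A_{ca}(\delta)\tilde{\gamma} - z\|_F^2$. This minimization can be done by running T iterations of gradient descent

$$\delta \leftarrow \delta - \mu \frac{\partial E(\delta)}{\partial \delta}, \tag{10}$$

in which µ > 0 is the step size parameter and the mth component of $\frac{\partial E(\delta)}{\partial \delta}$ is obtained by

$$\frac{\partial E(\delta)}{\partial \delta_m} = \mathrm{tr}\left\{ \left(-2 z \tilde{\gamma}^T + 2 A_{0,ca} \tilde{\gamma}\tilde{\gamma}^T\right) \frac{\partial P_{ca}(\delta)}{\partial \delta_m} \right\}, \tag{11}$$

where $A_{0,ca}$ and $P_{ca}(\delta)$ are matrices constructed by gridding $A_0(\theta)$ and $P(\theta, \delta)$.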

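Lemma 1. E(δ) has a Lipschitz continuous gradient with constant C, i.e. $\|\nabla E(\delta^{(1)}) - \nabla E(\delta^{(2)})\| \le C \|\delta^{(1)} - \delta^{(2)}\|$, where

$$C = \frac{4\pi M \sqrt{2M}}{\lambda} \left\| -z\tilde{\gamma}^T + A_{0,ca}\tilde{\gamma}\tilde{\gamma}^T \right\| \tag{12}$$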

Corollary 2. A sufficient condition for gradient descent to converge to a local minimum is $\mu < \mu_0$, where $\mu_0 = \frac{2}{C}$, and C is given by (12).

Although the gradient descent approach can find a local minimum of E(δ) provided the step size parameter µ is smaller than $\mu_0$ given in Corollary 2, it may require a relatively large number of iterations T to converge. Therefore, the updating process may become very time consuming. Hence, we propose another approach for minimizing E(δ).

B. Updating the perturbation vector: a linearized approach

To simplify the minimization of E(δ) we can assume that the perturbations are sufficiently small, which is a natural assumption. We can use the first-order Taylor expansion of $A_{grid}(\delta)$ with respect to δ to approximate E(δ), and then minimize this approximated function instead. In this case, the minimization task simplifies to a least squares problem. We can approximate E(δ) as

$$E(\delta) \simeq \|A_{0,ca}\tilde{\gamma} + Q\delta - z\|^2, \tag{13}$$

where the ith column of Q is equal to $\left.\frac{\partial A_{ca}(\delta)}{\partial \delta_i}\right|_{\delta=0} \tilde{\gamma}$. The minimizer of (13) is then given by $\bar{\delta}_1 = 0$, $\bar{\delta}_{2:M} = (\bar{Q}^H \bar{Q})^{-1} \bar{Q}^H (z - A_{0,ca}\tilde{\gamma})$, with $\bar{Q} = Q_{(2:M,2:M)}$.
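The linearized update can be sketched in a few lines. The code below is an illustration under several assumptions rather than the authors' implementation: positions are in half-wavelengths, the true source powers are supplied as the sparse vector, the data are noise free, and Q̄ is taken to be Q with the column of $\delta_1$ removed. Because δ is real, the sketch solves the real-valued least-squares problem obtained by stacking real and imaginary parts, which is a design choice of this example rather than the closed form written above.

```python
import numpy as np

def steering_matrix(pos, theta):
    return np.exp(1j * np.pi * np.outer(pos, np.sin(theta)))

def khatri_rao_conj(A):
    M, K = A.shape
    return (A.conj()[:, None, :] * A[None, :, :]).reshape(M * M, K)

def linearized_update(d, grid, gamma, z):
    """Minimize ||A0ca @ gamma + Q @ delta - z||^2 over real delta with delta[0] = 0."""
    M = d.size
    A0_ca = khatri_rao_conj(steering_matrix(d, grid))
    # Row r = m + m'*M has phase pi*(d_m - d_m')*sin(theta); its derivative with
    # respect to delta_i at delta = 0 is j*pi*sin(theta)*([m == i] - [m' == i]).
    b = A0_ca @ (1j * np.pi * np.sin(grid) * gamma)
    m_idx = np.tile(np.arange(M), M)       # m  (fast index of the row)
    mp_idx = np.repeat(np.arange(M), M)    # m' (slow index of the row)
    sel = ((m_idx[:, None] == np.arange(M)).astype(float)
           - (mp_idx[:, None] == np.arange(M)).astype(float))
    Q_bar = (sel * b[:, None])[:, 1:]      # drop the column of delta_1
    r = z - A0_ca @ gamma
    Q_real = np.vstack([Q_bar.real, Q_bar.imag])
    r_real = np.concatenate([r.real, r.imag])
    sol, *_ = np.linalg.lstsq(Q_real, r_real, rcond=None)
    return np.concatenate([[0.0], sol])

d = np.array([0, 1, 2, 3, 7, 11, 15, 19], dtype=float)
delta_true = 0.005 * np.array([0, -0.25, -0.1, 0.1, 0.4, 0.35, 0.45, 0.3])
grid = np.deg2rad(np.linspace(-90.0, 90.0, 19 + 2)[1:-1])
gamma = np.zeros(19)
gamma[[4, 10, 13]] = [1.0, 0.7, 0.9]       # hypothetical on-grid sources

z = khatri_rao_conj(steering_matrix(d + delta_true, grid)) @ gamma  # noise-free data
delta_hat = linearized_update(d, grid, gamma, z)
rel_err = np.linalg.norm(delta_hat - delta_true) / np.linalg.norm(delta_true)
print(rel_err)
```

For a perturbation this small the first-order model is accurate and the relative error is small; as the perturbation grows, the neglected second-order terms grow with it, which is why the linearized approach is restricted to small perturbations.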

C. Convergence

The proposed algorithm has the same flavour as the K-SVD [20] algorithm for dictionary learning, in the sense that an unknown dictionary is recovered by iteratively performing sparse recovery and updating the dictionary atoms. In our problem, $A_{grid}(\delta)$ is a structured unknown dictionary. Similar to K-SVD, convergence here depends on the success of both stages. For the first stage to succeed, γ should be sparse enough that recovery of a unique sparse solution is guaranteed. For the second stage, if gradient descent is used, the step-size parameter and the number of iterations should be tuned to avoid divergence of this stage. If the linearized approach is used, the perturbations should be small enough for the linear approximation to be valid. If all these criteria are met, in each iteration of our algorithm, the positive valued function E(δ) decays until converging to a local minimum.

    δ^(0) ← 0, i ← 0
    repeat
        γ^(i) ← arg min_γ ‖A_grid(δ^(i)) γ − z‖_F^2  s.t. ‖γ‖_0 ≤ K
        δ^(i+1) ← arg min_δ ‖A_grid(δ) γ^(i) − z‖_F^2
        i ← i + 1
    until Convergence
    δ̂ ← δ^(i), γ̂ ← γ^(i)

Fig. 2. Algorithm for jointly estimating the perturbations and the source directions
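The alternation in Figure 2 can be sketched as follows. This is a minimal illustration, not the configuration used in the experiments: it uses a basic hand-written OMP for the sparse stage and the linearized least-squares update of Section IV-B (always linearized at δ = 0) for the perturbation stage, it fits the co-array manifold $A_{ca}(\delta)$ to z (consistent with the definition of E(δ), since z has $M^2$ entries), and it runs a fixed number of iterations on noise-free data. All function names and numerical values are assumptions, and no claim is made about recovery performance.

```python
import numpy as np

def steering_matrix(pos, theta):
    return np.exp(1j * np.pi * np.outer(pos, np.sin(theta)))

def khatri_rao_conj(A):
    M, K = A.shape
    return (A.conj()[:, None, :] * A[None, :, :]).reshape(M * M, K)

def omp(A, z, K):
    """Greedy K-sparse least-squares fit of z by columns of A (basic OMP)."""
    residual, support = z.copy(), []
    for _ in range(K):
        corr = np.abs(A.conj().T @ residual)
        if support:
            corr[support] = 0.0            # never pick the same atom twice
        support.append(int(np.argmax(corr)))
        coef, *_ = np.linalg.lstsq(A[:, support], z, rcond=None)
        residual = z - A[:, support] @ coef
    gamma = np.zeros(A.shape[1])
    gamma[support] = coef.real             # source powers are real
    return gamma

def linearized_update(d, grid, gamma, z):
    """Real least-squares solution of the model linearized at delta = 0."""
    M = d.size
    A0_ca = khatri_rao_conj(steering_matrix(d, grid))
    b = A0_ca @ (1j * np.pi * np.sin(grid) * gamma)
    m_idx, mp_idx = np.tile(np.arange(M), M), np.repeat(np.arange(M), M)
    sel = ((m_idx[:, None] == np.arange(M)).astype(float)
           - (mp_idx[:, None] == np.arange(M)).astype(float))
    Q_bar = (sel * b[:, None])[:, 1:]      # delta_1 is fixed to 0
    r = z - A0_ca @ gamma
    sol, *_ = np.linalg.lstsq(np.vstack([Q_bar.real, Q_bar.imag]),
                              np.concatenate([r.real, r.imag]), rcond=None)
    return np.concatenate([[0.0], sol])

def joint_estimate(d, grid, z, K, n_iter=5):
    delta = np.zeros(d.size)               # initialization delta^(0) = 0
    for _ in range(n_iter):
        A_ca = khatri_rao_conj(steering_matrix(d + delta, grid))
        gamma = omp(A_ca, z, K)                        # stage 1: sparse recovery
        delta = linearized_update(d, grid, gamma, z)   # stage 2: perturbation update
    return delta, gamma

d = np.array([0, 1, 2, 3, 7, 11, 15, 19], dtype=float)
delta_true = 0.05 * np.array([0, -0.25, -0.1, 0.1, 0.4, 0.35, 0.45, 0.3])
grid = np.deg2rad(np.linspace(-90.0, 90.0, 19 + 2)[1:-1])
gamma_true = np.zeros(19)
gamma_true[[4, 10, 13]] = [1.0, 0.7, 0.9]  # hypothetical on-grid sources
z = khatri_rao_conj(steering_matrix(d + delta_true, grid)) @ gamma_true

delta_hat, gamma_hat = joint_estimate(d, grid, z, K=3)
print(np.flatnonzero(gamma_hat), delta_hat)
```

Replacing `omp` with a LASSO solver, or `linearized_update` with the gradient descent of Section IV-A, gives the other variants described in the text; the structure of the outer loop stays the same.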

V. EXPERIMENTAL RESULTS

We conduct several numerical experiments to study the effect of perturbation on DOA estimation and the performance of our proposed algorithm. The simulations are performed for the uniform linear array (ULA) and nested arrays. In all cases the number of sensors is fixed to M = 8. In each case, we run the experiment for different numbers of sources. The sources are assumed to be uncorrelated complex Gaussian signals. The number of grid points ($N_\theta$) is either 19 or 139, where $-\frac{\pi}{2}$ and $\frac{\pi}{2}$ are not included in the grid. These choices are made to study both cases, $N_\theta = \mathrm{rank}(A_{ca})$ and $N_\theta > \mathrm{rank}(A_{ca})$. In the cases where the number of sources is 12, we assume that the sources are located at directions [−63° −45° −27° −18° −9° 0° 9° 27° 36° 45° 54° 72°]. For 9 sources, we consider the same directions except for −9°, 9°, 54°. For 6 sources, −45°, −18°, 27° are also discarded. In all the simulations, the vector of location errors (perturbations) is $\delta = \alpha\delta_0$, where $\delta_0 = \frac{\lambda}{2} \times [0\ \ {-0.25}\ \ {-0.1}\ \ 0.1\ \ 0.4\ \ 0.35\ \ 0.45\ \ 0.3]^T$, α > 0 is a scalar, and λ > 0 is the wavelength of the impinging wave. For the ULA, the unperturbed sensor locations are $d = \frac{\lambda}{2} \times [0, 1, 2, 3, 4, 5, 6, 7]^T$. For the nested array, the sensors are located at $d = \frac{\lambda}{2} \times [0, 1, 2, 3, 7, 11, 15, 19]^T$. In all the simulations, the number of time snapshots is L = 800, and the power of noise is set to $\sigma_w^2 = 4 \times 10^{-4}$. We use LASSO [19] to perform sparse recovery, and the gradient descent approach for updating the perturbation vector in each iteration of our algorithm. The number of sources K can be estimated by examining the recovered sparse support (obtained from LASSO) and then pruning the spurious peaks by simple thresholding.
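The simulation setup above can be written down as a small configuration sketch. It assumes that the grid is uniform in angle with the two endpoints removed, which is an interpretation of the description rather than something stated explicitly; under that assumption every listed source direction falls exactly on both grids. Function and variable names are illustrative.

```python
import numpy as np

def make_grid_deg(n_theta):
    """n_theta uniformly spaced directions in (-90, 90) degrees, endpoints excluded."""
    return np.linspace(-90.0, 90.0, n_theta + 2)[1:-1]

def on_grid(sources, grid, tol=1e-9):
    """True if every source direction coincides with some grid point."""
    dist = np.min(np.abs(sources[:, None] - grid[None, :]), axis=1)
    return bool(np.all(dist < tol))

sources_12 = np.array([-63, -45, -27, -18, -9, 0, 9, 27, 36, 45, 54, 72], dtype=float)
sources_9 = np.setdiff1d(sources_12, [-9, 9, 54])
sources_6 = np.setdiff1d(sources_9, [-45, -18, 27])

# Array geometries and perturbation pattern, in units of half a wavelength.
d_ula = np.arange(8, dtype=float)
d_nested = np.array([0, 1, 2, 3, 7, 11, 15, 19], dtype=float)
delta_0 = np.array([0, -0.25, -0.1, 0.1, 0.4, 0.35, 0.45, 0.3])

L, noise_var = 800, 4e-4

checks = {
    n: all(on_grid(s, make_grid_deg(n)) for s in (sources_12, sources_9, sources_6))
    for n in (19, 139)
}
print(checks)
```

With 19 points the grid spacing is 9°, and with 139 points it is 180°/140, of which 9° is an integer multiple, so the same source directions remain on the grid in both cases.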

In the first experiment, we consider a nested array with 8

sensors and 9 sources, with the sensor and source locations as

mentioned in the previous paragraph, and α = 0.3, Nθ = 139.

Figure 3 illustrates the recovered powers with and without using our proposed algorithm for recovering the sensor location

errors, showing the improvement in recovery of source powers

when our algorithm is used. In 3a the source powers are all

equal to one. However, in 3b they have the following values,

respectively: 0.7, 1, 0.7, 1, 0.6, 0.8, 0.6, 0.9, 1. The plots show

satisfactory performances in both cases.

Fig. 3. γ, γ̃, γ̂ indicate the original source powers, the initial guess assuming δ = 0, and the recovered source powers using our algorithm, respectively. Panels: (a) Equal source powers; (b) Unequal source powers.

In the second experiment, we conduct Monte Carlo simulations to study the performance of our algorithm for recovery of the sensor location errors. Figure 4 shows the Root Mean Square Error (RMSE) of the recovered sensor location errors δ̂ versus α. The RMSE in this case is defined as $\sqrt{\sum_{i=1}^{N_{tests}} \frac{\|\hat{\delta} - \delta\|^2}{N_{tests}}}$, where $N_{tests}$ indicates the number of Monte Carlo simulations for each value of α (in all the simulations $N_{tests} = 100$). As α increases (larger perturbations), the algorithm begins to fail to find the correct sensor locations. However, the CRB still suggests the possibility of identifying the correct sensor positions in those circumstances. Figure 6 shows the RMSE of the recovered source powers. The experiments are conducted for both $N_\theta = 19$ and $N_\theta = 139$. The RMSE in this case is defined by $\sqrt{\sum_{i=1}^{N_{tests}} \frac{\|\hat{\gamma} - \gamma\|^2}{N_{tests}}}$. In all the plots, the CRB of the corresponding parameters is calculated by taking the square root of the trace of the CRB matrix for those parameters. For the cases where $N_\theta > \mathrm{rank}(A_{ca})$, the oracle CRB is calculated, and is shown by o-CRB. Figure 5 depicts the RMSE of the source directions, which is calculated by comparing the support of γ̂ with that of γ. As can be observed, for the nested array, our algorithm can successfully recover the true support even under large perturbations.

Fig. 4. The RMSE of the recovered δ̂ vs α, for different array structures and different number of sources. Panels: (a) $N_\theta = 19$; (b) $N_\theta = 139$. Vertical axis: RMSE (dB); horizontal axis: α. Curves: RMSE and CRB or o-CRB for nested and ULA arrays with K = 6, 9, 12.

Fig. 5. The RMSE of the recovered source directions vs α, for different array structures and different number of sources. Panels: (a) $N_\theta = 19$; (b) $N_\theta = 139$. Vertical axis: RMSE (degrees); horizontal axis: α.

Fig. 6. The RMSE of the recovered source powers vs α, for different array structures and different number of sources. o-CRB denotes the oracle CRB. Panels: (a) $N_\theta = 19$; (b) $N_\theta = 139$. Vertical axis: RMSE (dB); horizontal axis: α.

VI. CONCLUSION

In this paper we studied the effect of random perturbations of the sensor locations on the DOA estimation problem using the concept of co-arrays. We proposed an iterative algorithm to find the directions of the sources along with the perturbations. We addressed identifiability issues by deriving Cramér Rao lower bounds. The experimental results revealed successful performance of our algorithm, especially for nested arrays. We can conclude that co-arrays of nested arrays are robust to small perturbations in the sensor locations.

REFERENCES

[1] H. Krim and M. Viberg, “Two decades of array signal processing

research: the parametric approach,” Signal Processing Magazine, IEEE,

vol. 13, no. 4, pp. 67–94, 1996.

[2] R. O. Schmidt, “Multiple emitter location and signal parameter estimation,” Antennas and Propagation, IEEE Transactions on, vol. 34, no. 3,

pp. 276–280, 1986.

[3] P. Pal and P. Vaidyanathan, “Nested arrays: a novel approach to array

processing with enhanced degrees of freedom,” IEEE Trans. Signal

Process., vol. 58, no. 8, pp. 4167–4181, 2010.

[4] P. P. Vaidyanathan and P. Pal, “Sparse sensing with co-prime samplers

and arrays,” Signal Processing, IEEE Transactions on, vol. 59, no. 2,

pp. 573–586, 2011.

[5] P. Pal and P. Vaidyanathan, “Coprime sampling and the music algorithm,” in Digital Signal Processing Workshop and IEEE Signal

Processing Education Workshop (DSP/SPE), 2011 IEEE. IEEE, 2011,

pp. 289–294.

[6] Y. D. Zhang, M. G. Amin, and B. Himed, “Sparsity-based doa estimation

using co-prime arrays,” in Acoustics, Speech and Signal Processing

(ICASSP), 2013 IEEE International Conference on. IEEE, 2013, pp.

3967–3971.

[7] P. Pal and P. Vaidyanathan, “Correlation-aware techniques for sparse

support recovery,” in Statistical Signal Processing Workshop (SSP), 2012

IEEE. IEEE, 2012, pp. 53–56.

[8] ——, “On application of lasso for sparse support recovery with imperfect correlation awareness,” in Signals, Systems and Computers

(ASILOMAR), 2012 Conference Record of the Forty Sixth Asilomar

Conference on. IEEE, 2012, pp. 958–962.

[9] ——, “Correlation-aware sparse support recovery: Gaussian sources,”

in Acoustics, Speech and Signal Processing (ICASSP), 2013 IEEE

International Conference on. IEEE, 2013, pp. 5880–5884.

[10] L. Seymour, C. Cowan, and P. Grant, “Bearing estimation in the

presence of sensor positioning errors,” in Acoustics, Speech, and Signal

Processing, IEEE International Conference on ICASSP’87., vol. 12.

IEEE, 1987, pp. 2264–2267.

[11] Y.-M. Chen, J.-H. Lee, C.-C. Yeh, and J. Mar, “Bearing estimation

without calibration for randomly perturbed arrays,” Signal Processing,

IEEE Transactions on, vol. 39, no. 1, pp. 194–197, 1991.

[12] A. J. Weiss and B. Friedlander, “Array shape calibration using sources in

unknown locations-a maximum likelihood approach,” Acoustics, Speech

and Signal Processing, IEEE Transactions on, vol. 37, no. 12, pp. 1958–

1966, 1989.

[13] M. Viberg and A. L. Swindlehurst, “A bayesian approach to autocalibration for parametric array signal processing,” Signal Processing,

IEEE Transactions on, vol. 42, no. 12, pp. 3495–3507, 1994.

[14] Z.-M. Liu and Y.-Y. Zhou, “A unified framework and sparse bayesian

perspective for direction-of-arrival estimation in the presence of array imperfections,” Signal Processing, IEEE Transactions on, vol. 61,

no. 15, pp. 3786–3798, 2013.

[15] P. Pal and P. Vaidyanathan, “Pushing the limits of sparse support recovery using correlation information,” Accepted for publication in IEEE

Transactions on Signal Processing. DOI: 10.1109/TSP.2014.2385033,

2015.

[16] ——, “Parameter identifiability in sparse bayesian learning,” in Acoustics, Speech and Signal Processing (ICASSP), 2014 IEEE International

Conference on. IEEE, 2014, pp. 1851–1855.

[17] H. L. Van Trees, Detection, Estimation, and Modulation Theory, Optimum Array Processing. John Wiley & Sons, 2004.

[18] J. A. Tropp, “Greed is good: Algorithmic results for sparse approximation,” Information Theory, IEEE Transactions on, vol. 50, no. 10, pp.

2231–2242, 2004.

[19] R. Tibshirani, “Regression shrinkage and selection via the lasso,”

Journal of the Royal Statistical Society. Series B (Methodological), pp.

267–288, 1996.

[20] M. Aharon, M. Elad, and A. Bruckstein, “K-SVD: An algorithm for

designing overcomplete dictionaries for sparse representation,” Signal

Processing, IEEE Transactions on, vol. 54, no. 11, pp. 4311–4322, 2006.