Analysis of Generalized Ridge Functions in High Dimensions

Sandra Keiper


Technische Universität Berlin

Institut für Mathematik

Straße des 17. Juni 136, 10623 Berlin

Email: keiper@math.tu-berlin.de

Abstract—The approximation of functions in many variables

suffers from the so-called “curse of dimensionality”. Namely,

functions on $\mathbb{R}^N$ with smoothness of order $s$ can be recovered

at most with an accuracy of $n^{-s/N}$ applying $n$-dimensional

spaces for linear or nonlinear approximation. However, there is

a common belief that functions arising as solutions of real world

problems have more structure than usual $N$-variate functions.

This has led to the introduction of different models for those

functions. One of the most popular models is that of so-called

ridge functions, which are of the form

$$\mathbb{R}^N \supseteq \Omega \ni x \mapsto f(x) = g(Ax), \tag{1}$$

where $A \in \mathbb{R}^{m \times N}$ is a matrix and $m$ is considerably smaller than $N$. The approximation of such functions was studied, for example, in [1], [2], [3], and [4].

However, by considering functions of the form (1), we assume

that real world problems can be described by functions that are

constant along certain linear subspaces. Such an assumption is quite

restrictive and we, therefore, want to study a more general

form of ridge functions, namely functions which are constant

along certain submanifolds of $\mathbb{R}^N$. Hence, we introduce the notion

of generalized ridge functions, which are defined to be functions

of the form

$$\mathbb{R}^N \ni x \mapsto f(x) = g(\operatorname{dist}(x, M)), \tag{2}$$

where $M$ is a $d$-dimensional, smooth submanifold of $\mathbb{R}^N$ and $g \in C^s(\mathbb{R})$. Note that if $M$ is an $(N-1)$-dimensional, affine subspace of $\mathbb{R}^N$ and we consider the signed distance in equation (2), we

indeed have the case of a usual ridge function. We will analyze

how the methods to approximate usual ridge functions apply to

generalized ridge functions and investigate new algorithms for

their approximation.

I. INTRODUCTION

An approach to break the curse of dimensionality is to

consider ridge functions of the form (1), where $A$ is usually

called the ridge matrix and $g \in C^s(\mathbb{R}^m)$, $1 \le s \le 2$, is called

the ridge profile.

For particular choices of $A$, different approaches have been investigated. For example, if $A$ is of the form $A^T = [e_{i_1}, \dots, e_{i_m}]$, for $e_{i_k} \in \mathbb{R}^N$ the canonical unit vectors, $i_k \in \{1, \dots, N\}$, $f$ can be rewritten as a function which depends only on a few variables, i.e. $f(x_1, \dots, x_N) = g(x_{i_1}, \dots, x_{i_m})$. An approach for recovering the active variables and approximating the ridge profile $g$ has been given in [1]. For $g$ in some approximation class $\mathcal{A}^s$ defined in [1] the result reads as follows:

Theorem I.1 ([1]). If $f(x) = g(x_{i_1}, \dots, x_{i_m})$ with $g \in \mathcal{A}^s$, then the function $\hat f$ determined by the algorithm introduced in [1] satisfies

$$\|f - \hat f\|_{C([0,1]^N)} \le |g|_{\mathcal{A}^s}\, l^{-s},$$

where the number of point values used in the algorithm is smaller than

$$(l+1)^m \#(\mathcal{A}) + m \lceil \log_2 N \rceil,$$

for a family $\mathcal{A}$ of partitions of $\{1, \dots, N\}$ into $m$ disjoint subsets, which is rich enough in the sense that given $m$ distinct integers $i_1, \dots, i_m \in \{1, 2, \dots, N\}$ there is a partition $A \in \mathcal{A}$ such that each set in $A$ contains precisely one of the integers $i_1, \dots, i_m$.

Note that $\mathcal{A}$ can be chosen such that $\#(\mathcal{A})$ is bounded by $\#(\mathcal{A}) \lesssim \log_2 N$.
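To get a feeling for the query count in Theorem I.1, the following sketch evaluates the bound $(l+1)^m \#(\mathcal{A}) + m\lceil \log_2 N\rceil$ and compares it with a full tensor grid of $(l+1)^N$ points. It assumes, purely for illustration, that $\#(\mathcal{A}) = \lceil c \log_2 N \rceil$ with a hypothetical constant `c = 1`; the text only states $\#(\mathcal{A}) \lesssim \log_2 N$, so the true constant may differ. The function names are illustrative and not taken from [1].

```python
import math

def query_bound(l: int, m: int, N: int, c: float = 1.0) -> int:
    """Upper bound (l+1)^m * #(A) + m*ceil(log2 N) from Theorem I.1.

    Assumption (not from the text): #(A) = ceil(c * log2 N) with an
    illustrative constant c; the text only gives #(A) <~ log2 N.
    """
    num_partitions = math.ceil(c * math.log2(N))
    return (l + 1) ** m * num_partitions + m * math.ceil(math.log2(N))

def full_grid(l: int, N: int) -> int:
    """Number of points of a tensor grid with l+1 nodes per coordinate."""
    return (l + 1) ** N

if __name__ == "__main__":
    l, m, N = 10, 2, 1000
    print("bound from Theorem I.1:", query_bound(l, m, N))
    print("decimal digits of the full grid size:", len(str(full_grid(l, N))))
```

Under these assumptions the bound grows only logarithmically in $N$ for fixed $m$ and $l$, whereas the tensor grid grows exponentially in $N$.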

Another approach is to assume that $m = 1$ and that the matrix $A$ therefore is a vector, usually called the ridge vector and denoted by $A =: a$. In this case $f$ is of the form

$$f(x) = g(\langle x, a \rangle). \tag{3}$$

We usually denote the space of all ridge functions of this type by $\mathcal{R}(s)$, and by $\mathcal{R}_+(s)$ if all entries of $a$ are assumed to be positive. The recovery of usual ridge functions from point queries was first considered by Cohen, Daubechies, DeVore, Kerkyacharian and Picard in [2] for ridge functions with positive ridge vector. It was shown that the accuracy of their method is close to the approximation rate of one-dimensional functions:

Theorem I.2 ([2]). Let $f = g(\langle \cdot, a \rangle)$ such that $f \in \mathcal{R}_+(s)$, with $s > 1$ and $\|g\|_{C^s} \le M_0$ as well as $\|a\|_{w\ell_p} \le M_1$. Then the algorithm introduced in [2] requires $O(l)$ queries to approximate $f$ by $\hat f$ satisfying

$$\|f - \hat f\|_{C([0,1]^N)} \le C_0 M_0 l^{-s} + C_1 M_1 \epsilon(N, l)^{1/p - 1},$$

where $l \ge 1$, $C_0$ is a constant depending only on $s$, $C_1$ depends on $p$ and

$$\epsilon(N, l) := \begin{cases} [1 + \log(N/l)]/l, & \text{if } l < N, \\ 0, & \text{if } l \ge N. \end{cases}$$

In the remainder of this abstract we will denote the space of functions $f \in \mathcal{R}_+(s)$ which fulfill the assumptions of the above theorem by $\mathcal{R}_+(s, p; M_0, M_1)$.
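The second term of the bound in Theorem I.2 is governed by the quantity defined by cases in the theorem. The sketch below evaluates it (written `eps` here) and its power $1/p - 1$. It assumes the natural logarithm and $0 < p < 1$; neither is stated explicitly in the text, and the constants $C_1$, $M_1$ are left out because their values are not given.

```python
import math

def eps(N: int, l: int) -> float:
    """Quantity from Theorem I.2: [1 + log(N/l)]/l if l < N, else 0.

    Assumption: log is the natural logarithm.
    """
    return (1.0 + math.log(N / l)) / l if l < N else 0.0

def sparsity_term(N: int, l: int, p: float) -> float:
    """eps(N, l)^(1/p - 1), the factor multiplying C1*M1 (assumes 0 < p < 1)."""
    return eps(N, l) ** (1.0 / p - 1.0)

if __name__ == "__main__":
    N, p = 1000, 0.5
    for l in (10, 100, 1000):
        print(f"l = {l:5d}: eps = {eps(N, l):.4f}, term = {sparsity_term(N, l, p):.4f}")
```

For $l \ge N$ the term vanishes, so only the one-dimensional rate $l^{-s}$ remains in the bound.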

However, the algorithm from [2] does not apply to arbitrary

ridge vectors. In [3] and [4] new algorithms were introduced

to waive the assumption of a positive ridge vector. In [4] it

was shown:

Theorem I.3 ([4]). Let $f : [-1, 1]^N \to \mathbb{R}$ be a ridge function with $f(x) = g(\langle x, a \rangle)$, where $g$ is a Lipschitz continuous function which is differentiable with $g'(0) > 0$ and $g'$ also Lipschitz continuous. For an arbitrary $h > 0$ one can construct a function $\hat f$ satisfying

$$\|f - \hat f\|_\infty \le 2 c_0 \|a - \hat a\|_1 \le \frac{4 c_0 c_1 h}{g'(0) - c_1 h},$$

using $N + 1$ samples and assuming that $g'(0) - c_1 h > 0$. Here $\hat a$ is the approximation of the ridge vector $a$ found by the algorithm. The constants $c_0$ and $c_1$ are given by the Lipschitz constants of $g$ and $g'$.

The main idea of the algorithm in [4] is to approximate the

gradient of f by divided differences exploiting the fact that the

gradient of f is some scalar multiple of the ridge vector. The

accuracy of the approximation of the gradient is determined by

the choice of the number h, whereas the number of sampling

points is fixed.
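The idea of approximating the gradient by divided differences can be sketched as follows. This is a minimal illustration and not the algorithm of [4] itself: it assumes the differences are taken at the origin, that $\|a\|_1 = 1$ and $g'(0) > 0$, and it uses `tanh` as a hypothetical ridge profile. The function name `estimate_ridge_vector` is illustrative.

```python
import numpy as np

def estimate_ridge_vector(f, N, h=1e-4):
    """Estimate the ridge vector a from N + 1 point queries of f.

    Forward differences at the origin approximate grad f(0) = g'(0) * a.
    Normalizing in the l1 norm recovers a when ||a||_1 = 1 and g'(0) > 0
    (assumptions of this sketch).
    """
    x0 = np.zeros(N)
    f0 = f(x0)                                   # 1 sample
    grad = np.empty(N)
    for k in range(N):                           # N further samples
        e_k = np.zeros(N)
        e_k[k] = 1.0
        grad[k] = (f(x0 + h * e_k) - f0) / h
    return grad / np.linalg.norm(grad, 1)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    N = 50
    a = rng.standard_normal(N)
    a /= np.linalg.norm(a, 1)                    # normalize so that ||a||_1 = 1
    f = lambda x: np.tanh(x @ a)                 # illustrative profile, g'(0) = 1
    a_hat = estimate_ridge_vector(f, N)
    print("l1 error:", np.linalg.norm(a - a_hat, 1))
```

The number of samples is $N + 1$ regardless of `h`; only the accuracy of the difference quotients changes with `h`.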

The approach by Fornasier, Schnass and Vybiral [3] is instead based on compressed sensing. There, not the gradient but the directional derivatives of $f$ are approximated at a certain number, say $L_X$, of random points in $L_\Phi$ random directions. It was shown:

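Theorem I.4 ([3]). Let $0 < s < 1$ and let $\log N \le L_\Phi \le [\log 6]^{-2} N$. Then for every $h > 0$ there is a constant $C$ such that using $L_X (L_\Phi + 1)$ function evaluations of $f$, the algorithm introduced in [3] defines a function $\hat f$ that, with probability

$$1 - \left( e^{-C L_\Phi} + e^{-\sqrt{L_\Phi N}} + 2 e^{-\frac{2 L_X s^2 \alpha^2}{C_2^4}} \right),$$

will satisfy

$$\|f - \hat f\|_\infty \le 2 C_2 \frac{\nu_1}{\sqrt{\alpha (1 - s)} - \nu_1},$$

where $\alpha$ is the expected value of $g'$ with respect to the uniform surface measure $\mu_{S^{d-1}}$ on the sphere, namely

$$\alpha = \int_{S^{d-1}} |g'(\langle a, \xi \rangle)|^2 \, d\mu_{S^{d-1}}(\xi),$$

which ensures a lower bound for $g'(\langle a, \cdot \rangle)$, and

$$\nu_1 = C' \left( \left[ \frac{L_\Phi}{\log(N / L_\Phi)} \right]^{1/2 - 1/q} + \frac{h}{\sqrt{L_\Phi}} \right).$$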

Note that h again plays the role of determining the accuracy

of approximating the directional derivative by divided differences and that the last mentioned approach can also be applied

to functions of type (1).

II. ALMOST RIDGE FUNCTIONS

The assumption that functions arising as solutions of real

world problems are precisely of the form (3) is very strong.

Therefore, it may be useful to consider functions that are only

close to usual ridge functions.

Hence, we introduce two possibilities to define almost ridge

functions and analyze how particular algorithms, which are

designed to capture usual ridge functions, apply to recover

almost ridge functions. In particular we analyze functions of

type (2) with M being close to some hyperplane.

Let us define almost ridge functions of type I. Note that for an $(N-1)$-dimensional submanifold $M$ the signed distance is given as

$$\operatorname{dist}_\pm(x, M) = \begin{cases} \operatorname{dist}(x, M), & \text{if } x \text{ lies on an outward normal ray of } M, \\ -\operatorname{dist}(x, M), & \text{if } x \text{ lies on an inward normal ray of } M, \end{cases}$$

and that we denote the orthogonal projection to an affine subspace $H$ of $\mathbb{R}^N$ by $P_H$.

Definition II.1. Let $g \in C^s(\mathbb{R})$ and let $M$ be an $(N-1)$-dimensional smooth, connected submanifold of $\mathbb{R}^N$, so that we can find an $(N-1)$-dimensional affine subspace $H$ of $\mathbb{R}^N$ and an $\varepsilon \ge 0$, such that $\operatorname{dist}(H, M) := \sup_{x \in M} \|P_H(x) - x\|_2 \le \varepsilon$ and that $P_H : M \to H$ is surjective. Then we call

$$f(x) := g(\operatorname{dist}_\pm(x, M))$$

an almost ridge function of type I and $\hat f = g(\operatorname{dist}_\pm(\cdot, H))$ a ridge estimator of the almost ridge function.

The algorithm by Cohen et al. [2] can be applied immediately, since a stability result for noisy measurements was proven there.

Theorem II.2 ([2]). Suppose that we receive the values of a ridge function $f$ only up to an accuracy $\varepsilon$. That is, when sampling the value of $f$ at any point $x$, we receive instead the value $\tilde f(x)$ satisfying $|f(x) - \tilde f(x)| \le \varepsilon$. Then if $f \in \mathcal{R}_+(s, q; M_0, M_1)$ and

$$\varepsilon \le \frac{M_0}{6}\, l^{-2S + \frac{2S + 1/2}{\bar s} + 3/2},$$

where $\bar s, S > 0$ and $\bar s \in \mathbb{N}$, such that $\bar s + 1 \le s \le S$, the output $\hat f$ of the algorithm satisfies

$$\|f - \hat f\|_\infty \le C_0 M_0 l^{-s} + C_1 M_1 \epsilon(N, l)^{1/q - 1},$$

for some constants $C_0$ depending on $\bar s$, $S$ and $C_1$ depending on $q$, with $\epsilon(N, l)$ as in Theorem I.2 and $l$ the number of function evaluations.

(In Theorem I.4, the positive constant $C'$ depends only on $C_1 \ge \|a\|_q$ and on $C_2 \ge \sup_{|\alpha| \le 2} \|D^\alpha g\|_\infty$.)

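Note that we indeed reach an approximation result for almost ridge functions of type I by letting the almost ridge function $f$ play the role of the noisy function $\tilde f$ and a corresponding ridge estimator $\hat f$ play the role of the ridge function in Theorem II.2. Then the algorithm gives an approximation, denoted here by $\hat{\hat f}$, which fulfills:

$$\|f - \hat{\hat f}\|_\infty \le \|f - \hat f\|_\infty + \|\hat f - \hat{\hat f}\|_\infty \le \varepsilon + C_0 M_0 l^{-s} + C_1 M_1 \epsilon(N, l)^{1/q - 1}.$$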

Also in [4] a stability result for the case of random noise

was proven. However, the difference between an almost ridge

function and a usual ridge function cannot be seen as random

noise. Instead we can prove the following result.

Theorem II.3. Let $f(x) = g(\operatorname{dist}_\pm(x, M))$ be an almost ridge function of type I and let $\hat f = g(\operatorname{dist}_\pm(\cdot, H)) \in \mathcal{R}_+(s)$ be a ridge estimator such that for $\epsilon(x) := \operatorname{dist}_\pm(x, M) - \operatorname{dist}_\pm(x, H)$ the bound $\|\epsilon'(x)\|_2 \le \eta$ holds. Then by the method introduced in [4] we can approximate the ridge vector $a$ of the ridge estimator $\hat f$ by $\hat a$, with an error bounded from above by:

$$\|a - \hat a\|_2 \le \frac{\sqrt{h \nu_1(\eta) + h |g'(0)| \nu_2(\eta) + |g'(0)| N \eta}}{\sqrt{|g'(0)|^2 \nu_3(\eta) - |g'(0)| h \nu_4(\eta) - h^2 \nu_5(\eta)}},$$

where $\nu_i(\eta)$, $i = 1, \dots, 5$, are some constants depending on and decreasing with $\eta$.

Note that the assumption on $\epsilon(\cdot)$ to be bounded by $\eta$ is very natural since it ensures that the normal vector to the manifold $M$ does not change too much and is therefore close to the normal vector $a$ of $H$, which we try to recover.

Another possibility to define almost ridge functions is to allow a varying ridge vector. We refer to these as almost ridge functions of type II.

Definition II.4. Let a(·) : RN → RN be a smooth function

which has norm one and is close to a constant vector, i.e. there

exists a vector a ∈ RN , kak2 = 1, with ka(x) − ak2 ≤ ε and

ka(x)k2 = 1 for all x. We then call a function of the form

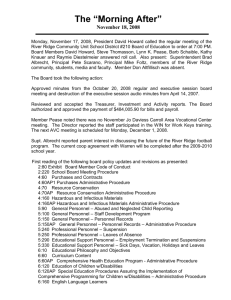

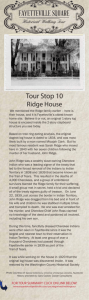

Fig. 1: Generalized ridge function of the form f (x) :=

g(dist(x, L)2 ), where L is some one-dimensional affine subspace of RN . The figure shows two different sets of constant

function values for the function f (x1 , x2 , x3 ) = x22 + x23 =

dist(x, L)2 , where L := span (1, 0, 0) (blue line).

III. G ENERALIZED R IDGE F UNCTIONS

The disadvantage of the method described before is that

the approximation error cannot fall below inf fˆ∈R(s) kf − fˆk,

where R(s) denotes the space of usual ridge functions. Thus,

it would be more convenient to approximate a function of the

form (2) by an estimator of the same form. Moreover, it would

be favorable to waive the assumption that the manifold M is

close to some hyperplane. We initially analyze generalized

ridge functions of the form

f (x) = g(dist(x, L)2 ),

(4)

where L is some d-dimensional affine subspace of RN . We

will exploit the fact that we can estimate the tangent plane in

some x0 ∈ RN of the (N − 1)-dimensional submanifold

x ∈ RN : dist(x, L) = dist(x0 , L)

as the unique hyperplane which is perpendicular to the gradient

of f in x0 and that the function f restricted to this tangent

plane is again of the form (4).

We propose the following algorithm:

f (x) = g(hx, a(x)i),

an almost ridge function of type II.

Algorithm

The algorithm of Cohen et al. does again immediately

apply to almost ridge functions of type II. For the algorithm

introduced in [4] we can prove the following result on the

approximation of almost ridge functions of type II.

Theorem II.5. Given an almost ridge function f by f (x) =

g(hx, a(x)i) with g ∈ C 2 ([0, 1]) and a := a(0) such that ka −

a(x)k2 ≤ min{ε, ε/kxk2 }. Then by the method introduced in

[4] we can approximate the ridge vector a(x) by â with an

error of at most

ka(x) − âk2 ≤ ε +

√

N

(1 + ε)ϑ + |g 0 (0)|ε

,

[|g 0 (0)| − c1 h(1 + ε)] (1 − ε)

where

1/2

ϑ = c1 h(1 + ε) + 2c1 h [1 + ε + (2c1 h(1 + ε|g 0 (0)|))]

for all x in RN and c1 the Lipschitz constant of g 0 .

Initialize: f˜N := f and T̃ N = RN .

Repeat: For i = N, . . . , d + 1:

1) For some arbitrarily chosen x̃i ∈ T˜i compute

"

#N

˜i (x̃i + hek ) − f˜i (x̃i )

f

i

∇h f˜ (x̃i ) =

.

h

k=1

2) Set ũi := ∇h f˜i (x̃i )/k∇h f˜i (x̃i )k2 .

⊥

3) Define T̃ i−1 = (span{ũi , . . . , ũN }) .

4) Let f˜i−1 be the restriction of f to T̃ i−1 .

Result: Set L̃⊥ = T̃ d .
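The steps of the algorithm can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not a reference implementation: the divided differences in step 1 are taken along an orthonormal basis `B` of the current space $\tilde T^i$ (instead of the canonical vectors $e_k$) so that all sample points stay inside $\tilde T^i$, the point $\tilde x_i$ is drawn at random, and the returned basis spans $\tilde T^d$, which serves as the estimate of $L$ (compare $L = T^d$ in Theorem III.2). The function name `recover_subspace` and the test profile are hypothetical.

```python
import numpy as np

def recover_subspace(f, N, d, h=1e-7, seed=0):
    """Return an orthonormal basis (N x d) of the estimated subspace.

    Sketch assumption: differences are taken along an orthonormal basis B
    of the current space, so step i uses i + 1 <= N + 1 samples of f.
    """
    rng = np.random.default_rng(seed)
    B = np.eye(N)                        # basis of the initial space R^N
    U = np.empty((N, 0))                 # collected directions u_N, ..., u_{d+1}
    for i in range(N, d, -1):
        x = B @ rng.standard_normal(i)   # arbitrary point in the current space
        fx = f(x)
        # step 1: divided differences along the basis of the current space
        coeffs = np.array([(f(x + h * B[:, k]) - fx) / h for k in range(i)])
        grad = B @ coeffs
        # step 2: normalize the approximate gradient
        u = grad / np.linalg.norm(grad)
        U = np.column_stack([U, u])
        # step 3: next space is the orthogonal complement of span(U)
        Q, _ = np.linalg.qr(U, mode="complete")
        B = Q[:, U.shape[1]:]
    return B                             # basis of the final d-dimensional space

if __name__ == "__main__":
    N, d = 8, 3
    rng = np.random.default_rng(1)
    V, _ = np.linalg.qr(rng.standard_normal((N, N)))
    P_L = V[:, :d] @ V[:, :d].T          # projection onto the true subspace L
    g = lambda t: t + 0.5 * np.sin(t)    # illustrative profile with g' in [0.5, 1.5]
    f = lambda x: g(np.sum((x - P_L @ x) ** 2))
    L_est = recover_subspace(f, N, d)
    print("operator-norm error:", np.linalg.norm(P_L - L_est @ L_est.T, 2))
```

Restricting $f$ to the current space (step 4) happens implicitly here, because every query point is built from the basis `B`.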

Using this algorithm to recover f , we can show the following approximation result.

Theorem III.1. Let $f$ be a generalized ridge function of the form (4). Assume that the derivative of $g \in C^s(\mathbb{R})$, $s \in (1, 2]$, is bounded by some positive constants $c_2$, $c_3$. By sampling the function $f$ at $(N - d)(N + 1)$ appropriate points we can construct an approximation of $L$ by a subspace $\tilde L \subset \mathbb{R}^N$, such that the error is bounded by

$$\|P_L - P_{\tilde L}\|_{op} \lesssim (1 + K)^d \sqrt{d}\, h,$$

for some arbitrarily small $h > 0$, where $K$ is some constant depending on the Hölder constant and bounds of $g'$.

It then only remains to recover the ridge profile $g$. We begin by showing that the described algorithm is well-defined if $L$ is a subspace of $\mathbb{R}^N$, i.e. contains the origin. Thus, our first aim is to show that the system formed by the gradients of the restrictions of $f$ indeed forms a basis for $L^\perp$. We denote the gradient of a function $f$ by $\nabla f$.

Theorem III.2. Let $f(x) = g(\operatorname{dist}(x, L)^2) = g(\|P_P x\|_2^2)$, where $P = L^\perp$. We compute the vectors $u_i$, $i = N, \dots, d + 1$, iteratively in the following way:

Initialize $f^N = f$ and $T^N = \mathbb{R}^N$. For $i = N, \dots, d + 1$ set

1) $u_i := \nabla f^i(x_i) / \|\nabla f^i(x_i)\|_{\ell_2^i}$ for some randomly chosen point $x_i \in T^i$,

2) $T^{i-1} := \operatorname{span}\{u_i, \dots, u_N\}^\perp$,

3) $f^{i-1} := f|_{T^{i-1}}$, the restriction of $f$ to $T^{i-1}$.

Then $L$ is given by $L = T^d$.

Proof: Assume we can write $L$ as $L = \operatorname{span}\{u_1, \dots, u_d\}$ where $\{u_1, \dots, u_d, u_{d+1}, \dots, u_N\}$ is an orthonormal basis of $\mathbb{R}^N$, then we set $V = [u_1 \dots u_d \; u_{d+1} \dots u_N]$. We begin by computing the gradient of $f$ and obtain

$$\nabla f(x) = 2 g'(\|P_P x\|_2^2) P_P x,$$

which is obviously perpendicular to $L$, since $P$ and $L$ are perpendicular.

Therefore, we can assume that $u_N = \nabla f(x_N) / \|\nabla f(x_N)\|_2$, since the choice of $V$ is not unique. Thus, we get the representation $T^{N-1} = \operatorname{span}\{u_1, \dots, u_{N-1}\}$. Now define $f^{N-1}$ to be the restriction of $f$ to $T^{N-1}$ and $h^{N-1} : \mathbb{R}^{N-1} \to \mathbb{R}$ by

$$h^{N-1}(\hat x) := f\left( V \begin{pmatrix} \hat x \\ 0 \end{pmatrix} \right) = f^{N-1}\left( V \begin{pmatrix} \hat x \\ 0 \end{pmatrix} \right).$$

Then $\nabla h^{N-1}(\hat x)^T = \nabla f(x)^T \hat V_{N-1}$, where $\hat V_i$ results by deleting the $(i+1)$-th up to the $N$-th column of $V$ and $x := V (\hat x, 0)^T \in T^{N-1}$. Thus, the gradient of $h^{N-1}$ considered as a vector in $\mathbb{R}^N$ is given by

$$\begin{pmatrix} \nabla h^{N-1}(\hat x) \\ 0 \end{pmatrix} = V_{N-1}^T \nabla f(x),$$

where $V_i$ results by substituting the $(i+1)$-th up to the $N$-th column of $V$ with the zero-vector. And since $h^{N-1}$ is the rotated version of $f^{N-1}$, the gradient of $f^{N-1}$ is the rotated version of the gradient of $h^{N-1}$, i.e.

$$\nabla f^{N-1}(x) = V V_{N-1}^T \nabla f(x) = 2 g'(\|P_P x\|_2^2) V V_{N-1}^T P_P x.$$

An easy computation shows that $V V_{N-1}^T P_P x$ is the projection of $P_P x$ to $T^{N-1}$. Thus, it is obvious that $\nabla f^{N-1}(x)$ is perpendicular to $L$. Furthermore, $\nabla f^{N-1}(x)$ is also orthogonal to $u_N$. Therefore, we set $u_{N-1} = \nabla f^{N-1}(x_{N-1}) / \|\nabla f^{N-1}(x_{N-1})\|_2$ for some $x_{N-1} \in T^{N-1}$ and $T^{N-2} := \operatorname{span}\{u_1, \dots, u_{N-2}\}$. We repeat this procedure until we get a basis $\{u_{d+1}, \dots, u_N\}$ of $P$, which then gives us the desired space $L = P^\perp$.
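The gradient formula $\nabla f(x) = 2 g'(\|P_P x\|_2^2) P_P x$ used in the proof, and the fact that this gradient has no component in $L$, can be checked numerically. The sketch below is only a sanity check under assumptions: the profile $g(t) = \log(1 + t)$, the dimensions and the random subspace are illustrative choices, and central differences are used as an independent reference.

```python
import numpy as np

rng = np.random.default_rng(0)
N, d = 6, 2

# Orthonormal basis of R^N; the first d columns span L, the rest span P.
V, _ = np.linalg.qr(rng.standard_normal((N, N)))
P_L = V[:, :d] @ V[:, :d].T            # orthogonal projection onto L
P_P = np.eye(N) - P_L                  # orthogonal projection onto P = L^perp

g = np.log1p                           # illustrative profile g(t) = log(1 + t)
dg = lambda t: 1.0 / (1.0 + t)         # its derivative

f = lambda x: g(np.sum((P_P @ x) ** 2))
grad_f = lambda x: 2.0 * dg(np.sum((P_P @ x) ** 2)) * (P_P @ x)

x = rng.standard_normal(N)
h = 1e-6
# central differences as an independent check of the closed-form gradient
num_grad = np.array([(f(x + h * e) - f(x - h * e)) / (2 * h) for e in np.eye(N)])

print("max deviation from formula:", np.max(np.abs(num_grad - grad_f(x))))
print("component of gradient in L:", np.linalg.norm(P_L @ grad_f(x)))
```

Both printed numbers should be close to zero, which reflects that the normalized gradient is a valid choice for $u_N$.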

The previous theorem shows that, if we could compute the gradient in $(N - d)$ points, we would be able to recover the space $L$, respectively its orthogonal complement $P$, exactly. However, we are only allowed to sample the function at a few points. Thus, we can only approximate the gradients by computing the divided differences:

$$\nabla_h f(x) = \left[ \frac{f(x + h e_i) - f(x)}{h} \right]_{i=1}^N.$$

The described procedure can of course not find the correct plane $P$; however, it is able to compute a good approximation of $P$, where the approximation error depends on the choice of $h$.
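How the error of the divided differences depends on $h$ can be seen in a small experiment. The sketch uses the function from Fig. 1 composed with a hypothetical profile $g = \sin$, so that the exact gradient is known in closed form; the evaluation point is an arbitrary choice.

```python
import numpy as np

def divided_difference_gradient(f, x, h):
    """Forward divided differences [(f(x + h e_i) - f(x)) / h]_{i=1}^N, using N + 1 samples."""
    fx = f(x)
    return np.array([(f(x + h * e) - fx) / h for e in np.eye(x.size)])

if __name__ == "__main__":
    # Illustrative test function (assumption): f(x) = g(x_2^2 + x_3^2) with g = sin
    f = lambda x: np.sin(x[1] ** 2 + x[2] ** 2)
    exact = lambda x: 2.0 * np.cos(x[1] ** 2 + x[2] ** 2) * np.array([0.0, x[1], x[2]])
    x = np.array([0.3, 0.5, -0.4])
    for h in (1e-1, 1e-2, 1e-3):
        err = np.linalg.norm(divided_difference_gradient(f, x, h) - exact(x))
        print(f"h = {h:g}: error = {err:.2e}")
```

For a smooth profile the error of forward differences shrinks roughly linearly with `h`, while the number of samples stays at $N + 1$.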

Proof of Theorem III.1: As mentioned above the idea is to approximate the gradients of $f = f^N$ and $f^i$ for $i = d + 1, \dots, N - 1$. Since we need $N + 1$ samples for each gradient approximation, we need $(N - d)(N + 1)$ samples altogether. We already know from Theorem III.2 that the subspace $P$ can be written in terms of the gradients of $f$ and its restrictions. Hence, we assume $P = L^\perp = \operatorname{span}\{u_{d+1}, \dots, u_N\}$, where the $u_i$'s are given as stated in Theorem III.2.

Lemma III.3. Under the assumptions of Theorem III.1 and with the choice of the $\tilde u_i$'s, $i = d + 1, \dots, N$, as proposed in the algorithm it holds:

$$\|\tilde u_N - u_N\|_2 \le \frac{2 \hat C \sqrt{d}\, h}{c_2} \le \frac{2 \hat C \sqrt{d}\, h}{C_d} =: S_0, \tag{5}$$

where $C_j := \min\{1, \min\{\prod_{i \in I} \|\nabla f^{N-i}(x_i)\|_2 : I \subset \{0, \dots, j\}\}\}$, $\hat C$ is some positive constant and $x_i = P_{T^i} \tilde x_i$.

We use the approximation of the gradient to approximate the tangent plane $T^{N-1}$ at $x$ with $\tilde T^{N-1} = \nabla_h f(x)^\perp$. The approximation error is then of course given by (5). Further we let $f^{N-1}$ and $\tilde f^{N-1}$ be the restriction of $f$ to $T^{N-1}$ and $\tilde T^{N-1}$, respectively.

In addition, we define the function

$$h^{N-1} = f\left( V \begin{pmatrix} x \\ 0 \end{pmatrix} \right)$$

and its approximation through

$$\tilde h^{N-1} := f\left( \tilde V \begin{pmatrix} x \\ 0 \end{pmatrix} \right)$$

for $x \in \mathbb{R}^{N-1}$, where $V$ and $\tilde V$ are unitary matrices mapping $\mathbb{R}^{N-1} \subset \mathbb{R}^N$ to $T^{N-1}$ and $\tilde T^{N-1}$, respectively. Again we want to compute step by step the column vectors $u_i$ of $V$, $i = N, \dots, d + 1$, as the normalized gradients of $f$, $f^i$. But instead of computing the gradient of $f^j$, respectively $h^j$, we can only approximate it through an approximation of the gradient of $\tilde f^j$, respectively $\tilde h^j$. Thus, we set step by step the columns $\tilde u_i$, $i = N, \dots, d + 1$, of $\tilde V$ by the normalized approximated gradients of $\tilde f^i$. The error of the approximation can then be estimated by:

Lemma III.4. With the same assumptions and choices as in Theorem III.1 and Lemma III.3 it holds

$$S_1 := \|\tilde u_{N-1} - u_{N-1}\|_2 \le S_0 + 2 [c_2 + \tilde C] S_0,$$

where $\tilde C := 2 \|g\|_{C^s} + c_3$.

We first have to prove the following lemma:

Lemma III.5. With the same assumptions and choices as before, let $x := P_{T^{N-1}} \tilde x$, where $\tilde x \in \tilde T^{N-1}$; then

$$\|P_P x - P_P \tilde x\|_2 \le \|u_N - \tilde u_N\|_2.$$

We can show that similar estimates as in Lemma III.4 hold for $\|u_i - \tilde u_i\|_2$, $i = d + 1, \dots, N - 2$. First it follows similarly to Lemma III.5:

Lemma III.6. With the same assumptions and choices as before it holds for $\tilde x_i \in \tilde T^i$ and $x_i = P_{T^i} \tilde x_i$ that:

$$\|P_P x_i - P_P \tilde x_i\|_2^2 \le \sum_{j=i+1}^N \|\tilde u_j - u_j\|_2^2.$$

This inequality in turn yields the estimate we wished for:

Lemma III.7. With the same assumptions and choices as before and with $K := 2 [\tilde C + 1 + c_2]$ it holds:

$$\|u_{N-i} - \tilde u_{N-i}\|_2 \le S_0 + K \sum_{j=0}^{i-1} S_j =: S_i,$$

and therefore $S_d \le (1 + K)^d S_0$. It further holds for every constant $K \ge 1$

$$\sum_{i=0}^{d-1} (1 + K)^i \le (1 + K)^d.$$

Putting the conclusions of the previous lemmas together finishes the proof of Theorem III.1.

The estimation of $g$ itself is again very simple. Computing the gradient gives the direction in which $f$ changes, and in this direction it becomes a one-dimensional function. Hence, we can estimate $g$ with well-known numerical methods. Indeed, we have already seen that the gradient of $f$ in some point $x$ is given by $\nabla f(x) = 2 g'(\|P_P x\|_2^2) P_P x$, i.e. the normalized direction is $a := P_P x / \|P_P x\|_2$. Setting $x_t := t a$ yields

$$f(x_t) = g(\|P_P x_t\|_2^2) = g\left( \frac{t^2}{\|P_P x\|_2^2} \|P_P x\|_2^2 \right) = g(t^2).$$

We only have to work further if we do not know in advance that $L$ is a subspace rather than an affine subspace. In this case, we can only approximate $g$ up to a translation with the method described before.

However, similar to the algorithm in [4], this algorithm uses a fixed number of samples and the estimate cannot be improved by taking more samples. We therefore aim for an algorithm which yields a true convergence result. For this purpose we again rewrite the distance of a point $x \in \mathbb{R}^N$ to a subspace $L$ as $\operatorname{dist}(x, L)^2 = \|P_{L^\perp} x\|_2^2$. A possibility is now to introduce an optimization problem over a Grassmannian manifold $G(N - d, N)$. To this end we set, for a randomly chosen direction $\eta \in S^{N-1}$, and all $H \in G(N - d, N)$ such that $\eta \notin H^\perp$:

$$g_H(\|P_H x_t\|_2^2) := g_{H,\eta}(\|P_H x_t\|_2^2) := f(x_t),$$

and we will minimize the objective function

$$G(N - d, N) \ni H \mapsto \hat F^l(H) = \sum_{i=1}^n \left( f(x_i) - \hat g_H^l(\|P_H x_i\|_2^2) \right)^2,$$

for some appropriately chosen points $x_1, \dots, x_n$ and $\hat g_H^l$ being the approximation of the one-dimensional function $g_H$, which can be computed by sampling $g_H$ at $l$ equally spaced points along the line given by $\eta$. Note that $\eta$ is almost surely not in $L$ and that in this case $g_L = g$ holds true. By choosing the $x_i$, $i = 1, \dots, n$, carefully we can ensure that $L$ is indeed the unique minimizer of the objective function

$$G(N - d, N) \ni H \mapsto F(H) = \sum_{i=1}^n \left( f(x_i) - g_H(\|P_H x_i\|_2^2) \right)^2.$$

Thus, we will give results on how many points $x_i$, $i = 1, \dots, n$, are needed and how to choose them. We will further show that $\hat F^l$ almost surely converges to $F$ for $l \to \infty$ and that therefore the solution $\tilde L$ of the minimization problem

$$\tilde L := \operatorname{argmin}_{H \in G(N-d,N)} \hat F^l(H)$$

gives a suitable approximation to $L$. Namely, we can show the following result:

Theorem III.8. Suppose that the derivative of $g$ is bounded from below by some positive constant and that $d = 1$ or $d = N - 1$. Let $\tilde L := \operatorname{argmin}_{H \in G(N-d,N)} \hat F_M(H)$, then

$$\|P_{\tilde L} - P_L\|_{op} \lesssim \sqrt{\varepsilon_l} = l^{-1/2}.$$
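The objective function $F(H)$ can be evaluated with point queries of $f$ alone. The sketch below is an illustration under assumptions: it takes $x_t = t\eta$, represents the candidate $H$ (a guess for $L^\perp$) by a matrix `Q_H` with orthonormal columns, and evaluates $g_H$ exactly through $f$ instead of using the $l$-point approximation $\hat g_H^l$. It does not perform the minimization over the Grassmannian; the names and the test profile are hypothetical.

```python
import numpy as np

def objective(f, Q_H, eta, points):
    """F(H) = sum_i (f(x_i) - g_H(||P_H x_i||^2))^2 for H = range(Q_H).

    g_H is evaluated through f along the line t * eta (assumption:
    x_t = t * eta), which requires eta not to lie in H^perp.
    """
    proj_eta = np.linalg.norm(Q_H.T @ eta)    # ||P_H eta||, nonzero by assumption
    total = 0.0
    for x in points:
        s = np.linalg.norm(Q_H.T @ x)         # ||P_H x||
        t = s / proj_eta                      # then ||P_H (t eta)|| = ||P_H x||
        total += (f(x) - f(t * eta)) ** 2
    return total

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    N, d = 5, 1
    V, _ = np.linalg.qr(rng.standard_normal((N, N)))
    Q_true = V[:, d:]                         # orthonormal basis of L^perp
    g = lambda t: t + 0.5 * np.sin(t)         # illustrative profile, g' >= 0.5
    f = lambda x: g(np.sum((Q_true.T @ x) ** 2))
    eta = rng.standard_normal(N)
    eta /= np.linalg.norm(eta)
    pts = rng.standard_normal((20, N))
    Q_rand, _ = np.linalg.qr(rng.standard_normal((N, N - d)))
    print("F at the true subspace:  ", objective(f, Q_true, eta, pts))
    print("F at a random subspace:  ", objective(f, Q_rand, eta, pts))
```

At the true subspace the objective vanishes up to rounding, while a generic candidate gives a strictly positive value, which is the property the minimization relies on.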

IV. OUTLOOK

The algorithm we have introduced to recover generalized

ridge functions of type (4) is based on the approximation of

the gradient of f at several points. We also aim to use gradient

approximations to capture generalized ridge functions of the

type (2). In particular we want to use the gradients to compute

samples from the manifold. We then aim to apply the methods

of [5] to estimate the manifold M .

REFERENCES

[1] R. DeVore, G. Petrova, and P. Wojtaszczyk, “Approximation of functions of few variables in high dimensions,” Constructive Approximation, vol. 33, no. 1, pp. 125–143, 2011.

[2] A. Cohen, I. Daubechies, R. DeVore, G. Kerkyacharian, and D. Picard, “Capturing ridge functions in high dimensions from point queries,” Constr. Approx., vol. 35, pp. 225–243, 2012.

[3] M. Fornasier, K. Schnass, and J. Vybiral, “Learning functions of few arbitrary linear parameters in high dimensions,” Found. Comput. Math., vol. 12, pp. 229–262, 2012.

[4] A. Kolleck and J. Vybiral, “On some aspects of approximation of ridge functions,” 2014. [Online]. Available: http://arxiv.org/pdf/1406.1747.pdf

[5] W. K. Allard, G. Chen, and M. Maggioni, “Multi-scale geometric methods for data sets II: Geometric multi-resolution analysis,” Applied and Computational Harmonic Analysis, vol. 32, no. 3, pp. 435–462, 2012.