An Empirical Trust Computation Method In Agent Based System

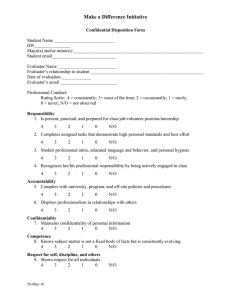


International Journal of Engineering Trends and Technology (IJETT), Volume 4, Issue 9, Sep 2013. ISSN: 2231-5381. http://www.ijettjournal.org

Preeti Nutipalli (1), Tammineni Ravi Kumar (2)
(1) M.Tech Scholar, (2) Sr. Assistant Professor
(1, 2) Dept. of Computer Science & Engineering, Aditya Institute of Technology and Management, Tekkali, Andhra Pradesh

Abstract: Security and privacy issues have become critically important with the fast expansion of multi-agent systems (MAS). Most network applications, such as grid computing and peer-to-peer (P2P) networks, can be viewed as MAS that are open, anonymous, and dynamic in nature. These characteristics introduce vulnerabilities and threats to secure communication when malicious agents appear. One feasible way to minimize the threats is to evaluate the trust and reputation of the interacting agents. Most trust computation models work on statistical measures (i.e. direct and indirect trust computations), which are not optimal for addressing this issue. To cope with the strategically changing behavior of malicious agents, we present in this paper an efficient trust computation method based on classification, which analyzes a node or user when it connects to the network.

Key Terms: Multi agent systems, trust management, trust and computation models

1 INTRODUCTION

A wide variety of networked computer systems (such as the Grid, the Semantic Web, and peer-to-peer systems) can be viewed as multi-agent systems (MAS) in which the individual components act in an autonomous and flexible manner in order to achieve their objectives [1]. An important class of these systems is those that are open, here defined as systems in which agents can freely join and leave at any time and where the agents are owned by various stakeholders with different aims and objectives. From these two features, it can be assumed that in open MAS: (1) the agents are likely to be unreliable and self-interested; (2) no agent can know everything about its environment; and (3) no central authority can control all the agents.

Despite these many uncertainties, a key component of such systems is the interactions that necessarily have to take place between the agents. Moreover, as the individuals only have incomplete knowledge about their environment and their peers, trust plays a central role in facilitating these interactions [1-4]. Specifically, trust is here defined as the subjective probability with which an agent a assesses that another agent b will perform a particular action, both before a can monitor such action and in a context in which it affects its own action (adapted from [4]).

Generally speaking, trust can arise from two views: the individual level and the society level. The former consists of agent a's direct experiences from interactions with agent b and the various relationships that may exist between them (e.g. being owned by the same organization, relationships derived from relationships between the agents' owners in real life such as friendship or kinship, or relationships between a service provider agent and its registered consumer agents). The latter consists of observations by the society of agent b's past behavior (here termed its reputation). These indirect observations are aggregated in some way to define agent b's past behavior based on the experiences of all the participants in the system [5][6][7].

[Figure: Canonical view of MAS. Labels: Agent, Interaction, Organizational Relationship, Sphere of Visibility & Interaction, Environment.]

Given its importance, a number of computational models of trust and reputation have been developed, but none of them are well suited to open MAS. Specifically, given the above characteristics, in order to work efficiently in open MAS, a trust model needs to possess the following properties:

1. It should take into account a variety of sources of trust information in order to have a more precise trust measure (by cross-correlating several perspectives) and to cope with the situation in which some of the sources may not be available.
2. Each agent should be able to evaluate trust for itself. Given the 'no central authority' nature of open MAS, agents will typically be unwilling to rely solely on a single centralized trust/reputation service.
3. It should be robust against possible lying from agents (since the agents are self-interested).

2 RELATED WORK

A wide variety of trust and reputation models have been developed in the last few years (e.g. [1], [4], [5], [7], [9], [10]). This section reviews a selection of notable models and shows how computational trust models have evolved in recent years, with a particular emphasis on their applicability in open MAS. First, it considers models that derive trust using certificates, rules, and policies. Second, it surveys the popular trust models that follow the centralized approach, in which witness observations are reported to a central authority. Finally, it covers notable models that follow the decentralized approach, in which no central authority is needed for trust evaluations. From now on, for convenience, we call the agent evaluating the trustworthiness of another the evaluator, or agent a, and the agent being evaluated by a the target agent, or agent b.

As can be seen in the previous section, trust can come from a number of information sources: direct experience, witness information, rules or policies. However, due to the openness of MAS, the level of knowledge of an agent about its environment and its peers may vary greatly during its life cycle. Therefore, at any given time, some information sources may not be available, or adequate, for deducing trust.
For example, the following situations may (independently) happen:

– An agent may never have interacted with a given target agent and, hence, its experience cannot be used to deduce how trustworthy the target agent is.
– An agent may not be able to locate a witness for the target agent (because of a lack of knowledge about the target agent's society) and, therefore, it cannot obtain witness information about that agent's behaviors.
– The current set of rules to determine the level of trust is not applicable to the target agent.

In such scenarios, trust models that use only one source of information will fail to provide a trust value for the target agent. For that reason, FIRE adopts a broader base of information than has hitherto been used for providing trust-related information. Although the number of sources that provide trust-related information can vary greatly from application to application, we consider that most of them can be categorized into four main sources, as follows:

– Direct experience: The evaluator uses its previous experiences in interacting with the target agent to determine its trustworthiness. This type of trust is called Interaction Trust.
– Witness information: Assuming that agents are willing to share their direct experiences, the evaluator can collect experiences of other agents that interacted with the target agent. Such information is used to derive the trustworthiness of the target agent based on the views of its witnesses. Hence this type of trust is called Witness Reputation.
– Role-based rules: Besides an agent's past behaviors (which are used in the two previous types of trust), there are certain other types of information that can be used to deduce trust. These can be the various relationships between the evaluator and the target agent, or the evaluator's knowledge about its domain (e.g. norms, or the legal system in effect). For example, an agent may be preset to trust any other agent that is owned, or certified, by its owner; it may trust that any authorized dealer will sell products complying with their company's standards; or it may trust another agent if it is a member of a trustworthy group. Such settings or beliefs (which are mostly domain-specific) can be captured by rules based on the roles of the evaluator and the target agent to assign a predetermined trustworthiness to the target agent. Hence this type of trust is called Role-based Trust.
– Third-party references provided by the target agents: In the previous cases, the evaluator needs to collect the required information itself. However, the target agent can also actively seek the trust of the evaluator by presenting arguments about its trustworthiness. In this paper, such arguments are references produced by the agents that have interacted with the target agent, certifying its behaviors. In contrast to witness information, which needs to be collected by the evaluator, the target agent stores such certified references and provides them on request to gain the trust of the evaluator. Those references can be obtained by the target agent (assuming the cooperation of its partners) from only a few interactions; thus, they are usually readily available. This type of trust is called Certified Reputation.

Apart from these traditional trust computation methods, we introduce an efficient and novel approach for calculating the trust of an agent or node in multi-agent systems.

3 PROPOSED WORK

Identifying unauthorized users in a network is still an important research issue for peer-to-peer connections. Networks are protected using firewalls and encryption software, but many of these measures are not sufficient or effective.
Most trust computation systems for mobile ad hoc networks focus on either routing protocols or their efficiency, but fail to address security issues. Some nodes may be selfish, for example by not forwarding packets to the destination in order to save battery power. Others may act maliciously by launching security attacks such as denial of service, or by stealing information. The ultimate goal of security solutions for wireless networks is to provide security services, such as authentication, confidentiality, integrity, anonymity, and availability, to mobile users.

This paper incorporates agents and data mining techniques to prevent anomaly intrusion in mobile ad hoc networks. Home agents present in each system collect data from their own system and use data mining techniques to observe local anomalies. Mobile agents monitor the neighboring nodes and collect information from neighboring home agents to determine the correlation among the observed anomalous patterns before the data is sent. This system was able to stop all of the successful attacks in an ad hoc network and to reduce false positives.

In our approach we propose an efficient classification-based method for analyzing anonymous users, which calculates the trust measures by comparing the anonymous testing data against the training data. Our architecture consists of the following modules: Analysis agent, Neighbouring node, Data collection, Data pre-process, and Classifier.

1) Analysis agent - The analysis agent, or home agent, is present in the system and monitors its own system continuously. If an attacker sends any packet to gather information or to broadcast through this system, the agent calls the classifier to detect the attack. If an attack has been made, it filters the respective system from the global network.

2) Neighbouring node - When a system in the network transfers information to another system, it broadcasts through intermediate systems. Before it transfers the message, it sends a mobile agent to the neighbouring node, which gathers all the information and returns to the system; the system then calls the classifier rule to detect attacks. If there is no suspicious activity, it forwards the message to the neighbouring node.

3) Data collection - A data collection module is included in each anomaly detection subsystem to collect the values of the features for the corresponding layer in the system. The normal profile is created using the data collected during the normal scenario. Attack data is collected during the attack scenario.

4) Data pre-process - The audit data is collected in a file and smoothed so that it can be used for anomaly detection. Data pre-processing prepares the information for use as training and test data. This pre-processing technique is used in the anomaly detection systems of all layers.

For the classification process we use a Bayesian classifier to analyze the neighbouring node's testing data against the training information. A Bayesian classifier is defined by a set C of classes and a set A of attributes. A generic class belonging to C is denoted by $c_j$ and a generic attribute belonging to A by $A_i$. Consider a database D in which each case consists of a set of attribute values and a class label. Training the naïve Bayesian classifier consists of estimating the conditional probability distribution of each attribute, given the class.

In our example we consider a synthetic dataset consisting of the node names of various anonymous and non-anonymous users, the type of protocol, the number of packets transmitted, and the class labels. This forms our feature set $(c_1, c_2, \ldots, c_n)$ for training the system. The system calculates the overall probability for the positive class and the negative class, then calculates the posterior probability with respect to all features, and finally calculates the trust probability.

[Figure 1]

Figure 1 represents the training information, which consists of the characteristics of the agents when they connect to the neighbouring node: the node name, the destination node to which it is connected, the number of packets transmitted to the node, and the type of protocol used to connect.

Algorithm to classify malicious agent

Sample space: set of agents
$H$ = hypothesis that $X$ is an agent
$P(H|X)$ is our confidence that $X$ is an agent
$P(H)$ is the prior probability of $H$, i.e., the probability that any given data sample is an agent regardless of its behavior
$P(H|X)$ is based on more information; $P(H)$ is independent of $X$

Estimating probabilities

$P(X)$, $P(H)$, and $P(X|H)$ may be estimated from the given data.

Bayes' theorem: $P(H|X) = \frac{P(X|H)\,P(H)}{P(X)}$

Steps Involved:

1. Each data sample is of the form $X = (x_1, \ldots, x_n)$, where $x_i$ is the value of $X$ for attribute $A_i$, $i = 1, \ldots, n$.
2. Suppose there are $m$ classes $C_i$, $i = 1, \ldots, m$. Then $X \in C_i$ iff $P(C_i|X) > P(C_j|X)$ for $1 \le j \le m$, $j \ne i$; i.e., the Bayesian classifier (BC) assigns $X$ to the class $C_i$ having the highest posterior probability conditioned on $X$. The class for which $P(C_i|X)$ is maximized is called the maximum posterior hypothesis. From Bayes' theorem, $P(C_i|X) = \frac{P(X|C_i)\,P(C_i)}{P(X)}$.
3. $P(X)$ is constant for all classes, so only $P(X|C_i)\,P(C_i)$ need be maximized. If the class prior probabilities are not known, assume all classes to be equally likely and maximize $P(X|C_i)$. Otherwise maximize $P(X|C_i)\,P(C_i)$ with $P(C_i) = S_i/S$, where $S_i$ is the number of training samples of class $C_i$ and $S$ is the total number of training samples. Problem: computing $P(X|C_i)$ directly is infeasible.
4. Naïve assumption: attribute independence, $P(X|C_i) = P(x_1, \ldots, x_n|C_i) = \prod_{k=1}^{n} P(x_k|C_i)$.
5. In order to classify an unknown sample $X$, evaluate $P(X|C_i)\,P(C_i)$ for each class $C_i$.
Sample $X$ is assigned to the class $C_i$ iff $P(X|C_i)\,P(C_i) > P(X|C_j)\,P(C_j)$ for $1 \le j \le m$, $j \ne i$.

4 EXPERIMENTAL ANALYSIS

Traditional trust computation relies on static measures: direct trust, indirect trust, recent trust, historical trust, and expected trust. All of these measures contribute to the trust score, but they may not lead to an optimal trust computation. In our approach we consider the characteristics of the agents: when an agent connects to another agent, the node analyzes it using the classifier, instead of gathering information from other sources and relying on them. The synthetic dataset is shown in Figure 1.

Comparing the traditional measures with our approach using the measures in Figure 2 shows agreement on the individual characteristics but not on the final trust measure, because in our approach the final trust measure is calculated by the classification mechanism, which analyzes both positive and negative detections.

[Figure 2]

5 CONCLUSION

To summarize, we have identified the questions to be addressed when trying to find a solution to the problem of trust assessment based on reputation management in multi-agent systems, and we have presented a simple yet robust method that shows that a solution to this problem is feasible. In this approach, data about the observed behavior of all other agents, obtained from all other agents, is made directly available for evaluating trustworthiness using the naïve Bayesian classification mechanism, which considers the feature sets or characteristics of the agent. This allows the expected outcome, and hence the risk involved in an interaction with an agent, to be computed directly, and makes the level of trust directly dependent on the expected utility of the interaction.
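The naïve Bayesian classification steps of Section 3 can be illustrated with a minimal Python sketch. It is an illustration under stated assumptions, not the implementation used in the paper: the feature values ("tcp"/"udp", "low"/"high" packet counts), the class names "trusted" and "malicious", the tiny TRAINING list, and the function names are all hypothetical, and Laplace smoothing (the alpha parameter) is added here only to avoid zero probabilities; the paper does not mention it. The "trust probability" is read here as the posterior probability of the "trusted" class, which is likewise an assumption.

```python
from collections import Counter, defaultdict

# Hypothetical training samples: ((protocol, packet_level), class_label).
TRAINING = [
    (("tcp", "low"), "trusted"),
    (("tcp", "low"), "trusted"),
    (("udp", "low"), "trusted"),
    (("udp", "high"), "malicious"),
    (("tcp", "high"), "malicious"),
    (("udp", "high"), "malicious"),
]


def train(samples):
    """Count class frequencies S_i and per-class attribute-value frequencies."""
    class_counts = Counter(label for _, label in samples)
    # value_counts[label][k][value] = samples of class `label` with x_k == value
    value_counts = defaultdict(lambda: defaultdict(Counter))
    values_seen = defaultdict(set)  # distinct values observed for attribute k
    for features, label in samples:
        for k, value in enumerate(features):
            value_counts[label][k][value] += 1
            values_seen[k].add(value)
    return class_counts, value_counts, values_seen


def posteriors(model, x, alpha=1.0):
    """Return P(C_i | X) for every class, under the attribute-independence assumption."""
    class_counts, value_counts, values_seen = model
    total = sum(class_counts.values())  # S
    scores = {}
    for label, s_i in class_counts.items():
        score = s_i / total  # prior P(C_i) = S_i / S
        for k, value in enumerate(x):
            # Smoothed estimate of P(x_k | C_i); alpha is an assumption of this sketch.
            numerator = value_counts[label][k][value] + alpha
            denominator = s_i + alpha * len(values_seen[k])
            score *= numerator / denominator
        scores[label] = score  # proportional to P(X | C_i) P(C_i)
    evidence = sum(scores.values())  # P(X), by the law of total probability
    return {label: score / evidence for label, score in scores.items()}


def classify(model, x):
    """Assign X to the class with the highest posterior (maximum posterior hypothesis)."""
    post = posteriors(model, x)
    return max(post, key=post.get), post


MODEL = train(TRAINING)
```

Calling classify(MODEL, ("udp", "high")) returns the predicted label together with the posterior of each class; under the assumption above, the entry for "trusted" plays the role of the trust probability of the connecting node.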
REFERENCES

[1] N.R. Jennings, "An Agent-Based Approach for Building Complex Software Systems," Comm. ACM, vol. 44, no. 4, pp. 35-41, 2001.
[2] R. Steinmetz and K. Wehrle, Peer-to-Peer Systems and Applications. Springer-Verlag, 2005.
[3] Gnutella, http://www.gnutella.com, 2000.
[4] Kazaa, http://www.kazaa.com, 2011.
[5] edonkey2000, http://www.emule-project.net, 2000.
[6] I. Foster, C. Kesselman, and S. Tuecke, "The Anatomy of the Grid: Enabling Scalable Virtual Organizations," Int'l J. High Performance Computing Applications, vol. 15, no. 3, pp. 200-222, 2001.
[7] T. Berners-Lee, J. Hendler, and O. Lassila, "The Semantic Web," Scientific Am., pp. 35-43, May 2001.
[8] D. Saha and A. Mukherjee, "Pervasive Computing: A Paradigm for the 21st Century," Computer, vol. 36, no. 3, pp. 25-31, Mar. 2003.
[9] S.D. Ramchurn, D. Huynh, and N.R. Jennings, "Trust in Multi-Agent Systems," The Knowledge Eng. Rev., vol. 19, no. 1, pp. 1-25, 2004.
[10] P. Dasgupta, "Trust as a Commodity," Trust: Making and Breaking Cooperative Relations, vol. 4, pp. 49-72, 2000.
[11] P. Resnick, K. Kuwabara, R. Zeckhauser, and E. Friedman, "Reputation Systems," Comm. ACM, vol. 43, no. 12, pp. 45-48, 2000.
[12] A.A. Selcuk, E. Uzun, and M.R. Pariente, "A Reputation-Based Trust Management System for P2P Networks," Proc. IEEE Int'l Symp. Cluster Computing and the Grid (CCGRID '04), pp. 251-258, 2004.
[13] M. Gupta, P. Judge, and M. Ammar, "A Reputation System for Peer-to-Peer Networks," Proc. 13th Int'l Workshop Network and Operating Systems Support for Digital Audio and Video (NOSSDAV '03), pp. 144-152, 2003.
[14] K. Aberer and Z. Despotovic, "Managing Trust in a Peer-2-Peer Information System," Proc. 10th Int'l Conf. Information and Knowledge Management (CIKM '01), pp. 310-317, 2001.
[15] L. Mui, M. Mohtashemi, and A. Halberstadt, "A Computational Model of Trust and Reputation for EBusinesses," Proc. 35th Ann. Hawaii Int'l Conf. System Sciences (HICSS '02), pp. 2431-2439, 2002.

BIOGRAPHIES:

Nutipalli Preeti completed her MCA at Maharaja Post Graduate College, Vizianagram, and is pursuing her M.Tech at Aditya Institute of Technology and Management, Tekkali. Her areas of interest are Mobile Computing and Distributed & Parallel Computing.

Mr. Tammineni Ravi Kumar received the B.Tech degree in CSIT and the M.Tech degree in CSE from the Department of Computer Science and Engineering at the Jawaharlal Nehru Technological University, Hyderabad (JNTUH). He is currently working as Sr. Asst. Prof. in the Department of Computer Science & Engineering at Aditya Institute of Technology and Management, Tekkali, Andhra Pradesh, India. He has 7 years of experience in teaching Computer Science and Engineering related subjects. His research interests include Software Engineering, Image Processing, and Distributed & Parallel Computing.