IRIS & Finger Print Recognition Using PCA for Multi Modal


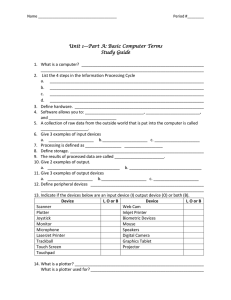

International Conference on Global Trends in Engineering, Technology and Management (ICGTETM-2016)

IRIS & Finger Print Recognition Using PCA for Multi Modal Biometric System

Piyush G. Kale, Khandelwal C.S.
M.E. (EC), Department of Electronics & Telecommunication, Jawaharlal Nehru Engineering College, Aurangabad, India

Abstract: A biometric system is one of the essential pattern recognition systems used for identifying individuals from different biometric traits. An authentication system designed around only one biometric modality may not satisfy the requirements of demanding applications in terms of properties such as accuracy, acceptability and performance. In this paper, we propose IRIS and fingerprint recognition based on PCA. The proposed multimodal recognition system fuses results from Principal Component Analysis, minutia extraction and weighted LBP feature extraction on different biometric traits. The proposed authentication system uses the IRIS and fingerprint of a person to recognise that person. We will use two different methods to compare performance and accuracy. The classifiers, viz. SVM and ANN, are used for matching. It is observed that the proposed algorithm has the best performance parameters compared with existing algorithms.

Key words: Biometrics, IRIS, Finger Print, PCA, Minutia, Weighted LBP.

I. INTRODUCTION

In recent years, authentication using biometric systems has improved considerably in reliability and accuracy. Many organisations use biometric systems for the authentication of individuals. Even so, many biometric systems still suffer from difficulties such as intra-class variability, limited degrees of freedom, noisy input data and data sets, spoof attacks and other related problems, which affect the performance, reliability and accuracy of a biometric authentication system [1]. A biometric system mainly performs four basic steps, i.e.
Acquisition, Feature Extraction, Matching and Decision Making. The limitations of a unimodal biometric system can be overcome by multimodal biometric systems. Biometric authentication technology recognises a person on the basis of unique characteristics that the person possesses. IRIS and fingerprint recognition are among the techniques used for biometric authentication.

ISSN: 2231-5381

In the design of this system we will use two biometric traits, i.e. IRIS and fingerprint. The IRIS and fingerprint images of a person will be used by the system to authenticate that person. We will also check the reliability and accuracy of the system, so the system can also be helpful for privacy.

II. MULTI MODAL BIOMETRIC

Multimodal biometric systems are those that use more than one physiological or behavioural characteristic for enrolment, verification and identification. Earlier overviews of multimodal biometrics have proposed various levels of fusion, various possible scenarios, the different modes of operation, integration methods and design issues. Some of the limitations imposed by unimodal biometric systems can be overcome by using multiple biometric modalities. Such systems, known as multimodal biometric systems, are expected to be more secure due to the presence of multiple independent pieces of evidence [1]. These systems are also able to meet the stringent performance requirements imposed by various applications. Multimodal biometric systems address the problem of non-universality, since multiple traits ensure sufficient population coverage. Further, multimodal biometric systems provide anti-spoofing measures by making it difficult for an intruder to simultaneously spoof the multiple biometric traits of a legitimate user [3]. By asking the user to present a random subset of biometric traits, the system ensures that a “live” user is indeed present at the point of data acquisition.
Thus, a challenge-response type of authentication can be facilitated using multimodal biometric systems. In applications such as border entry and exit, access control, civil identification and network surveillance, multimodal biometric systems are looked to as a means of reducing false non-match and false match rates, providing a secondary means of enrolment, verification and recognition if sufficient data cannot be acquired from a given biometric sample, and combating attempts to fool biometric systems through fraudulent data sources such as fake fingers [4].

http://www.ijettjournal.org

III. PRINCIPAL COMPONENT ANALYSIS

Principal Component Analysis (PCA) is a general technique that uses underlying mathematical principles to transform a number of possibly correlated variables into a smaller number of variables called principal components [1]. The origins of PCA lie in multivariate data analysis; however, it has a wide range of applications. PCA has been called one of the most important results from applied linear algebra, and perhaps its most common use is as the first step in trying to analyse large data sets. Other common applications include de-noising signals, blind source separation and data compression.

PCA uses a vector space transform to reduce the dimensionality of large data sets. Using mathematical projection, the original data set, which may have involved many variables, can often be interpreted in just a few variables (the principal components) [2]. It is therefore often the case that an examination of the reduced-dimension data set will allow the user to spot trends, patterns and outliers in the data far more easily than would have been possible without performing the principal component analysis [3].
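The dimensionality reduction described above can be illustrated with a minimal sketch. Everything specific in it is an assumption made for illustration and is not taken from the paper: the toy two-variable data, the function names, and the use of power iteration to find the leading eigenvector of the covariance matrix. A practical implementation would normally call an eigenvalue or singular value decomposition routine from a numerical library.

```python
import math

def mean_center(rows):
    """Subtract the per-column mean from every row."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[r[j] - means[j] for j in range(d)] for r in rows]

def covariance(centered):
    """Sample covariance matrix of mean-centred rows."""
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]

def first_component(cov, iters=200):
    """Leading unit-length eigenvector of cov, found by power iteration."""
    d = len(cov)
    v = [1.0] * d
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

# Hypothetical toy data: two strongly correlated variables per sample.
data = [[2.0, 1.9], [4.0, 4.1], [6.0, 6.2], [8.0, 7.8], [10.0, 10.0]]
centered = mean_center(data)
pc1 = first_component(covariance(centered))

# Component scores: each 2-D sample is reduced to a single number.
scores = [sum(r[j] * pc1[j] for j in range(len(pc1))) for r in centered]
print(pc1, scores)
```

Because the two variables move together, the first principal component points roughly along the diagonal, and one score per sample retains almost all of the variance of the original pair.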
PCA is mostly used as a tool in exploratory data analysis and for making predictive models. It can be done by eigenvalue decomposition of a data covariance (or correlation) matrix or by singular value decomposition of a data matrix, usually after mean centring (and normalising or using Z-scores) the data matrix for each attribute. The results of a PCA are usually discussed in terms of component scores, sometimes called factor scores (the transformed variable values corresponding to a particular data point), and loadings (the weight by which each standardised original variable should be multiplied to get the component score) [4].

PCA is the simplest of the true eigenvector-based multivariate analyses. Often its operation can be thought of as revealing the internal structure of the data in a way that best explains the variance in the data.

GOAL OF PCA

a. Extract the most important data from the database or data table
b. Modify and compress the size of the data set by keeping only the important data
c. Analyse and simplify the description of the data set
d. Observe and signify the structure of the data variables present

SUPPORT VECTOR MACHINE (SVM)

In empirical data modelling, a process of induction is used to build up a model of the system, from which it is hoped to deduce responses of the system that have yet to be observed. Ultimately the quantity and quality of the observations govern the performance of this empirical model. Being observational in nature, the data obtained is finite and sampled; typically this sampling is non-uniform and, due to the high-dimensional nature of the problem, the data will form only a sparse distribution in the input space. Consequently the problem is typically ill-posed in the sense of Hadamard. Traditional neural network approaches have suffered difficulties with generalisation, producing models that can overfit the data.
This is a consequence of the optimisation algorithms used for parameter selection and the statistical measures used to select the best model. The foundations of Support Vector Machines (SVM) were developed by Vapnik in 1995 at AT&T Bell Laboratories, and SVMs are gaining popularity due to many attractive features and promising empirical performance. The formulation embodies the Structural Risk Minimization (SRM) principle, which has been shown to be superior to the traditional Empirical Risk Minimization (ERM) principle employed by conventional neural networks. SRM minimises an upper bound on the expected risk, as opposed to ERM, which minimises the error on the training data. It is this difference that equips SVM with a greater ability to generalise, which is the goal in statistical learning [1].

SVMs were developed to solve the classification problem, but they have since been extended to the domain of regression problems. The term SVM is typically used to describe classification with support vector methods, and support vector regression is used to describe regression with support vector methods. In this paper the term SVM will refer to both classification and regression methods, and the terms Support Vector Classification (SVC) and Support Vector Regression (SVR) will be used for specification. This part continues with an introduction to the structural risk minimization principle [1]. The SVM is introduced in the setting of classification, this being both historical and more accessible. This leads on to mapping the input into a higher-dimensional feature space by a suitable choice of kernel function. The discussion then turns to the problem of regression. SVMs have been applied successfully in bioinformatics and in text and image recognition.
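As a rough illustration of support vector classification, the sketch below trains a linear soft-margin classifier by sub-gradient descent on the regularised hinge loss. It is a simplified stand-in under stated assumptions, not the method used in this paper: it has no kernel mapping, and the feature vectors, labels, function names and hyperparameters (`lam`, `lr`, `epochs`) are all hypothetical. The penalty on the squared norm of `w` is what favours a wide margin, in contrast to minimising the training error alone.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=200):
    """Minimise lam/2 * ||w||^2 + mean hinge loss by sub-gradient descent."""
    n, d = len(points), len(points[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regulariser
        grad_b = 0.0
        for x, y in zip(points, labels):
            margin = y * (sum(wj * xj for wj, xj in zip(w, x)) + b)
            if margin < 1:  # sample is inside the margin or misclassified
                for j in range(d):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    """Return +1 or -1 depending on the side of the separating hyperplane."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1

# Hypothetical 2-D feature vectors: +1 = genuine user, -1 = impostor.
points = [[2.0, 2.5], [3.0, 3.0], [2.5, 3.5],
          [-2.0, -1.5], [-3.0, -2.5], [-2.5, -3.0]]
labels = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(points, labels)
print([predict(w, b, x) for x in points])
```

A kernel SVM would replace the inner products in this sketch with kernel evaluations, which is the higher-dimensional feature-space mapping mentioned in the text.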
ARTIFICIAL NEURAL NETWORK (ANN)

Artificial Neural Networks (ANNs) are non-linear mapping structures based on the function of the human brain. They are powerful tools for modelling, especially when the underlying data relationship is unknown. ANNs can identify and learn correlated patterns between input data sets and corresponding target values. After training, ANNs can be used to predict the outcome of new independent input data. ANNs imitate the learning process of the human brain and can process problems involving non-linear and complex data even if the data are imprecise and noisy. Thus they are naturally suited to the modelling of agricultural data, which are known to be complex and often non-linear. ANNs have great capacity in predictive modelling, i.e. all the characters describing the unknown situation can be presented to the trained ANN, and a prediction for agricultural systems can then be made.

An ANN is a computational structure inspired by observed processes in natural networks of biological neurons in the brain. It consists of simple computational units called neurons, which are highly interconnected. ANNs have become the focus of much attention, largely because of their wide range of applicability and the ease with which they can treat complicated problems. ANNs are parallel computational models comprised of densely interconnected adaptive processing units. These networks are fine-grained parallel implementations of non-linear static or dynamic systems. A very important feature of these networks is their adaptive nature, where “learning by example” replaces “programming” in solving problems. This feature makes such computational models very appealing in application domains where one has little or incomplete understanding of the problem to be solved but where training data is readily available.
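The idea of “learning by example” can be shown with the smallest possible case: a single sigmoid neuron that is never told the rule it should compute and only sees input and target pairs. This is an illustrative sketch only; the logical OR examples, the learning rate, the epoch count and the function names are assumptions, and a real network would contain many such neurons arranged in layers.

```python
import math

def sigmoid(z):
    """Logistic activation function."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(samples, targets, lr=0.5, epochs=2000):
    """Fit one sigmoid neuron by per-sample gradient descent on cross-entropy."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, t in zip(samples, targets):
            out = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            err = out - t  # gradient of the loss w.r.t. the pre-activation
            w = [w[0] - lr * err * x[0], w[1] - lr * err * x[1]]
            b -= lr * err
    return w, b

# Hypothetical training examples: the neuron should learn logical OR.
samples = [[0, 0], [0, 1], [1, 0], [1, 1]]
targets = [0, 1, 1, 1]

w, b = train_neuron(samples, targets)
outputs = [round(sigmoid(w[0] * x[0] + w[1] * x[1] + b)) for x in samples]
print(outputs)
```

The weights start at zero and are adjusted only from the error on each example, which is the adaptive behaviour the text describes.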
ANNs are now being increasingly recognised in the area of classification and prediction, where regression models and other related statistical techniques have traditionally been employed. The most widely used learning algorithm in an ANN is the backpropagation algorithm. There are various types of ANNs, such as Multilayer Perceptron, Radial Basis Function and Kohonen networks. These networks are “neural” in the sense that they may have been inspired by neuroscience, but not necessarily because they are faithful models of biological neural or cognitive phenomena. In fact, the majority of these networks are more closely related to traditional mathematical and statistical models, such as non-parametric pattern classifiers, clustering algorithms, non-linear filters and statistical regression models, than they are to neurobiological models.

ANNs have been used for a wide variety of applications where statistical methods are conventionally employed. Problems that were normally solved through classical statistical methods, such as discriminant analysis, logistic regression, Bayes analysis, multiple regression and ARIMA time-series models, are being tackled by ANNs. It is therefore time to recognise ANN as a powerful tool for data analysis.

IV. METHODOLOGY

In this approach to IRIS and fingerprint recognition using Principal Component Analysis, various classifiers have already been specified, but they achieve low accuracy when matching the available data set against a real image. This is the challenging part of designing this application [1]. Our solution is as follows:

a. First, collect the databases of IRIS and fingerprint images of individuals separately.
b. Take a real snap (live capture) of the IRIS and finger of an individual.
c. Using the algorithms and their associated classifiers, compare both snaps at the extraction point with the different classifiers.
d. Formulate the result at the extraction point of the snap, and then check the acceptance ratio and rejection ratio separately.
e. From the point of exemption, analyse the accuracy level of the snap.

V. PROPOSED IMPLEMENTATION

Hardware platform: We will use the Futronics FS 80 biometric fingerprint module and an IRIS scanner module to take the real snaps of the finger and IRIS templates respectively. The Futronics FS 80 module is an electronic device used to capture a digital image of the fingerprint pattern. It offers good compatibility and excellent communication reliability, with power supply, in a wide range of secure applications.

The IRIS scanner module recognises a person's identity by mathematical analysis of the random patterns that are visible within the iris of an eye from some distance. It combines computer vision, pattern recognition, statistical inference and optics. It is generally conceded that IRIS recognition is the most accurate. Iris cameras, in general, take a digital photo of the iris pattern and create an encrypted digital template of that pattern. That encrypted template cannot be reproduced as any sort of visual image. IRIS recognition therefore affords the highest level of defence against identity theft, the most rapidly growing crime. Today's commercial IRIS scanners use infrared light to illuminate the iris without causing harm or discomfort to the eye.

Along with both of these hardware modules, we will use MATLAB version 8 as the software for implementing the system. In the implementation, we will take the snaps of the IRIS and fingerprint, and each will then pass through its own separate processing. After processing, the data will be sent through the PCA algorithm along with the classifier. Feature extraction and fusion matching of the real snap against the enrolled data set will then take place. At the end we will get the recognised result from the system [1].

Fig. 1 Flow Chart for proposed implementation

VI. CONCLUSION

This is the first implementation of its kind of IRIS and fingerprint recognition using PCA for a multimodal biometric system with this specialised hardware and software, but such an implementation is very costly [1]. The same system can be implemented at low cost by using a live capture of the fingerprint with its module together with an existing data set of IRIS snaps, without the IRIS module. The IRIS module is too costly; the low-cost approach is possible if we do not require real IRIS data.

ACKNOWLEDGMENT

We are sincerely thankful to our institute, Jawaharlal Nehru Engineering College, for providing us with world-class infrastructure for developing the project. We express our sincere thanks to our respected Principal Dr. Sudhir D. Deshmukh, Head of Department Prof. J.G. Rana, Prof. Khandelwal C.S. and Prof. B.A. Patil for permitting us to present this paper, and to all staff members and our colleagues for their continuous encouragement, suggestions and support during the tenure of the paper and project. We also acknowledge the literature which helped us directly or indirectly in the preparation of this paper and project.

REFERENCES

[1] Dinkardas C.N., S. Perumal Sankar, Nisha George, “A Multimodal Performance Evaluation on Two Different Models Based on Face, Fingerprint and Iris Templates,” IEEE, 2013.
[2] Qazi Emadul Haq, Muhammad Younus Javed, Qazi Sami ul Haq, “Efficient and Robust Approach of Iris Recognition through Fisher Linear Discriminant Analysis Method and Principal Component Analysis Method,” IEEE, 2008.
[3] Hariprasath S., Prabakar T.N., “Multimodal Biometric Recognition Using Iris Feature Extraction and Palmprint Features,” IEEE, 2012.
[4] S. Anu H Nair, P. Aruna, M. Vadivukarassi, “PCA Based Image Fusion and Biometric Features,” IJACTE, ISSN (Print): 2319–2526, Volume-1, Issue-2, 2013.