Recursive Formulation with Learning Suehyun Kwon April 24, 2014


Recursive Formulation with Learning

Suehyun Kwon∗

April 24, 2014

Abstract

This paper provides an algorithm to find the optimal contract with learning. There

is a payoff-relevant state, and the principal and the agent start with a common prior.

Each period, the agent takes an unobservable action, and the distribution of outcome

is determined by the agent’s action and the state. Because of learning, the principal

and the agent have asymmetric information off the equilibrium path, and the usual

algorithms don’t apply. The proposed algorithm decomposes the continuation values

by each state, and the one-shot deviation principle is shown. The algorithm allows for

any risk preference of the agent and different commitment powers of the principal.

1 Introduction

Consider the worker’s ability in Harris and Holmström (1982) or Holmström (1982). This

paper presents a model of dynamic moral hazard in which the principal and the agent learn

about the agent’s type over time. An algorithm to find the optimal contract in a general

environment is provided. The main difference from the two papers cited above is that the

outside option of the agent is assumed to be exogenous. The focus of the paper is how

learning affects the agent’s incentives within the relationship and how one can characterize

the optimal contract with an algorithm.

When the principal hires an agent, the agent’s ability may be unknown to both the

principal and the agent. Or if the quality of matching between the principal and the agent

is unknown at the onset of the relationship, the principal and the agent learn about the

match quality over time. In innovation or experimentation, neither the principal nor the

agent knows the quality of the project, and they have to learn over time. Learning brings

a few challenges into the characterization of the optimal contract. The first challenge is

∗

Kwon: University College London, suehyun.kwon@ucl.ac.uk, Department of Economics, University College London, Gower Street, London, WC1E 6BT United Kingdom. I thank Dilip Abreu, V. Bhaskar,

Patrick Bolton, Antonio Cabrales, Pierre-André Chiappori, Martin Cripps, Glenn Ellison, Jihong Lee, George

Mailath and participants at UCL Economics Research Day for helpful comments.


to represent the continuation values of the principal and the agent. When the principal

offers history-contingent payments, the agent evaluates it with his prior beliefs at every

information set. If the states are partially or fully persistent, the agent updates his belief

after each period, given his action and the realization of the outcome. In order to evaluate

the agent’s payoff after some history, one needs to know the prior after the history, the

equilibrium strategies and the payment for each history.

The problem is further complicated when one considers the IC constraint for the one-shot

deviation. When the agent deviates, the agent updates his posterior belief with the action

he actually took. However, the principal believes that the agent took a different action and

uses a different probability distribution to update his posterior belief. The principal and the

agent have different prior beliefs in all periods after the agent deviates unless they learn the

state perfectly after certain outcomes. In the IC constraint for the one-shot deviation, even

if the agent deviates only once, now the agent evaluates his deviation payoff with a different

prior from the principal’s prior. When the principal and the agent play the equilibrium

strategies from the following period on, the payments for each history are the same as

when the agent has never deviated, but because the agent has a different prior belief, the

agent’s continuation value is different from the on-the-equilibrium-path continuation value.

To find the optimal contract recursively, one needs to express the agent’s payoffs on and off

the equilibrium path.

The second challenge is to show the one-shot deviation principle. Given a history and

a prior, the agent has infinitely many deviations he can make, and there are infinitely many

IC constraints. In addition, if the agent has deviated previously, then the principal and the

agent have different prior beliefs, and in order to account for all deviations, the principal

also has to consider the potential prior beliefs the agent may have. I show that it is sufficient

to consider IC constraints for one-shot deviations when the agent has never deviated before.

If the agent has never deviated before, the principal and the agent share the same prior,

and there is only one IC constraint for the one-shot deviation.

The algorithm I propose in this paper circumvents these issues by decomposing the

continuation values in each state. Instead of keeping track of the agent’s prior belief at every

information set, I note that the state transition is exogenous, and once the principal and the

agent are in a particular state, the transition probabilities and the probability distribution

of the outcome for each action are independent of the initial prior. The continuation value

when the principal and the agent follow the equilibrium strategies and they are in state i

in the current period is given by some number, and the continuation value of the agent can

be written as a linear combination of those values, with the agent’s prior as weights.

Once we decompose the continuation values in each state, the one-shot deviation principle follows from the supermodularity of the optimal contract. If the agent’s continuation


value under the optimal contract is supermodular in his effort and the state, then it is sufficient to consider IC constraints for one-shot deviations when the agent has never deviated

before. I assume that the agent can only deviate to a less costly action, and the agent’s

posterior after a deviation dominates the principal’s posterior in the sense of first-order

stochastic dominance.

Decomposition of continuation values and the one-shot deviation principle allow one

to find the optimal contract with one IC constraint at a time. In addition to the one-shot deviation principle in the usual sense, the principal doesn’t need to worry about the

potential priors the agent may have if he has deviated previously. If the environment is

finite, i.e., the set of states is finite and the set of outcomes is finite, then there are a

finite number of state variables, and one can find the largest self-generating set subject to

constraints. With a continuum of states and outcomes, one can discretize the state space.

The algorithm can be applied for a general outcome structure. There are a few recent

papers on dynamic moral hazard and learning. See Bhaskar (2012), DeMarzo and Sannikov

(2011), He, Wei and Yu (2013), Jovanovic and Prat (2013) and Kwon (2013). For innovation

and experimentation, see Bergemann and Hege (1998, 2005) and Hörner and Samuelson

(2013). DeMarzo and Sannikov (2011), He, Wei and Yu (2013) and Jovanovic and Prat

(2013) share a similar outcome structure in which the outcome is the sum of the agent’s

type and the effort with noise. In Bergemann and Hege (1998, 2005), Hörner and Samuelson

(2013) or Kwon (2013), there is a positive probability of a good outcome only if the agent

works and the project (state) is good. I assume the supermodularity of the optimal contract,

but even in a binary environment, the outcome structure allows for a positive probability of

a good outcome when the agent shirks if the state is good. This implies that the principal

can learn the state without leaving any rent by letting the agent shirk. If there is no

probability of a good outcome when the agent shirks, the literature finds it optimal to

frontload the incentives early on and learn the state. This property has to change when

there is a chance of learning with shirking. The principal loses output because the outside

option is inefficient, but he doesn’t have to leave any rent to the agent, and it can be optimal

to let the agent shirk early on in the relationship until they learn the state sufficiently.

DeMarzo and Sannikov (2011) and He, Wei and Yu (2013) assume that the state follows

a Brownian motion, and other papers cited above assume that the state is fully persistent.

When the state is fully persistent, the principal’s problem is a stopping-time problem. The

algorithm I propose can allow for both partially persistent states and fully persistent states.

Even if the state evolves over time, the algorithm can be applied to find the optimal contract.

Another advantage of my algorithm is that it allows for both risk-neutrality and risk-aversion of the agent. If the agent is risk-averse, he may have any concave and increasing utility function. All of the above papers assume either a risk-neutral agent or CARA preferences.


I don’t show qualitative properties of the optimal contract in this paper, but my algorithm

can provide the optimal contract for wider risk preferences. I can also allow for different

commitment powers of the principal. The algorithm can accommodate both within-period

commitment power and no-commitment power.

There is a large literature on models with learning starting with Harris and Holmström

(1982) and Holmström (1982). Harris and Holmström (1982) doesn’t have moral hazard

while the agent in Holmström (1982) decides how much effort to exert. Cabrales, Calvó-Armengol and Pavoni (2007) is a more recent paper on the topic. The main difference from

this literature is that I assume an exogenous outside option of the agent, and there is no

competitive market. Without the competitive market, the agent doesn’t benefit from the

market expectation through the outside option, and the agent’s IC constraint focuses on

the potential information asymmetry between the principal and the agent.

Lastly, there are papers with private Markovian types. Fernandes and Phelan (2000)

provides a recursive formulation with a first-order Markov chain with two types, and Zhang

(2009) solves for optimal contracts in continuous time. Recent papers include Farhi and

Werning (2013), Golosov et al. (2011) and Williams (2011).

The rest of the paper is organized as follows. Section 2 describes the model, and I set

up the recursive formulation in Section 3. Section 4 discusses extensions, and Section 5

concludes.

2 Model

The principal hires an agent over an infinite horizon, t = 0, 1, 2, · · · . At the beginning of

each period, the principal offers a contract to the agent, and the agent decides whether to

accept. If the agent accepts, he takes an action from a finite set, a ∈ A = {a1 , · · · , an }, and

the outcome is realized. The distribution of the outcome is determined by the underlying

state ω ∈ Ω = [0, 1] and the agent’s action. The outcome y is in Y = [0, 1], and the

distribution of the outcome is denoted by F (·|a, ω) with pdf f (·|a, ω). The agent’s action

is unobservable to the principal, which leads to moral hazard, and the underlying state is

unobservable to both the principal and the agent; the principal only observes the outcome.

The principal makes a payment, and they move on to the next period. If the agent rejects,

the principal and the agent each get their outside options in this period, v̄ and ū, and

continue.

At the beginning of period 0, the principal and the agent start with a common prior

π0.

The states follow a first-order Markov chain, and the probability of transition from

state ω to ω′ is denoted by m(ω′|ω). For the moment, I’m not assuming anything about

the transition matrix, but I will impose a condition in Assumption 3. Action ai costs ci to


the agent, and I assume 0 ≤ c1 < c2 < · · · < cn . I assume the principal has within-period

commitment power, and the agent has limited liability.1 The agent cannot save, and his

consumption in each period is bounded from below by 0. The principal is risk-neutral, but

the agent has an increasing utility function u(·) with u′ > 0, u(0) = 0. I allow for both

risk-aversion and risk-neutrality. The equilibrium concept is perfect Bayesian equilibrium.

I make three assumptions. The first assumption is that the agent becomes more optimistic than the principal if he takes a less costly action.

Assumption 1. Let π̂(π, ai , y) be the posterior of the agent when the prior is π, the agent

takes action ai and gets outcome y. π̂(π, ai , y) dominates π̂(π, aj , y) in the sense of first-order stochastic dominance for all j > i.

If the principal believes that the agent took action aj , his posterior belief after getting

outcome y is π̂(π, aj , y). If the agent deviated to ai such that i < j, then the agent’s

posterior belief after the deviation is π̂(π, ai , y), and the agent’s posterior dominates the

principal’s posterior in the sense of first-order stochastic dominance.

The next assumption is that the agent can only deviate to a less costly action.

Assumption 2. Suppose the principal wants the agent to take action ai . The agent can

only deviate to aj such that j < i.

I’m considering an environment where the agent needs access to a particular technology

to produce. In some environments, the agent has to be on site to do his work, and sometimes,

researchers can access the data only from a designated location or a machine. If the agent

needs access to a technology, then the principal can limit the amount of time the agent

has access, and the agent can deviate downwards, but he can’t work more than what the

principal allows him to.

The last assumption is that the agent’s payoff under the optimal contract is supermodular in his effort and the state. This is an assumption on endogenous objects, but it is

automatically satisfied in the following environment. Suppose there are two states (“good”

and “bad”), two actions (“work” and “shirk”) and two outcomes (“good” and “bad”). If

the agent works in the good state, he gets a good outcome with some probability pH > 0. If

the agent shirks in the good state, he gets a good outcome with some probability pL < pH .

If the state is bad, then the outcome is bad. Assumption 3 is always satisfied for any pL , pH ,

and this environment embeds other models on innovation and experimentation.2

1 I consider full-commitment power and no-commitment power in Section 4.1.

2 See for example Hörner and Samuelson (2013). Their model is in continuous time, but the agent can produce a good outcome with a positive probability only if he works and the project is good. I can also allow the state to evolve over time.

Assumption 3. Under the optimal contract, the agent’s payoff is supermodular in the

agent’s effort and the state:

$$\int \bigl(u(w_y) + \delta V_{y\omega'}\, m(\omega'|\omega)\bigr)\, dF(y|a,\omega)\, d\omega'$$

is supermodular in a and ω, where $V_{y\omega'}$ is the agent’s payoff on the equilibrium path after outcome y if the state in the following period is ω′ and $w_y$ is the payment for outcome y in

the current period.

A history $h_P^t = (d^1, y^1, w^1, d^2, \cdots)$ is the history from the principal’s perspective, where $d^t$ is the agent’s decision to accept or reject the offer. A history $h_A^t = (d^1, a^1, y^1, w^1, d^2, \cdots)$ is the history from the agent’s perspective. The sets of histories for the principal and the agent are denoted by $H_P = \cup H_P^t$ and $H_A = \cup H_A^t$, respectively. Since the agent’s effort is

unobservable to the principal, the agent’s history also keeps track of his past efforts. The

principal’s history is a public history, but I allow private strategies so that the agent’s effort

can depend on his past effort. In this context, the updating of prior beliefs depends on the

agent’s effort. The principal updates his belief based on the on-the-equilibrium-path action

of the agent, whereas if the agent deviates, the agent updates his belief with the true action.

3 Recursive Formulation

This section develops the recursive formulation to characterize the optimal contracts. I

first show the results for the binary environment in Section 3.1 and illustrate the challenges and the methodological contributions of the paper. Section 3.2 generalizes the

results to the model in Section 2.

3.1 Binary Environment

I consider the binary environment in this section. I decompose the principal’s continuation

value and the agent’s continuation value by the on-the-equilibrium-path continuation values in each state. Then I show the one-shot deviation principle holds, and in particular,

it is sufficient to consider the IC constraint for one-shot deviations when the agent has

never deviated before. Combining the decomposition of continuation values and the one-shot deviation principle, I construct the recursive formulation to characterize the optimal

contracts.

There are two states (“good” and “bad”), two actions (“work” and “shirk”) and two

outcomes (“good” and “bad”). If the agent works in the good state, he gets a good outcome

with some probability pH > 0. If the agent shirks in the good state, he gets a good outcome

with some probability pL < pH . If the state is bad, then the outcome is bad for both


actions. Work costs c > 0 to the agent, and shirking costs nothing. Assumptions 1 and 2

hold. Denote the Markov transition matrix by M , where Mij is the probability of being in

state j after being in state i.

3.1.1 Decomposition of Continuation Values

The first step is to decompose the continuation values of the principal and the agent.

Consider the principal’s problem. The principal maximizes his payoff in period 0 subject to

IR, IC and limited liability every period. Since the principal has within-period commitment

power, the principal’s payoff at each information set also has to be weakly greater than his

outside option. At any information set, the principal’s payoff should be weakly greater than

his payoff off the equilibrium path. This payoff is bounded from below by his outside option.

Since I’m interested in the set of all equilibrium payoffs, there is no loss of generality in

assuming that off the equilibrium path, the agent believes that his payoff is constant across

all outcomes. The agent takes the least expensive action, and anticipating this, the principal

takes his outside option.

Given a history $h^t$ and the principal’s prior π, there are infinitely many IC constraints, but first consider the IC constraint for the one-shot deviation when the agent has never deviated before. If the agent has never deviated before, the principal and the agent share the same prior π. Let $V(h^t, \pi)$ be the agent’s continuation value after history $h^t$ with prior π when both the principal and the agent play the equilibrium strategies. The IC constraint for the one-shot deviation takes the following form:

$$\begin{aligned}
& -c + \pi_1 p_H \bigl(u(w_1) + \delta V(h^t 1, (1,0)M)\bigr) + (1 - \pi_1 p_H)\bigl(u(w_0) + \delta V(h^t 0, \hat\pi)\bigr) \\
& \ge \pi_1 p_L \bigl(u(w_1) + \delta V(h^t 1, (1,0)M)\bigr) + (1 - \pi_1 p_L)\bigl(u(w_0) + \delta V(h^t 0, \tilde\pi)\bigr).
\end{aligned}$$

If they get a good outcome, then they learn that they are in the good state, and the prior

is updated according to the Markov matrix. If they get a bad outcome, the posterior belief

depends on which action the principal believes the agent took. On the equilibrium path,

the agent worked, and we have

$$\hat\pi = \left( \frac{\pi_1 (1 - p_H)}{\pi_1 (1 - p_H) + (1 - \pi_1)},\ \frac{1 - \pi_1}{\pi_1 (1 - p_H) + (1 - \pi_1)} \right) M.$$

On the other hand, when the agent deviated, he updates his belief to

$$\tilde\pi = \left( \frac{\pi_1 (1 - p_L)}{\pi_1 (1 - p_L) + (1 - \pi_1)},\ \frac{1 - \pi_1}{\pi_1 (1 - p_L) + (1 - \pi_1)} \right) M.$$
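As a small numerical illustration of the two updating rules above, the following Python sketch computes the beliefs after a bad outcome. The function name `posterior_after_bad` and all parameter values are assumptions chosen for illustration; they are not taken from the paper.

```python
def posterior_after_bad(pi1, p, M):
    """Belief over (good, bad) next period after a bad outcome.

    pi1: prior probability of the good state this period.
    p:   probability of a good outcome in the good state under the
         action used for updating (p_H for work, p_L for shirk).
    M:   2x2 transition matrix, M[i][j] = Pr(next state j | state i),
         with index 0 = good and 1 = bad.
    """
    denom = pi1 * (1 - p) + (1 - pi1)
    good, bad = pi1 * (1 - p) / denom, (1 - pi1) / denom
    # Right-multiply the row vector (good, bad) by M.
    return (good * M[0][0] + bad * M[1][0],
            good * M[0][1] + bad * M[1][1])


# Illustrative (assumed) parameters with a fully persistent state.
p_H, p_L = 0.6, 0.2
identity = [[1.0, 0.0], [0.0, 1.0]]
pi_hat = posterior_after_bad(0.5, p_H, identity)    # principal's belief
pi_tilde = posterior_after_bad(0.5, p_L, identity)  # deviating agent's belief
```

With these numbers the agent who shirked puts more weight on the good state than the principal does, which is the ordering Assumption 1 describes.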

Given history ht 0, the principal believes he’s offering V (ht 0, π̂) to the agent, but off-the-

7

1

π 0 p~

1

h

0

π~

p

π1

h

1 − π~

p

0

π̂~

p

1

1 − π1 h

0

pH

1 − pH

0

1

1

pH

1

0

0

1

0

0

1

0

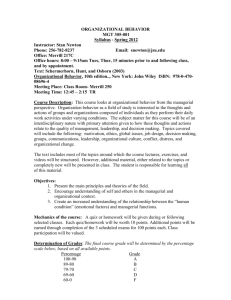

Figure 1: Decomposition of Continuation Values

equilibrium-path, the agent is getting V (ht 0, π̃). Because of this difference, we can’t take

the agent’s continuation value as the state variable anymore, and the approach in Spear

and Srivastava (1987) doesn’t work in this environment. Furthermore, $h^t 0$ determines the payoff stream on the equilibrium path, while the prior keeps getting updated every period; we need to evaluate the expected payoff with respect to the prior. The first innovation of the paper is to decompose the agent’s continuation value into on-the-equilibrium-path continuation values in each state and to represent $V(h^t, \pi)$ as a linear combination of π for any prior π. Suppose for the moment that the Markov matrix is the identity. Figure 1 shows the decomposition of the continuation values. $\pi' = (1, 0)M$ is the prior in the period following a good outcome, and $\vec{p} = (p_H, 0)^\top$ is the probability of a good outcome in each state when the agent works. The graph on the left is the usual representation of the information set and the priors, and the graph on the right is the decomposition. After history $h^t$, the principal

and the agent don’t know which state they are in. However, the state is exogenous, and

once they are in a particular state, the probability of a good outcome given the agent’s

action is the same every period. In particular, we can express the conditional probability of

a history as the sum of conditional probabilities in each state. For example, the probability

of $h^t 11$ given history $h^t$ is $\pi\vec{p} \times \pi'\vec{p}$. But we can express it as $\pi_1 p_H^2 + (1 - \pi_1) \times 0$. We can express every probability as the sum of two probabilities with π as the weights. Given history $h^t$, there exist $V_1$ and $V_0$ such that $V(h^t, \pi) = \pi_1 V_1 + (1 - \pi_1) V_0$ for all π. $V_1$ is

the expected payoff of the agent on the equilibrium path in the good state, and V0 is the

expected payoff of the agent on the equilibrium path in the bad state.
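The claim that every history probability is a prior-weighted sum of state-by-state probabilities can be checked numerically. The sketch below assumes the identity transition matrix, as in the text, and an illustrative value of p_H; the function names are hypothetical.

```python
from itertools import product

P_H = 0.6  # assumed probability of a good outcome when working in the good state


def path_prob_by_belief(pi1, outcomes):
    """Multiply one-step outcome probabilities, updating the belief each period."""
    prob = 1.0
    for y in outcomes:
        p_good = pi1 * P_H
        if y == 1:
            prob *= p_good
            pi1 = 1.0  # a good outcome reveals the good state
        else:
            prob *= 1 - p_good
            pi1 = pi1 * (1 - P_H) / (1 - p_good)  # Bayes' rule after a bad outcome
    return prob


def path_prob_by_state(pi1, outcomes):
    """Weight the path probability in each state by the initial prior."""
    in_good, in_bad = 1.0, 1.0
    for y in outcomes:
        in_good *= P_H if y == 1 else 1 - P_H
        in_bad *= 0.0 if y == 1 else 1.0
    return pi1 * in_good + (1 - pi1) * in_bad


# Compare the two computations on every outcome sequence of length three.
gaps = [abs(path_prob_by_belief(0.5, ys) - path_prob_by_state(0.5, ys))
        for ys in product((0, 1), repeat=3)]
```

Both routes give the same number for each sequence; only the second keeps the prior outside the per-state calculation, which is what makes the decomposition usable for any prior.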

Figure 2: Decomposition for Partially Persistent States

The decomposition works for any matrix M . In Figure 2, the principal and the agent don’t know which state they are in. If they are in the good state, they get the good outcome with probability pH , and they get the bad outcome in the bad state. After the outcome is realized, the state transits to the next state, and the transition probabilities are determined by what state they are in currently. The state transition is exogenous, and it’s not affected by the agent’s effort or the realization of the outcome.3 Given a history, the payoff stream

on the equilibrium path is determined, and we can decompose the continuation value by the

continuation value on the equilibrium path in each state: If the principal wants the agent

to work this period, the agent’s continuation value is given by

$$\begin{aligned}
V(h^t, \pi) ={}& -c + \pi_1 p_H \bigl(u(w_1) + \delta(M_{11} V_{11} + M_{12} V_{10})\bigr) + \pi_1 (1 - p_H)\bigl(u(w_0) + \delta(M_{11} V_{01} + M_{12} V_{00})\bigr) \\
& + (1 - \pi_1) \times 0 + (1 - \pi_1)\bigl(u(w_0) + \delta(M_{21} V_{01} + M_{22} V_{00})\bigr),
\end{aligned}$$

where $V_{y\omega'}$ is the agent’s continuation value on the equilibrium path if the outcome this period is y and they are in state ω′ in the following period. w1 and w0 are the payments

for the good outcome and the bad outcome, respectively.

Once we decompose the continuation values by the on-the-equilibrium-path continuation

values in each state, then we can express $V(h^t 0, \hat\pi)$ and $V(h^t 0, \tilde\pi)$ with the same V11 , V10 , V01

and V00 , and the only difference between the two continuation values is that we use π̂ and

π̃ as weights for each of them.

3 In some circumstances, one can allow the transition probabilities to be endogenous. Section 4.2 discusses the endogenous states.

Proposition 1. Given a contract, fix any public history $h^t$. The agent’s expected payoff satisfies the following equations for any prior π if the principal and the agent follow the equilibrium strategies after $h^t$. If the principal wants the agent to work this period, there exist $V_{11}, V_{10}, V_{01}$ and $V_{00}$ such that

$$\begin{aligned}
V(h^t, \pi) ={}& -c + \pi_1 p_H \bigl(u(w_1) + \delta(M_{11} V_{11} + M_{12} V_{10})\bigr) + \pi_1 (1 - p_H)\bigl(u(w_0) + \delta(M_{11} V_{01} + M_{12} V_{00})\bigr) \\
& + (1 - \pi_1)\bigl(u(w_0) + \delta(M_{21} V_{01} + M_{22} V_{00})\bigr).
\end{aligned}$$

If the principal wants the agent to shirk this period, there exist $V_1, V_0$ such that for any prior π,

$$V(h^t, \pi) = \bar{u} + (\pi_1 M_{11} + (1 - \pi_1) M_{21})\,\delta V_1 + (\pi_1 M_{12} + (1 - \pi_1) M_{22})\,\delta V_0.$$

Similarly, let $W(h^t, \pi)$ be the principal’s payoff after history $h^t$ given prior π when the principal and the agent follow the equilibrium strategies after $h^t$. $W(h^t, \pi)$ satisfies the following equations. If the principal wants the agent to work this period, there exist $W_{11}, W_{10}, W_{01}$ and $W_{00}$ such that for any prior π,

$$\begin{aligned}
W(h^t, \pi) ={}& \pi_1 p_H \bigl(1 - w_1 + \delta(M_{11} W_{11} + M_{12} W_{10})\bigr) + \pi_1 (1 - p_H)\bigl(-w_0 + \delta(M_{11} W_{01} + M_{12} W_{00})\bigr) \\
& + (1 - \pi_1)\bigl(-w_0 + \delta(M_{21} W_{01} + M_{22} W_{00})\bigr).
\end{aligned}$$

If the principal wants the agent to shirk this period, there exist $W_1, W_0$ such that for any prior π,

$$W(h^t, \pi) = \bar{v} + (\pi_1 M_{11} + (1 - \pi_1) M_{21})\,\delta W_1 + (\pi_1 M_{12} + (1 - \pi_1) M_{22})\,\delta W_0.$$
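The linearity in Proposition 1 can be checked on a small example. The sketch below compares the agent's value computed by updating beliefs period by period with the prior-weighted sum of state-by-state values. Everything specific here is an assumption made for illustration: the finite horizon `T`, the utility function, the transition matrix, and the hypothetical payment rule `wage`, which depends on the public history only through the number of past good outcomes.

```python
from functools import lru_cache

P_H, C, DELTA, T = 0.6, 0.1, 0.9, 6   # assumed parameters, finite horizon T
M = ((0.9, 0.1), (0.2, 0.8))          # assumed transitions; 0 = good, 1 = bad
P_GOOD = (P_H, 0.0)                   # Pr(good outcome | state) under work


def u(w):
    return w ** 0.5                   # assumed concave utility with u(0) = 0


def wage(y, k):
    """Hypothetical history-contingent payment; k counts past good outcomes."""
    return (1.0 + 0.1 * k) if y == 1 else 0.05


@lru_cache(maxsize=None)
def value_by_state(state, t, k):
    """Agent's on-path value conditional on the current state."""
    if t == T:
        return 0.0
    total = -C
    for y in (1, 0):
        prob_y = P_GOOD[state] if y == 1 else 1 - P_GOOD[state]
        cont = sum(M[state][nxt] * value_by_state(nxt, t + 1, k + y)
                   for nxt in (0, 1))
        total += prob_y * (u(wage(y, k)) + DELTA * cont)
    return total


def value_by_belief(pi1, t, k):
    """Agent's on-path value computed by updating the belief every period."""
    if t == T:
        return 0.0
    p_good = pi1 * P_H
    # After a good outcome the state is known to be good: next belief is (1, 0)M.
    v_good = u(wage(1, k)) + DELTA * value_by_belief(M[0][0], t + 1, k + 1)
    # After a bad outcome, apply Bayes' rule and then the transition matrix.
    post = pi1 * (1 - P_H) / (1 - p_good)
    nxt = post * M[0][0] + (1 - post) * M[1][0]
    v_bad = u(wage(0, k)) + DELTA * value_by_belief(nxt, t + 1, k)
    return -C + p_good * v_good + (1 - p_good) * v_bad


def decomposed(pi1):
    """Prior-weighted combination of the two state-by-state values."""
    return pi1 * value_by_state(0, 0, 0) + (1 - pi1) * value_by_state(1, 0, 0)
```

Evaluating `value_by_belief(pi1, 0, 0)` and `decomposed(pi1)` at several priors gives matching numbers, while only the state-by-state values need to be stored.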

The decomposition of continuation value allows one to express the agent’s continuation

values on and off the equilibrium path with the same state variables when the agent does

a one-shot deviation. The only difference between the on- and off-the-equilibrium-path

continuation values is that the agent has different prior beliefs, which is the weight in the

linear combination of the state variables. Without decomposition, one doesn’t know what the possible deviation payoffs of the agent are when the principal offers a certain amount as the on-the-equilibrium-path continuation value, which is a problem when one considers the IC constraint of the agent. The next section shows how the decomposition enters the IC

constraints.

3.1.2 One-Shot Deviation Principle

The next step is to reduce the set of IC constraints. I show that the IC constraints for

one-shot deviations when the agent has never deviated before are sufficient conditions for

all IC constraints.

Given any history ht and prior π, the agent has infinitely many ways to deviate, and


there are an infinite number of IC constraints. Furthermore, if the agent has deviated before

reaching ht , the principal and the agent have different prior beliefs, and in order to account

for all potential prior beliefs the agent may have, we need to know the time index and

history ht , which prevents the recursive representation of the problem. However, I show

that the IC constraints for one-shot deviations when the agent has never deviated before

are sufficient, and at any point, it is sufficient to consider only one IC constraint.

Suppose after history ht , the principal has prior π. If the agent has never deviated

before, the principal and the agent share the common prior π. From Proposition 1, if the

principal wants the agent to work this period, we can decompose the agent’s continuation

value by V11 , V10 , V01 and V00 . The IC constraint for the one-shot deviation can be written

as

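$$\begin{aligned}
V(h^t, \pi) ={}& -c + \pi_1 p_H \bigl(u(w_1) + \delta(M_{11} V_{11} + M_{12} V_{10})\bigr) + \pi_1 (1 - p_H)\bigl(u(w_0) + \delta(M_{11} V_{01} + M_{12} V_{00})\bigr) \\
& + (1 - \pi_1)\bigl(u(w_0) + \delta(M_{21} V_{01} + M_{22} V_{00})\bigr) \\
\ge{}& \pi_1 p_L \bigl(u(w_1) + \delta(M_{11} V_{11} + M_{12} V_{10})\bigr) + \pi_1 (1 - p_L)\bigl(u(w_0) + \delta(M_{11} V_{01} + M_{12} V_{00})\bigr) \\
& + (1 - \pi_1)\bigl(u(w_0) + \delta(M_{21} V_{01} + M_{22} V_{00})\bigr).
\end{aligned}$$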

Note that the continuation values from the following period and on are V11 , V10 , V01 and

V00 , and they don’t change when the agent deviates. The agent’s deviation affects the

distribution of outcomes in this period, but the agent’s expected payoffs in the continuation

game are completely determined by the outcome this period and the state in the next

period. The state transition is also exogenous, and the probabilities are independent of the

agent’s action.

We can simplify the IC constraint as

$$\pi_1 (p_H - p_L)\Bigl(\bigl(u(w_1) + \delta(M_{11} V_{11} + M_{12} V_{10})\bigr) - \bigl(u(w_0) + \delta(M_{11} V_{01} + M_{12} V_{00})\bigr)\Bigr) \ge c. \tag{1}$$
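The simplification can be spelled out by subtracting the deviation payoff from the on-path payoff. The shorthand Δ below is introduced here for readability and is not notation from the paper. The bad-state terms multiplied by (1 − π1) are identical on both sides and cancel.

```latex
\begin{align*}
\Delta &\equiv \bigl(u(w_1) + \delta(M_{11}V_{11} + M_{12}V_{10})\bigr)
        - \bigl(u(w_0) + \delta(M_{11}V_{01} + M_{12}V_{00})\bigr), \\
\text{LHS} - \text{RHS}
  &= -c + \pi_1 (p_H - p_L)\bigl(u(w_1) + \delta(M_{11}V_{11} + M_{12}V_{10})\bigr) \\
  &\quad + \pi_1 \bigl((1 - p_H) - (1 - p_L)\bigr)\bigl(u(w_0) + \delta(M_{11}V_{01} + M_{12}V_{00})\bigr) \\
  &= -c + \pi_1 (p_H - p_L)\,\Delta \;\ge\; 0 .
\end{align*}
```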

From Assumptions 1 and 2, the agent has prior π̂ with π̂1 ≥ π1 if he has deviated before.

The agent’s IC constraint for the one-shot deviation becomes

$$\hat\pi_1 (p_H - p_L)\Bigl(\bigl(u(w_1) + \delta(M_{11} V_{11} + M_{12} V_{10})\bigr) - \bigl(u(w_0) + \delta(M_{11} V_{01} + M_{12} V_{00})\bigr)\Bigr) \ge c,$$

and it is satisfied whenever (1) holds. Therefore, the IC constraints for one-shot deviations

when the agent has never deviated before are sufficient conditions for all IC constraints for

one-shot deviations. Since the principal has within-period commitment power, the agent’s

payoff is bounded from above, and together with limited liability, we have continuity at

infinity. Therefore, the IC constraints for one-shot deviations are sufficient conditions for

all IC constraints, and we have the following proposition.


Proposition 2. In the binary environment with pL < pH , IC constraints for one-shot

deviations when the agent has never deviated before are sufficient conditions for all IC

constraints.
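The monotonicity behind Proposition 2 is easy to see numerically: the left side of constraint (1) is increasing in the agent's belief in the good state whenever the payoff gain from a good outcome is nonnegative, which it must be if (1) holds with c > 0. In the sketch below the function name `ic_slack` and all numbers are assumptions for illustration.

```python
def ic_slack(pi1, p_H, p_L, c, gain):
    """Left side minus right side of the one-shot IC constraint (1).

    gain is the agent's payoff after a good outcome minus his payoff after
    a bad outcome, both evaluated in the good state.
    """
    return pi1 * (p_H - p_L) * gain - c


# Assumed numbers: the principal's belief and more optimistic agent beliefs.
p_H, p_L, c, gain = 0.6, 0.2, 0.1, 0.8
principal_belief = 0.4
agent_beliefs = [0.4, 0.55, 0.7, 1.0]
slacks = [ic_slack(b, p_H, p_L, c, gain) for b in agent_beliefs]
```

If the constraint holds at the principal's belief, every entry of `slacks` computed at a weakly higher belief is at least as large, so the agent who deviated earlier still prefers to work.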

3.1.3 Recursive Formulation: Binary environment

This section sets up the algorithm. After history ht , suppose the principal has prior π and

he wants the agent to work this period. The principal’s payoff should be weakly greater

than his outside option,

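$$\begin{aligned}
W ={}& \pi_1 p_H \bigl(1 - w_1 + \delta(M_{11} W_{11} + M_{12} W_{10})\bigr) + \pi_1 (1 - p_H)\bigl(-w_0 + \delta(M_{11} W_{01} + M_{12} W_{00})\bigr) \\
& + (1 - \pi_1)\bigl(-w_0 + \delta(M_{21} W_{01} + M_{22} W_{00})\bigr) \ge \frac{\bar{v}}{1 - \delta}.
\end{aligned} \tag{2}$$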

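The agent has the following three constraints:

$$(\mathrm{IC}):\quad \pi_1 (p_H - p_L)\Bigl(\bigl(u(w_1) + \delta(M_{11} V_{11} + M_{12} V_{10})\bigr) - \bigl(u(w_0) + \delta(M_{11} V_{01} + M_{12} V_{00})\bigr)\Bigr) \ge c, \tag{3}$$

$$\begin{aligned}
(\mathrm{IR}):\quad V ={}& -c + \pi_1 p_H \bigl(u(w_1) + \delta(M_{11} V_{11} + M_{12} V_{10})\bigr) \\
& + \pi_1 (1 - p_H)\bigl(u(w_0) + \delta(M_{11} V_{01} + M_{12} V_{00})\bigr) \\
& + (1 - \pi_1)\bigl(u(w_0) + \delta(M_{21} V_{01} + M_{22} V_{00})\bigr) \ge \frac{\bar{u}}{1 - \delta},
\end{aligned} \tag{4}$$

$$(\mathrm{LL}):\quad w_1, w_0 \ge 0. \tag{5}$$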

One can decompose the continuation values of the agent by Proposition 1, and it is sufficient

to consider one IC constraint by Proposition 2. From the decomposition, we also know that

the following four equalities should hold:

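$$W_1 = p_H \bigl(1 - w_1 + \delta(M_{11} W_{11} + M_{12} W_{10})\bigr) + (1 - p_H)\bigl(-w_0 + \delta(M_{11} W_{01} + M_{12} W_{00})\bigr), \tag{6}$$

$$W_0 = -w_0 + \delta(M_{21} W_{01} + M_{22} W_{00}), \tag{7}$$

$$V_1 = p_H \bigl(u(w_1) + \delta(M_{11} V_{11} + M_{12} V_{10})\bigr) + (1 - p_H)\bigl(u(w_0) + \delta(M_{11} V_{01} + M_{12} V_{00})\bigr), \tag{8}$$

$$V_0 = u(w_0) + \delta(M_{21} V_{01} + M_{22} V_{00}). \tag{9}$$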

The set of continuation values the principal can get when he wants the agent to work this

period is given by


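$$\begin{aligned}
S_w = \Bigl\{ (\pi, W_1, W_0, V_1, V_0) \Bigm| {}& \exists\, (\pi', W_{11}, W_{10}, V_{11}, V_{10}),\ (\hat\pi, W_{01}, W_{00}, V_{01}, V_{00}) \in S, \\
& \pi' = (1, 0) M, \\
& \hat\pi = \left( \frac{\pi_1 (1 - p_H)}{\pi_1 (1 - p_H) + (1 - \pi_1)},\ \frac{1 - \pi_1}{\pi_1 (1 - p_H) + (1 - \pi_1)} \right) M, \\
& W = \pi \begin{pmatrix} W_1 \\ W_0 \end{pmatrix},\quad V = \pi \begin{pmatrix} V_1 \\ V_0 \end{pmatrix}, \\
& (2)\text{–}(9) \text{ hold} \Bigr\},
\end{aligned}$$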

where S is the set of all (π, W1 , W0 , V1 , V0 ) the principal can implement.
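One generating step for the work case can be sketched in code. The function below takes a belief, a pair of payments and two candidate continuation tuples, builds (W1, W0, V1, V0) from equalities (6)-(9), and checks constraints (2)-(5). It is a sketch under stated assumptions: the name `work_step` and the parameter dictionary are hypothetical, the agent's total payoff V subtracts c as in (4), and the sketch does not verify that the continuation tuples belong to S with the beliefs π′ and π̂.

```python
def work_step(pi1, w1, w0, cont_good, cont_bad, prm, u):
    """One generating step when the principal asks for work.

    cont_good = (W11, W10, V11, V10): continuation values after a good outcome.
    cont_bad  = (W01, W00, V01, V00): continuation values after a bad outcome.
    prm holds p_H, p_L, c, delta, M, v_bar, u_bar (index 0 = good state).
    Returns (W1, W0, V1, V0) from (6)-(9) and whether (2)-(5) hold.
    """
    pH, pL, c, d, M = prm["p_H"], prm["p_L"], prm["c"], prm["delta"], prm["M"]
    W11, W10, V11, V10 = cont_good
    W01, W00, V01, V00 = cont_bad
    # Payoffs after each outcome when the current state is good (row 0 of M).
    agent_hi = u(w1) + d * (M[0][0] * V11 + M[0][1] * V10)
    agent_lo = u(w0) + d * (M[0][0] * V01 + M[0][1] * V00)
    princ_hi = 1 - w1 + d * (M[0][0] * W11 + M[0][1] * W10)
    princ_lo = -w0 + d * (M[0][0] * W01 + M[0][1] * W00)
    W1 = pH * princ_hi + (1 - pH) * princ_lo                # (6)
    W0 = -w0 + d * (M[1][0] * W01 + M[1][1] * W00)           # (7)
    V1 = pH * agent_hi + (1 - pH) * agent_lo                # (8)
    V0 = u(w0) + d * (M[1][0] * V01 + M[1][1] * V00)         # (9)
    W = pi1 * W1 + (1 - pi1) * W0
    V = -c + pi1 * V1 + (1 - pi1) * V0   # effort cost subtracted as in (4)
    feasible = (
        W >= prm["v_bar"] / (1 - d)                         # (2)
        and pi1 * (pH - pL) * (agent_hi - agent_lo) >= c    # (3) IC
        and V >= prm["u_bar"] / (1 - d)                     # (4) IR
        and w1 >= 0 and w0 >= 0                             # (5) LL
    )
    return (W1, W0, V1, V0), feasible


# Assumed example: risk-neutral agent, zero outside options and continuations.
prm = {"p_H": 0.6, "p_L": 0.2, "c": 0.1, "delta": 0.9,
       "M": [[1.0, 0.0], [0.0, 1.0]], "v_bar": 0.0, "u_bar": 0.0}
zero = (0.0, 0.0, 0.0, 0.0)
values, ok = work_step(0.5, 0.6, 0.0, zero, zero, prm, lambda w: w)
_, ok_low = work_step(0.5, 0.4, 0.0, zero, zero, prm, lambda w: w)
```

In this example a bonus of 0.6 satisfies all constraints, while a bonus of 0.4 fails the IC constraint, so only the first payment pair would generate a point of the set.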

If the principal takes his outside option this period, both the principal and the agent

get their outside options and continue to the next period. The set of continuation values is

given by

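$$\begin{aligned}
S_o = \bigl\{ (\pi, W_1, W_0, V_1, V_0) \bigm| {}& \exists\, (\check\pi, \check{W}_1, \check{W}_0, \check{V}_1, \check{V}_0) \in S, \\
& \check\pi = \pi M, \\
& V_1 = \bar{u} + M_{11} \delta \check{V}_1 + M_{12} \delta \check{V}_0, \\
& V_0 = \bar{u} + M_{21} \delta \check{V}_1 + M_{22} \delta \check{V}_0, \\
& W_1 = \bar{v} + M_{11} \delta \check{W}_1 + M_{12} \delta \check{W}_0, \\
& W_0 = \bar{v} + M_{21} \delta \check{W}_1 + M_{22} \delta \check{W}_0 \bigr\}.
\end{aligned}$$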
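As a quick consistency check on the outside-option equations, the tuple in which both parties take their outside options forever should map to itself. The sketch below applies the four equalities once; the function name and parameter values are assumptions for illustration.

```python
def outside_option_step(cont, M, delta, u_bar, v_bar):
    """Map continuation values (W1, W0, V1, V0) through the S_o equations."""
    W1n, W0n, V1n, V0n = cont
    V1 = u_bar + delta * (M[0][0] * V1n + M[0][1] * V0n)
    V0 = u_bar + delta * (M[1][0] * V1n + M[1][1] * V0n)
    W1 = v_bar + delta * (M[0][0] * W1n + M[0][1] * W0n)
    W0 = v_bar + delta * (M[1][0] * W1n + M[1][1] * W0n)
    return (W1, W0, V1, V0)


# Assumed parameters for illustration.
M = [[0.9, 0.1], [0.2, 0.8]]
delta, u_bar, v_bar = 0.9, 0.3, 0.5
# Outside options forever: each party gets the discounted sum in both states.
forever_out = (v_bar / (1 - delta),) * 2 + (u_bar / (1 - delta),) * 2
image = outside_option_step(forever_out, M, delta, u_bar, v_bar)
```

Because each row of M sums to one, `image` equals `forever_out` up to rounding, so this tuple generates itself through the outside-option branch for any belief.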

Proposition 3. The set of payoffs the principal can implement is given by the largest self-generating set $S = S_w \cup S_o$. $(\pi, W_1, W_0, V_1, V_0) \in S$ corresponds to a contract starting with prior π in which the principal gets $W_1$ if he’s in the good state and $W_0$ if he’s in the bad state. $V_1, V_0$ are the agent’s payoffs in the good state and the bad state, respectively. The principal’s expected payoff is $\pi \begin{pmatrix} W_1 \\ W_0 \end{pmatrix}$, and the agent’s expected payoff is $\pi \begin{pmatrix} V_1 \\ V_0 \end{pmatrix}$.

3.2 General Case

This section develops the recursive formulation for the model in Section 2. The main

steps are the decomposition of continuation values and the one-shot deviation principle.

Assumptions 1 to 3 hold in this section.

In the binary environment, I decomposed the continuation values of the agent by the

continuation values on the equilibrium path given outcome y and state ω. Since there were

two outcomes and two states, I can decompose the continuation value as a linear combination

of four values, Vyω , for any given prior π. The decomposition works similarly when there

is a continuum of states and a continuum of outcomes. Let Vyω be the continuation value


of the agent after outcome y when the state in the next period is ω and both the principal

and the agent follow the equilibrium strategies from this period on. The state transition is

exogenous, and the history of outcomes determines the payments in the future. Let wy be

the payment for outcome y in the current period, and we can write the agent’s payoff as

follows:

\[
V(h^t, \pi) = -c_i + \int \pi(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) dF(y \mid a_i, \omega) \, d\omega' \, d\omega,
\]

when the agent takes action ai .
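
On a finite grid of states and outcomes, the decomposition can be sketched as a weighted sum. This is only an illustration under my own assumptions: the integral is read as a sum, u(wy) is taken outside the sum over ω′ because m(·|ω) sums to one, and all names and numbers are hypothetical.

```python
def agent_payoff(prior, cost, f, u_w, delta, m, V_next):
    """Discretized agent payoff for a given action.

    prior[s]      : probability of state s today
    f[s][y]       : probability of outcome y in state s under the action
    u_w[y]        : utility of the payment for outcome y
    m[s][s2]      : transition probability from state s to state s2
    V_next[y][s2] : continuation value after outcome y in next state s2
    """
    total = 0.0
    for s, p_s in enumerate(prior):
        for y, f_y in enumerate(f[s]):
            cont = sum(m[s][s2] * V_next[y][s2] for s2 in range(len(prior)))
            total += p_s * f_y * (u_w[y] + delta * cont)
    return total - cost


# Hypothetical numbers, for illustration only.
prior = [0.5, 0.5]
f = [[0.5, 0.5], [1.0, 0.0]]
m = [[0.9, 0.1], [0.2, 0.8]]
v_static = agent_payoff(prior, 0.1, f, [0.0, 1.0], 0.5, m, [[0.0, 0.0], [0.0, 0.0]])
v_const = agent_payoff(prior, 0.1, f, [0.0, 1.0], 0.5, m, [[2.0, 2.0], [2.0, 2.0]])
```

The prior enters only as weights on the state-contingent terms, which is what makes the decomposition usable after a deviation changes the agent’s prior.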

The next step is the sufficiency of IC constraints for one-shot deviations when the agent

has never deviated before. From Assumption 3, we know

\[
\int \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) dF(y \mid a, \omega) \, d\omega'
\]

is supermodular in a and ω. Consider the IC constraint for the one-shot deviation from ai

to aj . From Assumption 2, j < i, and the IC constraint is given by

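\[
\begin{aligned}
V(h^t, \pi) &= -c_i + \int \pi(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) dF(y \mid a_i, \omega) \, d\omega' \, d\omega \\
&\geq -c_j + \int \pi(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) dF(y \mid a_j, \omega) \, d\omega' \, d\omega.
\end{aligned}
\]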

We can rewrite the IC constraint as

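\[
\int \pi(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) \left( dF(y \mid a_i, \omega) - dF(y \mid a_j, \omega) \right) d\omega' \, d\omega \geq c_i - c_j.
\]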

Supermodularity implies that $\int \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) \left( dF(y \mid a_i, \omega) - dF(y \mid a_j, \omega) \right) d\omega'$ increases with ω. If the agent has deviated previously, the agent took a less costly action,

and Assumption 1 implies that the agent’s prior π̂ dominates the principal’s prior π in the

sense of first-order stochastic dominance. Together with the supermodularity, we know

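\[
\begin{aligned}
& \int \hat{\pi}(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) \left( dF(y \mid a_i, \omega) - dF(y \mid a_j, \omega) \right) d\omega' \, d\omega \\
\geq{} & \int \pi(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) \left( dF(y \mid a_i, \omega) - dF(y \mid a_j, \omega) \right) d\omega' \, d\omega \\
\geq{} & c_i - c_j,
\end{aligned}
\]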

and the agent will work again in the current period even if he has deviated before. Since

the principal has within-period commitment power and the agent has limited liability, the

agent’s continuation values are bounded, and we have continuity at infinity. The IC constraints for one-shot deviations when the agent has never deviated before are sufficient

conditions for all IC constraints.
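
The step from supermodularity and first-order stochastic dominance to the inequality can be checked on a finite grid. In this sketch, `gain[k]` stands for the inner integral evaluated at the k-th state, with states ordered from low to high. The grid, the numbers and the function names are hypothetical.

```python
def expectation(prior, values):
    """Expected value of a state-contingent quantity under a prior."""
    return sum(p * v for p, v in zip(prior, values))


def fosd_dominates(p_hat, p, tol=1e-12):
    """True if p_hat first-order stochastically dominates p on an ordered grid."""
    cum_hat = cum = 0.0
    for a, b in zip(p_hat, p):
        cum_hat += a
        cum += b
        if cum_hat > cum + tol:
            return False
    return True


# Hypothetical numbers: the gain from working increases with the state.
gain = [0.1, 0.4, 0.9]
pi = [0.5, 0.3, 0.2]       # principal's prior
pi_hat = [0.2, 0.3, 0.5]   # agent's prior after a past deviation
lhs_agent = expectation(pi_hat, gain)
lhs_principal = expectation(pi, gain)
```

Because `gain` is increasing and `pi_hat` dominates `pi`, the agent’s expected gain from working (0.59) is at least the principal’s (0.35). Any cost difference ci − cj covered under π is therefore also covered under π̂.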

Proposition 4. IC constraints for one-shot deviations when the agent has never deviated

before are sufficient conditions for all IC constraints.

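We can find the optimal contract using the following recursive formulation. Let S0, S1, · · · , Sn be defined by

\[
\begin{aligned}
S_0 = \{(\pi, W, V) \mid{} & \exists (\check{\pi}, \check{W}, \check{V}) \in S, \\
& \check{\pi} = \pi M, \\
& W(\omega) = \bar{v} + \delta \int m(\omega' \mid \omega) \check{W}(\omega') \, d\omega', \\
& V(\omega) = \bar{u} + \delta \int m(\omega' \mid \omega) \check{V}(\omega') \, d\omega' \}
\end{aligned}
\]

and, for all i = 1, · · · , n,

\[
\begin{aligned}
S_i = \{(\pi, W, V) \mid{} & \exists (\pi^y, W^y, V^y) \in S, \ \forall y \in Y, \\
& \pi^y \text{ is updated using Bayes' rule with action } a_i \text{ and outcome } y, \\
& W(\omega) = \int \left( -w_y + \delta m(\omega' \mid \omega) W^y(\omega') \right) d\omega' \, dF(y \mid a_i, \omega), \\
& V(\omega) = \int \left( u(w_y) + \delta m(\omega' \mid \omega) V^y(\omega') \right) d\omega' \, dF(y \mid a_i, \omega), \\
& \int \pi(\omega) W(\omega) \, d\omega \geq \frac{\bar{v}}{1 - \delta}, \\
& \int \pi(\omega) V(\omega) \, d\omega \geq \frac{\bar{u}}{1 - \delta}, \\
& \int \pi(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V^y(\omega') \right) \left( dF(y \mid a_i, \omega) - dF(y \mid a_j, \omega) \right) d\omega' \, d\omega \geq c_i - c_j, \ \forall j < i, \\
& w_y \geq 0, \ \forall y \in Y \},
\end{aligned}
\]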

where (π, W, V ) ∈ S satisfies W, V : Ω → R and wy is the payment for outcome y in this

period.

Proposition 5. S is the largest self-generating set such that $S = \bigcup_{i=0}^{n} S_i$.

The above recursive formulation requires Assumptions 1 to 3. Assumption 3 is about

the agent’s payoff under the optimal contract, which is an endogenous object. However,

we can consider the relaxed problem without Assumption 3 and verify ex-post that the

optimal contract does satisfy the assumption. If we don’t require Assumption 3, we don’t

know whether the IC constraints for one-shot deviations when the agent has never deviated

before are sufficient conditions for all IC constraints. The recursive formulation with only the IC

constraints for one-shot deviations when the agent has never deviated before is a relaxed

problem of the original problem of finding the optimal contract. But if we verify ex-post

that the optimal contract of the relaxed problem does satisfy Assumption 3, then it is the

optimal contract of the original problem.

4

Discussion

This section extends the algorithm to different commitment powers of the principal and

endogenous state transition.

4.1

Commitment Power

The model in Section 2 assumes that the principal has within-period commitment power.

This can be generalized to the cases of no-commitment power and full-commitment power.

4.1.1

No-commitment Power

Suppose the principal has no-commitment power and one is interested in the set of all

perfect Bayesian equilibria. All constraints from the within-period commitment power

case have to be satisfied when the principal has no-commitment power. In addition, the

principal should be willing to make the payment he promised. The payment at any point

is bounded from above by the difference in the continuation value of the principal and

his outside option. The algorithm with this promise-keeping constraint finds the set of all

perfect Bayesian equilibria. Since the agent’s payoff is bounded, we still have continuity at

infinity, and the one-shot deviation principle holds.

4.1.2

Full-commitment Power

Suppose the principal has full-commitment power. The continuation value of the principal

doesn’t have to be above his outside option, and we can drop the constraint on the principal’s

payoff. For the agent, if he also commits to the contract, then IR will bind only in the

very first period. Otherwise, if the agent can quit anytime, then the agent has the same

constraints as in Section 3. With full-commitment power, we don’t necessarily know that

the agent’s continuation value is bounded from above, and we have to show continuity at infinity.

In particular, if the agent is risk-neutral, the principal can always delay the payment at

no cost, and we need to restrict the class of contracts such that the principal makes the

payment at the earliest possible time. As long as we are interested in the principal’s payoff

in period 0, this is without loss of generality. When the agent is risk-averse, the principal has

incentives to smooth the agent’s consumption. I conjecture the agent’s payoff is bounded

under the optimal contract, but I have yet to show this formally.

4.2

Endogenous State Transition

Consider the binary environment from Section 3.1. The algorithm generalizes when the state

transition from the good state is a function of the state and the agent’s action. Specifically,

let $M_{1j}^{a}$ be the probability of transiting from state 1 to state j when the agent takes action

a. Given the state in the following period, one can decompose the agent’s continuation

value by state, and the IC constraint for the one-shot deviation when the agent has never

deviated before is given as follows.

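\[
\begin{aligned}
V(h^t, \pi) ={} & -c + \pi_1 p_H \left( u(w_1) + \delta (M_{11}^{1} V_{11} + M_{12}^{1} V_{10}) \right) + \pi_1 (1 - p_H) \left( u(w_0) + \delta (M_{11}^{1} V_{01} + M_{12}^{1} V_{00}) \right) \\
& + (1 - \pi_1) \left( u(w_0) + \delta (M_{21} V_{01} + M_{22} V_{00}) \right) \\
\geq{} & \pi_1 p_L \left( u(w_1) + \delta (M_{11}^{0} V_{11} + M_{12}^{0} V_{10}) \right) + \pi_1 (1 - p_L) \left( u(w_0) + \delta (M_{11}^{0} V_{01} + M_{12}^{0} V_{00}) \right) \\
& + (1 - \pi_1) \left( u(w_0) + \delta (M_{21} V_{01} + M_{22} V_{00}) \right).
\end{aligned}
\]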

The IC constraint simplifies as

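\[
\begin{aligned}
\pi_1 \Big( & p_H \left( u(w_1) + \delta (M_{11}^{1} V_{11} + M_{12}^{1} V_{10}) \right) + (1 - p_H) \left( u(w_0) + \delta (M_{11}^{1} V_{01} + M_{12}^{1} V_{00}) \right) \\
& - p_L \left( u(w_1) + \delta (M_{11}^{0} V_{11} + M_{12}^{0} V_{10}) \right) - (1 - p_L) \left( u(w_0) + \delta (M_{11}^{0} V_{01} + M_{12}^{0} V_{00}) \right) \Big) \geq c.
\end{aligned}
\tag{10}
\]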

If the agent has deviated before, his prior satisfies π̂1 ≥ π1 , and the agent will work again.

The one-shot deviation principle holds in this environment, and the algorithm with (10) as

the IC constraint characterizes the set of all equilibrium payoffs the principal can implement.
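
The left-hand side of (10) can be evaluated directly. The following is a minimal sketch under assumed inputs: every number is hypothetical, `M_work` and `M_shirk` stand for the rows of transition probabilities out of the good state under the costly and the costless action, and `V[(y, s)]` holds the continuation value after outcome y when the next state is s (1 for good, 0 for bad).

```python
def ic_lhs_endogenous(pi1, p_H, p_L, u1, u0, delta, M_work, M_shirk, V):
    """Left-hand side of (10) with action-dependent transitions from the good state."""
    def cont(M_row, y):
        # Expected continuation value after outcome y, starting in the good state.
        return M_row[0] * V[(y, 1)] + M_row[1] * V[(y, 0)]

    work = (p_H * (u1 + delta * cont(M_work, 1))
            + (1 - p_H) * (u0 + delta * cont(M_work, 0)))
    shirk = (p_L * (u1 + delta * cont(M_shirk, 1))
             + (1 - p_L) * (u0 + delta * cont(M_shirk, 0)))
    return pi1 * (work - shirk)


# Hypothetical numbers, for illustration only.
V = {(1, 1): 2.0, (1, 0): 1.0, (0, 1): 1.0, (0, 0): 0.0}
lhs_exogenous = ic_lhs_endogenous(0.5, 0.8, 0.3, 1.0, 0.0, 0.9,
                                  (0.9, 0.1), (0.9, 0.1), V)
lhs_endogenous = ic_lhs_endogenous(0.5, 0.8, 0.3, 1.0, 0.0, 0.9,
                                   (0.9, 0.1), (0.6, 0.4), V)
lhs_optimistic = ic_lhs_endogenous(0.8, 0.8, 0.3, 1.0, 0.0, 0.9,
                                   (0.9, 0.1), (0.6, 0.4), V)
```

The expression is linear in π1. When the bracketed term is nonnegative, a more optimistic agent (larger π1) therefore faces a weakly slacker constraint, which is the property the argument for π̂1 ≥ π1 relies on.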

5

Conclusion

I develop an algorithm to find the optimal contract when the principal and the agent learn

the payoff-relevant state over time. The principal and the agent start with symmetric

information about the underlying state, and every period after observing the outcome, they

update their beliefs about the state. The updating depends on the action of the agent, and

off the equilibrium path, the principal and the agent have asymmetric information which

leads to information rent.

Since the agent’s continuation value depends on both the payments in the future and his

prior in the current period, the agent’s deviation payoff off the equilibrium path takes into

account the agent’s prior after a deviation. The first innovation of this paper is to decompose

the agent’s continuation value by the continuation value in each state. If the principal and

the agent play the equilibrium strategies from this period on, the principal’s and the agent’s

continuation values can be written as a linear combination of the continuation values in each

state. The prior at the beginning of this period enters as weights in the linear combination.

Once one decomposes the continuation values, one can also show the one-shot deviation

principle. What I show in this paper is stronger than the usual one-shot deviation principle

in the sense that we can consider one-shot deviations when the agent has never deviated before.

If the agent has never deviated before, then the principal and the agent have the same prior,

and there is only one IC constraint for the one-shot deviation. The key assumptions for

the one-shot deviation principle are downward deviations, supermodularity of the agent’s

payoff and the assumption that the agent is weakly more optimistic than the principal after

a deviation.

Together, the decomposition and the one-shot deviation principle allow one to formulate

the principal’s problem recursively. My algorithm finds the set of all PBEs the principal

can implement.

In the binary environment, where supermodularity is already satisfied, the principal

has incentives to learn the state cheaply by letting the agent shirk. The principal is losing

the output, but he doesn’t have to leave any rent, and the principal doesn’t necessarily want

to frontload all the effort. Characterizing the qualitative properties of the optimal contract

when the principal can learn without leaving rent is one direction future research can

take.

Another topic for further research is to find primitive conditions for supermodularity. It

is satisfied in the binary environment, but in a more general environment, it is an assumption

on endogenous objects. It would be useful to find primitive conditions under which

the agent’s payoff under the optimal contract is supermodular.

References

[1] Abreu, Dilip, David Pearce, and Ennio Stacchetti. 1990. “Toward a Theory of

Discounted Repeated Games with Imperfect Monitoring.” Econometrica 58 (5): 1041-1063.

[2] Athey, Susan, and Kyle Bagwell. 2008. “Collusion with Persistent Cost Shocks.”

Econometrica 76 (3): 493-540.

[3] Battaglini, Marco. 2005. “Long-Term Contracting with Markovian Consumers.”

American Economic Review 95 (3): 637-658.

[4] Bergemann, Dirk, and Ulrich Hege. 1998. “Venture capital financing, moral hazard, and learning.” Journal of Banking and Finance 22 (6): 703-735.

[5] Bergemann, Dirk, and Ulrich Hege. 2005. “The Financing of Innovation: Learning

and Stopping.” RAND Journal of Economics 36 (4): 719-752.

[6] Bhaskar, V. 2012. “Dynamic Moral Hazard, Learning and Belief Manipulation.”

http://www.ucl.ac.uk/∼uctpvbh.

[7] Cabrales, Antonio, Antoni Calvó-Armengol, and Nicola Pavoni. 2008.

“Social preferences, skill segregation, and wage dynamics.” Review of Economic Studies

75 (1): 65-98.

[8] DeMarzo, Peter M., and Yuliy Sannikov. 2011. “Learning, Termination, and Payout Policy in Dynamic Incentive Contracts.” http://www.princeton.edu/∼sannikov.

[9] Escobar, Juan F., and Juuso Toikka. 2012. “Efficiency in Games with Markovian

Private Information.” http://economics.mit.edu/faculty/toikka.

[10] Farhi, Emmanuel, and Iván Werning. 2013. “Insurance and taxation over the life

cycle.” Review of Economic Studies 80 (2): 596-635.

[11] Fernandes, Ana, and Christopher Phelan. 2000. “A Recursive Formulation for

Repeated Agency with History Dependence.” Journal of Economic Theory 91 (2):

223-247.

[12] Garrett, Daniel, and Alessandro Pavan. 2012. “Managerial Turnover in a Changing World.” Journal of Political Economy 120 (5): 879-925.

[13] Garrett, Daniel, and Alessandro Pavan. 2013. “Dynamic Managerial Compensation: On the Optimality of Seniority-based Schemes.”

http://faculty.wcas.northwestern.edu/∼apa522.

[14] Golosov, Mikhail, Maxim Troshkin, and Aleh Tsyvinski. 2011. “Optimal dynamic taxes.” National Bureau of Economic Research No. w17642.

[15] Green, Edward. 1987. “Lending and the Smoothing of Uninsurable Income.” Contractual arrangements for intertemporal trade 1: 3-25.

[16] Halac, Marina, Navin Kartik, and Qingmin Liu. 2012. “Optimal Contracts for

Experimentation.” http://www0.gsb.columbia.edu/faculty/mhalac/research.html.

[17] Harris, Milton, and Bengt Holmström. 1982. “A Theory of Wage Dynamics.”

Review of Economic Studies 49 (3): 315-333.

[18] He, Zhiguo, Bin Wei, and Jianfeng Yu. 2013. “Optimal Long-term Contracting

with Learning.” http://faculty.chicagobooth.edu/zhiguo.he/pubs.html.

[19] Holmström, Bengt. 1982. “Managerial Incentive Schemes—A Dynamic Perspective.” In Essays in Economics and Management in Honor of Lars Wahlbeck. Helsinki:

Swedish School of Economics.

[20] Hörner, Johannes, and Larry Samuelson. 2012. “Incentives for Experimenting

Agents.” https://sites.google.com/site/jo4horner.

[21] Jovanovic, Boyan, and Julien Prat. 2013. “Dynamic Contracts when Agent’s Quality is Unknown.” Forthcoming in Theoretical Economics.

[22] Kwon,

Suehyun. 2013. “Dynamic Moral Hazard with Persistent States.”

http://www.ucl.ac.uk/∼uctpskw.

[23] Spear, Stephen E., and Sanjay Srivastava. 1987. “On Repeated Moral Hazard

with Discounting.” Review of Economic Studies 54 (4): 599-617.

[24] Strulovici, Bruno. 2011. “Contracts, Information Persistence, and Renegotiation.”

http://faculty.wcas.northwestern.edu/∼bhs675/

[25] Tchistyi, Alexei. 2013. “Security Design with Correlated Hidden Cash Flows: The

Optimality of Performance Pricing.” http://faculty.haas.berkeley.edu/Tchistyi.

[26] Williams, Noah. 2011. “Persistent Private Information.” Econometrica 79 (4): 1233-1275.

[27] Zhang, Yuzhe. 2009. “Dynamic Contracting with Persistent Shocks.” Journal of Economic Theory 144 (2): 635-675.

A

Proofs

Proof of Proposition 1. Suppose the principal and the agent follow the equilibrium strategies after ht under a given contract and the principal wants the agent to work this period. If

the principal and the agent are in ω1 in the following period, there is no information asymmetry about the probability distributions of the outcome and the state transition in the

future. The history ht and the outcome this period determine the payment stream in the

future, and there exist V11 , V01 , W11 , W01 such that Vy1 is the agent’s payoff and Wy1 is

the principal’s payoff after outcome y. Similarly, one can define V10 , V00 , W10 , W00

if the state in the next period is ω0 .

The prior π after ht doesn’t affect the continuation values from the following period,

and π only affects the probability of transition into each state in the next period. Therefore,

the agent’s payoff satisfies

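\[
\begin{aligned}
V(h^t, \pi) ={} & -c + \pi_1 p_H \left( u(w_1) + \delta (M_{11} V_{11} + M_{12} V_{10}) \right) + \pi_1 (1 - p_H) \left( u(w_0) + \delta (M_{11} V_{01} + M_{12} V_{00}) \right) \\
& + (1 - \pi_1) \left( u(w_0) + \delta (M_{21} V_{01} + M_{22} V_{00}) \right),
\end{aligned}
\]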

and the principal’s payoff satisfies

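\[
\begin{aligned}
W(h^t, \pi) ={} & \pi_1 p_H \left( 1 - w_1 + \delta (M_{11} W_{11} + M_{12} W_{10}) \right) + \pi_1 (1 - p_H) \left( -w_0 + \delta (M_{11} W_{01} + M_{12} W_{00}) \right) \\
& + (1 - \pi_1) \left( -w_0 + \delta (M_{21} W_{01} + M_{22} W_{00}) \right).
\end{aligned}
\]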

If the principal wants the agent to shirk this period, they get their outside options

in the current period, and if the principal and the agent follow the equilibrium strategies

from the following period, the continuation values from the following period are completely

determined by what state they are in next period. There exist V1 , V0 , W1 , W0 such that for

any prior π,

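\[
\begin{aligned}
V(h^t, \pi) &= \bar{u} + (\pi_1 M_{11} + (1 - \pi_1) M_{21}) \delta V_1 + (\pi_1 M_{12} + (1 - \pi_1) M_{22}) \delta V_0, \\
W(h^t, \pi) &= \bar{v} + (\pi_1 M_{11} + (1 - \pi_1) M_{21}) \delta W_1 + (\pi_1 M_{12} + (1 - \pi_1) M_{22}) \delta W_0,
\end{aligned}
\]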

where Vi and Wi are the agent’s payoff and the principal’s payoff if they’re in ωi next

period.

Proof of Proposition 2. Suppose after history ht , the principal has prior π. If the agent

has never deviated before, the principal and the agent share the common prior π. From

Proposition 1, if the principal wants the agent to work this period, we can decompose the

agent’s continuation value by V11 , V10 , V01 and V00 . The IC constraint for the one-shot

deviation can be written as

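\[
\begin{aligned}
V(h^t, \pi) ={} & -c + \pi_1 p_H \left( u(w_1) + \delta (M_{11} V_{11} + M_{12} V_{10}) \right) + \pi_1 (1 - p_H) \left( u(w_0) + \delta (M_{11} V_{01} + M_{12} V_{00}) \right) \\
& + (1 - \pi_1) \left( u(w_0) + \delta (M_{21} V_{01} + M_{22} V_{00}) \right) \\
\geq{} & \pi_1 p_L \left( u(w_1) + \delta (M_{11} V_{11} + M_{12} V_{10}) \right) + \pi_1 (1 - p_L) \left( u(w_0) + \delta (M_{11} V_{01} + M_{12} V_{00}) \right) \\
& + (1 - \pi_1) \left( u(w_0) + \delta (M_{21} V_{01} + M_{22} V_{00}) \right).
\end{aligned}
\]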

Note that the continuation values from the following period and on are V11 , V10 , V01 and

V00 , and they don’t change when the agent deviates. The agent’s deviation affects the

distribution of outcomes in this period, but the agent’s expected payoffs in the continuation

game are completely determined by the outcome this period and the state in the next

period. The state transition is also exogenous, and the probabilities are independent of the

agent’s action.

The IC constraint can be rewritten as

\[
\pi_1 (p_H - p_L) \left( \left( u(w_1) + \delta (M_{11} V_{11} + M_{12} V_{10}) \right) - \left( u(w_0) + \delta (M_{11} V_{01} + M_{12} V_{00}) \right) \right) \geq c,
\]

and from Assumptions 1 and 2, the agent’s prior π̂ satisfies π̂1 ≥ π1 if he has deviated

before. The agent’s IC constraint for the one-shot deviation becomes

\[
\hat{\pi}_1 (p_H - p_L) \left( \left( u(w_1) + \delta (M_{11} V_{11} + M_{12} V_{10}) \right) - \left( u(w_0) + \delta (M_{11} V_{01} + M_{12} V_{00}) \right) \right) \geq c,
\]

and it is satisfied whenever the IC constraint holds for π. Therefore, the IC constraints for

one-shot deviations when the agent has never deviated before are sufficient conditions for all

IC constraints for one-shot deviations. Since the principal has within-period commitment

power, the agent’s payoff is bounded from above, and together with limited liability, we have

continuity at infinity. Therefore, the IC constraints for one-shot deviations are sufficient

conditions for all IC constraints.
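
The belief gap behind this argument can be illustrated in one period. The sketch below compares the posterior on the good state after a bad outcome when the agent worked (the principal’s belief) with the posterior when he secretly shirked (the agent’s own belief). It stops before the transition M is applied, and it assumes, as in the binary environment, that the good outcome occurs only in the good state. All numbers and names are hypothetical.

```python
def posterior_good_after_failure(pi1, p):
    """Posterior on the good state after the bad outcome.

    p is the probability of the good outcome in the good state under the
    action actually taken; the good outcome never occurs in the bad state.
    """
    return pi1 * (1 - p) / (pi1 * (1 - p) + (1 - pi1))


def ic_lhs(pi1, p_H, p_L, gain):
    """Left-hand side of the rewritten IC constraint; gain is the bracketed difference."""
    return pi1 * (p_H - p_L) * gain


# Hypothetical numbers, for illustration only.
pi1, p_H, p_L, gain, c = 0.5, 0.8, 0.3, 1.9, 0.02
pi_principal = posterior_good_after_failure(pi1, p_H)  # belief if the agent worked
pi_agent = posterior_good_after_failure(pi1, p_L)      # agent's belief after shirking
```

A failure is less informative about the state when the agent shirked, so `pi_agent` (about 0.41) exceeds `pi_principal` (about 0.17). The IC constraint that holds at the principal’s belief then holds at the agent’s belief as well.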

Proof of Proposition 3. At every period, the IR constraints for the principal and the agent

should hold. The agent also has the IC constraints, and he has limited liability. From

Proposition 2, it is sufficient to consider the IC constraint for the one-shot deviation when

the agent has never deviated before. The principal also should have incentives to offer the

on-the-equilibrium-path contract rather than to deviate. Since we are interested in the set of all

PBEs, there is no loss of generality in assuming that off the equilibrium path, the agent

chooses the least expensive action, and anticipating this, the principal takes his outside

option. Therefore, the IC constraint for the principal can be replaced with the IR constraint. The other

equalities are for accounting, and the set of all PBEs is the largest self-generating set subject

to the constraints.

Proof of Proposition 4. Consider the IC constraint for the one-shot deviation from ai to aj .

From Assumption 2, j < i, and the IC constraint is given by

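\[
\begin{aligned}
V(h^t, \pi) &= -c_i + \int \pi(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) dF(y \mid a_i, \omega) \, d\omega' \, d\omega \\
&\geq -c_j + \int \pi(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) dF(y \mid a_j, \omega) \, d\omega' \, d\omega.
\end{aligned}
\]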

We can rewrite the IC constraint as

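\[
\int \pi(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) \left( dF(y \mid a_i, \omega) - dF(y \mid a_j, \omega) \right) d\omega' \, d\omega \geq c_i - c_j.
\]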

Supermodularity implies that $\int \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) \left( dF(y \mid a_i, \omega) - dF(y \mid a_j, \omega) \right) d\omega'$ increases with ω. If the agent has deviated previously, the agent took a less costly action,

and Assumption 1 implies that the agent’s prior π̂ dominates the principal’s prior π in the

sense of first-order stochastic dominance. Together with the supermodularity, we know

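\[
\begin{aligned}
& \int \hat{\pi}(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) \left( dF(y \mid a_i, \omega) - dF(y \mid a_j, \omega) \right) d\omega' \, d\omega \\
\geq{} & \int \pi(\omega) \left( u(w_y) + \delta m(\omega' \mid \omega) V_{y\omega'} \right) \left( dF(y \mid a_i, \omega) - dF(y \mid a_j, \omega) \right) d\omega' \, d\omega \\
\geq{} & c_i - c_j,
\end{aligned}
\]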

and the agent will work again in the current period even if he has deviated before. Since

the principal has within-period commitment power and the agent has limited liability, the

agent’s continuation values are bounded, and we have continuity at infinity. The IC constraints for one-shot deviations when the agent has never deviated before are sufficient

conditions for all IC constraints.

Proof of Proposition 5. The set of constraints is the same as in the binary environment.

From Proposition 4, it is sufficient to consider the IC constraint for the one-shot deviation

when the agent has never deviated before. Si is the set of payoffs the principal can implement

if he wants the agent to take action ai in the current period. S0 is the set of payoffs

when the principal takes his outside option. Therefore, the set of all PBEs is the union of

Si , i = 1, · · · , n and S0 .
