Mean field Ising models Sumit Mukherjee Columbia University

Mean field Ising models

Sumit Mukherjee

Columbia University

Joint work with Anirban Basak, Duke University

What is an Ising model?

An Ising model is a probability distribution on $\{-1, 1\}^n$.

The probability mass function of the Ising model is given by

$$P_n(X = x) = e^{\frac{\beta}{2} x' A_n x - F_n}.$$

Here $A_n$ is a symmetric $n \times n$ matrix with 0 on the diagonal, and $\beta > 0$ is a one-dimensional parameter usually referred to as the inverse temperature.

$F_n$ is the log partition function, which will be the main focus of this talk.
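For small $n$ the log partition function can be computed by direct enumeration, which makes the definition concrete. The following is a minimal sketch in Python; the function name `log_partition` and the two-spin example are illustrative assumptions rather than part of the talk, and the cost grows like $2^n$.

```python
import itertools
import math

def log_partition(A, beta):
    """Brute-force F_n = log sum_x exp((beta/2) x' A x) over x in {-1, 1}^n."""
    n = len(A)
    total = 0.0
    for x in itertools.product((-1, 1), repeat=n):
        # Quadratic form x' A x for this spin configuration.
        quad = sum(A[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
        total += math.exp(0.5 * beta * quad)
    return math.log(total)

# Two spins joined by one edge: x' A x = 2 * x1 * x2, so the sum over the
# four configurations is 2e^beta + 2e^(-beta) = 4 cosh(beta).
A = [[0.0, 1.0], [1.0, 0.0]]
F = log_partition(A, beta=0.7)
```

With $A_n = 0$ every configuration has weight 1, so the same function returns $n \log 2$.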

Applications of Ising model

Ferromagnetism: The Ising model was introduced by Wilhelm Lenz in 1920 to study the orientation of electrons in ferromagnetic materials. The idea is that if most of the electrons are oriented in the same direction, we observe magnetism.

Lattice gas: Ising models have been used to model the motion of gas atoms by partitioning space into a lattice. Each position is either 1 or −1 depending on whether an atom is present or absent.

Neuroscience: Ising models have been used to study neuron activity in the brain. Here each neuron is +1 if active, and −1 if inactive.

A generalization of the Ising model to more than two states (the Potts model) has been used to study protein folding and image processing.

Commonly studied Ising models

Ferromagnetic Ising model on general graphs: $A_n$ is the adjacency matrix of a finite graph (scaled by the average degree):

Lattice graphs: The original paper by Ising (1925) considered the one-dimensional lattice; subsequent focus has been on higher-dimensional lattices;

Curie-Weiss model: Complete graph;

Random graphs: Erdős-Rényi, random regular, etc.

Sherrington-Kirkpatrick: $A_n$ is a matrix of i.i.d. $N(0, 1/n)$ entries.

Hopfield model of neural networks: $A_n = \frac{1}{n} B_n B_n'$, with $B_n$ a matrix of i.i.d. random variables taking values $\pm 1$ with probability $\frac{1}{2}$.

Why is the log partition function important?

The log partition function gives information about the moments of the distribution. For example, up to a constant factor depending on $\beta$,

$$\frac{\partial F_n}{\partial A_n(i,j)} = E(X_i X_j).$$

Similar statements hold for higher derivatives.

The partition function gives information about the stable configurations under the model.

The uniform distribution on the space of $q$-colorings of a graph is a (limiting) Potts model, and the partition function is the number of $q$-colorings of the graph.

Many estimation techniques in Statistics require knowledge, or good estimates, of the partition function.
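The coloring statement can be checked by brute force on a small graph. The sketch below counts proper $q$-colorings by enumeration; the helper `count_colorings` and the two example graphs are hypothetical illustrations, not taken from the talk.

```python
import itertools

def count_colorings(n, edges, q):
    """Count assignments of q colors to n vertices with no monochromatic edge."""
    return sum(
        all(c[u] != c[v] for u, v in edges)
        for c in itertools.product(range(q), repeat=n)
    )

triangle = [(0, 1), (1, 2), (0, 2)]
four_cycle = [(0, 1), (1, 2), (2, 3), (3, 0)]

print(count_colorings(3, triangle, 3))    # 3 * 2 * 1 = 6
print(count_colorings(4, four_cycle, 3))  # (q-1)^4 + (q-1) = 18 for q = 3
```

Enumeration costs $q^n$ evaluations, which is one reason good estimates of the partition function are valuable.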

Mean field technique of Statistical Physics

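Let $D(\cdot\|\cdot)$ denote the Kullback-Leibler divergence.

Then for any probability measure $Q_n$ on $\{-1, 1\}^n$, $D(Q_n\|P_n)$ equals

$$\begin{aligned}
\sum_{x\in\{-1,1\}^n} Q_n(x)\log\frac{Q_n(x)}{P_n(x)}
&= \sum_{x\in\{-1,1\}^n} Q_n(x)\log Q_n(x) - \sum_{x\in\{-1,1\}^n} Q_n(x)\log P_n(x) \\
&= \sum_{x\in\{-1,1\}^n} Q_n(x)\log Q_n(x) - \frac{\beta}{2}\sum_{x\in\{-1,1\}^n} Q_n(x)\, x' A_n x + \sum_{x\in\{-1,1\}^n} Q_n(x) F_n \\
&= E_{Q_n}\Big\{\log Q_n(X) - \frac{\beta}{2} X' A_n X + F_n\Big\}.
\end{aligned}$$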

Mean field technique of Statistical Physics

Since $D(Q_n\|P_n) \ge 0$ with equality iff $Q_n = P_n$, we have

$$F_n \ge E_{Q_n}\Big\{\frac{\beta}{2} X' A_n X - \log Q_n(X)\Big\},$$

with equality iff $Q_n = P_n$.

Equivalently,

$$F_n = \sup_{Q_n} E_{Q_n}\Big\{\frac{\beta}{2} X' A_n X - \log Q_n(X)\Big\},$$

where the sup is over all probability measures on $\{-1, 1\}^n$.

Mean field technique of Statistical Physics

Instead, if we restrict the sup to product measures $Q_n$, we get a lower bound

$$F_n \ge \sup_{Q_n} E_{Q_n}\Big\{\frac{\beta}{2} X' A_n X - \log Q_n(X)\Big\}. \tag{1}$$

Under a product measure $Q_n$, each $X_i$ is independently distributed with mean $m_i \in [-1, 1]$, say.

Then $X_i$ takes the values $1$ and $-1$ with probabilities $\frac{1+m_i}{2}$ and $\frac{1-m_i}{2}$ respectively.

Also $Q_n(x)$ becomes $\prod_{i=1}^n Q_{n,i}(x_i)$, which on taking expectation gives

$$E_{Q_n}\log Q_n(X) = \sum_{i=1}^n E\log Q_{n,i}(X_i)$$

Mean field technique of Statistical Physics

$$= \sum_{i=1}^n \Big[\frac{1+m_i}{2}\log\frac{1+m_i}{2} + \frac{1-m_i}{2}\log\frac{1-m_i}{2}\Big].$$

Thus the RHS of (1) on the last slide becomes

$$\frac{\beta}{2} m' A_n m + \sum_{i=1}^n I(m_i),$$

where $I(x) = -\frac{1+x}{2}\log\frac{1+x}{2} - \frac{1-x}{2}\log\frac{1-x}{2}$ is the binary entropy function.

Combining, we have $F_n \ge M_n$, where

$$M_n = \sup_{m\in[-1,1]^n}\Big\{\frac{\beta}{2} m' A_n m + \sum_{i=1}^n I(m_i)\Big\}.$$

This lower bound on the log partition function is referred to as the mean field prediction.
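The inequality $F_n \ge M_n$ can be sanity-checked numerically on a tiny graph. The sketch below is an illustration under stated assumptions: the names `entropy`, `mean_field_objective` and `log_partition` are hypothetical, the graph is a triangle scaled by its degree, and the sup defining $M_n$ is only approximated from below on a coarse grid.

```python
import itertools
import math

def entropy(m):
    """Binary entropy I(m) of a +-1 variable with mean m, with 0 log 0 = 0."""
    total = 0.0
    for p in ((1 + m) / 2, (1 - m) / 2):
        if p > 0:
            total -= p * math.log(p)
    return total

def mean_field_objective(A, beta, m):
    """(beta/2) m' A m + sum_i I(m_i) for a vector of means m."""
    n = len(A)
    quad = sum(A[i][j] * m[i] * m[j] for i in range(n) for j in range(n))
    return 0.5 * beta * quad + sum(entropy(mi) for mi in m)

def log_partition(A, beta):
    """Brute-force F_n by summing over all 2^n spin configurations."""
    n = len(A)
    total = 0.0
    for x in itertools.product((-1, 1), repeat=n):
        quad = sum(A[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
        total += math.exp(0.5 * beta * quad)
    return math.log(total)

# Triangle (2-regular), adjacency matrix scaled by the degree 2.
A = [[0.0, 0.5, 0.5], [0.5, 0.0, 0.5], [0.5, 0.5, 0.0]]
beta = 1.5
F = log_partition(A, beta)

# Grid approximation to M_n; it can only undershoot the true sup.
grid = [k / 10 for k in range(-10, 11)]
best = max(mean_field_objective(A, beta, m)
           for m in itertools.product(grid, repeat=3))
print(best, "<=", F)
```

Because the grid value never exceeds $M_n$, seeing `best <= F` is consistent with the bound but does not measure how tight it is.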

Eg: Mean field prediction for Regular graphs

For a regular graph with degree $d_n$, the entry $A_n(i, j)$ is taken to be $\frac{1}{d_n}$ if $i \sim j$, and 0 otherwise.

This scaling ensures that the resulting Ising model is nontrivial for all values of $d_n$.

By our scaling, each row sum of $A_n$ equals 1.

Upper bound for Mn

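Thus the Perron-Frobenius theorem gives $\lambda_\infty(A_n) = 1$.

This gives

$$\begin{aligned}
M_n &\le \sup_{m\in[-1,1]^n}\Big\{\frac{\beta}{2} m'm + \sum_{i=1}^n I(m_i)\Big\} \\
&= \sup_{m\in[-1,1]^n} \sum_{i=1}^n \Big\{\frac{\beta}{2} m_i^2 + I(m_i)\Big\} \\
&= \sum_{i=1}^n \sup_{m_i\in[-1,1]} \Big\{\frac{\beta}{2} m_i^2 + I(m_i)\Big\} \\
&= n \sup_{m\in[-1,1]} \Big\{\frac{\beta}{2} m^2 + I(m)\Big\}.
\end{aligned}$$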

Lower bound for Mn

Also, by restricting the sup to $m \in [-1, 1]^n$ such that $m_i = m$ is the same for all $i$, we have

$$\begin{aligned}
M_n &\ge \sup_{m\in[-1,1]}\Big\{\frac{\beta}{2} m^2\, \mathbf{1}' A_n \mathbf{1} + n I(m)\Big\} \\
&= n \sup_{m\in[-1,1]}\Big\{\frac{\beta}{2} m^2 + I(m)\Big\}.
\end{aligned}$$

In the last line we use $A_n \mathbf{1} = \mathbf{1}$.

Thus, combining, we have

$$M_n = n \sup_{m\in[-1,1]}\Big\{\frac{\beta}{2} m^2 + I(m)\Big\}.$$

Solving the optimization

Consider the optimization of the function $m \mapsto \frac{\beta}{2} m^2 + I(m)$ on $[-1, 1]$.

Differentiating with respect to $m$ gives the equation $m = \tanh(\beta m)$.

If $\beta \le 1$ then this equation has the unique solution $m = 0$.

If $\beta > 1$ then this equation has three solutions, $\{0, m_\beta, -m_\beta\}$.

Of these, 0 is a local minimizer, and $\pm m_\beta$ are global maximizers.
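The positive root $m_\beta$ has no closed form, but the equation $m = \tanh(\beta m)$ can be solved by simple fixed-point iteration. The following is a minimal sketch; the function name `solve_fixed_point`, the starting point and the tolerance are assumptions made for illustration.

```python
import math

def solve_fixed_point(beta, m0=0.5, tol=1e-12, max_iter=100_000):
    """Iterate m <- tanh(beta * m) from m0 > 0 until successive values agree."""
    m = m0
    for _ in range(max_iter):
        m_next = math.tanh(beta * m)
        if abs(m_next - m) < tol:
            return m_next
        m = m_next
    return m  # not converged within max_iter (happens near beta = 1)

m_low = solve_fixed_point(0.5)   # beta <= 1: the iterates shrink to 0
m_high = solve_fixed_point(2.0)  # beta > 1: the iterates approach m_beta > 0
print(m_low, m_high)
```

Starting from a negative `m0` gives $-m_\beta$ by symmetry. Convergence is slow when $\beta$ is close to 1, where the map has slope close to 1 at the origin.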

So?

For any sequence of $d_n$-regular graphs, we have

$$\liminf_{n\to\infty} \frac{1}{n} F_n \ge \sup_{m\in[-1,1]}\Big\{\frac{\beta}{2} m^2 + I(m)\Big\}.$$

If the graph happens to be a complete graph ($d_n = n - 1$), then using large deviation arguments for i.i.d. random variables one can show the limit is tight.

It is also known that equality does not hold in the above limit if the graph happens to be a random $d_n$-regular graph with $d_n = d$ fixed.

This raises the natural question: “For which sequences of regular graphs is the above limit tight?”
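For the complete graph the claim can be checked numerically, because the energy depends on $x$ only through $S = \sum_i x_i$: with $A_n$ the adjacency matrix divided by $n - 1$, one has $x' A_n x = (S^2 - n)/(n - 1)$. The sketch below sums over the number of minus spins instead of over all $2^n$ configurations; the function names and the grid size are assumptions chosen for illustration.

```python
import math

def entropy(m):
    """Binary entropy I(m) of a +-1 variable with mean m, with 0 log 0 = 0."""
    total = 0.0
    for p in ((1 + m) / 2, (1 - m) / 2):
        if p > 0:
            total -= p * math.log(p)
    return total

def curie_weiss_free_energy(n, beta):
    """F_n / n for the complete graph with A_n = adjacency / (n - 1)."""
    logs = []
    for k in range(n + 1):  # k = number of spins equal to -1
        s = n - 2 * k
        log_count = (math.lgamma(n + 1) - math.lgamma(k + 1)
                     - math.lgamma(n - k + 1))  # log of binomial(n, k)
        logs.append(log_count + 0.5 * beta * (s * s - n) / (n - 1))
    top = max(logs)  # log-sum-exp for numerical stability
    return (top + math.log(sum(math.exp(v - top) for v in logs))) / n

def mean_field_limit(beta, grid=20001):
    """Grid approximation to sup over m in [-1, 1] of (beta/2) m^2 + I(m)."""
    points = (-1 + 2 * k / (grid - 1) for k in range(grid))
    return max(0.5 * beta * m * m + entropy(m) for m in points)

for beta in (0.5, 2.0):
    print(beta, curie_weiss_free_energy(2000, beta), mean_field_limit(beta))
```

The two printed columns should agree up to a correction that vanishes as $n$ grows, in line with the tightness statement for the complete graph.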

Regular graphs continued

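In a regular graph the scaled adjacency matrix has maximum eigenvalue 1, with eigenvector $\frac{1}{\sqrt{n}}\mathbf{1}$.

Assume that all other eigenvalues are $o_n(1)$.

Thus we have $\lambda_\infty\big(A_n - \frac{1}{n}\mathbf{1}\mathbf{1}'\big) = o_n(1)$.

For any $x \in \{-1, 1\}^n$, this gives

$$\begin{aligned}
& x'\Big(A_n - \frac{1}{n}\mathbf{1}\mathbf{1}'\Big)x \approx o_n(1)\, x'x = o(n) \\
\Rightarrow\ & x' A_n x = \frac{1}{n}\Big(\sum_{i=1}^n x_i\Big)^2 + o(n) = \frac{1}{n}\Big[n + \sum_{i\ne j} x_i x_j\Big] + o(n).
\end{aligned}$$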

Regular graphs continued

Summing over $x \in \{-1, 1\}^n$ we have

$$\sum_{x\in\{-1,1\}^n} e^{\frac{\beta}{2} x' A_n x} \approx e^{o(n)} \sum_{x\in\{-1,1\}^n} e^{\frac{\beta}{2n}\sum_{i\ne j} x_i x_j}.$$

Up to an $o(n)$ term in the exponent, the RHS above is exactly the partition function of the Curie-Weiss model.

Thus using the Curie-Weiss solution we readily get

$$\lim_{n\to\infty} \frac{F_n}{n} = \sup_{m\in[-1,1]}\Big\{\frac{\beta}{2} m^2 + I(m)\Big\}.$$

The above heuristic can be made rigorous, and so we get the same asymptotics for any regular graph, as soon as the matrix $A_n$ has only one dominant eigenvalue, i.e. there is a spectral gap.

Example: Erdős-Rényi graphs

Suppose $G_n$ is the adjacency matrix of an Erdős-Rényi graph on $n$ vertices with parameter $p_n$.

In this case we choose $A_n$ to be $G_n$ scaled by $np_n$, which is the average degree.

If $p_n \gg \frac{\log n}{n}$ then $\lambda_\infty(G_n) \approx np_n$ (Krivelevich-Sudakov ’03), and $\lambda_\infty(G_n - p_n \mathbf{1}\mathbf{1}') = O_p(\sqrt{np_n})$ (Feige-Ofek ’05).

Thus $\lambda_\infty\big(A_n - \frac{1}{n}\mathbf{1}\mathbf{1}'\big) = O_p\big(\frac{1}{\sqrt{np_n}}\big) = o_n(1)$, as $np_n \gg \log n$.

Example: Erdős-Rényi graphs

Thus it follows that

$$\lim_{n\to\infty} \frac{F_n}{n} = \sup_{m\in[-1,1]}\Big\{\frac{\beta}{2} m^2 + I(m)\Big\}.$$

If $p_n \ll \frac{\log n}{n}$ then estimates for $\lambda_1(G_n)$ and $\lambda_\infty(G_n - p_n \mathbf{1}\mathbf{1}')$ are not as nice.

Also we know the mean field prediction does not hold when $np_n$ is constant.

Thus a natural question is where the mean field approximation breaks down.

Example: Random $d_n$-regular graphs

Suppose $G_n$ is the adjacency matrix of a random $d_n$-regular graph, and $A_n$ is $G_n$ scaled by $d_n$.

In this case $\lambda_1(G_n) = d_n$, and $\lambda_2(G_n) = o(d_n)$ as soon as $d_n \ge (\log n)^\gamma$ for some $\gamma$ (Chung-Lu-Vu ’03, Coja-Oghlan-Lanka ’09).

Thus a similar argument goes through, proving that the mean field prediction is asymptotically correct if $d_n \ge (\log n)^\gamma$.

Again there is a question of how far one can stretch this result. It is known that the mean field prediction is not correct if $d_n = d$ is fixed.

What is the underlying picture?

If the random graph is dense enough, then the mean field prediction is expected to hold via the dominant eigenvalue argument.

How dense is dense enough depends on the underlying model.

None of the results mentioned above applies to a specific sequence of nonrandom graphs.

However, the mean field prediction has this very nice form for any regular graph.

Example: The Hypercube

Suppose $G_n$ is the adjacency matrix of the $d$-dimensional hypercube $\{0, 1\}^d$.

In this case the number of vertices is $n = 2^d$.

$G_n$ has eigenvalues $d - 2i$ with multiplicity $\binom{d}{i}$, for $0 \le i \le d$.

Thus the eigenvalues of $G_n$ range over $[-d, d]$, and there is no dominant eigenvalue.

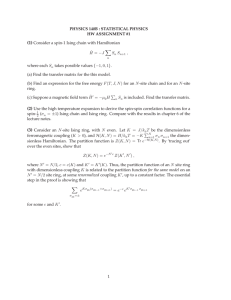

Example: The Hypercube (d = 50)

Figure: Histogram of eigenvalues of the hypercube with d = 50.

Example: The hypercube

In this case $d = d_n = \log_2 n$, which is somewhere between sparse and dense graphs.

There is essentially no spectral gap, and hence the dominant eigenvalue argument fails.

Thus it is not clear even at a heuristic level whether the mean field prediction should be correct.

A more general question

More generally, for a general sequence of matrices $A_n$ (not necessarily adjacency matrices), we pose the question of when the mean field prediction is tight.

Thus we look for a sufficient condition on the sequence of matrices $A_n$ such that

$$\lim_{n\to\infty} \frac{1}{n}(F_n - M_n) = 0.$$

If this holds, then we are able to reduce the asymptotics of $F_n$ to the asymptotics of $M_n$.

A follow-up question is how to solve the optimization problem in $M_n$. As we checked, the optimization problem is easy for regular graphs.

The proposed solution

We claim that a sufficient condition for the mean field prediction to hold is
$$\sum_{i,j=1}^n A_n(i,j)^2 = \mathrm{tr}(A_n^2) = \sum_{i=1}^n \lambda_i^2 = o(n).$$

This basically says that the empirical eigenvalue distribution converges to 0 in $L^2$.

One way to think about this claim is that in this case most of the eigenvalues are $o(1)$.

Thus the number of dominant eigenvalues is $o(n)$.
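One way to make the step from the trace condition to "few dominant eigenvalues" precise is a Chebyshev-type counting bound. The following is only a sketch; the threshold $\varepsilon > 0$ is a symbol introduced here for illustration and is not part of the slides.

```latex
\[
\#\{\, i : |\lambda_i| > \varepsilon \,\}
\;\le\; \frac{1}{\varepsilon^{2}} \sum_{i=1}^{n} \lambda_i^{2}
\;=\; \frac{o(n)}{\varepsilon^{2}}
\;=\; o(n)
\qquad \text{for every fixed } \varepsilon > 0 .
\]
```

So for any fixed threshold, all but $o(n)$ of the eigenvalues lie in $[-\varepsilon, \varepsilon]$.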

Example: Ising models on graphs

In this case $A_n$ is the adjacency matrix of $G_n$ scaled by the average degree $\frac{2E(G_n)}{n}$.

Thus we have
$$\sum_{i,j=1}^n A_n(i,j)^2 = \frac{n^2}{4E(G_n)^2} \sum_{i,j=1}^n 1\{i \sim j\} = \frac{n^2}{2E(G_n)}.$$

The RHS is $o(n)$ iff $E(G_n) \gg n$.

Thus the mean field prediction holds as soon as the graph has a superlinear number of edges.
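The computation above can be checked numerically on small graphs. The sketch below assumes the graph is given as a list of undirected edges; the function names `frobenius_per_vertex`, `complete_graph`, and `cycle_graph` are hypothetical helpers introduced for illustration only.

```python
def frobenius_per_vertex(n, edges):
    """Return (1/n) * sum_{i,j} A_n(i,j)^2, where A_n is the adjacency
    matrix divided by the average degree 2E/n (assumed normalization)."""
    num_edges = len(edges)
    scale = n / (2 * num_edges)  # value of A_n(i,j) on each edge
    # Each undirected edge contributes two matrix entries, (i,j) and (j,i).
    total = sum(2 * scale ** 2 for _ in edges)
    return total / n


def complete_graph(n):
    """Edges of K_n on vertices 0..n-1."""
    return [(i, j) for i in range(n) for j in range(i + 1, n)]


def cycle_graph(n):
    """Edges of the n-cycle on vertices 0..n-1 (n >= 3)."""
    return [(i, (i + 1) % n) for i in range(n)]


if __name__ == "__main__":
    for n in (10, 100, 1000):
        dense = frobenius_per_vertex(n, complete_graph(n))
        sparse = frobenius_per_vertex(n, cycle_graph(n))
        print(n, dense, sparse)
```

For the complete graph the ratio equals $n/(2E) = 1/(n-1)$ and tends to 0, while for the cycle it stays at $1/2$, matching the dichotomy between superlinear and linear edge counts.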

Example: Erdős-Rényi graphs

In this case $2E(G_n) \approx n^2 p_n$.

This is superlinear as soon as $n p_n \to \infty$.

Also, if $n p_n = c$ is fixed, then the mean field prediction does not hold.

This gives a complete picture for Erdős-Rényi graphs.

Example: Regular graphs

In this case $2E(G_n) = n d_n$.

This is superlinear as soon as $d_n \to \infty$.

Thus the mean field prediction holds for any sequence of $d_n$-regular graphs, as soon as $d_n \to \infty$. In particular this covers the hypercube, as $d_n = \log_2 n$.

For $d_n = d$ fixed, the mean field prediction does not hold.
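As a concrete instance, the hypercube case can be checked directly. This is a minimal sketch assuming vertices are labeled by integers $0, \dots, 2^{d}-1$ and edges join labels differing in one bit; `hypercube_edges` and `hypercube_condition_ratio` are illustrative names, not from the talk.

```python
def hypercube_edges(dim):
    """Edges of the dim-dimensional hypercube on n = 2**dim vertices."""
    n = 1 << dim
    edges = []
    for v in range(n):
        for k in range(dim):
            w = v ^ (1 << k)  # flip bit k
            if v < w:         # record each undirected edge once
                edges.append((v, w))
    return edges


def hypercube_condition_ratio(dim):
    """Return (1/n) * sum_{i,j} A_n(i,j)^2 = n / (2E) for the hypercube,
    with A_n the adjacency matrix divided by the average degree."""
    n = 1 << dim
    num_edges = len(hypercube_edges(dim))
    return n / (2 * num_edges)


if __name__ == "__main__":
    for dim in range(1, 11):
        print(dim, 1 << dim, hypercube_condition_ratio(dim))
```

The ratio equals $1/d_n = 1/\log_2 n$, which tends to 0, though only logarithmically in $n$.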

Sanity check: SK Model

In this case $A_n(i,j) = \frac{1}{\sqrt{n}} Z(i,j)$, where $\{Z(i,j),\ 1 \le i < j \le n\} \overset{\text{i.i.d.}}{\sim} N(0,1)$.

This gives
$$\frac{1}{n} \sum_{i,j=1}^n A_n(i,j)^2 = \frac{1}{n^2} \sum_{i,j=1}^n Z(i,j)^2 \overset{p}{\to} 1.$$

Thus we have $\sum_{i,j=1}^n A_n(i,j)^2 = \Theta_p(n)$, and so the mean field condition does not hold.

This is what should be the case, as the mean field prediction does not hold for the SK Model.
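The limit above is easy to observe in simulation. The sketch below assumes a symmetric matrix with zero diagonal and draws the upper-triangular Gaussians with Python's standard `random` module; the function name and the `seed` parameter are illustrative choices.

```python
import random


def sk_frobenius_per_vertex(n, seed=0):
    """Simulate (1/n) * sum_{i,j} A_n(i,j)^2 for A_n(i,j) = Z(i,j)/sqrt(n),
    with Z(i,j) i.i.d. N(0,1) for i < j, symmetric, zero diagonal."""
    rng = random.Random(seed)
    total = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            a = rng.gauss(0.0, 1.0) / n ** 0.5
            total += 2 * a * a  # entries (i,j) and (j,i)
    return total / n


if __name__ == "__main__":
    for n in (50, 200, 800):
        print(n, sk_frobenius_per_vertex(n))
```

The printed values should hover near 1 rather than decay to 0, which is the failure of the $o(n)$ condition.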

Comments about our results

Our results allow for the presence of an external magnetic field term of the form $B \sum_{i=1}^n x_i$ in the Ising model.

Our results apply for the Potts model as well, where we have $q$ states instead of 2.

There is a technical condition required on the matrix $A_n$, which is
$$\sup_{x \in [-1,1]^n} \sum_{i=1}^n \sum_{j=1}^n x_i A_n(i,j) = O(n).$$

We don't know of a single model in the literature which violates this assumption, be it mean field or otherwise (Ising model on all graphs, SK Model, Hopfield Model).

What about the optimization problem?

Our general sufficient condition gives a wide class of graphs for which the mean field prediction holds.

This means that for Ising models on such graphs we have $\lim_{n\to\infty} \frac{1}{n}(F_n - M_n) = 0$.

Recall that
$$M_n = \sup_{m \in [-1,1]^n} \Big\{ \frac{1}{2}\, m' A_n m + \sum_{i=1}^n I(m_i) \Big\}.$$

Solving this for regular graphs was easy. What can we say about other graphs?

Approximately regular graphs

Suppose all the degrees $d_i$ are approximately close to $\bar{d}$, with $\bar{d} \to \infty$.

Then one expects the same asymptotics for $F_n$ as in the regular graph case.

We show this is the case under the assumption that the empirical distribution $\frac{1}{n} \sum_{i=1}^n \delta_{d_i/\bar{d}} \to \delta_1$.

As a by-product of our analysis, we show that $\bar{X}$ has the same large deviation for all approximately regular graphs in the ferromagnetic domain ($\beta > 0$), as soon as the average degree goes to $+\infty$.

Ising model on bi-regular bi-partite graphs

Let $G_n$ be a sequence of bi-regular bi-partite graphs with bipartition sizes $a_n$ and $b_n$.

Thus we have $a_n + b_n = n$, the total number of vertices.

Assume that $\lim_{n\to\infty} \frac{a_n}{n} = p \in (0,1)$.

This removes the possibility that one of the partitions dominates.

Ising model on bi-regular bi-partite graphs

Assume that each bi-partition has constant degree $c_n$ and $d_n$ respectively.

Then we must have $a_n c_n = b_n d_n$, which equals the total number of edges.

Thus for $E(G_n)$ to be superlinear, we need $c_n$ (or equivalently $d_n$) to go to $+\infty$.

Ising model on bi-regular bi-partite graphs

Choose $A_n$ to be the adjacency matrix of $G_n$ scaled by $\frac{1}{c_n + d_n}$.

The asymptotics of $\frac{F_n}{n}$ is given by
$$\sup_{m_1, m_2 \in [-1,1]} \big\{ \beta p(1-p)\, m_1 m_2 + p\, I(m_1) + (1-p)\, I(m_2) \big\}.$$

Thus again the asymptotics is universal for any sequence of bi-regular bi-partite graphs, as soon as the average degree goes to $+\infty$.

Differentiating with respect to $m_1, m_2$ we get the equations
$$m_1 = \tanh(\beta(1-p)\, m_2), \qquad m_2 = \tanh(\beta p\, m_1).$$

Ising model on bi-regular bi-partite graphs

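If $|\beta| \le \frac{1}{\sqrt{p(1-p)}}$ then there is a unique solution $(m_1, m_2) = (0, 0)$.

If $\beta > \frac{1}{\sqrt{p(1-p)}}$, then there are two nonzero solutions $(x_{\beta,p}, y_{\beta,p})$ and $(-x_{\beta,p}, -y_{\beta,p})$.

If $\beta < -\frac{1}{\sqrt{p(1-p)}}$, then there are again two nonzero solutions $(x_{\beta,p}, -y_{\beta,p})$ and $(-x_{\beta,p}, y_{\beta,p})$.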
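The threshold can be seen by linearizing the equations $m_1 = \tanh(\beta(1-p)m_2)$, $m_2 = \tanh(\beta p m_1)$ near the origin: substituting one into the other gives $m_1 \approx \beta^2 p(1-p)\, m_1$, so a nonzero solution requires $\beta^2 p(1-p) > 1$. The sketch below solves the pair of equations by plain fixed-point iteration; this is one possible numerical approach rather than the method of the talk, and the function names, starting point, and iteration count are assumptions made for illustration.

```python
import math


def critical_beta(p):
    """Threshold 1 / sqrt(p (1 - p)) from the slide."""
    return 1.0 / math.sqrt(p * (1.0 - p))


def solve_bipartite_fixed_point(beta, p, m1=0.5, m2=0.5, iters=10_000):
    """Iterate m1 = tanh(beta (1-p) m2), m2 = tanh(beta p m1)
    from an (assumed) positive starting point."""
    for _ in range(iters):
        m1 = math.tanh(beta * (1.0 - p) * m2)
        m2 = math.tanh(beta * p * m1)
    return m1, m2


if __name__ == "__main__":
    p = 0.5
    print("critical beta:", critical_beta(p))
    for beta in (1.0, 3.0, -3.0):
        print(beta, solve_bipartite_fixed_point(beta, p))
```

Below the threshold the iteration collapses to $(0,0)$; above it the iterates settle on a pair with equal signs for $\beta > 0$ and opposite signs for $\beta < 0$. Exactly at the threshold convergence is slow, so the sketch is not reliable there.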

Difference between $K_n$ and $K_{n/2,n/2}$

Consider the Ising model on two graphs, the complete graph and the complete bi-partite graph.

If $\beta > 0$ then both models show the same asymptotic behavior. In particular, there is a phase transition in $\beta$.

If $\beta < 0$ then the two models behave differently. The Ising model on the complete bi-partite graph shows a phase transition, whereas that on the complete graph does not.

Graphs converging in cut metric

Given a graph $G_n$ on $n$ vertices, one can define a function $W_{G_n}$ on the unit square as follows:

Partition the unit square into $n^2$ squares each of equal size.

Define $W_{G_n}$ to be equal to 1 on the $(i,j)$th box if $(i,j)$ is an edge in $G_n$, and 0 otherwise.

Thus $W_{G_n}$ is a function on the unit square, with
$$\int_{[0,1]^2} |W_{G_n}(x,y)|\,dx\,dy = \frac{2E(G_n)}{n^2}.$$
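The construction of $W_{G_n}$ can be written out directly. The following is a minimal sketch assuming vertices are labeled $0, \dots, n-1$ and box $(i,j)$ is $[i/n,(i+1)/n) \times [j/n,(j+1)/n)$; the names `step_graphon` and `integral_of_step_graphon` are hypothetical.

```python
def step_graphon(n, edges):
    """Return the step function W_G on [0,1]^2 for a graph on vertices
    0..n-1 given by a list of undirected edges."""
    edge_set = {(i, j) for i, j in edges} | {(j, i) for i, j in edges}

    def w(x, y):
        # Locate the box containing (x, y); clamp so x = 1 or y = 1 is valid.
        i = min(int(x * n), n - 1)
        j = min(int(y * n), n - 1)
        return 1.0 if (i, j) in edge_set else 0.0

    return w


def integral_of_step_graphon(n, edges):
    """Integrate W_G exactly: it is constant on each of the n^2 boxes of
    area 1/n^2, so evaluating at box midpoints suffices."""
    w = step_graphon(n, edges)
    total = sum(
        w((i + 0.5) / n, (j + 0.5) / n) for i in range(n) for j in range(n)
    )
    return total / n ** 2


if __name__ == "__main__":
    path = [(0, 1), (1, 2)]  # path on 3 vertices, E = 2
    print(integral_of_step_graphon(3, path))  # equals 2E/n^2 = 4/9
```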

Graphs converging in cut metric

To proceed in a manner which works across edge densities, scale the function $W_{G_n}$ by $\frac{2E(G_n)}{n^2}$ (that is, divide by it), and call the resulting function $\widetilde{W}_{G_n}$.

Thus after scaling we have
$$\int_{[0,1]^2} |\widetilde{W}_{G_n}(x,y)|\,dx\,dy = 1.$$

Consider the space of symmetric measurable functions $W \in L^1[0,1]^2$, henceforth referred to as graphons.

For any graphon $W$, define the cut norm
$$\|W\|_\square = \sup_{S,T \subset [0,1]} \Big| \int_{S \times T} W(x,y)\,dx\,dy \Big|.$$

Graphs converging in cut metric

We will say a sequence of graphs $G_n$ converges in cut metric to $W$ if the functions $\widetilde{W}_{G_n}$ converge in cut norm to $W$.

As an example, the complete graph converges to $W_1 \equiv 1$.

It follows from (Borgs et al. '14) that if a sequence of graphs $G_n$ converges to $W$ in cut metric, then $|E(G_n)| \gg n$.

Thus the mean field condition holds in this case, and so it suffices to consider the asymptotics of $M_n$.

Graphs converging in cut metric

By a simple analysis of $M_n$, the limiting log partition function is
$$\sup_{m(\cdot)} \Big\{ \int_{[0,1]^2} \frac{\beta}{2}\, m(x)\, m(y)\, W(x,y)\,dx\,dy + \int_{[0,1]} I(m(x))\,dx \Big\}.$$

Here the supremum is over all measurable functions $m : [0,1] \mapsto [-1,1]$.

This result was already proved in (Borgs et al. '14).

In our paper we re-derive this result as a consequence of the mean field prediction, to illustrate the flexibility of this approach.

As an immediate application, we obtain the phase transition point for such Ising models, which is $\beta = \frac{1}{\lambda_1(W)} \approx \frac{1}{\lambda_1(A_n)}$.

Proof Strategy

The main tool for proving the mean field prediction is a large deviation estimate for binary variables (Chatterjee-Dembo '14).

They give a general technique to prove mean field type predictions in exponential families of the form $e^{f(x)}$ on binary random variables $x \in \{-1,1\}^n$.

The idea is that if the gradient of the function $\nabla f(x)$ can be expressed in $o(n)$ bits, then the mean field prediction works.

In our case $f(x) = \frac{1}{2} x' A_n x$ for a symmetric matrix $A_n$.

E.g. Ising model on Complete graph

For a specific example take $f(x) = \frac{1}{n} \sum_{1 \le i < j \le n} x_i x_j$.

This is the Curie-Weiss Ising model on the complete graph.

A direct calculation gives
$$\frac{\partial f}{\partial x_i}(x) = \frac{1}{n} \sum_{j \ne i} x_j = \bar{x} - \frac{x_i}{n}.$$

This is approximately $\bar{x}$, and so every component of $\nabla f(x)$ can be expressed by one bit of information $\bar{x}$.
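The gradient formula can be verified numerically. The sketch below compares a central finite difference of $f$ with the closed form $\bar{x} - x_i/n$; since $f$ is linear in each coordinate, the finite difference is exact up to rounding. The function names and the step size `h` are illustrative assumptions.

```python
def curie_weiss_f(x):
    """f(x) = (1/n) * sum_{i<j} x_i x_j."""
    n = len(x)
    return sum(x[i] * x[j] for i in range(n) for j in range(i + 1, n)) / n


def numerical_partial(x, i, h=0.5):
    """Central finite difference of f in coordinate i."""
    up = list(x)
    down = list(x)
    up[i] += h
    down[i] -= h
    return (curie_weiss_f(up) - curie_weiss_f(down)) / (2 * h)


def closed_form_partial(x, i):
    """The slide's formula: x_bar - x_i / n."""
    n = len(x)
    return sum(x) / n - x[i] / n


if __name__ == "__main__":
    x = [1, -1, 1, 1, -1, 1]
    for i in range(len(x)):
        print(i, numerical_partial(x, i), closed_form_partial(x, i))
```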

E.g. Ising model on $\mathbb{Z}^1$

For an example of a model that is not mean field, take $f(x) = \sum_{i=1}^{n-1} x_i x_{i+1}$.

This is the Ising model on the one dimensional integer lattice.

In this case we have
$$\frac{\partial f}{\partial x_i}(x) = x_{i-1} + x_{i+1}$$
for $2 \le i \le n-1$.

This vector is no longer expressible in terms of $o(n)$ many quantities.

Thus the Ising model on the complete graph is mean field, whereas the Ising model on $\mathbb{Z}^1$ is not.

Extending to general matrices

In general if $f(x) = \frac{1}{2} x' A_n x$ then $\nabla f(x) = A_n x$.

Assume $A_n$ has spectral decomposition $\sum_{i=1}^n \lambda_i p_i p_i'$.

Recall that our mean field assumption is $\frac{1}{n} \sum_{i=1}^n \lambda_i^2 = o(1)$, which says most eigenvalues are $o(1)$.

Thus $A_n x \approx \sum_{i=1}^K \lambda_i p_i p_i' x$ with $K = o(n)$.

This is expressible using at most $K$ bits of information $\{p_i' x\}_{i=1}^K$, which is why the resulting model is mean field.
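The quality of the rank-$K$ approximation can be quantified with a short calculation. This is a sketch under the assumption that the eigenvalues are ordered so that $|\lambda_i| > \varepsilon$ exactly for $i \le K$; the threshold $\varepsilon > 0$ is introduced here for illustration and is not from the slides.

```latex
\[
\Big\| A_n x - \sum_{i=1}^{K} \lambda_i\, p_i p_i' x \Big\|_2^2
= \sum_{i=K+1}^{n} \lambda_i^2\, (p_i' x)^2
\le \varepsilon^2 \sum_{i=1}^{n} (p_i' x)^2
= \varepsilon^2 \|x\|_2^2
= \varepsilon^2 n
\qquad \text{for } x \in \{-1,1\}^n ,
\]
\[
K = \#\{\, i : |\lambda_i| > \varepsilon \,\}
\le \frac{1}{\varepsilon^2} \sum_{i=1}^{n} \lambda_i^2 = o(n).
\]
```

The first line uses orthonormality of the eigenvectors $p_i$; the second is the counting bound implied by the mean field assumption. Together they say that $o(n)$ projections $p_i' x$ determine $A_n x$ up to an $\ell^2$ error of at most $\varepsilon \sqrt{n}$.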

Open questions?

Extend the argument to other exponential families, such as

ERGMs.

Think of edges in graphs as binary random variables.

Find for what values of parameters the mean field prediction is

tight.

Partially addressed in Chatterjee-Dembo, results are almost

sharp.

Solve optimization problem for more graph ensembles.
