Adiabatic Monte Carlo


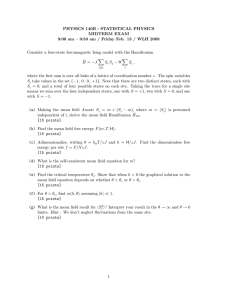

Michael Betancourt (@betanalpha), University of Warwick
CRiSM Workshop: Estimating Constants, University of Warwick, April 21, 2016

Computational statistics is all about computing expectations with respect to a given target distribution.

$$\mathbb{E}_{\pi}[f] = \int f(q)\, \pi(q)\, dq$$

High-dimensional target distributions exhibit concentration of measure, which frustrates these computations.

Markov chains provide a generic scheme for finding and then exploring the resulting typical set.

In particular, Markov chains define consistent Markov chain Monte Carlo estimators of the desired expectations.

$$\lim_{N \to \infty} \frac{1}{N} \sum_{n=0}^{N} f(q_n) = \mathbb{E}_{\pi}[f]$$

In order to scale to high-dimensional target distributions, however, we need efficient exploration of the typical set.

Hamiltonian Monte Carlo

Hamiltonian Monte Carlo uses auxiliary momenta and density gradients to generate coherent exploration.

$$q \to (q, p), \qquad \pi(q) \to \pi(q, p) = \pi(p \mid q)\, \pi(q)$$
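As a rough illustration of how auxiliary momenta and gradients produce a Markov chain Monte Carlo estimator, the sketch below runs a basic Hamiltonian Monte Carlo chain and averages $f(q) = q_1^2$ over it. Everything specific here is an assumption made for the example rather than something taken from the talk: the standard Gaussian target, the unit Gaussian momenta, the leapfrog integrator, and the names `potential`, `leapfrog`, and `hmc_chain`.

```python
import numpy as np

def potential(q):
    # V(q) = -log pi(q) up to a constant, for an assumed standard Gaussian target.
    return 0.5 * np.dot(q, q)

def grad_potential(q):
    # Gradient of the assumed potential.
    return q

def leapfrog(q, p, step_size, n_steps):
    # Leapfrog integration of H = V(q) + p.p / 2 (unit Gaussian momenta assumed).
    q, p = q.copy(), p.copy()
    p -= 0.5 * step_size * grad_potential(q)
    for _ in range(n_steps - 1):
        q += step_size * p
        p -= step_size * grad_potential(q)
    q += step_size * p
    p -= 0.5 * step_size * grad_potential(q)
    return q, p

def hmc_chain(n_samples, dim=10, step_size=0.2, n_steps=10, seed=0):
    rng = np.random.default_rng(seed)
    q = np.zeros(dim)
    samples = np.empty((n_samples, dim))
    for n in range(n_samples):
        p = rng.standard_normal(dim)  # sample auxiliary momenta p ~ pi(p | q)
        h_old = potential(q) + 0.5 * np.dot(p, p)
        q_new, p_new = leapfrog(q, p, step_size, n_steps)
        h_new = potential(q_new) + 0.5 * np.dot(p_new, p_new)
        # Metropolis accept/reject on the change in the Hamiltonian.
        if np.log(rng.uniform()) < h_old - h_new:
            q = q_new
        samples[n] = q
    return samples

samples = hmc_chain(2000)
# MCMC estimator (1/N) sum_n f(q_n) with f(q) = q_1^2; the exact expectation is 1.
estimate = np.mean(samples[:, 0] ** 2)
print(estimate)
```

The step size and trajectory length are picked by hand for this toy target; in practice both need tuning.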
The addition of momenta defines a Hamiltonian that decomposes into a potential energy and a kinetic energy.

$$H(p, q) = -\log \pi(p \mid q)\, \pi(q) = -\log \pi(p \mid q) - \log \pi(q) = T + V$$

Here $T = -\log \pi(p \mid q)$ is the kinetic energy and $V = -\log \pi(q)$ is the potential energy.

The Hamiltonian defines a vector field aligned with the typical set from which we can generate exploration.

$$\frac{dq}{dt} = \frac{\partial T}{\partial p}, \qquad \frac{dp}{dt} = -\frac{\partial T}{\partial q} - \frac{\partial V}{\partial q}$$

In practice we integrate along this vector field only approximately, using powerful symplectic integrators.

The numerical error introduced by the integrator can be eliminated with a careful Metropolis correction.

$$q \to q + \epsilon \frac{\partial T}{\partial p}, \qquad p \to p - \epsilon \left( \frac{\partial T}{\partial q} + \frac{\partial V}{\partial q} \right)$$

$$\pi(\mathrm{accept}) = \min\left(1, \frac{\pi(\phi_{\tau}(p, q))}{\pi(p, q)}\right)$$

where $\phi_{\tau}(p, q)$ denotes the end point of the numerical trajectory of length $\tau$.

Adiabatic Monte Carlo
http://arxiv.org/abs/1405.3489

Like any MCMC algorithm, Hamiltonian Monte Carlo struggles to explore multimodal target distributions.

In Bayesian settings the marginal likelihood is difficult to estimate from the Markov chain output.

$$\pi(D) = \int \pi(D \mid q)\, \pi(q)\, dq = \mathbb{E}_{\mathrm{prior}}\left[\pi(D \mid q)\right] = \left( \mathbb{E}_{\mathrm{post}}\left[ \left(\pi(D \mid q)\right)^{-1} \right] \right)^{-1}$$
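The two expectation forms of the marginal likelihood can be compared on a toy model where $\pi(D)$ is known in closed form. The sketch below is a minimal example under assumed choices that are not from the talk: a standard normal prior, a unit-variance normal likelihood, and a single hypothetical observation `y`. With these choices the posterior is $N(y/2, 1/2)$ and the exact evidence is the $N(0, 2)$ density at `y`.

```python
import numpy as np

def normal_pdf(x, mean, var):
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)

rng = np.random.default_rng(1)
y = 1.5            # hypothetical single observation D
n_draws = 200_000

# Prior form: pi(D) = E_prior[pi(D | q)] with q ~ N(0, 1).
q_prior = rng.standard_normal(n_draws)
evidence_mc = np.mean(normal_pdf(y, q_prior, 1.0))

# Posterior (harmonic mean) form: pi(D) = 1 / E_post[1 / pi(D | q)].
# For this conjugate model the posterior is N(y / 2, 1 / 2).
q_post = y / 2.0 + np.sqrt(0.5) * rng.standard_normal(n_draws)
evidence_hm = 1.0 / np.mean(1.0 / normal_pdf(y, q_post, 1.0))

# Exact evidence: D ~ N(0, 2) after integrating out q.
evidence_exact = normal_pdf(y, 0.0, 2.0)
print(evidence_mc, evidence_hm, evidence_exact)
```

In this model the harmonic mean estimator has infinite variance, so its value can move noticeably between seeds even with many draws. The prior form behaves well here only because the prior and posterior overlap heavily.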
Both of these problems are facilitated by interpolating between the target and an auxiliary, unimodal distribution.

$$\pi_{\beta}(q) = \frac{1}{Z(\beta)} \left( \frac{\pi(q)}{\pi_B(q)} \right)^{\beta} \pi_B(q)$$

$$\pi_{\beta = 0}(q) = \pi_B(q), \qquad \pi_{\beta = 1}(q) = \pi(q)$$

[Figure: the interpolating distributions $\pi_{\beta}$, with $q$ on the vertical axis and $\beta$ running from 0 to 1 on the horizontal axis.]

To move along the interpolation in practice, however, we need to impose a discrete partition of the interpolation.

If the partition is chosen poorly then transitions will be difficult, and we are left with a delicate tuning problem.
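The effect of the partition can be made concrete on a one-dimensional grid. The sketch below assumes the interpolation has the form $\pi_{\beta} \propto (\pi / \pi_B)^{\beta}\, \pi_B$, with a bimodal mixture as the target and a wide Gaussian as the base; both densities and the helper names are illustrative choices. It measures how much probability mass neighbouring distributions in a partition share, a simple proxy for how easy transitions between them would be.

```python
import numpy as np

def normal_pdf(x, mean, sd):
    return np.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * np.sqrt(2.0 * np.pi))

q = np.linspace(-25.0, 25.0, 10001)
dq = q[1] - q[0]

base = normal_pdf(q, 0.0, 4.0)                                            # pi_B, unimodal (assumed)
target = 0.5 * normal_pdf(q, -3.0, 0.7) + 0.5 * normal_pdf(q, 3.0, 0.7)   # pi, bimodal (assumed)

def interpolate(beta):
    # Returns the normalized density pi_beta on the grid and its constant Z(beta).
    unnormalized = (target / base) ** beta * base
    z = np.sum(unnormalized) * dq
    return unnormalized / z, z

def min_adjacent_overlap(partition):
    # Smallest shared mass, integral of min(pi_a, pi_b), over neighbouring pairs.
    densities = [interpolate(b)[0] for b in partition]
    overlaps = [np.sum(np.minimum(a, b)) * dq
                for a, b in zip(densities[:-1], densities[1:])]
    return min(overlaps)

coarse = min_adjacent_overlap(np.linspace(0.0, 1.0, 3))
fine = min_adjacent_overlap(np.linspace(0.0, 1.0, 11))
print(coarse, fine)
```

A uniform grid in $\beta$ is only one possible partition; the overlap between neighbours generally varies along the interpolation, which is why the spacing needs tuning.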
Mirroring Hamiltonian Monte Carlo, Adiabatic Monte Carlo generates optimal transitions by making the perk $\beta$ dynamic.

$$q \to (q, p, \beta), \qquad \pi(q) \to \pi_{\beta}(q, p) = \pi(p \mid q)\, \pi_{\beta}(q)$$

Introducing auxiliary momenta to the interpolating distributions defines a contact Hamiltonian.

$$H(q, p, \beta) = -\log \pi(p \mid q)\, \pi_{\beta}(q) = -\log \pi(p \mid q) - \log \pi_B(q) - \beta \log \frac{\pi(q)}{\pi_B(q)} + \log Z(\beta) + H_0$$

$$H(q, p, \beta) = T + V_B + \beta\, \Delta V + \log Z(\beta) + H_0$$

Here $T = -\log \pi(p \mid q)$, $V_B = -\log \pi_B(q)$, and $\Delta V = -\log \left( \pi(q) / \pi_B(q) \right)$.

The contact Hamiltonian defines a vector field that generates efficient motion along the interpolation.

$$\frac{dq}{dt} = \frac{\partial T}{\partial p}$$

[Equations for $dp/dt$ and $d\beta/dt$: the surviving terms are $\partial T / \partial q$, $\partial V / \partial q$, $\Delta V$, $\frac{\partial T}{\partial p} \cdot p$, and $\mathbb{E}_{\pi_{\beta}}[\Delta V]\, p$; their signs and grouping are not recoverable from the source.]
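The expectation $\mathbb{E}_{\pi_{\beta}}[\Delta V]$ enters the dynamics through the $\beta$-dependence of the normalizing constant. A short derivation, assuming the interpolation has the form $\pi_{\beta} \propto (\pi / \pi_B)^{\beta}\, \pi_B$ and writing $\Delta V = -\log(\pi / \pi_B)$ (notation assumed wherever the extracted slides are ambiguous):

```latex
\begin{align*}
Z(\beta) &= \int \left( \frac{\pi(q)}{\pi_B(q)} \right)^{\beta} \pi_B(q)\, dq
          = \int e^{-\beta\, \Delta V(q)}\, \pi_B(q)\, dq, \\
\frac{d}{d\beta} \log Z(\beta)
         &= \frac{1}{Z(\beta)} \int \left( -\Delta V(q) \right) e^{-\beta\, \Delta V(q)}\, \pi_B(q)\, dq
          = -\mathbb{E}_{\pi_{\beta}}[\Delta V], \\
\frac{\partial H}{\partial \beta}
         &= \Delta V(q) + \frac{d}{d\beta} \log Z(\beta)
          = \Delta V(q) - \mathbb{E}_{\pi_{\beta}}[\Delta V].
\end{align*}
```

Integrating the second line over $\beta$ from 0 to 1 gives $\log Z(1) - \log Z(0) = -\int_0^1 \mathbb{E}_{\pi_{\beta}}[\Delta V]\, d\beta$.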
Because the contact Hamiltonian is invariant to this motion, we can also recover the normalizing constant.

$$H(q, p, \beta) = -\log \pi(p \mid q) - \log \pi_B(q) - \beta \log \frac{\pi(q)}{\pi_B(q)} + \log Z(\beta) + H_0$$

With $H$ held fixed along a trajectory, $\log Z(\beta)$ follows from the remaining terms evaluated at the current state $(q, p, \beta)$.

Adiabatic Monte Carlo dynamically transitions from the base distribution to the target distribution.

To see the optimality of adiabatic transitions, consider the interpolation of a unidimensional distribution.

[Figure: interpolation of a unidimensional distribution, with $q$ on the vertical axis and $\beta$ on the horizontal axis, both running from 0 to 1.]

Adiabatic transitions automatically equilibrate, implicitly generating an optimal interpolation partition.

[Figure: the same interpolation with the implicitly generated partition overlaid.]

In theory we can recover the normalizing constant exactly. In practice we can recover it incredibly accurately.

[Figure: top panel, $\log Z(\beta(t))$ for the exact value and for the flow, ranging from 0 down to -20; bottom panel, the relative error (Exact - Flow) / Exact, which stays within ±0.02.]

Open Problems

The immediate problem with adiabatic transitions is that metastabilities prevent them from being isomorphisms.
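For a one-dimensional problem the normalizing constant along the interpolation can be checked against a known answer. The sketch below does not implement the adiabatic flow; it swaps in plain thermodynamic integration on a fixed grid, using the identity $\frac{d}{d\beta} \log Z(\beta) = -\mathbb{E}_{\pi_{\beta}}[\Delta V]$, which holds for an interpolation of the form $\pi_{\beta} \propto (\pi / \pi_B)^{\beta}\, \pi_B$. The unnormalized Gaussian target, the Gaussian base, and the grid sizes are all assumptions made for the example.

```python
import numpy as np

q = np.linspace(-25.0, 25.0, 10001)

# Normalized base N(0, 2^2) and an unnormalized Gaussian target (both assumed).
log_base = -0.5 * (q / 2.0) ** 2 - np.log(2.0 * np.sqrt(2.0 * np.pi))
log_target = -0.5 * ((q - 1.0) / 0.5) ** 2
delta_v = -(log_target - log_base)   # Delta V = -log(pi / pi_B)

def expected_delta_v(beta):
    # E_{pi_beta}[Delta V] by quadrature on the grid (grid spacing cancels in the ratio).
    log_w = log_base - beta * delta_v
    w = np.exp(log_w - log_w.max())
    return np.sum(w * delta_v) / np.sum(w)

betas = np.linspace(0.0, 1.0, 401)
integrand = np.array([-expected_delta_v(b) for b in betas])

# Cumulative trapezoid rule: log Z(beta) - log Z(0), with log Z(0) = 0 here.
steps = 0.5 * (integrand[1:] + integrand[:-1]) * np.diff(betas)
log_z_path = np.concatenate(([0.0], np.cumsum(steps)))

# Exact value at beta = 1: integral of exp(-(q - 1)^2 / (2 * 0.5^2)) dq.
log_z_exact = np.log(0.5 * np.sqrt(2.0 * np.pi))
print(log_z_path[-1], log_z_exact)
```

A fixed, uniform grid in $\beta$ is exactly the kind of hand-chosen partition the talk aims to avoid; it works here only because the example is one-dimensional and smooth.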
[Figure: heating and cooling metastabilities, marked in the $(q, p)$ plane.]

Fortunately we can readily recover from a metastability by resampling the momenta, effectively reheating the system.

$$p \sim \pi(p \mid q)$$

We also need to compute the intermediate expectations, $\mathbb{E}_{\pi_{\beta}}[\Delta V]$, needed to generate each transition.

Hamiltonian Monte Carlo gives efficient local estimations, which can be aggregated together into a global estimator.

$$\mathbb{E}_{\pi_{\beta}}[\Delta V] \approx \frac{\sum_{n=1}^{N} \widehat{Z}_n\, \widehat{\Delta V}(\beta)}{\sum_{n=1}^{N} \widehat{Z}_n}$$

Finally, there is the problem of correcting for the error from numerical approximations to the exact transitions.

[Figure: a transition between an initial state $(q_i, p_i)$ and a final state $(q_f, p_f)$, drawn from the interpolating distributions at the two ends of the interpolation, $\beta = 0$ and $\beta = 1$; $q$ on the vertical axis and $\beta$ from 0 to 1 on the horizontal axis.]

We can't apply a naive Metropolis correction, but perhaps we can apply a correction with a swap?

Unfortunately, swapping states doesn't work because discretized perks will not, in general, be aligned.

[Figure: the same transition, with the discretized end points landing only at $\beta \approx 0$ and $\beta \approx 1$.]
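The aggregation of local estimates into a global estimator, described above, amounts to a weighted average. The sketch below shows only that arithmetic. The function name and its arguments are hypothetical, and how the local estimates and their weights $\widehat{Z}_n$ would be produced by Hamiltonian Monte Carlo is not specified in the source.

```python
import numpy as np

def aggregate_local_estimates(local_estimates, local_weights):
    # Weighted average sum_n Z_n * dV_n / sum_n Z_n of local estimates.
    # Both arguments are hypothetical inputs: one local estimate of E[Delta V]
    # and one non-negative weight Z_n per local run.
    local_estimates = np.asarray(local_estimates, dtype=float)
    local_weights = np.asarray(local_weights, dtype=float)
    return np.sum(local_weights * local_estimates) / np.sum(local_weights)

# Usage: two local estimates, the first weighted three times as heavily.
global_estimate = aggregate_local_estimates([1.0, 3.0], [3.0, 1.0])
print(global_estimate)
```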