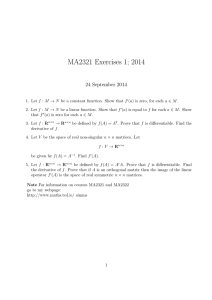

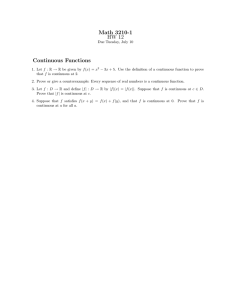

Sample Problems

1. For any $n \times n$ matrix $A$, the trace of $A$ is defined by $\operatorname{tr}(A) = \sum_{i=1}^{n} a_{ii}$.

   (a) Prove that $\operatorname{tr}(AB) = \operatorname{tr}(BA)$ for all $n \times n$ matrices $A$ and $B$.

   (b) Prove that, for all $n \times n$ matrices $A$ and $B$ and all scalars $\alpha$ and $\beta$, $\operatorname{tr}(\alpha A + \beta B) = \alpha \operatorname{tr}(A) + \beta \operatorname{tr}(B)$.

   (c) Prove that an $n \times n$ matrix $A$ satisfies $\operatorname{tr}(A^T A) = 0$ if and only if $A = 0$.

2. Let $A$ be an $m \times n$ matrix. Prove that $\mathrm{Null}(A) = \mathrm{Col}(A^T)^{\perp}$. Here $\mathrm{Null}(A)$ denotes the null space (kernel) of $A$, while $\mathrm{Col}(A^T)$ is the column space (range) of $A^T$.

3. The matrix $A$ is given by […].

   (a) Find the singular value decomposition (SVD) of $A$.

   (b) Find $A^{\dagger}$, the Moore-Penrose generalized inverse of $A$ (you can express it in factored form, if convenient).

   (c) Find the least-squares solution of $Ax = b$, where $b$ is given by […].

4. Let $V$ be the vector space of real polynomials of degree less than or equal to 2. Define an inner product on $V$ by […].

   (a) Use the Gram-Schmidt (or modified Gram-Schmidt) procedure to produce an orthogonal basis for $V$ from the standard basis $\{1, x, x^2\}$.

   (b) Find the coordinates of $p(x) = 6x^2$ in the orthogonal basis you just computed.

5. The set […], where […], is an orthogonal basis for […], while […] is an orthogonal basis for […]. The matrix $A$ is defined by […], where […]. Find orthogonal bases for:

   (a) $\mathrm{Null}(A)$

   (b) $\mathrm{Null}(A^T)$

   (c) $\mathrm{Col}(A)$

   (d) $\mathrm{Col}(A^T)$

6. Suppose $A$ is an $n \times n$ real symmetric matrix.

   (a) Prove that the eigenvalues of $A$ are real, and the corresponding eigenvectors can be chosen to be real.

   (b) Prove that eigenvectors of $A$ corresponding to distinct eigenvalues are orthogonal.

7. Let $A$ be an $n \times n$ real symmetric matrix. Prove that there is an orthonormal basis for $\mathbb{R}^n$ consisting of eigenvectors of $A$. (You may use the results of the previous exercise.)

8. Let $A$ be a real $m \times n$ matrix. Prove that $AA^T$ and $A^TA$ have the same nonzero eigenvalues.

9. Let $P$ be an $n \times n$ symmetric matrix satisfying $P^2 = P$, and assume that $P$ is neither the zero matrix nor the identity matrix. Let $W_1$ be the column space of $P$ and $W_2$ be the null space of $P$.

   (a) Prove that $x \in W_1$ if and only if $Px = x$.

   (b) Prove that if $\lambda$ is an eigenvalue of $P$, then $\lambda$ is zero or one.

   (c) Prove that $\mathbb{R}^n$ is the direct sum of $W_1$ and $W_2$. That is, prove that every $x \in \mathbb{R}^n$ can be written uniquely as $x = y + z$, with $y \in W_1$ and $z \in W_2$.

   (d) Prove that, for each $x \in \mathbb{R}^n$, $Px$ is the vector in $W_1$ closest to $x$ (in the Euclidean norm).

10. Let $A$ be an $n \times n$ matrix. Prove that eigenvectors corresponding to distinct eigenvalues are linearly independent. That is, prove that if $\lambda_1, \ldots, \lambda_k$ are distinct eigenvalues of $A$, and $x_1, \ldots, x_k$ are corresponding eigenvectors, then $\{x_1, \ldots, x_k\}$ is a linearly independent set.

11. Suppose $\{u_1, u_2, u_3\}$ and $\{v_1, v_2, v_3\}$ are two different orthonormal bases for a subspace $W$ of $\mathbb{R}^n$. Suppose further that the scalars $a_{ij}$, $i, j = 1, 2, 3$, satisfy […].

    (a) Show that the matrix $A$ whose entries are $a_{ij}$, $i, j = 1, 2, 3$, is orthogonal.

    (b) Prove that the matrices $u_1u_1^T + u_2u_2^T + u_3u_3^T$ and $v_1v_1^T + v_2v_2^T + v_3v_3^T$ are equal.

    (c) Interpret the action of $P = u_1u_1^T + u_2u_2^T + u_3u_3^T$: if $x \in \mathbb{R}^n$, what is the significance of $Px$?

12. Let $V$ be an inner product space, let $W$ be a finite-dimensional subspace of $V$ with basis […], and let $v$ be any vector in $V$.

    (a) Prove that there is a unique vector $w \in W$ closest to $v$ (the best approximation to $v$ from $W$). "Closest" is defined in terms of the norm induced by the inner product.

    (b) Derive the normal equations for computing the best approximation $w$ to $v$ from $W$.

13. Give an example to show that Gaussian elimination without partial pivoting can be unstable in finite-precision arithmetic. Show that the use of partial pivoting eliminates the instability in your example. (Hint: The matrix need not be large; a $2 \times 2$ matrix will do!)

14. Suppose $A$ is a nonsingular $n \times n$ matrix and […] satisfy […]. Give a bound on […] in terms of […].
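Problems 2 and 5 both concern the four fundamental subspaces. One way to check hand-computed answers numerically is to read orthonormal bases off a full SVD: if $A = U \Sigma V^T$ has rank $r$, the first $r$ columns of $U$ span $\mathrm{Col}(A)$, the remaining columns of $U$ span $\mathrm{Null}(A^T)$, and the first $r$ and remaining columns of $V$ span $\mathrm{Col}(A^T)$ and $\mathrm{Null}(A)$. The following is a minimal sketch assuming NumPy; the helper name `four_subspaces`, the rank tolerance, and the example matrix are illustrative choices and not part of the problems (the matrix of Problem 5 is not reproduced here).

```python
import numpy as np


def four_subspaces(A):
    """Orthonormal bases for the four fundamental subspaces of A, via a full SVD.

    Hypothetical helper for checking Problems 2 and 5; assumes A is a nonzero real matrix.
    """
    U, s, Vt = np.linalg.svd(A)  # full SVD: U is m x m, Vt is n x n
    # Numerical rank: singular values above a relative tolerance count as nonzero.
    tol = max(A.shape) * np.finfo(float).eps * s[0]
    r = int(np.sum(s > tol))
    V = Vt.T
    return {
        "Col(A)": U[:, :r],
        "Null(A^T)": U[:, r:],
        "Col(A^T)": V[:, :r],
        "Null(A)": V[:, r:],
    }


# Illustrative rank-1 matrix (NOT the matrix from Problem 5).
A = np.array([[1.0, 2.0, 3.0],
              [2.0, 4.0, 6.0]])
bases = four_subspaces(A)
for name, Q in bases.items():
    print(name, Q.shape)

# Problem 2 in action: every vector in Null(A) is orthogonal to Col(A^T).
print(np.allclose(bases["Null(A)"].T @ bases["Col(A^T)"], 0.0))
```

The bases returned are orthonormal, which in particular makes them orthogonal bases as Problem 5 asks; a hand computation will usually produce different (but equivalent) spanning vectors.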
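For Problem 3, the Moore-Penrose inverse can be assembled from the SVD: if $A = U_r \Sigma_r V_r^T$ keeps only the $r$ nonzero singular values, then $A^{\dagger} = V_r \Sigma_r^{-1} U_r^T$, and $x = A^{\dagger} b$ is a least-squares solution of $Ax = b$ (the one of minimum norm when $A$ is rank-deficient). This NumPy sketch uses a hypothetical $3 \times 2$ matrix and right-hand side, since the data of Problem 3 are not reproduced here, and cross-checks the result against `np.linalg.pinv` and `np.linalg.lstsq`.

```python
import numpy as np

# Hypothetical data standing in for the A and b of Problem 3.
A = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [1.0, 2.0]])
b = np.array([6.0, 0.0, 0.0])

# Reduced SVD: A = U @ diag(s) @ Vt, with U 3x2, s of length 2, Vt 2x2.
U, s, Vt = np.linalg.svd(A, full_matrices=False)

# Keep only the numerically nonzero singular values (rank r).
tol = max(A.shape) * np.finfo(float).eps * s[0]
r = int(np.sum(s > tol))

# Moore-Penrose inverse in factored form: A^+ = V_r diag(1/s_r) U_r^T.
A_pinv = Vt[:r].T @ np.diag(1.0 / s[:r]) @ U[:, :r].T

# Least-squares (minimum-norm) solution of Ax = b.
x_ls = A_pinv @ b
print(x_ls)  # approximately [ 5. -3.]

# Cross-checks against NumPy's own routines.
print(np.allclose(A_pinv, np.linalg.pinv(A)))
print(np.allclose(x_ls, np.linalg.lstsq(A, b, rcond=None)[0]))
```

Because this $A$ has full column rank, the answer also agrees with the normal equations $A^TA x = A^T b$, which here read $3x_1 + 3x_2 = 6$, $3x_1 + 5x_2 = 0$.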
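The inner product in Problem 4 is not reproduced above, so this sketch ASSUMES, purely for illustration, $\langle p, q \rangle = \int_{-1}^{1} p(x)\,q(x)\,dx$; a different inner product gives a different basis and different coordinates. It runs modified Gram-Schmidt on $\{1, x, x^2\}$ in exact rational arithmetic (Python's `fractions` module), storing each polynomial as its coefficient list, and then computes coordinates as $c_i = \langle p, q_i \rangle / \langle q_i, q_i \rangle$, which is valid because the basis is orthogonal.

```python
from fractions import Fraction

# A polynomial c0 + c1*x + c2*x^2 is stored as the list [c0, c1, c2].


def inner(p, q):
    """ASSUMED inner product <p, q> = integral of p(x) q(x) over [-1, 1], computed exactly."""
    total = Fraction(0)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            k = i + j
            if k % 2 == 0:  # odd powers of x integrate to zero on [-1, 1]
                total += Fraction(a) * Fraction(b) * Fraction(2, k + 1)
    return total


def gram_schmidt(basis):
    """Modified Gram-Schmidt: returns an orthogonal (not normalized) basis."""
    ortho = []
    for v in basis:
        w = [Fraction(c) for c in v]
        for q in ortho:
            # Subtract the projection of the *current* w onto q.
            coef = inner(w, q) / inner(q, q)
            w = [wi - coef * qi for wi, qi in zip(w, q)]
        ortho.append(w)
    return ortho


standard = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # 1, x, x^2
ortho = gram_schmidt(standard)
for q in ortho:
    print([str(c) for c in q])

# Coordinates of p(x) = 6x^2 in the orthogonal basis.
p = [0, 0, 6]
coords = [inner(p, q) / inner(q, q) for q in ortho]
print([str(c) for c in coords])  # ['2', '0', '6'] under the assumed inner product
```

Under this assumption the orthogonal basis is $\{1,\ x,\ x^2 - \tfrac{1}{3}\}$ and $6x^2 = 2 \cdot 1 + 0 \cdot x + 6\,(x^2 - \tfrac{1}{3})$.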
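Problems 6 through 8 ask for proofs, but a quick numerical sanity check can catch a wrong conjecture before you try to prove it. This NumPy sketch uses randomly generated, hypothetical test matrices: `np.linalg.eigh` (NumPy's symmetric eigensolver) returns real eigenvalues and an orthonormal eigenvector matrix $Q$ with $S = Q \,\mathrm{diag}(\lambda)\, Q^T$, and `np.linalg.eigvalsh` is used to compare the spectra of $AA^T$ and $A^TA$.

```python
import numpy as np

rng = np.random.default_rng(0)

# Problems 6-7: a real symmetric matrix has real eigenvalues and an
# orthonormal basis of eigenvectors.
M = rng.standard_normal((4, 4))
S = M + M.T                      # hypothetical real symmetric test matrix
lam, Q = np.linalg.eigh(S)       # lam: real eigenvalues (ascending); Q: eigenvectors as columns
print(np.allclose(Q.T @ Q, np.eye(4)))         # columns form an orthonormal basis of R^4
print(np.allclose(Q @ np.diag(lam) @ Q.T, S))  # S = Q diag(lam) Q^T

# Problem 8: A A^T and A^T A share their nonzero eigenvalues.
A = rng.standard_normal((4, 2))      # hypothetical real 4x2 matrix
big = np.linalg.eigvalsh(A @ A.T)    # 4 eigenvalues, two of them numerically zero
small = np.linalg.eigvalsh(A.T @ A)  # 2 eigenvalues
tol = 1e-10 * np.max(np.abs(big))    # relative threshold for "nonzero"
print(np.allclose(np.sort(big[np.abs(big) > tol]), small))
```

A numerical check like this is evidence, not a proof; it also hints at the proof of Problem 8, since both spectra consist of the squared singular values of $A$ (plus zeros).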
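Problems 9 and 11 both revolve around orthogonal projectors $P = P^T = P^2$. The NumPy sketch below builds a hypothetical 3-dimensional subspace $W$ of $\mathbb{R}^5$, forms $P$ from two different orthonormal bases of $W$ (the second obtained by multiplying the first by a random $3 \times 3$ orthogonal matrix, an assumption made only for illustration), and checks the properties the problems ask about, including that $x - Px$ is orthogonal to $W$.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 5

# Hypothetical subspace W of R^5: orthonormalize 3 random vectors (reduced QR).
U, _ = np.linalg.qr(rng.standard_normal((n, 3)))   # columns u1, u2, u3
# A second orthonormal basis of the same W: rotate by a random 3x3 orthogonal matrix.
R, _ = np.linalg.qr(rng.standard_normal((3, 3)))
V = U @ R                                          # columns v1, v2, v3

P_u = U @ U.T   # u1 u1^T + u2 u2^T + u3 u3^T
P_v = V @ V.T   # v1 v1^T + v2 v2^T + v3 v3^T
print(np.allclose(P_u, P_v))                          # Problem 11(b)
print(np.allclose(P_u, P_u.T), np.allclose(P_u @ P_u, P_u))  # symmetric and idempotent

# Problem 9(b): the eigenvalues are 0 or 1 (here two 0s and three 1s).
print(np.round(np.linalg.eigvalsh(P_u), 10))

# Problems 9(d) and 11(c): the residual x - Px is orthogonal to W,
# which is what makes Px the closest point of W to x.
x = rng.standard_normal(n)
residual = x - P_u @ x
print(np.allclose(U.T @ residual, 0.0))
```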
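One standard choice for Problem 13 (among many possible examples) is $A = \begin{pmatrix} \varepsilon & 1 \\ 1 & 1 \end{pmatrix}$, $b = (1, 2)^T$ with tiny $\varepsilon$. The exact solution is $x_1 = 1/(1-\varepsilon)$, $x_2 = (1-2\varepsilon)/(1-\varepsilon)$, both very close to 1. Without pivoting the multiplier is $1/\varepsilon$; for $\varepsilon = 10^{-20}$ in double precision, both $1 - 1/\varepsilon$ and $2 - 1/\varepsilon$ round to $-1/\varepsilon$, so the original entries of row 2 are lost and back substitution returns $x_1 = 0$. With partial pivoting the rows are swapped, the multiplier is $\varepsilon$, and the computed solution is accurate. The sketch below uses plain Python floats (IEEE double precision) and a hypothetical helper `gauss_solve`.

```python
def gauss_solve(A, b, pivot=True):
    """Solve Ax = b by Gaussian elimination plus back substitution.

    Hypothetical helper. A is a list of rows and b a list; inputs are not modified.
    With pivot=True, partial pivoting picks the largest |entry| in each column.
    """
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for k in range(n - 1):
        if pivot:
            p = max(range(k, n), key=lambda i: abs(M[i][k]))
            M[k], M[p] = M[p], M[k]  # row swap
        for i in range(k + 1, n):
            m = M[i][k] / M[k][k]  # multiplier
            for j in range(k, n + 1):
                M[i][j] -= m * M[k][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


eps = 1e-20
A = [[eps, 1.0], [1.0, 1.0]]
b = [1.0, 2.0]
print(gauss_solve(A, b, pivot=False))  # [0.0, 1.0]: x1 is completely wrong
print(gauss_solve(A, b, pivot=True))   # [1.0, 1.0]: accurate to working precision
```

The failure is not a matter of $A$ being ill-conditioned (its condition number is about 2.6); it is the huge multiplier, and hence huge entries in $U$, that makes elimination without pivoting unstable here.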