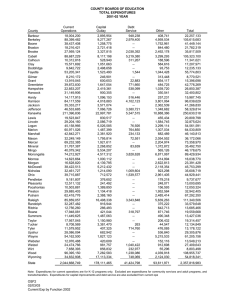

How Much Are Districts Spending to Implement Teacher Evaluation Systems?
