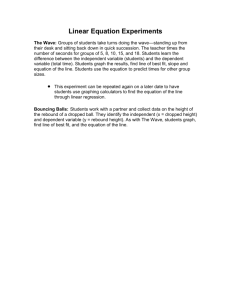

56 Classroom Activity: 1 48
