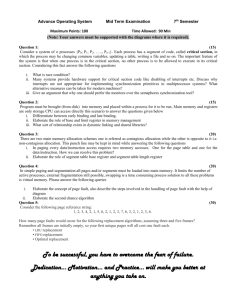

VIRTUAL MEMORY
