

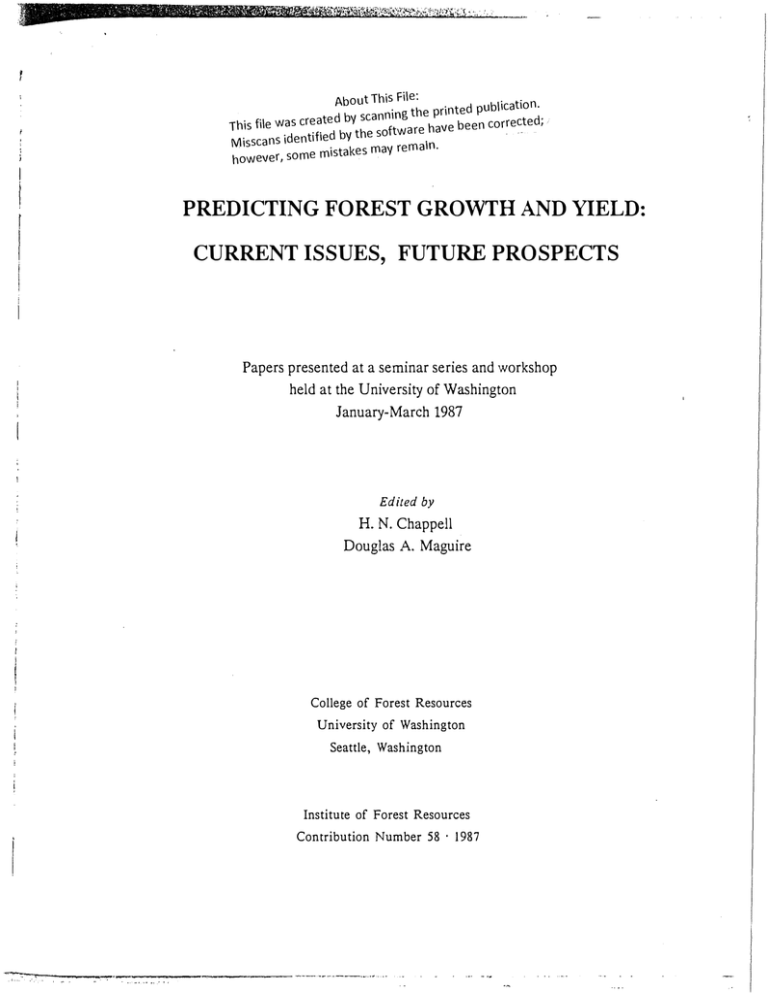

About This File: This file was created by scanning the printed publication. Misscans identified by the software have been corrected; however, some mistakes may remain.

PREDICTING FOREST GROWTH AND YIELD: CURRENT ISSUES, FUTURE PROSPECTS

Papers presented at a seminar series and workshop held at the University of Washington, January-March 1987

Edited by
H. N. Chappell
Douglas A. Maguire

College of Forest Resources
University of Washington
Seattle, Washington

Institute of Forest Resources Contribution Number 58

1987

PREDICTING FOREST GROWTH AND YIELD: CURRENT ISSUES, FUTURE PROSPECTS

Robert O. Curtis

Successful modeling depends on a number of factors (in addition to the money with which to do it). One might group these into three general categories: (1) formal technique, (2) art, and (3) data.

Formal Technique

In this category I include formal training in biometrics, simulation methods, and computers. Some of us can still remember analyses done by ocularly fitted curves and alignment charts. The rotary calculator put an end to these, and the computer led to procedures that allow us to handle large amounts of data and many variables. Along with this has gone development of more efficient, more powerful, and more esoteric methods of analysis.

In one of our seminars, George Furnival commented that development of new methodology is a lot of fun and is certainly beneficial to researchers at performance rating time, but doesn't necessarily have much effect on results. In a way, I find it comforting to read a paper that, after an involved analysis with autoregression methods that I only half comprehend, concludes with the statement that the final result differed very little from that obtained with ordinary least squares.

Maybe this is defensive rationalization on my part. I certainly don't mean to decry the value of progress, and the methods developed in recent years do indeed allow us to do things that we could not do before, and to make more effective use of data. But it is still possible to do useful work with fairly modest equipment in the way of formal biometric theory.

One thing the modeler definitely needs is a feeling for the way trees and stands behave. This is very important. It includes the ability to recognize nonsense in data or predictions and to relate predictions to field observation and experience. This generally depends on a broad background of experience in silviculture and mensuration quite as much as on any formal academic training.

Along with this goes the need for the quantitative way of thinking. Formal courses can help to develop this, but the aptitude must be there. I can think of a number of people who have had this to an outstanding degree and who have done valuable work despite a modest formal academic background.

Over time, people with the appropriate aptitude and experience tend to develop their own bag of tricks and ways of approaching problems, which are often effective even though you don't find them in the textbooks. This personal factor is one reason why it is hard to demonstrate clearly that one model is superior to another. The people involved are often quite as important as the type of model.

The third essential component is data. We probably know enough about trees to construct a plausible growth model without data. But no manager would believe it. In any case, it would be too generalized to be of any practical use in a specific real-life situation. With really good data covering the full range of conditions for which predictions are wanted, it would be hard to go wrong. In real life we're somewhere in between these extremes. We may have only a little data. More often, we have a lot of data, but most of it has little relevance to the questions we have in mind.

There can be a degree of substitution among these three components; strength in one can offset weakness in another. But statistics cannot replace the art and data components.

Over the last decade or so the schools have been turning out a considerable number of people well grounded in formal technique, and these people have been gaining experience and skills. It seems to me that we are now probably in better shape with regard to the first two components I've listed than with respect to data.

Our data problems are not usually a matter of quantity. They are matters of quality, compatibility, and kind. These are tied to modeling methodology and objectives. First, what can we do with the data we have? And, second, what should we be doing to provide data for the things we will need to do in the future?

One often finds a considerable difference in viewpoint between people with an inventory/management background and those in research. The former are likely to think in terms of averages of stands as they exist, and to distrust "research" results as representing unrealistic idealized conditions. The research types are likely to be appalled at the degree of lumping that goes on and the crudity of some of the applications and assumptions.

It is useful to bear in mind the differences in objectives of these activities. There is a basic difference between what some of us have termed the "growth trend" data, produced by inventories, and the "treatment response" data produced by properly designed field trials.

Inventories are primarily concerned with determining what's out there now. The growth information they produce is information about the recent growth of existing stands, which is determined by past practices. It does not tell us much about growth very far in the future; it tells us little about response to stand treatments; and it tells us nothing about what would happen if we did something radically different.

My own belief is that it is not possible to determine the quantitative effect of stand treatments from inventory data. I know there are those who disagree.

In contrast to this, we have the typical research installation. Its objective is generally to determine the quantitative response to some treatment or treatments. Given a series of such trials over an appropriate range of initial conditions, we try to develop general expressions that can be incorporated in simulators and then used to answer management and growth prediction questions.

The inventory and research activities obviously have to mesh somehow. We need to make the best use of whatever information we can get, from both sources. And, research-derived relationships must ultimately be applied to existing conditions as a starting point.

This gets us into the questions listed below. There are maybe one or two of these for which I think I know the answer; a good many I have opinions about but no certainty that they are correct; and for a few of them I haven't the foggiest solution.

QUESTIONS

1. What are our objectives? Do we have several? Do they require different types of data and perhaps different types of models?

2. How do we mesh information from inventories and monitoring with experimental information?
   --in system development
   --in system applications (inventory projections, planning applications, silvicultural applications)
   --what "monitoring" (definitions, objectives, uses)?
   --local calibration
   --validation
   --feedback

3. As far as design is concerned,
   --what have we done wrong in the past? What should we do differently in the future?
   --what planned experiments are needed versus sampling of operational areas?
   --what about fixed area plots versus variable plots?
   --what about replication? (do we need it? what kind?)
   --what about permanent versus temporary plots?
   --what treatments should be included? (where do we most need information?)
   --what stand conditions should be included? (mixed versus pure species, age classes, etc.)

4. How does one get continuity, compatibility (the "least common denominator" problem), and adequate quality control?