N I V

advertisement

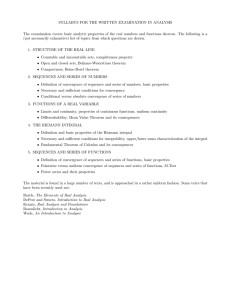

NONPARAMETRIC INSTRUMENTAL VARIABLES

ESTIMATION OF A QUANTILE REGRESSION MODEL

Joel Horowitz

Sokbae Lee

THE INSTITUTE FOR FISCAL STUDIES

DEPARTMENT OF ECONOMICS, UCL

cemmap working paper CWP09/06

NONPARAMETRIC INSTRUMENTAL VARIABLES ESTIMATION OF A QUANTILE

REGRESSION MODEL

by

Joel L. Horowitz

Department of Economics

Northwestern University

Evanston, IL 60208

U.S.A.

and

Sokbae Lee

Department of Economics

University College London

London WC1E 6BT

United Kingdom

May 2006

Abstract

We consider nonparametric estimation of a regression function that is identified by

requiring a specified quantile of the regression “error” conditional on an instrumental

variable to be zero. The resulting estimating equation is a nonlinear integral equation of

the first kind, which generates an ill-posed-inverse problem. The integral operator and

distribution of the instrumental variable are unknown and must be estimated

nonparametrically. We show that the estimator is mean-square consistent, derive its rate

of convergence in probability, and give conditions under which this rate is optimal in a

minimax sense. The results of Monte Carlo experiments show that the estimator behaves

well in finite samples.

JEL Codes: C13, C31

Key words: Statistical inverse, endogenous variable, instrumental variable, optimal rate,

nonlinear integral equation, nonparametric regression

The research of Joel L. Horowitz was supported in part by NSF Grant 00352675. The work of

both authors was supported in part by ESRC Grant RES-000-22-0704 and by the Leverhulme

Trust through its funding of the Centre for Microdata Methods and Practice and the research

program “Evidence, Inference, and Inquiry.”

NONPARAMETRIC INSTRUMENTAL VARIABLES ESTIMATION OF A QUANTILE

REGRESSION MODEL

1. Introduction

Quantile regression models are increasingly important in applied econometrics. This

paper is concerned with nonparametric estimation of a quantile regression model that has a

possibly endogenous explanatory variable and is identified through an instrumental variable.

Specifically, we estimate the function g in the model

(1.1)

Y = g( X ) + U

(1.2)

P (U ≤ 0 | W = w) = q ,

where Y is the dependent variable, X is an explanatory variable, W is an instrument for X , U

is an unobserved random variable, and q is a known constant satisfying 0 < q < 1 . We do not

assume that P (U ≤ 0 | X = x) is independent of x . Therefore, the explanatory variable X may

be endogenous in the quantile regression model (1.1)-(1.2). The function g is assumed to satisfy

regularity conditions but is otherwise unknown. In particular, it does not belong to a known,

finite-dimensional parametric family. The data are an iid random sample, {Yi , X i ,Wi : i = 1.,,,.n} ,

of (Y , X ,W ) . We present an estimator of g , derive its L2 rate of convergence in probability,

and provide conditions under which this rate is the fastest possible in a minimax sense.

Estimators of linear quantile regression models with endogenous right-hand side

variables are described by Amemiya (1982), Powell (1983), Chen and Portnoy (1996),

Chernozhukov and Hansen (2005a), and Ma and Koenker (2006). Chernozhukov and Hansen

(2004) and Januszewski (2002) use such models in economic applications.

Research on

nonparametric estimation of quantile regression models is relatively recent. Chesher (2003)

considers a triangular-array structure that is not necessarily additively separable and investigates

nonparametric identification of derivatives of the unknown functions in that structure.

Chernozhukov and Hansen (2005b) give conditions under which g in (1.1)-(1.2) is identified.

Chernozhukov, Imbens, and Newey (2004) give conditions for consistency of a series estimator

of g in a quantile instrumental-variables model that is not necessarily additively separable. The

rate of convergence of their estimator is unknown.

There has also been research on nonparametric estimation of g in the model

(1.3)

Y = g ( X ) + U ; E (U | W = w) = 0 .

1

Here, as in (1.1)-(1.2), X is a possibly endogenous explanatory variable and W is an instrument

for X , but the quantile restriction (1.2) is replaced by the condition E (U | W = w) = 0 . Blundell

and Powell (2003); Darolles, Florens, and Renault (2002); Florens (2003); Newey and Powell

(2003); Newey, Powell, and Vella (1999); Carrasco, Florens, and Renault (2005); Horowitz

(2005); and Hall and Horowitz (2005) discuss estimators of g in (1.3). Our estimator of g in

(1.1)-(1.2) is related to Hall’s and Horowitz’s (2005) estimator of g in (1.3), but for reasons that

will now be explained, estimating

g in (1.1)-(1.2) presents problems that are different from

those of estimating g in (1.3).

In both (1.1)-(1.2) and (1.3), the relation that identifies g is an operator equation,

(1.4)

Tg = θ ,

say, where T is a non-singular integral operator and θ is a function. T and θ are unknown but

can be estimated consistently without difficulty. However, T

−1

is discontinuous in both (1.1)-

(1.2) and (1.3), so g cannot be estimated consistently by replacing T and θ in (1.4) with

consistent estimators. This “ill-posed inverse problem” is familiar in the literature on integral

equations. See, for example, Groetsch (1984); Engl, Hanke, and Neubauer (1996), and Kress

(1999). It is dealt with by regularizing (that is, modifying) T to make the resulting inverse

operator continuous. As in Darolles, Florens, and Renault (2002) and Hall and Horowitz (2005),

we use Tikhonov (1963a, 1963b) regularization. This consists of choosing the estimator ĝ to

solve

(1.5)

2

minimize : Tˆϕ − θˆ + an ϕ

ϕ ∈G

2

,

where G is a parameter set (a set of functions in this case), Tˆ and θˆ , respectively, are consistent

estimators of T and θ ; {an } is a sequence of non-negative constants that converges to 0 as

n → ∞ ; and

ν

2

= ∫ν ( x)2 dx

for any square integrable function ν . In model (1.3), T and Tˆ are linear operators, and the

first-order condition for (1.5) is a linear integral equation (a Fredholm equation of the first kind).

In (1.1)-(1.2), however, T and Tˆ are nonlinear operators, and the first-order condition for (1.5)

is a nonlinear integral equation.

The nonlinearity of T

convergence of ĝ to g .

in (1.1)-(1.2) complicates the task of deriving the rate of

In contrast to the situation in many other nonlinear estimation

2

problems, using a Taylor series expansion to make a linear approximation to the first-order

condition is unattractive because, as a consequence of the ill-posed inverse problem, the

approximation error dominates other sources of estimation error and controls the rate of

convergence of ĝ unless very strong assumptions are made about the probability density

function of (Y , X ,W ) . To avoid making these assumptions, we use a modified version of a

method developed by Engl, Hanke, and Neubauer (1996, Theorem 10.7) to derive the rate of

convergence of ĝ . This method works directly from the objective function in (1.5), rather than

from the first-order condition. It requires us to assume that the norm of the second Fréchet

derivative of T is not too large (see assumption 6 in Section 3.2). It is an open question whether

the same rate of convergence of ĝ can be attained without making this assumption or one that is

similar to it.

The remainder of the paper is organized as follows. Section 2 presents the estimator for

the special case in which X and W are scalars. Section 3 gives conditions under which the

estimator is consistent, obtains its rate of convergence, and shows that the rate of convergence is

the fastest possible (in a minimax sense) under our assumptions. Section 4 extends the results of

sections 2 and 3 to a multivariate model in which X is a vector that may have some exogeneous

components. Section 5 presents the results of a Monte Carlo investigation of the estimator’s

finite-sample performance.

Concluding comments are given in Section 6.

The proofs of

theorems are in the appendix, which is Section 7.

2. The Estimator

This section describes our estimator of g in (1.1)-(1.2) when X and W are scalars. Let

FY | XW denote the distribution function of Y conditional on ( X ,W ) . We assume that the

conditional distribution of Y has a probability density function, fY | XW , with respect to Lebesgue

We also assume that ( X ,W ) has a probability density function with respect to

measure.

Lebesgue measure, f XW . Let fW denote the marginal density of W . Define FYXW = FY | XW f XW

and fYXW = fY | XW f XW .

Assume without loss of generality that the support of ( X ,W ) is

contained in [0,1]2 .

It follows from (1.1)-(1.2) that

(2.1)

1

∫0 FYXW [ g ( x), x, w]dx = qfW (w)

3

for almost every w . We assume that (2.1) uniquely identifies g up to a set of x values whose

Lebesgue measure is 0.

Chernozhukov and Hansen (2005b, Theorem 4) give sufficient

conditions for this assumption to hold.

Now define the operator T on L2 [0,1] by

(2.2)

1

(Tϕ )( w) = ∫ FYXW [ϕ ( x ), x, w]dx ,

0

where ϕ is any function in L2 [0,1] . Then (2.1) is equivalent to the operator equation

Tg = qfW .

Identifiability of g is equivalent to assuming that T is invertible. Thus,

(2.3)

g = qT

However, T

−1

−1

fW .

is discontinuous because the Fréchet derivative of T is a completely continuous

operator and, therefore, has an unbounded inverse if fYXW is “well behaved”. Consequently, g

cannot be estimated consistently by replacing T and fW with consistent estimators in (2.3). As

was explained in Section 1, we use Tikhonov regularization to deal with this problem.

We now describe the version of problem (1.5) that we solve to obtain the regularized

estimator of g . We assume that fYXW has r > 0 continuous derivatives with respect to any

combination of its arguments. 1

Let K denote a continuously differentiable kernel function

whose support is [−1,1] , is symmetrical about 0, and that satisfies

(2.4)

⎧1if j = 0

⎪

∫−1 v K (v)dv = ⎨

⎪0 if 1 ≤ j ≤ max(1, r − 1).

⎩

1

j

Let h > 0 denote a bandwidth parameter, and define K h (v ) = K (v / h) for any v ∈ [−1,1] . We

estimate fW , fYXW , and FYXW , respectively, by

1 n

fˆW ( w) = ∑ K h ( w − Wi )

nh i =1

1 n

fˆYXW ( y, x, w) = 3 ∑ K h ( y − Yi ) K h ( x − X i ) K h ( w − Wi ) ,

nh i =1

and

y

FˆYXW ( y, x, w) = ∫ fˆYXW (v, x, w) dv .

−∞

Define the operator Tˆ by

4

1

(Tˆϕ )( w) = ∫ FˆYXW [ϕ ( x), x, w]dx .

0

for any ϕ ∈ L2 [0,1] . Let ϕ denote the L2 norm of ϕ . Define G = {ϕ ∈ L2 [0,1]: ϕ

2

≤ M } for

some constant M < ∞ . Our estimator of g is any solution to the problem

(2.5)

gˆ = arg min Sn (ϕ ) ≡ Tˆϕ − qfˆW

ϕ ∈G

2

+ an ϕ

2

.

Under our assumptions, a function ĝ that minimizes S n always exists, though it may not be

unique (Bissantz, Hohage, and Munk. 2004).

3. Theoretical Properties

This section gives conditions under which the estimator ĝ of Section 2 is consistent,

derives the rate at which ĝ − g

2

converges in probability to 0, and gives conditions under which

this is the fastest possible rate, in a minimax sense.

3.1 Consistency

This section gives conditions under which E gˆ − g

2

→ 0 as n → ∞ .

Define

δ n = h 2 r + ( nh) −1 . Make the following assumptions.

Assumption 1: (a) The function g is identified. That is, (2.1) specifies g ( x) uniquely

up to a set of x values whose Lebesgue measure is 0. (b) g

2

≤ M for some constant M < ∞ .

Assumption 2: fYXW has r > 0 continuous derivatives with respect to any combination

of its arguments. These derivatives and fYXW are bounded in absolute value by M .

Assumption 3: As n → ∞ , an → 0 , δ n → 0 , and δ n / an → 0 .

Assumption 4:

The kernel function K

is supported on [−1,1] , continuously

differentiable, symmetrical about 0, and satisfies (2.4).

In assumption 2, r need not be an integer. If r is not an integer, then the assumption

means that

D[ r ] [ fYXW ( y1 , x1 , w1 ) − fYXW ( y2 , x2 , w2 )] ≤ M ( y1 , x1 , w1 ) − ( y2 , x2 , w2 )

r −[ r ]

,

where [r ] is the integer part of r , D[ r ] fYXW denotes any order [r ] derivative of fYXW , and

z1 − z2 is the Euclidean distance between the points z1 and z2 .

5

Assumptions 1(b) and 2 are standard in nonparametric estimation. Assumptions 3 and 4

are satisfied by a wide range of choices of h , an and K . The choice of h in applications is

discussed in Section 3.2.

Theorem 1: Let assumptions 1-4 hold. Then

(3.1)

2

lim E gˆ − g

n→∞

=0.

Result (3.1) implies that ĝ − g

2

converges in probability to 0 as n → ∞ . The next section

obtains the rate of convergence.

3.2 Rate of Convergence

In model (1.3), where T is a linear operator, the source of the ill-posed inverse problem

is that sequence of singular values of T (or, equivalently, eigenvalues of T *T , where T * is the

adjoint of T ) converges to 0. Consequently, the rate at which ĝ − g

2

converges to 0 depends

on the rate of convergence of the singular values (or eigenvalues). See Hall and Horowitz (2005).

In (1.1)-(1.2), where T is nonlinear, the source of the ill-posed inverse problem is convergence

to 0 of the singular values of the Fréchet derivative of T at g . Denote this derivative by Tg .

The rate of convergence of ĝ − g

2

in (1.1)-(1.2) depends on the rate of convergence of the

singular values of Tg or, equivalently, of the eigenvalues of Tg*Tg , where Tg* is the adjoint of

Tg . Accordingly, the regularity conditions for our rate of convergence result are framed in terms

of the spectral representation of Tg*Tg .

It is easy to show that the Frechet derivative of T at g is the operator Tg defined by

1

(Tg ϕ )( w) = ∫ fYXW [ g ( x ), x, w]ϕ ( x) dx .

0

The adjoint operator is defined by

1

(Tg*ϕ )( w) = ∫ fYXW [ g ( w), w, x ]ϕ ( x) dx .

0

We assume that Tg*Tg

is non-singular, so its eigenvalues are strictly positive.

Let

{λ j ,φ j : j = 1, 2,...} denote the eigenvalues and orthonormal eigenvectors of Tg*Tg ordered so that

λ1 ≥ λ2 ≥ ... > 0 . Under our assumptions, Tg*Tg is a completely continuous operator, so {φ j }

forms a basis for L2 [0,1] . Therefore, we may write

6

∞

(3.2)

g ( x ) = ∑ b jφ j ( x ) ,

j =1

where the Fourier coefficients b j are given by

1

b j = ∫ g ( x )φ j ( x) dx .

0

We make the following additional assumptions.

Assumption 5:

β − 1/ 2 ≤ α < 2 β − 1 ,

(a) There are constants α > 1 , β > 1 , and C0 < ∞ such that

j −α ≤ C0 λ j , and

| b j | ≤ C0 j − β

for all

j ≥ 1 . (b) Moreover,

r ≥ max[(2β + α − 1) / 2,(3β + α − 1/ 2) /(α + 1)] .

Assumption 6: There is a finite constant L > 0 such that

(3.3)

T ( g1 ) − T ( g 2 ) − Tg2 ( g1 − g 2 ) ≤ 0.5L g1 − g 2

2

for any g1 , g 2 ∈ L2 [0,1] and

∞

(3.4)

b 2j

∑ λ j < 1/ L .

j =1

Assumption 7:

The tuning parameters h and an satisfy h = Ch n −1/(2 r +1) and

an = Ca n −α /(2 β +α ) , where Ch and Ca are positive, finite constants.

In assumption 5(a), α characterizes the severity of the ill-posed inverse problem. As α

increases, the problem becomes more severe and, consequently, the fastest possible rate of

convergence of any estimator of g decreases. The parameter β characterizes the complexity of

g in the sense that if β is large, then g can be well approximated by the finite-dimensional

parametric model that is obtained by truncating the series on the right-hand side of (3.2) at j = J

for some small integer J . A finite-dimensional g can be estimated with a n −1/ 2 rate of

convergence, so the rate of convergence of ĝ − g

2

increases as β increases.

Assumption (5a) places tight restrictions on the rates of convergence of λ j and b j . This

is unavoidable for obtaining optimal rates of convergence with Tikhonov regularization. The

restrictions are not needed for consistent estimation, as Theorem 1 shows. In linear inverse

problems, the restrictions can sometimes be relaxed by using other forms of regularization. See,

for example, Carrasco, Florens, and Renault (2005) and Bissantz, Hohage, Munk, and Ruymgaart

(2006). However, there has been little research on the application of non-Tikhonov methods to

7

nonlinear inverse problems, especially when T in (1.4) is unknown and must be estimated.

Much additional research will be needed to determine the extent to which such methods are

useful in nonlinear problems with an unknown T .

Together, assumptions 2 and 5(a) imply that Tg*Tg is a completely continuous linear

operator, so its eigenvectors form a basis for L2 [0,1] . Assumption 2 implies that inequality (3.3)

holds for some L < ∞ , so (3.3) amounts to the definition of L . Inequality (3.4) restricts the norm

of the second Fréchet derivative of T . A sufficient condition for (3.4) is

−1

⎛ ∞ b 2j ⎞

sup ∂fYXW ( y, x, w) / ∂y < ⎜ ∑ ⎟ .

⎜ j =1 λ j ⎟

y , x,w

⎝

⎠

Restrictions similar to (3.4) are well-known in the theory of nonlinear integral equations. It is an

open question whether an estimator of g that has our rate of convergence can be achieved

without making an assumption similar to (3.4). Assumptions 2 and 5(b) imply that fW , and Tϕ

for any ϕ ∈ L2 [0,1] can be estimated with a rate of convergence in probability of

O p [ n − ( β −1/ 2+α / 2) /(2 β +α ) ] and that fYXW can be estimated with a rate of convergence of

O p [n − ( β −1/ 2) /(2 β +α ) ] . These rates are needed to obtain our rate of convergence of ĝ . Under

assumption 7, h has the optimal rate of convergence for nonparametric estimation of an r -times

differentiable density function of a scalar argument.

Accordingly, h can be chosen in

applications by, say, cross-validation applied to fˆW .

Let H = H( M , C0 , α , β , L) be the set of distributions of (Y , X ,W ) that satisfy

assumptions 1, 2, 5, and 6 with fixed values of M , C0 , α , β , and L . Our rate-of-convergence

result is given by the following theorem.

Theorem 2: Let assumptions 1-2 and 4-7 hold. Then

lim limsup sup PH ⎡ gˆ − g

⎣

D →∞ n→∞ H ∈H

2

> Dn−(2 β −1) /(2 β +α ) ⎤ = 0 .

⎦

As expected, the rate of convergence of ĝ − g

2

decreases as α increases (the ill-posed inverse

problem becomes more severe) and increases as β increases ( g increasingly resembles a finitedimensional parametric model).

The next theorem shows that the convergence rate in Theorem 2 is optimal in a minimax

sense under our assumptions. Let {g n } denote any sequence of estimators of g satisfying

g n

2

≤ M for some M < ∞ .

8

Theorem 3: Let assumptions 1-2 and 4-7 hold with α ≥ 2 . Then for every finite D > 0

liminf sup PH ⎡ g n − g

⎣

n→∞ H ∈H

2

> Dn −(2 β −1) /(2 β +α ) ⎤ > 0 .

⎦

Our proof of Theorem 3 requires α ≥ 2 . It is an open question whether the rate of

convergence n −(2 β −1) /(2 β +α ) is optimal when 1 < α < 2 .

4. Multivariate Model

This section extends the results of Sections 2 and 3 to a multivariate model in which X

is a vector that may have some exogenous components. We rewrite model (1.1)-(1.2) as

(4.1)

Y = g( X , Z ) + U

(4.2)

P (U ≤ 0 | W = w, Z = z ) = q ,

where Y is the dependent variable, X ∈ \ A (A ≥ 1) is a vector of possibly endogenous

explanatory variables, Z ∈ \ m ( m ≥ 0) is a vector of exogenous explanatory variables, and

W ∈ \A is a vector of instruments for X , U is an unobserved random variable, and q is a

known constant satisfying 0 < q < 1 . If m = 0 , then Z is not in the model. The problem is to

estimate g

nonparametrically from data consisting of the independent random sample

{Yi , X i ,Wi , Z i : i = 1,..., n} .

The estimator is obtained by applying the method of Section 2 after conditioning on Z .

To do this, let K A,h (ν ) =

∏ j =1 Kh (ν j / h) , where ν j

A

is the j ’th component of the A -vector ν .

Define K m,h (ν ) for an m -vector ν similarly. Let fWZ and fYXWZ , respectively, denote the

probability density functions of (W , Z ) and (Y , X ,W , Z ) . Define

FYXWZ ( y, x, w, z ) =

y

∫−∞ fYXWZ (ν , x, w, z)dν .

Define the following kernel estimators of fWZ , fYXWZ , and FYXWZ :

fˆWZ ( w, z ) =

1

nhA + m

fˆYXWZ ( w, z ) =

n

∑ KA,h (w − Wi ) Km,h ( z − Zi ) ,

i =1

n

1

nh 2A + m +1

∑ Kh ( y − Yi ) KA,h ( x − X i ) KA,h (w − Wi ) K m,h ( z − Zi ) ,

i =1

and

FˆYXWZ ( y, x, w, z ) =

y

∫−∞ fˆYXWZ (ν , x, w, z)dν .

9

For each z ∈ [0,1]m , define the operators T z and Tˆz on L2 [0,1]A by

(T zϕ z )( w) =

∫[0,1] FYXWZ [ϕ z ( x), x, w, z ]dx

(Tˆzϕ z )( w) =

∫[0,1] FˆYXWZ [ϕ z ( x), x, w, z ]dx ,

A

and

A

where ϕ z is any function on L2 [0,1]A .

The function g satisfies

(4.3)

(T z g )( w, z ) = qfWZ ( w, z ) .

The function g (⋅, z ) is identified if (4.3) has a unique solution (up to a set of x values of

Lebesgue measure 0) for the specified z . Define G = {ϕ ∈ L2 [0,1]A : ϕ

2

≤ M } for some constant

M < ∞ . For each z ∈ [0,1]m , the estimator of g (⋅, z ) is any solution to the problem

(4.4)

gˆ(⋅, z ) = arg min

ϕz ∈ G

{∫

[0,1]A

[(Tˆzϕ z )( w) − qfˆWZ ( w, z )]2 dw + an ∫

[0,1]A

}

ϕ z ( w)2 dw .

4.1 Consistency

This section gives conditions under which E gˆ − g

2

→ 0 as n → ∞ in model (4.1)-

(4.2). Define δ n = h 2 r + ( nhA + m ) −1 . Make the following assumptions, which are extensions of

the assumptions of Section 3.1.

Assumption 1′: (a) The function g is identified. (b)

∫[0,1] g ( x, z )

A

2

dx ≤ M for each

z ∈ [0,1]m and some constant M < ∞ .

Assumption 2′: fYXWZ has r > 0 continuous derivatives with respect to any combination

of its arguments. These derivatives and fYXWZ are bounded in absolute value by M .

Assumption 3′: As n → ∞ , an → 0 , δ n → 0 , and δ n / an → 0 .

Theorem 4: Let assumptions 1′-3′ and 4 hold. Then for each z ∈ [0,1]m ,

lim E ∫

n →∞

[0,1]A

[ gˆ ( x, z ) − g ( x, z )]2 dx = 0 .

4.2 Rate of Convergence

As when X and W are scalars, the rate of convergence of ĝ in the multivariate model

depends on the rate of convergence of the singular values of the Fréchet derivative of T z .

10

*

Accordingly, let Tgz denote the Fréchet derivative of T z at g , and let Tgz

denote the adjoint of

*

Tgz . Tgz and Tgz

, respectively, are the operators defined by

(Tgzϕ z )( w) =

∫[0,1]

fYXWZ [ g ( x, z ), x, w, z ]ϕ z ( x) dx

*

(Tgz

ϕ z )( w) =

∫[0,1]

fYXWZ [ g ( w, z ), w, x, z ]ϕ z ( x ) dx .

A

and

A

*

Assume that for each z ∈ [0,1]A , Tgz

Tgz is non-singular. Let {(λzj ,φ zj ) : j = 1, 2,...} denote the

*

Tgz ordered so that λz1 ≥ λz 2 ≥ ... > 0 . The eigenvectors

eigenvalues and eigenvectors of Tgz

{φ zj } form a complete, orthonormal basis for L2 [0,1]A . Thus, for each z ∈ L2 [0,1]A we can write

g ( x, z ) =

∞

∑ bzjφzj ( x) ,

j =1

where

bzj =

∫[0,1] g ( x, z )φz ( x)dx .

A

Now make the following additional assumptions, which extend those of Section 3.2.

Assumption 5′:

(a) There are constants α > 1 , β > 1 , and C0 < ∞ such that

β − 1/ 2 ≤ α < 2 β − 1 , j −α ≤ C0 λzj , and | bzj | ≤ C0 j − β uniformly in z ∈ [0,1]m for all j ≥ 1 . (b)

Moreover, r ≥ max{[A(2 β + α − 1) − m] / 2, r *} , where r * is the largest root of the equation

4[(α + 1) /(2 β + α )]r 2 − 2[(A + m)(2 β − 1) /(2β + α ) + A + 1 − m]r − (A + 1)m = 0 .

Assumption 6′: There is a finite constant L > 0 such that

T z ( g1 ) − T z ( g 2 ) − Tg 2 z ( g1 − g 2 ) ≤ 0.5L g1 − g 2

2

for any g1 , g 2 ∈ L2 [0,1] uniformly in z ∈ [0,1]m and

∞

bzj2

∑ λzj < 1/ L .

j =1

uniformly in z ∈ [0,1]m .

Assumption 7′:

The tuning parameters h and an satisfy h = Ch n −1/(2 r + A + m ) and

an = Ca n −τα /(2 β +α ) , where τ = 2r /(2r + m) and Ch and Ca are positive, finite constants.

11

Let HM = HM ( M , C0 , α , β , L, r , A, m) be the set of distributions of (Y , X , Z ,W ) that

satisfy assumptions 1′, 2′, 5′, and 6′ with fixed values of M , C0 , α , β , L , r , A , and m . The

multivariate extension of Theorems 2 and 3 is

Theorem 5: Let assumptions 1′-2′, 4, and 5′-7′ hold. Then for each z ∈ [0,1]m ,

(4.5)

lim limsup sup PH

D →∞ n→∞

H ∈HM

{∫

[0,1]A

}

[ gˆ ( x, z ) − g ( x, z )]2 dx > Dn −τ (2 β −1) /(2 β +α ) = 0 .

If, in addition,

(4.6)

[A(2 β + α − 1) − m]/ 2 ≥ r * ,

then for each z ∈ [0,1]m

(4.7)

liminf sup PH

n→∞ H ∈H

M

{∫

[0,1]A

}

[ gˆ ( x, z ) − g ( x, z )]2 dx > Dn −τ (2 β −1) /(2 β +α ) > 0 .

If m = 0 , then (4.6) simplifies to α ≥ 1 + 1/ A . The rate of convergence in Theorem 5 is

the same as that in Theorem 3 if A = 1 and m = 0 . The theorem shows that increasing m

decreases the rate of convergence of ĝ for any fixed r .

dimensionality of nonparametric estimation.

This is the familiar curse of

In addition, assumption 5′ implies that as A

increases, r must also increase to maintain the rate of convergence n−τ (2 β −1) /(2 β +α ) . This is a

form of the curse of dimensionality that is associated with the endogenous explanatory variable

X.

5. Monte Carlo Experiments

This section reports the results of Monte Carlo simulations that illustrate the finite-sample

performance of the estimator that is obtained by solving (2.5). Samples of (Y , X ,W ) were

generated from the model

Y = g(X ) + U ,

where

∞

g ( x) = 21/ 2 ∑ (−1) j +1 j −6 sin( jπ x)

j =1

and (U , X ,W ) is sampled from the distribution whose density is

12

∞

⎡ jπ

⎤

fUXW (u , x, w) = C ∑ a j sin ⎢ (u + 1) ⎥ sin( jπ x)sin( jπ w)

⎣ 2

⎦

j =1

∞

+ 0.15∑ d j sin[ jπ (u + 1)]sin(2 jπ x)sin( jπ w)

j =1

for (U , X ,W ) ∈ [ −1,1] × [0,1]2 . In this density,

⎪⎧ j −3 if j = 1,3,5,...

aj = ⎨

⎪⎩0 otherwise,

d j = j −10 , and

⎡⎛ 16 ⎞ ∞ a j ⎤

C = ⎢⎜ 3 ⎟ ∑ 3 ⎥

⎣⎢⎝ π ⎠ j =1 j ⎥⎦

−1

.

The density is cumbersome algebraically, but it is convenient for computations and has a

conventional shape. This is illustrated in Figures 1-3, which show graphs of the density of W ,

the density of X conditional on W , and the density of U conditional on ( X ,W ) .

For

computational purposes, the infinite series were truncated at j = 100 .

The kernel function used for density estimation is K ( x) = (15 /16)(1 − x 2 ) 2 for | x | ≤ 1 .

The estimates of g were computed by using the Levenberg-Marquardt method (Engl, Hanke and

Neubauer 1996, p. 285). The starting function was obtained by carrying out a cubic quantile

regression of Y on X without controlling for endogeneity.

Each experiment consisted of estimating g at the 99 points x = 0.01,0.02,...,0.99 . The

experiments were carried out in GAUSS using GAUSS pseudo-random number generators.

There were 500 Monte Carlo replications in each experiment. Experiments were carried out

using sample sizes n = 200 and n = 800 .

The results are summarized in Table 1 and illustrated graphically in Figures 4-5,

respectively, for n = 200 and n = 800 .

For each sample size, there are two values of the

bandwidth parameter, h (0.5 and 0.8), and two values of the regularization parameter, an (0.5

and 1). For estimation of fYXW the bandwidth 2h is used in the Y direction and h is used in the

X and W directions, because the standard deviation of Y is about double those of X and W .

The results in Table 1 show that the empirical integrated variance and integrated mean-square

error (IMSE) decrease as the sample size increases. The bias does not decrease if an remains

fixed. This is not surprising because an is the main source of estimation bias. Figures 4-5 show

13

g ( x) (dashed line), the Monte Carlo approximation to E[ gˆ ( x )] (solid line), and the estimates,

ĝ , whose IMSEs are the 25th, 50th, and 75th percentiles of the IMSEs of the 500 Monte Carlo

replications. The figures show, not surprisingly, that ĝ is biased but that its shape is similar to

that of g .

6. Conclusions

This paper has presented a nonparametric instrumental variables estimator of a quantile

regression model, derived the estimator’s rate of mean-square convergence in probability, and

given conditions under which this rate is the fastest possible in a minimax sense. The estimator’s

finite-sample performance has been illustrated by a small set of Monte Carlo experiments.

Several topics remain for future research.

The problem of deriving the asymptotic

distribution of ĝ appears to be quite difficult. In contrast to the situation in many other nonlinear

estimation problems, asymptotic normality cannot be obtained by using a Taylor series

approximation to linearize the first-order condition for (2.5). This is because, as was explained in

Section 1, the ill-posed inverse problem causes the error of the linear approximation to dominate

other sources of estimation error unless very strong assumptions are made about the distribution

of (Y , X ,W ) . This problem does not arise with the mean-regression estimator of Hall and

Horowitz (2005) and Horowitz (2005), because the first-order condition in the mean regression is

a linear equation for ĝ . Other topics for future research include determining whether the rate of

convergence of Theorem 2 is optimal when 1 < α < 2 (or when r < r * in Theorem 5) and finding

a method to choose the regularization parameter an in applications.

7. Mathematical Appendix: Proofs of Theorems

This appendix provides proofs of Theorems 1-3. Theorems 4-5 can be proved by

following the same steps after conditioning on Z .

6.1 Proof of Theorem 1

The proof is a modification of the proof of Theorem 2 of Bissantz, Hohage, and Munk

(2004). By (2.5),

(6.1)

Tˆ ( gˆ ) − qfˆW

2

+ an gˆ

In addition, E Tˆ ( g ) − T ( g )

2

2

≤ Tˆ ( g ) − qfˆW

2

2

+ an g .

= O (δ n ) and E fˆW − fW

14

2

= O(δ n ) . Therefore, by assumption 3,

E Tˆ ( g ) − qfˆW

2

2

≤ 2 E Tˆ ( g ) − T ( g ) + 2qE fˆW − fW

2

= O(δ n ).

Combining this result with (6.1) gives

E Tˆ ( gˆ ) − qfˆW

2

+ an E gˆ

2

≤ Cδ n + an g

2

for some constant C < ∞ and all sufficiently large n . Therefore, by assumption 3,

lim sup E gˆ

n →∞

2

2

≤ g .

Note, in addition, that E Tˆ ( gˆ ) − qfˆW

2

→ 0 as n → ∞ . Moreover, assumptions 1(b) and 2

imply that T is weakly closed. Consistency now follows from arguments identical to those used

to prove Theorem 2 of Bissantz, Hohage, and Munk (2004, p. 1777). Q.E.D.

6.2 Proof of Theorem 2

Assumptions 1-2 and 4-7 hold throughout this section. Let ⋅, ⋅ denote the inner product

in L2 [0,1] . Define ω g = (Tg Tg* ) −1Tg g and

(6.2)

g = g − an (Tg*Tg + an I ) −1Tg*ω g ,

where I is the identity operator. Observe that by (3.4),

(6.3)

L ωg < 1 .

Let r̂ and r be Taylor series remainder terms with the properties that

(6.4)

T ( gˆ ) = qfW + Tg ( gˆ − g ) + rˆ

and

(6.5)

T ( g ) = qfW + Tg ( g − g ) + r ,

2

2

where qfW = T ( g ) . By (3.3), rˆ ≤ ( L / 2) gˆ − g , and r ≤ ( L / 2) g − g .

Lemma 6.1: For any g ∈ G ,

15

(1 − L ω ) gˆ − g

g

2

≤ g − g

2

+ an−1 Tˆ ( g ) − T ( g ) + r + qfW − qfˆW

+ 2Tg ( g − g ) + anω g , ω g + an−1 Tg ( g − g )

2

2

+2 qfW − qfˆW , ω g + 2an−1 r + qfW − qfˆW , Tg ( g − g)

+2 Tˆ ( gˆ ) − T ( gˆ ), ω g + 2an−1 Tˆ ( g ) − T ( g ), Tg ( g − g ) .

Proof: By (6.5),

(6.6)

Tˆ ( g ) − qfˆW

2

= Tˆ ( g ) − T ( g ) + r + qfW − qfˆW

2

+ Tg ( g − g )

2

+2 r + qfW − qfˆW , Tg ( g − g ) + 2 Tˆ ( g ) − T ( g ), Tg ( g − g ) .

Also,

(6.7)

Tˆ ( gˆ ) − qfˆW

2

= Tˆ ( gˆ ) − qfˆW + anω g

2

+ an2 ω g

2

−2an Tˆ ( gˆ ) − T ( gˆ ), ω g − 2an Tˆ ( gˆ ) − qfW , ω g

−2an qfW − qfˆW , ω g − 2an ω g

2

.

Moreover,

(6.8)

g − g , g = g − g , Tg*ω g

= Tg ( g − g ), ω g .

By (6.4),

(6.9)

gˆ − g , g = gˆ − g , Tg*ω g

= Tg ( gˆ − g ), ω g

= T ( gˆ ) − qfW , ω g − rˆ, ω g .

By (2.5),

(6.10)

Tˆ ( gˆ ) − qfˆW

2

+ an2 gˆ

2

≤ Tˆ ( g ) − qfˆW

2

2

+ an2 g .

16

Rearranging and expanding terms in (6.10) gives

(6.11)

gˆ − g

2

⎡

≤ an−1 ⎢ Tˆ ( g ) − qfˆW

⎣

2

− Tˆ ( gˆ ) − qfˆW

2⎤

⎥⎦ + g − g

2

2

2

+2 g − g , g − 2 gˆ − g , g .

Combining (6.11) with (6.6)-(6.9) gives

(6.12)

gˆ − g

2

≤ 2 rˆ, ω g − an−1 Tˆ ( gˆ ) − qfˆW + anω g

+ an−1 Tˆ ( g ) − T ( g ) + r + qfW − qfˆW

2

+ g − g

+ 2Tg ( g − g ) + anω g , ω g

2

+ an−1 Tg ( g − g ) + 2 qfW − qfˆW , ω g + 2an−1 r + qfW − qfˆW , Tg ( g − g )

+2 Tˆ ( gˆ ) − T ( gˆ ), ω g + 2an−1 Tˆ ( g ) − T ( g ), Tg ( g − g ) .

The lemma follows by noting that the second term on the right-hand side of (6.12) is non-positive

and that 2 rˆ, ω g ≤ L ω g gˆ − g . Q.E.D.

2

Lemma 6.2: For any g ∈ G ,

(6.13)

an−1 Tˆ ( g ) − T ( g ) + r + qfW − qfˆW

2

2

⎛

L2

g − g

≤ 4an−1 ⎜ Tˆ ( g ) − T ( g ) +

⎜

4

⎝

+ qfW − qfˆW

2

= an3 (Tg Tg* + an I )−1ω g

(6.14)

2Tg ( g − g ) + anω g , ω g + an−1 Tg ( g − g )

(6.15)

2 qfW − qfˆW , ω g + 2an−1 r + qfW − qfˆW , Tg ( g − g )

≤ L ω g g − g

+ Lan g − g

2

2

2⎞

4

+ 2an qfW − qfˆW (Tg Tg* + an I )−1ω g

(Tg Tg* + an I ) −1ω g ,

and

17

⎟⎟ ,

⎠

2

,

2 Tˆ ( gˆ ) − T ( gˆ ), ω g + 2an−1 Tˆ ( g ) − T ( g ), Tg ( g − g )

(6.16)

≤ 2an Tˆ ( g ) − T ( g ) (Tg Tg* + an I ) −1ω g + 2 ω g ⎡Tˆ ( gˆ ) − T ( gˆ ) ⎤ − ⎡ Tˆ ( g ) − T ( g ) ⎤

⎣

⎦ ⎣

⎦

+ 2 ω g ⎡Tˆ ( g ) − T ( g ) ⎤ − ⎡Tˆ ( g ) − T ( g ) ⎤ .

⎣

⎦ ⎣

⎦

Proof: Inequality (6.13) follows from (6.5) and the relation

A+ B+C

2

(

2

≤4 A + C

2

+ C

2

)

for any functions A , B , and C .

To show (6.14), note that

an (Tg Tg* + an I ) −1 = I − Tg (Tg*Tg + an I ) −1Tg* .

(6.17)

By (6.2)

(6.18) T ( g − g ) = −anTg (Tg*Tg + an I ) −1Tg*ω g .

It follows from (6.17) and (6.18) that

(6.19)

anω g + T ( g − g ) = an2 (Tg Tg* + an I ) −1ω g .

Taking the squares of the norms of both sides of (6.19) and expanding the term on the left-hand

side yields

(6.20)

an2 ω g

2

2

+ Tg ( g − g ) + 2 anω g , Tg ( g − g ) = an4 (Tg Tg* + an I )−1ω g

2

.

Then (6.14) follows by dividing both sides of (6.20) by an .

We now turn to (6.15). First note that

(6.21)

r + qfW − qfˆW , Tg ( g − g ) = r + qfW − qfˆW , Tg ( g − g ) + anω g

− r + qfW − qfˆW , anω g .

It follows from (6.19) and (6.21) that

(6.22)

2 qfW − qfˆW , ω g + 2an−1 r + qfW − qfˆW , Tg ( g − g ) + anω g

= 2an r + qfW − qfˆW ,(Tg Tg* + an I ) −1ω g − 2 r , ω g .

Then (6.15) follows by applying the Cauchy-Schwarz and triangle inequalities to (6.22).

Now we prove (6.16). Observe that by (6.19) and algebra like that yielding (6.21),

18

2 Tˆ ( gˆ ) − T ( gˆ ), ω g + 2an−1 Tˆ ( g ) − T ( g ), Tg ( g − g )

= 2an Tˆ ( g ) − T ( g ),(Tg Tg* + an I )−1ω g + 2 ⎡ Tˆ ( gˆ ) − T ( gˆ ) ⎤ − ⎡ Tˆ ( g ) − T ( g ) ⎤ , ω g ,

⎣

⎦ ⎣

⎦

which yields (6.16) by the Cauchy-Schwarz and triangle inequalities. Q.E.D.

Lemma 6.3: The following relations hold uniformly over H ∈ H .

(a)

g − g

2

= O[n − (2 β −1) /(2 β +α ) ] ,

= O[n − (2 β −1) /(2 β +α ) ] ,

(b)

an−1 g − g

(c)

an3 (Tg Tg* + an I )−1ω g

(d)

an g − g

(e)

an qfW − qfˆW (Tg Tg* + an I )−1ω g = O p [n −(2 β −1) /(2 β +α ) ] ,

(f)

an Tˆ ( g ) − T ( g ) (Tg Tg* + an I )−1ω g = O p [n − (2 β −1) /(2 β +α ) ] ,

(g)

There are random variables Δ n = O p [n − ( β −1/ 2) /(2 β +α ) ] and Γ n = o p (1) such that

4

2

2

= O[n −(2 β −1) /(2 β +α ) ] ,

(Tg Tg* + an I ) −1ω g = O[n − (2 β −1) /(2 β +α ) ] ,

⎡ Tˆ ( gˆ ) − T ( gˆ ) ⎤ − ⎡ Tˆ ( g ) − T ( g ) ⎤ ≤ Δ n gˆ − g + Γ n gˆ − g 2 ,

⎣

⎦ ⎣

⎦

(h)

⎡ Tˆ ( g ) − T ( g ) ⎤ − ⎡ Tˆ ( g ) − T ( g ) ⎤ = O p [ n − (2 β −1) /(2 β +α ) ] .

⎣

⎦ ⎣

⎦

Proof: To prove (a), note that by (6.2) and Tg*ω g = g ,

g ( x) − g ( x) = −an

∞

∑ λ j + an φ j ( x) φ j ,Tg*ωg

1

j =1

= − an

∞

bj

∑ λ j + an φ j ( x).

j =1

Therefore,

g − g

2

=

∞

an2

∑ (λ

j =1

b 2j

j

+ an ) 2

= O[n − (2 β −1) /(2 β +α ) ],

where the second line follows from arguments identical to those used to prove equation (6.4) of

Hall and Horowitz (2005). This proves (a). It follows from (a) that

19

an−1 g − g

4

= O[n − (2 β −1) /(2 β +α ) ]

whenever α < 2β − 1 , thereby proving (b).

We now turn to (c). Define ψ j = Tg φ j / Tg φ j . Use ω g = (Tg Tg* ) −1Tg g and the singular

2

value decomposition Tg*ψ j = λ1/

j φ j to obtain

∞

1

ψ j ψ j , Tg g

j =1 λ j (λ j + an )

(Tg Tg* + an I ) −1ω g = ∑

∞

1

ψ j Tg*ψ j , g

(

)

λ

λ

+

a

j

n

j =1 j

=∑

∞

1

ψ j λ1/j 2φ j , g

j =1 λ j (λ j + an )

=∑

∞

bj

1/ 2

j =1 λ j (λ j

=∑

+ an )

ψ j.

Therefore,

(6.23)

(Tg Tg*

+ an I )ω g

2

∞

=∑

b 2j

j =1 λ j (λ j

+ an ) 2

= O[an(2 β −3α −1) / α ]

by arguments like those used to prove equation (6.4) of Hall and Horowitz (2005). Therefore, (c)

follows from an = Ca n −α /(2 β +α ) .

To prove (d), note that by (6.23)

(6.24)

an (Tg Tg* + an I ) −1ω g = O[an(2 β −α −1) /(2α ) ]

= O[n − (2 β −α −1) /(4 β + 2α ) ].

Therefore, (d) follows from (6.24) and (a), because α < 2β − 1 . Now by assumptions 2 and 5(b),

fˆW − fW

2

= O p [n −(2 β −1+α ) /(2 β +α ) ]

and

Tˆ − T

2

= O p [n − (2 β −1+α ) /(2 β +α ) ]

20

uniformly over H . Therefore, (e) and (f) follow from (6.24).

We now turn to (g). By the mean value theorem,

{⎡⎣Tˆ ( gˆ ) − T ( gˆ )⎤⎦ − ⎡⎣Tˆ ( g ) − T ( g )⎤⎦} (w)

1

= ∫ { fˆYXW [ g ( x), x, w] − fYXW [ g ( x), x, w]}[ gˆ ( x) − g ( x)]dx,

0

where g is between ĝ and g . Then by the Cauchy-Schwarz inequality

⎡Tˆ ( gˆ ) − T ( gˆ ) ⎤ − ⎡Tˆ ( g ) − T ( g ) ⎤

⎣

⎦ ⎣

⎦

2

2

1 1

= ∫ ⎛⎜ ∫ { fˆYXW [ g ( x), x, w] − fYXW [ g ( x), x, w]}[ gˆ ( x) − g ( x)]dx ⎞⎟ dw

0⎝ 0

⎠

1 1

1

≤ ∫ ⎛⎜ ∫ { fˆYXW [ g ( x), x, w] − fYXW [ g ( x), x, w]}2 dx ∫ [ gˆ ( x) − g ( x)]2 dx ⎞⎟ dw

0⎝ 0

0

⎠

=∫

1

0

1

∫0 { fˆYXW [ g ( x), x, w] − fYXW [ g ( x), x, w]}

2

2

dxdw gˆ − g .

But

1

1

∫0 ∫0 { fˆYXW [ g ( x), x, w] − fYXW [ g ( x), x, w]}

2

dxdw = O p [ n − (2 β −1) /(2 β +α ) ] + gˆ − g o p (1)

2

by assumptions 2 and 5(b), thereby yielding (g). Finally, (h) can be proved by combining (a) with

arguments similar to those used to prove (g). The lemma is now proved because the foregoing

arguments hold uniformly over H ∈ H . Q.E.D.

Proof of Theorem 2: The theorem follows by combining the results of lemmas 6.1-6.3

with L ω g < 1 . Q.E.D.

6.3 Proof of Theorem 3

It suffices to find a sequence of finite-dimensional models {g n } ∈ H for which

liminf PH ⎡ g n − g n

⎣

n →∞

2

> Dn − (2 β −1) /(2 β +α ) ⎤ > 0 .

⎦

To this end, let m denote the integer part of n−1/(2 β +α ) and f XW denote the density of ( X ,W ) .

Since α ≥ 2 , (2β + α − 1) / 2 ≥ (3β + α − 1/ 2) /(α + 1) .

Let r = (2 β + α − 1) / 2 .

f XW ( x, w) ≤ C for all ( x, w) ∈ [0,1]2 and some constant C < ∞ . Let

Y = gn ( X ) + U ,

21

Assume that

where U is independent of ( X ,W ) , and P (U ≤ 0) = q . Let FU and fU , respectively, denote the

distribution function and density of U . Assume that fU (0) > 0 and that FU is twice continuously

differentiable everywhere with | FU′′ (u ) | < M for all u and some M < ∞ . Define the operator Q

on L2 [0,1] by

(Πg )( x) =

1

∫0 π ( x, z) g ( z )dz ,

for any g ∈ L2 [0,1] , where

1

π ( x, z ) = fU (0) 2 ∫ f XW ( x, w) f XW ( z , w)dw .

0

Let {λ j ,φ j : j = 1, 2,...} denote the orthonormal eigenvalues and eigenvectors of Π ordered so

that λ1 ≥ λ2 ≥ ... > 0 . Assume that jα λ j is bounded away from 0 and 1 for all j . Set

g n ( x) = θ

∞

∑ j − β φ j ( x)

j =m

for some finite, constant θ > 0 . Then for any h ∈ L2 [0,1] ,

(T h)( w) =

1

∫0 FU [h( x) − gn ( x)] f XW ( x, w)dx ,

and the Fréchet derivative of T at g n is

(Tg n h)( w) = fU (0)

1

∫0 f XW ( x, w)[h( x) − gn ( x)]dx .

Assumption 6 is satisfied with L = MC whenever θ > 0 is sufficiently small.

Now let θˆ be an estimator of θ . Then

gˆ ( x) ≡ θˆ

∞

∑ j − β φ j ( x) .

j =m

is an estimator of g n ( x ) . Moreover,

(6.25)

gˆ − g n

where Rn =

2

∞

= (θˆ − θ ) 2 Rn ,

∑ j =m j −2β .

Note that n(2 β −1) /(2 β +α ) Rn is bounded away from 0 and 1 as n → ∞ .

In addition, fW is estimated by

fˆw ( w) = q −1 (T gˆ )( w) .

Define ψ j = Tg φ j / Tg φ j . Then a Taylor series approximation and singular value expansion give

22

fˆW ( w) − fW ( w) = q −1 (θˆ − θ )

∞

∑ j − β (Tgφ j )(w) + (θˆ − θ )2 O[n−(2β −1) /(2β +α ) ]

j =m

.

= q −1 (θˆ − θ )

∞

∑ j − β λ1/j 2ψ j (w) + (θˆ − θ )2 O[n−(2β −1) /(2β +α ) ].

j =m

Now,

n

(2 β +α −1) /(2 β +α )

∞

∑

2

2

j − β λ1/

j ψj

j =m

is bounded away from 0 and ∞ as n → ∞ . Therefore, there is a finite constant Cθ > 0 such that

(6.26) (θˆ − θ )2 ≥ Cθ n(2 β +α −1) /(2 β +α ) fˆW − fW

2

.

Combining (6.25) and (6.26) shows that there is a finite constant C g > 0 such that

(6.27)

n(2 β −1) /(2 β +α ) gˆ − g n

2

≥ Cg n(2 β +α −1) /(2 β +α ) fˆW − fW

2

.

The theorem now follows from (6.27) and the observation that with r = (2 β + α − 1) / 2 ,

O p [n(2 β +α −1) /(2 β +α ) ] is the fastest possible minimax rate of convergence of fˆW − fW

23

2

. Q.E.D.

FOOTNOTES

1

The results of this paper hold even if fYXW or its derivatives are discontinuous at one or more

boundaries of the support of (Y , X ,W ) provided that the kernel function K is replaced by a

boundary kernel.

24

REFERENCES

Amemiya, T. (1982). Two stage least absolute deviations estimators. Econometrica, 50, 689711.

Bissantz, N., T. Hohage, and A. Munk (2004). Consistency and rates of convergence of nonlinear

Tikhonov regularization with random noise. Inverse Problems, 20, 1773-1789.

Bissantz, N., T. Hohage, A. Munk, and F. Ruymgaart (2006). Convergence rates of general

regularization methods for statistical invetrse problems and applications, working paper, Institut

für Numerische und Angewandte Mathematik, Georg-August-Universität Göttingen, Germany.

Blundell, R. and J.L. Powell (2003). Endogeneity in nonparametric and semiparametric

regression models. In Advances in Economics and Econometrics: Theory and Applications, M.

Dewatripont, L.-P. Hansen, and S.J. Turnovsky, eds., 2, 312-357, Cambridge: Cambridge

University Press.

Carrasco, M., J.-P. Florens, and E. Renault (2005). Linear inverse problems in structural

econometrics: estimation based on spectral decomposition and regularization. In Handbook of

Econometrics, Vol. 6, E.E. Leamer and J.J. Heckman, eds, Amsterdam: North-Holland,

forthcoming.

Chen, L. and S. Portnoy (1996). Two-stage regression quantiles and two-stage trimmed least

squares estimators for structural equation models. Communications in Statistics, Theory and

Methods, 25, 1005-1032.

Chernozhukov, V. and C. Hansen (2005a). Instrumental quantile regression inference for

structural and treatment effect models. Journal of Econometrics, forthcoming.

Chernozhukov, V. and C. Hansen (2005b).

Econometrica, 73, 245-261.

An IV model of quantile treatment effects.

Chernozhukov, V. and C. Hansen (2004). The effects of 401(k) participation on the wealth

distribution: an instrumental quantile regression analysis. Review of Economics and Statistics,

86, 735-751.

Chernozhukov, V., G.W. Imbens, and W.K. Newey (2004). Instrumental variable identification

and estimation of nonseparable models via quantile conditions. Working paper, Department of

Economics, Massachusetts Institute of Technology, Cambridge, MA.

Chesher, A. (2003). Identification in nonseparable models. Econometrica, 71, 1405-1441.

Darolles, S., J.-P. Florens, and E. Renault (2002). Nonparametric instrumental regression.

Working paper, GREMAQ, University of Social Science, Toulouse, France.

Engl, H.W., M. Hanke, and A. Neubauer (1996).

Dordrecht: Kluwer Academic Publishers.

25

Regularization of Inverse Problems.

Florens, J.-P. (2003). Inverse problems and structural econometrics: the example of instrumental

variables. In Advances in Economics and Econometrics: Theory and Applications,

Dewatripont, L.-P. Hansen, and S.J. Turnovsky, eds., 2, 284-311, Cambridge: Cambridge

University Press.

Groetsch, C. (1984). The Theory of Tikhonov Regularization for Fredholm Equations of the First

Kind. London: Pitman.

Hall, P. and J.L. Horowitz (2005). Nonparametric methods for inference in the presence of

instrumental variables. Annals of Statistics, 33, 2904-2929.

Horowitz, J.L. (2005). Asymptotic normality of a nonparametric instrumental variables

estimator. Working paper, Department of Economics, Northwestern University, Evanston, IL.

Januszewski, S.I. (2002). The effect of air traffic delays on airline prices. Working paper,

Department of Economics, University of California at San Diego, La Jolla, CA.

Kress, R. (1999). Linear Integral Equations, 2nd edition. New York: Springer.

Ma, L. and R. Koenker (2006). Quantile regression methods for recursive structural equation

models. Journal of Econometrics, forthcoming.

Newey, W.K. and J.L. Powell (2003). Instrumental variable estimation of nonparametric models.

Econometrica, 71, 1565-1578.

Newey, W.K., J.L. Powell, and F. Vella (1999). Nonparametric estimation of triangular

simultaneous equations models. Econometrica, 67, 565-603.

Powell, J.L. (1983). The asymptotic normality of two-stage least absolute deviations estimators,

Econometrica. 50, 1569-1575.

Tikhonov, A.N. (1963a). Solution of incorrectly formulated problems and the regularization

method. Soviet Mathematics Doklady, 4, 1035-1038.

Tikhonov, A.N. (1963b). Regularization of incorrectly posed problems. Soviet Mathematics

Doklady, 4, 1624-1627.

26

Table 1.

Results of Monte Carlo Experiments

n

an

h

Bias2

Variance

MISE

200

0.5

0.5

1.0

1.0

0.5

0.8

0.5

0.8

0.0226

0.0795

0.0146

0.0247

0.0199

0.0220

0.0195

0.0203

0.0425

0.1015

0.0341

0.0450

800

0.5

0.5

1.0

1.0

0.5

0.8

0.5

0.8

0.0233

0.0816

0.0151

0.0258

0.0048

0.0054

0.0047

0.0050

0.0282

0.0870

0.0199

0.0308

Note: Bias2R, Variance, and MISE, respectively,

are Monte CarloR approxiR1

1

1

2

mations to 0 (E[ĝ(x)] − g(x)) dx, 0 (ĝ(x) − E[ĝ(x)])2 dx, and 0 (ĝ(x) −

g(x))2 dx.

27

Figure 1: The Density of W

28

Figure 2: The Density of X Conditional on W

29

Figure 3: The Density of U Conditional on X and W

30

Figure 4: Monte Carlo Results for n = 200

31

Figure 5: Monte Carlo Results for n = 800

32