Classical Hypothesis Testing Theory Adapted from Alexander Senf

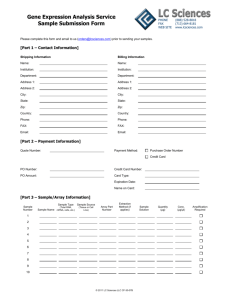

Review

• 5 steps of classical hypothesis testing (Ch. 3)

1. Declare null hypothesis H0 and alternate hypothesis H1

2. Fix a threshold α for Type I error (1% or 5%)

• Type I error (α): reject H0 when it is true

• Type II error (β): accept H0 when it is false

3. Determine a test statistic

• a quantity calculated from the data

4. Determine what observed values of the test statistic should lead to rejection of H0

• Significance point K (determined by α)

5. Test to see if observed data is more extreme than significance point K

• If it is, reject H0

• Otherwise, accept H0

7/31/2008

Overview of Ch. 9

– Simple Fixed-Sample-Size Tests

– Composite Fixed-Sample-Size Tests

– The -2 log λ Approximation

– The Analysis of Variance (ANOVA)

– Multivariate Methods

– ANOVA: the Repeated Measures Case

– Bootstrap Methods: the Two-sample t-test

– Sequential Analysis


Simple Fixed-Sample-Size Tests


The Issue

• In the simplest case, everything is specified

– Probability distribution of H0 and H1

• Including all parameters

– α (and K)

– But: β is left unspecified

• It is desirable to have a procedure that

minimizes β given a fixed α

– This would maximize the power of the test

• 1-β, the probability of rejecting H0 when H1 is true


Most Powerful Procedure

• Neyman-Pearson Lemma

– States that the likelihood-ratio (LR) test is the most powerful test for a given α

– The LR is defined as:

$$\mathrm{LR} = \frac{f_1(X_1)\, f_1(X_2) \cdots f_1(X_n)}{f_0(X_1)\, f_0(X_2) \cdots f_0(X_n)}$$

– where

• f0, f1 are completely specified density functions for H0,H1

• X1, X2, … Xn are iid random variables


Neyman-Pearson Lemma

– H0 is rejected when LR ≥ K

– With a constant K chosen such that:

P(LR ≥ K when H0 is true) = α

– Let’s look at an example using the Neyman-Pearson Lemma!

– Then we will prove it.

Example

• Basketball players seem to be

taller than average

– Use this observation to formulate

our hypothesis H1:

• “Tallness is a factor in the

recruitment of KU basketball

players”

– The null hypothesis, H0, could be:

• “No, the players on KU’s team are just of average height compared to the population in the U.S.”

• “Average height of the team and the population in general is the same”

Example

• Setup:

– Average height of males in the US: 5'9½"

– Average height of KU players in 2008: 6'4½"

• Assumption: both populations are normally distributed, centered on their respective averages (μ0 = 69.5 in, μ1 = 76.5 in), with σ = 2

$$f_0(x) = \frac{1}{2\sqrt{2\pi}}\, e^{-\frac{(x-69.5)^2}{8}}, \qquad f_1(x) = \frac{1}{2\sqrt{2\pi}}\, e^{-\frac{(x-76.5)^2}{8}}$$

• Sample size: 3

– Choose α: 5%

Example

• The two populations:

[Figure: the densities f0 and f1, plotted as p against height (inches)]

Example

– Our test statistic is the Likelihood Ratio, LR

$$\Lambda(x) = \frac{f_1(x_1)\, f_1(x_2)\, f_1(x_3)}{f_0(x_1)\, f_0(x_2)\, f_0(x_3)} = \prod_{i=1}^{3} \frac{e^{-\frac{(x_i-76.5)^2}{8}}}{e^{-\frac{(x_i-69.5)^2}{8}}} = e^{\frac{1}{8}\sum_{i=1}^{3}\left[(x_i-69.5)^2-(x_i-76.5)^2\right]}$$

– Now we need to determine a significance point K at which we can reject H0, given α = 5%

• P(Λ(x) ≥ K | H0 is true) = 0.05, determine K

Example

– So we just need to solve for K’ and calculate K:

$$\int_{K_1'}^{\infty}\int_{K_2'}^{\infty}\int_{K_3'}^{\infty} f_0(x_1)\, f_0(x_2)\, f_0(x_3)\, dx_1\, dx_2\, dx_3 = 0.05$$

• How to solve this? Well, we only need one set of values to calculate K, so let’s pick two and solve for the third:

$$\int_{68}^{\infty}\int_{71}^{\infty}\int_{K_3'}^{\infty} f_0(x_1)\, f_0(x_2)\, f_0(x_3)\, dx_1\, dx_2\, dx_3 = 0.05$$

• We get one result: K3’ = 71.0803

Example

– Then we can just plug it in to Λ and calculate K:

$$K = e^{\frac{1}{8}\sum_{i=1}^{3}\left[(K_i'-69.5)^2-(K_i'-76.5)^2\right]}$$

$$= e^{\frac{1}{8}\left[(68-69.5)^2-(68-76.5)^2+(71-69.5)^2-(71-76.5)^2+(71.0803-69.5)^2-(71.0803-76.5)^2\right]} = 1.663 \times 10^{-7}$$

Example

– With the significance point K = 1.663 × 10^-7 we can now test our hypothesis based on observations:

• E.g.: Sasha = 83 in, Darrell = 81 in, Sherron = 71 in

$$\Lambda(X = \{83, 81, 71\}) = e^{\frac{1}{8}\sum_{i=1}^{3}\left[(X_i-69.5)^2-(X_i-76.5)^2\right]} = 1.446 \times 10^{12}$$

• 1.446 × 10^12 > 1.663 × 10^-7

• Therefore, we reject H0 in favor of H1: the data support the hypothesis that tallness is a factor in the recruitment of KU basketball players.
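The arithmetic of this example can be checked numerically. The following is a minimal sketch, not part of the original slides: the helper name `log_lr` and the use of `statistics.NormalDist` are choices made here. It reproduces Λ for the observed heights and the quoted K. As a cross-check, it also derives a significance point from the fact that Λ depends on the data only through the sample sum, which under H0 is N(3·69.5, 3·σ²). That route is an assumption of this sketch and gives a different K from the construction in the slides; the observed Λ exceeds both.

```python
import math
from statistics import NormalDist

MU0, MU1, SIGMA = 69.5, 76.5, 2.0   # values assumed in the example

def log_lr(xs):
    """log Λ(x) = (1 / (2σ²)) Σ [(x_i − μ0)² − (x_i − μ1)²]."""
    return sum((x - MU0) ** 2 - (x - MU1) ** 2 for x in xs) / (2 * SIGMA ** 2)

# Observed heights from the example (inches).
observed = [83, 81, 71]
lr_observed = math.exp(log_lr(observed))

# Significance point as computed in the slides, from the points (68, 71, 71.0803).
k_slides = math.exp(log_lr([68, 71, 71.0803]))

# Cross-check (assumption of this sketch): Λ is increasing in the sample sum,
# so P(Λ ≥ K | H0) = α is equivalent to P(sum ≥ c | H0) = α.
n, alpha = 3, 0.05
sum_under_h0 = NormalDist(mu=n * MU0, sigma=SIGMA * math.sqrt(n))
c = sum_under_h0.inv_cdf(1 - alpha)
# log Λ is linear in the sum: [(μ1 − μ0)·sum − n(μ1² − μ0²)/2] / σ²
k_via_sum = math.exp(((MU1 - MU0) * c - n * (MU1 ** 2 - MU0 ** 2) / 2) / SIGMA ** 2)

print(f"LR(observed) = {lr_observed:.3e}")
print(f"K (slides)   = {k_slides:.3e}")
print(f"K (via sum)  = {k_via_sum:.3e}")
```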

Neyman-Pearson Proof

• Let A define the region in the joint range of X1, X2, … Xn such that LR ≥ K. A is the critical region.

– If A is the only critical region of size α we are done

$$\int_A L(H_0) = \int_A f_0(u_1)\, f_0(u_2) \cdots f_0(u_n)\, du_1\, du_2 \cdots du_n$$

– Let’s assume another critical region of size α, defined by B

$$\int_B L(H_0) = \int_B f_0(u_1)\, f_0(u_2) \cdots f_0(u_n)\, du_1\, du_2 \cdots du_n$$

Proof

– H0 is rejected if the observed vector (x1, x2, …, xn) is in A or in B.

– Let A and B overlap in region C

– Power of the test: rejecting H0 when H1 is true

• The Power of this test using A is:

$$\int_A L(H_1) = \int_A f_1(u_1)\, f_1(u_2) \cdots f_1(u_n)\, du_1\, du_2 \cdots du_n$$

Proof

– Define: Δ = ∫A L(H1) − ∫B L(H1)

• The power of the test using A minus using B

$$\Delta = \int_A f_1(u_1) \cdots f_1(u_n)\, du_1 \cdots du_n - \int_B f_1(u_1) \cdots f_1(u_n)\, du_1 \cdots du_n$$

$$= \int_{A \setminus C} f_1(u_1) \cdots f_1(u_n)\, du_1 \cdots du_n - \int_{B \setminus C} f_1(u_1) \cdots f_1(u_n)\, du_1 \cdots du_n$$

• Where A\C is the set of points in A but not in C

• And B\C contains points in B but not in C

Proof

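– So, in A\C we have:

$$\frac{f_1(u_1) \cdots f_1(u_n)}{f_0(u_1) \cdots f_0(u_n)} \ge K \quad\Longrightarrow\quad f_1(u_1) \cdots f_1(u_n) \ge K f_0(u_1) \cdots f_0(u_n)$$

– While in B\C we have:

$$f_1(u_1) \cdots f_1(u_n) < K f_0(u_1) \cdots f_0(u_n)$$

Why? Points in B\C lie outside A, and A contains every point with LR ≥ K, so LR < K there.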

Proof

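– Thus

$$\Delta \ge \int_{A \setminus C} K f_0(u_1) \cdots f_0(u_n)\, du_1 \cdots du_n - \int_{B \setminus C} K f_0(u_1) \cdots f_0(u_n)\, du_1 \cdots du_n$$

$$= \int_{A} K f_0(u_1) \cdots f_0(u_n)\, du_1 \cdots du_n - \int_{B} K f_0(u_1) \cdots f_0(u_n)\, du_1 \cdots du_n$$

$$= K\alpha - K\alpha = 0$$

– Which implies that the power of the test using A is greater than or equal to the power using B.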

Composite Fixed-Sample-Size Tests


Not Identically Distributed

• In most cases, random variables are not identically distributed, at least not in H1

– This affects the likelihood function, L

– For example, H1 in the two-sample t-test is:

$$L = \prod_{i=1}^{m} \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{(x_{1i}-\mu_1)^2}{2\sigma^2}} \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{(x_{2i}-\mu_2)^2}{2\sigma^2}}$$

– Where μ1 and μ2 are different

Composite

– Further, the hypotheses being tested do not

specify all parameters

– They are composite

– This chapter only outlines aspects of composite

test theory relevant to the material in this book.


Parameter Spaces

– The set of values the parameters of interest can take

– Null hypothesis: parameters in some region ω

– Alternate hypothesis: parameters in Ω

– ω is usually a subspace of Ω

• Nested hypothesis case

– Null hypothesis nested within alternate hypothesis

– This book focuses on this case

• “if the alternate hypothesis can explain the data

significantly better we can reject the null hypothesis”


λ Ratio

• Optimality theory for composite tests suggests this as a desirable test statistic:

$$\lambda = \frac{L_{\max}(\omega)}{L_{\max}(\Omega)}$$

• Lmax(ω): maximum likelihood when parameters are confined to the region ω

• Lmax(Ω): maximum likelihood when parameters are confined to the region Ω, defined by H1

• H0 is rejected when λ is sufficiently small (how small is determined by the Type I error α)

Example: t-tests

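• The next slides calculate the λ-ratio for the two-sample t-test (with the likelihood)

$$L = \prod_{i=1}^{m} \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{(x_{1i}-\mu_1)^2}{2\sigma^2}} \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{(x_{2i}-\mu_2)^2}{2\sigma^2}}$$

– t-tests later generalize to ANOVA and T² tests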

Equal Variance Two-Sided t-test

• Setup

– Random variables X11,…,X1m in group 1 are Normally and Independently Distributed, NID(μ1, σ²)

– Random variables X21,…,X2n in group 2 are NID(μ2, σ²)

– X1i and X2j are independent for all i and j

– Null hypothesis H0: μ1 = μ2 (= μ, unspecified)

– Alternate hypothesis H1: μ1 and μ2 both unspecified

Equal Variance Two-Sided t-test

• Setup (continued)

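– σ² is unknown and unspecified in H0 and H1

• Is assumed to be the same in both distributions

– Region ω is:

$$\omega = \{\mu_1 = \mu_2,\ 0 < \sigma^2 < +\infty\}$$

– Region Ω is:

$$\Omega = \{-\infty < \mu_1 < +\infty,\ -\infty < \mu_2 < +\infty,\ 0 < \sigma^2 < +\infty\}$$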

Equal Variance Two-Sided t-test

• Derivation

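– H0: writing μ for the mean when μ1 = μ2, the maximum of the likelihood over ω is at

$$\hat\mu = \bar X = \frac{X_{11} + X_{12} + \cdots + X_{1m} + X_{21} + X_{22} + \cdots + X_{2n}}{m+n}$$

– And the estimate of the (common) variance σ² is

$$\hat\sigma_0^2 = \frac{\sum_{i=1}^{m}(X_{1i}-\bar X)^2 + \sum_{i=1}^{n}(X_{2i}-\bar X)^2}{m+n}$$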

Equal Variance Two-Sided t-test

– Inserting both into the likelihood function, L

$$L_{\max}(\omega) = \frac{1}{(2\pi\hat\sigma_0^2)^{\frac{m+n}{2}}}\, e^{-\frac{m+n}{2}}$$

Equal Variance Two-Sided t-test

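– Do the same thing for region Ω

$$\hat\mu_1 = \bar X_1 = \frac{X_{11} + X_{12} + \cdots + X_{1m}}{m}, \qquad \hat\mu_2 = \bar X_2 = \frac{X_{21} + X_{22} + \cdots + X_{2n}}{n}$$

$$\hat\sigma_1^2 = \frac{\sum_{i=1}^{m}(X_{1i}-\bar X_1)^2 + \sum_{i=1}^{n}(X_{2i}-\bar X_2)^2}{m+n}$$

– Which produces this likelihood function, L

$$L_{\max}(\Omega) = \frac{1}{(2\pi\hat\sigma_1^2)^{\frac{m+n}{2}}}\, e^{-\frac{m+n}{2}}$$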

Equal Variance Two-Sided t-test

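– The test statistic λ is then

$$\lambda = \frac{L_{\max}(\omega)}{L_{\max}(\Omega)} = \frac{(2\pi\hat\sigma_1^2)^{\frac{m+n}{2}}\, e^{-\frac{m+n}{2}}}{(2\pi\hat\sigma_0^2)^{\frac{m+n}{2}}\, e^{-\frac{m+n}{2}}} = \left(\frac{\hat\sigma_1^2}{\hat\sigma_0^2}\right)^{\frac{m+n}{2}}$$

• Numerator and denominator are the same function, just with different variances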

Equal Variance Two-Sided t-test

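– We can then use the algebraic identity

$$\sum_{i=1}^{m}(X_{1i}-\bar X)^2 + \sum_{i=1}^{n}(X_{2i}-\bar X)^2 = \sum_{i=1}^{m}(X_{1i}-\bar X_1)^2 + \sum_{i=1}^{n}(X_{2i}-\bar X_2)^2 + \frac{mn}{m+n}(\bar X_1 - \bar X_2)^2$$

– To show that

$$\lambda = \left(\frac{1}{1 + \frac{t^2}{m+n-2}}\right)^{\frac{m+n}{2}}$$

– Where t is (from Ch. 3)

$$T = \frac{(\bar X_1 - \bar X_2)\sqrt{mn}}{S\sqrt{m+n}}$$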

Equal Variance Two-Sided t-test

– t is the observed value of T

– S is defined in Ch. 3 as

$$S^2 = \frac{\sum_{i=1}^{m}(X_{1i}-\bar X_1)^2 + \sum_{i=1}^{n}(X_{2i}-\bar X_2)^2}{m+n-2}$$

– We can plot λ as a function of t (e.g. m+n=10):

[Figure: λ plotted against t]

Equal Variance Two-Sided t-test

– So, by the monotonicity argument, we can use t² or |t| instead of λ as test statistic

– Small values of λ correspond to large values of |t|

– Sufficiently large |t| lead to rejection of H0

– The H0 distribution of t is known

• t-distribution with m+n-2 degrees of freedom

– Significance points are widely available

• Once α has been chosen, values of |t| sufficiently large

to reject H0 can be determined
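The monotonicity argument can be seen numerically. This is a small illustrative sketch; the function name `lam` and the group sizes m = n = 5 are hypothetical choices made here, picked only so that m + n = 10 as in the plot. It evaluates λ = (1 + t²/(m+n−2))^(−(m+n)/2) on a few values of |t|.

```python
def lam(t, m, n):
    """λ as a function of the observed t, for group sizes m and n."""
    return (1.0 + t * t / (m + n - 2)) ** (-(m + n) / 2)

m, n = 5, 5                      # hypothetical group sizes, m + n = 10
ts = [0.0, 0.5, 1.0, 2.0, 3.0]   # values of |t| to evaluate
values = [lam(t, m, n) for t in ts]

for t, v in zip(ts, values):
    print(f"|t| = {t:3.1f}  ->  lambda = {v:.4f}")
```

λ equals 1 at t = 0 and decreases as |t| grows, so rejecting for small λ is the same as rejecting for large |t|.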

Equal Variance Two-Sided t-test

• Table of significance points of the t-distribution: http://www.socr.ucla.edu/Applets.dir/T-table.html

Equal Variance One-Sided t-test

• Similar to Two-Sided t-test case

– Different region Ω for H1:

• Means μ1 and μ2 are not simply different, but one is larger than the other: μ1 ≥ μ2

$$\Omega = \{\mu_1 \ge \mu_2,\ 0 < \sigma^2 < +\infty\}$$

• If $\bar{x}_1 \ge \bar{x}_2$ then maximum likelihood estimates are the same as for the two-sided case

Equal Variance One-Sided t-test

• If $\bar{x}_1 < \bar{x}_2$ then the unconstrained maximum of the likelihood is outside of Ω

• The unique unconstrained maximum is at $(\bar{x}_1, \bar{x}_2)$, implying that the maximum in Ω occurs at a boundary point of Ω

• At this point estimates of μ1 and μ2 are equal (both $\bar{x}$)

• At this point the likelihood ratio is 1 and H0 is not rejected

• Result: H0 is rejected in favor of H1 (μ1 ≥ μ2) only for sufficiently large positive values of t

Example - Revised

• This scenario fits with our original example:

– H1 is that the average height of KU basketball

players is bigger than for the general population

– One-sided test

– We could assume that we don’t know the

averages for H0 and H1

– We actually don’t know σ (I just guessed 2 in the

original example)


Example - Revised

• Updated example:

– Observation in group 1 (KU): X1 = {83, 81, 71}

– Observation in group 2: X2 = {65, 72, 70}

– Pick significance point for t from a table: tα = 2.132

• t-distribution, m+n-2 = 4 degrees of freedom, α = 0.05

– Calculate t with our observations

$$t = \frac{(78.3 - 69)\sqrt{9}}{5.2122\sqrt{6}} = \frac{27.9}{12.7673} = 2.185$$

– t > tα, so we can reject H0!
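The same calculation can be sketched in a few lines of Python. The function name `pooled_t` is a hypothetical helper introduced here; it implements T = (X̄1 − X̄2)√(mn) / (S√(m+n)) with the pooled S from Ch. 3. Without intermediate rounding the result is about 2.19, slightly above the 2.185 on the slide, which rounds the group 1 mean to 78.3.

```python
import math
from statistics import mean

def pooled_t(x1, x2):
    """Equal-variance two-sample t statistic."""
    m, n = len(x1), len(x2)
    xbar1, xbar2 = mean(x1), mean(x2)
    # Within-group sum of squares, pooled over both groups.
    w = sum((x - xbar1) ** 2 for x in x1) + sum((x - xbar2) ** 2 for x in x2)
    s = math.sqrt(w / (m + n - 2))
    return (xbar1 - xbar2) * math.sqrt(m * n) / (s * math.sqrt(m + n))

ku = [83, 81, 71]        # group 1 (KU)
others = [65, 72, 70]    # group 2
T_CRIT = 2.132           # one-sided 5% point for 4 degrees of freedom, as quoted above

t = pooled_t(ku, others)
print(f"t = {t:.3f}, reject H0: {t > T_CRIT}")
```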

Comments

• Problems that might arise in other cases

– The λ-ratio might not reduce to a function of a

well-known test statistic, such as t

– There might not be a unique H0 distribution of λ

– Fortunately, the t statistic is a pivotal quantity

• Independent of the parameters not prescribed by H0

– e.g. μ, σ

– For many testing procedures this property does

not hold


Unequal Variance Two-Sided t-test

• Identical to Equal Variance Two-Sided t-test

– Except: variances in group 1 and group 2 are no longer assumed to be identical

• Group 1: NID(μ1, σ1²)

• Group 2: NID(μ2, σ2²)

• With σ1² and σ2² unknown and not assumed identical

• Region ω = {μ1 = μ2, 0 < σ1², σ2² < +∞}

• Ω makes no constraints on values μ1, μ2, σ1², and σ2²

Unequal Variance Two-Sided t-test

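– The likelihood function of (X11, X12, …, X1m, X21, X22, …, X2n) then becomes

$$L = \prod_{i=1}^{m} \frac{1}{\sqrt{2\pi}\,\sigma_1}\, e^{-\frac{(x_{1i}-\mu_1)^2}{2\sigma_1^2}} \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi}\,\sigma_2}\, e^{-\frac{(x_{2i}-\mu_2)^2}{2\sigma_2^2}}$$

– Under H0 (μ1 = μ2 = μ), this becomes:

$$L = \prod_{i=1}^{m} \frac{1}{\sqrt{2\pi}\,\sigma_1}\, e^{-\frac{(x_{1i}-\mu)^2}{2\sigma_1^2}} \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi}\,\sigma_2}\, e^{-\frac{(x_{2i}-\mu)^2}{2\sigma_2^2}}$$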

Unequal Variance Two-Sided t-test

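– Maximum likelihood estimates $\hat\mu$, $\hat\sigma_1^2$ and $\hat\sigma_2^2$ satisfy the simultaneous equations:

$$\frac{\sum_{i=1}^{m}(x_{1i}-\hat\mu)}{\hat\sigma_1^2} + \frac{\sum_{i=1}^{n}(x_{2i}-\hat\mu)}{\hat\sigma_2^2} = 0$$

$$\hat\sigma_1^2 = \frac{\sum_{i=1}^{m}(x_{1i}-\hat\mu)^2}{m}, \qquad \hat\sigma_2^2 = \frac{\sum_{i=1}^{n}(x_{2i}-\hat\mu)^2}{n}$$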

Unequal Variance Two-Sided t-test

– These reduce to a cubic equation in $\hat\mu$

– Neither the λ ratio, nor any monotonic function of it, has a known probability distribution when H0 is true!

– This does not lead to any useful test statistic

• The t-statistic may be used as a reasonably close approximation

• However, its H0 distribution is still unknown, as it depends on the unknown ratio σ1²/σ2²

• In practice, a heuristic is often used (see Ch. 3.5)

The -2 log λ Approximation


• Used when the λ-ratio procedure does not

lead to a test statistic whose H0 distribution is

known

– Example: Unequal Variance Two-Sided t-test

• Various approximations can be used

– But only if certain regularity assumptions and

restrictions hold true


The -2 log λ Approximation

• Best known approximation:

– If H0 is true, -2 log λ has an asymptotic chi-square

distribution,

• with degrees of freedom equal to the difference between the numbers of parameters left unspecified by H1 and by H0.

• λ is the likelihood ratio

• “asymptotic” = “as the sample size → ∞”

– Provides an asymptotically valid testing procedure
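As a rough illustration, the approximation can be applied to the equal-variance two-sample model, where λ = (σ̂1²/σ̂0²)^((m+n)/2) and H1 leaves one more parameter unspecified than H0, giving 1 degree of freedom. This is a sketch under those assumptions, using the basketball observations. The helper names are hypothetical, and the chi-square tail for 1 degree of freedom is computed as erfc(√(x/2)) to stay within the standard library. With only six observations the asymptotic result should not be expected to be accurate.

```python
import math
from statistics import mean

def neg2_log_lambda(x1, x2):
    """-2 log λ for H0: μ1 = μ2 against unrestricted means, common variance."""
    pooled = list(x1) + list(x2)
    grand = mean(pooled)
    xbar1, xbar2 = mean(x1), mean(x2)
    ss_h0 = sum((x - grand) ** 2 for x in pooled)            # (m+n) · σ̂0²
    ss_h1 = (sum((x - xbar1) ** 2 for x in x1)
             + sum((x - xbar2) ** 2 for x in x2))            # (m+n) · σ̂1²
    return len(pooled) * math.log(ss_h0 / ss_h1)

def chi2_tail_1df(x):
    """P(chi-square with 1 degree of freedom > x)."""
    return math.erfc(math.sqrt(x / 2.0))

stat = neg2_log_lambda([83, 81, 71], [65, 72, 70])
p_value = chi2_tail_1df(stat)
print(f"-2 log lambda = {stat:.3f}, approximate p-value = {p_value:.4f}")
```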


The -2 log λ Approximation

– Restrictions:

• Parameters must be real numbers that can take on

values in some interval

• The maximum likelihood estimator is found at a turning

point of the function

– i.e. a “real” maximum, not at a boundary point

• H0 is nested in H1 (as in all previous slides)

– These restrictions are important in the proof

• I skip the proof…


The -2 log λ Approximation

• Instead:

– Our original basketball example, revised again:

• Let’s drop our last assumption, that the variance in the

population at large is the same as in the group of KU

basketball players.

• All we have left now are our observations and the

hypothesis that μ1 > μ2

– Where μ1 is the average height of Basketball players

• Observation in group 1 (KU): X1 = {83, 81, 71}

• Observation in group 2: X2 = {65, 72, 70}


Example – Revised Again

– Using the Unequal Variance One-Sided t-Test

– We get:

The Analysis of Variance (ANOVA)

• Probably the most frequently used hypothesis

testing procedure in statistics

• This section

– Derives the Sum of Squares

– Gives an outline of the ANOVA procedure

– Introduces one-way ANOVA as a generalization of

the two-sample t-test

– Two-way and multi-way ANOVA

– Further generalizations of ANOVA


Sum of Squares

• New variables (from Ch. 3)

– The two-sample t-test tests for equality of the

means of two groups.

– We could express the observations as:

$$X_{ij} = \mu_i + E_{ij}, \qquad i = 1, 2$$

– Where the Eij are assumed to be NID(0,σ²)

– H0 is μ1 = μ2

Sum of Squares

– This can also be written as:

$$X_{ij} = \mu + \alpha_i + E_{ij}, \qquad i = 1, 2$$

• μ could be seen as overall mean

• αi as deviation from μ in group i

– This model is overparameterized

• Uses more parameters than necessary

• Necessitates the requirement mα1 + nα2 = 0

• (always assumed imposed)

Sum of Squares

– We are deriving a test procedure similar to the

two-sample two-sided t-test

– Using |t| as test statistic

• Absolute value of the T statistic

– This is equivalent to using t2

• Because it’s a monotonic function of |t|

– The square of the t statistic (from Ch. 3)

$$T = \frac{(\bar X_1 - \bar X_2)\sqrt{mn}}{S\sqrt{m+n}}$$

Sum of Squares

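– …can, after algebraic manipulations, be written as F

$$F = \frac{B\,(m+n-2)}{W}$$

– where

$$\bar X_1 = \frac{\sum_{j=1}^{m} X_{1j}}{m}, \qquad \bar X_2 = \frac{\sum_{j=1}^{n} X_{2j}}{n}, \qquad \bar X = \frac{m\bar X_1 + n\bar X_2}{m+n}$$

$$B = \frac{mn}{m+n}(\bar X_1 - \bar X_2)^2 = m(\bar X_1 - \bar X)^2 + n(\bar X_2 - \bar X)^2$$

$$W = \sum_{j=1}^{m}(X_{1j}-\bar X_1)^2 + \sum_{j=1}^{n}(X_{2j}-\bar X_2)^2$$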

Sum of Squares

– B: between (among) group sum of squares

– W: within group sum of squares

– B + W: total sum of squares

• Can be shown to be:

$$\sum_{i=1}^{m}(X_{1i}-\bar X)^2 + \sum_{i=1}^{n}(X_{2i}-\bar X)^2$$

– Total number of degrees of freedom: m + n – 1

• Between groups: 1

• Within groups: m + n - 2

Sum of Squares

– This gives us the F statistic

$$F = \frac{B\,(m+n-2)}{W}$$

– Our goal is to test the significance of the

difference between the means of two groups

• B measures the difference

– The difference must be measured relative to the

variance within the groups

• W measures that

– The larger F is, the more significant the difference


The ANOVA Procedure

• Subdivide observed total sum of squares into

several components

– In our case, B and W

• Pick appropriate significance point for a

chosen Type I error α from an F table

• Compare the observed components to test

our hypothesis


F-Statistic

• Significance points depend on degrees of

freedom in B and W

– In our case, 1 and (m + n – 2)

• F table (α = 0.05): http://www.ento.vt.edu/~sharov/PopEcol/tables/f005.html

Comments

• The two-group case readily generalizes to any

number of groups.

• ANOVAs can be classified in various ways, e.g.

– fixed effects models

– mixed effects models

– random effects model

– Difference is discussed later

– For now we consider fixed effect models

• Parameter αi is fixed, but unknown, in group i

$$X_{ij} = \mu + \alpha_i + E_{ij}$$

Comments

• Terminology

– Although ANOVA contains the word ‘variance’

– What we actually test for is equality of means between the groups

• The different mean assumptions affect the variance,

though

• ANOVAs are special cases of regression

models from Ch. 8


One-Way ANOVA

• One-Way fixed-effect ANOVA

• Setup and derivation

– Like two-sample t-test for g number of groups

– Observations (ni observations in group i, i=1,2,…,g)

$$X_{i1}, X_{i2}, \ldots, X_{in_i}$$

– Using overparameterized model for X

$$X_{ij} = \mu + \alpha_i + E_{ij}, \qquad j = 1,2,\ldots,n_i, \quad i = 1,2,\ldots,g$$

– Eij assumed NID(0,σ²), Σniαi = 0, αi fixed in group i

One-Way ANOVA

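– Null Hypothesis H0 is: α1 = α2 = … = αg = 0

– Total sum of squares is

$$\sum_{i=1}^{g}\sum_{j=1}^{n_i}(X_{ij}-\bar X)^2$$

– This is subdivided into B and W

$$B = \sum_{i=1}^{g} n_i(\bar X_i - \bar X)^2, \qquad W = \sum_{i=1}^{g}\sum_{j=1}^{n_i}(X_{ij}-\bar X_i)^2$$

– with

$$\bar X_i = \frac{\sum_{j=1}^{n_i} X_{ij}}{n_i}, \qquad \bar X = \frac{\sum_{i=1}^{g}\sum_{j=1}^{n_i} X_{ij}}{N}, \qquad N = \sum_{i=1}^{g} n_i$$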

One-Way ANOVA

– Total degrees of freedom: N – 1

• Subdivided into dfB = g – 1 and dfW = N - g

– This gives us our test statistic F

$$F = \frac{B}{W} \cdot \frac{N-g}{g-1}$$

– We can now look in the F-table for these degrees of freedom to pick the significance point for F

– And calculate B and W from the observed data

– And accept or reject H0

Example

• Revisiting the Basketball example

– Looking at it as a One-Way ANOVA analysis

• Observation in group 1 (KU): X1 = {83, 81, 71}

• Observation in group 2: X2 = {65, 72, 70}

– Total Sum of Squares:

$$(73.66-83)^2 + (73.66-81)^2 + (73.66-71)^2 + (73.66-65)^2 + (73.66-72)^2 + (73.66-70)^2 = 239.3336$$

– B (between groups sum of squares)

$$B = \sum_{i=1}^{g} n_i(\bar X_i - \bar X)^2 = 3(78.33-73.66)^2 + 3(69-73.66)^2 = 130.57$$

Example

– W (within groups sum of squares)

$$W = \sum_{i=1}^{g}\sum_{j=1}^{n_i}(X_{ij}-\bar X_i)^2 = \left((83-78.33)^2 + (81-78.33)^2 + (71-78.33)^2\right) + \left((65-69)^2 + (72-69)^2 + (70-69)^2\right) = 108.667$$

– Degrees of freedom

• Total: N-1 = 5

• dfB = g – 1 = 2 - 1 = 1

• dfW = N – g = 6 – 2 = 4

Example

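– Table lookup for df 1 and 4 and α = 0.05:

– Critical value: F = 7.71

– Calculate F from our data:

$$F = \frac{B}{W} \cdot \frac{N-g}{g-1} = \frac{130.57}{108.667} \cdot \frac{6-2}{2-1} = 4.806$$

– So… 4.806 < 7.71

– With ANOVA we actually accept H0!

• Seems to be the large variance in group 1

• Note that F = t² here, and 7.71 = 2.776² is the square of the two-sided 5% point of t with 4 degrees of freedom, whereas the earlier rejection used the one-sided point 2.132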
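The one-way ANOVA computation above can be sketched as follows. The function name `one_way_anova` is a hypothetical helper introduced here, and the critical value 7.71 is the table value quoted in the example. Computed without intermediate rounding, B is about 130.67 and F about 4.81, close to the rounded figures on the slides.

```python
def one_way_anova(groups):
    """Return (B, W, F) for a list of groups of observations."""
    all_obs = [x for group in groups for x in group]
    n_total, g = len(all_obs), len(groups)
    grand_mean = sum(all_obs) / n_total
    group_means = [sum(group) / len(group) for group in groups]
    # Between-groups and within-groups sums of squares.
    b = sum(len(group) * (mu - grand_mean) ** 2
            for group, mu in zip(groups, group_means))
    w = sum((x - mu) ** 2
            for group, mu in zip(groups, group_means) for x in group)
    f = (b / w) * (n_total - g) / (g - 1)
    return b, w, f

F_CRIT = 7.71   # 5% point of F with 1 and 4 degrees of freedom (from the table)

b, w, f = one_way_anova([[83, 81, 71], [65, 72, 70]])
print(f"B = {b:.3f}, W = {w:.3f}, F = {f:.3f}, reject H0: {f > F_CRIT}")
```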

Same Example – with Excel

• Excel offers most of these tests built-in

Two-Way ANOVA

• Two-Way Fixed Effects ANOVA

• Overview only (in the scope of this book)

• More complicated setup; example:

– Expression levels of one gene in lung cancer patients

– a different risk classes

• E.g.: ultrahigh, very high, intermediate, low

– b different age groups

– n individuals for each risk/age combination


Two-Way ANOVA

– Expression levels (our observations): Xijk

• i is the risk class (i = 1, 2, …, a)

• j indicates the age group

• k corresponds to the individual in each group (k = 1, …, n)

– Each group is a possible risk/age combination

• The number of individuals in each group is the same, n

• This is a “balanced” design

• Theory for unbalanced designs is more complicated and

not covered in this book


Two-Way ANOVA

– The Xijk can be arranged in a two-way table; each entry is the number of individuals in that risk/age group (aka “cell”):

| Age group (j) | Risk category (i): 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | n | n | n | n |
| 2 | n | n | n | n |
| 3 | n | n | n | n |
| 4 | n | n | n | n |
| 5 | n | n | n | n |

Two-Way ANOVA

– The model adopted for each Xijk is

$$X_{ijk} = \mu + \alpha_i + \beta_j + \delta_{ij} + E_{ijk}, \qquad i = 1,2,\ldots,a, \quad j = 1,2,\ldots,b, \quad k = 1,2,\ldots,n$$

• Where Eijk are NID(0, σ²)

• The mean of Xijk is μ + αi + βj + δij

• αi is a fixed parameter, additive for risk class i

• βj is a fixed parameter, additive for age group j

• δij is a fixed risk/age interaction parameter

– Should be added if a possible group/group interaction exists

Two-Way ANOVA

– These constraints are imposed

• Σi αi = Σj βj = 0

• Σi δij = 0 for all j

• Σj δij = 0 for all i

– The total sum of squares is then subdivided into four groups:

• Risk class sum of squares

• Age group sum of squares

• Interaction sum of squares

• Within cells (“residual” or “error”) sum of squares

Two-Way ANOVA

– Associated with each sum of squares

• Corresponding degrees of freedom

• Hence also a corresponding mean square

– Sum of squares divided by degrees of freedom

– The mean squares are then compared using F

ratios to test for significance of various effects

• First – test for a significant risk/age interaction

• F-ratio used is ratio of interaction mean square and

within-cells mean square


Two-Way ANOVA

• If such an interaction is found, it may not be reasonable to test for significant risk or age differences

• Example, μ in two risk classes, two age groups:

– No evidence of interaction

| Age | Risk 1 | Risk 2 |
|---|---|---|
| 1 | 4 | 12 |
| 2 | 7 | 15 |

– Example of interaction

| Age | Risk 1 | Risk 2 |
|---|---|---|
| 1 | 4 | 15 |
| 2 | 11 | 6 |
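One quick way to tell the two sets of cell means in this example apart (4, 12, 7, 15 versus 4, 15, 11, 6) is the 2×2 interaction contrast μ11 − μ12 − μ21 + μ22. It is zero exactly when the means are additive in the two factors, that is, when all δij are zero. The sketch below is illustrative only; the function name `interaction_contrast` is a hypothetical helper.

```python
def interaction_contrast(table):
    """For a 2x2 table of cell means, return μ11 − μ12 − μ21 + μ22."""
    (m11, m12), (m21, m22) = table
    return m11 - m12 - m21 + m22

additive = [[4, 12], [7, 15]]      # second row is the first row shifted by 3
interacting = [[4, 15], [11, 6]]   # the row effect changes sign between columns

print(interaction_contrast(additive))      # 0: no interaction
print(interaction_contrast(interacting))   # -16: interaction present
```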

Multi-Way ANOVA

• One-way and two-way fixed effects ANOVAs

can be extended to multi-way ANOVAs

• Gets complicated

• Example: three-way ANOVA model:

$$X_{ijkm} = \mu + \alpha_i + \beta_j + \gamma_k + \delta_{ij} + \varepsilon_{ik} + \zeta_{jk} + \eta_{ijk} + E_{ijkm}$$

Further generalizations of ANOVA

• The 2^m factorial design

– A particular form of the one-way ANOVA

• Interactions between main effects

– m “factors” taken at two “levels”

• E.g. (1) Gender, (2) Tissue (lung, kidney), and (3) status (affected, not affected)

– 2^m possible combinations of levels/groups

– Can test for main effects and interactions

– Need replicated experiments

• n replications for each of the 2^m experiments

Further generalizations of ANOVA

– Example, m = 3, denoted by A, B, C

• 8 groups, {abc, ab, ac, bc, a, b, c, 1}

• Write totals of n observations Tabc, Tab, …, T1

• The total between sum of squares can be subdivided into seven individual sums of squares

– Three main effects (A, B, C)

– Three pairwise interactions (AB, AC, BC)

– One triple-wise interaction (ABC)

– Example: Sum of squares for A, and for BC, respectively

$$\frac{(T_{abc} + T_{ab} + T_{ac} + T_{a} - T_{bc} - T_{b} - T_{c} - T_{1})^2}{8n}$$

$$\frac{(T_{abc} - T_{ab} - T_{ac} + T_{a} + T_{bc} - T_{b} - T_{c} + T_{1})^2}{8n}$$
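The sign pattern in these sums of squares follows a simple rule: each group total enters a contrast with the product, over the factors in the effect, of +1 if that factor is at its high level in the group and −1 otherwise. The sketch below assumes this standard rule for 2^m designs. The names `contrast_signs` and `sum_of_squares` and the example totals are hypothetical.

```python
def contrast_signs(effect, groups):
    """Sign of each group total in the contrast for `effect` (e.g. "A" or "BC")."""
    signs = []
    for group in groups:
        sign = 1
        for factor in effect.lower():
            # +1 if the factor is at its high level in this group, else -1.
            sign *= 1 if factor in group else -1
        signs.append(sign)
    return signs

def sum_of_squares(effect, totals, n):
    """Squared contrast of the group totals divided by (number of groups × n)."""
    groups = list(totals)
    signs = contrast_signs(effect, groups)
    contrast = sum(s * totals[g] for s, g in zip(signs, groups))
    return contrast ** 2 / (len(groups) * n)

GROUPS = ["abc", "ab", "ac", "a", "bc", "b", "c", "1"]
# Hypothetical totals of n = 2 observations per group, for illustration only.
totals = dict(zip(GROUPS, [30, 28, 27, 29, 20, 21, 19, 22]))

print(contrast_signs("A", GROUPS))
print(contrast_signs("BC", GROUPS))
print(sum_of_squares("A", totals, n=2))
```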

Further generalizations of ANOVA

– If m ≥ 5 the number of groups becomes large

– Then the total number of observations, n·2^m, is large

– It is possible to reduce the number of observations

by a process …

• Confounding

– Interaction ABC probably very small and not

interesting

– So, prefer a model without ABC, reduce data

– There are ANOVA designs for that


Further generalizations of ANOVA

• Fractional Replication

– Related to confounding

– Sometimes two groups cannot be distinguished

from each other, then they are aliases

• E.g. A and BC

– This reduces the need for experiments and data

– Ch. 13 talks more about this in the context of

microarrays


Random/Mixed Effect Models

• So far: fixed effect models

– E.g. Risk class, age group fixed in previous example

• Multiple experiments would use same categories

• But: what if we took experimental data on several

random days?

• The days in themselves have no meaning, but a “between days” sum of squares must be extracted

– What if the days turn out to be important?

– If we fail to test for it, the significance of our procedure is diminished.

– Days are a random category, unlike risk and age!

Random/Mixed Effect Models

• Mixed Effect Models

– If some categories are fixed and some are random

– Symbols used:

• Greek letters for fixed effects

• Uppercase Roman letters for random effects

• Example: two-way mixed effect model with

– Risk class a and days d and n values collected each day, the appropriate model is written:

$$X_{ikl} = \mu + \alpha_i + D_l + G_{il} + E_{ikl}$$

Random/Mixed Effect Models

• Random effect models have no fixed categories

• The details on the ANOVA analysis depend on

which effects are random and which are fixed

• In a microarray context (more in Ch. 13)

– There tend to be several fixed and several random

effects, which complicates the analysis

– Many interactions simply assumed zero


Multivariate Methods

ANOVA: the Repeated Measures Case

Bootstrap Methods: the Two-sample t-test

All skipped …

Sequential Analysis

• Sequential Probability Ratio

– Sample size not known in advance

– Depends on outcomes of successive observations

– Some of this theory is in BLAST

• Basic Local Alignment Search Tool

– The book focuses on discrete random variables

Sequential Analysis

– Consider:

• Random variable Y with distribution P(y;ξ)

• Tests usually relate to the value of parameter ξ

• H0: ξ is ξ0

• H1: ξ is ξ1

• We can choose a value for the Type I error α

• And a value for the Type II error β

• Sampling then continues while

$$A < \frac{P(y_1;\xi_1)\, P(y_2;\xi_1) \cdots P(y_n;\xi_1)}{P(y_1;\xi_0)\, P(y_2;\xi_0) \cdots P(y_n;\xi_0)} < B$$

Sequential Analysis

– A and B are chosen to correspond to an α and β

– Sampling continues until the ratio is less than A (accept H0) or greater than B (reject H0)

– Because these are discrete variables, boundary overshoot usually occurs

• We don’t expect to exactly get values α and β

– Desired values for α and β approximately achieved by using

$$A = \frac{\beta}{1-\alpha}, \qquad B = \frac{1-\beta}{\alpha}$$

Sequential Analysis

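– It is also convenient to take logarithms, which gives us:

$$\log\frac{\beta}{1-\alpha} < \sum_i \log\frac{P(y_i;\xi_1)}{P(y_i;\xi_0)} < \log\frac{1-\beta}{\alpha}$$

– Using

$$S_{1,0}(y) = \log\frac{P(y;\xi_1)}{P(y;\xi_0)}$$

– We can write

$$\log\frac{\beta}{1-\alpha} < \sum_i S_{1,0}(y_i) < \log\frac{1-\beta}{\alpha}$$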

Sequential Analysis

• Example: sequence matching

– H0: p0 = 0.25 (probability of a match is 0.25)

– H1: p1 = 0.35 (probability of a match is 0.35)

– Type I error α and Type II error β chosen 0.01

– Yi: 1 if there is a match at position i, otherwise 0

– Sampling continues while

$$\log\frac{1}{99} < \sum_i S_{1,0}(Y_i) < \log 99$$

– with

$$S_{1,0}(Y_i) = \log\frac{(0.35)^{Y_i}(0.65)^{(1-Y_i)}}{(0.25)^{Y_i}(0.75)^{(1-Y_i)}}$$

Sequential Analysis

– S can be seen as the support offered by Yi for H1

– The inequality can be re-written as

$$-9.581 < \sum_i (Y_i - 0.2984) < 9.581$$

– This is actually a random walk with step sizes 0.7016 for a match and -0.2984 for a mismatch
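The sequential procedure for the sequence-matching example can be sketched as a running sum of log-likelihood-ratio steps compared against log A and log B. This is an illustrative sketch, not code from the book: the function name `sprt` and its return format are hypothetical, and the boundaries use the approximations A = β/(1−α), B = (1−β)/α.

```python
import math

def sprt(matches, p0=0.25, p1=0.35, alpha=0.01, beta=0.01):
    """Run the sequential test on an iterable of 0/1 match indicators.

    Returns (decision, number_of_observations_used).
    """
    lower = math.log(beta / (1 - alpha))      # log A
    upper = math.log((1 - beta) / alpha)      # log B
    s_match = math.log(p1 / p0)               # S(1): support from a match
    s_mismatch = math.log((1 - p1) / (1 - p0))  # S(0): support from a mismatch

    total, n = 0.0, 0
    for n, y in enumerate(matches, start=1):
        total += s_match if y else s_mismatch
        if total >= upper:
            return "reject H0", n
        if total <= lower:
            return "accept H0", n
    return "undecided", n   # ran out of data before hitting a boundary

print(sprt([1] * 50))        # an unbroken run of matches
print(sprt([0] * 50))        # an unbroken run of mismatches
print(sprt([1, 0] * 5))      # too short to decide
```

Dividing the boundaries and the steps by S(1) − S(0) = log(1.4) − log(13/15) ≈ 0.4796 gives the rescaled walk with steps 0.7016 and −0.2984 and boundaries ±9.581.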

Sequential Analysis

• Power Function for a Sequential Test

– Suppose the true value of the parameter of

interest is ξ

– We wish to know the probability that H1 is

accepted, given ξ

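– This probability is the power Ρ(ξ) of the test

$$\mathrm{P}(\xi) \approx \frac{1 - \left(\frac{\beta}{1-\alpha}\right)^{\theta^*}}{\left(\frac{1-\beta}{\alpha}\right)^{\theta^*} - \left(\frac{\beta}{1-\alpha}\right)^{\theta^*}}$$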

Sequential Analysis

– Where θ* is the unique non-zero solution to θ in

$$\sum_{y \in R} P(y;\xi) \left(\frac{P(y;\xi_1)}{P(y;\xi_0)}\right)^{\theta} = 1$$

– R is the range of values of Y

– Equivalently, θ* is the unique non-zero solution to θ in

$$\sum_{y \in R} P(y;\xi)\, e^{\theta S_{1,0}(y)} = 1$$

– Where S is defined as before
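For the sequence-matching example, θ* can be found numerically and plugged into the power approximation. The sketch below is a minimal illustration under stated assumptions: it uses plain bisection (one possible root finder, not necessarily what the book uses), the helper names `theta_star` and `power` are hypothetical, and boundary overshoot is ignored. At p = p0 it gives θ* = 1 and power α; at p = p1 it gives θ* = −1 and power 1 − β.

```python
import math

P0, P1 = 0.25, 0.35        # match probabilities under H0 and H1 (from the example)
ALPHA, BETA = 0.01, 0.01

def theta_star(p, iterations=200):
    """Non-zero root of p·r1^θ + (1−p)·r0^θ = 1, found by bisection."""
    r_match = P1 / P0
    r_mismatch = (1 - P1) / (1 - P0)

    def g(theta):
        return p * r_match ** theta + (1 - p) * r_mismatch ** theta - 1.0

    # Mean step of the walk; the non-zero root has the opposite sign.
    drift = p * math.log(r_match) + (1 - p) * math.log(r_mismatch)
    if abs(drift) < 1e-6:
        raise ValueError("mean step is (nearly) zero: no usable non-zero root")
    sign = -1.0 if drift > 0 else 1.0

    lo, hi = 0.0, 1.0
    while g(sign * hi) <= 0.0:     # expand until the root is bracketed
        hi *= 2.0
    for _ in range(iterations):
        mid = (lo + hi) / 2.0
        if g(sign * mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return sign * (lo + hi) / 2.0

def power(p):
    """Approximate probability of accepting H1 when the true match probability is p."""
    theta = theta_star(p)
    a = BETA / (1 - ALPHA)
    b = (1 - BETA) / ALPHA
    return (1 - a ** theta) / (b ** theta - a ** theta)

for p in (0.25, 0.30, 0.35):
    print(f"p = {p:.2f}: theta* = {theta_star(p):+.4f}, power = {power(p):.4f}")
```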

Sequential Analysis

– This is very similar to Ch. 7 – Random Walks

– The parameter θ* is the same as in Ch. 7

– And it will be the same in Ch 10 – BLAST

– < skipping the random walk part >


Sequential Analysis

• Mean Sample Size

– The (random) number of observations until one or

the other hypothesis is accepted

– Find approximation by ignoring boundary

overshoot

– Essentially identical method used to find the mean

number of steps until the random walk stops


Sequential Analysis

– Two expressions are calculated for ΣiS1,0(Yi)

• One involves the mean sample size

• By equating both expressions, solve for mean sample size

$$\sum_i S_{1,0}(y_i) \approx (1 - \mathrm{P}(\xi)) \log\frac{\beta}{1-\alpha} + \mathrm{P}(\xi) \log\frac{1-\beta}{\alpha}$$

$$E(S_{1,0}(Y_i)) = E\left(\log\frac{P(Y_i;\xi_1)}{P(Y_i;\xi_0)}\right) = \sum_{y \in R} P(y;\xi) \log\frac{P(y;\xi_1)}{P(y;\xi_0)}$$

Sequential Analysis

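– So, the mean sample size is:

$$\frac{(1 - \mathrm{P}(\xi)) \log\frac{\beta}{1-\alpha} + \mathrm{P}(\xi) \log\frac{1-\beta}{\alpha}}{\sum_{y \in R} P(y;\xi) \log\frac{P(y;\xi_1)}{P(y;\xi_0)}}$$

– Both numerator and denominator depend on Ρ(ξ), and so also on θ*

– A generalization applies if the true distribution Q(y) of Y differs from both the H0 and H1 distributions (relevant to BLAST)

$$\frac{(1 - \mathrm{P}(\xi)) \log\frac{\beta}{1-\alpha} + \mathrm{P}(\xi) \log\frac{1-\beta}{\alpha}}{\sum_{y \in R} Q(y) \log\frac{P(y;\xi_1)}{P(y;\xi_0)}}$$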

Sequential Analysis

• Example

– Same sequence matching example as before

• H0: p0 = 0.25 (probability of a match is 0.25)

• H1: p1 = 0.35 (probability of a match is 0.35)

• Type I error α and Type II error β chosen 0.01

– Mean sample size equation is:

$$\frac{9.190\,\mathrm{P}(p) - 4.595}{p \log\frac{7}{5} + (1-p) \log\frac{13}{15}}$$

– Mean sample size when H0 is true: 194

– Mean sample size when H1 is true: 182
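The two mean sample sizes can be reproduced directly from the general formula. This is a sketch under the same approximations (no boundary overshoot). The function name `mean_sample_size` is a hypothetical helper, and the power values passed in are Ρ = α when H0 is true and Ρ = 1 − β when H1 is true.

```python
import math

def mean_sample_size(p, power, p0=0.25, p1=0.35, alpha=0.01, beta=0.01):
    """Approximate mean number of observations when the true match probability is p."""
    log_a = math.log(beta / (1 - alpha))
    log_b = math.log((1 - beta) / alpha)
    numerator = (1 - power) * log_a + power * log_b
    # Mean of one step S(Y) under the true match probability p.
    mean_step = p * math.log(p1 / p0) + (1 - p) * math.log((1 - p1) / (1 - p0))
    return numerator / mean_step

n_h0 = mean_sample_size(0.25, power=0.01)   # H0 true: power equals alpha
n_h1 = mean_sample_size(0.35, power=0.99)   # H1 true: power equals 1 - beta
print(f"H0 true: {n_h0:.1f} observations, H1 true: {n_h1:.1f} observations")
```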

Sequential Analysis

• Boundary Overshoot

– So far we assumed no boundary overshoot

– In practice, though, there will almost always be some overshoot

• Exact Type I and Type II errors different from α and β

– Random walk theory can be used to assess how

significant the effects of boundary overshoot are

– It can be shown that the sum of Type I and Type II

errors is always less than α + β (also individually)

– BLAST deals with this in a novel way -> see Ch. 10
