
Yosef Cohen

Evolutionary Distributions

November 22, 2007

Copyright © 2007, Yosef Cohen

Consistency is not a virtue, coherency is. I have known extremely incoherent men who were extremely consistent.

Preface

Evolutionary distributions (ED) are a special kind of partial differential equations (PDE).¹ They incorporate the processes that lead to population growth, such as immigration and birth, and to decline, such as emigration and death. Declines are subject to natural selection; the latter is influenced by the environment and by interactions with coevolving organisms. Growth is subject to mutations (random or otherwise). An open system of ED represents a collection of functionally distinct ED (primary producers, primary consumers, prey, predators and so on) along with environmental input such as solar radiation, temperature (as in global warming) and so on.

Within an ED, ecological interactions may involve the usual interactions with “your own kind”; e.g., collaboration, competition, cannibalism and parasitism. Between ED, interactions may involve predator-prey, parasitism and so on.

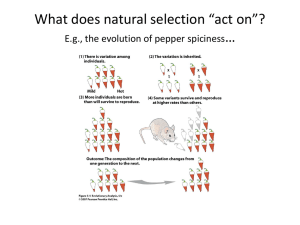

The quintessential premise of ED is that evolution by natural selection acts directly on phenotypes and only indirectly on genotypes: a hemophiliac dies because he bleeds to death, not because he owns (or is owned by) some genetic makeup. Turning common belief on its head, and contrary to what Richard Dawkins professed in his groundbreaking The Selfish Gene (Dawkins, 1990), from our perspective genes serve phenotypes, not the other way around. ED present us with the opportunity to supplement, and in some cases do away with, evolutionary games as a means to study evolutionary ecology.

Engaging with a comprehensive theory of ED (in the context of evolutionary theory) requires a solid understanding of the potential behavior of solutions of ED, which may be hyperbolic, elliptic or parabolic systems of PDE. In most cases, the PDE models of the evolutionary process result in semilinear or, at worst, quasilinear systems of PDE. We shall not encounter fully nonlinear ED.

¹ All acronyms are used in both singular and plural.


We begin with a motivating example (Chapter 1). We then provide an almost self-contained introduction to PDE (Chapters 2, 3 and 4). To make the subject matter accessible, we introduce PDE at an intuitive level as much as possible. This means that some of the material in the introductory chapters may at first seem irrelevant. Yet, if you are not familiar with PDE, the introductory chapters will hopefully motivate you to delve further into the subject.

Solutions of PDE exhibit a rich range of behaviors. Once you go over the introductory chapters, you should be able to judge for yourself how useful (or not) ED are for understanding evolution by natural selection.

After the introduction to PDE (which, of course, you may skip if you are familiar with the subject), we move on to the analysis of the consequences of ED (single ED and systems of ED) with respect to ecological interactions such as competition, predation, parasitism and so on. The guiding principle is biological “reality” (or otherwise). Consequently, we will not be able to solve most of the ED we discuss analytically. So, we are going to sacrifice generality for biological reality and thus rely on numerical solutions. We shall assume that, for the most part, if a real (co-)evolutionary system is inadequately encapsulated in a mathematical framework (as is the case, for example, for ill-posed Cauchy problems), then attempting numerical solutions will fail. Therefore, we shall not be preoccupied with existence problems. If a solution to an ED does not exist, then the mathematical model is nonsense.

A quick look at the Table of Contents reveals the organization of this work. All of the results with respect to PDE are well known. Most of the results with respect to ED are new in the sense that they have not been published before.

In all honesty, it is not possible to pinpoint the prerequisite background for complete comprehension of the material in this book. Much of it depends on your abilities, background and, most of all, level of commitment. Pursuing the subject matter without exposure to advanced calculus and ordinary differential equations (ODE) will probably require more than one reading. You may find consolation in the fact that, other than high school (but then, in my time high schools were different), I have no formal training in mathematics.

The idea of ED resulted from long discussions about evolutionary games and my dissatisfaction with some of their aspects. These discussions spanned a period of about 10 years, during which I collaborated with Tom Vincent and Joel Brown on evolutionary games. It was in the summer of 2003, when we met at Itasca State Park in Minnesota (where the Mississippi River begins), that I finally decided to abandon my work on evolutionary games and follow the ED path. Were it not for the two of them, I do not know whether my work on ED would have happened.


Yosef Cohen

November 22, 2007

St. Paul, Minnesota

Contents

1 Motivation
1.1 A motivating example
1.1.1 The population model
1.1.2 Mutations
1.1.3 Selection
1.1.4 The evolutionary distribution
1.1.5 The evolution of efficiency
1.1.6 The evolution of efficiency with competition
1.1.7 Evolution through two phenotypic traits
1.1.8 Cooperation
1.1.9 Directional mutations
1.2 Evolutionary distributions in context
1.2.1 Phenotypes, genotypes and natural selection
1.2.2 Evolutionary ecology and evolutionary games
1.2.3 Stability
1.2.4 The origins of the theory of ED
1.2.5 The ordinary differential equations approach

2 Preliminaries
2.1 Vectors and matrices
2.1.1 Vectors
2.1.2 Matrices
2.1.3 Eigenvalues and eigenvectors
2.2 Derivatives
2.2.1 Partial derivatives
2.2.2 The tangent plane and the approximation formula
2.2.3 Critical points
2.3 Vector fields
2.3.1 Functions
2.3.2 Tangent and normal vectors
2.3.3 Gradients
2.3.4 Vector fields
2.3.5 Lagrange multipliers
2.4 Integrals
2.4.1 Multiple integrals
2.4.2 Line integrals
2.4.3 Surface integrals

3 Introduction to first-order PDE
3.1 Introduction
3.2 First-order PDE with two independent variables
3.2.1 Integral curves
3.2.2 Semilinear equations
3.2.3 Quasilinear equations
3.2.4 Solutions with discontinuous first derivatives
3.2.5 Weak solutions
3.2.6 Shocks
3.2.7 Nonuniqueness of weak solutions
3.3 First-order PDE with n independent variables
3.3.1 Characteristics
3.3.2 Conservation laws
3.3.3 Variable transformations
3.4 First-order systems with two independent variables
3.4.1 Cauchy data and characteristics
3.4.2 The Cauchy-Kowalevski theorem
3.4.3 The system ODE
3.4.4 Weak solutions
3.4.5 Shocks
3.4.6 Causality
3.4.7 Canonical form
3.5 First-order systems with n independent variables

4 Introduction to second-order PDE
4.1 Classes of scalar PDE
4.1.1 Linear
4.1.2 Semilinear
4.1.3 Quasilinear
4.2 Variable transformations
4.3 Distributions and Riemann functions
4.4 Semilinear PDE
4.4.1 Cauchy data
4.4.2 Characteristics
4.4.3 Types
4.4.4 Canonical forms
4.5 Semilinear hyperbolic equations
4.6 Semilinear elliptic equations
4.6.1 Poisson's equation
4.6.2 Maximum principle
4.6.3 Green's functions
4.7 Another approach to elliptic PDE
4.7.1 Self-adjoint operators and Green's identities
4.7.2 Well-posedness
4.7.3 Green's functions
4.7.4 Laplace's equation
4.7.5 Eigenvalues and eigenfunctions
4.8 Semilinear parabolic equations
4.8.1 Properties
4.8.2 Well-posed boundary data
4.8.3 Uniqueness
4.8.4 Maximum value theorem
4.8.5 Green's functions
4.8.6 Transforms
4.8.7 Similarity solutions
4.8.8 Another approach

5 Single evolutionary distributions
5.1 Construction of ED
5.1.1 Evolution
5.1.2 Single evolutionary distributions
5.1.3 Conservation law derivation
5.2 Steady state and stability
5.2.1 Steady state
5.3 ED-environment interactions
5.3.1 Evolution of exploited populations
5.3.2 Global climate change
5.3.3 Carrying capacity
5.3.4 Driven ED
5.4 Competition
5.5 Cooperation
5.6 Cannibalism
5.7 Parasitism
5.8 Directional mutations
5.9 Linked mutations
5.10 Discontinuities and shocks in ED
5.11 Assortative mating

6 Multiple evolutionary distributions
6.1 Conservation law derivation of multiple ED

References

Index

Notations

1 Motivation

We start with a motivating example and then discuss evolutionary distributions (ED). Section 1.1 is more than just an example. We will use the results of the example to advance some general ideas about evolution by natural selection and the role that ED might play in “rediscovering” Darwin’s work. If you are not familiar with partial differential equations (PDE), the presentation in this chapter might seem obscure on first reading. We shall explain things in detail in the following chapters.

1.1 A motivating example

Currently, there is much hoopla about the production of ethanol from agricultural crops. It supposedly reduces the energy dependence of oil-poor countries on oil-rich countries. For example, sugar beet pulp, otherwise of little economic value to farmers, can be ingested by microorganisms that then release methanol, which in turn may be transformed to ethanol. Ethanol presents an alternative to energy from fossil fuel.

Imagine a population of microorganisms in a well-mixed liquid mixture of sugar beet pulp. Suppose that the survival and reproduction of each microorganism depend mostly on a single phenotypic trait that affects the organism’s efficiency in converting sugar beet pulp to methanol and, consequently, the ability of the phenotype to multiply.¹ Denote this phenotypic trait by $x \in (0, 1)$, and assume that $x$ is inherited. The notation means that $x$ takes values in the interval $(0, 1)$. In the process of reproduction, the progeny inherit their progenitor’s value of $x$ with small variation. The variation happens because of small random mutations, which are manifested in changes in the microorganism’s efficiency in producing methanol. These mutations are a fact of life; they arise, for example, because ultraviolet radiation causes “errors” in DNA replication. We also assume that the efficiency of converting pulp to methanol affects the survival of the phenotypic value $x$. For us, what matters is the survival of values of $x$ in the population. The survival of an individual organism (phenotype) that carries a given value is of no consequence unless it is related to the survival of that value of $x$ in the population. The survival of specific values of $x$ in the population (carriers of these values are called phenotypes) comes about perhaps because efficient microorganisms reproduce faster than inefficient ones.

¹ Efficiency may be defined as units of energy produced per unit of energy consumed.

Because the phenotypic trait (the value of a particular $x$) (i) affects survival of the trait value (not necessarily of the organism itself), (ii) is inherited and (iii) is subject to random mutations, we call $x$ an adaptive trait. Next, we cast this story in a mathematical framework that mimics the process of evolution by natural selection.

1.1.1 The population model

There are two approaches to modeling the process described thus far: one discrete and the other continuous. In the discrete approach, we follow the fate of each individual microorganism at each small time increment, sum these fates and obtain population dynamics, a tedious and hardly useful approach in this case. The other approach is through a continuous approximation. Let $u(x, t)$ denote the density of phenotype $x$ at time $t$ in the microorganism population and write

$$u(x, t + \Delta t) = u(x, t) + \Delta t\,[\beta(\cdot) - \mu(\cdot)]$$

where $\Delta t$ is a short time interval, and $\beta(\cdot)$ and $\mu(\cdot)$ are rate functions of some arguments that result in growth and decline of the population density, respectively. When the decline function $\mu(\cdot)$ depends at least on $x$ and $u$ (written as $\mu(u, x)$), we call it the selection function. Rearranging, we obtain

$$\frac{u(x, t + \Delta t) - u(x, t)}{\Delta t} = \beta(\cdot) - \mu(\cdot).$$

We assume that the limit of the left-hand side as $\Delta t \to 0$ exists and equals the right-hand side. Therefore,

$$\frac{\partial}{\partial t}u(x, t) = \beta(\cdot) - \mu(\cdot)$$

(here $\partial u/\partial t$ denotes the partial derivative of $u$ with respect to $t$). It is well known that when populations are small (i.e., when resources are plentiful), they grow exponentially. So, to a first approximation, we define

$$\beta(u(x, t)) := r\,u(x, t)$$

(where $:=$ denotes equality by definition), where $r$, the growth coefficient when the population is small, is a constant. Assume that as the population grows, the microorganisms exhaust their supply of raw material to convert and also produce autotoxins. Again, to a first approximation, we may write

$$\mu(u(x, t)) = \tilde{\mu}(u(x, t))\,u(x, t) = \frac{r}{k}\,u(x, t)\,u(x, t) = \frac{r}{k}\,[u(x, t)]^2.$$

Here $k$ is the carrying capacity. Letting $u \equiv u(x, t)$ (where $\equiv$ denotes equality for all $x$ and $t$), we write

$$\partial_t u = ru - \frac{r}{k}u^2 \tag{1.1}$$

where $\partial_t u := \partial u/\partial t$. This is the most ubiquitous single-population model, the so-called logistic growth model.
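As a quick sanity check on (1.1), the sketch below integrates the logistic equation at a single, fixed phenotype $x$ using the forward-Euler update from which (1.1) was derived, and compares the result with the standard closed-form logistic solution. The values $r = 0.25$, $k = 1000$ and $u(0) = 100$ are illustrative assumptions (they echo the parameter values used later in this chapter), and the function names are hypothetical.

```python
import math


def logistic_exact(u0, r, k, t):
    """Closed-form solution of du/dt = r*u - (r/k)*u**2 with u(0) = u0."""
    return k / (1.0 + (k / u0 - 1.0) * math.exp(-r * t))


def logistic_euler(u0, r, k, t_end, dt):
    """Forward-Euler integration: u(t + dt) = u(t) + dt * (beta - mu)."""
    u = u0
    for _ in range(int(round(t_end / dt))):
        beta = r * u                # growth: beta(u) = r u
        mu = (r / k) * u * u        # decline: mu(u) = (r/k) u^2
        u = u + dt * (beta - mu)
    return u


# Illustrative values, assumed for this sketch.
r, k, u0, t_end = 0.25, 1000.0, 100.0, 40.0
approx = logistic_euler(u0, r, k, t_end, dt=0.01)
exact = logistic_exact(u0, r, k, t_end)
print(f"forward Euler: {approx:.3f}   closed form: {exact:.3f}")
```

Halving `dt` should roughly halve the gap between the two numbers, as expected for a first-order scheme.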

1.1.2 Mutations

Next, we assume that mutations (i) occur at birth, i.e., they are imposed on the growth term of (1.1); (ii) are small; and (iii) are random. Let $\eta$ be the mutation rate. Then, after each instant $\Delta t$, we have the following contributions to $u(x, t)$:

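$$\begin{aligned}
\text{no mutations} &= (1 - \eta)\,r\,u(x, t),\\
\text{mutations from } u(x + \Delta x, t) &= \tfrac{1}{2}\eta\,r\,u(x + \Delta x, t),\\
\text{mutations from } u(x - \Delta x, t) &= \tfrac{1}{2}\eta\,r\,u(x - \Delta x, t)
\end{aligned}$$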

where $\Delta x$ is small compared to $x$. So

$$r\,u(x, t) = (1 - \eta)\,r\,u(x, t) + \frac{1}{2}\eta r\,[u(x + \Delta x, t) + u(x - \Delta x, t)]. \tag{1.2}$$

Expanding in Taylor series around $x$, we obtain

$$u(x + \Delta x, t) = u(x, t) + \Delta x\,\partial_x u(x, t) + \frac{1}{2}(\Delta x)^2\,\partial_{xx} u(x, t) + o\big((\Delta x)^2\big)$$

and

$$u(x - \Delta x, t) = u(x, t) - \Delta x\,\partial_x u(x, t) + \frac{1}{2}(-\Delta x)^2\,\partial_{xx} u(x, t) + o\big((\Delta x)^2\big),$$

where $o\big((\Delta x)^2\big)$ stands for terms that vanish faster than $(\Delta x)^2$, i.e., $\lim_{\Delta x \to 0} o\big((\Delta x)^2\big)/(\Delta x)^2 = 0$, and $\partial_{xx}$ denotes the second partial derivative. Substituting into (1.2) and dropping the $o(\cdot)$ terms, which is justified for small $\Delta x$, we obtain

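$$r\,u(x, t) = (1 - \eta)\,r\,u(x, t) + \frac{1}{2}\eta r\Big[u(x, t) + \Delta x\,\partial_x u(x, t) + \frac{1}{2}(\Delta x)^2\,\partial_{xx} u(x, t) + u(x, t) - \Delta x\,\partial_x u(x, t) + \frac{1}{2}(-\Delta x)^2\,\partial_{xx} u(x, t)\Big]$$

which gives

$$r\,u(x, t) = r\Big(u(x, t) + \frac{1}{2}(\Delta x)^2\,\eta\,\partial_{xx} u(x, t)\Big).$$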

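With mutations added, (1.1) becomes

$$\partial_t u = r\left(u + \frac{(\Delta x)^2}{2}\,\eta\,\partial_{xx} u\right) - \frac{r}{k}u^2.$$

Absorbing $(\Delta x)^2/2$ into $\eta$ (in which case $\eta$ becomes very small), we write

$$\partial_t u = r\,(u + \eta\,\partial_{xx} u) - \frac{r}{k}u^2. \tag{1.3}$$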
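The step from (1.2) to (1.3) rests on the second-order Taylor expansion. The short numerical check below is a sketch using the arbitrary smooth profile $u(x) = \cos x$ (our choice, not the text's). It compares the exact net mutation contribution $\tfrac{1}{2}\eta[u(x+\Delta x) + u(x-\Delta x) - 2u(x)]$ (per unit $r$) with its approximation $\eta(\Delta x)^2\partial_{xx}u/2$; the relative error should shrink roughly like $(\Delta x)^2$.

```python
import math


def mutation_increment(u, x, dx, eta):
    """Net effect of mutations on the birth term in (1.2), per unit r:
    (1 - eta) u(x) + (eta / 2) [u(x + dx) + u(x - dx)] - u(x)."""
    return (1 - eta) * u(x) + 0.5 * eta * (u(x + dx) + u(x - dx)) - u(x)


def diffusion_term(u_xx, x, dx, eta):
    """Leading-order Taylor approximation: eta * dx**2 / 2 * u_xx(x)."""
    return eta * dx**2 / 2 * u_xx(x)


def u_xx(x):
    """Exact second derivative of the test profile cos(x)."""
    return -math.cos(x)


u = math.cos           # arbitrary smooth test profile
eta, x0 = 0.1, 1.0

rel_errors = []
for dx in (0.1, 0.05, 0.025):
    kernel = mutation_increment(u, x0, dx, eta)
    approx = diffusion_term(u_xx, x0, dx, eta)
    rel = abs(kernel - approx) / abs(approx)
    rel_errors.append(rel)
    print(f"dx = {dx:<6} kernel = {kernel:.4e}  approx = {approx:.4e}  rel. error = {rel:.1e}")
```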

Equation (1.3) is known as the Fisher reaction-diffusion equation. It has been analyzed extensively (see, for example, Murray, 2003) from a perspective different from ours. Rearranging and abstracting, we write (1.3) in the more general form

$$\partial_t u - b\,\partial_{xx} u = f(x, t, u), \tag{1.4}$$

where, for (1.3), $b = r\eta$ and $f(x, t, u) = ru - (r/k)u^2$. In the mathematical vernacular, if $f$ is nonlinear in $u$ and $b$ is positive (certainly the case here), then (1.4) is referred to as a second-order semilinear parabolic partial differential equation. If $f$ is linear in $u$, then (1.4) is said to be linear. Classifying PDE (e.g., as parabolic and semilinear) is useful because we can then say something about certain attributes of their solutions (e.g., existence) without further ado.

1.1.3 Selection

So far, (1.3) incorporates mutations. To add selection, we must admit that, at some time $t$, there is some advantage to being a phenotype $x$ as opposed to $y$, for $x \neq y$, in terms of transferring certain values of $x$ to progeny more than other values. This advantage depends not only on $x$ and $t$, but also on $u(x, t)$. The latter reflects the fact that selection is density dependent. One way to build in the advantage of being $x$ as opposed to $y$ is to assume that there is some value of $x$, say $\hat{x}$, at which the mortality of the phenotypes is at its minimum.

This is where the environment selects some phenotypes over others. We use the term selection to emphasize the differences in mortality rate among phenotypes because of differing values of their trait. If we suppose that selection is reflected in differing carrying capacities for different values of $x$, then one choice for $k$ in (1.3) is

$$k(x) = k_1 \exp\left[-\left(\frac{x - \hat{x}}{\sigma_x}\right)^2\right], \tag{1.5}$$

which we may refer to as a phenotype-specific carrying capacity. Equation (1.5) says: “If you are a phenotype with value $\hat{x}$, then your carrying capacity is at its maximum (and your mortality is at its minimum) compared to other phenotypes with values different from $\hat{x}$. Any deviation from $\hat{x}$ results in lower carrying capacity. The amount by which the carrying capacity declines depends on the magnitude of $\sigma_x$; the smaller it is, the larger the decline per unit of deviation from $\hat{x}$.” For this reason, we call $\sigma_x$ the phenotypic plasticity.

So in our example, we admit that increased efficiency in converting pulp to methanol comes at a price (say, vulnerability to autotoxins) and that there is some optimal value, $\hat{x}$. In other words, there is no “free lunch”. If there were, we would see evolution toward 100% efficiency, which contradicts (at least) the laws of thermodynamics. An alternative to (1.5) uses $|x - \hat{x}|$ instead of $(x - \hat{x})^2$; this is known as the double exponential. We can, if we so please, let $x := \sigma$, in which case phenotypic plasticity itself is an adaptive trait.
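Equation (1.5) and its double-exponential alternative are easy to tabulate. The sketch below evaluates both. Dividing $|x - \hat{x}|$ by $\sigma_x$ in the double exponential is our assumption (the text only says that $|x - \hat{x}|$ replaces $(x - \hat{x})^2$), and the values $k_1 = 1000$, $\hat{x} = \pi$ and $\sigma_x \in \{1, 2\}$ are illustrative.

```python
import math


def k_gaussian(x, k1, x_hat, sigma):
    """Phenotype-specific carrying capacity (1.5): k1 * exp(-((x - x_hat) / sigma)**2)."""
    return k1 * math.exp(-((x - x_hat) / sigma) ** 2)


def k_double_exponential(x, k1, x_hat, sigma):
    """Double-exponential alternative: |x - x_hat| / sigma replaces ((x - x_hat) / sigma)**2.
    Scaling by sigma is an assumption made for this sketch."""
    return k1 * math.exp(-abs(x - x_hat) / sigma)


k1, x_hat = 1000.0, math.pi
for sigma in (1.0, 2.0):
    x = x_hat + 1.0            # one unit of deviation from the optimum
    print(f"sigma = {sigma}: gaussian k = {k_gaussian(x, k1, x_hat, sigma):.1f}, "
          f"double exponential k = {k_double_exponential(x, k1, x_hat, sigma):.1f}")
```

With the smaller $\sigma_x$, the carrying capacity one unit away from $\hat{x}$ is lower, which is the sense in which a smaller phenotypic plasticity means a larger decline per unit of deviation.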

1.1.4 The evolutionary distribution

With mutations and selection influencing the density of phenotypes via the adaptive trait values, we have captured the essence of evolution by natural selection in

$$\partial_t u = r\,(u + \eta\,\partial_{xx} u) - \frac{r}{k(x)}u^2. \tag{1.6}$$

To obtain a solution, we must specify the data, which consist of initial and boundary conditions. For initial data, we specify

$$u(x, 0) = g(x)$$

where $g(x)$ is a known function. Boundary conditions arise because $x$ represents a physical quantity and, as such, is usually constrained. For example, body temperature cannot exceed about 42 °C because proteins denature (as in a hard-boiled egg). It cannot go below 0 °C because then water in the cells crystallizes and harms cell membranes. For our methanol-producing microorganisms, $x$, representing efficiency, must be between 0 and 1. We write this as $x \in (0, 1)$ (for an element $x$ and a set $Y$, $x \in Y$ says that $x$ is a member of $Y$). Once we add the boundary conditions at 0 and 1 for any $t \ge 0$, we have $x \in [0, 1]$ for all $t \ge 0$. The notation $(a, b)$ for $a < b$ says that the interval between $a$ and $b$ includes neither $a$ nor $b$. The interval $[a, b]$ includes both $a$ and $b$. These are called open and closed intervals, respectively. There are two corresponding half-open intervals, $(a, b]$ and $[a, b)$.

Initial and boundary conditions must be compatible in the sense that, at $t = 0$, the boundary conditions must agree with the initial condition $u(x, 0)$ at the boundaries; i.e.,

$$g(\underline{x}) = u(\underline{x}, 0), \qquad g(\overline{x}) = u(\overline{x}, 0) \tag{1.7}$$

where $\underline{x}$ and $\overline{x}$ are the lower and upper boundaries of $x$. In choosing the boundary conditions, we have a few alternatives. The most common are the so-called Dirichlet, Neumann and periodic boundary conditions. We write these boundary conditions as

$$\begin{aligned}
\text{Dirichlet:}\quad & u(\underline{x}, t) = c_1, \quad u(\overline{x}, t) = c_2,\\
\text{Neumann:}\quad & \partial_x u(\underline{x}, t) = \partial_x u(\overline{x}, t) = 0,\\
\text{Periodic:}\quad & u(\underline{x}, t) = u(\overline{x}, t).
\end{aligned}$$

This is not the most general way to write boundary conditions, but it suffices for our needs. In the case of the Neumann conditions, the derivatives are taken with respect to the outward normal (a directional derivative perpendicular to the tangent to the boundary at $\underline{x}$ and at $\overline{x}$). For our purpose, the Neumann boundary conditions are the most natural: they simply say that there is no flow across the boundaries. The Dirichlet boundary conditions are somewhat unnatural; there is no particular reason to believe that $u$ equals some fixed value on the boundaries. The periodic boundary conditions are useful in cases where we have reason to believe that the values of $u$ on both boundaries are equal. We shall mostly use the Neumann boundary conditions. Occasionally, and for (admittedly) numerical convenience, we shall use periodic boundary conditions. We invite those who prefer the Dirichlet or other boundary conditions (e.g., Robin) to follow the developments along a parallel track. It would be extremely interesting to find out whether different boundary conditions lead to substantially different conclusions about the outcome of the evolutionary processes involved.

Our fully specified model of evolution under natural selection, which we call the evolutionary distribution (ED), is

$$\begin{aligned}
\partial_t u &= r\,(u + \eta\,\partial_{xx} u) - \frac{r}{k(x)}u^2, \qquad x \in (\underline{x}, \overline{x}),\\
u(x, 0) &= g(x),\\
\partial_x u(\underline{x}, t) &= \partial_x u(\overline{x}, t) = 0
\end{aligned} \tag{1.8}$$

where $k(x)$ is given in (1.5). Because we use the Neumann boundary conditions, it will often be convenient to rescale $x$ to $x \in (0, 2\pi)$ and set $u(x, 0) = A + B\cos(x)$, where $A$ and $B$ are constants. Since $\partial_x u(x, 0) = -B\sin(x)$ vanishes at $x = 0$ and at $x = 2\pi$, this results in compatible Neumann boundary and initial conditions (cf. (1.7)).

Fig. 1.1: The Neumann boundary conditions must be compatible with the initial conditions. (The figure plots $u(x, 0)$ against $x$ and marks the slopes $\partial_x u(x, 0)$ at $x = \underline{x}$ and at $x = \overline{x}$.)

Figure 1.1 illustrates compatible boundary and initial conditions: at $t = 0$, we have $\partial_x u(x, 0) = 0$ at $\underline{x}$ and at $\overline{x}$. For $t > 0$, the solution of our PDE must continue to satisfy $\partial_x u(x, t) = 0$ at both ends.
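One common way to impose the Neumann conditions on a grid (an assumption here, not necessarily the discretization used for the figures in this chapter) is to mirror the solution across each boundary with ghost nodes. The sketch below builds that second difference, checks that the zero-flux ends leave the total density unchanged by diffusion (the discrete counterpart of no flow across the boundaries), and compares the end slopes of the compatible initial condition $A + B\cos x$ with those of $A + B\sin x$. The constants $A = 100$, $B = 20$ and the grid size are illustrative, and the function names are hypothetical.

```python
import numpy as np


def laplacian_neumann(u, h):
    """Second difference on a uniform grid with zero-flux (Neumann) ends.
    Mirror ghost nodes u[-1] := u[1] and u[n] := u[n-2] give the end formulas."""
    lap = np.empty_like(u)
    lap[1:-1] = (u[2:] - 2.0 * u[1:-1] + u[:-2]) / h**2
    lap[0] = 2.0 * (u[1] - u[0]) / h**2
    lap[-1] = 2.0 * (u[-2] - u[-1]) / h**2
    return lap


def trapezoid_total(v, h):
    """Trapezoid-rule integral of grid values v over the whole domain."""
    return h * (v.sum() - 0.5 * (v[0] + v[-1]))


def end_slopes(v, h):
    """One-sided slopes at the lower and upper boundaries."""
    return (v[1] - v[0]) / h, (v[-1] - v[-2]) / h


x = np.linspace(0.0, 2.0 * np.pi, 201)
h = x[1] - x[0]
A, B = 100.0, 20.0                 # illustrative constants
g_cos = A + B * np.cos(x)          # slope -B sin(x) vanishes at both ends
g_sin = A + B * np.sin(x)          # slope B cos(x) equals B at both ends

print("end slopes, A + B cos x:", end_slopes(g_cos, h))
print("end slopes, A + B sin x:", end_slopes(g_sin, h))
# Net change of total density due to diffusion alone (zero up to round-off).
print("net diffusive flux:", trapezoid_total(laplacian_neumann(g_sin, h), h))
```

The cancellation in the last line is exact algebraically: the interior second differences telescope, and the doubled end terms, taken with half trapezoid weight, cancel what remains.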

We will follow three ED: one with selection operating on phenotypic efficiency through the carrying capacity; one with selection operating on $u(x, t)$ through both the carrying capacity and competition; and one with two adaptive traits.

1.1.5 The evolution of efficiency

With the following parameter values

$$r = 0.25,\quad k_1 = 1\,000,\quad \eta = 5 \times 10^{-7},\quad \sigma_k = 2,\quad \underline{x} = 0,\quad \overline{x} = 2\pi,\quad \hat{x} = \pi,\quad T = 40,\quad g(x) = 100 \tag{1.9}$$

and data

$$u(x, 0) = g(x), \qquad \partial_x u(\underline{x}, t) = \partial_x u(\overline{x}, t) = 0,$$

we obtain the numerical solution illustrated in Figure 1.2.²

Fig. 1.2: Left: trajectory of the ED (1.8). Right: initial (horizontal line) and the stable fixed ED at T = 40.

The left panel shows the trajectory of the evolving distribution; the right panel shows the initial and the fixed stable distribution of phenotypes at $T = 40$. The density of the fittest phenotypes (with processing efficiency of 50%) is 1 998 (say, individuals per mm³).
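The chapter does not specify the numerical method behind Figure 1.2. As one possibility, here is a minimal method-of-lines sketch for (1.8) under stated assumptions: a uniform grid of 201 points, the mirrored second difference for the Neumann conditions, and forward-Euler time stepping with $\Delta t = 0.01$; $\sigma_k$ in (1.9) is taken to be the $\sigma_x$ of (1.5). It is not the author's solver, the names are hypothetical, and we do not claim it reproduces the densities quoted above.

```python
import numpy as np


def laplacian_neumann(u, h):
    """Second difference with mirror ghost nodes (zero-flux ends)."""
    lap = np.empty_like(u)
    lap[1:-1] = (u[2:] - 2.0 * u[1:-1] + u[:-2]) / h**2
    lap[0] = 2.0 * (u[1] - u[0]) / h**2
    lap[-1] = 2.0 * (u[-2] - u[-1]) / h**2
    return lap


def solve_ed(r=0.25, k1=1000.0, eta=5e-7, sigma_k=2.0, x_lo=0.0,
             x_hi=2.0 * np.pi, x_hat=np.pi, T=40.0, g0=100.0, n=201, dt=0.01):
    """Forward-Euler method-of-lines sketch for (1.8), defaults taken from (1.9)."""
    x = np.linspace(x_lo, x_hi, n)
    h = x[1] - x[0]
    k = k1 * np.exp(-((x - x_hat) / sigma_k) ** 2)    # carrying capacity (1.5)
    # Explicit diffusion with coefficient r*eta is stable only if dt <= h^2 / (2 r eta).
    if dt > h**2 / (2.0 * r * eta):
        raise ValueError("time step too large for explicit diffusion")
    u = np.full(n, g0)                                # initial data g(x) = g0
    for _ in range(int(round(T / dt))):
        du = r * (u + eta * laplacian_neumann(u, h)) - (r / k) * u**2
        u = u + dt * du
    return x, u, k


x, u, k = solve_ed()
i = int(np.argmax(u))
print(f"peak density {u[i]:.1f} at x = {x[i]:.4f} (x_hat = {np.pi:.4f})")
```

Because $r\eta$ is tiny here, the stability bound on $\Delta t$ is very loose and the reaction term dominates; in this sketch the density at each $x$ relaxes toward $k(x)$, so the peak sits at $\hat{x}$.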

For us, the fitness of a phenotype is a function of its density when the ED is stable. If the stable state is a fixed point, then fitness is simply the phenotype’s density. When we say phenotype’s density, we mean the density of individuals that carry a particular value of the phenotypic trait $x$. If the ED is stable but periodic, with period $\tau$, then the fitness of a phenotype is given by

$$\phi(x) = \int_t^{t+\tau} \tilde{u}(x, \zeta)\,d\zeta \tag{1.10}$$

where $\phi$ denotes fitness and $\tilde{u}$ denotes the stable ED. If the ED is chaotic, then once it enters its attractor, fitness is given by

$$\phi(x) = \int_t^{\infty} \tilde{u}(x, \zeta)\,d\zeta.$$

² We shall obtain an explicit solution to this ED later.

If the ED is unstable, then fitness is undefined, but instantaneous fitness is given by $u(x, t)$. From this quick introduction to fitness and the solution of (1.8), we conclude that at any time, fitness (or instantaneous fitness) is a function of $x$. Contrary to the traditional mathematical literature in evolutionary ecology (where ODE are used), we do not require that $\phi(x) = 0$ at a fixed stable equilibrium. In fact, phenotypes of different fitness can (and must) coexist.
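Given samples of a stable periodic ED over one period, (1.10) can be evaluated with the trapezoid rule. The sketch below does this for a made-up periodic solution $\tilde{u}(x, t) = a(x) + b(x)\sin(2\pi t/\tau)$ (a hypothetical example, not a solution of (1.8)), for which $\phi(x) = a(x)\,\tau$ exactly. The function name and all numerical values are illustrative.

```python
import numpy as np


def periodic_fitness(u_tx, dt):
    """Fitness (1.10): integral of u~(x, zeta) over one period, by the trapezoid rule.
    u_tx has shape (n_times, n_x) and samples one full period, endpoints included."""
    return dt * (u_tx.sum(axis=0) - 0.5 * (u_tx[0] + u_tx[-1]))


tau = 5.0                                   # hypothetical period
t = np.linspace(0.0, tau, 501)
dt = t[1] - t[0]
x = np.linspace(0.0, 2.0 * np.pi, 50)
a = 100.0 + 50.0 * np.cos(x)                # hypothetical time-averaged density
b = 10.0 * np.sin(x)                        # hypothetical oscillation amplitude
u_tx = a[None, :] + b[None, :] * np.sin(2.0 * np.pi * t[:, None] / tau)

phi = periodic_fitness(u_tx, dt)
print(np.max(np.abs(phi - a * tau)))        # deviation from the exact a(x) * tau
```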

Thus, we already see that ED give us a way to answer the following question: why do we see so many phenotypes in natural systems that may be stable? For example, mature trees in old-growth forests (wherever they still exist) come in a variety of heights, trunk diameters, shades of leaves (or needles) and so on. Some of these differences are produced by local random conditions and events, such as variation in soil nutrients. Others are maintained by genes at the service of phenotypes.

1.1.6 The evolution of efficiency with competition

Now consider competition among the microorganisms. We assume that similar phenotypes compete most for resources. Operationally, one may envision the microorganisms living in a mixture where there are small differences in the quality of the sugar beet pulp. Similar phenotypes exhaust the supply of the pulp that suits them best. Competition is mediated through mortality. So in (1.6), we replace $u^2$ with $F(u, x)\,u(x, t)$. Here, $F(\cdot)$ accounts for the competition between phenotypes. We now write

$$F(u, x) := k_2 \int_{\underline{x}}^{\overline{x}} \exp\left[-\left(\frac{x - \xi}{\sigma_F}\right)^2\right] u(\xi, t)\,d\xi, \qquad k_2 \ge 1. \tag{1.11}$$

The exponent takes its maximum when x = ξ. At time t, we integrate the competition between x and all phenotypes, weighted by the densities u(ξ, t) and u(x, t). Thus competition, and thereby selection, become density dependent.

Let

$$\operatorname{erf}(x) := \frac{2}{\sqrt{\pi}} \int_0^x \exp\left(-\xi^2\right) d\xi.$$

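Then

$$F(u, x) = k_2 \frac{\sigma_F \sqrt{\pi}}{2} \left[\operatorname{erf}\left(\frac{\overline{x}-x}{\sigma_F}\right) - \operatorname{erf}\left(\frac{\underline{x}-x}{\sigma_F}\right)\right] u(x, t).$$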
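A quick check of the erf expression, assuming the density is constant in ξ (the case in which the closed form and (1.11) coincide exactly). The helpers `F_quadrature` and `F_closed_form` are illustrative names; k2 = 100 and σF = 1 are the values used below for Figure 1.3, while the constant density 3.0 is arbitrary. The check also shows that F is smaller at the boundaries, where the integration range covers phenotypes on one side only.

```python
import math
import numpy as np

def F_quadrature(x, xi, u_xi, k2, sigma_F):
    """Trapezoidal approximation of (1.11) at trait value x, given u on the grid xi."""
    w = np.exp(-((x - xi) / sigma_F) ** 2) * u_xi
    h = xi[1] - xi[0]
    return k2 * h * (w[1:-1].sum() + 0.5 * (w[0] + w[-1]))

def F_closed_form(x, x_lo, x_hi, u_x, k2, sigma_F):
    """The erf expression for F(u, x), with the density evaluated at x."""
    bracket = math.erf((x_hi - x) / sigma_F) - math.erf((x_lo - x) / sigma_F)
    return k2 * sigma_F * math.sqrt(math.pi) / 2.0 * bracket * u_x

x_lo, x_hi, k2, sigma_F = 0.0, 2.0 * math.pi, 100.0, 1.0
xi = np.linspace(x_lo, x_hi, 20001)
u_const = np.full_like(xi, 3.0)          # arbitrary constant density
for x0 in (x_lo, 1.0, math.pi, x_hi):
    quad = F_quadrature(x0, xi, u_const, k2, sigma_F)
    closed = F_closed_form(x0, x_lo, x_hi, 3.0, k2, sigma_F)
    print(f"x = {x0:.3f}: quadrature {quad:.6f}, closed form {closed:.6f}")
```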

Now the ED (1.8) becomes

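$$\begin{aligned}
\partial_t u &= r\left(u + \eta\,\partial_{xx} u\right) - \frac{r F(x)}{k(x)} \left(u(x, t)\right)^2, \quad x \in (0, 2\pi),\\
u(x, 0) &= g(x),\\
\partial_x u(\underline{x}, t) &= \partial_x u(\overline{x}, t) = 0,
\end{aligned} \tag{1.12}$$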

where F(x) does not depend on u(x, t). We keep the same parameter values as in (1.9) and add

$$k_2 = 100, \quad \sigma_F = 1$$

($\sigma_F$ is the phenotypic plasticity with respect to competitive ability) and thus obtain Figure 1.3. To view the impact of competition on the ED, compare the left panels of Figures 1.2 and 1.3. Without competition, the most fit phenotypes reach a density of 1 998; with competition, they reach 11.28. In addition, the total density without competition is $5 \times 10^{7}$; with competition, it is 596 938 (all numerical results are approximate).

Fig. 1.3: Trajectory of the ED (left) and initial (horizontal line) and fixed stable distribution at T = 40.

Figure 1.3 reveals an interesting feature: the density of the phenotypes at the extremes (the most and least efficient microorganisms with regard to producing methanol) is higher than that of nearby phenotypes. Why? Because they are exposed to less competition than the phenotypes in the interior. Recall that we integrate from $\underline{x}$ to $\overline{x}$, so the phenotypes at the extreme left do not compete with phenotypes on their left, and those at the extreme right do not compete with phenotypes on their right. Hence, the density of phenotypes on the boundaries is less depressed than that of phenotypes in the interior. In fact, with a suitable choice of parameter values, one can obtain complete isolation of the extreme phenotypes from the rest of the phenotypes.


Given small mutations, they then become reproductively isolated (a kind of

sympatric speciation if you will).
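The following is a minimal method-of-lines sketch of (1.12), using centred differences in x, mirrored ghost nodes for the zero-flux boundaries and explicit Euler steps in time. Only k2 = 100, σF = 1, T = 40 and the domain (0, 2π) come from the text; r, η, the constant carrying capacity K standing in for k(x) and the flat initial condition g(x) = 1 are hypothetical, since (1.5) and (1.9) are not reproduced here, so the numbers it produces are not expected to match Figure 1.3. It does reproduce the qualitative boundary effect described above: the phenotypes at the edges end up denser than their near neighbours.

```python
import math
import numpy as np

# From the text: k2, sigma_F, T and the domain (0, 2*pi).
k2, sigma_F, T = 100.0, 1.0, 40.0
x_lo, x_hi = 0.0, 2.0 * math.pi
# Hypothetical placeholders for values set in (1.5)-(1.9), not reproduced here.
r, eta, K = 1.0, 1e-3, 1000.0        # K: constant carrying capacity k(x)
N, dt = 201, 0.01                    # grid size and explicit Euler time step

x = np.linspace(x_lo, x_hi, N)
dx = x[1] - x[0]
erf = np.vectorize(math.erf)
# F(x): erf form of the competition kernel without the factor u(x, t).
F = k2 * sigma_F * math.sqrt(math.pi) / 2.0 * (
    erf((x_hi - x) / sigma_F) - erf((x_lo - x) / sigma_F))
k = np.full(N, K)

u = np.ones(N)                       # g(x) = 1, a flat initial distribution
for _ in range(int(round(T / dt))):
    # Ghost nodes mirror the first interior neighbours: zero-flux boundaries.
    p = np.pad(u, 1, mode="reflect")
    u_xx = (p[2:] - 2.0 * p[1:-1] + p[:-2]) / dx**2
    u = u + dt * (r * (u + eta * u_xx) - r * F / k * u**2)

# Without the diffusion term the fixed point would be k(x) / F(x),
# which is largest at the boundaries, where F is smallest.
print(u[0], u[N // 2], u[-1])
```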

1.1.7 Evolution through two phenotypic traits

Consider two evolutionary traits, $x := [x_1, x_2]$, where $x_1$ is associated with the efficiency of processing sugar beet pulp to methanol and $x_2$ is associated with competitive ability (say, resistance to autotoxins). Assume that mutations on $x_1$, denoted by $\eta_1$, are independent of those on $x_2$, denoted by $\eta_2$. We now reconsider (1.1). The mutations into $r u(x_1, x_2, t)$ come from (with the assigned proportions):

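$$\frac{1}{2}\eta_1 \frac{1}{2}\eta_2\, u(x_1 - \Delta, x_2 - \Delta, t), \quad \frac{1}{2}\eta_1 (1 - \eta_2)\, u(x_1 - \Delta, x_2, t), \quad \frac{1}{2}\eta_1 \frac{1}{2}\eta_2\, u(x_1 - \Delta, x_2 + \Delta, t), \quad \ldots, \quad \frac{1}{2}\eta_1 \frac{1}{2}\eta_2\, u(x_1 + \Delta, x_2 + \Delta, t).$$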

Expanding all of these terms in u around $(x_1, x_2)$ and collecting terms, we obtain

$$\partial_t u = r\left(u + \sum_{i=1}^{2} \eta_i\, \partial_{x_i x_i} u\right) - \frac{r F(x_2)}{k(x_1)} u^2 \tag{1.13}$$

(with x in the appropriate set). To examine the ED, we use (1.5) with x replaced by $x_1$ and (1.11) with x replaced by $x_2$. Equation (1.13) with the data

$$\underline{x} := [\underline{x}_1, \underline{x}_2], \quad \overline{x} := [\overline{x}_1, \overline{x}_2], \quad u(x, 0) = g(x),$$
$$\partial_{x_i} u(\underline{x}_i, t) = \partial_{x_i} u(\overline{x}_i, t) = 0, \quad i = 1, 2$$

establishes our ED. We adopt the same parameter values as before with the appropriate substitutions for $x_1$ and $x_2$. Figure 1.4 illustrates the fixed stable ED. The density of the (π, π) phenotypes is now depressed (to 7.09) and the total population is $2.5931 \times 10^{6}$. The release from extra competition at the boundaries now has a more marked effect than when selection for both competition and carrying capacity is mediated by a single adaptive trait. The remarkable fact is that when we link selection for efficiency and for competitive ability to two different adaptive traits, the total density of phenotypes is larger than when both selective pressures are linked to a single trait. In other words, evolution by natural selection favors ED with multiple adaptive traits!
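The expansion behind (1.13) can be checked with a quadratic test function, for which the second-order Taylor expansion is exact. The sketch assumes that each trait mutates independently, moving one step Δ to either side with probability ηi/2 and staying put with probability 1 − ηi, so that a neighbour shifted in both traits carries weight (η1/2)(η2/2); the factor Δ²/2 is taken to be absorbed into ηi in (1.13). Function names and numerical values are illustrative. The mixed term in the test function would leak into the result if the diagonal weights did not cancel.

```python
import itertools

def mutation_weights(eta1, eta2):
    """Weights of the 3x3 neighbourhood feeding (x1, x2). Each trait mutates
    independently: one step left or right with probability eta_i / 2 each,
    no change with probability 1 - eta_i."""
    def p(eta, step):
        return 1.0 - eta if step == 0 else eta / 2.0
    return {(a, b): p(eta1, a) * p(eta2, b)
            for a, b in itertools.product((-1, 0, 1), repeat=2)}

def stencil_increment(u, x1, x2, delta, eta1, eta2):
    """Weighted sum of u over the neighbourhood, minus u(x1, x2)."""
    w = mutation_weights(eta1, eta2)
    total = sum(wt * u(x1 + a * delta, x2 + b * delta) for (a, b), wt in w.items())
    return total - u(x1, x2)

def u(x1, x2):
    # Quadratic test function with a mixed term 3 * x1 * x2.
    return x1**2 + 3.0 * x1 * x2 + 2.0 * x2**2

eta1, eta2, delta = 0.05, 0.02, 0.1
inc = stencil_increment(u, 0.7, -0.4, delta, eta1, eta2)
# Second-order Taylor prediction: (delta**2 / 2) * (eta1 * u_x1x1 + eta2 * u_x2x2),
# with u_x1x1 = 2 and u_x2x2 = 4 for this u; no mixed-derivative term.
expected = 0.5 * delta**2 * (eta1 * 2.0 + eta2 * 4.0)
```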

Let n be the cardinality (number of elements) of the set of adaptive traits. This set of traits, along with their boundaries, defines what we call the adaptive space. So each ED lives in a space of n + 2 dimensions (n for x, one for t and one for u). For a system of, say, m ED, the i-th living in an adaptive space of dimension $n_i$ (i = 1, . . ., m), we define the evolutionary space as the collection of m adaptive spaces along with m densities, $u_i(x, t)$. Therefore, the evolutionary space has $n_1 + \cdots + n_m + m + 1$ dimensions. The evolutionary space consists of m manifolds, all intersecting along t.

Fig. 1.4: Fixed stable ED (left) and cross cuts at $x_1 = 0$ and $2\pi$ (lower curve) and at $x_1 = \pi$ (upper curve).
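The dimension count can be written out directly. `evolutionary_space_dimension` is an illustrative helper that takes the list of adaptive-space dimensions n1, ..., nm; a single ED with n traits gives n + 2, as stated above.

```python
def evolutionary_space_dimension(adaptive_dims):
    """Dimension of the evolutionary space for m ED with adaptive-space
    dimensions n_1, ..., n_m: one axis per trait, one density axis per ED,
    and a single shared time axis."""
    m = len(adaptive_dims)
    return sum(adaptive_dims) + m + 1

print(evolutionary_space_dimension([2]))        # one ED with two traits: 4
print(evolutionary_space_dimension([1, 2, 3]))  # three coevolving ED: 10
```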

If one is willing to interpret multiple traits as a measure of diversity, then it is little wonder that evolution by natural selection results in ever more diverse organisms with respect to their number of adaptive traits. Often we refer to such organisms as “higher” or more complex compared to those with just a few adaptive traits. Of course, what leads to jumps in the dimensionality of ED (i.e., increases in the number of adaptive traits) remains an open question that must be considered in the context of evolution by natural selection. A jump in the dimensionality of the adaptive space may be likened to macroevolution. According to our framework, macroevolution occurs when a mutation, however small, is “novel” in the sense that it allows the phenotypes that carry it to adapt to selection pressures that had not previously been part of their repertoire of adaptive traits. Note that we do not require large mutations to effect changes in the dimensionality of the adaptive space (and thereby the dimensionality of the evolutionary space), which we call macroevolution.

Here is another tantalizing possibility. Recall the definition of fitness, e.g., as in (1.10). To keep the discussion simple, assume that the ED is stable and fixed. Does fitness increase monotonically with the dimensionality of the adaptive space? Or perhaps there is some dimensionality of the adaptive (and evolutionary) space at which fitness reaches a global maximum. In other words, you adapt, but not to everything, for at some point adapting to too many things might hurt your fitness. All of these possibilities raise the specter of an evolutionary space whose dimensionality is not fixed. This is in addition to the idea of an ever-changing landscape of the adaptive space itself, as defined,


for example, by Vincent and Brown (2005). We shall have more to say about

these possibilities later.

1.1.8 Cooperation

Let us modify (1.11) to

$$F(u, x) := k_2 \int_{\underline{x}}^{\overline{x}} \exp\left[\left(\frac{x-\xi}{\sigma_F}\right)^2\right] u(\xi, t)\, d\xi, \quad k_2 \ge 1. \tag{1.14}$$

Here, like phenotypes cooperate more than unlike phenotypes. Keep all parameters as before; just increase T to 1 000 and observe what happens (Figure 1.5). As opposed to the case of competition (Figure 1.4), now all phenotypes bunch up in the middle. Remarkably, the density is depressed compared to evolution with competition. Because the qualitative behavior of the numerical solution (e.g., bunching here or there) is not specific to the choice of parameter values, we have a result that holds generally within this model: phenotypes achieve higher fitness under competition than under cooperation. If the results of this example reflect a ubiquitous phenomenon, then it is no wonder that in Nature competition is more frequently observed than cooperation. This conclusion is, however, model-specific; it will be interesting to see whether it holds in other models.

Fig. 1.5: Fixed stable ED with selection on carrying capacity and cooperation.


1.1.9 Directional mutations

Let us revisit the case of a single trait with selection for carrying capacity and competition (Section 1.1.6). Assign $\eta_1$ to mutations to the left (to $x - \Delta$) and $\eta_2$ to mutations to the right. Then a Taylor series expansion results in

$$u - \Delta(\eta_1 - \eta_2)\,\partial_x u + \frac{1}{2}\Delta^2(\eta_1 + \eta_2)\,\partial_{xx} u.$$

Redefine $\zeta_1 := \Delta(\eta_1 - \eta_2)$ and $\zeta_2 := \frac{1}{2}\Delta^2(\eta_1 + \eta_2)$; now

$$\partial_t u = r\left(u - \zeta_1 \partial_x u + \zeta_2 \partial_{xx} u\right) - \frac{r}{k(x)} F(u, x)\, u.$$
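As with the two-trait case, a quadratic test function makes the directional expansion exact. The sketch assumes that the inflow into x is (1 − η1 − η2)u(x) + η1u(x − Δ) + η2u(x + Δ), which is one reading of the mutation directions that reproduces the signs above. The rates η1 = 0.05 and η2 = 0.015 are those quoted below; Δ, the evaluation point and the test polynomial are arbitrary, and the function names are illustrative.

```python
def zetas(eta1, eta2, delta):
    """zeta_1 = delta (eta1 - eta2) and zeta_2 = delta**2 (eta1 + eta2) / 2."""
    return delta * (eta1 - eta2), 0.5 * delta**2 * (eta1 + eta2)

def directional_increment(u, x, delta, eta1, eta2):
    """Inflow minus u(x) for the assumed stencil
    (1 - eta1 - eta2) u(x) + eta1 u(x - delta) + eta2 u(x + delta)."""
    inflow = ((1.0 - eta1 - eta2) * u(x)
              + eta1 * u(x - delta) + eta2 * u(x + delta))
    return inflow - u(x)

b, c = 1.5, -0.8

def u(x):
    # Quadratic test function: u' = b + 2 c x, u'' = 2 c, higher derivatives vanish.
    return 2.0 + b * x + c * x**2

eta1, eta2, delta, x0 = 0.05, 0.015, 0.1, 0.3
z1, z2 = zetas(eta1, eta2, delta)
inc = directional_increment(u, x0, delta, eta1, eta2)
# Prediction from the expansion: -zeta_1 u'(x0) + zeta_2 u''(x0).
expected = -z1 * (b + 2.0 * c * x0) + z2 * (2.0 * c)
```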


To observe the effect of unequal mutation rates, we adopt the ED from Section 1.1.6 with $\eta_2$ vastly larger than $\eta_1$ (Figure 1.6). The selection for efficiency now plays a minor role and competition a major role. As expected, the faster mutation rate pushes phenotypes to the right edge of the ED, with a slight increase in the total density of phenotypes (666 292) compared to the same ED with equal mutation rates. These effects become recognizable only when the mutation rate to the faster side is ridiculously large, as in the case of selective breeding in animal husbandry.

Fig. 1.6: Unequal mutation rates.

By how much does the solution change when we drop the second-order term in the Taylor series expansion? With $\eta_1 = 0.05$ and $\eta_2 = 0.015$, we obtain $\zeta_1 = 10^{-4}$ and $\zeta_2 = 10^{-6}$. The results with the model parameters as in Section 1.1.6 were virtually identical. With this in mind, it makes sense to pursue analysis of the ED given by the first-order partial differential equation (PDE):

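$$\partial_t u = r\left(u - \zeta_1 \partial_x u\right) - \frac{r}{k(x)} F(u, x)\, u, \quad x \in (\underline{x}, \overline{x}) \tag{1.15}$$

and data

$$u(x, 0) = g(x), \quad u(\underline{x}, t) = u_{\underline{x}}, \quad u(\overline{x}, t) = u_{\overline{x}},$$

where $\underline{x}$, $\overline{x}$, $g(x)$, $u_{\underline{x}}$ and $u_{\overline{x}}$ are given. As usual, we must make sure that

$$u(\underline{x}, 0) = g(\underline{x}), \quad u(\overline{x}, 0) = g(\overline{x}).$$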

Later, we shall obtain an explicit solution to a flavor of this ED.

1.2 Evolutionary distributions in context

Here we put the theory of evolutionary distributions in context (Figure 1.7).

Fig. 1.7: Evolutionary distributions in the context of Evolution, Ecology and Genetics. (Diagram labels: Evolutionary theory, Ecology, Evolutionary Ecology, ED, Molecular Genetics, Population Ecology, Population Genetics, Genetics.)

1.2.1 Phenotypes, genotypes and natural selection

The theory of ED reflects a quintessential recognition: Natural selection works

on phenotypes, never directly on genotypes. An organism does not die directly


because a certain genetic makeup does not agree with a certain cause of mortality; it dies directly because a certain phenotypic trait does not agree with a certain cause of mortality. Take, for example, hemophilia. Between death from bleeding and the hemophiliac genotype there stands a chain of events. In broad-brush terms, blood does not reach vital organs. It does not reach them because of bleeding. The bleeding occurs because of the inability of blood to coagulate. Blood coagulation is influenced by certain proteins whose production is engineered by genes (one can identify many more links in this chain, but these suffice to make our point). So we may choose some time-scaling phenotypic trait that reflects how fast (if at all) blood coagulates.

If we admit that death is an instantaneous event, then it occurs for one, and only one, reason. Therefore, if (i) we have a framework to follow the dynamics of phenotypes in a Darwinian evolutionary context and (ii) we can map genotypes to phenotypes and then back to genotypes, then (iii) the problem of genetic population dynamics is reduced to an algebraic relationship between it and the dynamics of ED. The mapping need not be one-to-one, for we can always use probability to map phenotypes to genotypes.
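One way to read the probabilistic mapping is as a matrix of conditional probabilities P(g | p) that converts phenotype densities into expected genotype densities. The genotype labels, the matrix entries and the densities below are hypothetical; the only structural requirement is that each column is a probability distribution, which also guarantees that total density is conserved.

```python
import numpy as np

# Hypothetical P(genotype g | phenotype class p); each column sums to 1.
# Rows: genotypes AA, Aa, aa. Columns: three phenotype classes.
P_g_given_p = np.array([
    [0.8, 0.3, 0.0],
    [0.2, 0.6, 0.3],
    [0.0, 0.1, 0.7],
])

def genotype_densities(phenotype_densities, cond):
    """Expected genotype densities: n_g = sum_p P(g | p) n_p."""
    cond = np.asarray(cond, dtype=float)
    if not np.allclose(cond.sum(axis=0), 1.0):
        raise ValueError("each column must be a probability distribution")
    return cond @ np.asarray(phenotype_densities, dtype=float)

n_p = np.array([120.0, 300.0, 80.0])   # hypothetical phenotype densities
n_g = genotype_densities(n_p, P_g_given_p)
```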

1.2.2 Evolutionary ecology and evolutionary games

Evolutionary ecology models—that is, models that integrate evolutionary processes with population ecology—usually start with

$$\dot{u} = H(x, u)\, u \tag{1.16}$$

where u is a vector, H is a matrix of “instantaneous fitness” functions and x is

a vector (possibly of vectors) of adaptive traits—all of appropriate dimensions.

From here on, dots denote derivatives with respect to time. To (1.16) one then

adds dynamics of the adaptive traits (called strategies)

$$\dot{x} = f(x, u). \tag{1.17}$$

With these two equations, ecological and evolutionary dynamics become intertwined (Brown and Vincent, 1987; Abrams et al., 1993; Vincent et al.,

1993, 1996; Taylor and Day, 1997; Cohen et al., 2000b). This starting point,

sparked by the work of Maynard-Smith (1982), turns the system into an evolutionary game. The approach above has variations such as matrix games and

differential games. One important variation is the inclusion of space. This

turns a model of a coevolutionary system from ordinary differential equations

to a system of partial differential equations (see Dieckmann et al., 2000, in

particular Chapter 22).

The approach in (1.16) and (1.17)—and its relatives—triggered a profound

change in the perception of coevolution—one no longer looks to maximize


adaptations under constraints (essentially an optimization criterion), but rather to maximize adaptations in the context of other interacting organisms whose adaptations are maximized by coevolution (essentially a game-theoretic criterion). These developments in evolutionary ecology parallel those in economics, where, starting with Nash (1954), emphasis has shifted from optimization to game-theoretic solutions of capitalistic market problems.

As an aside, there are some fundamental differences between evolutionary games in ecology and most games in economics. In evolutionary games, the players are not individuals; they are strategies. The players are not required to be rational, they are not required to know the rules, and they might not even know they are playing.

The game-theoretic criterion for a solution (a solution concept, if you like) in evolutionary ecology is the so-called evolutionarily stable strategy (ESS), introduced by Maynard-Smith (1982). According to this criterion, coevolving organisms exhibit a set of genetically based adaptations that, taken together, prevent mutants from coexisting with the ESS set of phenotypes. But alas, in the course of its development, evolutionary game theory “lost” interest in the input of the environment to the outcome of the game (but see, for example, Cohen et al., 2000a). For instance, the solution to the prisoner's dilemma game should be quite obvious if a lynching mob is lurking outside the jailhouse.
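To make the ESS criterion concrete, the sketch below checks Maynard Smith's two conditions numerically for the textbook Hawk-Dove game. The game, its payoffs and the values V = 2, C = 6 are a standard illustration chosen here, not an example from this book. For V < C the mixed strategy of playing Hawk with probability V/C should pass the check, while pure Hawk, pure Dove and other mixtures should fail. Testing against a finite grid of mutant strategies is an approximation.

```python
import numpy as np

def payoff(p, q, V, C):
    """Expected payoff to a p-strategist (plays Hawk with prob. p) against a
    q-strategist: H vs H = (V - C)/2, H vs D = V, D vs H = 0, D vs D = V/2."""
    return (p * q * (V - C) / 2.0 + p * (1.0 - q) * V
            + (1.0 - p) * (1.0 - q) * V / 2.0)

def is_ess(p, V, C, n_mutants=1001, tol=1e-12):
    """Check, against a grid of mutant strategies q, that either
    E(p, p) > E(q, p), or E(p, p) == E(q, p) and E(p, q) > E(q, q)."""
    for q in np.linspace(0.0, 1.0, n_mutants):
        if abs(q - p) < 1e-9:
            continue
        first = payoff(p, p, V, C) - payoff(q, p, V, C)
        if first < -tol:
            return False       # the mutant does strictly better: not even Nash
        if abs(first) <= tol and payoff(p, q, V, C) - payoff(q, q, V, C) <= tol:
            return False       # a neutral mutant that is not driven out
    return True

V, C = 2.0, 6.0
print(is_ess(V / C, V, C), is_ess(0.5, V, C), is_ess(1.0, V, C))
```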

When stable, ED meet the criteria of ESS and do away with the various stability concepts that are associated with ESS. In the limited case of smooth (dynamical) evolutionary games, ED may serve as an alternative approach to the study of evolution by natural selection. The addition of interactions among phenotypes, as articulated with F in (1.11) and (1.14), incorporates the elements of games in the usual sense.

1.2.3 Stability

The justification for using the ESS concept is that without stability we are not likely to observe the consequences of evolution in Nature. In general, stability has been the holy grail of mathematical ecology. This is one place where we part from traditional approaches to evolutionary ecology problems through mathematical modeling. There are at least two reasons to object to the concept of stability in mathematical ecology. First, all species are doomed to extinction. One need go no further than the famous gambler's ruin theorem in probability theory (e.g., Chiang, 1968) to justify this statement. Heuristically, the theorem states that if one repeatedly gambles at fair or unfavorable odds against a house with unlimited resources, then ruin is guaranteed. So if we view evolution by natural selection as a very large number of gambling contests between organisms (the players) and Nature, and if we admit that


Nature has unlimited resources (in the sense that it never “dies”), then all

individuals of a species will sooner or later perish.
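For reference, the standard gambler's-ruin formulas (a textbook result, not derived in this book) give the probability of ruin for a gambler who starts with k units and wins each unit bet with probability p, against either a finite or an unlimited opponent. Against an unlimited house, ruin is certain exactly when p ≤ 1/2; for p > 1/2 it equals (q/p)^k with q = 1 − p. The function name and arguments are illustrative.

```python
def ruin_probability(k, p, N=None):
    """Probability that a gambler who starts with k units and wins each unit bet
    with probability p is eventually ruined. N is the total capital of gambler
    plus house; N=None stands for a house with unlimited resources."""
    q = 1.0 - p
    if N is None:
        return 1.0 if p <= 0.5 else (q / p) ** k
    if p == 0.5:
        return 1.0 - k / N
    rho = q / p
    if rho > 1.0:
        # Divide numerator and denominator by rho**N to avoid overflow for large N.
        return (rho ** (k - N) - 1.0) / (rho ** (-N) - 1.0)
    return (rho**k - rho**N) / (1.0 - rho**N)

for p in (0.45, 0.5, 0.55):
    print(p, ruin_probability(10, p), ruin_probability(10, p, N=1000))
```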

The second reason we object to the elevation of the stability concept has to do with the argument that “if it is not stable, you are not likely to observe it”. Stability is an absolute concept. For the sake of argument, suppose that a mathematical model indicates a slow decay of a population to zero. Then who is to say that we are not likely to observe the population if it goes extinct at $t = 10^{20}$ (whatever t's units are)? How short should the time to extinction be before we can claim that we are not likely to observe the population? Consequently, we are not going to be overly concerned with the concept of stability. We will content ourselves with asserting that a mathematical model of the evolutionary process does not explode arbitrarily close to its initial conditions. With all this in mind, stability is still a useful concept, particularly in exploring where a system is going, even if it never gets there.

1.2.4 The origins of the theory of ED

The theory here builds on the foregoing by using the concept of ED. In fact, stable ED correspond to ESS. In the case of ED, reaction-diffusion models are derived from first principles concerning ecological and evolutionary processes. Reaction-diffusion is a large topic both in mathematics and ecology. Smoller

(1982) and Murray (2003) are good references on the subject. The former

is more mathematically oriented than the latter. An expository account is

given in Britton (1986). Reaction-diffusion models are of considerable interest

in ecology (Segel and Jackson, 1972; Levin and Segel, 1976; Rosen, 1977;

Mimura and Murray, 1978; Okubo, 1980; Conway, 1984; Pease et al., 1989;

Dieckmann et al., 2000; Alonso et al., 2002). A somewhat related approach to

the one taken here was introduced by Slatkin (1981).

In developing the theory of ED, the influential work by Kimura (1994) must

be kept in mind. The approach Kimura and his coworkers took, however, concentrated on population genetics and they were mostly interested in deriving

moments of the distribution of genotypes.

1.2.5 The ordinary differential equations approach

Equations (1.16) and (1.17) represent a large class of models in ecology and

epidemiology. We shall refer to these as the ordinary differential equations

approach, or ODE models. There are several assumptions implicit in models that belong to this class: (i) reproduction is by cloning; (ii) u is large; (iii) when strategy is included (as in (1.17)), u represents some moment of the


dynamics (usually the mean with respect to x) of u(x, t); (iv) stochastic effects can be faithfully represented by u; and (v) u are smooth functions of x and t. Some of these assumptions were discussed by Dieckmann et al. (1995),

Doebeli and Ruxton (1997), Law et al. (1997) and Geritz and Kisdi (2000). In

practice, conclusions from ODE models routinely violate the assumptions or

are stated without regard to the assumptions. For example, some applications

of (1.16) produce negative population densities; this is particularly true when

oscillations are involved. To circumvent such problems, authors—explicitly or

implicitly—enlarge this class of models to

$$\dot{u}(t) = \begin{cases} f(u(t)) & \text{if } u(t) \ge \varepsilon \\ 0 & \text{otherwise} \end{cases}, \quad u(0) = f_0 \tag{1.18}$$

where ε > 0. This is a class of non-smooth (or even discontinuous) functions

and care must be taken in solving (1.18).
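A minimal forward Euler sketch of (1.18) shows why care is needed: once u falls below ε the right-hand side switches off, and the value at which u freezes depends on the step size. The decay f(u) = −u, the threshold ε = 0.1 and the step sizes are hypothetical choices.

```python
def euler_threshold(f, u0, eps, dt, T):
    """Forward Euler for (1.18): du/dt = f(u) while u >= eps, and 0 otherwise."""
    u = u0
    for _ in range(int(round(T / dt))):
        if u >= eps:
            u = u + dt * f(u)
        # Below eps the right-hand side is switched off, so u stays frozen.
    return u

def decay(u):
    return -u  # hypothetical f(u)

eps = 0.1
u_coarse = euler_threshold(decay, 1.0, eps, dt=0.2, T=50.0)
u_fine = euler_threshold(decay, 1.0, eps, dt=0.01, T=50.0)
print(u_coarse, u_fine)  # both frozen just below eps, at different values
```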

To proceed from (1.16) and (1.17), one usually identifies a set of adaptive traits and writes (1.16) as

$$\dot{u}(x_i, x, t) = f(u(x_i, x, t)), \quad u(0) = f_0 \tag{1.19}$$

where now $x_i$ is the set of adaptive traits of species i. The dimension of $x_i$ is $m_i$. Here x is the set of real numbers of all adaptive traits of all species, with dimension $m := \sum_{i=1}^{n} m_i$. Next, the dynamics of x (called the strategy dynamics) may be isolated by several methods, each involving additional assumptions (see Murray, 2003; Weibull, 1995; Fudenberg and Levine, 1998; Hofbauer and Sigmund, 1998; Samuelson, 1998; Gintis, 2000; Cressman, 2003;

Hofbauer and Sigmund, 2003; Vincent and Brown, 2005). For example, in the

case of adaptive dynamics (Dieckmann and Law, 1996; Champagnat et al.,

2001), the additional assumptions are: (i) mutations are rare, and over small time intervals the probability that more than one mutation occurs is negligible; (ii) x is a moment (usually the mean) of some distribution of phenotypes. Without implicitly assuming some distribution of phenotypes and encapsulating the distribution in x, one cannot observe strategy dynamics. Therein lies the rub (see below). The nice thing about the adaptive dynamics approach is that, with considerations from measure theory (Doob, 1994), the derivation of the strategy dynamics justifies the fact that no matter how large the population, it can still be viewed as a collection of individuals (Dieckmann and Law, 1996). Furthermore, in analyzing predator-prey coevolution, Dieckmann et al. (1995) showed that with some level of stochasticity, deterministic models (i.e., where u is the mean path) do represent the mean of the stochastic path.

Other approaches to deriving the strategy dynamics (e.g. Abrams et al., 1993)

involve the assumption that population densities change much faster than

strategy values. Therefore, one assumes that the populations are at equilibrium and only strategy dynamics need to be considered. This assumption is

circumvented by the approaches that Abrams (1992) and Vincent and Brown


(2005) take (see also Cohen et al., 2000b), where population dynamics need

not be ignored. Vincent and Brown (2005) refer to the strategy dynamics with

the related population dynamics as “Darwinian dynamics”.

Regardless of the approach one takes, strategy dynamics usually take on the

form

$$\dot{x}_i(t) = g_i(v, x, t), \quad x(0) = x_0, \quad i = 1, \ldots, n. \tag{1.20}$$

Here $g_i$ is a vector-valued function (usually a gradient of some other function) of dimension $m_i$ and v is a vector (of dimension $m_i$) of the so-called virtual strategies. To derive (1.20) from (1.19), one needs to add the assumptions that f and g are at least twice differentiable. These assumptions are required because one needs to examine special points (e.g., maxima and minima) of the strategy dynamics.

The basic achievement in deriving $g_i$ is that it gives invasibility criteria. Specifically, from $g_i$ we derive values of x, call them $\tilde{x}$, that result in a negative gradient on $g_i$ for all i and for all $x \ne \tilde{x}$ (with respect to u). It should be noted that in the case of Darwinian dynamics, (1.20) becomes

$$\dot{x}_i(t) = g_i(v, x, u, t), \quad x(0) = x_0, \quad i = 1, \ldots, n \tag{1.21}$$

and the strategy dynamics can no longer be divorced from the population dynamics. Because of the effect of $\tilde{x}$ on the dynamics of all other values of x, we call $\tilde{x}$ the ESS of the system (1.20) or of the system (1.19) and (1.21). So far, the strategies are assumed unbounded. Bounded strategy spaces introduce complications that can be avoided if one assumes that $\tilde{x}$ is in the interior of the strategy space.
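A minimal sketch of Darwinian dynamics for a single species, in the spirit of Vincent and Brown (2005): the population follows u̇ = uG(v, u) evaluated at v = x, and the strategy climbs the fitness gradient, ẋ = σ²∂G/∂v evaluated at v = x. The fitness function G(v, u) = r(1 − u/K(v)), the Gaussian carrying capacity K(v) and all parameter values are hypothetical. In this toy case the strategy converges to the peak of K, x̃ = 0, while the population converges to K(0), illustrating how (1.21) couples strategy and population dynamics.

```python
import math

def darwinian_dynamics(u0, x0, r=1.0, K_max=100.0, sigma_k=1.0, sigma2=0.5,
                       dt=0.01, T=50.0):
    """Euler integration of
        du/dt = u * G(v, u)|_{v=x},   dx/dt = sigma2 * dG/dv|_{v=x},
    with the hypothetical G(v, u) = r * (1 - u / K(v)) and
    K(v) = K_max * exp(-v**2 / (2 * sigma_k**2))."""
    u, x = u0, x0
    for _ in range(int(round(T / dt))):
        K = K_max * math.exp(-x**2 / (2.0 * sigma_k**2))
        G = r * (1.0 - u / K)
        dG_dv = -r * u * x / (sigma_k**2 * K)   # uses K'(v) = -v K(v) / sigma_k**2
        u, x = u + dt * u * G, x + dt * sigma2 * dG_dv
    return u, x

u_end, x_end = darwinian_dynamics(u0=10.0, x0=2.0)
print(u_end, x_end)
```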

At any rate, the same violations or misuse of the assumptions behind (1.16) apply to evolutionary models, with one crucial addition: behind each x hides a distribution of the density of phenotypes of u. In fact, the dynamics of x represent the dynamics of moments of these distributions (usually the mean). This raises the possibility that we may be following the dynamics of phenotypes whose distribution can hardly be represented by its mean. Worse yet, we may be following the dynamics of phenotypes that do not exist (Figure 1.8).

In fact, we can change the parameter values in the example with competition

(Section 1.1.6) such that all surviving phenotypes are on the boundaries. The

mean of the surviving phenotypes, as evolutionary games would have it, is in

a place where no phenotypes exist! Furthermore, because an ESS is the outcome of a game, the stability of $\tilde{x}$ cannot be determined by traditional stability analysis (except in the case of Darwinian dynamics). This explains the proliferation of stability criteria for ESS (Eshel, 1996; Apaloo, 1997; Taylor and Day, 1997).
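The situation in Figure 1.8 is easy to reproduce numerically. The snapshot below is hypothetical: two Gaussian bumps of width 0.3 sitting at the boundaries of (0, 2π). Its mean, the quantity a moment-based strategy dynamic would follow, lands at π, where the density is essentially zero.

```python
import numpy as np

# Hypothetical snapshot of an ED on (0, 2*pi) with all survivors near the boundaries.
x = np.linspace(0.0, 2.0 * np.pi, 1001)
s = 0.3
u = np.exp(-x**2 / (2 * s**2)) + np.exp(-(x - 2.0 * np.pi)**2 / (2 * s**2))

x_mean = np.sum(x * u) / np.sum(u)       # the moment a strategy dynamic would follow
density_at_mean = np.interp(x_mean, x, u)
print(x_mean, density_at_mean / u.max())
```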

By examining the stability of ED, some of the ESS-related issues can be simplified and enriched: (i) stability of ED satisfies the ESS criterion; (ii) ED bypass the potential problems that arise when strategy dynamics are followed through some distribution moments; (iii) for fixed point stability, ESS requires that the fitness of all species participating in the ESS be zero, whereas ED admit any finite value of fitness at stability; (iv) the ODE approach either assumes a number of species or obtains them from the solution of the game, whereas the ED approach is infinite-dimensional; (v) using the machinery of PDE, ED can deal with solutions that are more general (in a sense to be clarified later) than those of the ODE approach (in particular, the idea of weak solutions); (vi) a related issue is that ED (through PDE) can sometimes deal with solutions in which continuity breaks down or the initial conditions are not continuous. Yet ED do suffer from limitations, which will be pointed out as we proceed. Some of these limitations are common to the ODE approach. For example, we do require large populations so that it remains valid to use derivatives. Other limitations relate to the complexity and richness of the behavior of (even linear) PDE and to the fact that a comprehensive theory of PDE is not going to be available any time soon (if ever).

Fig. 1.8: A bounded distribution (between $\underline{x}$ and $\overline{x}$) of phenotypes at time t. Note that there are no phenotypes at the mean adaptive trait, $\hat{x}$. The figure illustrates a snapshot of some ED at t. (Axes: x horizontal, u(x, t) vertical.)

Finally, we emphasize that for us, the “stuff” of evolution is not species. It is

a distribution of phenotypes in the adaptive space. For example, one may be

tempted to identify two species in Figure 1.8. Yet, here we are, over 150 years

since Darwin’s time, and we are yet to come up with a satisfactory definition

of species, which is one of the most fundamental concepts in all of biology.

So rather than talk about species, we talk about types. The latter are identified

as intervals around special points on the ED. Such intervals may indicate

reproductive isolation of phenotypes.