Scientific Certainty Argumentation Methods (SCAMs):

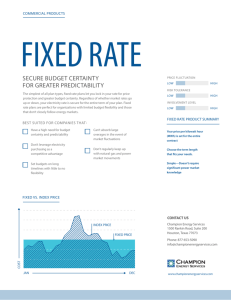
